4 Policy Features

This section explains the features of Oracle Communications Cloud Native Core, Converged Policy.

Note:

The performance and capacity of the Policy system may vary based on the call model, feature or interface configuration, underlying CNE, and hardware environment, including but not limited to the complexity of deployed policies, policy table size, and object expression and custom JSON usage in Policy design.

4.1 Optimizing PolicyDS Database Query With Configurable UE IDs

When PDS receives a request from any of the microservices, it queries the PolicyDS database to retrieve the details.

PolicyDS database includes:

- PDSProfile: Contains PDS profile information, including subscriber ID related information such as SUPI and GPSI.

- PDSSubscriber: Contains subscriber information.

Policy supports configuring the query on the PolicyDS database based on a single UE ID, multiple UE IDs, or a specific preference order of the subscriber IDs.

Type of search to query and view details from PolicyDS database

Depending on the selected type of search (single UE ID, multi UE IDs, or preferential query), PDS queries data from PDSProfile and PDSSubscriber.

If the selected type of search is single UE ID, PDS queries all the details from PDSSubscriber.

If the selected type of search is multi UE IDs or preferential query, PDS queries the details from PDSProfile.

The details of the current and historical type of search configurations at the various sites are managed in the pdssettings table. PDS refers to the pdssettings table to identify the siteIDs and the configuration at each site.

Single UE ID Based Query

With the Single UE ID approach, PDS queries PDSSubscriber in the PolicyDS database using a primary key combination.

Note:

There cannot be multiple SUPIs for one GPSI or multiple GPSIs associated with one SUPI.

The primary key combination for Single UE ID based search can be modified. That is, the primary key can be changed from SUPI to GPSI or from GPSI to SUPI.

After the required primary key combination is selected, every request to PDS must contain that ID. Otherwise, the request is rejected with a 400 HTTP error code. For example, when the primary key combination is SUPI, the request to PDS must contain a valid SUPI value. For more information on primary key combination, see PDS UE ID Configuration.

Note:

This validation is not applicable in case of PDS notification call flows.
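The validation described above can be pictured with a minimal sketch. The function name, the request field names (`supi`, `gpsi`), and the `SUPI_GPSI` label are hypothetical and chosen only for illustration; the sketch also assumes that a combined key requires both IDs. It is not the PDS implementation.

```python
from typing import Mapping, Optional

# Hypothetical mapping from a configured primary key combination
# to the request fields that must be present.
REQUIRED_IDS = {
    "SUPI": ("supi",),
    "GPSI": ("gpsi",),
    "SUPI_GPSI": ("supi", "gpsi"),  # assumption: a combined key needs both IDs
}


def validate_ue_ids(primary_key_combination: str,
                    request: Mapping[str, Optional[str]],
                    is_notification: bool = False) -> int:
    """Return an HTTP-style status: 200 if accepted, 400 if a required ID is missing."""
    # The validation is skipped for notification call flows.
    if is_notification:
        return 200
    for field in REQUIRED_IDS[primary_key_combination]:
        if not request.get(field):
            return 400
    return 200
```

For example, with SUPI configured as the primary key, a request carrying only a GPSI would be rejected, while the same payload in a notification flow would pass.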

Multi UE ID based query is the default type of querying PolicyDS database when the type of search is not selected.

Multi UE ID indicates that there can be multiple SUPIs for one GPSI or multiple GPSIs associated with one SUPI.

Note:

Multi UE ID based search uses OR criteria, so the search takes longer.

Preferential query allows you to specify a key preference for the PDS search query used to look up a pdsprofile record.

- SUPI: pdsprofile is queried based on the given SUPI value.

- GPSI: pdsprofile is queried based on the given GPSI value.

- SUPI, GPSI: pdsprofile is queried first based on the given SUPI value and then based on the given GPSI value.

- GPSI, SUPI: pdsprofile is queried first based on the given GPSI value and then based on the given SUPI value.

Note:

For notification and audit query the preference order is not considered.
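The difference between a Multi UE ID (OR) lookup and a preferential lookup can be sketched as follows. This is an illustrative in-memory model only: the profile dictionaries and both function names are assumptions, not the PDS query code.

```python
from typing import Dict, List, Optional, Sequence

Profile = Dict[str, str]


def multi_ue_id_lookup(profiles: List[Profile], ids: Dict[str, str]) -> List[Profile]:
    """OR criteria: return every profile that matches any of the supplied IDs."""
    return [p for p in profiles
            if any(p.get(key) == value for key, value in ids.items())]


def preferential_lookup(profiles: List[Profile],
                        preference: Sequence[str],
                        ids: Dict[str, str]) -> Optional[Profile]:
    """Try each key in preference order and return the first matching profile."""
    for key in preference:
        value = ids.get(key)
        if value is None:
            continue  # this ID was not supplied, fall through to the next key
        for profile in profiles:
            if profile.get(key) == value:
                return profile
    return None
```

With two stored profiles that share neither SUPI nor GPSI, a request carrying the SUPI of one and the GPSI of the other matches both under OR criteria, but resolves to exactly one profile under a preference order.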

Migration of database records

Policy allows switching from one type of search to another.

For example, the type of search can be switched from Multi UE ID based search or Preferential search to Single UE ID based search.

When the type of search is switched, the database records in the PDSProfile and PDSSubscriber tables are updated accordingly in a lazy manner. For example, if the search type is switched from Multi UE ID based search or Preferential search to Single UE ID based search, the records in PDSSubscriber are updated with the information present in the PDSProfile table, provided there is a unique SUPI/GPSI combination associated with that profile.

CNC Console for Policy displays whether the migration of records is in progress and, if so, how many records are still to be migrated (that is, the total number of non-migrated records). For more information, see PDS UE ID Configuration.

Important:

When the type of query is switched from one type of search to another, the dashboards that are used to identify the unique subscriber count are affected.

Note:

Whenever PDS queries PolicyDS database, it stores the results in cache. If a PolicyDS database query is triggered within the cached period, PDS displays the results stored in the cache. PDS does not query the database every time the query is triggered.
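The caching behavior can be illustrated with a small time-to-live cache. The class name, the `ttl_seconds` parameter, and the injected `query_db` callable are hypothetical; the actual cache period and implementation in PDS are not described here.

```python
import time
from typing import Any, Callable, Dict, Tuple


class CachedLookup:
    """Serve repeated queries from memory until the cached entry expires."""

    def __init__(self, query_db: Callable[[str], Any], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._query_db = query_db
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # still within the cached period, no database query
        value = self._query_db(key)  # expired or missing, query the database
        self._entries[key] = (now, value)
        return value
```

Passing the clock as a parameter keeps the sketch easy to exercise without waiting for real time to elapse.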

Important:

While switching from one type of search to the other, (for example, while switching from Multi UE ID based search or Preferential search to Single UE ID based search or vice versa) make sure that the migration of records is complete.

Note:

In case of multi-site deployment, Policy supports displaying the migration status at each of the sites.

To disable the migration status for one of the sites, all the sites should be in migration enabled state with the updated configuration. Otherwise, the migration status cannot be disabled.

Migration should be disabled in all the sites almost simultaneously to avoid stale subscriptions being left in PDS.

Migration from Single UE ID to Single UE ID with different primary key combinations should be done in all sites almost simultaneously to avoid stale subscription being left in PDS.
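The multi-site precondition for disabling migration can be expressed as a simple check. The `SiteConfig` type and its fields are illustrative assumptions, and "updated configuration" is interpreted here as every site using the same query type.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class SiteConfig:
    site_id: str
    query_type: str          # for example "MULTI_UE_ID" or "SINGLE_SUPI" (hypothetical labels)
    migration_enabled: bool


def can_disable_migration(sites: Iterable[SiteConfig]) -> bool:
    """Migration may be disabled only if all sites agree on the query type
    and all of them currently have migration enabled."""
    site_list = list(sites)
    if not site_list:
        return False
    same_query_type = len({s.query_type for s in site_list}) == 1
    all_enabled = all(s.migration_enabled for s in site_list)
    return same_query_type and all_enabled
```

A deployment where one site still uses a different query type fails the check, so no site in that deployment could disable migration yet.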

Sample data migration scenario

Note:

The switching of the search types and the migration of PDS data is supported only in case of CREATE and audit requests.

Whenever PDS receives a request from any of the PCF microservices such as SM service, AM service, or UE Policy service, PDS queries its PolicyDS database to retrieve the existing data based on the configured query type, such as Multi UE ID or Single UE ID based search. If the query type changes, PDS uses the latest query type to search for the data.

Here is a sample scenario in which PDS receives a CREATE request for a subscriber and updates the pdsprofile details using a single UE ID query with SUPI.

PDS receives a CREATE request with SUPI=supi1 and GPSI=gpsi1 for the site Site1, and the query type is changed from Multi UE ID based search to Single UE ID based search using SUPI as the primary key. PDS queries the pdsprofile database for the given profileID having the given SUPI.

If no matching record is found for the pdsprofileId with the given SUPI, PDS updates the SUPI, GPSI, and other profile details for the profileID in the pdsprofile database.

PDS then queries the subscriber details in the pdssubscriber database using the pdsprofileID. If a matching record is found with the pdsprofileID, PDS updates the SUPI, GPSI, and other details of the profile corresponding to the subscriber (pdsuserID).

If no matching record is found for the given pdsprofileID, PDS adds the new profile details for the subscriber based on pdsuserID.
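The sample scenario can be approximated with two in-memory dictionaries standing in for the pdsprofile and pdssubscriber tables. The function name, the record layout, and the way the user ID is passed in are all assumptions made for illustration; the real schema and lazy migration logic are not specified in this section.

```python
from typing import Dict


def handle_create(pdsprofile: Dict[str, dict],
                  pdssubscriber: Dict[str, dict],
                  profile_id: str, supi: str, gpsi: str,
                  user_id: str) -> str:
    """Apply one CREATE request under Single UE ID search keyed by SUPI.

    Returns "updated" if an existing subscriber record was refreshed,
    or "added" if a new subscriber record was written.
    """
    # Step 1: look up the profile by profile ID and SUPI; write it if there is no match.
    profile = pdsprofile.get(profile_id)
    if profile is None or profile.get("supi") != supi:
        pdsprofile[profile_id] = {"supi": supi, "gpsi": gpsi}

    # Step 2: look for a subscriber record that already points at this profile.
    for record in pdssubscriber.values():
        if record.get("profile_id") == profile_id:
            record.update(supi=supi, gpsi=gpsi)
            return "updated"

    # Step 3: no subscriber record yet, so add one under the given user ID.
    pdssubscriber[user_id] = {"profile_id": profile_id, "supi": supi, "gpsi": gpsi}
    return "added"
```

Because the work happens only when a request for that subscriber arrives, records are migrated one at a time rather than in a bulk pass, which is what "lazy" means in this context.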

It is mandatory to manually enable audit to support the migration.

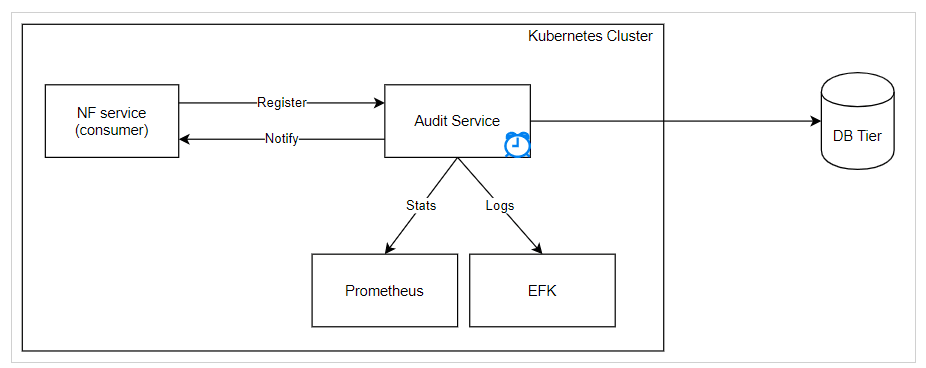

When the Audit service runs on the pdssubscriber database, it identifies the subscriber details (pdsuserIDs) that are deleted in the pdssubscriber database. The corresponding profileIDs are then removed from the pdsprofile database.

Similarly, when the Audit service runs on the pdsprofile database, it identifies the profileIDs that are deleted in the pdsprofile database. The subscriber details in the pdssubscriber database are then updated to remove the non-existent pdsprofileID.
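The two audit directions can be sketched with dictionaries standing in for the tables. The function names and the `profile_id` field are hypothetical, and the sketch only mirrors the cleanup described above, not the Audit service itself.

```python
from typing import Dict, Iterable, List


def audit_deleted_subscribers(pdsprofile: Dict[str, dict],
                              deleted_subscribers: Iterable[dict]) -> List[str]:
    """Remove the profiles referenced by subscriber records that were deleted."""
    removed = []
    for record in deleted_subscribers:
        profile_id = record.get("profile_id")
        if profile_id in pdsprofile:
            del pdsprofile[profile_id]
            removed.append(profile_id)
    return removed


def audit_deleted_profiles(pdssubscriber: Dict[str, dict],
                           deleted_profile_ids: Iterable[str]) -> List[str]:
    """Clear references to profiles that no longer exist; return the touched user IDs."""
    gone = set(deleted_profile_ids)
    touched = []
    for user_id, record in pdssubscriber.items():
        if record.get("profile_id") in gone:
            record["profile_id"] = None
            touched.append(user_id)
    return touched
```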

Managing Optimization of PolicyDS Database Query With Configurable UE IDs

This section describes how to enable and configure the feature using CNC Console and REST API.

Configure

Multi UE ID based search is the default type, if the type of search is not explicitly configured. The type of searching PolicyDS database can be configured either using CNC Console or using REST API.

Configure using CNC Console

To configure the UE ID based configuration using CNC Console, perform the configurations on PDS UE ID Configuration page under Service Configurations.

For more information, see PDS UE ID Configuration.

Configure using REST API

To configure the UE ID based configuration using REST API, configure the parameters for the PDS UE ID Configuration Settings API.

For more information, see the PDS UE ID Configuration Settings section in Oracle Communications Cloud Native Core, Converged Policy REST Specification Guide.

Observability

Metrics

The occnp_db_processing_latency_seconds_count metric is used to monitor the UE ID based query on the PolicyDS database.

For more information, see Policy DS Metrics.

The ENABLE_PDS_DB_HISTOGRAM_METRICS advanced settings key for PDS must be enabled to use the occnp_db_processing_latency_seconds_count metric.

For more information on the ENABLE_PDS_DB_HISTOGRAM_METRICS key, see PDS Settings.

Installation and Upgrade Impact

Upgrade impact:

- During installation or upgrade, the selected UE ID configuration is validated before the pdssettings table is updated.

- In case of duplicate configuration, a warn log is printed without any update to the pdssettings database.

- Partition-based approach can be enabled only through a fresh installation.

- In case of other invalid configuration (for example, Site1 with Partition enabled and Site2 with Partition disabled, or vice versa), a warn log is printed and the configuration updated in the pdssettings database is the same as that of the previous sites.

Logging

The following logs from PDS info are used to verify the type of search:

{"instant":{"epochSecond":1744551776,"nanoOfSecond":106606704},"thread":"boundedElastic-6","level":"DEBUG","loggerName":"ocpm.uds.policyds.util.PdsUserIdGeneratorUtil","message":"PdsUserId generated for SINGLE_UE_ID_PREFERENTIAL_SEARCH with primaryKeyCombination : SUPI is :imsi-450081000000001##0##fe7d992b-0541-4c7d-ab84-c6d70b1b0123","endOfBatch":false,"loggerFqcn":"org.apache.logging.slf4j.Log4jLogger","threadId":102,"threadPriority":5,"messageTimestamp":"2025-04-13T13:42:56.106+0000","ocLogId":"1744551775993_152_policy-occnp-ingress-gateway-f88679cd9-6j79l"}

The PDS logs display the type of search and also how the primary key is generated.

{"instant":{"epochSecond":1744551776,"nanoOfSecond":307685362},"thread":"boundedElastic-10","level":"DEBUG","loggerName":"ocpm.uds.policyds.db.helper.PdsSubscriberV2Helper","message":"Updating mode: 16768 in pdssubcriber for user id: imsi-450081000000001##1##fe7d992b-0541-4c7d-ab84-c6d70b1b0123","endOfBatch":true,"loggerFqcn":"org.apache.logging.slf4j.Log4jLogger","threadId":140,"threadPriority":5,"messageTimestamp":"2025-04-13T13:42:56.307+0000","ocLogId":"1744551775993_152_policy-occnp-ingress-gateway-f88679cd9-6j79l"}

Checkpoints

- Migration of records is not required when the type of search is switched between Multi UE ID search and Preferential search, as both these types of search query the pdsprofile and pdssubscriber tables.

- While switching to Single UE ID search, siteId can have a maximum of 80 characters when both SUPI and GPSI are used.

- The prerequisite for switching to Single UE ID search is that there should be no multiple UE ID configurations. That is, one SUPI cannot have multiple GPSIs and one GPSI cannot have multiple SUPIs.

- All the sites should be upgraded to the new release before modifying the PDS Settings configurations.

- In case of multi-site deployment, to disable the migration at one site, all the sites should be in migration enabled state with the updated configuration. Otherwise, migration at the specified site cannot be disabled.

The following are the key points to remember while disabling the migration in any of the sites in a multi-site deployment:

- Migration can be disabled at any of the sites only if all the sites are using the same type of query. Migration cannot be disabled at any one site if any of the sites is using a different query type.

  For example, in a three site deployment, if Single SUPI query is used in Site1 and Site3, while Multi UE ID query is used in Site2, migration cannot be disabled in Site1 or Site3.

- Query type can be changed at a site only if all the sites are using the same query type.

  For example, if Site1 and Site3 are using Single SUPI query and Site2 is using Multi UE ID query, the type of query at Site1 or Site3 cannot be changed from Single SUPI query to Single SUPI/GPSI until the type of query at Site2 is also changed to Single SUPI. When all three sites are using Single SUPI query, the query type can be changed to Single SUPI/GPSI or any other query type.

- Migration from one query type to another is not allowed at one site when one of the other sites is using a different query type.

  For example, if Site1 is migrated from Single SUPI to Single SUPI/GPSI while Site2 and Site3 are still using Single SUPI, Site2 or Site3 cannot be migrated from Single SUPI to Multi UE ID type.

  Since Site1 is already migrated to Single SUPI/GPSI, Site2 and Site3 must also be migrated to Single SUPI/GPSI completely before attempting to migrate Site2 or Site3 to Multi UE ID type.

- Migration at one site cannot be disabled if the query type at that site is migrated to a different one while the other sites are yet to be migrated.

  For example, if Site1 is migrated from Single SUPI/GPSI to Multi UE ID while Site2 and Site3 are yet to be migrated from Single SUPI/GPSI to Multi UE ID, migration cannot be disabled at Site1.

  Migration at Site1 can be disabled only after Site2 and Site3 are also migrated from Single SUPI/GPSI to Multi UE ID type.

- When a new site is added, the query type at that site is Multi UE ID by default. If the other sites are using a different query type, the new site should also be migrated to that query type.

  For example, while Site1 and Site2 are using Single SUPI query type, if a new site Site3 is added, the default query type at Site3 is Multi UE ID, which must be migrated to Single SUPI type as in Site1 and Site2.

- Migration should be disabled in all the sites almost simultaneously to avoid stale subscriptions being left in PDS.

- Migration from one Single UE ID search to another Single UE ID search with a different primary key combination should be done in all the sites almost simultaneously to avoid stale subscriptions being left in PDS.

- The value of SiteID field on PDS UE ID Configuration page in CNC Console should match with the actual siteIDs.

- When migration is disabled, any non-migrated records cannot be gracefully deleted if pdsprofile is deleted.

- A record can be deleted if the configured primary key combination (UE ID) is not present in the record.

- Records in pdsprofile are deleted once the migration is turned off, in the subsequent audit notification for the pdsprofile table.

- Audit notification on pdsprofile cannot handle migration of the corresponding subscribers. Only the audit notification on pdssubscriber can handle the migration.

- Partition-based approach can be enabled only through a fresh installation.

- Configuration at all the sites must be the same. That is, if Site1 is installed with a Multi UE ID configuration, then Site2 should also be installed with a Multi UE ID configuration. Any other configuration is not considered.

- If the configured primary key combination is not passed in the create request, the request is rejected with a 400 Bad Request error.

- If the UE ID passed in the UDR or CHF notification is the same as the configured primary key combination, the lookup can happen with the primary key combination only.

-

The following are the key points to remember while migrating records from different combination of the query types:

-

Migration from Multi UE ID to Preferential search:

When migrating from Multi UE ID based query to Preferential query, the turnaround time of the search can improve if the preference order and the requested subscriber IDs are the same.

Migration of records depends on the preference order. Migration is applicable only if the query uses a specific subscription based on the preference order.

For example, if the preference order is SUPI, S1:SUPI1-GPSI1 and S2:SUPI2-GPSI1 are treated as different subscribers, while S1:SUPI1-GPSI1 and S2:SUPI1-GPSI2 are treated as the same subscriber.

-

Migration from Preferential search to Multi UE ID search:

When the type of query is migrated from Preferential query to Multi UE ID based query, migration of records is not applicable, as the records are migrated from a query on the pdsprofile database using a preference order to a new query on the pdsprofile database using SUPI or GPSI.

Any combination of SUPI and GPSI that is shared between subscribers is considered as one subscriber.

-

Migration from Single primary key search to Multi UE ID search:

While migrating from Single primary key to Multi UE ID based query, if the migration is enabled, records are migrated from a single primary key based query on pdssubscriber to a new query on pdssubscriber.

In single primary key search, the primary key (for example, SUPI) uniquely identifies the subscriber.

For example, S1:SUPI1-GPSI1 and S2:SUPI2-GPSI1 are treated as different subscribers, while S1:SUPI1-GPSI1 and S2:SUPI1-GPSI2 are treated as the same subscriber.

If the migration is not enabled, there is no migration of records.

Any combination of SUPI and GPSI, if shared between subscribers is considered as one subscriber.

-

Migration from Single primary key search to Preferential search:

In case of a migration from Single primary key based query to Preferential query, if the migration is enabled, migration of records depends on the preference order. Records are migrated from single primary key based search on the pdssubscriber database to another primary key based search on the pdssubscriber database.

If the preference order is SUPI, the query must include at least one valid SUPI value.

If the preference order is GPSI, the query must include at least one valid GPSI value.

If the preference order is SUPI, GPSI, records are searched first based on the given SUPI value and then based on the given GPSI value. The query must include at least a valid SUPI or a valid GPSI value.

If the preference order is GPSI, SUPI, records are searched first based on the given GPSI value and then based on the given SUPI value. The query must include at least a valid GPSI or a valid SUPI value.

In single primary key, the primary key uniquely identifies the subscriber. For example, single SUPI.

For example, S1:SUPI1-GPSI1 and S2:SUPI2-GPSI1 is treated as different subscribers. While S1:SUPI1-GPSI1 and S2:SUPI1-GPSI2 are same subscribers.

If the migration is disabled, there is no migration of records.

For example, if the preference order is SUPI, S1:SUPI1-GPSI1 and S2:SUPI2-GPSI1 is treated as different subscribers. While S1:SUPI1-GPSI1 and S2:SUPI1-GPSI2 are same subscribers.

-

Migration from Multi UE ID search to Single primary key search:

While switching from Multi UE ID based query to Single primary key search, such as Single SUPI, Single GPSI, or a combination of SUPI and GPSI as primary key, if the migration is enabled, records are migrated from a SUPI or GPSI based query on the pdsprofile database to a new query using SUPI or GPSI on the pdsprofile database.

Any combination of SUPI and GPSI that is shared between subscribers is considered as one subscriber.

If the migration is not enabled, there is no migration of records.

SUPI or GPSI, or every combination of SUPI and GPSI is treated as a separate subscriber.

For example if the migration is to a Single SUPI query, S1:SUPI1-GPSI1 and S2:SUPI2-GPSI1 are treated as different subscribers. While S1:SUPI1-GPSI1 and S2:SUPI1-GPSI2 are same subscribers.

If the migration is to a combination of SUPI and GPSI as primary key, S1:SUPI1-GPSI1 and S2:SUPI2-GPSI1 is treated as different subscribers. While S1:SUPI1-GPSI1 and S2:SUPI1-GPSI2 are also different subscribers.

-

Migration from Preferential search to Single primary key search:

While switching from Preferential search to Single primary key search, such as Single SUPI, Single GPSI, or a combination of SUPI and GPSI as primary key, if the migration is enabled, records are migrated from a SUPI, GPSI, or combined SUPI and GPSI based query on the pdsprofile database to a new query using SUPI or GPSI on the pdsprofile database.

Any combination of SUPI and GPSI that is shared between subscribers is considered as one subscriber.

If the migration is not enabled, there is no migration of records.

SUPI or GPSI, or every combination of SUPI and GPSI is treated as a separate subscriber.

For example if the migration is to a Single SUPI query, S1:SUPI1-GPSI1 and S2:SUPI2-GPSI1 are treated as different subscribers. While S1:SUPI1-GPSI1 and S2:SUPI1-GPSI2 are same subscribers.

If the migration is to a combination of SUPI and GPSI as primary key, S1:SUPI1-GPSI1 and S2:SUPI2-GPSI1 is treated as different subscribers. While S1:SUPI1-GPSI1 and S2:SUPI1-GPSI2 are also different subscribers.

-

Migration from one Single primary key search to another Single primary key search:

During migration from one Single primary key search to another Single primary key search, such as from a Single SUPI or Single GPSI based search to a combined Single SUPI and GPSI based search, if migration is enabled, records are migrated from a query using one primary key on the pdssubscriber database to a query based on another primary key on the pdssubscriber database.

SUPI uniquely identifies the subscriber.

SUPI or GPSI, or every combination of SUPI and GPSI is treated as a separate subscriber.

For example if the migration is to a Single SUPI query, S1:SUPI1-GPSI1 and S2:SUPI2-GPSI1 are treated as different subscribers. While S1:SUPI1-GPSI1 and S2:SUPI1-GPSI2 are same subscribers.

If the migration is to a combination of SUPI and GPSI as primary key, S1:SUPI1-GPSI1 and S2:SUPI2-GPSI1 is treated as different subscribers. While S1:SUPI1-GPSI1 and S2:SUPI1-GPSI2 are also different subscribers.
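The S1/S2 examples in the migration scenarios can be checked with a small counting sketch. The key type labels and the function name are illustrative assumptions; for Multi UE ID, the sketch models "any shared SUPI or GPSI means one subscriber" by merging records that share an ID.

```python
from typing import List, Tuple

Record = Tuple[str, str]  # (SUPI, GPSI)


def count_subscribers(records: List[Record], key_type: str) -> int:
    """Count distinct subscribers as seen by a given search type."""
    if key_type == "SUPI":
        return len({supi for supi, _ in records})
    if key_type == "GPSI":
        return len({gpsi for _, gpsi in records})
    if key_type == "SUPI_GPSI":
        return len(set(records))
    if key_type == "MULTI_UE_ID":
        # Union-find: records sharing a SUPI or a GPSI collapse into one subscriber.
        parent = list(range(len(records)))

        def find(i: int) -> int:
            while parent[i] != i:
                parent[i] = parent[parent[i]]
                i = parent[i]
            return i

        first_seen = {}
        for idx, (supi, gpsi) in enumerate(records):
            for ue_id in (("supi", supi), ("gpsi", gpsi)):
                if ue_id in first_seen:
                    parent[find(idx)] = find(first_seen[ue_id])
                else:
                    first_seen[ue_id] = idx
        return len({find(i) for i in range(len(records))})
    raise ValueError(f"unknown key type: {key_type}")
```

This kind of difference in counts is one way to see why dashboards that report the unique subscriber count are affected when the type of search is switched.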


4.2 Support for Monitoring Migration Status of Database Records

Policy supports assessing the status of DB records in Policy for various microservices.

Note:

Currently, Policy supports assessing the status of only the PolicyDS database records.

Policy supports the following types of search:

- Multi UE ID: Allows using multiple search criteria based on a primary key combination using AND (Preferential) and OR (default).

- Single UE ID: Allows using specific criteria based on a primary key combination to query the database.

- Partition based: Allows using search criteria based on a composite key formed with a combination of keys. All the keys can be present in a single table or in different data tables.

  Partition based search is applicable only in case of fresh installation.

  The enablePdsPartitionBasedSchema Helm parameter must be configured to enable Partition based schema for PDS. When the PdsPartitionBasedSchema parameter is enabled, the primary key combination for partition based search must be configured using the primaryKeyCombination Helm parameter.

  For more details on the enablePdsPartitionBasedSchema and primaryKeyCombination Helm parameters, see the Enabling/Disabling Services Configurations section in Oracle Communications Cloud Native Core, Converged Policy Installation, Upgrade, and Fault Recovery Guide.

For more details on the different types of search supported by Policy, see Optimizing PolicyDS Database Query With Configurable UE IDs.

The primary keys used for the query can be SUPI, GPSI, or a combination of SUPI and GPSI.

In case of Single UE ID search types, the primary key includes source type and siteIDs along with the selected SUPI or GPSI or a combination of SUPI and GPSI.

In case of partition based search type, a composite key is formed with subscriber IDs (SUPI, GPSI, or a combination of SUPI and GPSI), source type, and siteIDs stored in separate columns or data tables.

The following table depicts the possible primary key combination for each search type.

Table 4-1 Possible primary key combination for each search type

| Search Type | Primary Key Combination |
|---|---|
| Multi UE ID | UUID |
| Single UE ID | SUPI##GPSI##source_type##siteid |
| Single UE ID | SUPI##source_type##siteid |
| Single UE ID | GPSI##source_type##siteid |
| Partition based Single-UE Id | SUPI##GPSI |
| Partition based Single-UE Id | SUPI |
| Partition based Single-UE Id | GPSI |
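The key layouts in Table 4-1 can be reproduced with a short helper. The function name, its parameters, and the `SUPI_GPSI` label are hypothetical; only the `##` separator and the field order come from the table.

```python
from typing import Optional

SEPARATOR = "##"


def build_primary_key(combination: str,
                      supi: Optional[str] = None,
                      gpsi: Optional[str] = None,
                      source_type: Optional[int] = None,
                      site_id: Optional[str] = None,
                      partitioned: bool = False) -> str:
    """Join the selected UE IDs, plus source type and site ID when not partitioned."""
    ids = {"SUPI": [supi], "GPSI": [gpsi], "SUPI_GPSI": [supi, gpsi]}[combination]
    if any(not value for value in ids):
        raise ValueError(f"missing UE ID for combination {combination}")
    parts = [str(value) for value in ids]
    if not partitioned:
        # Single UE ID keys also carry the source type and the site ID.
        if source_type is None or not site_id:
            raise ValueError("source_type and site_id are required")
        parts += [str(source_type), site_id]
    return SEPARATOR.join(parts)
```

For a SUPI-only Single UE ID key, the result has the same three-part shape as the user ID value that appears in the sample PDS log in the Logging section.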

Policy allows switching the type of search. For example, it is possible to switch the type of search from Multi UE ID to Single UE ID type.

When the type of search is switched from one type to the other, tables within the database are updated to be in sync.

For example, when the type of search is switched from Multi UE ID to Single UE ID type, records are migrated from the tables used by Multi UE ID type to the tables used by Single UE ID type.

While the data migration is in progress, the database can be queried to get the count of database records that are pending to be migrated.

For example, while switching from Multi UE ID based query to Single UE ID based query, the database can be queried to get the count of records pertaining to the microservice or a subscriber that are yet to be migrated from one type of search to the other.

In case of multisite deployment, the query results such as count of records can be queried for specific sites.

The database migration status can be queried or viewed through CNC Console. For more information, see Custom Query.

Managing Custom Query

Configure

The Custom Query page under Status and Query in CNC Console can be used to query the database migration status.

For more information, see Custom Query.

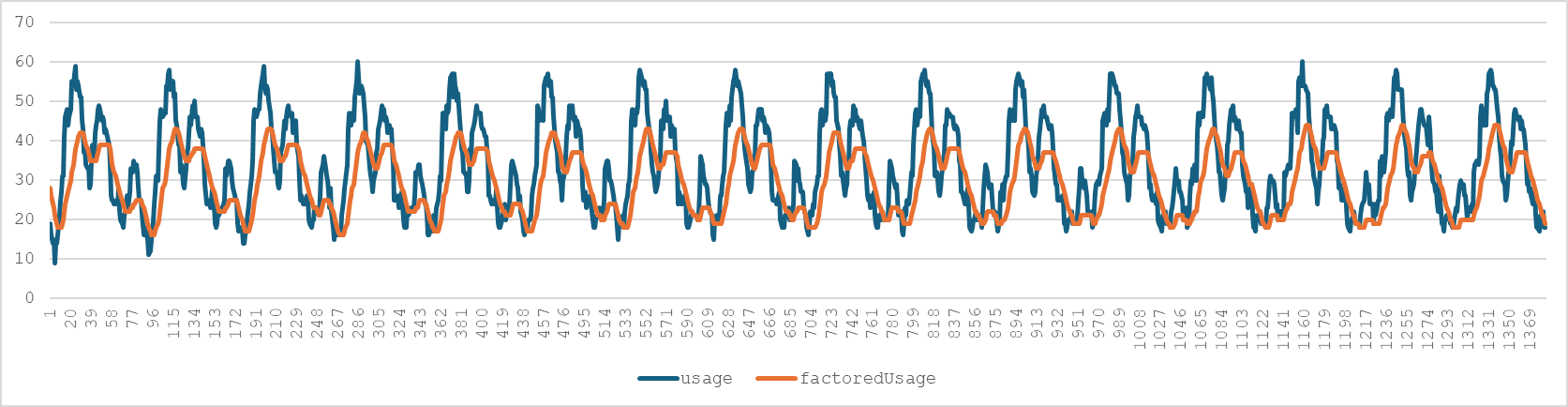

4.3 Support for Signaling and DB Access Processing Latency Histogram Metrics

To provide sufficient insight into microservice performance for incoming requests, Policy includes histogram metrics for the microservices.

These histogram metrics enable Policy to observe data distribution by measuring the latency of multiple HTTP and database requests sent and received by the microservices.
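To show how a latency distribution can be read from a histogram metric, the following sketch estimates a quantile from cumulative buckets using linear interpolation inside the matching bucket, the same general approach used for Prometheus-style histograms. The bucket boundaries and counts are made-up sample values, not actual Policy bucket configuration.

```python
import math
from typing import List, Tuple


def histogram_quantile(q: float, buckets: List[Tuple[float, float]]) -> float:
    """Estimate the q-quantile from (upper_bound, cumulative_count) pairs.

    Buckets must be sorted by upper bound and end with an infinite bound.
    """
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    prev_bound, prev_count = 0.0, 0.0
    for bound, count in buckets:
        if count >= rank:
            if math.isinf(bound) or count == prev_count:
                # No finite upper edge (or an empty bucket): report the lower edge.
                return prev_bound
            # Interpolate linearly between the bucket edges.
            return prev_bound + (bound - prev_bound) * (rank - prev_count) / (count - prev_count)
        prev_bound, prev_count = bound, count
    return prev_bound
```

With buckets `[(0.005, 10), (0.01, 60), (0.025, 90), (inf, 100)]`, the median falls in the second bucket and is interpolated between 5 ms and 10 ms.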

Histogram Metrics for Binding Service

Enable

Histogram metrics for Binding service can be enabled either using Helm configuration or using CNC Console.

To enable histogram metrics for Binding service using CNC Console, configure the ENABLE_BINDING_HTTP_HISTOGRAM_METRICS advanced settings key on the Binding Service Configuration page under Service Configurations in CNC Console.

For more information about the advanced settings keys, see Binding Service.

To enable histogram metrics for Binding service using Helm configuration, set the value of the SIGNALING_DB_HISTOGRAM_METRIC_ENABLED parameter to true.

For more information on Helm configuration, see the Binding Service Configurations section in Oracle Communications Cloud Native Core, Converged Policy Installation, Upgrade, and Fault Recovery Guide.

Metrics

- occnp_db_processing_latency_seconds

- occnp_http_in_conn_processing_latency_seconds

- occnp_http_out_conn_processing_latency_seconds

For more information, see Binding Service Metrics.

Histogram Metrics for PDS Service

- occnp_db_processing_latency_seconds

- occnp_http_in_conn_processing_latency_seconds

- occnp_http_out_conn_processing_latency_seconds

For more information, see Policy DS Metrics.

Histogram Metrics for PCRF Core Service

- occnp_diam_response_local_processing_latency_seconds

- occnp_http_in_conn_processing_latency_seconds

- occnp_http_out_conn_processing_latency_seconds

- occnp_db_processing_latency_ms

For more information, see PCRF Core Metrics.

4.4 Support for NR vs EUTRA KPIs for N7 Interface

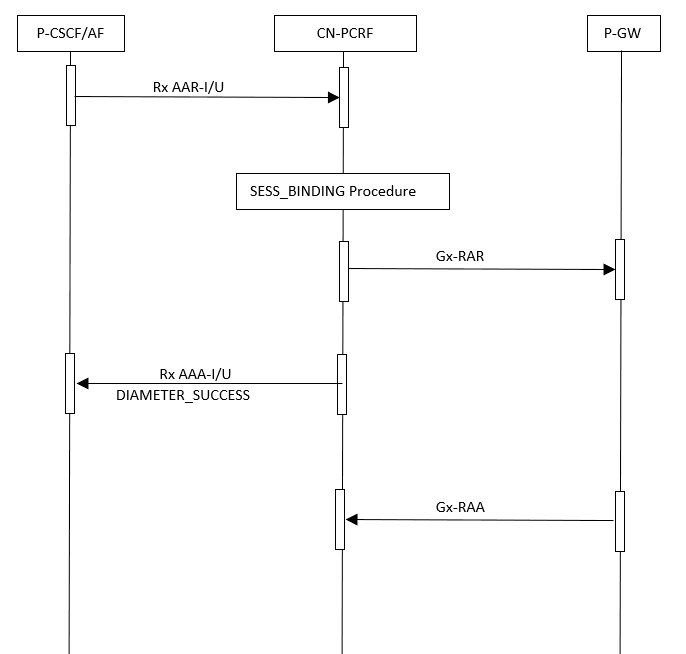

5G deployments can be broadly categorized into Non-Standalone (NSA) and Standalone (SA) architectures. NSA deployments combine a 5G Radio Access Network (RAN) with a legacy 4G LTE core network. SA deployments feature a 5G RAN and a cloud native 5G core. 5G network operators may deploy their networks using both the NSA and SA deployment strategies.

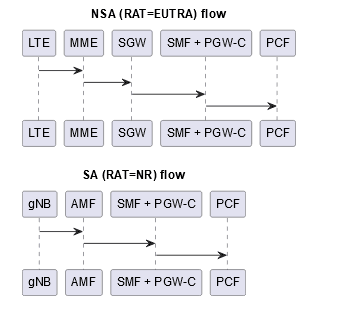

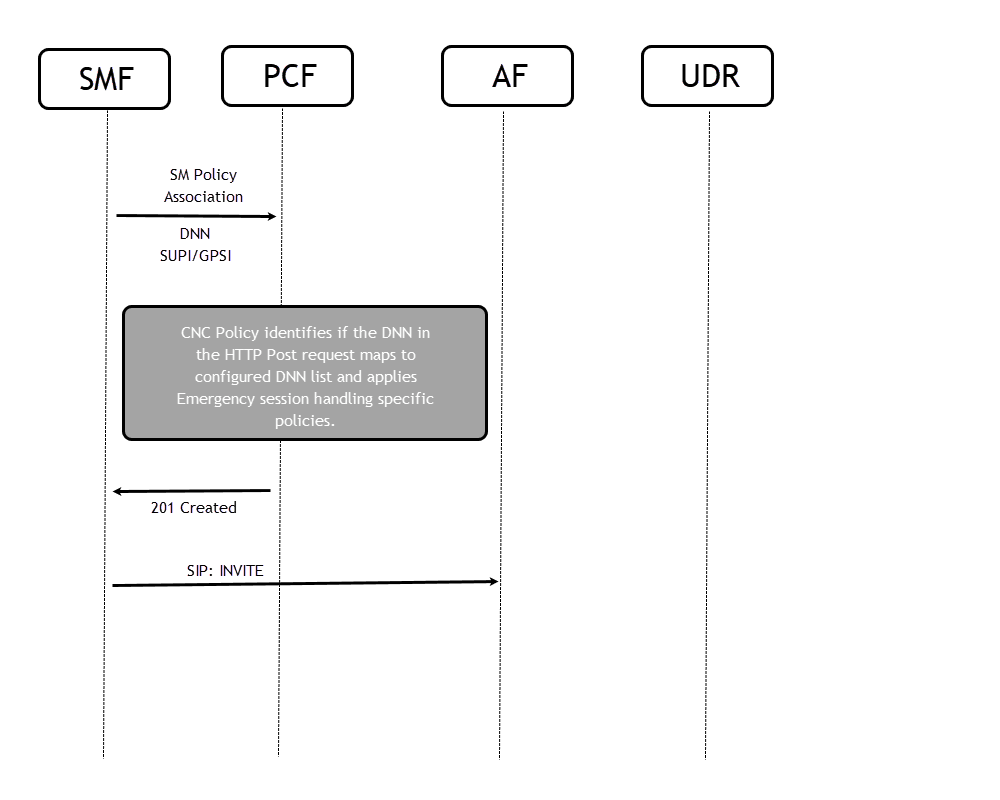

Figure 4-1 NSA and SA Flow

The NSA subscribers follow this path:

Long Term Evolution (LTE) network → Mobility Management Entity (MME) → Serving Gateway (SGW) → Session Management Function (SMF) → Policy Control Function (PCF), with the Radio Access Technology (RAT) value set to EUTRA. Evolved Universal Terrestrial Radio Access (EUTRA) is the air interface technology used in 4G LTE networks.

The SA subscribers follow this path:

Session Management Function (SMF) → PDN Gateway Control Plane (PGW-C) → Policy Control Function (PCF), with the RAT value set to NR. New Radio (NR) is the air interface technology used in 5G networks.

Currently, the KPIs in the PCF application do not differentiate between NSA and SA call flows. This feature provides KPIs for the N7 interface to differentiate between the NR and EUTRA radio access technologies in the SM service. The SM service provides KPIs that can be visualized in a Grafana dashboard and that allow measuring the SMPolicyCreate, SMPolicyUpdate, and SMPolicyDelete transactions per second (TPS) along with the RAT type (NR or EUTRA).

Note:

The error responses from the Ingress Gateway microservice do not support the newly added dimensions.

- ocpm_ingress_request_total

- ocpm_ingress_response_total

- http_in_conn_request_total

- http_in_conn_response_total

- http_in_conn_ext_request_total

- http_in_conn_ext_response_total

Table 4-2 SM Dimensions

| Dimension Name | Values | Description |
|---|---|---|
| accessType, access_type | | Supported access types. |
| policyControlRequestTrigger | | Policy Control Request Trigger can have a single value or a comma-separated list of values ordered alphabetically. Metrics using this dimension are not pegged if this field is not populated with either of the mandatory values. The mandatory values can come individually, together, or along with other values. When a list of values is received, at least one of the mandatory values must be present; otherwise, the metric is not pegged. If the received number of values for this dimension is greater than 5, the mandatory values are prioritized first among the received values. The remaining values are ordered alphabetically and combined with the previously retrieved mandatory ones until there are no remaining values or the maximum number of values allowed (5) is reached, whichever occurs first. If more values exist after this process, they are ignored. For an example, see Table 4-3. For a delete request, this dimension can be empty; otherwise the rules in Table. For requests where the value is not validated, sanitization is done, and invalid values are removed and ignored. |
| rat_type, ratType | | Supported Radio Access Type (RAT). |
| sourceAccessType | Single value | The previous access type before the update. The value can be empty if it cannot be retrieved from a session. Dimension: accessType |
| sourceRatType | Single value | The previous radio access type before the update. The value can be empty if it cannot be retrieved from a session. Dimension: ratType |
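The selection rules for the policyControlRequestTrigger dimension can be sketched as below. The function name is hypothetical, and the set of mandatory values is passed in as a parameter because it is not listed in this section; the values used in the usage note are purely illustrative.

```python
from typing import Iterable, Optional, Set

MAX_VALUES = 5  # maximum number of trigger values kept in the dimension


def normalize_triggers(received: Iterable[str], mandatory: Set[str]) -> Optional[str]:
    """Return the dimension value, or None when the metric would not be pegged."""
    values = set(received)
    present_mandatory = sorted(values & mandatory)
    if not present_mandatory:
        return None  # no mandatory trigger present, so the metric is not pegged
    if len(values) <= MAX_VALUES:
        # Small lists are simply ordered alphabetically.
        return ",".join(sorted(values))
    # Too many values: mandatory ones first, then the rest alphabetically, capped at 5.
    remaining = sorted(values - mandatory)
    selected = (present_mandatory + remaining)[:MAX_VALUES]
    return ",".join(selected)
```

For instance, assuming a hypothetical mandatory set of `{"AC_TY_CH", "RAT_TY_CH"}`, a request carrying six triggers that include `RAT_TY_CH` keeps `RAT_TY_CH` first and then the four alphabetically smallest remaining triggers.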

A sample metric:

occnp_http_in_conn_ext_request_total{accessType="NON_3GPP_ACCESS",application="pcf_smservice",operationType="update",policyControlRequestTrigger="AC_TY_CH,NO_CREDIT,RAT_TY_CH,PRA_CH,UE_IP_CH",ratType="NR",sourceAccessType="3GPP_ACCESS",sourceRatType="EUTRA"} 1.0

For more detailed information about the metrics, see SM Service Metrics section.

For more information about the accessType, ratType, and policyControlRequestTrigger dimensions, see the CNC Policy Metrics section.

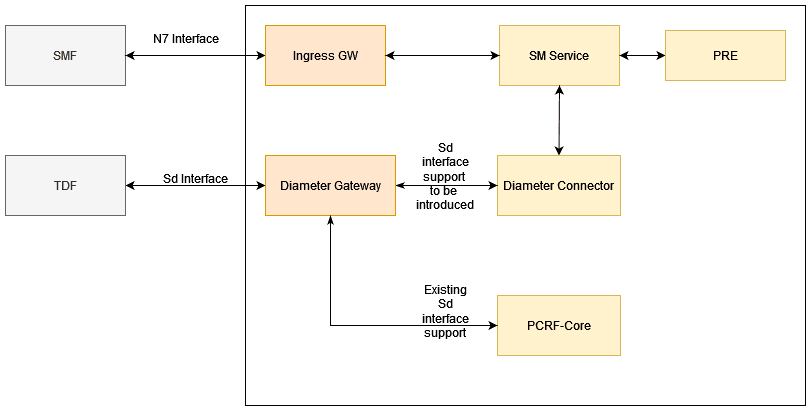

4.5 Support of Traffic Detection on SMF-N7 and TDF using Sd Interface

Important:

Custom support for traffic detection on SMF-N7 and TDF using the Sd interface is an experimental feature. It is subject to change and is not supported for production use (lab use only).

The CnPolicy provides ADC rules to the Traffic Detection Function (TDF) by using the Sd interface, which enables CnPolicy to communicate with the TDF. CnPolicy uses a TDF to detect and identify specific types of network traffic. This allows it to understand which application or service is generating the traffic. Based on the detected traffic type, CnPolicy applies predefined policies or rules to manage the traffic flow. In solicited reporting, CnPolicy instructs the TDF to report traffic events, including application start or stop, based on the appropriate ADC rules.

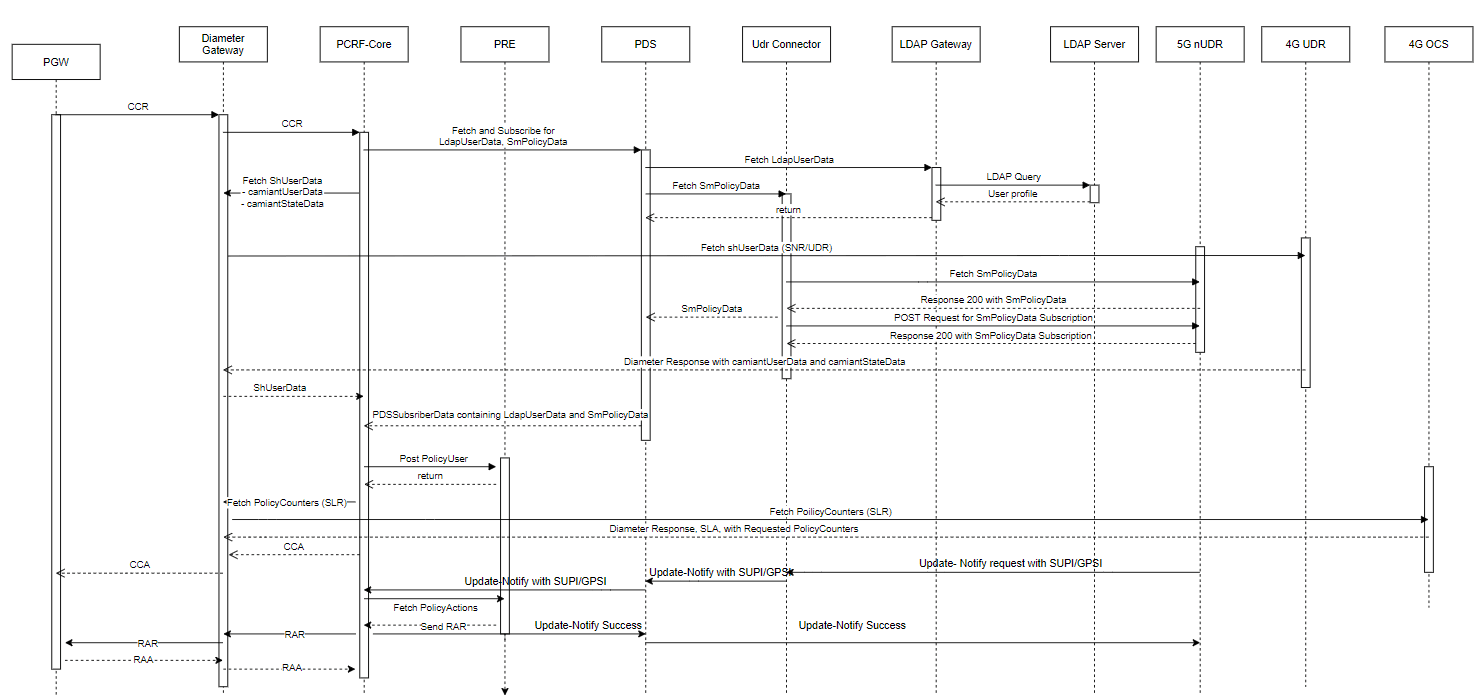

The support for Sd interface already exists between PCRF Core and Diameter Gateway, for a PCRF deployment. For more details about it, see Support for Sd Interface in PCRF section.

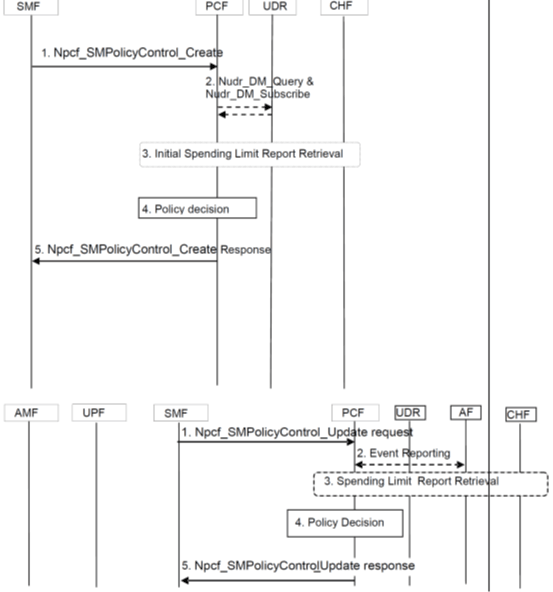

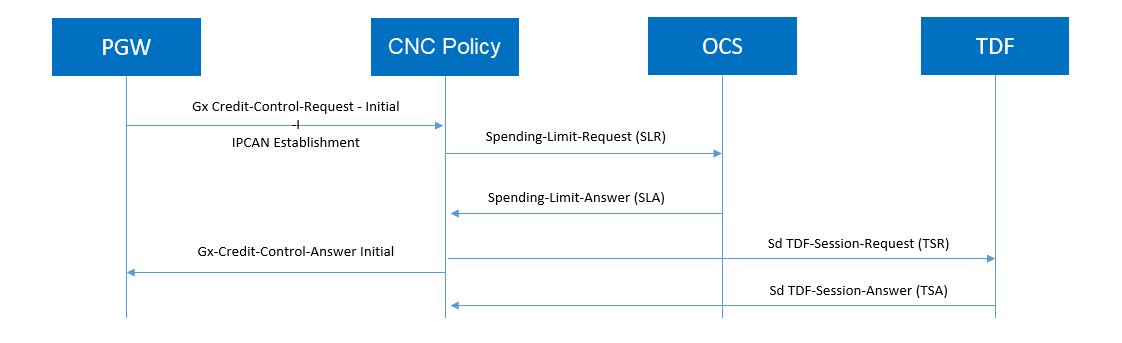

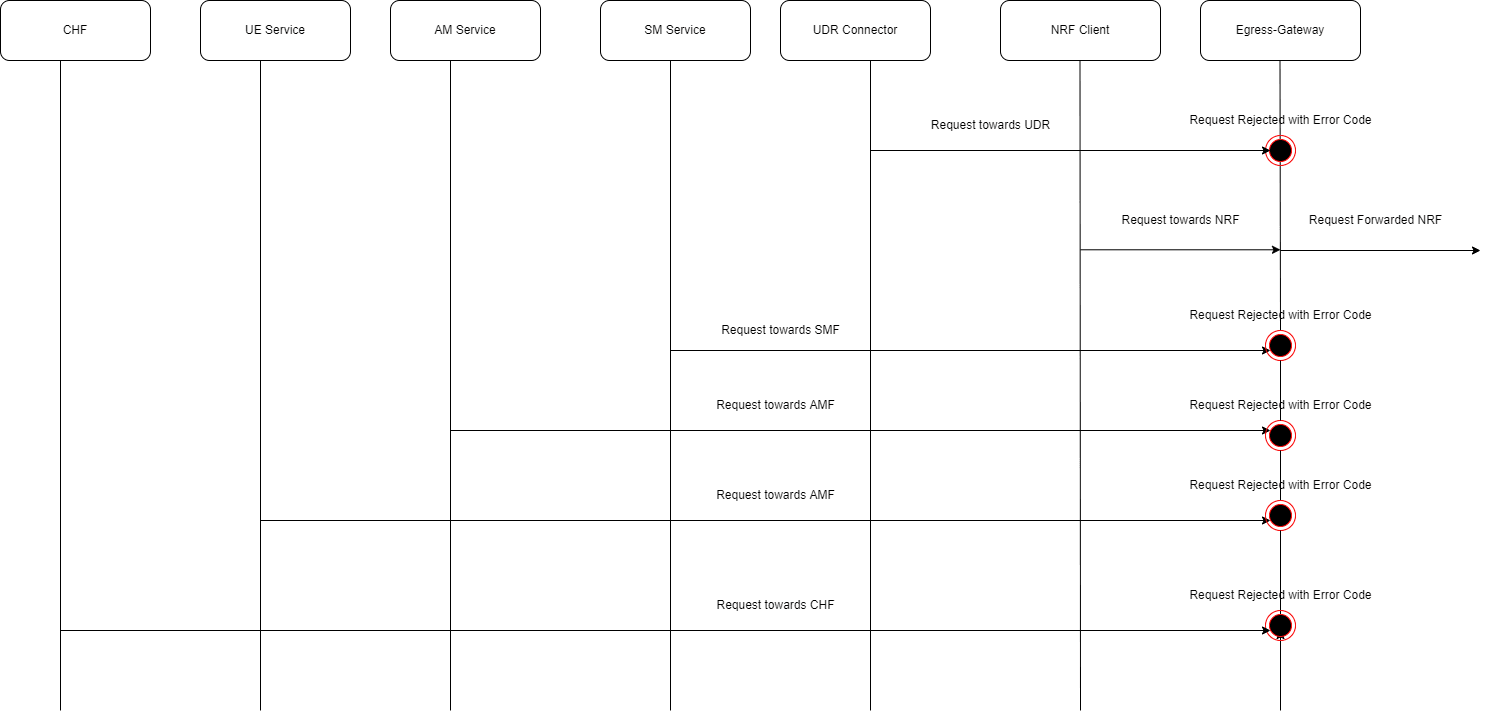

Figure 4-2 Sd interface in PCF

- TDF session establishment between CnPolicy and TDF, only when the session request is initiated by PCF. For changes made to this procedure, refer to the Sd Session Establishment from PCF to TDF call flow.

- Update of a TDF session. Refer to the Sd Session Modification from PCF to TDF when PLMN Change and Sd Session Modification from PCF to TDF when Update Notification from OCS-Sy SNR call flows.

- TDF session termination requests initiated by PCF. Refer to the Sd Session Termination Initiated by CnPolicy call flow.

- TDF session termination requests initiated by TDF. Refer to the Sd Session Termination Initiated by TDF call flow.

- Provisioning of ADC rules from CnPolicy for traffic detection and enforcement at the TDF.

- Reporting of the start and stop of detected applications, and transfer of service data flow descriptions for the detected applications, if available, from the TDF to CnPolicy.

Call Flows

This section describes the different SM service call flows that are modified to support TDF session in CnPolicy.

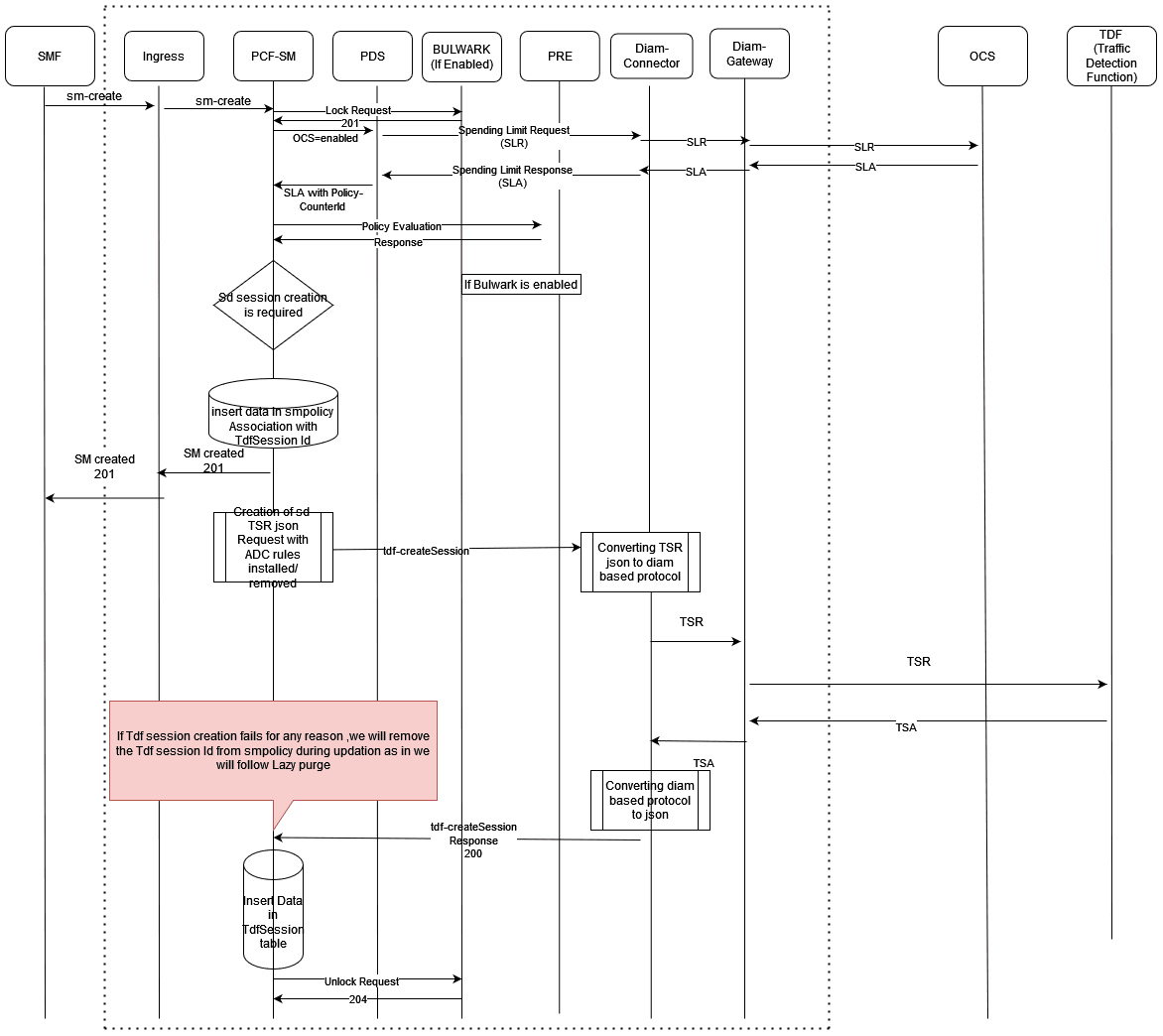

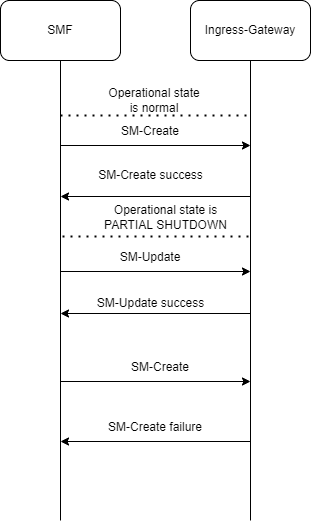

Sd Session Establishment from PCF to TDF

Note:

CnPolicy supports one Sd session per PDU session from SMF-N7. In case of multiple PDU sessions, the new Sd session is established to the same TDF for which the IPCAN session already exists.

The following procedure describes how CnPolicy initiates an Sd session from PCF-SM towards the TDF by sending an ADC rule in a TDF-Session-Request (TSR) message to the TDF and receiving a successful TDF-Session-Answer (TSA) message at PCF-SM:

Figure 4-3 Sd Session Establishment (PCF→ TDF)

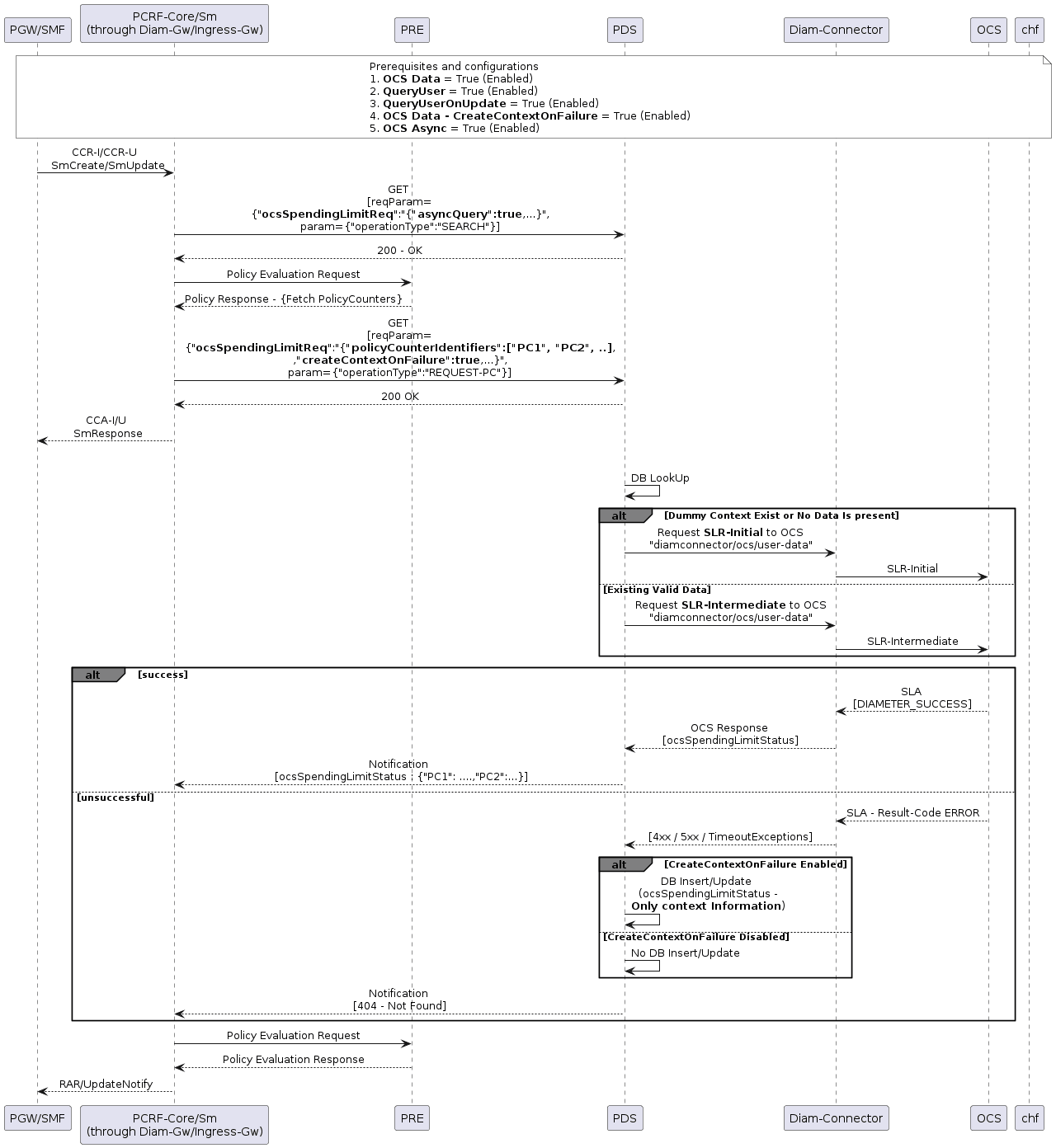

- SMF sends a SM-Create request to PCF-SM.

- If the OCS is enabled, then Spending Limit Request (SLR) is sent by the Diameter Connector to OCS.

- A Spending Limit Answer (SLA) is sent by OCS to Diameter Connector.

- PCF-SM on receiving the Policy counter in SLA, initiates a policy evaluation request to PRE.

- PCF-SM checks for any Sd session creation requirement in the PRE response.

- If the Sd session needs to be created, a TDF session ID is created and added to the smPolicy association.

- The smPolicy association along with the TDF session ID is stored in the database.

- PCF-SM responds with HTTP 201 SM Create to SMF.

- PCF-SM creates an Sd TDF-Session-Request (TSR) JSON text with Application Detection and Control (ADC) rules, and then initiates a tdf-creation request with the TSR JSON text toward Diameter Connector.

- The Diameter Connector converts the TSR JSON to the Diameter protocol and sends a TSR request toward the Traffic Detection Function (TDF).

- The TDF responds with a TDF-Session-Answer (TSA) to Diameter Gateway, and Diameter Connector converts this TSA to JSON text.

- Diameter Connector responds to PCF-SM with HTTP 200 OK and the TSA JSON text. At PCF-SM, the text is stored in the TDFSession table in the database.

- If TDF session creation fails, the TDF session ID is removed from the smPolicy association during the database lazy purge.

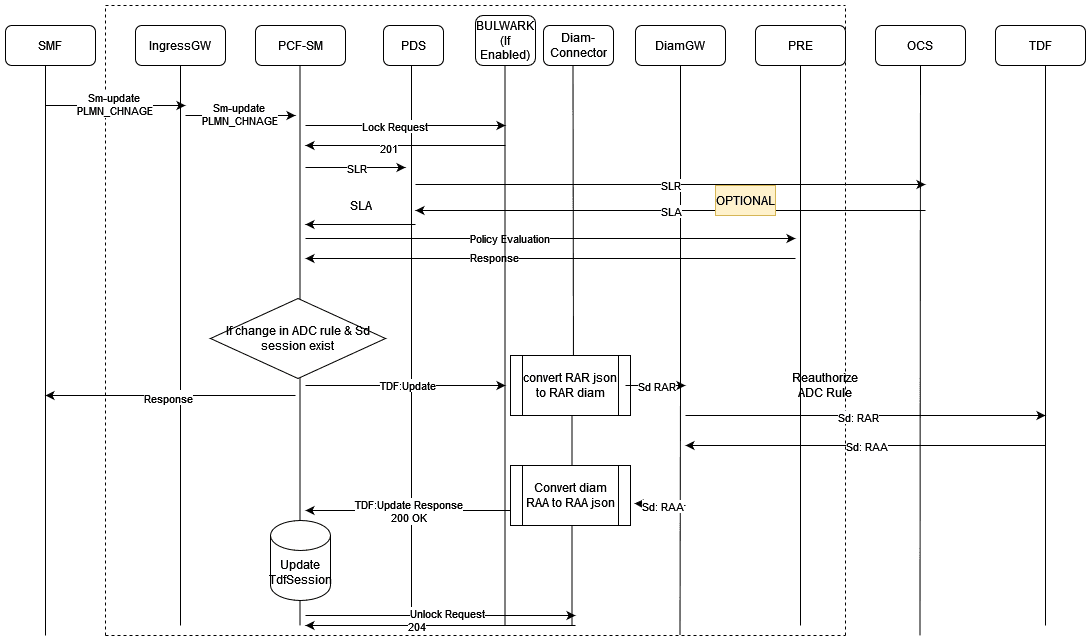

Sd Session Modification from PCF to TDF

Sd Session Modification from PCF to TDF when PLMN Change

Figure 4-4 Sd Session Modification from PCF to TDF when PLMN Change

- The SMF initiates an SM update request toward PCF on a change in the Public Land Mobile Network (PLMN).

- On receiving the SLA response from OCS, the PCF-SM service in PCF initiates a policy evaluation towards PDS.

- After receiving the response from PDS, PCF-SM checks if there are any ADC rule changes and if the Sd session exists.

- If the condition is true, then PCF-SM sends a TDF update request towards the TDF through Diameter Connector.

- Diameter Connector sends an Sd RAR request towards Diameter Gateway.

- Diameter Gateway forwards this Sd RAR request to the TDF. The ADC rules are reauthorized at the TDF.

- TDF responds with an Sd RAA towards Diameter Gateway.

- Diameter Gateway forwards this to Diameter Connector.

- Diameter Connector converts the Sd RAA response object to JSON format and sends it to PCF-SM.

- PCF-SM stores the updated TDF session in the database.

Sd Session Modification on Update Notification from OCS-Sy SNR:

Note:

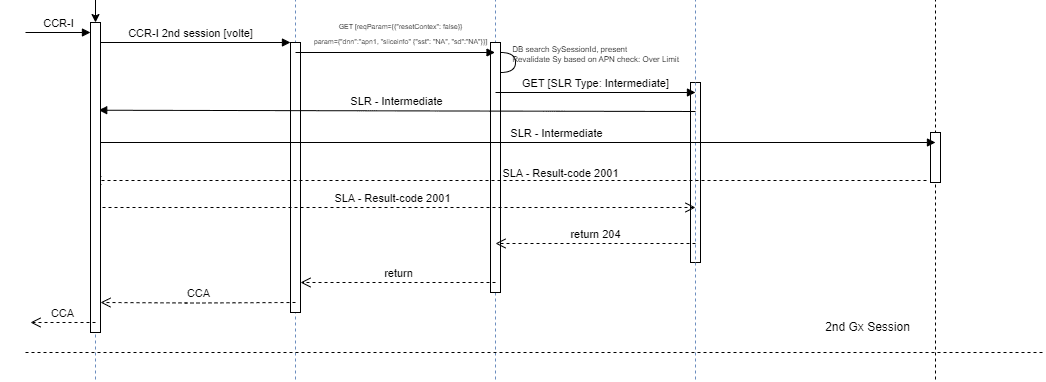

If the TDF session does not already exist, this procedure does not create a new TDF session.

Figure 4-5 Sd Session Modification on Update Notification from OCS-Sy SNR

- When OCS initiates an SNR request toward PCF, PCF-SM initiates a policy evaluation request toward PDS.

- In case of policy changes from PDS, PCF-SM triggers the SM update notify request toward SMF. PCF-SM also checks if there are any ADC rule changes and if the Sd session exists.

- If the condition is true, then PCF-SM sends a TDF update request towards the TDF through Diameter Connector.

- Diameter Connector sends an Sd RAR request towards Diameter Gateway.

- Diameter Gateway forwards this Sd RAR request to the TDF. The ADC rules are reauthorized at the TDF.

- TDF responds with an Sd RAA towards Diameter Gateway.

- Diameter Gateway forwards this to Diameter Connector.

- Diameter Connector converts the Sd RAA response object to JSON format and sends it to PCF-SM.

- PCF-SM stores the updated TDF session in the database.

Sd Session Termination Initiated by CnPolicy

Session-Release-Cause attribute set to IP_CAN_SESSION_TERMINATION towards TDF.

CnPolicy sends the delete response to SMF over the N7 interface. It also supports receiving and processing Sd CCR-T requests that have the CC-Request-Type attribute set to TERMINATION_REQUEST.

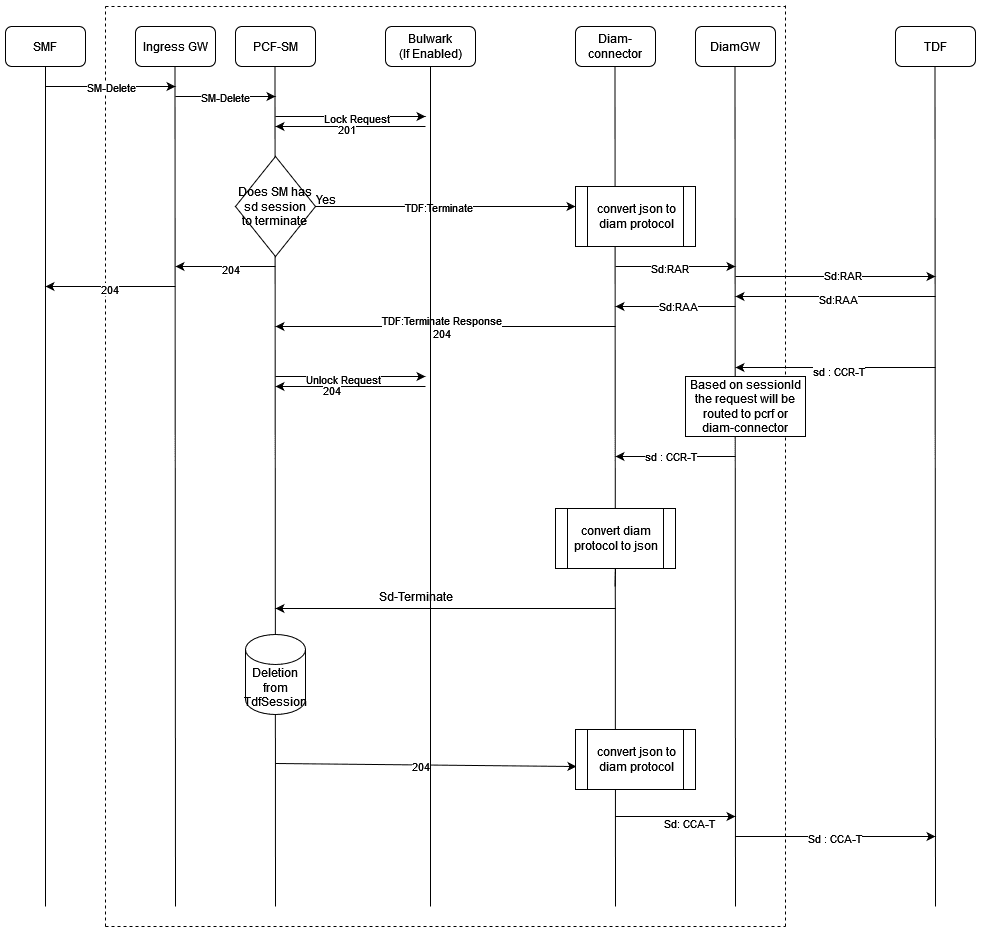

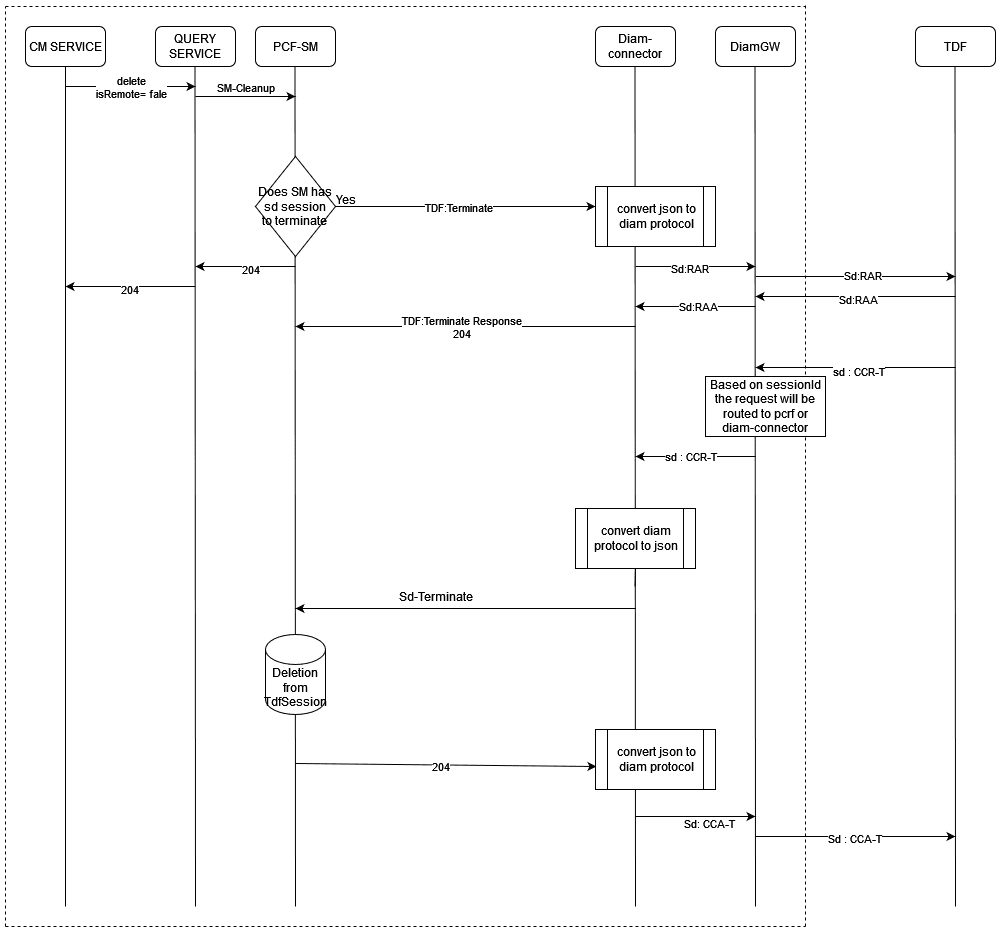

Figure 4-6 Sd Session Termination (PCF → TDF)

- SMF sends an SM-Delete request to PCF-SM.

- PCF-SM checks if the SM session has an Sd session to terminate. If one exists, it sends a TDF terminate request to Diameter Connector.

- Diameter Connector converts the JSON to the Diameter protocol and sends an Sd RAR towards Diameter Gateway.

- On receiving a successful Sd RAA response, Diameter Gateway forwards it to Diameter Connector.

- Diameter Connector responds with TDF terminate to PCF-SM, and PCF-SM also responds with terminate success to SMF.

- TDF sends an Sd: CCR-T request to Diameter Gateway. Based on the session ID, Diameter Gateway routes the request to either PCRF Core or the Diameter Connector service.

- If Diameter Connector receives an Sd: CCR-T request from Diameter Gateway, it converts the Diameter protocol request to JSON and sends it to PCF-SM.

- PCF-SM deletes the associated TDF session in the database and sends a request to Diameter Connector.

- Diameter Connector converts the JSON data to the Diameter protocol and sends an Sd: CCA-T to Diameter Gateway.

- Diameter Gateway forwards the Sd: CCA-T to TDF.

- If Diameter Gateway receives an Sd RAA failure response, the Sd session is deleted and PCF does not wait for the CCR-T from TDF.

Sd Session Termination Initiated by TDF

Figure 4-7 Sd Session Termination (TDF → PCF)

- TDF initiates an Sd: CCR-T request toward Diameter Gateway.

- Diameter Gateway sends this request to Diameter Connector.

- Based on the session ID, Diameter Connector re-routes the request.

Diameter Gateway Routing Decision for CCR-U or CCR-T from TDF

On receiving a CCR-U or CCR-T request from TDF, the Diameter Gateway has to decide whether the request must be routed to PCRF Core or Diameter Connector. It uses the <optional value> of the Diameter session-id AVP, which is used to identify a specific session. An encoded <optional value> is appended to the session-id and added to the Diameter Gateway header to differentiate between PCRF Core and PCF, so that the requests can be re-routed accordingly.

<option value>.

Figure 4-8 Flowchart for Diameter Gateway Routing Decision

Diameter Gateway service follows the RFC 6733 recommendation for the Session-id AVP format: <DiameterIdentity>;<high 32 bits>;<low 32 bits>[;<optional value>].

- <high 32 bits> and <low 32 bits> are decimal representations of the high and low 32 bits of a monotonically increasing 64-bit value. The 64-bit value is rendered in two parts to simplify formatting by 32-bit processors. At startup, the high 32 bits of the 64-bit value may be initialized to the time in NTP format (RFC 5905), and the low 32 bits may be initialized to zero. For practical purposes, this eliminates the possibility of overlapping Session-Ids after a reboot, assuming the reboot process takes longer than a second. Alternatively, an implementation may keep track of the increasing value in non-volatile memory.

- <optional value> is implementation specific.

A sample session-id AVP format:

sd1.oacle.com;5dd8b4b775-fvwlg;1728285868;efac51ae-3d26-4632-9d3b-7ee3a5bbd207_101NTAyU00=

Table 4-4 Session-id AVP Format

| Diameter Identity | High 32 bits | Low 32 bits | <option value> |

|---|---|---|---|

| sd1.oacle.com | 5dd8b4b775-fvwlg | 1728285868 | efac51ae-3d26-4632-9d3b-7ee3a5bbd207_101NTAyU00= |

Table 4-5 <option value> Format

| AssociationID | Encoding_version_number | Number of Elements | Encoded_Key_Value_Pair |

|---|---|---|---|

| This is a randomly generated UUID. Example: efac51ae-3d26-4632-9d3b-7ee3a5bbd207 | This determines the encryption or decryption algorithm used for the particular ID to get the key-value pair information. Example: 1 | This determines how many key-value pairs exist in the encoded format in encoded_key_value_pair. Example: 01 | This contains the key-value pair in a specific format used to decode the data. The |

Table 4-6 Decoded_Key_Value_Pair as TagID-Length-Value (TLV) format

| AssociationIdTLV | length_of_AssociationIdTLV | Value |

|---|---|---|

| This is the enum for tag IDs. Example: In decoded | This represents the length of the value string. Example: In decoded | This holds the value. Example: In decoded Note: Currently only SM value is supported. |
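As an illustration of the Session-id layout in Tables 4-4 and 4-5, the sample value can be taken apart as sketched below. This is a hedged sketch, not the Diameter Gateway's actual decoder: the helper names are hypothetical, and the field widths (one-character encoding version, two-character element count) and the use of Base64 for the encoded key-value pair are assumptions inferred from the sample, not stated in the source.

```python
import base64
from typing import NamedTuple, Optional, Tuple


class SessionId(NamedTuple):
    diameter_identity: str
    high: str
    low: str
    optional_value: Optional[str]


def parse_session_id(session_id: str) -> SessionId:
    """Split a Session-Id into <DiameterIdentity>;<high>;<low>[;<optional value>]."""
    parts = session_id.split(";", 3)
    if len(parts) < 3:
        raise ValueError("Session-Id needs at least three ';'-separated fields")
    optional = parts[3] if len(parts) == 4 else None
    return SessionId(parts[0], parts[1], parts[2], optional)


def split_option_value(option_value: str) -> Tuple[str, str, int, str]:
    """Assumed layout: <AssociationID>_<version:1><count:2><Base64 payload>."""
    association_id, _, rest = option_value.partition("_")
    version, count, encoded = rest[0], rest[1:3], rest[3:]
    # Assumption: the payload is Base64-encoded ASCII text.
    decoded = base64.b64decode(encoded).decode("ascii")
    return association_id, version, int(count), decoded


sample = ("sd1.oacle.com;5dd8b4b775-fvwlg;1728285868;"
          "efac51ae-3d26-4632-9d3b-7ee3a5bbd207_101NTAyU00=")
sid = parse_session_id(sample)
print(split_option_value(sid.optional_value))
```

The first three fields are kept as strings because the sample's second field is not a plain decimal number, so converting it to an integer would fail.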

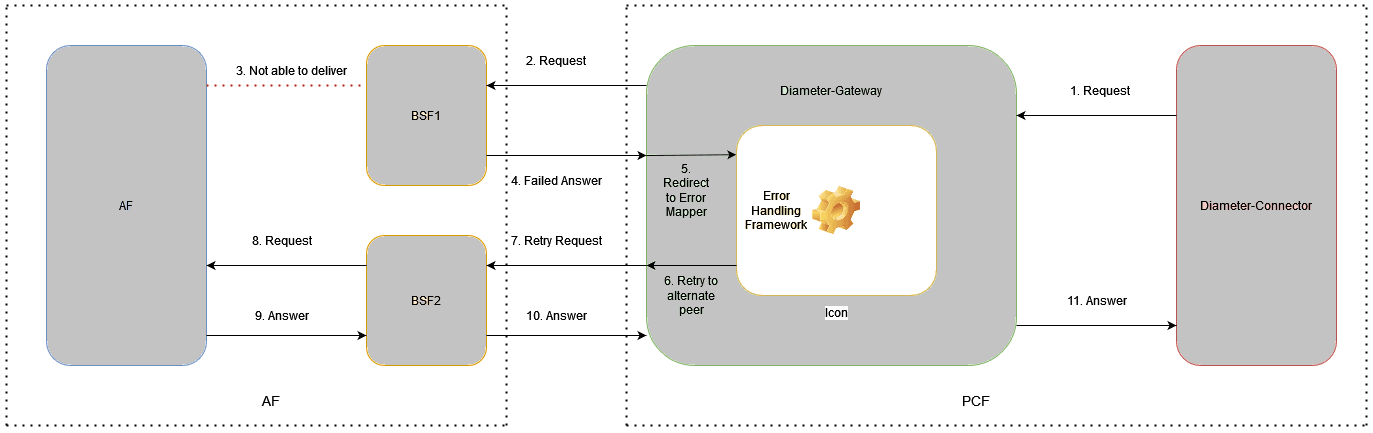

Diameter Gateway Force Routing for Sd Interface

In a Policy converged mode deployment, both the HTTP and Diameter requests are handled by CnPolicy. In this mode of deployment, if the Diameter Gateway is unable to identify the right session for the Sd CCR-T or CCR-U requests, CnPolicy uses a force routing mechanism. By default, this mechanism is disabled. It can be enabled by adding the following advanced setting keys to the Diameter Gateway service configurations in CNC Console.

Table 4-7 Parameters for Advanced Settings

| Key | Value |

|---|---|

| DIAMETER.gateway.sd.session.owner.failure.forcerouting.enabled | This is used to enable force routing to PCRF Core and/or Diameter Connector for the Sd interface. Allowed values are true or false. Default Value: false |

| DIAMETER.gateway.sd.session.owner.failure.forcerouting.service.list | This is used to set the force routing to PCRF Core and/or Diameter Connector. The possible list of values: |

| DIAMETER.gateway.sd.session.owner.failure.forcerouting.retry.timeout | This is used to enable a force routing retry on the next service if the currently selected service throws a timeout error. Allowed values are true or false. Default Value: true |

| DIAMETER.gateway.sd.session.owner.failure.forcerouting.retry.diameter.unknown.session.id | This is used to enable a force routing retry on the next service if the currently selected service throws DIAMETER_UNKNOWN_SESSION_ID. Allowed values are true or false. Default Value: true |

| DIAMETER.gateway.sd.session.owner.failure.forcerouting.retry.error.code.list | Provides the list of Diameter error codes for which force routing must be retried. If the Diameter error code returned by the currently selected service is present in this list, force routing is retried on the next service. Allowed value is a string, and the default is an empty string. Example: 3004, 5012 Default Value: "" |

Note:

If the TDF sends an empty or null value, or encodes SESSION_OWNER as UNKNOWN in the session-id, the Diameter Gateway cannot find the session owner.

For more information about the advanced settings in Diameter Gateway service, see Settings section.
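How the retry-related keys in Table 4-7 could combine into a single decision is sketched below. This is only an illustration of the documented semantics under stated assumptions: the function names, the representation of settings as a string-to-string mapping, and the `"timeout"` marker for a timed-out attempt are hypothetical, and the real Diameter Gateway logic may differ.

```python
from typing import Mapping, Union

PREFIX = "DIAMETER.gateway.sd.session.owner.failure.forcerouting."
DIAMETER_UNKNOWN_SESSION_ID = 5002  # result code defined in RFC 6733


def _flag(settings: Mapping[str, str], key: str, default: bool) -> bool:
    """Read a true/false advanced setting, falling back to its documented default."""
    return settings.get(PREFIX + key, str(default)).strip().lower() == "true"


def should_retry_next_service(settings: Mapping[str, str],
                              outcome: Union[str, int]) -> bool:
    """Decide whether force routing is retried on the next service.

    `outcome` is either the string "timeout" or a Diameter result code
    returned by the currently selected service (hypothetical convention).
    """
    if not _flag(settings, "enabled", False):
        return False  # force routing is disabled by default
    if outcome == "timeout":
        return _flag(settings, "retry.timeout", True)
    if outcome == DIAMETER_UNKNOWN_SESSION_ID and _flag(
            settings, "retry.diameter.unknown.session.id", True):
        return True
    # Comma-separated list of extra result codes, for example "3004, 5012".
    raw = settings.get(PREFIX + "retry.error.code.list", "")
    codes = {int(code) for code in raw.split(",") if code.strip()}
    return outcome in codes
```

With only the `enabled` key set to `true`, this sketch retries on timeouts and on DIAMETER_UNKNOWN_SESSION_ID, because both retry keys default to `true`.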

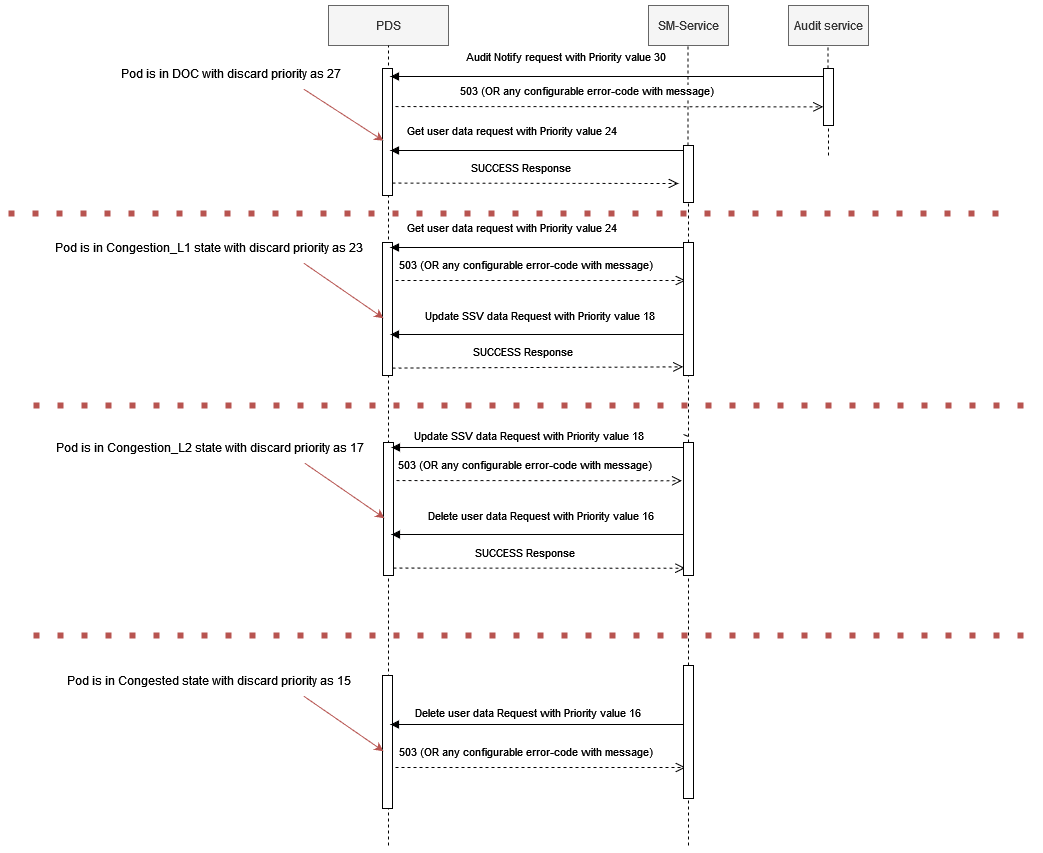

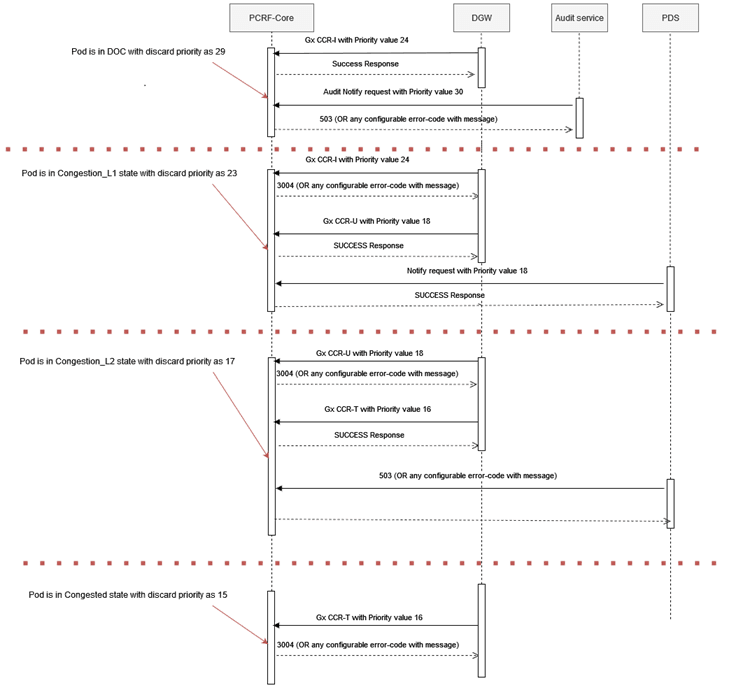

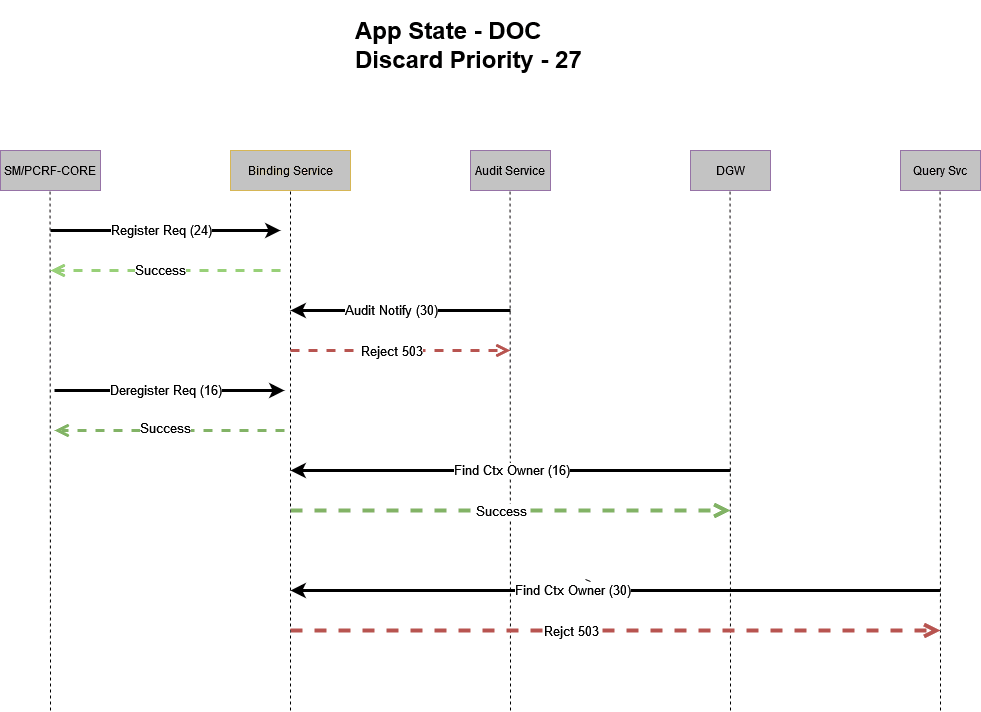

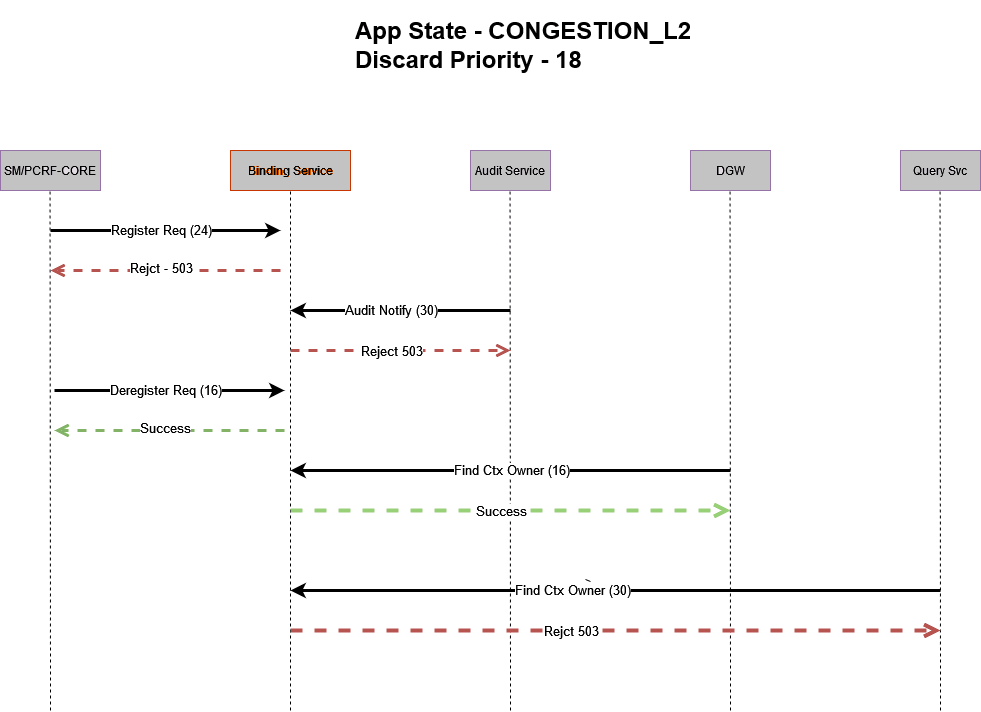

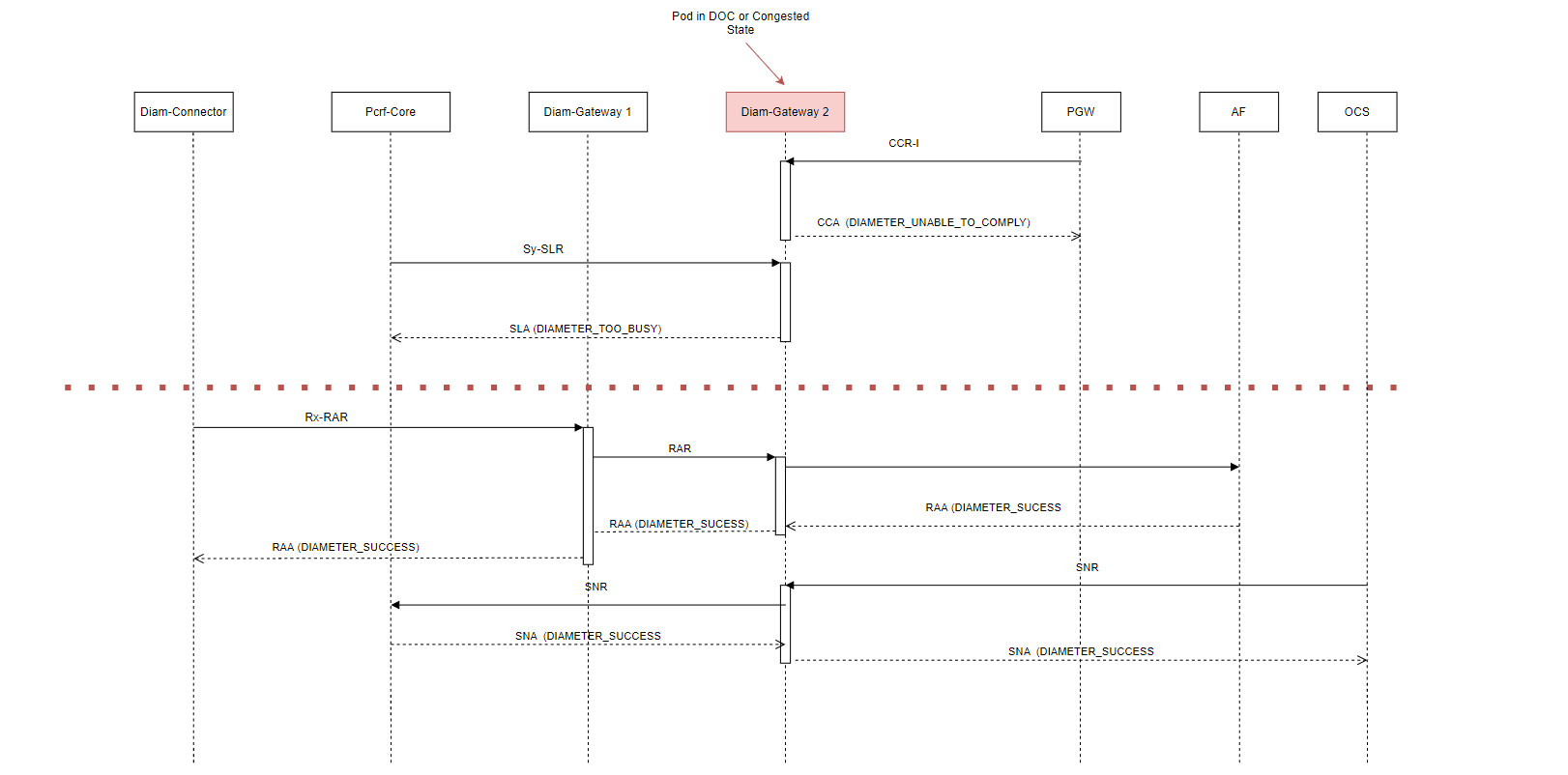

Changes in Congestion Control feature

SM Service Congestion Control

When SM service is in a congestion state, messages are either accepted or rejected based on request priority and discard priority. The request priority is decided based on the following priority order: oc-message-priority header → 3gpp-sbi-message-priority header → advanced settings priority → default priority.

Table 4-8 SM Advanced Settings

| Key | Value |

|---|---|

| SM.TDF.SESSION.DELETE.PRIORITY | 16 |

| SM.TDF.SESSION.UPDATE.PRIORITY | 18 |

For more details about the Congestion Control support in SM Service, refer to SM Service Pod Congestion Control.
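The priority order used by SM service (oc-message-priority header, then 3gpp-sbi-message-priority header, then advanced settings priority, then default priority) amounts to a first-match lookup. The sketch below assumes lower-cased header names in a plain mapping; the function name and the `DEFAULT_PRIORITY` value are hypothetical and are not taken from the product.

```python
from typing import Mapping, Optional

DEFAULT_PRIORITY = 24  # hypothetical default; this section does not state the real one


def resolve_request_priority(headers: Mapping[str, str],
                             advanced_priority: Optional[int],
                             default: int = DEFAULT_PRIORITY) -> int:
    """Return the first priority found, following the documented order."""
    for name in ("oc-message-priority", "3gpp-sbi-message-priority"):
        value = headers.get(name)
        if value is not None:
            return int(value)
    if advanced_priority is not None:
        # For example, the value configured for SM.TDF.SESSION.UPDATE.PRIORITY.
        return advanced_priority
    return default
```

For a TDF session update with no priority headers, the sketch returns the configured advanced settings value, such as 18 from Table 4-8.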

Diameter Connector Congestion Control

When Diameter Connector service is in a congestion state, messages are either accepted or rejected based on request priority and discard priority. The request priority is decided based on the following priority order: oc-message-priority header/OC-Message-Priority AVP → advanced settings priority → default priority.

Table 4-9 Diameter Connector Advanced keys

| Key | Value |

|---|---|

| SM.TDF.CREATE.SESSION.REQUEST.PRIORITY | 24 |

| SM.TDF.TERMINATE.REQUEST.PRIORITY | 16 |

| SM.TDF.UPDATE.REQUEST.PRIORITY | 18 |

| SD.CCR.TERMINATE.REQUEST.PRIORITY | 16 |

| SD.CCR.UPDATE.REQUEST.PRIORITY | 18 |

For more details about the Congestion Control support in Diameter Connector, refer to Diameter Connector Pod Congestion Control.

Diameter Gateway Congestion Control

For more details about the Congestion Control support in Diameter Gateway, refer to Diameter Pod Congestion Control.

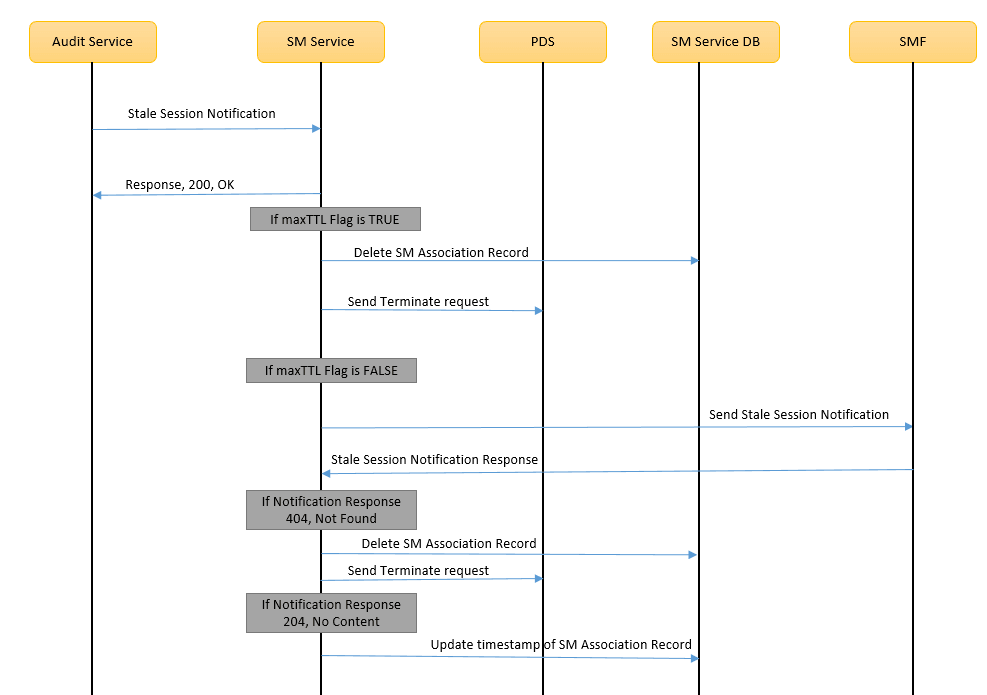

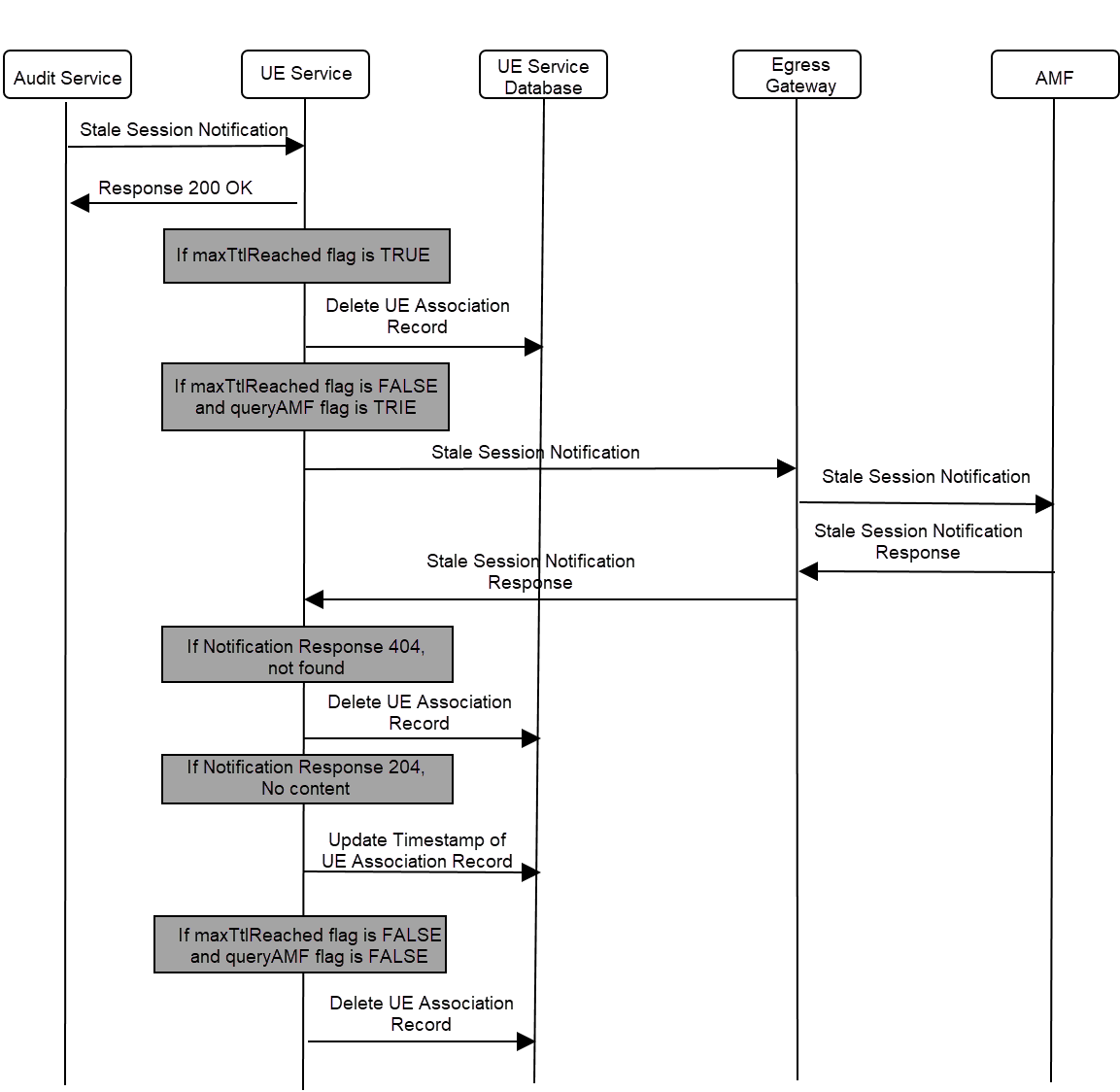

Changes in Audit Service for TDF Stale Session Cleanup in Database

Audit service supports periodically auditing the TDF Session table and removing the stale sessions. Based on the session's LastAccessTime information, the Audit service determines whether the session is stale. If it is a stale session, the Audit service sends a notification to SM service to clean up the stale session.

For more details about editing the audit information related to TDF Session in CNC Console, refer to PCF Session Management page.
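The staleness check based on LastAccessTime can be pictured as a simple age comparison. The sketch below is an assumption-laden illustration: the function name, the `max_idle` threshold parameter, and the in-memory mapping of session IDs to timestamps are hypothetical, and the actual audit criteria are configured in CNC Console.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Mapping


def find_stale_sessions(last_access: Mapping[str, datetime],
                        now: datetime,
                        max_idle: timedelta) -> List[str]:
    """Return IDs of sessions whose last access is older than max_idle."""
    return [session_id
            for session_id, accessed in last_access.items()
            if now - accessed > max_idle]


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
sessions = {
    "tdf-1": now - timedelta(hours=2),     # idle for two hours
    "tdf-2": now - timedelta(minutes=10),  # recently accessed
}
stale = find_stale_sessions(sessions, now, timedelta(hours=1))
```

Each ID in `stale` would then be the subject of a notification to SM service for cleanup.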

CNC Console Configurations

The N7-Sd feature in PCF is not enabled by default. It can be enabled by adding the TDF.TDF_ENALBLED advanced setting key in CNC Console.

In SM service, the data stored in the SmAssociation table in the database can be compressed. This can also be applied to the TdfSession table. To enable data compression in SM service, enable the System.Data Compression Scheme field in PCF Session Management service.

For more information to enable this feature and also the data compression in SM service, see PCF Session Management page in CNC Console.

The traffic profile containing the ADC rules can be created using the Traffic Profile page in the CNC Console. The ADC rules can also be configured using the REST APIs. For more information, see "PCF Traffic Profile ADC Rule", "PCF Predefined ADC Rule", and "PCF Predefined ADC Rule Base" sections in Oracle Communications Cloud Native Core, Converged Policy REST Specification Guide.

HTTP and Diameter Result Codes Mapping

In CnPolicy converged deployment, both the HTTP and Diameter requests are handled by the application. The errors in Diameter Connector are handled by the result code AVP in Diameter messages, which are mapped to appropriate HTTP response code.

Diameter Gateway maps Diameter error codes to HTTP status codes. This table provides the mapping of Diameter result code to HTTP response code when the Diameter Connector sends response to SM service.

Table 4-10 Diameter Result Codes from TDF toward SM service

| Diameter Result Code | HTTP Response Code | Diameter Connector Response Body |

|---|---|---|

| DIAMETER_SUCCESS | 200 | No response body |

| DIAMETER_MISSING_AVP / DIAMETER_UNABLE_TO_COMPLY / DIAMETER_UNABLE_TO_DELIVER / DIAMETER_TOO_BUSY / DIAMETER_LOOP_DETECTED / DIAMETER_APPLICATION_UNSUPPORTED | 500 | |

| DIAMETER_UNKNOWN_SESSION_ID | 404 | |

This table describes the various error 'cause' values that the Diameter Connector can send in its response body to SM service.

Table 4-11 Error Cause Diameter Connector to SM service

| Diameter Error Name | Diameter Result Code | Response Body 'Cause' from Diameter Connector to SM |

|---|---|---|

| DIAMETER_UNABLE_TO_COMPLY | 5012 | An internal failure occurred. |

| DIAMETER_UNKNOWN_SESSION_ID | 5002 | A request is received with unknown session ID. |

| DIAMETER_UNABLE_TO_DELIVER | 3002 | Request did not delivered to the destination. |

| DIAMETER_TOO_BUSY | 3004 | An upstream peer is either in congestion or too busy to handle a request. |

| DIAMETER_MISSING_AVP | 5005 | The request did not contain an AVP that is required by the Command Code definition. |

| DIAMETER_COMMAND_UNSUPPORTED | 3001 | The Request contained a Command-Code that the receiver did not recognize or support. |

| DIAMETER_LOOP_DETECTED | 3005 | An agent detected a loop while trying to get the message to the intended recipient. |

| DIAMETER_UNSUPPORTED_VERSION | 5011 | A request was received, whose version number is unsupported. |

| DIAMETER_AVP_OCCURS_TOO_MANY_TIMES | 5009 | A message was received that included an AVP that appeared more often than permitted in the message definition. |

| DIAMETER_AVP_UNSUPPORTED | 5001 | The peer received a message that contained an AVP that is not recognized or supported and was marked with the Mandatory bit. |

| DIAMETER_INVALID_AVP_VALUE | 5004 | The request contained an AVP with an invalid value in its data portion. |

| DIAMETER_INVALID_AVP_LENGTH | 5014 | The request contained an AVP with an invalid length. |

| DIAMETER_INVALID_HDR_BITS | 3008 | A request was received whose bits in the Diameter header were either set to an invalid combination, or to a value that is inconsistent with the command code definition. |

| DIAMETER_INVALID_AVP_BITS | 3009 | A request was received that included an AVP whose flag bits are set to an unrecognized value, or that is inconsistent with the AVP definition. |

503 Service Unavailable response.

{"type": null, "title": "SERVICE_UNAVAILABLE", "status": 503, "detail": "Service Unavailable", "instance": null, "cause": "Request processing timeout.", "invalidParams": null}

{"type": null, "title": "INTERNAL_SERVER_ERROR", "status": 500, "detail": "Error Occurred", "instance": null, "cause": "Request did not delivered to the destination.", "invalidParams": null}

{"type": null, "title": "INTERNAL_SERVER_ERROR", "status": 500, "detail": "Error Occurred", "instance": null, "cause": "The request did not contain an AVP that is required by the Command Code definition.", "invalidParams": null}

{"type": null, "title": "INTERNAL_SERVER_ERROR", "status": 500, "detail": "Error Occurred", "instance": null, "cause": "Request did not delivered to the destination.", "invalidParams": null}

Sd CCR-T/CCR-U response from SM towards TDF

Table 4-12 HTTP Result Codes from SM service toward TDF

| HTTP Response Code | Diameter Result Code |

|---|---|

| 204/200 | DIAMETER_SUCCESS |

| 500 | DIAMETER_UNABLE_TO_COMPLY |

| 503 | DIAMETER_UNABLE_TO_COMPLY |

| 400 | DIAMETER_UNABLE_TO_COMPLY |

| 404 | DIAMETER_UNKNOWN_SESSION_ID |
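The two mapping directions in Tables 4-10 and 4-12 can be expressed as lookup tables. The sketch below only restates those tables; the function names are hypothetical, and returning `None` for an unlisted code is an assumption, since the source does not say how unlisted codes are handled.

```python
from typing import Optional

# Table 4-10: Diameter result code (from TDF) -> HTTP response code (toward SM service)
DIAMETER_TO_HTTP = {
    "DIAMETER_SUCCESS": 200,
    "DIAMETER_MISSING_AVP": 500,
    "DIAMETER_UNABLE_TO_COMPLY": 500,
    "DIAMETER_UNABLE_TO_DELIVER": 500,
    "DIAMETER_TOO_BUSY": 500,
    "DIAMETER_LOOP_DETECTED": 500,
    "DIAMETER_APPLICATION_UNSUPPORTED": 500,
    "DIAMETER_UNKNOWN_SESSION_ID": 404,
}

# Table 4-12: HTTP response code (from SM service) -> Diameter result code (toward TDF)
HTTP_TO_DIAMETER = {
    200: "DIAMETER_SUCCESS",
    204: "DIAMETER_SUCCESS",
    400: "DIAMETER_UNABLE_TO_COMPLY",
    500: "DIAMETER_UNABLE_TO_COMPLY",
    503: "DIAMETER_UNABLE_TO_COMPLY",
    404: "DIAMETER_UNKNOWN_SESSION_ID",
}


def http_status_for(diameter_result: str) -> Optional[int]:
    """Look up the HTTP status for a Diameter result; None if unlisted (assumption)."""
    return DIAMETER_TO_HTTP.get(diameter_result)


def diameter_result_for(http_status: int) -> Optional[str]:
    """Look up the Diameter result for an HTTP status; None if unlisted (assumption)."""
    return HTTP_TO_DIAMETER.get(http_status)
```

The mapping is not symmetric: several Diameter errors collapse to HTTP 500, and HTTP 400, 500, and 503 all map back to DIAMETER_UNABLE_TO_COMPLY.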

Support for TDF Session in Session Viewer

The Session Viewer page of CNC Console supports viewing SM TDF sessions.

TDF Session Viewer Call Flows

This section describes the different call flows for a TDF session in Session Viewer.

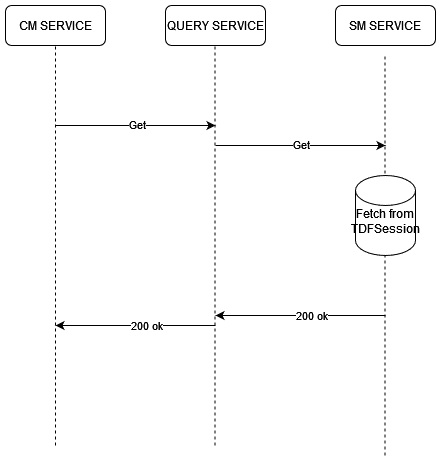

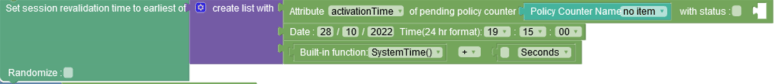

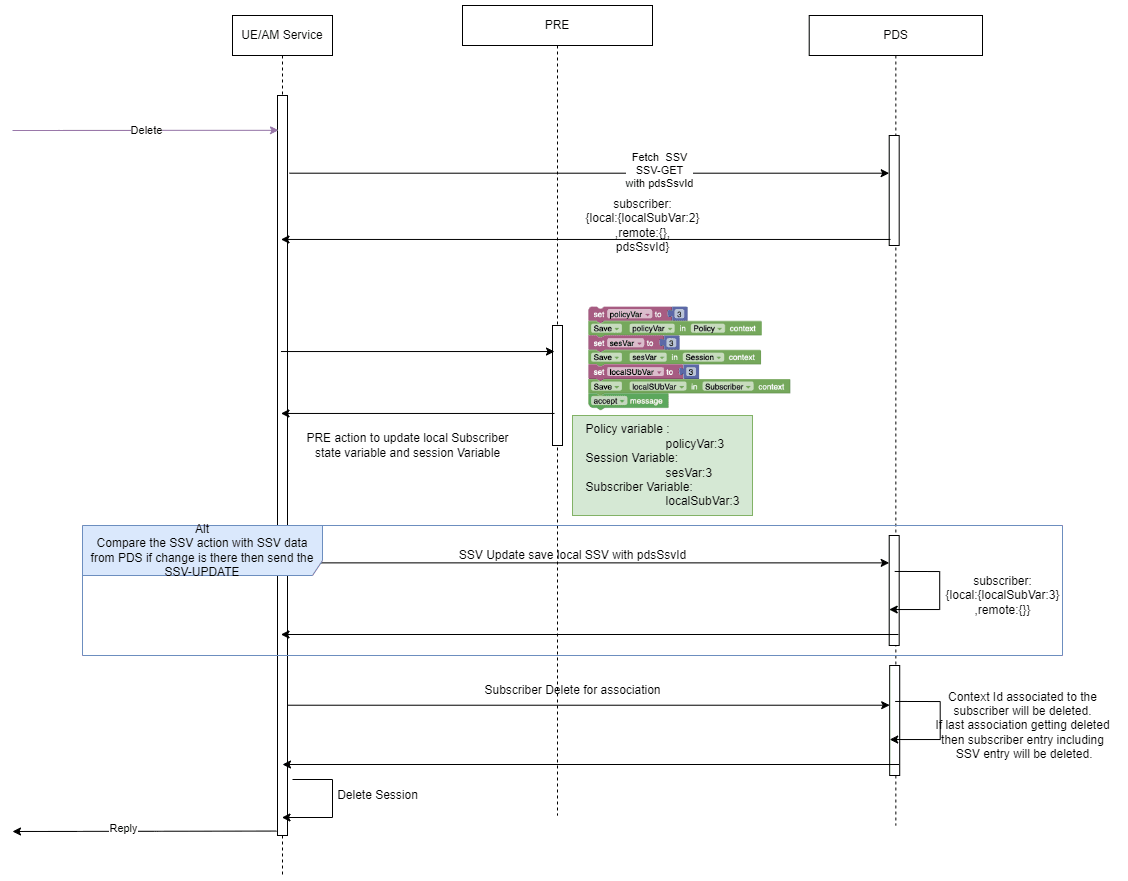

Figure 4-9 Fetch TDF Session

Delete TDF Session

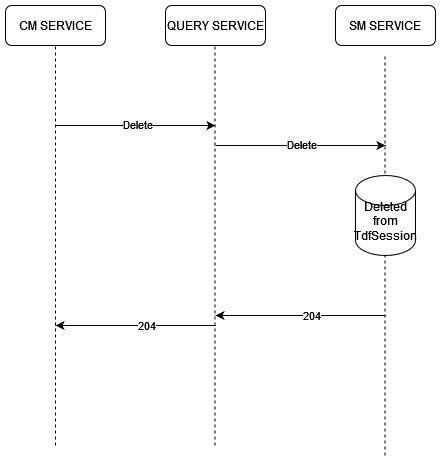

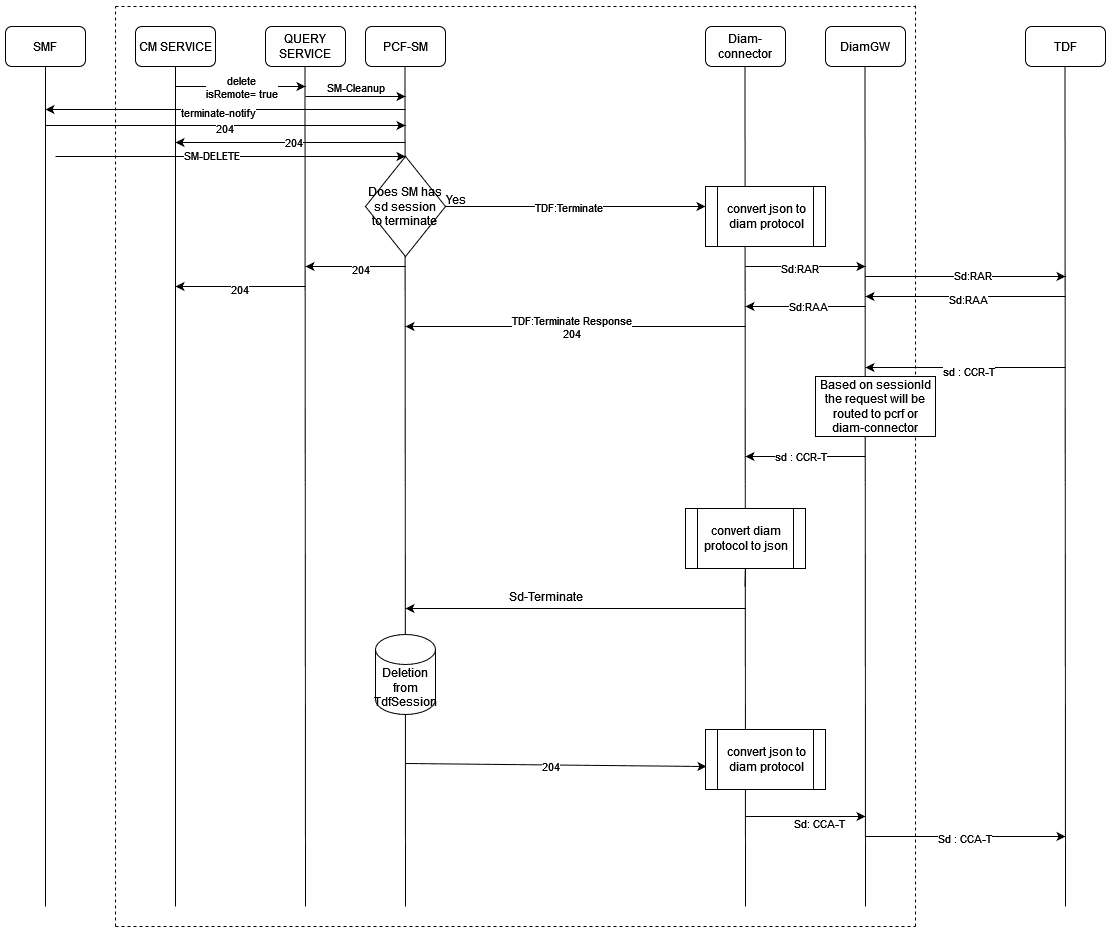

Figure 4-10 LOCAL DELETE TDF SESSION

REMOTE DELETE TDF SESSION

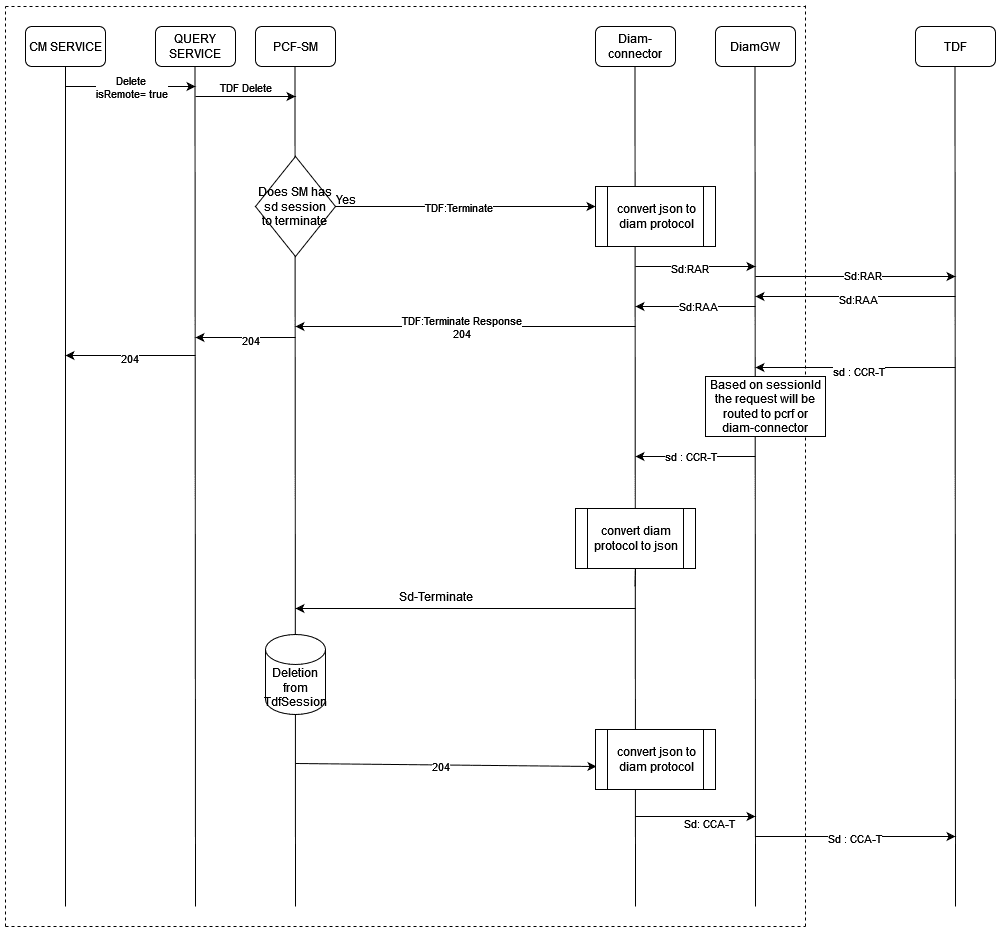

Figure 4-11 REMOTE DELETE TDF SESSION

LOCAL DELETE SM SESSION

Figure 4-12 LOCAL DELETE SM SESSION

REMOTE DELETE SM SESSION

Figure 4-13 REMOTE DELETE SM SESSION

Manage Sd Interface Support for PCF

Enable

This feature is not enabled by default.

Configure Using CNC Console

Enable this feature by adding the TDF.TDF_ENALBLED advanced settings key to PCF Session Management page in CNC Console.

To configure auditing of TDF stale sessions, see PCF Session Management page in CNC Console.

To view the SM TDF Session details, see Session Viewer page in CNC Console.

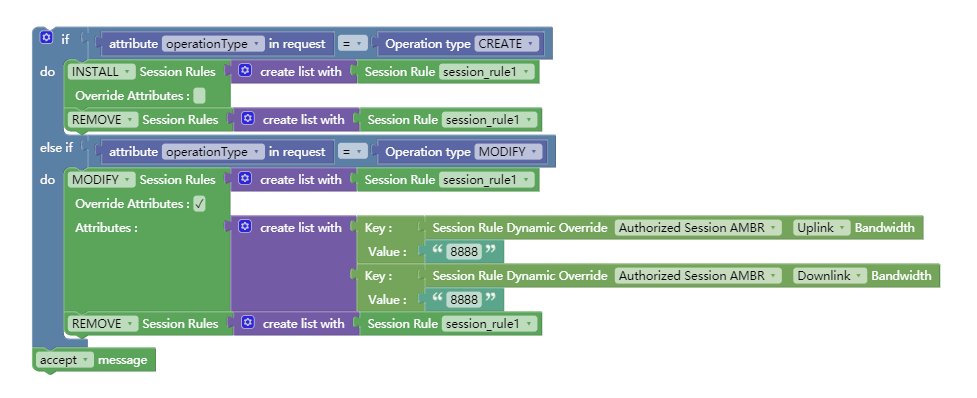

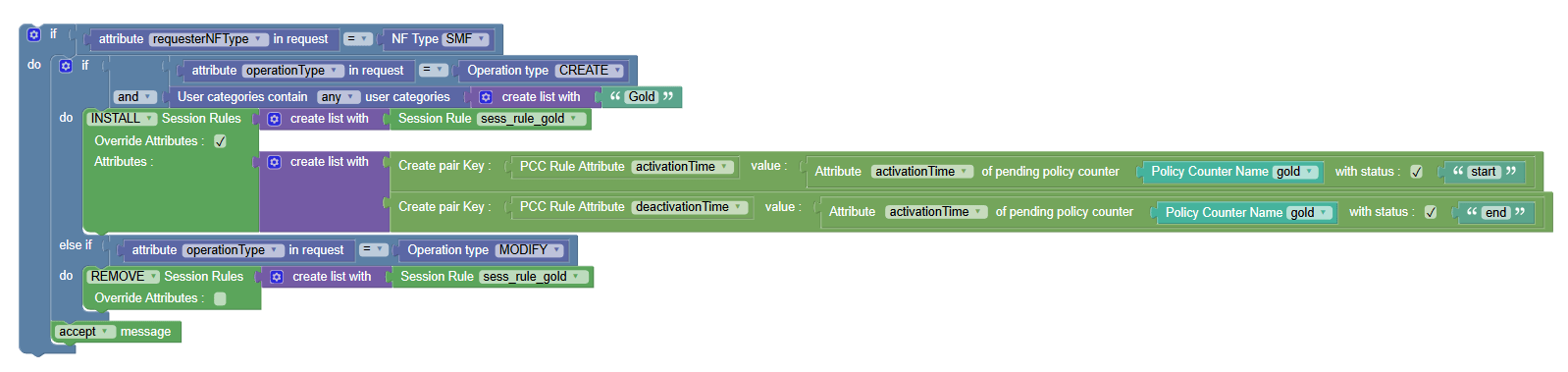

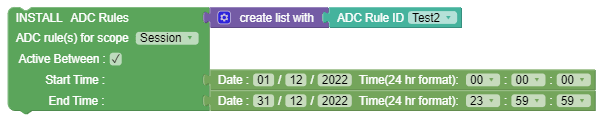

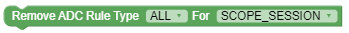

Policy Blockly

- FQDN from notificationUri

- Remove ADC Type Rules

- Install ADC Rules

- Remove ADC Rules

- Establish traffic detection session with peer node name

- Establish traffic detection session with peer node set name

- Install ADCRules For TimePeriod

For more details about these blocks, see "PCF-SM Category" section in Oracle Communications Cloud Native Core, Policy Design Guide.

Observability

Metrics

- occnp_http_out_conn_request_total
- occnp_http_out_conn_response_total
- ocpm_egress_request_total
- ocpm_egress_response_total
- occnp_pcf_smservice_overall_processing_time_seconds_count
- occnp_pcf_smservice_overall_processing_time_seconds_sum
- occnp_pcf_smservice_overall_processing_time_seconds_max
- ocpm_egress_request_timeout_total
- session_oam_request_total
- session_oam_response_total
- occnp_http_sm_request_total
- occnp_http_sm_response_total
- audit_notifications_sent
- audit_update_notify_session_not_found
- audit_update_notify_session_found
- audit_update_notify_response_error
- audit_update_timestamp_cnt
- audit_delete_records_count
- audit_delete_records_max_ttl_count
- ocpm_late_arrival_rejection_total
- occnp_late_processing_rejection_total

- occnp_diam_service_overall_processing_time_seconds
- occnp_diam_service_overall_processing_time_seconds_bucket
- occnp_diam_service_overall_processing_time_seconds_sum
- occnp_diam_service_overall_processing_time_seconds_max
- occnp_diam_request_network_total
- occnp_diam_response_network_total
- occnp_diam_response_latency_seconds
- occnp_diam_response_latency_seconds_bucket
- occnp_diam_response_latency_seconds_sum
- occnp_diam_response_latency_seconds_count
- occnp_diam_response_latency_seconds_max

- occnp_diam_connector_overall_processing_time_seconds_max
- occnp_diam_connector_overall_processing_time_seconds_count
- occnp_diam_connector_overall_processing_time_seconds_sum
- occnp_stale_diam_request_cleanup_total
- occnp_stale_http_request_cleanup_total

Alerts

The TDF_CONNECTION_DOWN alert is generated when there is a disconnection with configured TDF peer node.

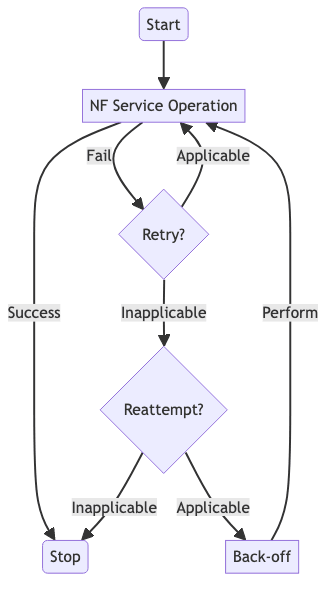

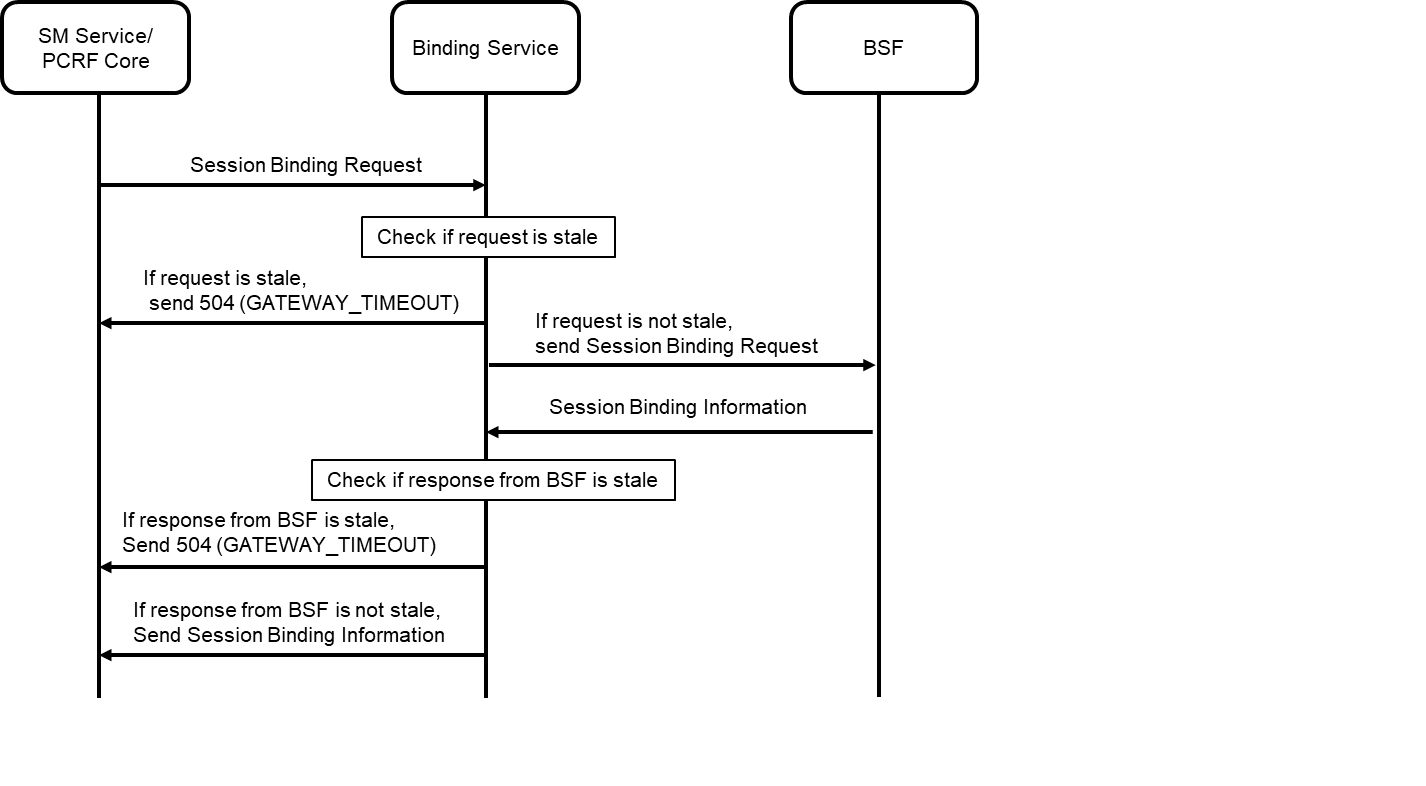

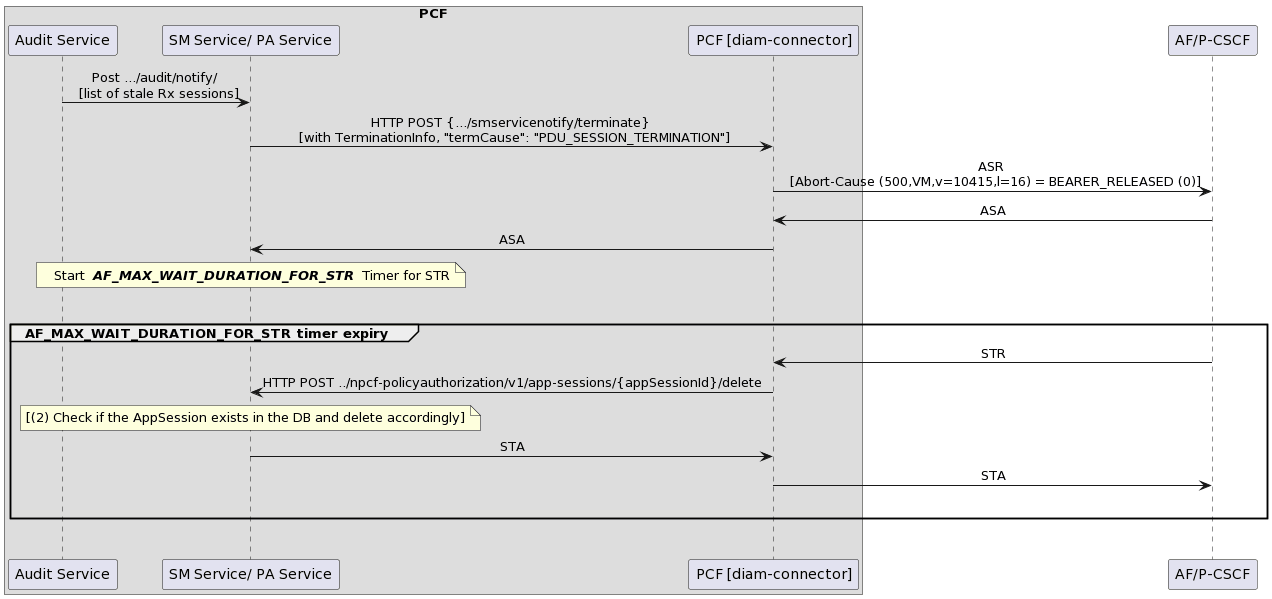

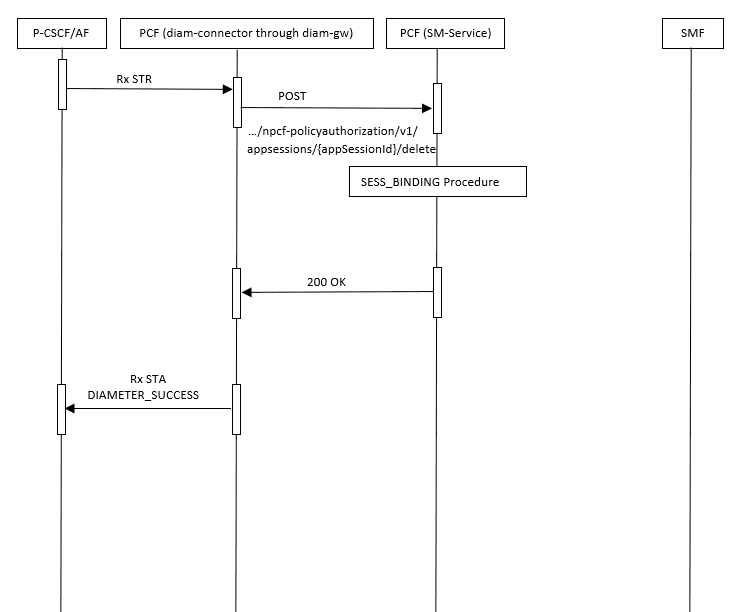

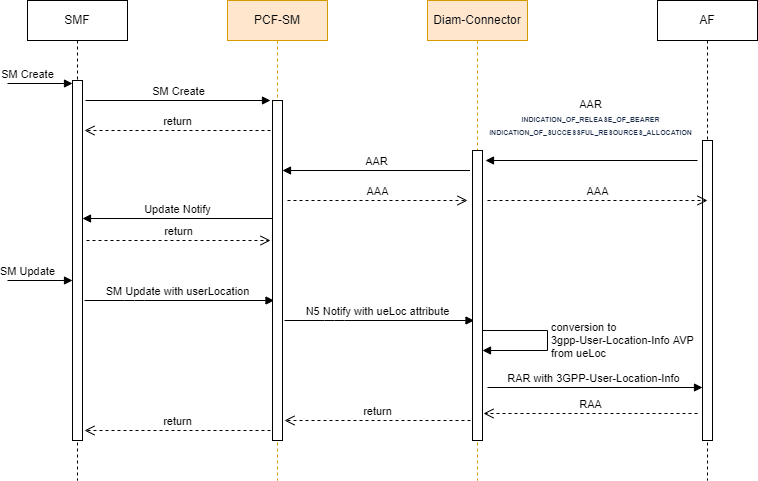

4.6 Handling Collision Between AAR and STR Messages During Update Notify Timeout

Whenever PCF receives an Authorization Authentication Request (AAR-I/AAR-U) from Proxy-Call Session Control Function (P-CSCF), PCF asynchronously responds to P-CSCF with an Authorization Authentication Answer (AAA) and also sends an UpdateNotify message to SMF to install the pccRules.

If PCF does not receive any response from SMF and the wait time expires, it applies the session retry logic, if configured. If PCF does not receive any response from SMF after all the retries, and during this time PCF also receives a Session Termination Request (STR) from P-CSCF, PCF sends an UpdateNotify message to SMF to remove the pccRules. A collision or race condition thereby arises, because PCF first sends an UpdateNotify message to SMF to install the pccRule and, before receiving any response from SMF, also sends an UpdateNotify message to SMF to remove the pccRule.

PCF handles concurrency or collision between the UpdateNotify to install the pccRule, triggered due to AAR-I or AAR-U, and the UpdateNotify to remove the pccRule, triggered due to STR.

PCF sends the UpdateNotify request for pccRule removal to SMF for the STR after the timeouts, when no response has been received for the UpdateNotify request sent due to AAR-I/AAR-U.

PCF interacts with P-CSCF to enforce Quality of Service (QoS) parameters. PCF allocates the necessary network resources to ensure VoLTE calls have the lowest possible latency, ensuring call quality.

PCF receives AAR from P-CSCF through BSF to create the dedicated QoS flow for VoLTE session. AAR includes QoS information associated with the bearer. The request includes IP Multimedia Subsystem (IMS) Voice bearer and optionally Video or Real-time text (RTT) bearer.

PCF processes the AAR-I request and sends an UpdateNotify for pccRule install to SMF. If PCF does not receive any response from SMF, it retries the request as per the session retry configuration.

UpdateNotify for pccRule install from SMF, if it receives a STR to terminate the session:

- If the wait time to receive a response from SMF expires and, as per the session retry configuration, the number of retries is also exhausted, pccRules are deleted from SmPolicyAssociation during timeout error handling.

- PCF sends the UpdateNotify to SMF due to STR to remove the pccRule depending on whether the pccRules are present in the SmPolicyAssociation database or not.

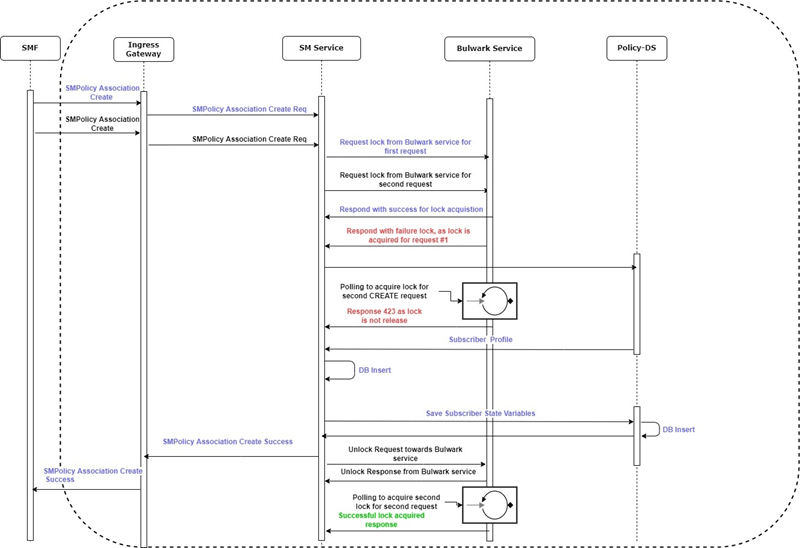

Design and Architecture

PCF handles the race condition between multiple UpdateNotify messages triggered due to AAR-I or AAR-U and STR requests using the pendingConfirmationAction advanced settings key.

- SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.ENABLED

- SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.PROCESS_ON_UPDATE

- SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.RESPONSE_CODES

- SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.EXCEPTIONS

For more information on these advanced settings keys for SM service, see PCF Session Management.

When PCF receives an AAR, PCF sends UpdateNotify to SMF to install the rules. The message to SMF also includes the pendingConfirmationAction key.

The pendingConfirmationAction key indicates whether the pccRule is installed in the SmPolicyAssociation database during the timeout error.

If pccRules are installed as part of AAR-I or AAR-U and are saved in the SmPolicyAssociation database, the pendingConfirmationAction key is updated accordingly.

The pendingConfirmationAction key can be:

- 0 (None)

- 1 (install)

- 2 (remove)

If PCF receives a successful response from SMF for the UpdateNotify message sent due to AAR-I or AAR-U, PCF removes the pendingConfirmationAction from the SmPolicyAssociation database.

Otherwise, if PCF receives an STR request before receiving any response from SMF, the pccRules are removed from the SmPolicyAssociation database and the pendingConfirmationAction key is updated accordingly.

PCF sends the pendingConfirmationAction key in ASR to P-CSCF depending on the Supress_ASR key. When the Supress_ASR key is enabled, PCF does not send pendingConfirmationAction to P-CSCF.
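The three pendingConfirmationAction values and the clearing of the marker after a successful SMF response can be modeled as in the sketch below. It is a simplified illustration only: the enum and function names and the representation of a stored pccRule as a dictionary are hypothetical and do not reflect the actual SmPolicyAssociation schema.

```python
from enum import IntEnum


class PendingConfirmationAction(IntEnum):
    """Values of the pendingConfirmationAction key as listed in the text."""
    NONE = 0
    INSTALL = 1
    REMOVE = 2


def mark_pending(rule: dict, action: PendingConfirmationAction) -> dict:
    """Return a copy of the stored rule tagged with a pending action."""
    updated = dict(rule)
    updated["pendingConfirmationAction"] = int(action)
    return updated


def on_update_notify_success(rule: dict) -> dict:
    """Return a copy of the rule with the marker removed after SMF confirms."""
    updated = dict(rule)
    updated.pop("pendingConfirmationAction", None)
    return updated


# Hypothetical rule record: tagged while the UpdateNotify is unconfirmed.
pending = mark_pending({"pccRuleId": "rule-1"}, PendingConfirmationAction.INSTALL)
confirmed = on_update_notify_success(pending)
```

Both helpers return copies, so the caller decides when the updated record is written back to the database.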

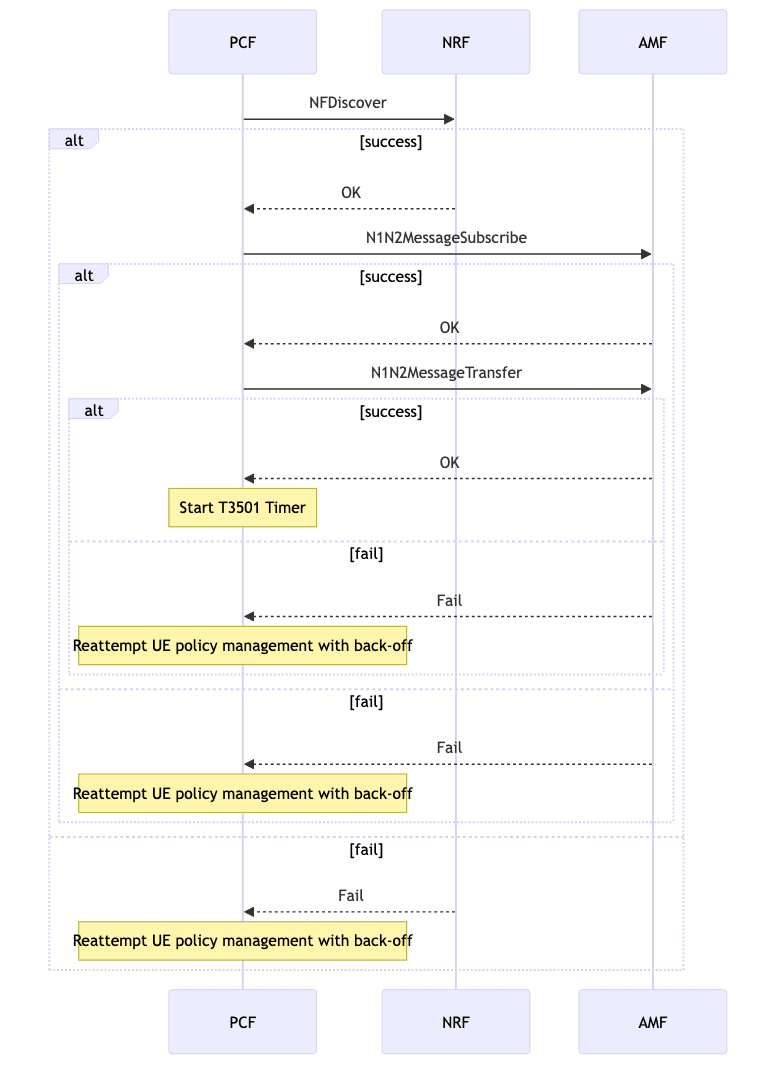

Call Flow

Figure 4-14 Handling Collision Between AAR and STR Messages During UpdateNotify Timeout

- PCF receives requests from P-CSCF to enforce Quality of Service (QoS) parameters. The PCF allocates the necessary network resources to ensure Voice over Long-Term Evolution (VoLTE) calls have the lowest possible latency and high call quality.

  P-CSCF sends an AAR command to PCF through BSF to create the dedicated bearer for the Voice over New Radio (VoNR) session. AAR includes QoS information associated with the bearer. The request includes the IP Multimedia Subsystem (IMS) Voice bearer and optionally a Video or Real-time text (RTT) bearer.

- BSF sends the request to PCF.

- PCF responds to P-CSCF with AAA through BSF.

- BSF forwards the AAA from PCF to P-CSCF through Diameter Gateway.

- PCF sends an UpdateNotify request to SMF triggered due to AAR-I.

- After sending the UpdateNotify request for AAR-I to SMF, PCF waits for the configured timeout period.

- If there is no response for the UpdateNotify from SMF triggered due to AAR-I, PCF applies the session retry for SMUpdate notification.

- If there is no response from SMF beyond the configured time period and all the retries are over for the UpdateNotify request sent to SMF due to AAR-I, PCF sends an UpdateNotify request to SMF for STR for pccRule removal.

Flow scenarios

Figure 4-15 Handling Collision Between AAR and STR Messages During Update Notify-Success Scenario

In this case, PCF receives an AAR-I message from AF/P-CSCF.

PCF sends the UpdateNotify (pccRule install) message to SMF. SMF responds with a 204 Success message.

PCF saves the pccRules in the SMPolicyAssociation database.

When PCF receives an STR message to terminate the session, it sends an STR request to SMF for pccRule removal.

SMF responds with an UpdateNotify success message and PCF removes the pccRules from SMPolicyAssociation database.

Figure 4-16 Handling Collision Between AAR and STR Messages During UpdateNotify-Timeout Scenario

After PCF sends an UpdateNotify for pccRule install to SMF, if there is no response from SMF due to a timeout at SMF, PCF applies the session retry.

SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.ENABLED advanced settings key is set to true.

- SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.ENABLED

- SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.PROCESS_ON_UPDATE

- SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.RESPONSE_CODES

- SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.EXCEPTIONS

Figure 4-17 Handling Collision Between AAR and STR Messages During UpdateNotify-Partial Success Scenario

In case of partial success in pccRule installation by SMF, PCF handles the situation as pending-Success-pendingFailure (200 partial success response).

- The pccRules sent in UpdateNotify are saved in SMPolicyAssociation database with the pendingConfirmation key if the handleUpdateNotifyForRxCollision key is enabled.

- If PCF receives AAR-U, smUpdateNotify is sent with pccRules to create them if the sendPendingConfirmationRulesToInstallOnUpdate key is enabled. If PCF receives a successful response from SMF, the pendingConfirmation key is removed for pccRules.

- STR is sent with pccRules information to be removed if the sendPendingConfirmationToDeleteOnSTR key is enabled.

- If PCF receives STR, it sends SmfUpdateNotify cleaning up pccRules.

- If PCF receives AAR-U, it sends the pccRules again to confirm if they are installed.

Figure 4-18 Handling Collision Between AAR and STR Messages During UpdateNotify-408 or 504 Errors from SMF

- If SMF responds with Timeout error, 408/504 REQUEST_TIMEOUT error code and if SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.RESPONSE_CODES key is enabled, and pccRules are saved in SMPolicyAssociation database.

- PCF sends an Abort Session Request (ASR) with the SuppressASR key disabled to AF/P-CSCF.

- AF/P-CSCF responds with Abort Session Answer (ASA).

- PCF receives an STR from AF/P-CSCF. PCF does not send any UpdateNotify message to SMF as the pccRules were not installed.

- PCF responds to AF/P-CSCF with STA.

Figure 4-19 Handling Collision Between AAR and STR Messages During UpdateNotify-408 or 504 Errors from SMF When Error Codes are Configured

- SMF responds with a timeout error (408/504 REQUEST_TIMEOUT).
- PCF treats the UpdateNotify message as Success. The pccRules are saved in the SMPolicyAssociation database. The value of the pendingConfirmationAction key is set to REMOVE.
- PCF sends an Abort Session Request (ASR) with SuppressASR disabled to AF/P-CSCF.
- AF/P-CSCF responds with Abort Session Answer (ASA).
- PCF receives an STR from AF/P-CSCF. PCF asynchronously responds with STA to AF/P-CSCF.
- PCF sends the UpdateNotify message to SMF for pccRule removal. SMF removes the pccRules and responds with a success message to PCF.

Figure 4-20 Handling Collision Between AAR-I, AAR-U, and STR Messages During UpdateNotify-Timeout Scenario with SuppressASR Enabled

- PCF receives an AAR-I from AF/P-CSCF and sends an UpdateNotify to SMF to install the pccRule.
- If PCF does not receive the response from SMF, PCF retries sending the UpdateNotify message.
- PCF treats the response to UpdateNotify as success. PCF saves the pccRules in the SMPolicyAssociation database with the value of the pendingConfirmationAction key set to INSTALL.
- While awaiting the response from SMF for the UpdateNotify due to AAR-I, if PCF receives an AAR-U from AF/P-CSCF, PCF sends an UpdateNotify due to AAR-U to SMF.
- PCF saves the pccRules in the SMPolicyAssociation database with the value of the pendingConfirmationAction key set to REINSTALL.
- If SMF responds with a 204 SUCCESS message, PCF saves the pccRules in the SMPolicyAssociation database and removes the PendingConfirmation key.
- When PCF receives an STR message from AF/P-CSCF, PCF sends an UpdateNotify message to SMF to remove the pccRules.
- If SMF responds to UpdateNotify with a 204 Success message, PCF removes the pccRules from the SMPolicyAssociation database.
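The sequence in Figure 4-20 can be read as a small state machine over the pendingConfirmationAction value. The sketch below is illustrative only: the `PendingRules` class and its method names are hypothetical, the database is replaced by an in-memory dictionary, and only the INSTALL and REINSTALL values and the event order come from the steps above.

```python
from typing import Dict, Iterable, Optional


class PendingRules:
    """Hypothetical in-memory stand-in for the pccRules of one SMPolicyAssociation."""

    def __init__(self) -> None:
        # rule id -> pendingConfirmationAction; None means the key has been removed.
        self.rules: Dict[str, Optional[str]] = {}
        self.removal_in_flight = False

    def on_update_notify_timeout(self, rule_ids: Iterable[str]) -> None:
        # The timed-out UpdateNotify for AAR-I is treated as success.
        for rule_id in rule_ids:
            self.rules[rule_id] = "INSTALL"

    def on_aar_u(self) -> None:
        # An AAR-U arriving while confirmation is pending re-sends the rules.
        for rule_id, action in self.rules.items():
            if action == "INSTALL":
                self.rules[rule_id] = "REINSTALL"

    def on_str(self) -> None:
        # STR triggers an UpdateNotify that asks SMF to remove the rules.
        self.removal_in_flight = True

    def on_smf_204(self) -> None:
        if self.removal_in_flight:
            # Removal confirmed: drop the rules from the association.
            self.rules.clear()
            self.removal_in_flight = False
        else:
            # Installation confirmed: clear the pending marker on every rule.
            for rule_id in self.rules:
                self.rules[rule_id] = None
```

Calling `on_update_notify_timeout`, `on_aar_u`, `on_smf_204`, `on_str`, and `on_smf_204` in that order walks a rule through INSTALL, REINSTALL, confirmed, and finally removed.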

Managing Collision Between AAR and STR Messages During Update Notify Timeout

Enable

This feature can be enabled by configuring the SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.ENABLED Advanced Settings Key under the PCF Session Management page for Service Configurations in CNC Console. For more information, see PCF Session Management.

Configure

This feature can be configured using the following Advanced Settings Keys under the PCF Session Management page for Service Configurations in CNC Console.

- SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.ENABLED
- SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.PROCESS_ON_UPDATE
- SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.RESPONSE_CODES
- SYSTEM.RX.UPDATE_NOTIFY.RULES.PENDING_CONFIRMATION.EXCEPTIONS

For more information, see PCF Session Management.

Observability

Metrics

- ocpm_handle_update_notify_timeout_as_pending_confirmation_total
- ocpm_handle_update_notify_error_response_as_pending_confirmation_total
- ocpm_process_pending_confirmation_rules_on_pa_update_total
- ocpm_process_pending_configuration_rules_on_pa_delete_total
- ocpm_rx_update_notify_request_total

For more information, see SM Service Metrics.

Alerts

- RX_PENDING_CONFIRMATION_UPDATE_NOTIFY_ERROR_RESPONSE_ABOVE_CRITICAL_THRESHOLD
- RX_PENDING_CONFIRMATION_UPDATE_NOTIFY_ERROR_RESPONSE_ABOVE_MAJOR_THRESHOLD
- RX_PENDING_CONFIRMATION_UPDATE_NOTIFY_ERROR_RESPONSE_ABOVE_MINOR_THRESHOLD
- RX_PENDING_CONFIRMATION_UPDATE_NOTIFY_TIMEOUT_ABOVE_CRITICAL_THRESHOLD
- RX_PENDING_CONFIRMATION_UPDATE_NOTIFY_TIMEOUT_ABOVE_MAJOR_THRESHOLD
- RX_PENDING_CONFIRMATION_UPDATE_NOTIFY_TIMEOUT_ABOVE_MINOR_THRESHOLD

For more information, see Common Alerts.

Logging

Policy logs the collisions that occur when a pccRule removal is sent to SMF in an UpdateNotify message after an earlier UpdateNotify for an AAR message has timed out.
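Because each log entry is a JSON object, the entries that mark rules as pendingConfirmation can be extracted with a short script. The following is a minimal sketch that assumes the log stream has been exported with one JSON object per line; the `pending_rule_ids` function is hypothetical, while the logger name suffix and message wording match the log entries shown in this section.

```python
import json
import re
from typing import Iterable, List

# Message wording as it appears in the PendingConfirmationRuleHelper log entries.
RULE_MARKED = re.compile(r"^PccRule (\S+) set as pendingConfirmation$")


def pending_rule_ids(log_lines: Iterable[str]) -> List[str]:
    """Collect pccRule ids that were marked as pendingConfirmation."""
    ids: List[str] = []
    for line in log_lines:
        entry = json.loads(line)
        # Only consider entries written by the pending-confirmation helper.
        if not entry.get("loggerName", "").endswith("PendingConfirmationRuleHelper"):
            continue
        match = RULE_MARKED.match(entry.get("message", ""))
        if match:
            ids.append(match.group(1))
    return ids
```

Entries from other loggers, and helper entries whose message does not name a single rule, are skipped.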

Examples:

AAR-I/AAR-U update notify:

{

"instant":{

"epochSecond":1737588500,

"nanoOfSecond":256798000

},

"thread":"boundedElastic-7",

"level":"INFO",

"loggerName":"ocpm.pcf.service.sm.serviceconnector.SmfConnector",

"message":"Ready to send Update Notification to SMF: {\n \"smPolicyDecision\" : {\n \"pccRules\" : {\n \"0_3\" : {\n \"flowInfos\" : [ {\n \"flowDescription\" : \"permit in 17 from 10.10.10.10 to 10.17.18.19 8001\",\n \"flowDirection\" : \"UPLINK\"\n }, {\n \"flowDescription\" : \"permit out 17 from 10.17.18.19 to 10.10.10.10 8001\",\n \"flowDirection\" : \"DOWNLINK\"\n } ],\n \"pccRuleId\" : \"0_3\",\n \"precedence\" : 401,\n \"refQosData\" : [ \"qosdata_2\" ],\n \"refTcData\" : [ \"tcdata_1\" ]\n },\n \"0_2\" : {\n \"flowInfos\" : [ {\n \"flowDescription\" : \"permit in 17 from 10.10.10.10 to 10.17.18.19 8000\",\n \"flowDirection\" : \"UPLINK\"\n }, {\n \"flowDescription\" : \"permit out 17 from 10.17.18.19 to 10.10.10.10 8000\",\n \"flowDirection\" : \"DOWNLINK\"\n } ],\n \"pccRuleId\" : \"0_2\",\n \"precedence\" : 400,\n \"refQosData\" : [ \"qosdata_1\" ],\n \"refTcData\" : [ \"tcdata_0\" ]\n }\n },\n \"qosDecs\" : {\n \"qosdata_1\" : {\n \"5qi\" : 1,\n \"qosId\" : \"qosdata_1\",\n \"maxbrUl\" : \"12.0 Kbps\",\n \"maxbrDl\" : \"12.0 Kbps\",\n \"gbrUl\" : \"12.0 Kbps\",\n \"gbrDl\" : \"12.0 Kbps\",\n \"arp\" : {\n \"priorityLevel\" : 15,\n \"preemptCap\" : \"NOT_PREEMPT\",\n \"preemptVuln\" : \"PREEMPTABLE\"\n }\n },\n \"qosdata_2\" : {\n \"5qi\" : 1,\n \"qosId\" : \"qosdata_2\",\n \"maxbrUl\" : \"600.0 bps\",\n \"maxbrDl\" : \"600.0 bps\",\n \"gbrUl\" : \"600.0 bps\",\n \"gbrDl\" : \"600.0 bps\",\n \"arp\" : {\n \"priorityLevel\" : 15,\n \"preemptCap\" : \"NOT_PREEMPT\",\n \"preemptVuln\" : \"PREEMPTABLE\"\n }\n }\n },\n \"traffContDecs\" : {\n \"tcdata_0\" : {\n \"tcId\" : \"tcdata_0\",\n \"flowStatus\" : \"ENABLED\"\n },\n \"tcdata_1\" : {\n \"tcId\" : \"tcdata_1\",\n \"flowStatus\" : \"ENABLED\"\n }\n },\n \"policyCtrlReqTriggers\" : [ \"PLMN_CH\", \"UE_IP_CH\", \"DEF_QOS_CH\", \"AC_TY_CH\", \"SUCC_RES_ALLO\" ],\n \"lastReqRuleData\" : [ {\n \"refPccRuleIds\" : [ \"0_2\", \"0_3\" ],\n \"reqData\" : [ \"SUCC_RES_ALLO\" ]\n } ]\n },\n \"resourceUri\" : 
\"https://pcf_smservice.pcf:5805/npcf-smpolicycontrol/v1/sm-policies/1eb1b1bc-9e51-4ac0-ae38-13f21af05318_0_U1VQSTppbXNpLTQ1MDA4MTAwMDAwODAwMTs=\"\n}",

"endOfBatch":false,

"loggerFqcn":"org.apache.logging.slf4j.Log4jLogger",

"threadId":293,

"threadPriority":5,

"messageTimestamp":"2025-01-22T17:28:20.256-0600",

"ocLogId":"${ctx:ocLogId}"

}

TimeOut Exception:

{

"instant":{

"epochSecond":1737588500,

"nanoOfSecond":257747000

},

"thread":"boundedElastic-7",

"level":"DEBUG",

"loggerName":"ocpm.pcf.service.sm.domain.component.user.UserManager",

"message":"process user service request",

"endOfBatch":false,

"loggerFqcn":"org.apache.logging.slf4j.Log4jLogger",

"threadId":293,

"threadPriority":5,

"messageTimestamp":"2025-01-22T17:28:20.257-0600",

"ocLogId":"${ctx:ocLogId}"

}

{

"instant":{

"epochSecond":1737588500,

"nanoOfSecond":257766000

},

"thread":"boundedElastic-7",

"level":"DEBUG",

"loggerName":"ocpm.pcf.service.sm.domain.component.user.UserManager",

"message":"Processing Async policyCounterActions.",

"endOfBatch":false,

"loggerFqcn":"org.apache.logging.slf4j.Log4jLogger",

"threadId":293,

"threadPriority":5,

"messageTimestamp":"2025-01-22T17:28:20.257-0600",

"ocLogId":"${ctx:ocLogId}"

}

{

"instant":{

"epochSecond":1737588503,

"nanoOfSecond":261970000

},

"thread":"boundedElastic-7",

"level":"WARN",

"loggerName":"ocpm.pcf.util.StackTraceUtil",

"message":"Failed to call SMF",

"endOfBatch":false,

"loggerFqcn":"org.apache.logging.slf4j.Log4jLogger",

"threadId":293,

"threadPriority":5,

"messageTimestamp":"2025-01-22T17:28:23.261-0600",

"triggerClass":"ocpm.pcf.service.sm.serviceconnector.SmfConnector",

"errorName":"java.util.concurrent.TimeoutException",

"errorMessage":"Did not observe any item or terminal signal within 3000ms in 'source(MonoDeferContextual)' (and no fallback has been configured)",

"errorStack":"[reactor.core.publisher.FluxTimeout$TimeoutMainSubscriber.handleTimeout(FluxTimeout.java:295), reactor.core.publisher.FluxTimeout$TimeoutMainSubscriber.doTimeout(FluxTimeout.java:280), reactor.core.publisher.FluxTimeout$TimeoutTimeoutSubscriber.onNext(FluxTimeout.java:419), reactor.core.publisher.FluxOnErrorReturn$ReturnSubscriber.onNext(FluxOnErrorReturn.java:162), reactor.core.publisher.MonoDelay$MonoDelayRunnable.propagateDelay(MonoDelay.java:271), reactor.core.publisher.MonoDelay$MonoDelayRunnable.run(MonoDelay.java:286), reactor.core.scheduler.SchedulerTask.call(SchedulerTask.java:68), reactor.core.scheduler.SchedulerTask.call(SchedulerTask.java:28), io.micrometer.core.instrument.composite.CompositeTimer.recordCallable(CompositeTimer.java:129), io.micrometer.core.instrument.Timer.lambda$wrap$1(Timer.java:206), java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264), java.base/java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:304), java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136), java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635), java.base/java.lang.Thread.run(Thread.java:840)]"

}

Handling time out for Rx Collision (Handle as Success):

{

"instant":{

"epochSecond":1746472387,

"nanoOfSecond":965052000

},

"thread":"boundedElastic-2",

"level":"INFO",

"loggerName":"ocpm.pcf.service.sm.domain.component.rule.PendingConfirmationRuleHelper",

"message":"PccRules in Update Notify failure will be set as pendingConfirmation",

"endOfBatch":false,

"loggerFqcn":"org.apache.logging.slf4j.Log4jLogger",

"threadId":95,

"threadPriority":5,

"messageTimestamp":"2025-05-05T13:13:07.965-0600",

"ocLogId":"${ctx:ocLogId}"

}

{

"instant":{

"epochSecond":1746472387,

"nanoOfSecond":965553000

},

"thread":"boundedElastic-2",

"level":"DEBUG",

"loggerName":"ocpm.pcf.service.sm.domain.component.rule.PendingConfirmationRuleHelper",

"message":"PccRule 0_3 set as pendingConfirmation",

"endOfBatch":false,

"loggerFqcn":"org.apache.logging.slf4j.Log4jLogger",

"threadId":95,

"threadPriority":5,

"messageTimestamp":"2025-05-05T13:13:07.965-0600",

"ocLogId":"${ctx:ocLogId}"

}

{

"instant":{

"epochSecond":1746472387,

"nanoOfSecond":965600000

},

"thread":"boundedElastic-2",

"level":"DEBUG",

"loggerName":"ocpm.pcf.service.sm.domain.component.rule.PendingConfirmationRuleHelper",

"message":"PccRule 0_2 set as pendingConfirmation",

"endOfBatch":false,

"loggerFqcn":"org.apache.logging.slf4j.Log4jLogger",

"threadId":95,

"threadPriority":5,

"messageTimestamp":"2025-05-05T13:13:07.965-0600",

"ocLogId":"${ctx:ocLogId}"

}

Metric Pegged:

{

"instant":{

"epochSecond":1746472387,

"nanoOfSecond":959692000

},

"thread":"boundedElastic-2",

"level":"DEBUG",

"loggerName":"ocpm.pcf.service.sm.domain.component.metrics.SmMetrics",

"message":"Pegging Handle Update Notify timeout as Pending Confirmation metric, Operation Type: update_notify, Cause: Update Notify Time Out handled as PendingConfirmation",

"endOfBatch":false,

"loggerFqcn":"org.apache.logging.slf4j.Log4jLogger",

"threadId":95,

"threadPriority":5,

"messageTimestamp":"2025-05-05T13:13:07.959-0600",

"ocLogId":"${ctx:ocLogId}"

}

STR Update Notify (Rule Remove):

{

"instant":{

"epochSecond":1746472392,

"nanoOfSecond":580363000

},

"thread":"boundedElastic-2",

"level":"DEBUG",

"loggerName":"ocpm.pcf.service.sm.domain.component.metrics.SmMetrics",

"message":"Pegging Sent pending confirmation rules to remove metric, Operation Type: update_notify",

"endOfBatch":false,

"loggerFqcn":"org.apache.logging.slf4j.Log4jLogger",

"threadId":95,

"threadPriority":5,

"messageTimestamp":"2025-05-05T13:13:12.580-0600",

"ocLogId":"${ctx:ocLogId}"

}

{

"instant":{

"epochSecond":1746472392,

"nanoOfSecond":580524000

},

"thread":"boundedElastic-2",

"level":"INFO",

"loggerName":"ocpm.pcf.service.sm.serviceconnector.SmfConnector",