5 Upgrade and Rollback Guidelines for Multisite Georeplication Setup

This chapter presents various scenarios related to the upgrade and rollback of Network Functions (NFs). It also outlines recovery strategies for errors or failures encountered during the upgrade process, providing guidance on how to efficiently restore services and minimize downtime. The following scenarios use a three-site georeplication setup as an example.

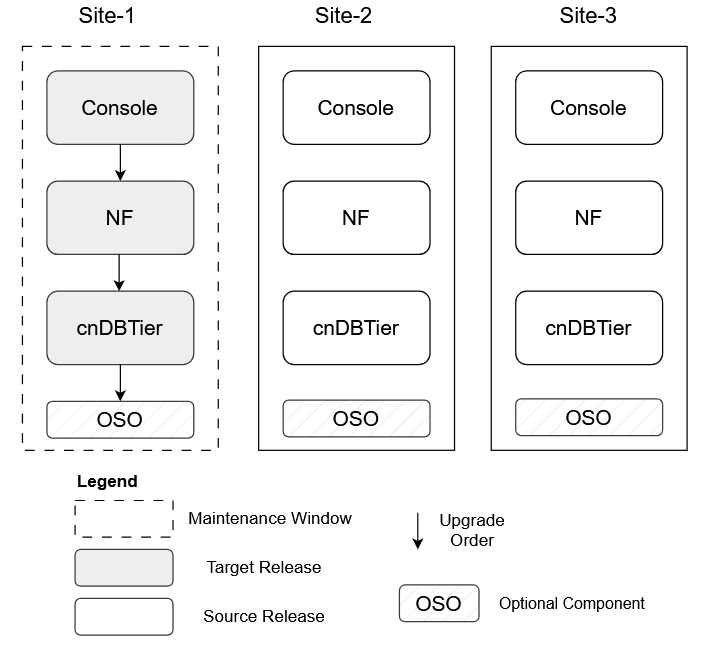

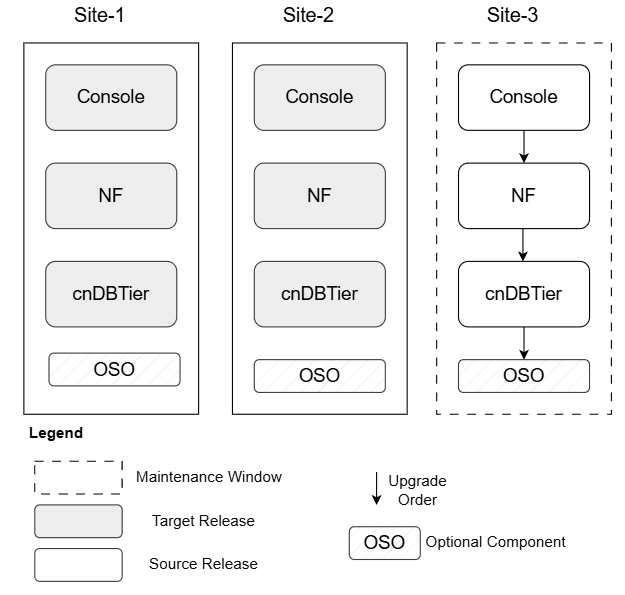

5.1 Scenario 1: Site-1 Upgrade

This scenario provides the upgrade guidelines for Site-1.

Figure 5-1 Scenario 1: Site-1 Upgrade

- The upgrade of Site-1 is a pivotal step in any multisite georeplication deployment. The recommended sequence for the upgrade process is to first upgrade the Console, followed by the Network Function (NF), and finally the cnDBTier, as outlined in preceding chapters. For detailed upgrade procedures, see the respective installation, upgrade, and fault recovery guides.

- This upgrade sequence is designed to ensure consistent data processing while minimizing service disruption. Since cnDBTier manages both configuration and subscriber data, which is replicated across all instances, it is essential to upgrade cnDBTier last to maintain data integrity throughout the process. The Site-1 upgrade is conducted as an in-service operation, allowing services to continue running with minimal impact.

- Once the upgrade is complete, system functionality is monitored and any potential service issues are addressed.
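The component order described above can be written down as a small sketch. This is illustrative only: the names `UPGRADE_ORDER` and `upgrade_steps` are hypothetical and do not correspond to any product tooling. The sketch only prints the order in which the documented procedures would be followed.

```python
# Illustrative only: component names come from this chapter; no product API is called.
UPGRADE_ORDER = ("Console", "NF", "cnDBTier")  # cnDBTier is upgraded last


def upgrade_steps(site: str) -> list[str]:
    """Return the ordered in-service upgrade steps for a single site."""
    steps = [f"{site}: upgrade {component}" for component in UPGRADE_ORDER]
    # After the upgrade, system functionality is monitored.
    steps.append(f"{site}: monitor system functionality")
    return steps


if __name__ == "__main__":
    for step in upgrade_steps("Site-1"):
        print(step)
```

The same helper applies unchanged to Site-2 and Site-3, since the component order within a site does not depend on the site.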

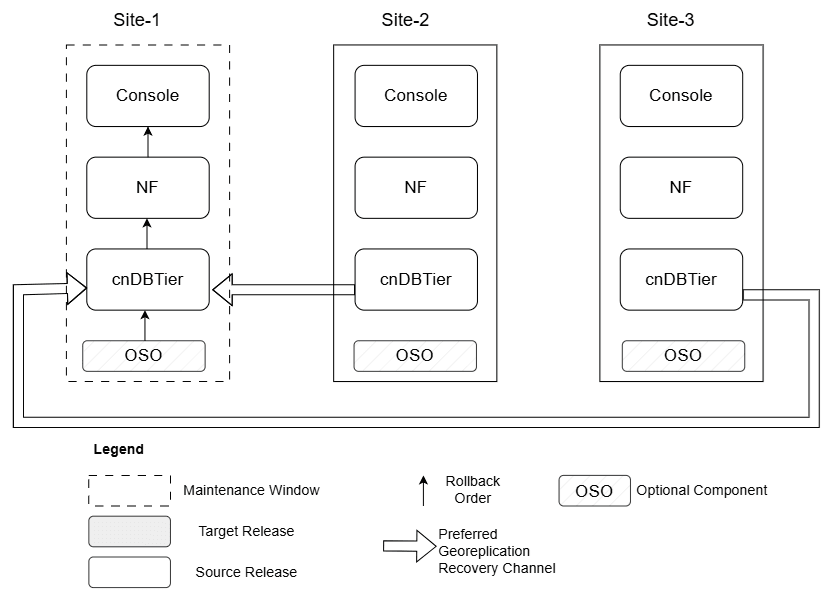

5.2 Scenario 2: Site-1 Rollback

This scenario provides the rollback guidelines for Site-1.

Figure 5-2 Scenario 2: Site-1 Rollback

- A rollback at Site-1 serves as a critical recovery measure when the newly deployed release introduces issues that cannot be resolved within the allotted maintenance window. After a Site-1 upgrade, system operations are monitored closely for signs of functional irregularities, performance impact, or any adverse impact on services. In case of any significant issue, particularly one that cannot be addressed through minor configuration adjustments, a rollback procedure is initiated to restore stability.

- Prior to initiating a rollback, troubleshooting is performed with a focus on resolving manageable issues, such as configuration mismatches or custom values file errors, within the current maintenance window. If these efforts are unsuccessful, a rollback action is performed.

- The rollback process follows a defined sequence: first, revert cnDBTier, then the Network Function (NF), and finally the Console. This order is designed to safeguard data integrity and system consistency throughout the operation. Typically, a subsequent upgrade attempt is planned for the next available maintenance window once the underlying issues have been corrected or necessary patches have been made available. For detailed rollback procedures, see the respective installation, upgrade, and fault recovery guides.

- If required, Georeplication Recovery (GRR) for Site-1 can be performed either from Site-2 or Site-3 to facilitate a consistent state across all sites. For more information on the GRR procedure, see Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.
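The decision flow above (monitor, troubleshoot within the maintenance window, then roll back in a fixed order) can be sketched as follows. The function name `post_upgrade_actions` and its boolean inputs are hypothetical simplifications; in practice the decision depends on operator judgment, not on two flags.

```python
# Illustrative only: rollback order taken from this section (reverse of the upgrade order).
ROLLBACK_ORDER = ("cnDBTier", "NF", "Console")


def post_upgrade_actions(site: str, issue_found: bool, fixed_in_window: bool) -> list[str]:
    """Return the actions suggested by this section after a site upgrade."""
    if not issue_found:
        return [f"{site}: continue monitoring"]
    if fixed_in_window:
        # Troubleshooting resolved the issue inside the maintenance window.
        return [f"{site}: keep the target release and continue monitoring"]
    actions = [f"{site}: roll back {component}" for component in ROLLBACK_ORDER]
    actions.append(f"{site}: plan the next upgrade attempt for a later maintenance window")
    return actions


if __name__ == "__main__":
    for action in post_upgrade_actions("Site-1", issue_found=True, fixed_in_window=False):
        print(action)
```

GRR is deliberately left out of the sketch because the text describes it as an optional step that is performed only if required.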

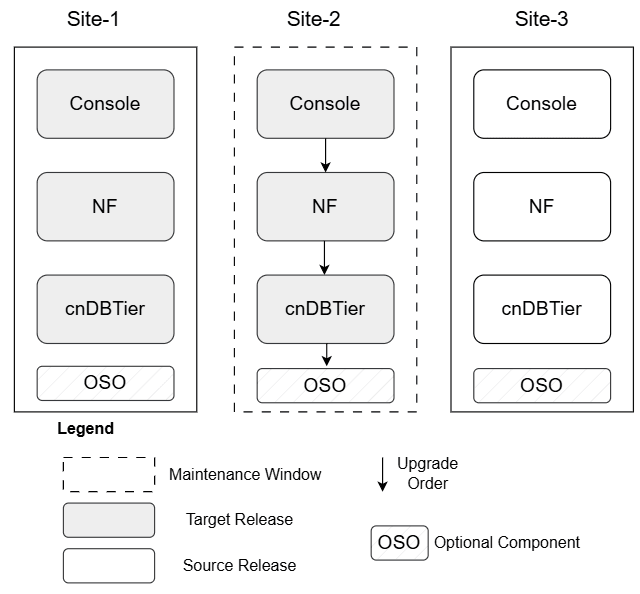

5.3 Scenario 3: Site-2 Upgrade

This scenario provides the upgrade guidelines for Site-2.

Figure 5-3 Scenario 3: Site-2 Upgrade

- Once the Site-1 upgrade is successful, the upgrade proceeds to Site-2.

- The recommended sequence for the upgrade process is to first upgrade the Console, followed by the Network Function (NF), and finally the cnDBTier, as outlined in preceding chapters. For detailed procedures, see the installation, upgrade, and fault recovery guides specific to cnDBTier and the NF. This upgrade sequence is designed to ensure consistent data processing while minimizing service disruption. Since cnDBTier manages both configuration and subscriber data, which is replicated across all instances, it is essential to upgrade cnDBTier last to maintain data integrity throughout the process.

- The Site-2 upgrade is conducted as an in-service operation, allowing services to continue running with minimal impact. Once the upgrade is complete, system functionality is monitored and any potential service issues are addressed.

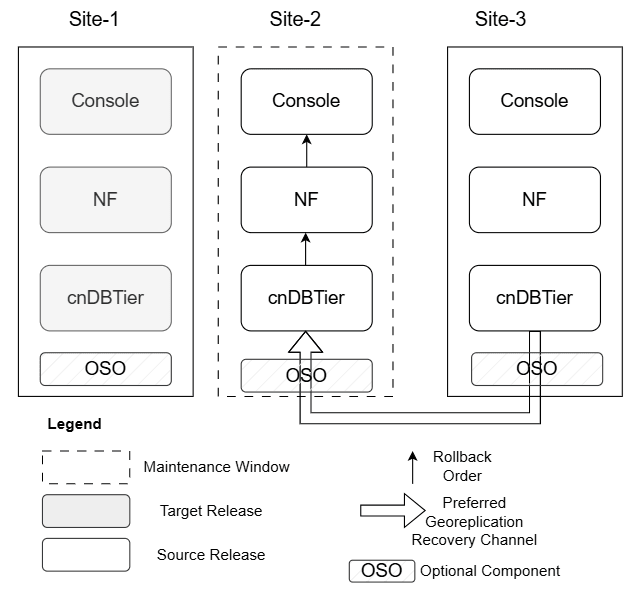

5.4 Scenario 4: Site-2 Rollback

This scenario provides the rollback guidelines for Site-2.

Figure 5-4 Scenario 4: Site-2 Rollback

- If the Site-2 upgrade fails, a rollback to the source release is recommended.

- Prior to initiating a rollback, troubleshooting is performed with a focus on resolving manageable issues, such as configuration mismatches or custom values file errors, within the current maintenance window. If these efforts are unsuccessful, a rollback action is performed.

- The rollback process follows a defined sequence: first, revert cnDBTier, then the Network Function (NF), and finally the Console. This order is designed to safeguard data integrity and system consistency throughout the operation. Typically, a subsequent upgrade attempt is planned for the next available maintenance window once the underlying issues have been corrected or necessary patches have been made available. For detailed rollback procedures, see the respective installation, upgrade, and fault recovery guides.

- If required, Georeplication Recovery (GRR) for Site-2 is preferred from Site-3 to facilitate a consistent state across all sites. For more information on the GRR procedure, see Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.

5.5 Scenario 5: Site-3 Upgrade

This scenario provides the upgrade guidelines for Site-3.

Figure 5-5 Scenario 5: Site-3 Upgrade

- Site-3 typically undergoes an upgrade only after Site-1 and Site-2 have been successfully upgraded. This staged approach reduces risk, ensuring stability before completing the upgrade cycle. For detailed upgrade procedures, see the respective installation, upgrade, and fault recovery guides.

- Once Site-3 is upgraded, maintenance for the entire set concludes. Although rollbacks are rare at this stage since most issues are caught earlier, if problems like configuration file errors appear, they are corrected and the upgrade is retried. Any persistent problems are addressed through follow-up patch releases.

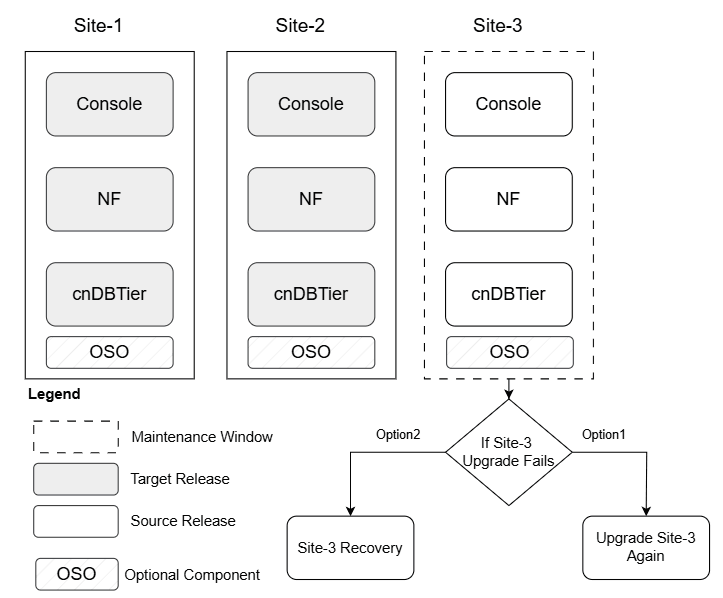

5.6 Scenario 6: Site-3 Rollback

This scenario provides the rollback guidelines for Site-3.

Figure 5-6 Scenario 6: Site-3 Rollback

If the Site-3 upgrade fails, the following options are available:

- Troubleshooting is performed with a focus on resolving manageable issues, such as configuration mismatches or custom values file errors, within the current maintenance window.

- Option 1: Attempt the Site-3 upgrade again. In this case, upgrade Site-3 as described in Scenario 5: Site-3 Upgrade.

- If the repeated upgrade also fails, a rollback of Site-3 can be performed.

- Option 2: Roll back all the sites to the source release. In this case, roll back all the sites as described in Scenario 8: All Sites Rollback.

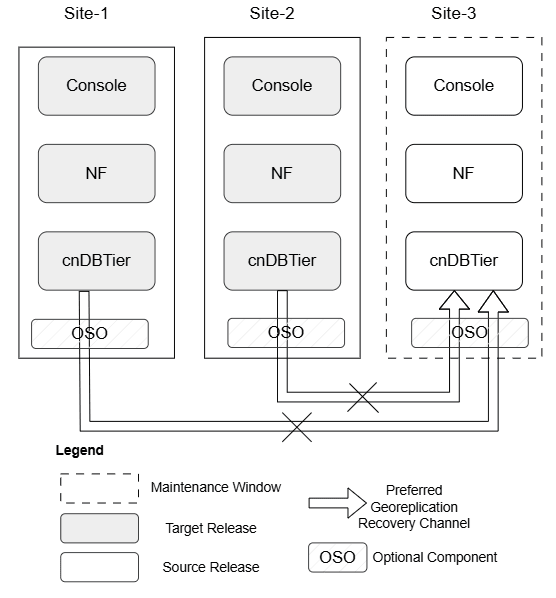

5.7 Scenario 7: Site-3 Recovery when replication breaks

This scenario provides the recovery guidelines for when the replication channel of Site-3 breaks after a Site-3 upgrade or rollback failure.

Figure 5-7 Scenario 7: Site-3 Recovery when replication breaks

- If Site-3 cannot be made operational after a Site-3 upgrade or rollback, follow the Site-3 recovery procedure to restore a healthy environment.

- GRR is only supported between sites running the same software version. Attempting to use GRR to connect a newer release with an older one is not supported and could lead to data inconsistency or system instability.

- Install the target release on Site-3 to match the version of the other sites.

- The installation sequence is as follows:

  - First, install cnDBTier and establish the replication channels with the other sites of the set.

  - Next, install the Network Function (NF), and finally the Console.

- For detailed installation procedures, see the respective installation, upgrade, and fault recovery guides.

- The existing method for configuring a new site is applied to the newly built set.

- After full site recovery, traffic is introduced on this site.

For detailed rollback procedures, see the respective installation, upgrade, and fault recovery guides.

For more information on the GRR procedure, see Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.
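The version constraint on GRR and the recovery installation order can be sketched as below. This is a minimal illustration under stated assumptions: the function names, the dictionary layout, and the version strings used in the example are hypothetical, and nothing here invokes GRR or an installer.

```python
# Illustrative only: install order for a Site-3 rebuild, as listed in this scenario.
RECOVERY_INSTALL_ORDER = ("cnDBTier", "NF", "Console")


def grr_supported(versions: dict[str, str], site: str, healthy_site: str) -> bool:
    """GRR is only supported between sites running the same software version."""
    return versions[site] == versions[healthy_site]


def recovery_steps(site: str, target_release: str) -> list[str]:
    """Return the ordered recovery steps for rebuilding a site on the target release."""
    steps = []
    for component in RECOVERY_INSTALL_ORDER:
        steps.append(f"{site}: install {component} {target_release}")
        if component == "cnDBTier":
            # Replication channels are established right after cnDBTier is installed.
            steps.append(f"{site}: establish replication channels with the other sites")
    steps.append(f"{site}: apply the new-site configuration")
    steps.append(f"{site}: introduce traffic")
    return steps


if __name__ == "__main__":
    # Hypothetical version strings, used only to exercise the check.
    versions = {"Site-1": "25.1.200", "Site-2": "25.1.200", "Site-3": "25.1.100"}
    print(grr_supported(versions, "Site-3", "Site-1"))
    for step in recovery_steps("Site-3", versions["Site-1"]):
        print(step)
```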

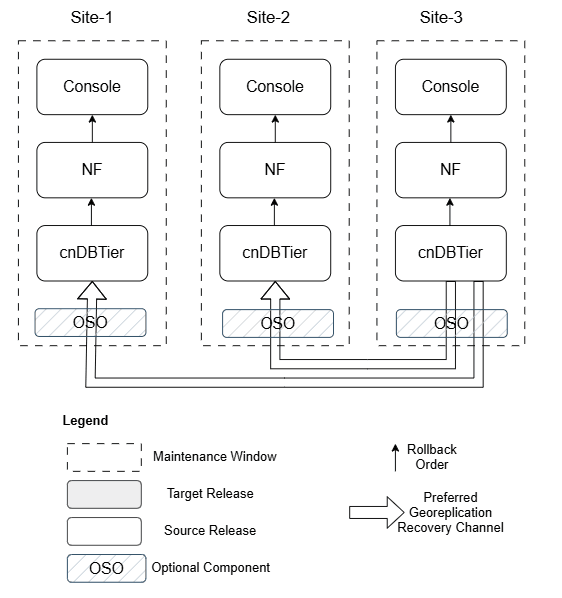

5.8 Scenario 8: All Sites Rollback

This scenario addresses the rare situation in which a decision is made to roll back changes across all three sites.

Figure 5-8 Scenario 8: All Sites Rollback

- Perform the Site-3 rollback as described in Scenario 6: Site-3 Rollback.

- Once Site-3 rollback is complete, perform Site-2 rollback.

- Georeplication Recovery (GRR) for Site-2 is performed from Site-3 to facilitate a consistent state across all sites.

- Once Site-2 rollback is complete, perform Site-1 rollback.

- Georeplication Recovery (GRR) for Site-1 is performed from Site-3 to facilitate a consistent state across all sites.

For detailed rollback procedures, see the respective installation, upgrade, and fault recovery guides.

For more information on the GRR procedure, see Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.
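The all-sites rollback sequence above can be expressed as a short plan generator. This is a sketch, not a product tool: `all_sites_rollback_plan` is a hypothetical name, and it assumes, as in this scenario, that the last-upgraded site is rolled back first and then serves as the GRR source for the remaining sites.

```python
def all_sites_rollback_plan(sites_in_upgrade_order: list[str]) -> list[str]:
    """Build the rollback plan for all sites, in reverse order of upgrade."""
    *earlier_sites, last_site = sites_in_upgrade_order
    plan = [f"Roll back {last_site}"]
    for site in reversed(earlier_sites):
        plan.append(f"Roll back {site}")
        # GRR for each remaining site is performed from the site rolled back first.
        plan.append(f"Perform GRR for {site} from {last_site}")
    return plan


if __name__ == "__main__":
    for step in all_sites_rollback_plan(["Site-1", "Site-2", "Site-3"]):
        print(step)
```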

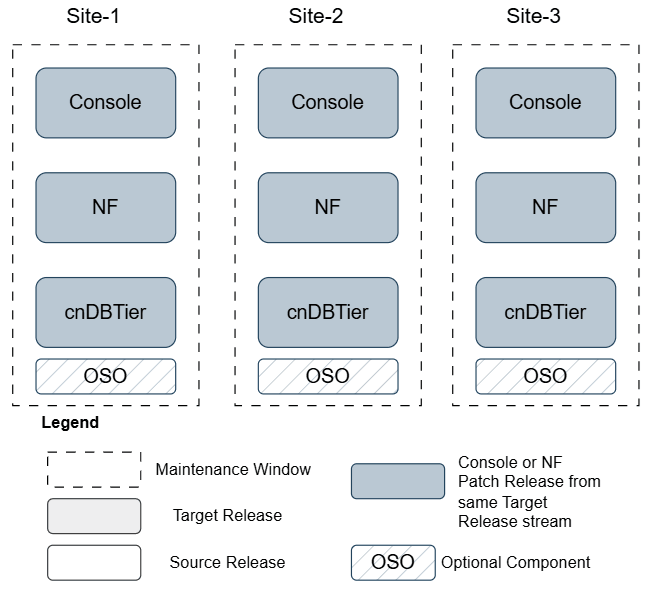

5.9 Scenario 9: Patch Upgrade

This scenario provides the patch release upgrade guidelines for Site-3.

Figure 5-9 Scenario 9: Patch Upgrade

- A patch release upgrade updates software within the same release stream, delivering incremental improvements such as bug fixes, security vulnerability patches, and enhancements to system stability or performance. For example, upgrading a component from version 25.1.100 to 25.1.101 is considered a patch release upgrade.

- Patch upgrades are performed on individual components one by one, using a methodology similar to that of a full upgrade. This incremental approach helps to catch issues early, allowing for easy rollback or corrective action before broader deployment. Patch upgrades minimize operational disruption and, if needed, follow the same troubleshooting and rollback logic as main releases. For detailed upgrade procedures, see the respective installation, upgrade, and fault recovery guides.

Note:

All patch components (Console, NF, cnDBTier) must align to the same patch release stream (for example, all on 24.3.x, 24.2.x, 25.1.1xx, 25.1.2xx). Mixed patch versions across these components are not supported.
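A pre-check for the alignment rule in the note could look like the sketch below. The mapping from a version to its stream is an assumption inferred from the examples in the note (a three-digit last segment such as 25.1.101 maps to 25.1.1xx, otherwise the stream is the first two segments followed by x); the function names are hypothetical, and the authoritative compatibility rules are in the release documentation.

```python
def release_stream(version: str) -> str:
    """Map a version to its patch release stream (assumed rule, see lead-in)."""
    major, minor, patch = version.split(".")
    if len(patch) == 3:
        # For example, 25.1.101 belongs to the 25.1.1xx stream.
        return f"{major}.{minor}.{patch[0]}xx"
    return f"{major}.{minor}.x"


def same_stream(component_versions: dict[str, str]) -> bool:
    """Return True if all components are on the same patch release stream."""
    streams = {release_stream(v) for v in component_versions.values()}
    return len(streams) == 1


if __name__ == "__main__":
    aligned = {"Console": "25.1.100", "NF": "25.1.101", "cnDBTier": "25.1.100"}
    mixed = {"Console": "25.1.100", "NF": "25.1.200", "cnDBTier": "25.1.100"}
    print(same_stream(aligned), same_stream(mixed))
```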

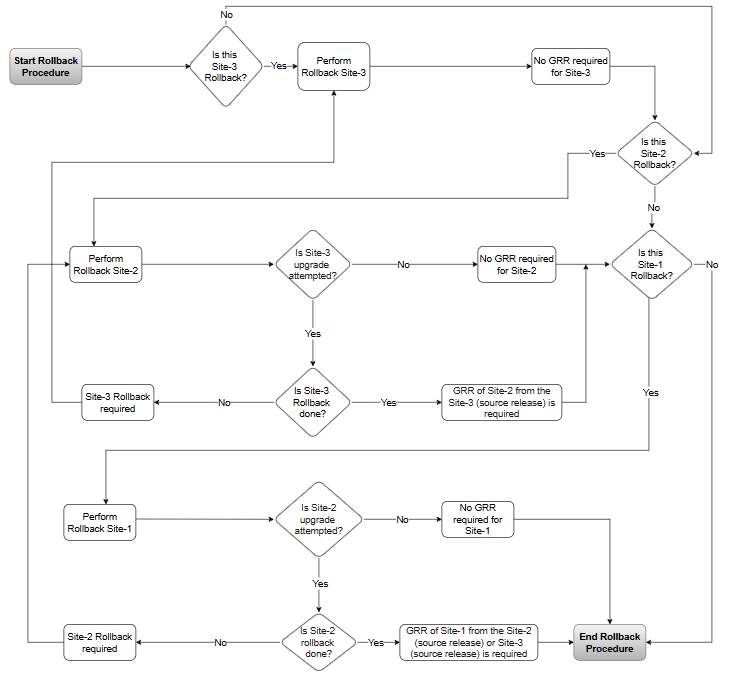

5.10 Georeplication Recovery (GRR) procedures to follow after Rollback

The following flow chart describes the rollback guidance based on the site that you are rolling back:

Figure 5-10 Georeplication Recovery (GRR) procedures to follow after Rollback

Terminology:

- Source Release: The software release previously deployed on the georedundant site set. (An upgrade goes from the Source Release to the Target Release, and hence a rollback goes from the Target Release to the Source Release.)

- Target Release: The new software release to which the georedundant site set is upgraded. (An upgrade goes from the Source Release to the Target Release, and hence a rollback goes from the Target Release to the Source Release.)

- Site-1: Represents the site on which the upgrade is done first.

Note:

Run helm ls <DbTier release name> -n <namespace> to determine the cnDBTier upgrade order in the sites. For instance, the site that is upgraded first is considered as Site-1, and so on.

- Site-2: Represents the site on which the upgrade is done after Site-1.

- Site-3: Represents the site that is upgraded last.

- Hence, the order of upgrade is Site-1, then Site-2, and finally Site-3.

Note:

If a rollback of multiple sites is required in extreme conditions, it should follow the reverse order of the upgrade: Site-3 is rolled back first, followed by Site-2, and then Site-1.
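The site labeling and the reverse-order rule can be sketched as follows. The sketch assumes the operator has already collected the cnDBTier upgrade time for each site (for example, from the helm ls output mentioned above); the cluster names, timestamps, and function names are hypothetical.

```python
from datetime import datetime


def label_sites(upgrade_times: dict[str, datetime]) -> dict[str, str]:
    """Label sites Site-1, Site-2, ... in the order in which they were upgraded."""
    ordered = sorted(upgrade_times, key=upgrade_times.get)
    return {f"Site-{i}": name for i, name in enumerate(ordered, start=1)}


def rollback_sequence(labels: dict[str, str]) -> list[str]:
    """Return the site labels in reverse order of upgrade."""
    return list(reversed(list(labels)))


if __name__ == "__main__":
    # Hypothetical cluster names and upgrade times.
    times = {
        "cluster-a": datetime(2025, 1, 3),
        "cluster-b": datetime(2025, 1, 1),
        "cluster-c": datetime(2025, 1, 2),
    }
    labels = label_sites(times)
    print(labels)
    print(rollback_sequence(labels))
```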