2 Installing OCNADD

This chapter provides information about installing Oracle Communications Network Analytics Data Director (OCNADD) on the following supported platforms:

- Oracle Communications Cloud Native Core, Cloud Native Environment (CNE)

- VMware Tanzu Application Platform (TANZU)

Note:

This document describes the OCNADD installation on CNE. The installation procedure on TANZU is similar to the installation on CNE, and any steps unique to the TANZU platform are explicitly mentioned.

2.1 Prerequisites

Before installing and configuring OCNADD, make sure that the following requirements are met:

2.1.1 Software Requirements

This section lists the software that must be installed before installing OCNADD:

Table 2-1 Mandatory Software

| Software | Version |
|---|---|
| Kubernetes | 1.27.x, 1.26.x, 1.25.x |
| Helm | 3.12.3 |
| Docker/Podman | 4.4.1 |
| kubectl-hns | v1.1.0 |
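The Kubernetes row of Table 2-1 can be checked against the version reported by the cluster with a small helper. This is a minimal sketch, not part of the OCNADD package. The function name k8s_supported is hypothetical. It compares only the major.minor part of a version string such as v1.27.3 against the 1.25.x to 1.27.x releases listed above.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when a Kubernetes version string such as
# "v1.27.3" or "1.26.0" belongs to a minor release listed in Table 2-1.
k8s_supported() {
    # Strip a leading "v", then keep only the "major.minor" part.
    k8s_minor=$(printf '%s\n' "${1#v}" | cut -d. -f1,2)
    case "$k8s_minor" in
        1.25|1.26|1.27) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, if jq is installed (an assumption), you could run k8s_supported "$(kubectl version -o json | jq -r .serverVersion.gitVersion)" && echo supported. The patch level is ignored on purpose, because the table lists only minor releases.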

Note:

OCNADD 23.4.0.0.1 supports CNE 23.4.x, 23.3.x, and 23.2.x.

To check the CNE, Kubernetes, and Helm versions, run the following commands:

echo $OCCNE_VERSION

kubectl version

helm version

Note:

Starting with CNE 1.8.0, Podman is the preferred container platform instead of Docker. For more information on installing and configuring Podman, see the Oracle Communications Cloud Native Core, Cloud Native Environment (CNE) Installation Guide.

If you are installing OCNADD on TANZU, the following software must be installed:

Table 2-2 Mandatory Software

| Software | Version |
|---|---|
| Tanzu | 1.4.1 |

To check the Tanzu version, run the following command:

tanzu version

Note:

Tanzu was supported in release 22.4.0. Release 23.4.0.0.1 has not been tested on Tanzu.

Depending on the requirement, you may have to install additional software while deploying OCNADD. The following table lists the additional software items, along with their supported versions and usage:

Table 2-3 Additional Software

| Software | Version | Required For |
|---|---|---|
| Prometheus-Operator | 2.44.0 | Metrics |
| Metallb | 0.13.11 | LoadBalancer |
| cnDBTier | 23.4.x, 23.3.x, 23.2.x | MySQL Database |
| hnc-controller-manager | v1.1.0 | To manage hns |

Note:

- Some of this software is available by default if OCNADD is deployed in Oracle Communications Cloud Native Core, Cloud Native Environment (CNE).

- Install any additional software that is not available by default with CNE.

- If you are deploying OCNADD in any other environment, for instance, TANZU, all of the software listed above must be installed before installing OCNADD.

To check which of these software items are already installed, run the following command:

helm ls -A

2.1.2 Environment Setup Requirements

This section provides information on environment setup requirements for installing Oracle Communications Network Analytics Data Director (OCNADD).

Network Access

The Kubernetes cluster hosts must have network access to the following repositories:

- Local docker image repository – It contains the OCNADD docker images.

To check if the Kubernetes cluster hosts can access the local docker image repository, pull any image with an image-tag, using the following command:

podman pull docker-repo/image-name:image-tag

where,

docker-repo is the IP address or hostname of the docker image repository.

image-name is the docker image name.

image-tag is the tag assigned to the docker image used for the OCNADD pod.

- Local Helm repository – It contains the OCNADD Helm charts.

To check if the Kubernetes cluster hosts can access the local Helm repository, run the following command:

helm repo update

- Service FQDNs or IP addresses of the required OCNADD services, for instance, the Kafka brokers, must be discoverable from outside the cluster. These services must be publicly exposed so that ingress messages to OCNADD can come from outside of Kubernetes.
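The image pull check above can be wrapped in a function that reports the result and works with either container CLI. This is a minimal sketch that assumes podman as the default. The check_repo_access function and the CONTAINER_CLI variable are hypothetical names and are not part of OCNADD.

```shell
#!/bin/sh
# Hypothetical connectivity check: try to pull one image from the local
# repository and report whether it worked. Set CONTAINER_CLI=docker to use docker.
check_repo_access() {
    ref="$1/$2:$3"    # docker-repo/image-name:image-tag
    if "${CONTAINER_CLI:-podman}" pull "$ref" >/dev/null 2>&1; then
        echo "reachable: $ref"
    else
        echo "NOT reachable: $ref"
        return 1
    fi
}
```

For example, check_repo_access <docker-repo> <image-name> <image-tag> prints one line and returns a non-zero status on failure, so the function can be chained with helm repo update in a pre-check script.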

Client Machine Requirements

Note:

Run all the kubectl and helm commands in this guide on a system appropriate to the infrastructure and deployment. This could be a client machine, such as a virtual machine, server, local desktop, and so on.

This section describes the requirements for client machine, that is, the machine used by the user to run deployment commands.

The client machine must meet the following requirements:

- network access to the Helm repository and the docker image repository

- a configured Helm repository

- network access to the Kubernetes cluster

- the environment settings required to run the kubectl, podman, and docker commands. The environment must have privileges to create namespaces in the Kubernetes cluster.

- the Helm client installed with the push plugin. Configure the environment so that the helm install command deploys the software in the Kubernetes cluster.

Server or Space Requirements

For information about the server or space requirements, see the following documents:

- Oracle Communications Cloud Native Core, Cloud Native Environment (CNE) Installation, Upgrade, and Fault Recovery Guide

- Oracle Communications Network Analytics Data Director Benchmarking Guide

- Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide

OCNADD GUI Requirements

- https://static.oracle.com

- https://static-stage.oracle.com

cnDBTier Requirement

OCNADD supports cnDBTier in a CNE environment. cnDBTier must be up and running in case of containerized Cloud Native Environment. For more information about the installation procedure, see Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.

Note:

- If cnDBTier 23.2.x or a later release is installed, set the ndb_allow_copying_alter_table parameter to 'ON' in the cnDBTier custom values file (dbtier_23.2.0_custom_values_23.2.0.yaml) and perform a cnDBTier upgrade before any install, upgrade, rollback, or fault recovery procedure is performed for OCNADD. Once the activity is completed, set the parameter back to its default value, 'OFF', and perform the cnDBTier upgrade again to apply the parameter change.

- To perform a cnDBTier upgrade, see Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.

Data Director Images

The following table lists Data Director microservices and their corresponding images:

Table 2-4 OCNADD images

| Microservices | Image | Tag |
|---|---|---|
| OCNADD-Configuration | ocnaddconfiguration | 23.4.0.0.1 |
| OCNADD-ConsumerAdapter | ocnaddconsumeradapter | 23.4.0.0.1 |
| OCNADD-Aggregation | ocnaddnrfaggregation, ocnaddscpaggregation, ocnaddseppaggregation | 23.4.0.0.1 |
| OCNADD-Alarm | ocnaddalarm | 23.4.0.0.1 |
| OCNADD-HealthMonitoring | ocnaddhealthmonitoring | 23.4.0.0.1 |
| OCNADD-Kafka | ocnaddkafkahealthclient | 23.4.0.0.1 |
| OCNADD-Admin | ocnaddadminservice | 23.4.0.0.1 |
| OCNADD-UIrouter | ocnadduirouter | 23.4.0.0.1 |
| OCNADD-GUI | ocnaddgui | 23.4.0.0.1 |
| OCNADD-Filter | ocnaddfilter | 23.4.0.0.1 |
| OCNADD-Correlation | ocnaddcorrelation | 23.4.0.0.1 |

Note:

The service images are prefixed with the OCNADD release name.

2.1.3 Resource Requirements

This section describes the resource requirements to install and run Oracle Communications Network Analytics Data Director (OCNADD).

OCNADD supports centralized deployment, where each data director site has been logically replaced by a worker group. The deployment consists of a management group and multiple worker groups. Traffic processing services are managed within the worker group, while configuration and administration services are managed within the management group.

In the case of centralized deployment, resource planning should consider the following points:

- There will be only one management group consisting of the following services:

- ocnaddconfiguration

- ocnaddalarm

- ocnaddadmin

- ocnaddhealthmonitoring

- ocnaddgui

- ocnadduirouter

- There can be one or more worker groups managed by the single management group. Each worker group logically represents a standalone data director site with respect to the traffic processing function, and includes the following services:

- ocnaddkafka

- zookeeper

- ocnaddnrfaggregation

- ocnaddseppaggregation

- ocnaddscpaggregation

- ocnaddcorrelation

- ocnaddfilter

- ocnaddconsumeradapter

- The customer needs to plan for the resources corresponding to the management group and the number of worker groups required.

OCNADD supports various other deployment models. Before finalizing the resource requirements, see the OCNADD Deployment Models section. The resource usage and available features vary based on the deployment model selected. From release 23.4.0 onward, the centralized deployment model, with one management group and at least one worker group, is the default model for fresh installations.

OCNADD Resource Requirements

Table 2-5 OCNADD Resource Requirements (Based on HTTP2 Data Feed)

| OCNADD Services | vCPU Req | vCPU Limit | Memory Req (Gi) | Memory Limit (Gi) | Min Replica | Max Replica | Partitions | Topic Name |
|---|---|---|---|---|---|---|---|---|
| ocnaddconfiguration | 1 | 1 | 1 | 1 | 1 | 1 | - | - |
| ocnaddalarm | 1 | 1 | 1 | 1 | 1 | 1 | - | - |
| ocnaddadmin | 1 | 1 | 1 | 1 | 1 | 1 | - | - |
| ocnaddhealthmonitoring | 1 | 1 | 1 | 1 | 1 | 1 | - | - |
| ocnaddscpaggregation | 2 | 2 | 2 | 2 | 1 | 3 | 18 | SCP |
| ocnaddnrfaggregation | 2 | 2 | 2 | 2 | 1 | 1 | 6 | NRF |
| ocnaddseppaggregation | 2 | 2 | 2 | 2 | 1 | 2 | 12 | SEPP |
| ocnaddadapter | 3 | 3 | 4 | 4 | 2 | 14 | 126 | MAIN |
| ocnaddkafka | 6 | 6 | 64 | 64 | 4 | 4 | - | - |
| zookeeper | 1 | 1 | 2 | 2 | 3 | 3 | - | - |
| ocnaddgui | 1 | 2 | 1 | 1 | 1 | 2 | - | - |
| ocnadduirouter | 1 | 2 | 1 | 1 | 1 | 2 | - | - |
| ocnaddcorrelation | 3 | 3 | 24 | 64 | 1 | 4 | - | - |
| ocnaddfilter | 2 | 2 | 3 | 3 | 1 | 4 | - | - |
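For a first rough estimate of the centralized deployment, the request columns of Table 2-5 can be totaled for one management group plus N worker groups. This is a sketch under stated assumptions, not a sizing tool. The plan_resources function is hypothetical, and services are assigned to groups as listed in this section. It counts only vCPU and memory requests at the minimum replica counts, and every service in the table is included. Limits, maximum replicas, and ephemeral storage are ignored, and it does not replace the profiles in the Benchmarking Guide.

```shell
#!/bin/sh
# Request values at minimum replicas, copied from Table 2-5.
# Columns: group service vcpu_request memory_request_gi min_replicas
OCNADD_TABLE_2_5='mgmt ocnaddconfiguration 1 1 1
mgmt ocnaddalarm 1 1 1
mgmt ocnaddadmin 1 1 1
mgmt ocnaddhealthmonitoring 1 1 1
mgmt ocnaddgui 1 1 1
mgmt ocnadduirouter 1 1 1
worker ocnaddscpaggregation 2 2 1
worker ocnaddnrfaggregation 2 2 1
worker ocnaddseppaggregation 2 2 1
worker ocnaddadapter 3 4 2
worker ocnaddkafka 6 64 4
worker zookeeper 1 2 3
worker ocnaddcorrelation 3 24 1
worker ocnaddfilter 2 3 1'

# Hypothetical helper: total vCPU and memory requests for one management
# group plus N worker groups (N defaults to 1).
plan_resources() {
    printf '%s\n' "$OCNADD_TABLE_2_5" | awk -v n="${1:-1}" '
        { cpu = $3 * $5; mem = $4 * $5 }
        $1 == "mgmt"   { mc += cpu; mm += mem }
        $1 == "worker" { wc += cpu; wm += mem }
        END { printf "vCPU=%d MemoryGi=%d\n", mc + n * wc, mm + n * wm }'
}
```

With these values, plan_resources 1 prints vCPU=50 MemoryGi=309, and each additional worker group adds 44 vCPU and 303 Gi. Most of that comes from the four ocnaddkafka replicas.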

Note:

For detailed information on the OCNADD profiles, see the "Profile Resource Requirements" section in the Oracle Communications Network Analytics Data Director Benchmarking Guide.

Ephemeral Storage Requirements

Table 2-6 Ephemeral Storage

| Service Name | Ephemeral Storage Min (Mi) | Ephemeral Storage Max (Mi) |
|---|---|---|
| <app-name>-adapter | 200 | 800 |
| ocnaddadminservice | 100 | 200 |
| ocnaddalarm | 100 | 500 |
| ocnaddhealthmonitoring | 100 | 500 |
| ocnaddscpaggregation | 100 | 500 |
| ocnaddseppaggregation | 100 | 500 |
| ocnaddnrfaggregation | 100 | 500 |
| ocnaddconfiguration | 100 | 500 |
| ocnaddcorrelation | 100 | 500 |
| ocnaddfilter | 100 | 500 |

2.2 Installation Sequence

This section provides information on how to install Oracle Communications Network Analytics Data Director (OCNADD).

Note:

- It is recommended to follow the steps in the given sequence for preparing and installing OCNADD.

- Make sure you have the required software installed before proceeding with the installation.

- This is the installation procedure for a standard OCNADD deployment. To install a more secure deployment (for example, adding users, changing passwords, or enabling mTLS), see Oracle Communications Network Analytics Suite Security Guide.

2.2.1 Pre-Installation Tasks

To install OCNADD, perform the preinstallation steps described in this section.

Note:

The kubectl commands may vary based on the platform used for deploying OCNADD. It is recommended to replace kubectl with the environment-specific command-line tool to configure Kubernetes resources through the kube-api server. The instructions in this document follow the OCCNE version of the kube-api server.

2.2.1.1 Downloading OCNADD Package

To download the Oracle Communications Network Analytics Data Director (OCNADD) package from MOS, perform the following steps:

- Log in to My Oracle Support with your credentials.

- Select the Patches and Updates tab to locate the patch.

- In the Patch Search window, click Product or Family (Advanced).

- Enter "Oracle Communications Network Analytics Data Director" in the Product field, and select "Oracle Communications Network Analytics Data Director 23.4.0.0.1" from the Release drop-down list.

- Click Search. The Patch Advanced Search Results displays a list of releases.

- Select the required patch from the search results. The Patch Details window opens.

- Click Download. The File Download window appears.

- Click the <p********_<release_number>_Tekelec>.zip file to download the OCNADD package file.

- Extract the zip file on the system where the network function must be installed.

To download the Oracle Communications Network Analytics Data Director package from the edelivery portal, perform the following steps:

- Log in to the edelivery portal with your credentials. The following screen appears:

Figure 2-1 edelivery portal

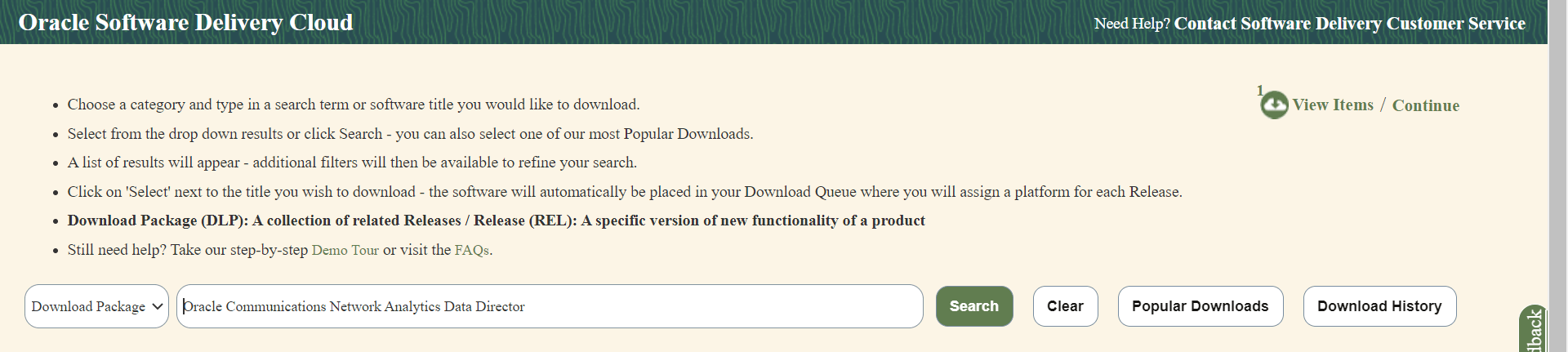

- Select the Download Package option from the All Categories drop-down list.

- Enter Oracle Communications Network Analytics Data Director in the search bar.

Figure 2-2 Search

- A list of the release packages available for download is displayed. Select the release package you want to download; the package is downloaded automatically.

2.2.1.2 Pushing the Images to Customer Docker Registry

Docker Images

Important:

kubectl commands might vary based on the platform deployment. Replace kubectl with Kubernetes environment-specific command line tool to configure Kubernetes resources through kube-api server. The instructions provided in this document are as per the Oracle Communications Cloud Native Core, Cloud Native Environment (CNE) version of kube-api server.

The Oracle Communications Network Analytics Data Director (OCNADD) deployment package includes ready-to-use docker images and Helm charts to help orchestrate containers in Kubernetes. Communication between the pods of the OCNADD services is preconfigured in the Helm charts.

The following table lists the Docker images of OCNADD:

Table 2-7 Docker Images for OCNADD

| Service Name | Docker Image Name | Image Tag |
|---|---|---|
| OCNADD-Configuration | ocnaddconfiguration | 2.3.67 |
| OCNADD-ConsumerAdapter | <app-name>-adapter | 2.8.3 |
| OCNADD-Aggregation | ocnaddnrfaggregation, ocnaddscpaggregation, ocnaddseppaggregation | 2.6.4 |
| OCNADD-Alarm | ocnaddalarm | 2.5.4 |
| OCNADD-HealthMonitoring | ocnaddhealthmonitoring | 2.6.2 |
| OCNADD-Kafka | kafka-broker-x | 3.6.0:2.0.23 |
| OCNADD-Admin | ocnaddadminservice | 2.6.40 |
| OCNADD-UIRouter | ocnadduirouter | 23.4.1 |
| OCNADD-GUI | ocnaddgui | 23.4.1 |
| OCNADD-Backup-Restore | ocnaddbackuprestore | 2.0.2 |
| OCNADD-Filter | ocnaddfilter | 1.6.9 |
| OCNADD-Correlation | ocnaddcorrelation | 1.5.6 |

Note:

- The service image names are prefixed with the OCNADD release name.

- The above table lists the default OCNADD microservices and their respective images. However, a few additional required images are also delivered as part of the OCNADD package. You must push these images along with the default images.

Pushing Docker Images

To push the images to the registry:

- Untar the OCNADD package zip file to retrieve the OCNADD docker image tar file:

tar -xvzf ocnadd_pkg_23_4_0_0_1.tar.gz

cd ocnadd_pkg_23_4_0_0_1

tar -xvzf ocnadd-23.4.0.0.1.tar.gz

The directory consists of the following:

- OCNADD Docker Images File: ocnadd-images-23.4.0.0.1.tar

- Helm File: ocnadd-23.4.0.0.1.tgz

- Readme txt File: Readme.txt

- Custom Templates: custom-templates.zip

- ssl_certs folder: ssl_certs

- Run the following command to change the directory, and then run one of the following commands to load the ocnadd-images-23.4.0.0.1.tar file:

cd ocnadd-package-23.4.0

docker load --input /IMAGE_PATH/ocnadd-images-23.4.0.0.1.tar

podman load --input /IMAGE_PATH/ocnadd-images-23.4.0.0.1.tar

- Run one of the following commands to verify that the images are loaded:

docker images

podman images

Compare the list of images shown in the output with the list of images in Table 2-7. If the lists do not match, reload the image tar file.

- Run one of the following commands to tag each imported image to the registry:

docker tag <image-name>:<image-tag> <docker-repo>/<image-name>:<image-tag>

podman tag <image-name>:<image-tag> <docker-repo>/<image-name>:<image-tag>

- Run one of the following commands to push the image to the registry:

docker push <docker-repo>/<image-name>:<image-tag>

podman push <docker-repo>/<image-name>:<image-tag>

Note:

It is recommended to configure the docker certificate before running the push command to access the customer registry through HTTPS; otherwise, the docker push command may fail.

- Run the following command to push the Helm charts to the Helm repository:

helm push <image_name>.tgz <helm_repo>

- Run the following command to extract the Helm charts:

tar -xvzf ocnadd-23.4.0.0.1.tgz

- Run the following command to unzip the custom-templates.zip file:

unzip custom-templates.zip
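Tagging and pushing each image one by one, as in the steps above, can be done in a loop. This is a minimal sketch with the following assumptions:

- The loaded images are passed as name:tag arguments.
- The hypothetical CONTAINER_CLI variable selects podman (the default) or docker.
- The image names themselves contain no "/". The function keeps only the part after the last "/", so a local prefix such as localhost/ is not carried into the registry path.

push_images is a hypothetical function, not an OCNADD script.

```shell
#!/bin/sh
# Hypothetical helper: retag each loaded image for the customer registry
# and push it. Usage: push_images <docker-repo> <image:tag> [<image:tag> ...]
push_images() {
    registry=$1; shift
    cli=${CONTAINER_CLI:-podman}
    for img in "$@"; do
        # Keep only "name:tag", dropping any local repository prefix.
        short=${img##*/}
        "$cli" tag "$img" "$registry/$short" || return 1
        "$cli" push "$registry/$short" || return 1
    done
}
```

The image list can come from podman images --format '{{.Repository}}:{{.Tag}}'. Filter that list down to the images delivered in the OCNADD package first, including the additional images mentioned in the note under Table 2-7. The function stops at the first failed tag or push, so a registry certificate problem surfaces immediately.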

2.2.1.3 Creating OCNADD Namespace

This section explains how to verify or create namespaces in the system. Create the namespaces for the management group and for each worker group as described here.

To verify if the required namespace already exists in the system, run the following command:

kubectl get namespaces

If the namespace exists, you may continue with the next steps of installation.

If the required namespace is not available, create it using the following commands:

Note:

This step requires hierarchical namespace creation for the management group and worker group(s).

kubectl create namespace <required parent-namespace>

kubectl hns create <required child-namespace> --namespace <parent-namespace>

For example:

kubectl create namespace dd-mgmt-group

kubectl hns create dd-worker-group1 --namespace dd-mgmt-group

To verify the namespace hierarchy, run the following command:

kubectl hns tree <parent-namespace>

For example:

# kubectl hns tree dd-mgmt-group

dd-mgmt-group

└── [s] dd-worker-group1

[s] indicates subnamespaces
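When several worker groups are planned, the kubectl create namespace and kubectl hns create commands above can be run in a loop. This is a minimal sketch that assumes the worker-group subnamespaces are numbered <prefix>1 to <prefix>N. The create_dd_namespaces function and the KUBECTL override variable are hypothetical. KUBECTL lets you substitute the environment-specific command-line tool mentioned in the pre-installation note.

```shell
#!/bin/sh
# Hypothetical helper: ensure the management-group namespace exists, then
# create worker-group subnamespaces <prefix>1..<prefix>N under it (hns plugin).
create_dd_namespaces() {
    parent=$1; prefix=$2; count=$3
    kube=${KUBECTL:-kubectl}
    # Create the parent only if it does not exist yet.
    "$kube" get namespace "$parent" >/dev/null 2>&1 ||
        "$kube" create namespace "$parent" || return 1
    i=1
    while [ "$i" -le "$count" ]; do
        "$kube" hns create "$prefix$i" --namespace "$parent" || return 1
        i=$((i + 1))
    done
}
```

For example, create_dd_namespaces dd-mgmt-group dd-worker-group 2 would create dd-worker-group1 and dd-worker-group2 under dd-mgmt-group. You can then check the result with kubectl hns tree dd-mgmt-group.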

Naming Convention for Namespaces

While choosing the name of the namespace where you wish to deploy OCNADD, make sure the following requirements are met:

- starts and ends with an alphanumeric character

- contains 63 characters or less

- contains only lowercase alphanumeric characters or '-'

Note:

Avoid using the prefix kube- when creating a namespace, because this prefix is reserved for Kubernetes system namespaces.
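The naming rules above can be checked before any namespace is created. Kubernetes requires namespace names to be RFC 1123 DNS labels, which is why only lowercase letters are accepted. The valid_ns_name function below is a hypothetical helper and only a sketch. It also rejects the kube- prefix, following the recommendation in the note.

```shell
#!/bin/sh
# Hypothetical check: lowercase alphanumerics or '-', starting and ending
# with an alphanumeric, at most 63 characters, and no reserved "kube-" prefix.
valid_ns_name() {
    name=$1
    [ "${#name}" -le 63 ] || return 1
    case "$name" in
        kube-*) return 1 ;;
    esac
    printf '%s\n' "$name" | grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?$'
}
```

For example, valid_ns_name dd-worker-group1 succeeds, while valid_ns_name DD-Mgmt and valid_ns_name dd- both fail.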

2.2.1.4 Creating Service Account, Role, and Role Binding

This section is optional. It describes how to manually create a service account, role, and rolebinding, and is required only when you need to create them manually before installing OCNADD. Skip this section if you choose to have them created by default from the Helm charts.

In the case of centralized deployment, this procedure needs to be repeated for each of the management group and worker group(s).

Note:

The secret(s) should exist in the same namespace where OCNADD is being deployed. This helps to bind the Kubernetes role with the given service account.

Creating Service Account, Role, and RoleBinding for Management Group

To create the service account, role, and rolebinding:

- Prepare OCNADD Management Group Resource File:

- Run the following command to create an OCNADD resource file specifically for the management group:

vi <ocnadd-mgmt-resource-file>.yaml

Replace <ocnadd-mgmt-resource-file> with the required name for the management group resource file.

- For example:

vi ocnadd-mgmt-resource-template.yaml

- Update OCNADD Management Group Resource Template:

- Update the ocnadd-mgmt-resource-template.yaml file with release-specific information.

Note:

Replace <namespace> with the OCNADD management group namespace and <custom-name> with a custom name. Preferably, use a custom name similar to the management group namespace name to avoid upgrade issues.

- The following is a sample template for the ocnadd-mgmt-resource-template.yaml file:

## Sample template start #
apiVersion: v1
kind: ServiceAccount
metadata:
  name: <custom-name>-sa-ocnadd
  namespace: <namespace>
automountServiceAccountToken: false
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: <custom-name>-cr
rules:
- apiGroups: [""]
  resources: ["pods","configmaps","services","secrets","resourcequotas","events","persistentvolumes","persistentvolumeclaims"]
  verbs: ["*"]
- apiGroups: ["extensions"]
  resources: ["ingresses"]
  verbs: ["create", "get", "delete"]
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["get"]
- apiGroups: ["scheduling.volcano.sh"]
  resources: ["podgroups", "queues", "queues/status"]
  verbs: ["get", "list", "watch", "create", "delete", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: <custom-name>-crb
roleRef:
  apiGroup: ""
  kind: Role
  name: <custom-name>-cr
subjects:
- kind: ServiceAccount
  name: <custom-name>-sa-ocnadd
  namespace: <namespace>
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: <custom-name>-crb-policy
roleRef:
  apiGroup: ""
  kind: ClusterRole
  name: psp:privileged
subjects:
- kind: ServiceAccount
  name: <custom-name>-sa-ocnadd
  namespace: <namespace>
---
## Sample template end #

- Create Service Account, Role, and RoleBinding:

- Run the following command to create the service account, role, and rolebinding for the management group:

kubectl -n <dd-mgmt-group-namespace> create -f ocnadd-mgmt-resource-template.yaml

Replace <dd-mgmt-group-namespace> with the namespace where the OCNADD management group will be deployed.

- For example:

$ kubectl -n dd-mgmt-group create -f ocnadd-mgmt-resource-template.yaml

Note:

- Update the custom values file ocnadd-custom-values-23.4.0.0.1-mgmt-group.yaml, created or copied from ocnadd-custom-values-23.4.0.0.1.yaml in the "Custom Templates" folder.

- After adding the global service account to the management group, change the following parameters to false in ocnadd-custom-values-23.4.0.0.1-mgmt-group.yaml. Failing to do so might result in installation failure due to CRD creation and deletion:

serviceAccount:
  create: false
  name: <custom-name>  ## --> Change this to <custom-name> provided in ocnadd-mgmt-resource-template.yaml above ##
  upgrade: false
clusterRole:
  create: false
  name: <custom-name>  ## --> Change this to <custom-name> provided in ocnadd-mgmt-resource-template.yaml above ##
clusterRoleBinding:
  create: false
  name: <custom-name>  ## --> Change this to <custom-name> provided in ocnadd-mgmt-resource-template.yaml above ##

- Ensure that the namespace used in ocnadd-mgmt-resource-template.yaml matches the following parameters in ocnadd-custom-values-23.4.0.0.1-mgmt-group.yaml:

global.deployment.management_namespace

global.cluster.nameSpace.name
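Instead of editing the placeholders by hand, you can render the resource template with sed. This is a minimal sketch. The render_rbac_template function is hypothetical, and it assumes the custom name and namespace contain no "|" character, because "|" is the sed delimiter used here.

```shell
#!/bin/sh
# Hypothetical helper: substitute the <custom-name> and <namespace>
# placeholders of a resource template and print the result.
render_rbac_template() {
    template=$1; custom_name=$2; namespace=$3
    sed -e "s|<custom-name>|$custom_name|g" \
        -e "s|<namespace>|$namespace|g" "$template"
}
```

For example, render_rbac_template ocnadd-mgmt-resource-template.yaml dd-mgmt-group dd-mgmt-group > ocnadd-mgmt-resource.yaml writes a filled-in copy. You can check the copy with kubectl -n dd-mgmt-group create -f ocnadd-mgmt-resource.yaml --dry-run=client before creating the objects for real. Use the same custom name in the serviceAccount, clusterRole, and clusterRoleBinding parameters of the custom values file.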

Creating Service Account, Role, and RoleBinding for Worker Group

To create the service account, role, and rolebinding for a worker group, perform the following steps:

Note:

Repeat the following procedure for each worker group that needs to be added to the centralized deployment.

- Prepare OCNADD Worker Group Resource File:

- Run the following command to create an OCNADD resource file specifically for the worker group:

vi <ocnadd-wg1-resource-file>.yaml

Replace <ocnadd-wg1-resource-file> with the required name for the worker group resource file.

- For example:

vi ocnadd-wg1-resource-template.yaml

- Update OCNADD Worker Group Resource Template:

- Update the ocnadd-wg1-resource-template.yaml file with release-specific information.

Note:

Replace <namespace> with the OCNADD worker group namespace and <custom-name> with a custom name. Preferably, use a custom name similar to the worker group namespace name to avoid upgrade issues.

- The following is a sample template for the ocnadd-wg1-resource-template.yaml file:

## Sample template start #
apiVersion: v1
kind: ServiceAccount
metadata:
  name: <custom-name>-sa-ocnadd
  namespace: <namespace>
automountServiceAccountToken: false
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: <custom-name>-cr
rules:
- apiGroups: [""]
  resources: ["pods","configmaps","services","secrets","resourcequotas","events","persistentvolumes","persistentvolumeclaims"]
  verbs: ["*"]
- apiGroups: ["extensions"]
  resources: ["ingresses"]
  verbs: ["create", "get", "delete"]
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["get"]
- apiGroups: ["scheduling.volcano.sh"]
  resources: ["podgroups", "queues", "queues/status"]
  verbs: ["get", "list", "watch", "create", "delete", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: <custom-name>-crb
roleRef:
  apiGroup: ""
  kind: Role
  name: <custom-name>-cr
subjects:
- kind: ServiceAccount
  name: <custom-name>-sa-ocnadd
  namespace: <namespace>
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: <custom-name>-crb-policy
roleRef:
  apiGroup: ""
  kind: ClusterRole
  name: psp:privileged
subjects:
- kind: ServiceAccount
  name: <custom-name>-sa-ocnadd
  namespace: <namespace>
---
## Sample template end #

- Create Service Account, Role, and RoleBinding:

- Run the following command to create the service account, role, and rolebinding for the worker group:

kubectl -n <dd-worker-group-namespace> create -f ocnadd-wg1-resource-template.yaml

Replace <dd-worker-group-namespace> with the namespace where the OCNADD worker group will be deployed.

- For example:

$ kubectl -n dd-worker-group1 create -f ocnadd-wg1-resource-template.yaml

Note:

- Update the custom values file ocnadd-custom-values-23.4.0.0.1-worker-group1.yaml, created or copied from ocnadd-custom-values-23.4.0.0.1.yaml in the "Custom Templates" folder.

- After adding the global service account to the worker group, change the following parameters to false in ocnadd-custom-values-23.4.0.0.1-worker-group1.yaml. Failing to do so might result in installation failure due to CRD creation and deletion:

serviceAccount:
  create: false
  name: <custom-name>  ## --> Change this to <custom-name> provided in ocnadd-wg1-resource-template.yaml above ##
  upgrade: false
clusterRole:
  create: false
  name: <custom-name>  ## --> Change this to <custom-name> provided in ocnadd-wg1-resource-template.yaml above ##
clusterRoleBinding:
  create: false
  name: <custom-name>  ## --> Change this to <custom-name> provided in ocnadd-wg1-resource-template.yaml above ##

- Ensure that the namespace used in ocnadd-wg1-resource-template.yaml matches the following parameter in ocnadd-custom-values-23.4.0.0.1-worker-group1.yaml:

global.cluster.nameSpace.name

Set the following management_namespace parameter to the namespace used for the management group:

global.deployment.management_namespace
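After creating the objects for a management or worker group, you can ask the API server whether the new service account holds a few of the permissions granted by the Role. This is a sketch, not an OCNADD procedure. The check_sa_access function and the KUBECTL override are hypothetical. Impersonating a service account with --as also requires that your own user is allowed to impersonate. The three actions checked are a small, arbitrary sample of the Role's rules.

```shell
#!/bin/sh
# Hypothetical post-check using "kubectl auth can-i" with service-account
# impersonation. Prints one line per action and fails on the first denial.
check_sa_access() {
    ns=$1; sa=$2
    kube=${KUBECTL:-kubectl}
    for action in "get pods" "create configmaps" "get secrets"; do
        # $action is intentionally unquoted so it splits into verb and resource.
        if "$kube" auth can-i $action -n "$ns" \
               --as="system:serviceaccount:$ns:$sa" >/dev/null; then
            echo "ok: $action"
        else
            echo "denied: $action"
            return 1
        fi
    done
}
```

For the worker group example, the call would be check_sa_access dd-worker-group1 <custom-name>-sa-ocnadd, with <custom-name> as chosen in the resource template.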

2.2.1.5 Configuring OCNADD Database

OCNADD microservices use MySQL database to store the configuration and run time data.

The database is managed by the helm pre-install hook. However, OCNADD requires the database administrator to create an admin user in MySQL database and provide the necessary permissions to access the databases. Before installing OCNADD it is required to create the MySQL user and databases.

Note:

- If the admin user is already available, update its credentials, such as the username and password (base64 encoded), in ocnadd/templates/ocnadd-secret-hook.yaml.

- If the admin user is not available, create it using the following procedure. Once the user is created, update the credentials for the user in ocnadd/templates/ocnadd-secret-hook.yaml.

Creating an Admin User in the Database

- Run the following command to access the MySQL pod:

Note:

Use the namespace in which cnDBTier is deployed. This example uses the occne-cndbtier namespace. The default pod name is ndbmysqld-0.

kubectl -n occne-cndbtier exec -it ndbmysqld-0 -- bash

- Run the following command to log in to the MySQL server using the MySQL client:

$ mysql -h 127.0.0.1 -uroot -p

Enter password:

- To create an admin user, run the following command:

CREATE USER IF NOT EXISTS '<ocnadd admin username>'@'%' IDENTIFIED BY '<ocnadd admin user password>';

Example:

CREATE USER IF NOT EXISTS 'ocdd'@'%' IDENTIFIED BY 'ocdd';

Where:

ocdd is the admin username and ocdd is the password for the MySQL admin user.

- Run the following commands to grant the necessary permissions to the admin user and to reload the grant tables with FLUSH:

GRANT ALL PRIVILEGES ON *.* TO 'ocdd'@'%' WITH GRANT OPTION;

FLUSH PRIVILEGES;

- Access the ocnadd-secret-hook.yaml file from the OCNADD Helm files using the following path:

ocnadd/templates/ocnadd-secret-hook.yaml

- Update the following parameters in ocnadd-secret-hook.yaml with the admin user credentials:

data:
  MYSQL_USER: b2NkZA==
  MYSQL_PASSWORD: b2NkZA==

To generate the base64-encoded username and password from the terminal, run the following command:

echo -n <string> | base64 -w 0

Where, <string> is the admin username or password created in step 3.

For example:

echo -n ocdd | base64 -w 0

b2NkZA==
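You can also print both data: entries for ocnadd-secret-hook.yaml in one step. The helpers below are a minimal sketch, and the names b64 and secret_data are hypothetical. They use printf '%s' rather than echo -n, because echo -n is not portable across POSIX shells. They use tr -d '\n' instead of -w 0, which is a GNU base64 option.

```shell
#!/bin/sh
# Hypothetical helpers: base64-encode a string without a trailing newline
# or line wrapping, and print the data: section of ocnadd-secret-hook.yaml.
b64() { printf '%s' "$1" | base64 | tr -d '\n'; }

secret_data() {
    printf 'data:\n  MYSQL_USER: %s\n  MYSQL_PASSWORD: %s\n' \
        "$(b64 "$1")" "$(b64 "$2")"
}
```

With the example credentials, secret_data ocdd ocdd prints MYSQL_USER: b2NkZA== and MYSQL_PASSWORD: b2NkZA==, matching the values shown above. Arguments passed on the command line may be recorded in shell history, so avoid this for production passwords, or clear the history afterwards.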

Update Database Name

Note:

- By default, the database names are configuration_schema, alarm_schema, and healthdb_schema for the respective services.

- Skip this step if you plan to use the default database names during database creation. If not, change the database names as required.

To update the database names in the Configuration Service, Alarm Service, and Health Monitoring services:

- Access the ocdd-db-resource.sql file from the Helm chart using the following path:

ocnadd/ocdd-db-resource.sql

- Update all occurrences of the database name in ocdd-db-resource.sql.
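You can replace every occurrence of a database name in ocdd-db-resource.sql with sed. This is a minimal sketch. The rename_db function is hypothetical and keeps a .bak copy of the original file. The replacement is a plain substring match, so it would also change any longer name that contains the old one, and names containing "/" or other sed metacharacters are not handled.

```shell
#!/bin/sh
# Hypothetical helper: replace every occurrence of one database name with
# another in the given SQL file, keeping the original as <file>.bak.
rename_db() {
    file=$1; old=$2; new=$3
    cp "$file" "$file.bak" || return 1
    sed "s/$old/$new/g" "$file.bak" > "$file"
}
```

For example, rename_db ocnadd/ocdd-db-resource.sql alarm_schema <new-alarm-db-name> changes the alarm database name. Review the file afterwards with grep to confirm that no default name remains.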

Note:

During OCNADD reinstallation, all three application databases must be removed manually by running the drop database <dbname>; command.

2.2.1.6 Configuring Secrets for Accessing OCNADD Database

The secret configuration for the OCNADD database is automatically managed during database creation by the Helm pre-install procedure.

2.2.1.7 Configuring SSL or TLS Certificates

- Extract the Package:

If not already done, extract the package occnadd-package-23.4.0.tgz.

- Copy Worker Group Service Values for SSL Configuration:

Before configuring certificates, create a copy of the worker_group_service_values file for each worker group within the ssl_certs/default_values folder:

- For Worker Group 1:

cp ssl_certs/default_values/worker_group_service_values ssl_certs/default_values/worker_group_service_values_wg1

- For Worker Group 2:

cp ssl_certs/default_values/worker_group_service_values ssl_certs/default_values/worker_group_service_values_wg2

- For each additional worker group, repeat the above process and increment the suffix number (_wg1, _wg2, and so on) to get distinct file names. This ensures separate configurations for different worker groups.
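The per-worker-group copies above follow a fixed naming pattern, so they can be created in a loop. This is a minimal sketch. The copy_wg_values function is hypothetical. It skips any _wgN file that already exists, so certificate entries you have already edited are not overwritten.

```shell
#!/bin/sh
# Hypothetical helper: create worker_group_service_values_wg1..wgN copies in
# the given directory, leaving any existing copy untouched.
copy_wg_values() {
    dir=$1; count=$2
    i=1
    while [ "$i" -le "$count" ]; do
        target="$dir/worker_group_service_values_wg$i"
        [ -e "$target" ] || cp "$dir/worker_group_service_values" "$target" || return 1
        i=$((i + 1))
    done
}
```

For example, copy_wg_values ssl_certs/default_values 2 produces the same two files as the commands shown for Worker Group 1 and Worker Group 2.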

Note:

- Before configuring the SSL/TLS certificates, see the "Customizing CSR and Certificate Extensions" section in the Oracle Communications Network Analytics Suite Security Guide.

- This is a mandatory procedure; perform it before you proceed with the installation.

Before generating certificates using cacert and cakey, finalize the Kafka access mode, and update the ssl_certs/default_values/worker_group_service_values files for each worker group accordingly.

The following access modes are available and applicable for worker groups only:

- The NF producers and OCNADD are in the same cluster

- with external access disabled

- The NF producers and OCNADD are in different clusters

- with LoadBalancer

Note:

- If the NF producers and OCNADD are deployed in the same cluster, all three ports can be used: 9092 for PLAIN_TEXT, 9093 for SSL, and 9094 for SASL_SSL. However, port 9092 is not secure and is therefore not recommended.

- If the NF producers and OCNADD are deployed in different clusters, only port 9094 (SASL_SSL) is exposed.

- It is recommended to list the individual server IPs in the Kafka bootstrap server list instead of a single service IP such as "kafka-broker:9094".
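The recommendation to name each broker individually can be scripted for any broker count. This is a minimal sketch. The bootstrap_list function is hypothetical. It assumes the kafka-broker-<n>.kafka-broker naming used by the default in-cluster bootstrap-server values in this section, and it defaults to port 9093 (SSL).

```shell
#!/bin/sh
# Hypothetical helper: build a comma-separated Kafka bootstrap server list
# that names every broker individually. Usage: bootstrap_list <brokers> [port]
bootstrap_list() {
    brokers=$1; port=${2:-9093}
    list=""; i=0
    while [ "$i" -lt "$brokers" ]; do
        # Append a comma only when the list already has an entry.
        list="${list:+$list,}kafka-broker-$i.kafka-broker:$port"
        i=$((i + 1))
    done
    printf '%s\n' "$list"
}
```

For example, bootstrap_list 3 prints the three default brokers on port 9093 as one comma-separated string. For clients outside the cluster, list the exposed broker IPs on port 9094 instead.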

The NF producers and OCNADD are in the same cluster

- With external access disabled

In this mode, the Kafka cluster is not exposed externally. By default, the parameters externalAccess.enabled and externalAccess.autoDiscovery are set to false, so no change is needed. These parameters are present in the ocnadd-custom-values-23.4.0.0.1.yaml file.

The default values of bootstrap-server are given below:

kafka-broker-0.kafka-broker:9093

kafka-broker-1.kafka-broker:9093

kafka-broker-2.kafka-broker:9093

The NF producers and OCNADD are in different clusters

If the NF producers and OCNADD are in different Clusters, then either the LoadBalancer or NodePort Service Type can be used. In both the cases, the IP addresses are required to be updated manually in the ssl_certs/default_values/values of kafka-broker section by using the following steps:

With LoadBalancer

- Update the following parameters in the Kafka section of the ocnadd-custom-values-23.4.0.0.1.yaml file:

  - externalAccess.type to LoadBalancer

  - externalAccess.enabled to true

  - externalAccess.autoDiscovery to true

- Update the parameters based on the LoadBalancer IP type, as follows:

  - When static LoadBalancer IPs are used:

    - Update the following parameters in the Kafka section of the ocnadd-custom-values-23.4.0.0.1.yaml file:

      - Set externalAccess.setstaticLoadBalancerIps to 'true'. The default is false.

      - Provide the static IPs in "externalAccess.LoadBalancerIPList", separated by commas.

      For example:

      externalAccess:
        setstaticLoadBalancerIps: true
        LoadBalancerIPList: [10.20.30.40,10.20.30.41,10.20.30.42]

    - Add all the static IPs under the kafka-broker section of the respective worker group copy of ssl_certs/default_values/worker_group_service_values created earlier.

      For example, for worker-group1, if the static IP list is "10.20.30.40,10.20.30.41,10.20.30.42":

      vi ssl_certs/default_values/worker_group_service_values_wg1

      [kafka-broker]
      client.commonName=kafka-broker-zk
      server.commonName=kafka-broker
      DNS.1=*.kafka-broker.<nameSpace>.svc.<Cluster-Domain>
      DNS.2=kafka-broker
      DNS.3=*.kafka-broker
      IP.1=10.20.30.40
      IP.2=10.20.30.41
      IP.3=10.20.30.42


  - When a LoadBalancer IP CIDR block is used:

    - The LoadBalancer IP CIDR block should already be available from site planning. If it is not available, contact the CNE infrastructure administrator to get the IP CIDR block for the LoadBalancer IPs.

    - Add all the available IPs under the kafka-broker section of the respective worker group copy of ssl_certs/default_values/worker_group_service_values created earlier.

      For example, for worker-group1, if the available IP CIDR block is "10.x.x.0/26" with the usable IP range [1-62]:

      vi ssl_certs/default_values/worker_group_service_values_wg1

      [kafka-broker]
      client.commonName=kafka-broker-zk
      server.commonName=kafka-broker
      DNS.1=*.kafka-broker.<nameSpace>.svc.<Cluster-Domain>
      DNS.2=kafka-broker
      DNS.3=*.kafka-broker
      IP.1=10.x.x.1
      IP.2=10.x.x.2
      .
      .
      IP.62=10.x.x.62

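Typing 62 IP.<n> lines by hand is error-prone, so the entries can be generated instead. The following bash sketch uses hypothetical helper names (not part of OCNADD); it assumes IPv4 addresses written without leading zeros in any octet and, for the CIDR case, a prefix of /30 or shorter, with the network and broadcast addresses excluded as in the [1-62] range above.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Convert a 32-bit integer back to dotted IPv4 notation.
int_to_ip() {
  local n="$1"
  printf '%d.%d.%d.%d\n' $(( (n >> 24) & 255 )) $(( (n >> 16) & 255 )) \
    $(( (n >> 8) & 255 )) $(( n & 255 ))
}

# Static LoadBalancer IPs: "a,b,c" -> IP.1=a, IP.2=b, IP.3=c
ip_list_san_entries() {
  local IFS=, ip i=1
  for ip in $1; do
    printf 'IP.%d=%s\n' "$i" "$ip"
    i=$((i + 1))
  done
}

# LoadBalancer CIDR block: one IP.<n> entry per usable host address.
cidr_san_entries() {
  local cidr="$1"
  local base="${cidr%/*}" prefix="${cidr#*/}"
  local size=$(( 1 << (32 - prefix) ))
  # Clear the host bits to get the network address.
  local net=$(( $(ip_to_int "$base") & ~(size - 1) ))
  local n i=1
  for ((n = net + 1; n <= net + size - 2; n++)); do
    printf 'IP.%d=%s\n' "$i" "$(int_to_ip "$n")"
    i=$((i + 1))
  done
}
```

For example, cidr_san_entries 10.1.2.0/26 prints IP.1=10.1.2.1 through IP.62=10.1.2.62. Paste the output under the [kafka-broker] block of the respective worker_group_service_values_wgN file, after the DNS entries.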

2.2.1.7.1 Generate Certificates using CACert and CAKey

OCNADD allows users to provide the CACert and CAKey and to generate certificates for all the services by running a predefined script.

Use the ssl_certs folder to generate the certificates for the Management Group or Worker Group namespaces accordingly.

- Navigate to the <ssl_certs>/default_values folder.

- Edit the corresponding management_service_values and worker_group_service_values files created earlier for each worker group, and update the global parameters, CN, and SAN (DNS/IP entries) for each service based on the requirement, as explained below.

- In every service_values file, replace the default domain name (occne-ocdd) with your cluster domain and the default namespace (ocnadd-deploy) with your namespace. These values are used to create the certificates, as shown below:

Example:

For management group:
namespace = dd-mgmt-group
clusterDomain = cluster.local.com

sed -i "s/ocnadd-deploy/dd-mgmt-group/g" management_service_values
sed -i "s/occne-ocdd/cluster.local.com/g" management_service_values

For worker group1:
namespace = dd-worker-group1
clusterDomain = cluster.local.com

sed -i "s/ocnadd-deploy/dd-worker-group1/g" worker_group_service_values_wg1
sed -i "s/occne-ocdd/cluster.local.com/g" worker_group_service_values_wg1

For worker group2:
namespace = dd-worker-group2
clusterDomain = cluster.local.com

sed -i "s/ocnadd-deploy/dd-worker-group2/g" worker_group_service_values_wg2
sed -i "s/occne-ocdd/cluster.local.com/g" worker_group_service_values_wg2

Note:

Edit the corresponding service_values file for the global parameters and the RootCA common name, and add service blocks for all the services for which certificates need to be generated.

Global Params:

[global]
countryName=<country>
stateOrProvinceName=<state>
localityName=<city>
organizationName=<org_name>
organizationalUnitName=<org_bu_name>
defaultDays=<days to expiry>

Root CA common name (e.g. rootca common_name=*.svc.domainName)

##root_ca
commonName=*.svc.domainName

Service common name for client and server and SAN (DNS/IP entries). (Make sure to follow exactly the same format and provide an empty line at the end of each service block.)

[service-name-1]
client.commonName=client.cn.name.svc1
server.commonName=server.cn.name.svc1
IP.1=127.0.0.1
DNS.1=localhost

[service-name-2]
client.commonName=client.cn.name.svc2
server.commonName=server.cn.name.svc2
IP.1=10.20.30.40
DNS.1=*.svc2.namespace.svc.domainName

.
.
.
##end

- Run the generate_certs.sh script with the following command:

./generate_certs.sh -cacert <path to>/CAcert.pem -cakey <path to>/CAkey.pem

Where, <path to> is the folder path where the CACert and CAKey are present.

Note:

If the certificates for a worker group are generated separately, make sure that the same CA certificate and private key are used as for the management group certificates. After the management group certificates have been generated, a command similar to the following can be used to generate the worker group certificates:

./generate_certs.sh -cacert <path to>/cacert.pem -cakey <path to>/private/cakey.pem

- Select the mode of deployment:

"1" for non-centralized
"2" for upgrade from non-centralized to centralized
"3" for centralised

Select the mode of deployement (1/2/3) : 3

- Select the namespace where you want to generate the certificates:

Enter kubernetes namespace: <your_working_namespace>

- Select the service_values file you would like to apply. The example below is for the Management Group:

Select the values file you would like to apply
Choose among the values file:
1. management_service_values
2. worker_group_service_values
3. worker_group_service_values_wg1
4. worker_group_service_values_wg2
Choose a file by entering its corresponding number: 1

- Enter the passphrase for the CA key when prompted:

Enter passphrase for CA Key file: <passphrase>

- Select “y” when prompted to create a CSR for each service:

Create Certificate Signing Request (CSR) for each service? Y

- Select “y” when prompted to sign the CSR for each service with the CA key:

Would you like to sign CSR for each service with CA key? Y

- Run the following command to check whether the secrets are created in the specified namespace:

kubectl get secret -n <namespace>

- Run the following command to describe any secret created by the script:

kubectl describe secret <secret-name> -n <namespace>

- Repeat the above steps to generate certificates for all the worker groups being added to the centralized deployment mode. When prompted to select the values file (Step 7), select the worker_group_service_values file that corresponds to the worker group for which the certificates are being generated.
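The two sed commands per file can be wrapped so that neither substitution is forgotten and the original file is kept. This bash sketch is hypothetical (not part of OCNADD); it assumes GNU sed, as on Linux bastion hosts, because "sed -i" without a suffix argument behaves differently on BSD/macOS sed.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: replace the default namespace (ocnadd-deploy) and
# default cluster domain (occne-ocdd) in one service_values file.
# A backup is written to <file>.orig before editing.
set_ns_and_domain() {
  local file="$1" namespace="$2" domain="$3"
  cp "$file" "${file}.orig"
  sed -i -e "s/ocnadd-deploy/${namespace}/g" \
         -e "s/occne-ocdd/${domain}/g" "$file"
}
```

Usage, matching the worker group1 example above: set_ns_and_domain worker_group_service_values_wg1 dd-worker-group1 cluster.local.com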

2.2.1.7.2 Generate Certificate Signing Request (CSR)

Users can generate the certificate signing request for each of the services using the OCNADD script, and then use the generated CSRs to generate the certificates with their own certificate signing mechanism (for example, an external CA server, HashiCorp Vault, or Venafi).

Perform the following procedure to generate the CSR:

- Navigate to the <ssl_certs>/default_values folder.

- Edit the corresponding management_service_values and worker_group_service_values files created earlier for each worker group, and update the global parameters, CN, and SAN (DNS/IP entries) for each service based on the requirement, as explained below.

- In every service_values file, replace the default domain name (occne-ocdd) with your cluster domain and the default namespace (ocnadd-deploy) with your namespace. These values are used to create the certificates, as shown below:

Example:

For management group:
namespace = dd-mgmt-group
clusterDomain = cluster.local.com

sed -i "s/ocnadd-deploy/dd-mgmt-group/g" management_service_values
sed -i "s/occne-ocdd/cluster.local.com/g" management_service_values

For worker group1:
namespace = dd-worker-group1
clusterDomain = cluster.local.com

sed -i "s/ocnadd-deploy/dd-worker-group1/g" worker_group_service_values_wg1
sed -i "s/occne-ocdd/cluster.local.com/g" worker_group_service_values_wg1

For worker group2:
namespace = dd-worker-group2
clusterDomain = cluster.local.com

sed -i "s/ocnadd-deploy/dd-worker-group2/g" worker_group_service_values_wg2
sed -i "s/occne-ocdd/cluster.local.com/g" worker_group_service_values_wg2

Note:

Edit the corresponding service_values file for the global parameters and the RootCA common name, and add service blocks for all the services for which certificates need to be generated.

Global Params:

[global]
countryName=<country>
stateOrProvinceName=<state>
localityName=<city>
organizationName=<org_name>
organizationalUnitName=<org_bu_name>
defaultDays=<days to expiry>

Root CA common name (e.g. rootca common_name=*.svc.domainName)

##root_ca
commonName=*.svc.domainName

Service common name for client and server and SAN (DNS/IP entries). (Make sure to follow exactly the same format and provide an empty line at the end of each service block.)

[service-name-1]
client.commonName=client.cn.name.svc1
server.commonName=server.cn.name.svc1
IP.1=127.0.0.1
DNS.1=localhost

[service-name-2]
client.commonName=client.cn.name.svc2
server.commonName=server.cn.name.svc2
IP.1=10.20.30.40
DNS.1=*.svc2.namespace.svc.domainName

.
.
.
##end

- Run the generate_certs.sh script with the --gencsr or -gc flag:

./generate_certs.sh --gencsr

- Select the mode of deployment:

"1" for non-centralized
"2" for upgrade from non-centralized to centralized
"3" for centralised

Select the mode of deployement (1/2/3) : 3

- Select the namespace where you would like to generate the certificates:

Enter kubernetes namespace: <your_working_namespace>

- Select the service_values file you would like to apply. The example below is with worker-group1 and worker-group2:

Select the values file you would like to apply
Choose among the values file:
1. management_service_values
2. worker_group_service_values
3. worker_group_service_values_wg1
4. worker_group_service_values_wg2
Choose a file by entering its corresponding number: 1

- Once the service CSRs are generated, the demoCA folder is created. The CSRs and keys are in the demoCA/dd_mgmt_worker_services/<your_namespace>/services folder (separately for client and server). Sign the CSRs with your own certificate signing mechanism to generate the certificates.

- Make sure that the certificate and key names follow the format below, based on whether the service acts as a client or a server:

For Client: servicename-clientcert.pem and servicename-clientprivatekey.pem

For Server: servicename-servercert.pem and servicename-serverprivatekey.pem

- Once the above certificates are generated by signing the CSRs with the Certificate Authority, copy them into the respective demoCA/dd_mgmt_worker_services/<your_namespace>/services folder of each service.

Note:

- Make sure to use the same CA key for both the management group and the worker group(s).

- Make sure the certificates are copied into the respective folders for the client and the server, based on their generated CSRs.

- Run generate_certs.sh with the cacert path and --gensecret or -gs to generate secrets:

./generate_certs.sh -cacert <path to>/cacert.pem --gensecret

- Select the namespace where you would like to generate the certificates:

Enter kubernetes namespace: <your_working_namespace>

- Select “y” when prompted to generate secrets for the services:

Would you like to continue to generate secrets? (y/n) y

- Run the following command to check whether the secrets are created in the specified namespace:

kubectl get secret -n <namespace>

- Run the following command to describe any secret created by the script:

kubectl describe secret <secret-name> -n <namespace>
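Before running generate_certs.sh with --gensecret, it can help to confirm that every externally signed certificate and key follows the naming format required above. The bash sketch below is hypothetical (the function is not part of the OCNADD scripts); it assumes you pass the folder into which the certificate and key for one service were copied.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: check that <service>-<role>cert.pem and
# <service>-<role>privatekey.pem exist in a folder (role: client or server).
# Returns 0 when both files exist, 1 when any is missing, 2 on a bad role.
check_cert_names() {
  local dir="$1" service="$2" role="$3"
  local f missing=0
  if [[ "$role" != client && "$role" != server ]]; then
    echo "role must be 'client' or 'server'" >&2
    return 2
  fi
  for f in "${service}-${role}cert.pem" "${service}-${role}privatekey.pem"; do
    if [[ ! -f "${dir}/${f}" ]]; then
      echo "missing: ${dir}/${f}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, check_cert_names <folder> kafka-broker server reports any missing kafka-broker-servercert.pem or kafka-broker-serverprivatekey.pem file in that folder.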

2.2.1.7.3 Kubectl HNS Installation

- Extract the OCNADD package, if not already extracted, and untar hns_package.tar.gz:

cd ocnadd-package-23.4.0.0.1
tar -xvzf hns_package.tar.gz

- Go to the hns_package folder:

cd hns_package

- Load, tag, and push the HNS image to the image repository:

podman load -i hns_package/hnc-manager.tar
podman tag localhost/k8s-staging-multitenancy/hnc-manager:v1.1.0 <image-repo>/k8s-staging-multitenancy/hnc-manager:v1.1.0
podman push <image-repo>/k8s-staging-multitenancy/hnc-manager:v1.1.0

- Update the image repository in the ha.yaml file:

- /manager
image: occne-repo-host:5000/k8s-staging-multitenancy/hnc-manager:v1.1.0 ## ---> Update image to <image-repo>/k8s-staging-multitenancy/hnc-manager:v1.1.0

- Repeat the image update for the second occurrence in the ha.yaml file:

- /manager
image: occne-repo-host:5000/k8s-staging-multitenancy/hnc-manager:v1.1.0 ## ---> Update image to <image-repo>/k8s-staging-multitenancy/hnc-manager:v1.1.0

- Run the HNS file to create the resources for kubectl hns:

kubectl apply -f ha.yaml

- Copy the kubectl-hns binary to /usr/bin or any location in the user's $PATH:

sudo cp --remove-destination kubectl-hns /usr/bin/
sudo chmod +x /usr/bin/kubectl-hns
sudo chmod g+x /usr/bin/kubectl-hns
sudo chmod o+x /usr/bin/kubectl-hns

- Verify that namespaces are created properly by creating a child namespace and deleting it:

  - Run the following commands to create the namespaces:

    kubectl create ns test-parent
    kubectl hns create test-child -n test-parent

  - Run the following command to list the child namespaces:

    kubectl hns tree test-parent

    Sample output:

    test-parent
    └── [s] test-child

  - Run the following commands to delete the namespaces:

    kubectl delete subns test-child -n test-parent
    kubectl delete ns test-parent

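Both image occurrences in ha.yaml can be updated in one pass instead of editing them by hand. This bash sketch is hypothetical; it assumes GNU sed and that ha.yaml still contains the default occne-repo-host:5000 prefix shown in the steps above. It prints the number of lines that reference the new repository, which is expected to be 2.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: point every hnc-manager image reference in ha.yaml
# at the target repository, for example: update_hnc_image ha.yaml <image-repo>
update_hnc_image() {
  local file="$1" repo="$2"
  local image="k8s-staging-multitenancy/hnc-manager:v1.1.0"
  # '#' is used as the sed delimiter because the image path contains '/'.
  sed -i "s#occne-repo-host:5000/${image}#${repo}/${image}#g" "$file"
  # Count the lines that now reference the new repository.
  grep -c "${repo}/${image}" "$file"
}
```

After the count is confirmed, apply the file with kubectl apply -f ha.yaml as described above. The repository value must not contain the '#' character, because it is used as the sed delimiter.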

2.2.2 Installation Tasks

Note:

Before starting the installation tasks, ensure that the Prerequisites and Pre-Installation Tasks are completed.

2.2.2.1 Installing OCNADD Package

This section describes how to install the Oracle Communications Network Analytics Data Director (OCNADD) package.

To install the OCNADD package, perform the following steps:

Create OCNADD Namespace

Create the OCNADD namespace, if not already created. For more information, see Creating OCNADD Namespace.

Generate Certificates

- Perform the steps in the Configuring SSL or TLS Certificates section to complete the certificate generation.

Update Database Parameters

To update the database parameters, see Configuring OCNADD Database.

Update ocnadd-custom-values-23.4.0.0.1.yaml file

Update the ocnadd-custom-values-23.4.0.0.1.yaml file (depending on the type of deployment model) with the required parameters.

For more information on how to access and update the ocnadd-custom-values-23.4.0.0.1.yaml files, see Customizing OCNADD.

Install Helm Chart

OCNADD release 23.4.0 and later releases support fresh deployment in centralized mode only.

Data Director Installation with Default Worker Group

In 23.4.0, Data Director can be installed with the default worker group in centralized mode.

Note:

- The HNS package is not required for this installation mode, and only one worker group, the "default worker group", is possible.

- In this deployment mode, the management group and the default worker group are deployed in the same namespace.

Deploy Centralized Site

Create a copy of the charts and custom values for the management group and the default worker group from the ocnadd-package-23.4.0.0.1 folder. You can create multiple copies of the Helm charts folder and the custom-values file in the following suggested way:

- For Management Group: Create a copy of the following files from the extracted folder:

# cd ocnadd-package-23.4.0.0.1
# cp -rf ocnadd ocnadd_mgmt
# cp custom_templates/ocnadd-custom-values-23.4.0.0.1.yaml ocnadd-custom-values-mgmt-group.yaml

- For Worker Group: Create a copy of the following files from the extracted folder:

# cp -rf ocnadd ocnadd_default_wg
# cp custom_templates/ocnadd-custom-values-23.4.0.0.1.yaml ocnadd-custom-values-default-wg-group.yaml
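The copies follow a regular naming pattern, so one helper can create them for any group. This is a hypothetical bash sketch (not part of the OCNADD package); it assumes it is run from the ocnadd-package-23.4.0.0.1 folder and that the custom values template is named ocnadd-custom-values-23.4.0.0.1.yaml, as in the management group step above.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper. Naming follows the suggested convention:
#   suffix "mgmt"       -> ocnadd_mgmt,       ocnadd-custom-values-mgmt-group.yaml
#   suffix "default-wg" -> ocnadd_default_wg, ocnadd-custom-values-default-wg-group.yaml
#   suffix "wg2"        -> ocnadd_wg2,        ocnadd-custom-values-wg2-group.yaml
prepare_group_copies() {
  local suffix="$1"
  local chart_copy="ocnadd_${suffix//-/_}"
  local values_copy="ocnadd-custom-values-${suffix}-group.yaml"
  # Do not overwrite copies that may already contain edits.
  if [[ -e "$chart_copy" || -e "$values_copy" ]]; then
    echo "${chart_copy} or ${values_copy} already exists" >&2
    return 1
  fi
  cp -r ocnadd "$chart_copy"
  cp custom_templates/ocnadd-custom-values-23.4.0.0.1.yaml "$values_copy"
  printf '%s %s\n' "$chart_copy" "$values_copy"
}
```

For example, prepare_group_copies mgmt followed by prepare_group_copies default-wg creates the two sets of copies listed above. Refusing to overwrite is deliberate, since a second run would otherwise discard edits already made to the custom values file.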

Note:

For additional worker groups, repeat this process (for example, for Worker Group 2, create "ocnadd_wg2" and "ocnadd-custom-values-wg2-group.yaml").

Installing Management Group:

- Create a namespace to deploy the Data Director if it does not already exist. See the Creating OCNADD Namespace section.

For example:

# kubectl create namespace ocnadd-deploy

- Create certificates for the management group if they have not already been created. For more information about certificate generation, see Configuring SSL or TLS Certificates and Oracle Communications Network Analytics Suite Security Guide.

- Modify the ocnadd-custom-values-mgmt-group.yaml file as follows:

global.deployment.centralized: true
global.deployment.management: true
global.deployment.management_namespace: ocnadd-deploy ##---> update it with the namespace created in Step 1
global.cluster.namespace.name: ocnadd-deploy ##---> update it with the namespace created in Step 1
global.cluster.serviceAccount.name: ocnadd ## --> replace ocnadd with the namespace created in Step 1
global.cluster.clusterRole.name: ocnadd ## --> replace ocnadd with the namespace created in Step 1
global.cluster.clusterRoleBinding.name: ocnadd ## --> replace ocnadd with the namespace created in Step 1

- Install using the "ocnadd_mgmt" Helm charts folder created for the management group:

helm install <management-release-name> -f ocnadd-custom-values-<mgmt-group>.yaml --namespace <default-deploy-namespace> <helm_chart>

Where,

<management-release-name> is the release name of the management group deployment
<mgmt-group> identifies the management group custom values file
<default-deploy-namespace> is the namespace where the management group is deployed
<helm_chart> is the Helm chart folder of OCNADD

For example:

helm install ocnadd-mgmt -f ocnadd-custom-values-mgmt-group.yaml --namespace ocnadd-deploy ocnadd_mgmt

Installing Default Worker Group:

- Create certificates for the default worker group if they have not already been created. For more information about certificate generation, see Configuring SSL or TLS Certificates and Oracle Communications Network Analytics Suite Security Guide.

Note:

For worker group certificate creation, select the same namespace as selected for the management group (for example, ocnadd-deploy).

- Modify the ocnadd-custom-values-default-wg-group.yaml file as follows:

global.deployment.centralized: true
global.deployment.management: false ##---> default is true
global.deployment.management_namespace: ocnadd-deploy ##---> update it with the namespace created in Step 1 of the Installing Management Group section
global.cluster.namespace.name: ocnadd-deploy ##---> update it with the namespace created in Step 1 of the Installing Management Group section
global.cluster.serviceAccount.create: true ## --> update the parameter to false
global.cluster.clusterRole.create: true ## --> update the parameter to false
global.cluster.clusterRoleBinding.create: true ## --> update the parameter to false

- Install using the "ocnadd_default_wg" Helm charts folder created for the default worker group:

helm install <default-worker-group-release-name> -f ocnadd-custom-values-<default-wg-group>.yaml --namespace <default-deploy-namespace> <helm_chart>

Where,

<default-worker-group-release-name> is the release name of the default worker group deployment
<default-wg-group> identifies the default worker group custom values file
<default-deploy-namespace> is the namespace where the default worker group is deployed
<helm_chart> is the Helm chart folder of OCNADD

For example:

helm install ocnadd-default-wg -f ocnadd-custom-values-default-wg-group.yaml --namespace ocnadd-deploy ocnadd_default_wg

Data Director Installation with Multiple Worker Group

Release 23.4.0 and later releases support fresh deployment in centralized mode only.

Deploy Centralized Site

Create a copy of the charts and custom values for the management group and the worker group from the ocnadd-package-23.4.0.0.1 folder. You can create multiple copies of the Helm charts folder and the custom-values file in the following suggested way:

- For Management Group: Create a copy of the following files from the extracted folder:

# cd ocnadd-package-23.4.0.0.1
# cp -rf ocnadd ocnadd_mgmt
# cp custom_templates/ocnadd-custom-values-23.4.0.0.1.yaml ocnadd-custom-values-mgmt-group.yaml

- For Worker Group: Create a copy of the following files from the extracted folder:

# cp -rf ocnadd ocnadd_wg1
# cp custom_templates/ocnadd-custom-values-23.4.0.0.1.yaml ocnadd-custom-values-wg1-group.yaml

Note:

If more than one worker group is being added, create similar copies accordingly. For example, for Worker Group 2, create the ocnadd_wg2 folder and the ocnadd-custom-values-wg2-group.yaml file.

Installing Management Group:

- Create a namespace for the Management Group if it does not already exist. See the Creating OCNADD Namespace section.

For example:

kubectl create namespace dd-mgmt-group

- Create certificates for the management group if they have not already been created. For more information about certificate generation, see Configuring SSL or TLS Certificates and Oracle Communications Network Analytics Suite Security Guide.

- Modify the ocnadd-custom-values-mgmt-group.yaml file as follows:

global.deployment.centralized: true
global.deployment.management: true
global.deployment.management_namespace: ocnadd-deploy ##---> update it with the management-group namespace, for example dd-mgmt-group
global.cluster.namespace.name: ocnadd-deploy ##---> update it with the management-group namespace, for example dd-mgmt-group
global.cluster.serviceAccount.name: ocnadd ## --> replace ocnadd with the management-group namespace, for example dd-mgmt-group
global.cluster.clusterRole.name: ocnadd ## --> replace ocnadd with the management-group namespace, for example dd-mgmt-group
global.cluster.clusterRoleBinding.name: ocnadd ## --> replace ocnadd with the management-group namespace, for example dd-mgmt-group

- Install using the "ocnadd_mgmt" Helm charts folder created for the management group:

helm install <management-release-name> -f ocnadd-custom-values-<mgmt-group>.yaml --namespace <management-group-namespace> <helm_chart>

For example:

helm install ocnadd-mgmt -f ocnadd-custom-values-mgmt-group.yaml --namespace dd-mgmt-group ocnadd_mgmt

Installing Worker Group:

- Create a namespace for the Worker Group if it does not already exist. See the Creating OCNADD Namespace section.

For example:

kubectl hns create dd-worker-group1 -n dd-mgmt-group

- Create certificates for the worker group that is being created, if they have not already been created. For more information about certificate generation, see Configuring SSL or TLS Certificates and Oracle Communications Network Analytics Suite Security Guide.

- Modify the ocnadd-custom-values-wg1-group.yaml file as follows:

global.deployment.centralized: true
global.deployment.management: false ##---> default is true
global.deployment.management_namespace: ocnadd-deploy ##---> update it with the management-group namespace, for example dd-mgmt-group
global.cluster.namespace.name: ocnadd-deploy ##---> update it with the worker-group namespace, for example dd-worker-group1
global.cluster.serviceAccount.name: ocnadd ## --> replace ocnadd with the worker-group namespace, for example dd-worker-group1
global.cluster.clusterRole.name: ocnadd ## --> replace ocnadd with the worker-group namespace, for example dd-worker-group1
global.cluster.clusterRoleBinding.name: ocnadd ## --> replace ocnadd with the worker-group namespace, for example dd-worker-group1

- Install using the "ocnadd_wg1" Helm charts folder created for the worker group:

helm install <worker-group1-release-name> -f ocnadd-custom-values-<wg1-group>.yaml --namespace <worker-group1-namespace> <helm_chart>

For example:

helm install ocnadd-wg1 -f ocnadd-custom-values-wg1-group.yaml --namespace dd-worker-group1 ocnadd_wg1
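The worker group steps above can be scripted for repeatable installs. The bash sketch below is a hypothetical wrapper, not an OCNADD tool, and it differs from the documented method: instead of editing the custom values file by hand, it passes the namespace-dependent parameters with Helm's --set flag, which takes precedence over values from the -f file. This assumes the dotted parameter names above map to nested keys in the custom values file, as the notation suggests; editing the file as described remains the reference procedure.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical wrapper for the worker group installation steps.
# Arguments: management namespace, worker group namespace, release name,
# chart folder, custom values file.
install_worker_group() {
  local mgmt_ns="$1" wg_ns="$2" release="$3" chart="$4" values="$5"
  # Create the worker group namespace as an HNS child of the management
  # group namespace, unless it already exists.
  if ! kubectl get namespace "$wg_ns" >/dev/null 2>&1; then
    kubectl hns create "$wg_ns" -n "$mgmt_ns"
  fi
  helm install "$release" "$chart" -f "$values" --namespace "$wg_ns" \
    --set global.deployment.centralized=true \
    --set global.deployment.management=false \
    --set global.deployment.management_namespace="$mgmt_ns" \
    --set global.cluster.namespace.name="$wg_ns" \
    --set global.cluster.serviceAccount.name="$wg_ns" \
    --set global.cluster.clusterRole.name="$wg_ns" \
    --set global.cluster.clusterRoleBinding.name="$wg_ns"
}
```

Usage, matching the example above: install_worker_group dd-mgmt-group dd-worker-group1 ocnadd-wg1 ocnadd_wg1 ocnadd-custom-values-wg1-group.yaml. Certificates for the worker group still have to be created beforehand, and the Helm command must not be interrupted while it runs.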

Note:

For additional worker groups, repeat the Installing Worker Group procedure. For instance, for Worker Group 2, replicate the steps accordingly.

Caution:

Do not exit from the Helm install command manually. After you run the Helm install command, it takes some time to install all the services. In the meantime, do not press Ctrl+C to exit the command, as this leads to anomalous behavior.

2.2.2.2 Verifying OCNADD Installation

This section describes how to verify if Oracle Communications Network Analytics Data Director (OCNADD) is installed successfully.

- For Helm, run the following command:

helm status <helm-release> -n <namespace>

Example:

To check the management group:

# helm status ocnadd-mgmt -n dd-mgmt-group

To check the worker group:

# helm status ocnadd-wg1 -n dd-worker-group1

The system displays the status as deployed if the deployment is successful.

- Run the following commands to check whether all the services are deployed and active:

To check management-group:

watch kubectl get pod,svc -n dd-mgmt-group

To check worker-group1:

watch kubectl get pod,svc -n dd-worker-group1

To list only the services in a namespace:

kubectl -n <namespace_name> get services

Note:

- All microservices must be in the Running and Ready state.

- Take a backup of the following files, which are required during fault recovery:

- Updated Helm charts for both management and worker group(s)

- Updated custom-values for both management and worker group(s)

- Secrets, certificates, and keys that are used during the installation for both management and worker group(s)

- If the installation is not successful or you do not see the status as Running for all the pods, perform the troubleshooting steps. For more information, refer to Oracle Communications Network Analytics Data Director Troubleshooting Guide.
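As a non-interactive alternative to watching the pod list, kubectl wait can block until all pods in each group's namespace report Ready. The bash sketch below uses a hypothetical function name; it checks only the Ready condition, so pods that finish as Completed (for example, Job pods) never report Ready and would make the check time out for that namespace.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: wait until all pods in each given namespace report
# the Ready condition, or until the timeout expires.
# Usage: verify_groups <timeout> <namespace>...
verify_groups() {
  local timeout="$1"; shift
  local ns rc=0
  for ns in "$@"; do
    echo "Checking pods in ${ns}..."
    if ! kubectl wait --for=condition=Ready pod --all -n "$ns" --timeout="$timeout"; then
      echo "Pods in ${ns} did not become Ready within ${timeout}" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

For example, verify_groups 600s dd-mgmt-group dd-worker-group1 checks both groups and returns a non-zero status if either one is not Ready in time, in which case the troubleshooting steps apply.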

2.2.2.3 Creating OCNADD Kafka Topics

To create OCNADD Kafka topics, see the "Creating Kafka Topic for OCNADD" section of the Oracle Communications Network Analytics Data Director User Guide.

2.2.2.4 Installing OCNADD GUI

Install OCNADD GUI

The OCNADD GUI gets installed along with the OCNADD services.

Configure OCNADD GUI in CNCC

Prerequisite: To configure OCNADD GUI in CNC Console, you must have the CNC Console installed. For information on how to install CNC Console and configure the OCNADD instance, see Oracle Communications Cloud Native Configuration Console Installation, Upgrade, and Fault Recovery Guide.

Before installing CNC Console, update the instances parameters in the occncc_custom_values.yaml file with the following details:

instances:
  - id: Cluster1-dd-instance1
    type: DD-UI
    owner: Cluster1
    ip: 10.xx.xx.xx #--> give the cluster/node IP
    port: 31456 #--> give the node port of ocnaddgui
    apiPrefix: /<clustername>/<namespace>/ocnadd
  - id: Cluster1-dd-instance1
    type: DD-API
    owner: Cluster1
    ip: 10.xx.xx.xx #--> give the cluster/node IP
    port: 32406 #--> give the node port of ocnaddbackendrouter
    apiPrefix: /<clustername>/<namespace>/ocnaddapi

# Applicable only for Manager and Agent core. Used for Multi-Instance-Multi-Cluster Configuration Validation
validationHook:
  enabled: false #--> add this enabled: false to validationHook

#--> do these changes under section : cncc iam attributes
# If https is disabled, this Port would be HTTPS/1.0 Port (secured SSL)
publicHttpSignalingPort: 30085 #--> CNC console nodeport
#--> add these lines under cncc-iam attributes
# If Static node port needs to be set, then set staticNodePortEnabled flag to true and provide value for staticNodePort
# Else random node port will be assigned by K8
staticNodePortEnabled: true
staticHttpNodePort: 30085 #--> CNC console nodeport
staticHttpsNodePort: 30053

#--> do these changes under section : manager cncc core attributes
#--> add these lines under mcncc-core attributes
# If Static node port needs to be set, then set staticNodePortEnabled flag to true and provide value for staticNodePort
# Else random node port will be assigned by K8
staticNodePortEnabled: true
staticHttpNodePort: 30075
staticHttpsNodePort: 30043

#--> do these changes under section : agent cncc core attributes
#--> add these lines under acncc-core attributes
# If Static node port needs to be set, then set staticNodePortEnabled flag to true and provide value for staticNodePort
# Else random node port will be assigned by K8
staticNodePortEnabled: true
staticHttpNodePort: 30076
staticHttpsNodePort: 30044

The instances parameters in the occncc_custom_values.yaml file:

instances:

  - id: Cluster1-dd-instance1
    type: DD-UI
    owner: Cluster1
    ip: 10.xx.xx.xx #--> update the cluster/node IP
    port: 31456 #--> ocnaddgui port
    apiPrefix: /<clustername>/<namespace>/ocnadd
  - id: Cluster1-dd-instance1
    type: DD-API
    owner: Cluster1
    ip: 10.xx.xx.xx #--> update the cluster/node IP
    port: 32406 #--> ocnaddbackendrouter port
    apiPrefix: /<clustername>/<namespace>/ocnaddapi

Example:

If the cluster name is occne-ocdd and the namespace is ocnadd-deploy, the apiPrefix values in occncc_custom_values.yaml will be as follows:

DD-UI apiPrefix: /occne-ocdd/ocnadd-deploy/ocnadd

DD-API apiPrefix: /occne-ocdd/ocnadd-deploy/ocnaddapi

Access OCNADD GUI

To access OCNADD GUI, follow the procedure mentioned in the "Accessing CNC Console" section of Oracle Communications Cloud Native Configuration Console Installation, Upgrade, and Fault Recovery Guide.
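The port and apiPrefix values in the instances parameters above can be looked up from the cluster rather than typed by hand. This bash sketch is hypothetical; it assumes the ocnaddgui and ocnaddbackendrouter services are of type NodePort with the relevant port listed first, and that you pass the namespace in which those services run (in a centralized deployment this is presumably the management group namespace, which is an assumption).

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read the node ports of the ocnaddgui and
# ocnaddbackendrouter services and print the matching CNCC instance values.
# Usage: print_cncc_instance_values <clustername> <namespace>
print_cncc_instance_values() {
  local cluster="$1" ns="$2" gui_port api_port
  gui_port=$(kubectl get svc ocnaddgui -n "$ns" -o jsonpath='{.spec.ports[0].nodePort}')
  api_port=$(kubectl get svc ocnaddbackendrouter -n "$ns" -o jsonpath='{.spec.ports[0].nodePort}')
  echo "DD-UI:  port: ${gui_port}  apiPrefix: /${cluster}/${ns}/ocnadd"
  echo "DD-API: port: ${api_port}  apiPrefix: /${cluster}/${ns}/ocnaddapi"
}
```

For example, print_cncc_instance_values occne-ocdd ocnadd-deploy prints the DD-UI and DD-API values to copy into occncc_custom_values.yaml; the ip field still has to be set to a reachable cluster or node IP.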

2.2.2.5 Adding a Worker Group

Note:

HNS should already be installed before adding a new worker group. If it is not, see the Kubectl HNS Installation section for the steps.

Assumptions:

- Centralized Site is already deployed with at least one worker group.

- Management Group deployment is up and running, example namespace "dd-mgmt-group".

- Worker Group namespace which is being added is created, example namespace "dd-worker-group2".

- Create the namespace for worker-group2 if it is not already created. For more information, see Creating OCNADD Namespace.

For example:

kubectl hns create dd-worker-group2 -n dd-mgmt-group

- Create a copy of the following files from the extracted folder:

cp -rf ocnadd ocnadd_wg2
cp custom_templates/ocnadd-custom-values-23.4.0.0.1.yaml ocnadd-custom-values-wg2-group.yaml

- Generate certificates for the new worker group according to the "Configuring SSL or TLS Certificates" section and the Oracle Communications Network Analytics Suite Security Guide.

- Modify the ocnadd-custom-values-wg2-group.yaml file as follows:

global.deployment.centralized: true
global.deployment.management: true ##---> update it to 'false'
global.deployment.management_namespace: ocnadd-deploy ##---> update it with the management-group namespace, for example dd-mgmt-group
global.cluster.namespace.name: ocnadd-deploy ##---> update it with the worker-group namespace, for example dd-worker-group2
global.cluster.serviceAccount.name: ocnadd ## --> replace ocnadd with the worker-group namespace, for example dd-worker-group2
global.cluster.clusterRole.name: ocnadd ## --> replace ocnadd with the worker-group namespace, for example dd-worker-group2
global.cluster.clusterRoleBinding.name: ocnadd ## --> replace ocnadd with the worker-group namespace, for example dd-worker-group2

- Install using the ocnadd_wg2 Helm charts folder created for the worker group:

helm install <worker-group2-release-name> -f ocnadd-custom-values-<wg2-group>.yaml --namespace <worker-group2-namespace> <helm_chart>

For example:

helm install ocnadd-wg2 -f ocnadd-custom-values-wg2-group.yaml --namespace dd-worker-group2 ocnadd_wg2

- To verify the installation of the new worker group, run:

# watch kubectl get pod,svc -n dd-worker-group2

- Follow the "Creating OCNADD Kafka Topics" section to create topics on the newly added worker group.

2.2.2.6 Deleting a Worker Group

Assumptions:

- Centralized Site is already deployed with at least one worker group.

- Management Group deployment is up and running, example namespace "dd-mgmt-group".

- Worker group "worker-group1" and "worker-group2" deployments are up and running, example namespaces 'dd-worker-group1' and 'dd-worker-group2'.

- Worker group "worker-group2" needs to be deleted.

- Clean up the configurations corresponding to the worker group being deleted. For example, if it is 'worker-group2':

- Delete all the adapter feeds corresponding to worker-group2 from the UI.

- Delete all the filters applied to worker-group2 from the UI.

- Delete all the correlation applied to worker-group2 from the UI.

- Delete all the Kafka feeds corresponding to worker-group2 from the UI.

- Run the following command to uninstall the worker group:

helm uninstall <worker-group2-release-name> -n <worker-group2-namespace>

For example:

helm uninstall ocnadd-wg2 -n dd-worker-group2

- Delete the worker group namespace:

kubectl hns delete <worker-group2-namespace> -n <management-group-namespace>

For example:

kubectl hns delete dd-worker-group2 -n dd-mgmt-group
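The two deletion commands can be wrapped with a guard against removing the wrong namespace. This bash sketch is a hypothetical wrapper (not an OCNADD tool) around the same helm uninstall and kubectl hns delete commands shown above; run it only after the feeds, filters, and correlation configurations of the worker group have been deleted from the UI.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical wrapper for the uninstall and namespace deletion steps.
# Usage: delete_worker_group <release> <worker-group-namespace> <management-group-namespace>
delete_worker_group() {
  local release="$1" wg_ns="$2" mgmt_ns="$3"
  # Guard: never delete the management group namespace. With the default
  # worker group, both groups share one namespace.
  if [[ "$wg_ns" == "$mgmt_ns" ]]; then
    echo "refusing to delete management group namespace ${mgmt_ns}" >&2
    return 1
  fi
  helm uninstall "$release" -n "$wg_ns"
  kubectl hns delete "$wg_ns" -n "$mgmt_ns"
}
```

For example, delete_worker_group ocnadd-wg2 dd-worker-group2 dd-mgmt-group runs the two commands from the example above in order.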