2 Troubleshooting OCNADD

Note:

kubectl commands might vary based on the platform deployment. Replace kubectl with the Kubernetes environment-specific command line tool to configure Kubernetes resources through the kube-api server. The instructions provided in this document are as per the Oracle Communications Cloud Native Environment (OCCNE) version of the kube-api server.

2.1 Generic Checklist

The following sections provide a generic checklist for troubleshooting OCNADD.

Deployment Checklist

- Failure in Certificate or Secret generation.

There may be a possibility of an error in certificate generation when the Country, State, or Organization name is different in CA and service certificates.

Error Code/Error Message: The countryName field is different between CA certificate (US) and the request (IN) (a similar error message is reported for the State or Org name)

To resolve this error:

- Navigate to "ssl_certs/default_values/" and edit the "values" file.

- Change the following values under the "[global]" section:

  - countryName

  - stateOrProvinceName

  - organizationName

- Ensure the values match the CA configuration. For example, if the CA has country name "US", state "NY", and Org name "ORACLE", set the values under the [global] section as follows:

  [global]
  countryName=US
  stateOrProvinceName=NY
  localityName=BLR
  organizationName=ORACLE
  organizationalUnitName=CGBU
  defaultDays=365

- Rerun the script and verify the certificate and secret generation.
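The comparison described above can be checked before rerunning the script. The following is a minimal Python sketch, assuming the "values" file is INI-like with a [global] section as shown in the example; the function name and the way the CA subject is passed in (a plain dictionary) are hypothetical and not part of the OCNADD tooling.

```python
import configparser

# Fields that must be identical in the CA certificate and the values file.
FIELDS = ("countryName", "stateOrProvinceName", "organizationName")


def mismatched_fields(values_text, ca_subject):
    """Return the [global] fields whose value differs from the CA subject."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep the original key case (countryName, ...)
    parser.read_string(values_text)
    section = parser["global"]
    return [name for name in FIELDS if section.get(name) != ca_subject.get(name)]


# Hypothetical inputs: the request says IN while the CA says US.
VALUES = """\
[global]
countryName=IN
stateOrProvinceName=NY
localityName=BLR
organizationName=ORACLE
"""
CA_SUBJECT = {"countryName": "US", "stateOrProvinceName": "NY", "organizationName": "ORACLE"}

print(mismatched_fields(VALUES, CA_SUBJECT))
```

An empty list means the three fields agree with the CA, so this particular error should not be the cause of a failed generation.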

- Run the following command to verify that the OCNADD deployments, pods, and services are created, running, and available:

  # kubectl -n <namespace> get deployments,pods,svc

  Verify the output, and check the following columns:

  - READY, STATUS, and RESTARTS

  - PORT(S) of service
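As an illustration of the READY and STATUS check, the sketch below scans saved pod output for pods that need attention. It assumes the text comes from a pods-only listing (for example, kubectl -n <namespace> get pods) with the default column headers; the function name and the sample rows are hypothetical.

```python
def pods_needing_attention(kubectl_output):
    """Return names of pods that are not Running or not fully READY."""
    rows = [line.split() for line in kubectl_output.strip().splitlines()]
    header, pods = rows[0], rows[1:]
    name_col = header.index("NAME")
    ready_col = header.index("READY")
    status_col = header.index("STATUS")
    flagged = []
    for pod in pods:
        ready, total = pod[ready_col].split("/")
        if pod[status_col] != "Running" or ready != total:
            flagged.append(pod[name_col])
    return flagged


# Hypothetical sample output; real pod names and counts will differ.
SAMPLE = """\
NAME                      READY   STATUS             RESTARTS   AGE
ocnaddconfiguration-abc   1/1     Running            0          5m
kafka-broker-0            0/1     CrashLoopBackOff   4          5m
"""

print(pods_needing_attention(SAMPLE))
```

Pods created by jobs normally end in the Completed status and would also be listed by this sketch, so the result is a starting point for inspection rather than a verdict.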

Note:

It is normal to observe the Kafka broker restart during deployment.

- Verify if the correct image is used and the correct environment variables are set in the deployment.

  To check, run the following command:

  # kubectl -n <namespace> get deployment <deployment-name> -o yaml

- Check if the microservices can access each other through a REST interface.

  To check, run the following command:

  # kubectl -n <namespace> exec <pod name> -- curl <uri>

  Example:

  kubectl exec -it pod/ocnaddconfiguration-6ffc75f956-wnvzx -n ocnadd-system -- curl 'http://ocnaddadminservice:9181/ocnadd-admin-svc/v2/{workerGroup}/topic'

Note:

These commands are in their simple format and display the logs only if ocnaddconfiguration and ocnadd-admin-svc pods are deployed.

The list of URIs for all the microservices:

- http://ocnaddconfiguration:<port>/ocnadd-configuration/v1/subscription

- http://ocnaddalarm:<port>/ocnadd-alarm/v1/alarm?&startTime=<start-time>&endTime=<end-time>

  Use the offset date-time format, for example: 2022-07-12T05:37:26.954477600Z

- <ip>:<port>/ocnadd-admin-svc/v1/{workerGroup}/topic/

- <ip>:<port>/ocnadd-admin-svc/v1/{workerGroup}/describe/topic/<topicName>

- <ip>:<port>/ocnadd-admin-svc/v1/{workerGroup}/alter

- <ip>:<port>/ocnadd-admin-svc/v1/{workerGroup}/broker/expand/entry

- <ip>:<port>/ocnadd-admin-svc/v1/{workerGroup}/broker/health
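The alarm URI above takes its start and end times in the offset date-time format. The following Python sketch shows one way to produce such timestamps and assemble the query string. The helper names are hypothetical, the port is left as a parameter because the document does not state it, and percent-encoding of the colons by urlencode is assumed to be acceptable to the service.

```python
from datetime import datetime, timezone
from urllib.parse import urlencode


def offset_timestamp(moment):
    """Format a timezone-aware datetime as UTC with nine fractional digits and 'Z'."""
    utc = moment.astimezone(timezone.utc)
    # Python keeps microseconds; pad to nanoseconds to match the documented example.
    return utc.strftime("%Y-%m-%dT%H:%M:%S.") + f"{utc.microsecond * 1000:09d}Z"


def alarm_query_url(port, start, end):
    """Build the alarm query URL for the given time window."""
    query = urlencode({"startTime": offset_timestamp(start), "endTime": offset_timestamp(end)})
    return f"http://ocnaddalarm:{port}/ocnadd-alarm/v1/alarm?{query}"


start = datetime(2022, 7, 12, 5, 37, 26, 954477, tzinfo=timezone.utc)
end = datetime(2022, 7, 12, 6, 37, 26, 954477, tzinfo=timezone.utc)
print(offset_timestamp(start))
print(alarm_query_url(9099, start, end))  # 9099 is a placeholder port
```

Pass timezone-aware datetimes; a naive datetime would be interpreted in the local time zone of the machine running the script.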

Application Checklist

Logs Verification

Note:

The procedures below should be verified or run for the applicable worker group or the management group.

# kubectl -n <namespace> logs -f <pod name>

Use the -f option to follow the logs, or the grep option to obtain a specific pattern in the log output.

# kubectl -n ocnadd-system logs -f $(kubectl -n ocnadd-system get pods -o name |cut -d'/' -f 2|grep nrfaggregation)

The above command displays the logs of the ocnaddnrfaggregation service.

# kubectl logs -n ocnadd-system <pod name> | grep <pattern>

Note:

These commands are in their simple format and display the logs only if there is at least one nrfaggregation pod deployed.

Kafka Consumer Rebalancing

The Kafka consumers can rebalance in the following scenarios:

- The number of partitions changes for any of the subscribed topics.

- A subscribed topic is created or deleted.

- An existing member of the consumer group is shut down or fails.

- In the Kafka consumer application:

  - Stream threads inside the consumer app skip sending heartbeats to Kafka.

  - A batch of messages takes longer to process, which increases the time between two polls.

  - A stream thread in any of the consumer application pods dies because of an error and is replaced with a new Kafka Stream thread.

  - A stream thread is stuck and is not processing any messages.

- A new member is added to the consumer group (for example, new consumer pod spins up).

When rebalancing is triggered, some offsets might not yet be committed by the consumer threads, because offsets are committed periodically. As a result, the messages corresponding to the uncommitted offsets can be sent again (duplicated) when the rebalancing completes and the consumers start consuming from the partitions again. This is normal behavior in the Kafka consumer application. However, because of frequent rebalancing in the Kafka consumer applications, the message counts in the Kafka consumer application and the third-party application can mismatch.
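The effect of periodic offset commits on duplicates can be illustrated with a small simulation. This Python sketch does not use a Kafka client; it only models a single partition, a commit every few messages, and one rebalance that makes the consumer resume from the last committed offset. All names and numbers are hypothetical.

```python
def consume_with_rebalance(total, commit_every, rebalance_after):
    """Return the offsets delivered downstream, including redelivered ones."""
    delivered = []
    committed = 0       # next offset to read after a restart or rebalance
    offset = 0          # next offset the consumer will process
    rebalanced = False
    while offset < total:
        delivered.append(offset)          # message is forwarded downstream
        offset += 1
        if offset % commit_every == 0:    # periodic commit
            committed = offset
        if not rebalanced and offset == rebalance_after:
            rebalanced = True
            offset = committed            # resume from the last committed offset
    return delivered


delivered = consume_with_rebalance(total=10, commit_every=4, rebalance_after=7)
print(delivered)
print("duplicates:", len(delivered) - len(set(delivered)))
```

In this run, offsets 4 to 6 were processed but not yet committed when the rebalance happened, so they are delivered a second time. This is the source of the count mismatch between the consumer application and the third-party application.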

Data Feed not accepting updated endpoint

Problem

If a Data feed is created for synthetic packets with an incorrect endpoint, updating the endpoint afterward has no effect.

Solution

Delete and recreate the data feed for synthetic packets with the correct endpoint.

Kafka Performance Impact (due to disk limitation)

Problem

When source topics (SCP, NRF, and SEPP) and MAIN topic are created with Replication Factor = 1

For a low performance disk, the Egress MPS rate drops/fluctuates with the following traces in the Kafka broker logs:

Shrinking ISR from 1001,1003,1002 to 1001. Leader: (highWatermark: 1326, endOffset: 1327). Out of sync replicas: (brokerId: 1003, endOffset: 1326) (brokerId: 1002, endOffset: 1326). (kafka.cluster.Partition)

ISR updated to 1001,1003 and version updated to 28 (kafka.cluster.Partition)

Solution

The following steps can be performed (or verified) to optimize the Egress MPS rate:

- Try to increase the disk performance in the cluster where OCNADD is deployed.

- If the disk performance cannot be increased, then perform the following steps for OCNADD:

  - Navigate to the Kafka helm charts values file (<helm-chart-path>/ocnadd/charts/ocnaddkafka/values.yaml)

  - Change the following parameters in the values.yaml:

    - offsetsTopicReplicationFactor: 1

    - transactionStateLogReplicationFactor: 1

  - Scale down the Kafka and Zookeeper deployment by modifying the following lines in the corresponding worker group helm chart and custom values:

    ocnaddkafka:
      enabled: false

  - Perform helm upgrade for the worker group:

    helm upgrade <release name> <chart path of worker group> -n <namespace of the worker group>

  - Delete the PVCs for Kafka and Zookeeper using the following commands:

    kubectl delete pvc -n <namespace> kafka-volume-kafka-broker-0
    kubectl delete pvc -n <namespace> kafka-volume-kafka-broker-1
    kubectl delete pvc -n <namespace> kafka-volume-kafka-broker-2
    kubectl delete pvc -n <namespace> kafka-broker-security-zookeeper-0
    kubectl delete pvc -n <namespace> kafka-broker-security-zookeeper-1
    kubectl delete pvc -n <namespace> kafka-broker-security-zookeeper-2

  - Modify the value of the following parameter to true in the corresponding worker group helm chart and custom values:

    ocnaddkafka:
      enabled: true

  - Perform helm upgrade for the worker group:

    helm upgrade <release name> <chart path of worker group> -n <namespace of the worker group>

Note:

The following points are to be considered while applying the above procedure:

- In case a Kafka broker becomes unavailable, then you may experience an impact on the traffic on the Ingress side.

- Verify the Kafka broker logs or describe the Kafka/zookeeper pod which is unavailable and take the necessary action based on the error reported.

500 Server Error on GUI while creating/deleting the Data Feed

Problem

Occasionally, due to network issues, the user may observe a "500 Server Error" while creating/deleting the Data Feed.

Solution

- Delete and recreate the feed if it is not created properly.

- Retry the action after logging out of the GUI and logging back in.

- Retry creating/deleting the feed after some time.

Kafka resources reaching more than 90% utilization

Problem

Kafka resources (CPU, memory) reach more than 90% utilization due to a higher MPS rate or a slow disk I/O rate.

Solution

Add additional resources to the following parameters that are reaching high utilization.

File name: ocnadd-custom-values.yaml corresponding to the worker group

Parameter name: ocnaddkafka.ocnadd.kafkaBroker.resource

kafkaBroker:
  name: kafka-broker
  resource:
    limit:
      cpu: 5 ===> change it to the required number of CPUs
      memory: 24Gi ===> change it to the required memory size

Kafka ACLs: Identifying the Network IP "Host" ACLs in Kafka Feed

Problem

User is unable to identify the Network IP "Host" ACLs in Kafka Feed.

Solution

Use the following procedure if the user is unable to identify the network IP address.

Adding network IP address for Host ACL

This set of instructions explains how to add a network IP address to the host ACL. The procedure is illustrated using the following example configuration:

- Kafka Feed Name: demofeed

- Kafka ACL User: joe

- Kafka Client Hostname: 10.1.1.15

Note:

The steps below should be run for the worker group against which the Kafka feed is created or modified.

Retrieving Current ACLs

curl -k --location --request GET 'https://ocnaddadminservice:9181/ocnadd-admin-svc/v2/{workerGroup}/acls'

["(pattern=ResourcePattern(resourceType=GROUP, name=demofeed, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.15, operation=READ, permissionType=ALLOW))",
"(pattern=ResourcePattern(resourceType=TOPIC, name=MAIN, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.15, operation=READ, permissionType=ALLOW))"]

Authorization Error in Kafka Logs

With the Kafka feed "demofeed" configured with ACL user "joe" and client host IP address "10.1.1.15", Kafka reports the following authorization error in the Kafka logs:

[2023-07-31 05:34:22,063] INFO Principal = User:joe is Denied Operation = Read from host = 10.1.1.0 on resource = Group:LITERAL:demofeed for request = JoinGroup with resourceRefCount = 1 (kafka.authorizer.logger)

In the above output, the host ACL allows the specific client IP address "10.1.1.15", whereas the Kafka server also expects an ACL for the network IP address "10.1.1.0".
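The denied host can also be pulled out of such a log line programmatically. The Python sketch below assumes the trace keeps the layout of the example above; the function names are hypothetical, and the request body it builds simply mirrors the field names used by the client-acl request in this document.

```python
import re

# Layout taken from the kafka.authorizer.logger trace shown in this section.
DENIED = re.compile(
    r"Principal = User:(?P<user>\S+) is Denied Operation = (?P<operation>\w+) "
    r"from host = (?P<host>\S+) on resource = (?P<rtype>\w+):(?P<pattern>\w+):(?P<name>\S+)"
)


def parse_denial(log_line):
    """Return the fields of a denial trace, or None if the line is not a denial."""
    match = DENIED.search(log_line)
    return match.groupdict() if match else None


def acl_payload(denial):
    """Build a client-acl request body for the denied host (field names as in this document)."""
    return {
        "principal": denial["user"],
        "hostName": denial["host"],
        "resourceType": denial["rtype"].upper(),
        "resourceName": denial["name"],
        "aclOperation": denial["operation"].upper(),
    }


LINE = (
    "[2023-07-31 05:34:22,063] INFO Principal = User:joe is Denied Operation = Read "
    "from host = 10.1.1.0 on resource = Group:LITERAL:demofeed for request = JoinGroup "
    "with resourceRefCount = 1 (kafka.authorizer.logger)"
)

denial = parse_denial(LINE)
print(denial["host"])
print(acl_payload(denial))
```

The sketch only prepares the data; sending the request is still done with the curl commands described in this procedure.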

Steps to Create Network IP Address ACL

- Check Kafka Logs

  To identify the network IP address that Kafka is denying against the configured feed, follow these steps:

  - Check the Kafka logs using the command:

    kubectl logs -n <namespace> -c kafka-broker kafka-broker-1 -f

    For example:

    kubectl logs -n ocnadddeploy -c kafka-broker kafka-broker-1 -f

  - Look for traces similar to this:

    Principal = User:joe is Denied Operation = Read from host = 10.1.1.0 on resource = Group:LITERAL:demofeed for request = JoinGroup with resourceRefCount = 1 (kafka.authorizer.logger)

    Identify the IP address that is being denied by Kafka; in this case, it is "10.1.1.0".

- Create Host ACL for Network IP

  - Access any Pod within the OCNADD deployment, such as kafka-broker-0:

    kubectl exec -it kafka-broker-0 -n <namespace> -- bash

  - Run the provided curl commands to configure the host network IP ACLs:

    curl -k --location --request POST 'https://ocnaddconfiguration:12590/ocnadd-configuration/v2/{workerGroup}/client-acl' \
    --header 'Content-Type: application/json' \
    --data-raw '{ "principal": "joe", "hostName": "10.1.1.0", "resourceType": "TOPIC", "resourceName": "MAIN", "aclOperation": "READ" }'

    curl -k --location --request POST 'https://ocnaddconfiguration:12590/ocnadd-configuration/v2/{workerGroup}/client-acl' \
    --header 'Content-Type: application/json' \
    --data-raw '{ "principal": "joe", "hostName": "10.1.1.0", "resourceType": "GROUP", "resourceName": "demofeed", "aclOperation": "READ" }'

- Verify ACLs

  Use the following curl command to verify the ACLs:

  curl -k --location --request GET 'https://ocnaddadminservice:9181/ocnadd-admin-svc/v2/{workerGroup}/acls'

  Here is an example of the expected output, indicating the ACLs for Feed Name: demofeed, ACL user: joe, Host Name: 10.1.1.15, Network IP: 10.1.1.0:

  ["(pattern=ResourcePattern(resourceType=GROUP, name=demofeed, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.0, operation=READ, permissionType=ALLOW))",
  "(pattern=ResourcePattern(resourceType=GROUP, name=demofeed, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.15, operation=READ, permissionType=ALLOW))",
  "(pattern=ResourcePattern(resourceType=TOPIC, name=MAIN, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.0, operation=READ, permissionType=ALLOW))",
  "(pattern=ResourcePattern(resourceType=TOPIC, name=MAIN, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.15, operation=READ, permissionType=ALLOW))"]
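The verification step can be automated by checking the ACL listing for the expected host entries. The following Python sketch assumes the response body is a JSON array of strings in the layout shown in the example output; the function names are hypothetical.

```python
import json
import re

# Layout taken from the ACL listing example in this section.
ENTRY = re.compile(
    r"resourceType=(?P<rtype>\w+), name=(?P<name>[^,]+), .*?"
    r"principal=User:(?P<user>[^,]+), host=(?P<host>[^,]+), operation=(?P<operation>\w+)"
)


def parse_acls(response_text):
    """Turn the ACL listing into a list of dictionaries, one per ACL entry."""
    acls = []
    for entry in json.loads(response_text):
        match = ENTRY.search(entry)
        if match:
            acls.append(match.groupdict())
    return acls


def missing_read_acls(response_text, user, host, resources):
    """Return the (resourceType, name) pairs that lack a READ ACL for user and host."""
    present = {
        (acl["rtype"], acl["name"])
        for acl in parse_acls(response_text)
        if acl["user"] == user and acl["host"] == host and acl["operation"] == "READ"
    }
    return [resource for resource in resources if resource not in present]


# Listing that only contains the client host ACLs (before the network IP is added).
RESPONSE = json.dumps([
    "(pattern=ResourcePattern(resourceType=GROUP, name=demofeed, patternType=LITERAL), "
    "entry=(principal=User:joe, host=10.1.1.15, operation=READ, permissionType=ALLOW))",
    "(pattern=ResourcePattern(resourceType=TOPIC, name=MAIN, patternType=LITERAL), "
    "entry=(principal=User:joe, host=10.1.1.15, operation=READ, permissionType=ALLOW))",
])
REQUIRED = [("GROUP", "demofeed"), ("TOPIC", "MAIN")]

print(missing_read_acls(RESPONSE, "joe", "10.1.1.0", REQUIRED))
```

An empty result for both the client host and the network IP indicates that the four ACLs from the expected output are in place.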

Producer Unable to Send traffic to OCNADD when an External Kafka Feed is enabled

Problem

Producer is unable to send traffic to OCNADD when an External Kafka Feed is enabled.

Solution

Follow the steps below to debug and investigate the issue when the producer is unable to send traffic to OCNADD, ACL is enabled, and authorization errors appear in the producer NF logs.

Debug and Investigation Steps:

Note:

The steps below should be run for the worker group against which the Kafka feed is being enabled.

- Begin by creating the admin.properties file within the Kafka broker, following Step 2 of "Update SCRAM Configuration with Users" as outlined in the Oracle Communications Network Analytics Data Director User Guide.

- If the producer's security protocol is SASL_SSL (port 9094), verify whether the users have been created in SCRAM. Use the following command for verification:

  ./kafka-configs.sh --bootstrap-server kafka-broker:9094 --describe --entity-type users --command-config ../../admin.properties

  If no producer SCRAM ACL users are found, see the "Prerequisites for External Consumers" section in the Oracle Communications Network Analytics Data Director User Guide to create the necessary Client ACL users.

- If the producer's security protocol is SSL (port 9093), ensure that the Network Function (NF) producer's certificates have been correctly generated as per the instructions provided in the Oracle Communications Network Analytics Suite Security Guide.

- Check whether the producer client ACLs have been set up based on the configured security protocol (SASL_SSL or SSL) in the NF Kafka Producers. To verify this:

  - Access any Pod from the OCNADD deployment. For instance, kafka-broker-0:

    kubectl exec -it kafka-broker-0 -n <namespace> -- bash

  - Run the following curl command to list all the ACLs:

    curl -k --location --request GET 'https://ocnaddadminservice:9181/ocnadd-admin-svc/v2/{workerGroup}/acls'

    The expected output might resemble the following example, with Feed Name: demofeed, ACL user: joe, Host Name: 10.1.1.15, Network IP: 10.1.1.0:

    ["(pattern=ResourcePattern(resourceType=GROUP, name=demofeed, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.0, operation=READ, permissionType=ALLOW))",
    "(pattern=ResourcePattern(resourceType=GROUP, name=demofeed, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.15, operation=READ, permissionType=ALLOW))",
    "(pattern=ResourcePattern(resourceType=TOPIC, name=MAIN, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.0, operation=READ, permissionType=ALLOW))",
    "(pattern=ResourcePattern(resourceType=TOPIC, name=MAIN, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.15, operation=READ, permissionType=ALLOW))"]

- If no ACLs are found in the previous step, follow the "Create Client ACLs" section provided in the Oracle Communications Network Analytics Data Director User Guide to establish the required ACLs.

By following these steps, you will be able to diagnose and address issues related to the producer's inability to send traffic to OCNADD when an External Kafka Feed is enabled, and ACL-related authorization errors are encountered.

External Kafka Consumer Unable to consume messages from DD

Problem

External Kafka Consumer is unable to consume messages from OCNADD.

Solution

If you are experiencing issues where an external Kafka consumer is unable to consume messages from OCNADD, especially when ACL is enabled and unauthorized errors are appearing in the Kafka feed's logs, follow the subsequent steps for debugging and investigation:

Debug and Investigation Steps:

Note:

The steps below should be run for the worker group against which the Kafka feed is created or modified.

- Verify that the ACL users created for the Kafka feed, along with the SCRAM users, are appropriately configured in the JAAS config by running the following command:

  ./kafka-configs.sh --bootstrap-server kafka-broker:9094 --describe --entity-type users --command-config ../../admin.properties

- Validate that the Kafka feed parameters have been correctly configured in the consumer client. If not, ensure proper configuration and perform an upgrade on the Kafka feed's consumer application.

- Inspect the logs of the external consumer application.

  - If you encounter an error related to "XYZ Group authorization failure" in the consumer application logs, follow these steps:

    - Access any Pod within the OCNADD deployment. For example, kafka-broker-0:

      kubectl exec -it kafka-broker-0 -n <namespace> -- bash

    - Run the curl command below to retrieve the ACL information and verify the existence of ACLs for the Kafka feed:

      curl -k --location --request GET 'https://ocnaddadminservice:9181/ocnadd-admin-svc/v2/{workerGroup}/acls'

      Sample output with Feed Name: demofeed, ACL user: joe, Host Name: 10.1.1.15, Network IP: 10.1.1.0:

      ["(pattern=ResourcePattern(resourceType=GROUP, name=demofeed, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.0, operation=READ, permissionType=ALLOW))",
      "(pattern=ResourcePattern(resourceType=GROUP, name=demofeed, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.15, operation=READ, permissionType=ALLOW))",
      "(pattern=ResourcePattern(resourceType=TOPIC, name=MAIN, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.0, operation=READ, permissionType=ALLOW))",
      "(pattern=ResourcePattern(resourceType=TOPIC, name=MAIN, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.15, operation=READ, permissionType=ALLOW))"]

    - If no ACL is found for the Kafka feed with the resource type "GROUP", run the following curl command to create the GROUP resource type ACLs:

      curl -k --location --request POST 'https://ocnaddconfiguration:12590/ocnadd-configuration/v2/{workerGroup}/client-acl' \
      --header 'Content-Type: application/json' \
      --data-raw '{ "principal": "<ACL-USER-NAME>", "resourceType": "GROUP", "resourceName": "<KAFKA-FEED-NAME>", "aclOperation": "READ" }'

  - If you encounter an error related to "XYZ TOPIC authorization failure" in the consumer application logs, follow these steps:

    - Access any Pod within the OCNADD deployment. For example, kafka-broker-0:

      kubectl exec -it kafka-broker-0 -n <namespace> -- bash

    - Run the curl command below to retrieve the ACL information and verify the existence of ACLs for the Kafka feed:

      curl -k --location --request GET 'https://ocnaddadminservice:9181/ocnadd-admin-svc/v2/{workerGroup}/acls'

      Sample output with Feed Name: demofeed, ACL user: joe, Host Name: 10.1.1.15, Network IP: 10.1.1.0:

      ["(pattern=ResourcePattern(resourceType=GROUP, name=demofeed, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.0, operation=READ, permissionType=ALLOW))",
      "(pattern=ResourcePattern(resourceType=GROUP, name=demofeed, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.15, operation=READ, permissionType=ALLOW))",
      "(pattern=ResourcePattern(resourceType=TOPIC, name=MAIN, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.0, operation=READ, permissionType=ALLOW))",
      "(pattern=ResourcePattern(resourceType=TOPIC, name=MAIN, patternType=LITERAL), entry=(principal=User:joe, host=10.1.1.15, operation=READ, permissionType=ALLOW))"]

    - If no ACL is found for the Kafka feed with the resource type "TOPIC", run the following curl command to create the TOPIC resource type ACLs:

      curl -k --location --request POST 'https://ocnaddconfiguration:12590/ocnadd-configuration/v2/{workerGroup}/client-acl' \
      --header 'Content-Type: application/json' \
      --data-raw '{ "principal": "<ACL-USER-NAME>", "resourceType": "TOPIC", "resourceName": "MAIN", "aclOperation": "READ" }'

Database Error During Kafka Feed Update

Problem:

When attempting to update a Kafka feed, you may encounter an error similar to the following:

Error Message: Updating the Kafka feed in the database has failed.

Solution:

To address this issue, retry the Kafka feed update using the Update Kafka feed option from OCNADD UI with the same information as in the previous attempt.

2.2 Helm Install and Upgrade Failure

This section describes the various helm installation or upgrade failure scenarios and the respective troubleshooting procedures:

2.2.1 Incorrect Image Name in ocnadd-custom-values.yaml File

Problem

helm install fails if an incorrect image name is provided in the ocnadd-custom-values.yaml file or if the image is missing in the image repository.

Error Code or Error Message

When you run kubectl get pods -n <ocnadd_namespace>, the status of the pods might be ImagePullBackOff or ErrImagePull.

Solution

Perform the following steps to verify and correct the image name:

- Edit the ocnadd-custom-values.yaml file and provide the release-specific image names and tags.

- Run the helm install command.

- Run the kubectl get pods -n <ocnadd_namespace> command to verify that all the pods are in the Running state.

2.2.2 Failed Helm Installation/Upgrade Due to Prometheus Rules Applying Failure

Scenario:

Helm installation or upgrade fails due to Prometheus rules applying failure.

Problem:

Helm installation or upgrade might fail if Prometheus service is down or Prometheus PODs are not available during the helm installation or upgrade of the OCNADD.

Error Code or Error Message:

Error: UPGRADE FAILED: cannot patch "ocnadd-alerting-rules" with kind PrometheusRule: Internal error occurred: failed calling webhook "prometheusrulemutate.monitoring.coreos.com": failed to call webhook: Post "https://occne-kube-prom-stack-kube-operator.occne-infra.svc:443/admission-prometheusrules/mutate?timeout=10s": context deadline exceeded

Solution:

Perform the following steps to proceed with the OCNADD helm install or upgrade:

- Move the "ocndd-alerting-rules.yaml" and "ocnadd-mgmt-alerting-rules.yaml" files from the <chart_path>/helm-charts/templates directory to some other directory outside the OCNADD charts.

- Continue with the helm install/upgrade.

- Run the following command to verify that all the pods are in the Running state:

  kubectl get pods -n <ocnadd_namespace>

The OCNADD Prometheus alerting rules must be applied again when the Prometheus service and PODs are back in service. Apply the alerting rules using the Helm upgrade procedure itself, after moving the "ocndd-alerting-rules.yaml" and "ocnadd-mgmt-alerting-rules.yaml" files back to the <chart_path>/helm-charts/templates directory.

2.2.3 Docker Registry is Configured Incorrectly

Problem

helm install might fail if the Docker Registry is not configured in all primary and secondary nodes.

Error Code or Error Message

When you run kubectl get pods -n <ocnadd_namespace>, the status of the pods might be ImagePullBackOff or ErrImagePull.

Solution

Configure the Docker Registry on all primary and secondary nodes. For information about Docker Registry configuration, see Oracle Communications Cloud Native Environment Installation, Upgrade, and Fault Recovery Guide.

2.2.4 Continuous Restart of Pods

Problem

helm install might fail if MySQL primary or secondary hosts are not configured properly in ocnadd-custom-values.yaml.

Error Code or Error Message

When you run kubectl get pods -n <ocnadd_namespace>, the restart count of the pods increases continuously, or there is a Prometheus alert for continuous pod restarts.

- Verify MySQL connectivity.

  MySQL servers may not be configured properly. For more information about the MySQL configuration, see Oracle Communications Network Analytics Data Director Installation, Upgrade, and Fault Recovery Guide.

- Describe the POD to check for more details on the error, and troubleshoot further based on the reported error.

- Check the POD log for any error, and troubleshoot further based on the reported error.

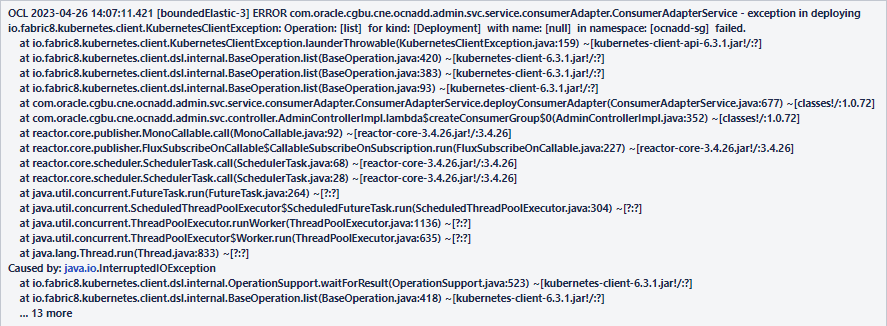

2.2.5 Adapter Deployment Removed during Upgrade or Rollback

Problem

The Adapter (data feed) is deleted during upgrade or rollback.

Error Code or Error Message

Figure 2-1 Error Message

Solution

- Run the following command to verify the data feeds:

  kubectl get po -n <namespace>

- If data feeds are missing, verify the above-mentioned error message in the admin service log by running the following command:

  kubectl logs <admin svc pod name> -n <namespace>

- If the error message is present, run the following command:

  kubectl rollout restart deploy <configuration app name> -n <namespace>

2.2.6 ocnadd-custom-values.yaml File Parse Failure

This section explains the troubleshooting procedure in case of failure while parsing the ocnadd-custom-values.yaml file.

Problem

Unable to parse the ocnadd-custom-values.yaml file or any other values.yaml while running Helm install.

Error Code or Error Message

Error: failed to parse ocnadd-custom-values.yaml: error converting YAML to JSON: yaml

Symptom

When parsing the ocnadd-custom-values.yaml file, if the above mentioned error is received, it indicates that the file is not parsed because of the following reasons:

- The tree structure may not have been followed.

- There may be a tab space in the file.

Solution

Download the latest OCNADD custom templates zip file from MoS. For more information, see Oracle Communications Network Analytics Data Director Installation, Upgrade, and Fault Recovery Guide.
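Before replacing the file, the tab characters named in the Symptom list can be located quickly. The Python sketch below is an illustrative check, not part of the OCNADD tooling: it reports the line numbers whose indentation contains a tab, which YAML does not allow. The function name and the sample text are hypothetical.

```python
def lines_with_tab_indent(yaml_text):
    """Return 1-based line numbers whose leading whitespace contains a tab."""
    flagged = []
    for number, line in enumerate(yaml_text.splitlines(), start=1):
        indent = line[: len(line) - len(line.lstrip())]
        if "\t" in indent:
            flagged.append(number)
    return flagged


# Hypothetical fragment: line 2 is indented with a tab, line 3 with spaces.
SAMPLE = "ocnaddkafka:\n\tenabled: true\n  name: kafka-broker\n"

print(lines_with_tab_indent(SAMPLE))
```

To check a real file, read its content first, for example with open("ocnadd-custom-values.yaml").read(), and pass the text to the function.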

2.2.7 Kafka Brokers Continuously Restart after Reinstallation

Problem

When reinstalling OCNADD in the same namespace without deleting the PVCs that were used for the first installation, the Kafka brokers go into the CrashLoopBackOff status and keep restarting.

Error Code or Error Message

When you run kubectl get pods -n <ocnadd_namespace>, the broker pod's status might be Error/CrashLoopBackOff and it might keep restarting continuously, with "no disk space left on the device" errors in the pod logs.

Solution

- Delete the StatefulSet (STS) deployments of the brokers. Run kubectl get sts -n <workergroup_namespace> to obtain the StatefulSets in the namespace.

- Delete the STS deployments of the services with the disk full issue. For example, run the command kubectl delete sts -n <workergroup_namespace> kafka-broker1 kafka-broker2.

- After deleting the STS of the brokers, delete the PVCs in the namespace that are used by the Kafka brokers. Run kubectl get pvc -n <workergroup_namespace> to get the PVCs in that namespace.

  The number of PVCs used is based on the number of brokers deployed. Therefore, select the PVCs that have the name kafka-broker or zookeeper, and delete them. To delete the PVCs, run kubectl delete pvc -n <workergroup_namespace> <pvcname1> <pvcname2>.

For example:

For a three-broker setup in worker group namespace ocnadd-wg1, you will need to delete these PVCs:

kubectl delete pvc -n ocnadd-wg1 broker1-pvc-kafka-broker1-0 broker2-pvc-kafka-broker2-0 broker3-pvc-kafka-broker3-0 kafka-broker-security-zookeeper-0 kafka-broker-security-zookeeper-1 kafka-broker-security-zookeeper-2

2.2.8 Kafka Brokers Continuously Restart After the Disk is Full

Problem

This issue occurs when there is no disk space left on the broker or Zookeeper in the corresponding worker group.

Error Code or Error Message

When you run kubectl get pods -n <ocnadd_namespace>, the broker pod's status might be error, or crashloopbackoff and it might keep restarting continuously.

Solution

Note:

The steps below should be run for the worker group against which Kafka is reporting the disk full error.

- Delete the STS (StatefulSet) deployments of the brokers:

  - Get the STS in the namespace with the following command:

    kubectl get sts -n <ocnadd_namespace>

  - Delete the STS deployments of the services with the disk full issue:

    kubectl delete sts -n <ocnadd_namespace> <sts1> <sts2>

    For example, for a three-broker setup:

    kubectl delete sts -n ocnadd-deploy kafka-broker1 kafka-broker2 kafka-broker3 zookeeper

- Delete the PVCs in that namespace that are used by the removed Kafka brokers. To get the PVCs in that namespace:

  kubectl get pvc -n <ocnadd_namespace>

  The number of PVCs used is based on the number of brokers you deploy. Select the PVCs that have the name kafka-broker or zookeeper and delete them.

  - To delete PVCs, run:

    kubectl delete pvc -n <ocnadd_namespace> <pvcname1> <pvcname2>

    For example, for a three-broker setup in namespace ocnadd-deploy, you must delete these PVCs:

    kubectl delete pvc -n ocnadd-deploy broker1-pvc-kafka-broker1-0 broker2-pvc-kafka-broker2-0 broker3-pvc-kafka-broker3-0 kafka-broker-security-zookeeper-0 kafka-broker-security-zookeeper-1 kafka-broker-security-zookeeper-2

- Once the STS and PVCs are deleted for the services, edit the respective broker's values.yaml to increase the PV size of the brokers at the location <chartpath>/charts/ocnaddkafka/values.yaml.

  If any formatting or indentation issues occur while editing, refer to the files in <chartpath>/charts/ocnaddkafka/default.

  To increase the storage, edit the pvcClaimSize field for each broker. For the PVC storage recommendation, see Oracle Communications Network Analytics Data Director Benchmarking Guide.

- Upgrade the Helm chart after increasing the PV size:

  helm upgrade <release-name> <chartpath> -n <namespace>

- Create the required topics.

2.2.9 Kafka Brokers Restart on Installation

Problem

Kafka brokers restart during OCNADD installation.

Error Code or Error Message

The output of the command kubectl get pods -n <ocnadd_namespace> displays the broker pod's status as restarted.

Solution

The Kafka brokers wait for a maximum of 3 minutes for the Zookeepers to come online before they are started. If the Zookeeper cluster does not come online within this interval, the broker starts before the Zookeeper and errors out because it does not have access to the Zookeeper. The Zookeeper may start after the 3-minute interval because the node may take more time to pull the images due to network issues. Therefore, this issue may be observed when the Zookeeper does not come online within the given time.
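The wait-then-start behavior described above follows a common bounded-wait pattern. The Python sketch below illustrates that pattern only; it is not the brokers' actual startup logic. The 180-second limit comes from the text, while the poll interval, the function name, and the readiness check are hypothetical.

```python
import time


def wait_until_ready(is_ready, timeout_s=180.0, poll_s=5.0,
                     clock=time.monotonic, sleep=time.sleep):
    """Poll is_ready() until it returns True or timeout_s elapses.

    Returns True if the dependency came online in time, False otherwise.
    """
    deadline = clock() + timeout_s
    while clock() < deadline:
        if is_ready():
            return True
        sleep(poll_s)
    return False


# Simulated run with a fake clock, so the example finishes instantly.
now = [0.0]
attempts = []


def fake_clock():
    return now[0]


def fake_sleep(seconds):
    now[0] += seconds


def zookeeper_up_on_third_check():
    attempts.append(1)
    return len(attempts) >= 3


print(wait_until_ready(zookeeper_up_on_third_check, clock=fake_clock, sleep=fake_sleep))
```

When the function returns False, the caller proceeds without the dependency, which corresponds to the broker starting before the Zookeeper and then restarting after the error.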

2.2.10 Kafka Brokers in Crashloop State After Rollback

Problem

Kafka broker pods are in the crashloop state after rollback from 23.4.0.

Error Code or Error Message

kubectl logs -n ocnadd-deploy kafka-broker-3 auto-discovery

E1117 17:02:02.770723 9 memcache.go:238] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E1117 17:02:02.771065 9 memcache.go:238] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

Solution

Perform the following steps to resolve the issue:

- Edit the service account using the following command:

kubectl edit sa -n ocnadd-deploy ocnadd-deploy-sa-ocnadd

- Update the parameter "automountServiceAccountToken" to true:

automountServiceAccountToken: true

- Save the service account.

- Run the kubectl get pods -n <ocnadd_namespace> command to verify that the status of all the Kafka pods is now Running.
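The same change can also be applied without an interactive editor. The following is a minimal sketch that uses kubectl patch instead of kubectl edit; it is an alternative to the documented steps, not the procedure from this guide. The function name enable_token_automount and the KUBECTL variable are hypothetical.

```shell
#!/bin/sh
# KUBECTL can be overridden with a platform-specific CLI (assumption).
KUBECTL="${KUBECTL:-kubectl}"

# Set automountServiceAccountToken to true on a service account.
#   $1 = namespace, $2 = service account name
enable_token_automount() {
  "$KUBECTL" patch serviceaccount "$2" -n "$1" \
    -p '{"automountServiceAccountToken": true}'
}

# Usage, with the names from the example above:
#   enable_token_automount ocnadd-deploy ocnadd-deploy-sa-ocnadd
```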

2.2.11 Database Goes into the Deadlock State

Problem

MySQL locks get stuck.

Error Code or Error Message

ERROR 1213 (40001): Deadlock found when trying to get lock; try restarting the transaction.

Symptom

Unable to access MySQL.

Solution

Perform the following steps to remove the deadlock:

- Run the following command on each SQL node:

SELECT CONCAT('KILL ', id, ';') FROM INFORMATION_SCHEMA.PROCESSLIST WHERE `User` = <DbUsername> AND `db` = <DbName>;

This command retrieves the list of commands to kill each connection.

Example:

select CONCAT('KILL ', id, ';') FROM INFORMATION_SCHEMA.PROCESSLIST where `User` = 'ocnadduser' AND `db` = 'ocnadddb';
+--------------------------+
| CONCAT('KILL ', id, ';') |
+--------------------------+
| KILL 204491;             |
| KILL 200332;             |
| KILL 202845;             |
+--------------------------+
3 rows in set (0.00 sec)

- Run the kill command on each SQL node.

2.2.12 The Pending Rollback Issue Due to PreRollback Database Rollback Job Failure

Scenario: Rollback to the previous release or above versions fails because the pre-rollback-db job fails, and OCNADD enters the pending-rollback status.

Problem: In this case, OCNADD gets stuck and cannot proceed with any other operation such as install, upgrade, or rollback.

Error Logs - Example with 23.2.0.0.1:

>helm rollback ocnadd 1 -n <namespace>

Error: job failed: BackoffLimitExceeded

$ helm history ocnadd -n ocnadd-deploy

REVISION  UPDATED                   STATUS            CHART          APP VERSION  DESCRIPTION
1         Fri Jul 14 10:38:31 2023  superseded        ocnadd-23.2.0  23.2.0       Install complete
2         Fri Jul 14 11:38:31 2023  superseded        ocnadd-23.2.0  23.2.0.0.1   Upgrade complete
3         Fri Jul 14 11:40:17 2023  superseded        ocnadd-23.3.0  23.3.0.0.0   Upgrade complete
4         Mon Jul 17 07:22:04 2023  pending-rollback  ocnadd-23.2.0  23.2.0.0.1   Rollback to 1

$ helm upgrade ocnadd <helm chart> -n ocnadd-deploy

Error: UPGRADE FAILED: another operation (install/upgrade/rollback) is in progress

Solution:

To resolve the pending-rollback issue, delete the secrets related to the 'pending-rollback' revision. Follow these steps:

- Get secrets using kubectl:

$ kubectl get secrets -n ocnadd-deploy
NAME                                    TYPE                                 DATA  AGE
adapter-secret                          Opaque                               8     14d
certdbfilesecret                        Opaque                               1     14d
db-secret                               Opaque                               6     47h
default-token-dmrqq                     kubernetes.io/service-account-token  3     14d
egw-secret                              Opaque                               8     14d
jaas-secret                             Opaque                               1     4d19h
kafka-broker-secret                     Opaque                               8     14d
ocnadd-deploy-admin-sa-token-mfh6l      kubernetes.io/service-account-token  3     4d19h
ocnadd-deploy-cache-sa-token-g7rd2      kubernetes.io/service-account-token  3     4d19h
ocnadd-deploy-gitlab-admin-token-qfmmf  kubernetes.io/service-account-token  3     4d19h
ocnadd-deploy-kafka-sa-token-2qqs9      kubernetes.io/service-account-token  3     4d19h
ocnadd-deploy-sa-ocnadd-token-tj2zf     kubernetes.io/service-account-token  3     47h
ocnadd-deploy-zk-sa-token-9x2rb         kubernetes.io/service-account-token  3     4d19h
ocnaddadminservice-secret               Opaque                               8     14d
ocnaddalarm-secret                      Opaque                               8     14d
ocnaddcache-secret                      Opaque                               8     14d
ocnaddconfiguration-secret              Opaque                               8     14d
ocnaddhealthmonitoring-secret           Opaque                               8     14d
ocnaddnrfaggregation-secret             Opaque                               8     14d
ocnaddscpaggregation-secret             Opaque                               8     14d
ocnaddseppaggregation-secret            Opaque                               8     14d
ocnaddthirdpartyconsumer-secret         Opaque                               8     14d
ocnadduirouter-secret                   Opaque                               8     14d
oraclenfproducer-secret                 Opaque                               8     14d
regcred-sim                             kubernetes.io/dockerconfigjson       1     8d
secret                                  Opaque                               7     4d19h
sh.helm.release.v1.ocnadd.v1            helm.sh/release.v1                   1     4d19h
sh.helm.release.v1.ocnadd.v2            helm.sh/release.v1                   1     4d19h
sh.helm.release.v1.ocnadd.v3            helm.sh/release.v1                   1     4d19h
sh.helm.release.v1.ocnadd.v4            helm.sh/release.v1                   1     47h
sh.helm.release.v1.ocnaddsim.v1         helm.sh/release.v1                   1     8d
zookeeper-secret                        Opaque                               8     14d

- Delete Secrets Related to Pending-Rollback Revision: In this case, the secrets of revision 4, that is, 'sh.helm.release.v1.ocnadd.v4', need to be deleted because the data director entered the 'pending-rollback' status in revision 4:

kubectl delete secrets sh.helm.release.v1.ocnadd.v4 -n ocnadd-deploy

Sample output:

secret "sh.helm.release.v1.ocnadd.v4" deleted

- Check Helm History: Verify that the pending-rollback status has been cleared using the following command:

helm history ocnadd -n ocnadd-deploy

Sample output:

REVISION  UPDATED                   STATUS      CHART               APP VERSION  DESCRIPTION
1         Fri Jul 14 10:38:31 2023  superseded  ocnadd-23.2.0       23.2.0       Install complete
2         Fri Jul 14 11:38:31 2023  superseded  ocnadd-23.2.0       23.2.0.0.1   Upgrade complete
3         Fri Jul 14 11:40:17 2023  superseded  ocnadd-23.3.0-rc.2  23.3.0.0.0   Upgrade complete

- Restore Database Backup: Restore the database backup taken before the upgrade started. Follow the "Create OCNADD Restore Job" section of the "Fault Recovery" from the Oracle Communications Network Analytics Data Director Installation, Upgrade and Fault Recovery Guide.

- Perform Rollback: Perform rollback again using the following command:

helm rollback <release name> <revision number> -n <namespace>

For example:

helm rollback ocnadd 1 -n ocnadd-deploy --no-hooks

- Verification: Verify that end-to-end traffic is running between the DD and the corresponding third-party application.
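The name of the secret to delete can be derived from the revision that helm history reports as pending-rollback. The following is a minimal sketch, assuming the secret naming pattern sh.helm.release.v1.<release>.v<revision> seen in the sample output of this section; the function name pending_rollback_secret is hypothetical.

```shell
#!/bin/sh
# Read `helm history` output on stdin and print the Helm release secret
# name for every revision whose row contains the pending-rollback status.
#   $1 = Helm release name
pending_rollback_secret() {
  awk -v rel="$1" '
    {
      # The revision number is the first field; the status position
      # varies with the timestamp format, so scan the remaining fields.
      for (i = 2; i <= NF; i++)
        if ($i == "pending-rollback") {
          print "sh.helm.release.v1." rel ".v" $1
          break
        }
    }'
}

# Usage, with the release and namespace from the example above:
#   helm history ocnadd -n ocnadd-deploy | pending_rollback_secret ocnadd
```

Review the printed name before passing it to kubectl delete secrets, because deleting the wrong release secret removes that revision from the Helm history.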

2.2.13 Upgrade Fails Due to Unsupported Changes

Problem

The upgrade from the source release to the target release failed due to unsupported changes in the target release. During the upgrade, the Database Job was successful, but the upgrade failed due to an error.

Note:

This is not a generic issue; however, it may occur if users are unable to sync up the target release charts.

Example:

Scenario: There is a PVC size mismatch between the source release and the target release.

Error Message in Helm History: Example with 23.2.0.0.1 to 23.3.0

Error: UPGRADE FAILED: cannot patch "zookeeper" with kind StatefulSet: StatefulSet.apps "zookeeper" is invalid: spec: Forbidden: updates to statefulset spec for fields other than 'replicas', 'template', 'updateStrategy', 'persistentVolumeClaimRetentionPolicy' and 'minReadySeconds' are forbidden

Run the following command (with 23.2.0.0.1):

helm history <release-name> -n <namespace>

Sample output with version 23.2.0.0.1:

REVISION  UPDATED                  STATUS      CHART          APP VERSION  DESCRIPTION
1         Tue Aug 1 07:01:29 2023  superseded  ocnadd-23.2.0  23.2.0       Install complete
2         Tue Aug 1 07:08:29 2023  deployed    ocnadd-23.2.0  23.2.0.0.1   Upgrade complete
3         Tue Aug 1 07:24:08 2023  failed      ocnadd-23.3.0  23.3.0.0.0   Upgrade "ocnadd" failed: cannot patch "zookeeper" with kind StatefulSet: StatefulSet.apps "zookeeper" is invalid: spec: Forbidden: updates to statefulset spec for fields other than 'replicas', 'template', 'updateStrategy', 'persistentVolumeClaimRetentionPolicy' and 'minReadySeconds' are forbidden

Solution

Perform the following steps:

- Correct and sync the target release charts as per the source release, and ensure that no new feature of the new release is enabled.

- Perform an upgrade. For more information about the upgrade procedure, see "Upgrading OCNADD" in the Oracle Communications Network Analytics Data Director Installation, Upgrade, and Fault Recovery Guide.

2.2.14 Upgrade Failed from Source Release to Target Release Due to Helm Hook Upgrade Database Job

Problem

The upgrade from the patch source release to the target release fails because the Helm hook upgrade DB job fails.

Error Code or Error Message: Example with 23.2.0.0.1 to 23.3.0

Error: UPGRADE FAILED: pre-upgrade hooks failed: job failed: BackoffLimitExceeded

Run the following command:

helm history <release-name> -n <namespace>

Sample output:

REVISION  UPDATED                  STATUS      CHART          APP VERSION  DESCRIPTION
1         Tue Aug 1 07:01:27 2023  superseded  ocnadd-23.2.0  23.2.0       Install complete
2         Tue Aug 1 07:08:29 2023  deployed    ocnadd-23.2.0  23.2.0.0.1   Upgrade complete
3         Tue Aug 1 07:24:08 2023  failed      ocnadd-23.3.0  23.3.0.0.0   Upgrade "ocnadd" failed: pre-upgrade hooks failed: job failed: BackoffLimitExceeded

Solution

Roll back to the patch release, correct the errors, and then run the upgrade again.

- Run helm rollback to the previous release revision:

helm rollback <helm release name> <revision number> -n <namespace>

- Restore the database backup taken before the upgrade. For more information, see the procedure "Create OCNADD Restore Job" in the "Fault Recovery" section of the Oracle Communications Network Analytics Data Director Installation, Upgrade, and Fault Recovery Guide.

- Correct the upgrade issue and run a fresh upgrade. For more information, see "Upgrading OCNADD" in the Oracle Communications Network Analytics Data Director Installation, Upgrade, and Fault Recovery Guide.

2.2.15 Upgrade Fails Due to Database MaxNoOfAttributes Exhausted

Scenario:

The upgrade fails because the database MaxNoOfAttributes limit is exhausted.

Problem:

Helm upgrade may fail because the number of attributes allowed to be created has reached its maximum limit.

Error Code or Error Message:

Executing::::::: /tmp/230300001.sql

mysql: [Warning] Using a password on the command line interface can be insecure.

ERROR 1005 (HY000) at line 51: Can't create destination table for copying alter table (use SHOW WARNINGS for more info).

error in executing upgrade db scripts

Solution:

Delete a few database schemas that are unused or stale.

For example, run the following from the MySQL prompt:

drop database xyz;

Note:

Dropping unused or stale database schemas is a valid approach. However, exercise caution when doing this to ensure you are not deleting important data. Make sure to have proper backups before proceeding.

2.2.16 Webhook Failure During Installation or Upgrade

Problem

Installation or upgrade is unsuccessful due to webhook failure.

Error Code or Error Message

Sample error log:

Error: INSTALLATION FAILED: Internal error occurred: failed calling webhook "prometheusrulemutate.monitoring.coreos.com": failed to call webhook: Post "https://occne-kube-prom-stack-kube-operator.occne-infra.svc:443/admission-prometheusrules/mutate?timeout=10s": context deadline exceeded

Solution

Retry installation or upgrade using Helm.

2.2.17 Adapters Do Not Restart after Rollback

Scenario:

When OCNADD is upgraded from one version to another and a new adapter is created in the new version, the new adapter is still present after rollback.

Problem:

After a rollback to an older version, every OCNADD resource should return to its previous state. Any adapter resources created in the new version should therefore be deleted as well, because they did not exist in the older version.

Solution:

Run the following command to delete the adapter resources:

$ kubectl delete service,deploy,hpa <adapter-name> -n ocnadd-deploy

2.2.18 Adapters Do Not Restart after Rollback

Scenario:

Data Feeds are created in the source release and an upgrade is performed to the latest release. If a rollback to the previous release is performed later, the Adapter pods are expected to restart.

Problem:

The Adapters do not restart. The Admin Service throws the following exception:

io.fabric8.kubernetes.client.KubernetesClientException: Operation: [update] for kind: [Deployment] with name: [app-http-adapter] in namespace: [ocnadd-deploy] failed.

at io.fabric8.kubernetes.client.KubernetesClientException.launderThrowable(KubernetesClientException.java:159) ~[kubernetes-client-api-6.5.1.jar!/:?]

at io.fabric8.kubernetes.client.dsl.internal.HasMetadataOperation.update(HasMetadataOperation.java:133) ~[kubernetes-client-6.5.1.jar!/:?]

at io.fabric8.kubernetes.client.dsl.internal.HasMetadataOperation.update(HasMetadataOperation.java:109) ~[kubernetes-client-6.5.1.jar!/:?]

at io.fabric8.kubernetes.client.dsl.internal.HasMetadataOperation.update(HasMetadataOperation.java:39) ~[kubernetes-client-6.5.1.jar!/:?]

at com.oracle.cgbu.cne.ocnadd.admin.svc.service.consumerAdapter.ConsumerAdapterService.lambda$ugradeConsumerAdapterOnStart$1(ConsumerAdapterService.java:1068) ~[classes!/:2.2.3]

at java.util.ArrayList.forEach(ArrayList.java:1511) ~[?:?]

at com.oracle.cgbu.cne.ocnadd.admin.svc.service.consumerAdapter.ConsumerAdapterService.ugradeConsumerAdapterOnStart(ConsumerAdapterService.java:1022) ~[classes!/:2.2.3]

Solution:

Restart the Admin Service after the rollback, which in turn will restart the Adapters to revert them to the older version.

2.2.19 Third-Party Endpoint DOWN State and New Feed Creation

Scenario:

When a third-party endpoint is in a DOWN state and a new third-party Feed (HTTP/SYNTHETIC) is created with the "Proceed with Latest Data" configuration, data streaming is expected to resume once the third-party endpoint becomes available and connectivity is established.

Problem

A third-party endpoint is in a DOWN state and a new third-party Feed (HTTP/SYNTHETIC) is created.

Solution

It is recommended to use "Resume from Point of Failure" configuration in case of third-party endpoint unavailability during feed creation.

2.2.20 Adapter Feed Not Coming Up After Rollback

Scenario:

Data Feeds are created in the previous release and an upgrade is performed to the latest release. In the latest release, both data feeds are deleted and a rollback to the previous release is carried out. The feeds that were created in the older release should come back after the rollback.

Problem

- After the rollback, only one of the Data Feeds is re-created.

- The Data Feeds are created after the rollback; however, they are stuck in the "Inactive" state.

Solution:

- After the rollback, clone the feeds and delete the old feeds.

- Update the Cloned Feeds with the Data Stream Offset as EARLIEST to avoid data loss.

2.2.21 Adapter Pods Are in the "init:CrashLoopBackOff" Error State After Rollback

Scenario:

This issue occurs when the adapter pods, created before or after the upgrade, encounter errors due to missing fixes related to data feed types available only in the latest releases.

Problem:

Adapter pods are stuck in the "init:CrashLoopBackOff" state after a rollback from the latest release to an older release.

Solution Steps:

- Delete the Adapter Manually:

Run the following command to delete the adapter:

kubectl delete service,deploy,hpa <adapter-name> -n ocnadd-deploy

- Clone or Recreate the Data Feed:

Clone or recreate the Data Feed with the same configurations. While creating the data feed, the Data Stream Offset option can be set to "EARLIEST" to avoid data loss.

2.2.22 Configuration Service Pod Goes into the "CrashLoopBackOff" State After Upgrade When the cnDBTier Version is 23.2.x or Above

Scenario:

When OCNADD is upgraded from the previous release to the latest release and the dbTier version is 23.2.x or above.

Problem:

After the upgrade, the configuration service pod goes into the "CrashLoopBackOff" state with DB-related errors in the logs.

Solution:

Update the "ndb_allow_copying_alter_table" parameter in dbTier to "ON".

update ndb_allow_copying_alter_table=ON

Note:

- If cnDBTier 23.2.x release is installed, set the "ndb_allow_copying_alter_table" parameter to 'ON' in the cnDBTier custom values dbtier_23.2.0_custom_values_23.2.0.yaml file before performing any install, upgrade, rollback, or disaster recovery procedure for OCNADD. Revert the parameter to its default value, 'OFF', after the activity is completed and perform the cnDBTier upgrade to apply the parameter changes.

- For cnDBTier upgrade instructions, see Oracle Communications Cloud Native Core, cnDBTier Installation, Upgrade, and Fault Recovery Guide.

2.2.23 Feed/Filter Configurations are Missing From Dashboard After Upgrade

Scenario:

After OCNADD is upgraded from the previous release to the latest release, it may be observed that some feed or filter configurations are missing from dashboard.

Problem:

When this issue occurs after upgrade, the configuration service log will have the following message "Table definition has changed, please retry transaction".

Solution:

- Delete the configuration service pod manually:

kubectl delete pod -n ocnadd-deploy <configuration-name>

- Check the logs of the new configuration service pod. If "Table definition has changed, please retry transaction" is still present in the log, repeat step 1.
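The log check in the second step can be scripted. The following is a minimal sketch that only tests whether the message is present in log text supplied on stdin; the function name needs_config_restart is hypothetical.

```shell
#!/bin/sh
# Succeed (exit status 0) when the log text on stdin still contains the
# message that indicates the configuration service pod must be deleted again.
needs_config_restart() {
  grep -q "Table definition has changed, please retry transaction"
}

# Usage (the pod name is a placeholder):
#   if kubectl logs -n ocnadd-deploy <configuration-name> | needs_config_restart; then
#     echo "Message still present: delete the configuration service pod again"
#   fi
```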

2.2.24 Kafka Feed Cannot be Created in Dashboard After Upgrade

Scenario:

After OCNADD is upgraded from the previous release to the latest release, it may be observed that new Kafka feed configurations cannot be created in dashboard.

Problem:

When this issue occurs after upgrade, the configuration service log will have the following message "Table definition has changed, please retry transaction".

Solution:

- Delete the configuration service pod manually:

kubectl delete pod -n ocnadd-deploy <configuration-name>

- Check the logs of the new configuration service pod. If "Table definition has changed, please retry transaction" is still present in the log, repeat step 1.

2.2.25 OCNADD Services Status Not Correct in Dashboard After Upgrade

Scenario:

After OCNADD is upgraded from the previous release to the latest release, it may be observed that the OCNADD microservices status is not correct.

Problem:

When this issue occurs after upgrade, the OCNADD microservices logs contain traces similar to the following:

kubectl exec -it -n <namespace> zookeeper-0 -- bash

[ocnadd@zookeeper-0 ~]$ cat extService.txt |grep -i retry

kubectl logs -n <namespace> <ocnadd-service-podname> -f |grep -i retry

OCL 2023-12-11 07:23:53.919 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 0

OCL 2023-12-11 07:25:12.046 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 1

OCL 2023-12-11 07:27:11.904 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 2

OCL 2023-12-11 07:30:09.591 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 3

OCL 2023-12-11 07:32:33.906 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 4

OCL 2023-12-11 07:37:26.195 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 5

OCL 2023-12-11 07:54:05.282 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 6

OCL 2023-12-11 08:21:37.994 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 7

OCL 2023-12-11 09:19:50.729 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 8

OCL 2023-12-11 11:24:15.125 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 9

OCL 2023-12-11 15:32:03.699 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 10

OCL 2023-12-11 21:28:51.323 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 11

OCL 2023-12-12 04:01:02.025 [parallel-1] ERROR com.oracle.cgbu.cne.ocdd.healthmonitoringclient.service.Scheduler - Health Profile Registration is not successful, retry number 12

Solution:

- Restart all the OCNADD microservices using the command below. If the deployment is upgraded to the centralized mode, run this command separately for each worker group and for the management group:

kubectl rollout restart -n <namespace> deployment,sts

- Check the health status of each OCNADD service on the OCNADD UI. The status of each service should become active.
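To confirm whether a service is still failing its health profile registration before or after the restart, the retry traces can be summarized. The following is a minimal sketch, assuming the log line format shown in the traces above; the function name max_registration_retry is hypothetical.

```shell
#!/bin/sh
# Read service logs on stdin and print the highest retry number reported by
# the "Health Profile Registration is not successful" traces.
# Prints nothing when no such trace is present.
max_registration_retry() {
  awk '
    BEGIN { max = -1 }
    /Health Profile Registration is not successful, retry number/ {
      n = $NF + 0            # the retry number is the last field of the line
      if (n > max) max = n
    }
    END { if (max >= 0) print max }'
}

# Usage (namespace and pod name are placeholders):
#   kubectl logs -n <namespace> <ocnadd-service-podname> | max_registration_retry
```

No output after the restart suggests that the registration traces are no longer being logged for that pod.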