4 OCNWDAF Installation

This chapter describes how to install Oracle Communications Network Data Analytics Function (OCNWDAF) on Oracle Communications Cloud Native Environment (CNE).

The steps are divided into two categories:

- Preinstallation

- Installation Tasks

It is recommended that you follow the steps in the given sequence to prepare for and install OCNWDAF.

4.1 Preinstallation

To install OCNWDAF, perform the steps described in this section.

Note:

The kubectl commands might vary based on the platform used for deploying OCNWDAF. It is recommended that you replace kubectl with the environment-specific command-line tool that configures Kubernetes resources through the kube-api server. The instructions in this document are based on the CNE version of the kube-api server.

4.1.1 Creating Service Account, Role, and RoleBinding

This section describes the procedure to create service account, role, and rolebinding.

Important:

The steps described in this section are optional. You can skip them in either of the following scenarios:

- If service accounts are created automatically at the time of OCNWDAF deployment.

- If the global service account with the associated role and role bindings is already configured, or if you use an internal procedure to create service accounts.

If a service account with the necessary role bindings is already available, update the ocnwdaf/values.yaml file with the account details before initiating the installation procedure. If the service account details are incorrect, the installation fails.

Create Service Account

To create the global service account:

- Create an OCNWDAF service account resource file:

vi <ocnwdaf resource file>

Example:

vi ocnwdaf-sampleserviceaccount-template.yaml

- Update the resource file with the release-specific information:

Note:

Replace <helm-release> and <namespace> with the OCNWDAF Helm release name and the OCNWDAF namespace, respectively.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: <helm-release>-serviceaccount
  namespace: <namespace>

<helm-release> is the helm deployment name.

<namespace> is the name of the Kubernetes namespace of OCNWDAF. All the microservices are deployed in this Kubernetes namespace.

Define Permissions using Role

- Create an OCNWDAF role resource file:

vi <ocnwdaf sample role file>

Example:

vi ocnwdaf-samplerole-template.yaml

- Update the resource file with the role-specific information:

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: <helm-release>-role
  namespace: <namespace>
rules:
  - apiGroups: [""]
    resources:
      - pods
      - services
      - configmaps
    verbs: ["get", "list", "watch"]

Note:

The kind of this resource (Role) must match roleRef.kind in the RoleBinding.

Create RoleBindings

- Create an OCNWDAF role binding resource file:

vi <ocnwdaf sample rolebinding file>

Example:

vi ocnwdaf-sample-rolebinding-template.yaml

- Update the resource file with the role binding information:

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: <helm-release>-rolebinding
  namespace: <namespace>
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: <helm-release>-role
subjects:
  - kind: ServiceAccount
    name: <helm-release>-serviceaccount
    namespace: <namespace>

Create Resources

Run the following commands to create the resources:

kubectl -n <namespace> create -f <service account resource file>
kubectl -n <namespace> create -f <roles resource file>
kubectl -n <namespace> create -f <rolebinding resource file>

Note:

After the global service account is added, you must add global.ServiceAccountName to the ocnwdaf/values.yaml file. Otherwise, the installation may fail when it creates and deletes custom resource definitions (CRDs).
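The three resource files above can also be produced by a single script. The following is a minimal sketch that assumes the example file names from this section and takes the Helm release name and namespace as arguments. render_rbac is a hypothetical helper and is not part of the OCNWDAF installer. It writes a namespaced Role, which matches the kind: Role in the RoleBinding's roleRef and the kubectl -n <namespace> commands used to create the resources.

```shell
#!/bin/sh
# Hypothetical helper: writes the ServiceAccount, Role, and RoleBinding
# manifests described in this section into OUTDIR (default: current
# directory), with the Helm release name and namespace filled in.
# It only writes files; it does not contact the cluster.
render_rbac() {
  release="$1"
  namespace="$2"
  outdir="${3:-.}"
  mkdir -p "$outdir" || return 1

  cat > "$outdir/ocnwdaf-sampleserviceaccount-template.yaml" <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ${release}-serviceaccount
  namespace: ${namespace}
EOF

  cat > "$outdir/ocnwdaf-samplerole-template.yaml" <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ${release}-role
  namespace: ${namespace}
rules:
  - apiGroups: [""]
    resources:
      - pods
      - services
      - configmaps
    verbs: ["get", "list", "watch"]
EOF

  cat > "$outdir/ocnwdaf-sample-rolebinding-template.yaml" <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ${release}-rolebinding
  namespace: ${namespace}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ${release}-role
subjects:
  - kind: ServiceAccount
    name: ${release}-serviceaccount
    namespace: ${namespace}
EOF
}

# Example (release "ocnwdaf" and namespace "ocn-nwdaf" are placeholders):
#   render_rbac ocnwdaf ocn-nwdaf ./rbac
#   kubectl -n ocn-nwdaf create -f ./rbac/ocnwdaf-sampleserviceaccount-template.yaml
#   kubectl -n ocn-nwdaf create -f ./rbac/ocnwdaf-samplerole-template.yaml
#   kubectl -n ocn-nwdaf create -f ./rbac/ocnwdaf-sample-rolebinding-template.yaml
```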

4.1.2 Configuring Database, Creating Users, and Granting Permissions

This section explains how a database administrator can create the databases and users for OCNWDAF, and grant permissions to those users.

Perform the following steps to create the OCNWDAF MySQL database and grant the OCNWDAF user permissions for database operations:

- Unzip the package nwdaf-installer.zip:

mkdir nwdaf-installer
unzip nwdaf-installer.zip -d nwdaf-installer/

- Log in to the server or machine with permission to access the SQL nodes of the NDB cluster.

- Connect to the SQL node of the NDB cluster or connect to the cnDBTier.

- Run the following command to connect to the cnDBTier:

kubectl -n <cndbtier_namespace> exec -it <cndbtier_sql_pod_name> -c <cndbtier_sql_container_name> -- bash

- Run the following command to log in to the MySQL prompt as a user with root permissions:

mysql -h 127.0.0.1 -uroot -p

- Copy the file content from nwdaf-release-package/installer/nwdaf-installer/sql/ocn-nwdaf-db-script-23.1.0.0.0.sql and run it in the current MySQL instance.
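The script runs as a user with root permissions, so it can help to review which databases, users, and grants it creates before you paste it into the MySQL prompt. The following sketch assumes only that the script uses standard CREATE DATABASE, CREATE USER, and GRANT statements. review_sql_script is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: prints the statements in an SQL script that create
# databases or users, or grant privileges, for review before running the
# script as root. Returns non-zero if the file is missing or nothing matches.
review_sql_script() {
  script="$1"
  if [ ! -f "$script" ]; then
    echo "SQL script not found: $script" >&2
    return 1
  fi
  # Case-insensitive match on the statement keyword at the start of a line
  # (leading whitespace allowed).
  grep -iE '^[[:space:]]*(CREATE[[:space:]]+(DATABASE|SCHEMA|USER)|GRANT)[[:space:]]' "$script"
}

# Example:
#   review_sql_script nwdaf-release-package/installer/nwdaf-installer/sql/ocn-nwdaf-db-script-23.1.0.0.0.sql
```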

4.1.3 Verifying and Creating OCNWDAF Namespace

This section explains how to verify or create a new namespace in the system.

To verify whether the required namespace already exists in the system, run the following command:

$ kubectl get namespaces

In the output of the above command, check whether the required namespace is available. If it is not available, create the namespace using the following command:

Note:

This is an optional step. Skip this step if the required namespace already exists.

$ kubectl create namespace <required namespace>

For example:

$ kubectl create namespace ocn-nwdaf

Naming Convention for Namespaces

While choosing the name of the namespace where you wish to deploy OCNWDAF, make sure the following requirements are met:

- Starts and ends with an alphanumeric character

- Contains 63 characters or fewer

- Contains only lowercase alphanumeric characters or '-'

Note:

Avoid using the prefix kube- when creating a namespace, because this prefix is reserved for Kubernetes system namespaces.
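You can check the naming rules above before running kubectl create namespace. This is a sketch, and the function names are hypothetical. It follows the Kubernetes rule that namespace names are RFC 1123 labels, so it rejects uppercase letters.

```shell
#!/bin/sh
# Hypothetical check: succeeds only if $1 satisfies the namespace naming rules.
is_valid_namespace() {
  ns="$1"
  # Must be 1 to 63 characters long.
  if [ -z "$ns" ] || [ "${#ns}" -gt 63 ]; then
    return 1
  fi
  case "$ns" in
    *[![:lower:][:digit:]-]*) return 1 ;;  # only lowercase letters, digits, and '-'
    -*|*-) return 1 ;;                     # must start and end with an alphanumeric character
  esac
  return 0
}

# Hypothetical helper: warns on stderr (without failing) when the name uses
# the kube- prefix reserved for Kubernetes system namespaces.
warn_reserved_prefix() {
  case "$1" in
    kube-*) echo "warning: '$1' uses the reserved kube- prefix" >&2 ;;
  esac
}

# Example:
#   NS=ocn-nwdaf
#   warn_reserved_prefix "$NS"
#   is_valid_namespace "$NS" && kubectl create namespace "$NS"
```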

4.1.4 Verifying Installer

Decompressing the release package produces a folder named installer. Copy this folder to the home directory of the Kubernetes bastion host. To verify the installer, run the following commands:

[ -d "./nwdaf-release-package/installer/nwdaf-installer" ] && echo "nwdaf-installer exist"

[ -d "./nwdaf-release-package/installer/cap4c-installer" ] && echo "cap4c-installer exist"

[ -d "./nwdaf-release-package/installer/nwdaf-cap4c" ] && echo "nwdaf-cap4c exist"

[ -d "./nwdaf-release-package/installer/nwdaf-ats" ] && echo "nwdaf-ats exist"

Sample output:

nwdaf-installer exist

nwdaf-cap4c exist

cap4c-installer exist

nwdaf-ats exist

4.2 Installation Tasks

This section describes the tasks that the user must follow for installing OCNWDAF.

4.2.1 Install NRF Client

This section describes the procedure to install the NRF client and configure the NRF client parameters.

Note:

These configurations are required when an NF has to register with the NRF. Before you make NRF client configuration changes, the NRF client service must be enabled.

Installing NRF Client

Preparation

To set the required environment variables, run the following commands:

export K8_NAMESPACE="<nwdaf-kubernetes-namespace>"

export MYSQL_HOST="<db-host>"

export MYSQL_PORT="<db-port>"

export MYSQL_ENGINE="<db-engine>"

Configure the Required Databases

- Log in to the server or machine with permission to access the SQL nodes of the NDB cluster.

- Connect to the SQL node of the NDB cluster or connect to the cnDBTier. To connect to the cnDBTier, run the following command:

kubectl -n <cndbtier_namespace> exec -it <cndbtier_sql_pod_name> -c <cndbtier_sql_container_name> -- bash

- Log in to the MySQL prompt as a user with root permissions:

mysql -h 127.0.0.1 -uroot -p

- Copy the DDL file content from nwdaf-release-package/nrf-client-installer/sql/ddl.sql and run it in the current MySQL instance.

- Copy the DCL file content from nwdaf-release-package/nrf-client-installer/sql/dcl.sql and run it in the current MySQL instance.
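Before running the installer script, you can confirm that the variables exported in Preparation are set and no longer hold their <placeholder> values. A minimal sketch follows. require_env is a hypothetical helper. The same check can also be used for IMAGE_REGISTRY, K8_NAMESPACE, and ENCRYPT_KEY in the CAP4C installation.

```shell
#!/bin/sh
# Hypothetical pre-flight check: fails if any named variable is unset, empty,
# or still holds a <placeholder> value copied from this guide.
require_env() {
  missing=0
  for name in "$@"; do
    # Reject anything that is not a valid shell variable name before eval.
    case "$name" in
      ''|[0-9]*|*[!A-Za-z0-9_]*)
        echo "error: invalid variable name: $name" >&2
        missing=1
        continue
        ;;
    esac
    eval "value=\${$name:-}"
    case "$value" in
      '')
        echo "error: $name is not set" >&2
        missing=1
        ;;
      '<'*'>')
        echo "error: $name still holds the placeholder $value" >&2
        missing=1
        ;;
    esac
  done
  return "$missing"
}

# Example:
#   require_env K8_NAMESPACE MYSQL_HOST MYSQL_PORT MYSQL_ENGINE &&
#     sh ./nwdaf-release-package/nrf-client-installer/scripts/install-nrf-client.sh
```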

Install nrf-client

Run install-nrf-client.sh:

sh ./nwdaf-release-package/nrf-client-installer/scripts/install-nrf-client.sh

Verify the Installation

Run the following command:

kubectl get pods -n ${K8_NAMESPACE}

Sample output:

NAME READY STATUS RESTARTS AGE

nrfclient-appinfo-5495cb8779-vjdqd 1/1 Running 0 2m50s

nrfclient-ocnf-nrf-client-nfdiscovery-6c6787996f-f4zdn 1/1 Running 0 2m34s

nrfclient-ocnf-nrf-client-nfdiscovery-6c6787996f-w9d8d 1/1 Running 0 2m50s

nrfclient-ocnf-nrf-client-nfmanagement-7dddb57fb7-bkf7d 1/1 Running 0 2m50s

nrfclient-ocpm-config-6d8f49dd6-vfl5j 1/1 Running 0 2m50s

Configure the NRF Client parameters in the ocnwdaf-<version>.custom-values.yaml file. For the complete list of NRF Client parameters, see NRF Client Parameters.
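Checking the pod listing by eye gets tedious as the number of pods grows. The following sketch filters kubectl get pods output and prints any pod that is not fully ready, not Running, or has restarted more than a given number of times. The default limit is 5, taken from the restart guidance in Verifying Dependencies. check_pods is a hypothetical name, and the sketch assumes the default column order NAME, READY, STATUS, RESTARTS, AGE.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get pods` output on stdin and prints each
# pod that is not fully ready, not Running, or has more than MAX_RESTARTS
# restarts (first argument, default 5). Exits non-zero if any pod is printed.
check_pods() {
  awk -v max="${1:-5}" '
    $1 == "NAME" { next }            # skip the header line
    NF < 4 { next }                  # skip blank or partial lines
    {
      split($2, ready, "/")          # READY column, for example 1/1
      if (ready[1] != ready[2] || $3 != "Running" || $4 + 0 > max + 0) {
        print $1, $2, $3, $4
        bad = 1
      }
    }
    END { exit (bad ? 1 : 0) }
  '
}

# Example:
#   kubectl get pods -n "${K8_NAMESPACE}" | check_pods 5
```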

4.2.2 Installing OCNWDAF CAP4C

Set Up the Required Environment Variables

Export the following required environment variables:

export IMAGE_REGISTRY="<image-registry-uri>"

export K8_NAMESPACE="<nwdaf-kubernetes-namespace>"

export ENCRYPT_KEY="<symmetric-encrypt-key>"

Replace <image-registry-uri>, <nwdaf-kubernetes-namespace>, and <symmetric-encrypt-key> with the appropriate values. For example, set K8_NAMESPACE to ocn-nwdaf, the namespace created in Verifying and Creating OCNWDAF Namespace.

Configure the Database

CAP4C

Perform the following procedure to create the CAP4C MySQL database and grant the required users permissions to perform the necessary operations on the database:

- Unzip the package cap4c-installer.zip:

mkdir cap4c-installer
unzip cap4c-installer.zip -d cap4c-installer/

- Log in to the server or machine with permission to access the SQL nodes of the NDB cluster.

- Connect to the SQL node of the NDB cluster or connect to the cnDBTier. Run the following command to connect to the cnDBTier:

kubectl -n <cndbtier_namespace> exec -it <cndbtier_sql_pod_name> -c <cndbtier_sql_container_name> -- bash

- Run the following command to log in to the MySQL prompt as a user with root permissions. A user with root permissions can create users with the permissions required to perform the necessary operations on the database.

mysql -h 127.0.0.1 -uroot -p

- Copy the DDL file content from nwdaf-release-package/installer/cap4c-installer/sql/ddl.sql and run it in the current MySQL instance.

- Copy the DCL file content from nwdaf-release-package/installer/cap4c-installer/sql/dcl.sql and run it in the current MySQL instance.

Note:

You can change the DB user and password by editing the file ocn-nwdaf-db-script-23.1.0.0.0.sql before you run it in the above step. If you change the DB user and password, ensure that you export the corresponding property variables as described in the Verifying Dependencies procedure.

Installing Dependencies

- Unzip the package nwdaf-cap4c-installer.zip:

mkdir nwdaf-cap4c-installer
unzip nwdaf-cap4c-installer.zip -d nwdaf-cap4c-installer/

- Run 01-install-dependencies.sh as follows:

cd nwdaf-cap4c-installer/scripts
sh 01-install-dependencies.sh

Verifying Dependencies

Run the following command to verify if the dependencies are running:

kubectl get pods -n ${K8_NAMESPACE}

Verify that the status of all the dependencies listed below is Running. If the status of any dependency is not Running, wait until it reaches the Running status or until a maximum of five restarts have occurred.

NAME READY STATUS RESTARTS AGE

kafka-sts-0 1/1 Running 0 01s

kafka-sts-1 1/1 Running 0 01s

kafka-sts-2 1/1 Running 0 01s

nwdaf-cap4c-spring-cloud-config-server-deploy-xxxxxxxxx-xxxxx 1/1 Running 0 01s

redis-master-pod 1/1 Running 0 01s

redis-slave-sts-0 1/1 Running 0 01s

redis-slave-sts-1 1/1 Running 0 01s

zookeper-sts-0 1/1 Running 0 01s

The following is the list of default variables used to configure OCNWDAF. You can export any of these variables to override its default value in the deployed configuration.

- MYSQL_HOST

- MYSQL_PORT

- KAFKA_BROKERS

- DRUID_HOST

- DRUID_PORT

- REDIS_HOST

- REDIS_PORT

- CAP4C_KAFKA_INGESTOR_DB

- CAP4C_KAFKA_INGESTOR_DB_USER

- CAP4C_KAFKA_INGESTOR_DB_PASSWORD

- CAP4C_MODEL_CONTROLLER_DB

- CAP4C_MODEL_CONTROLLER_DB_USER

- CAP4C_MODEL_CONTROLLER_DB_PASSWORD

- CAP4C_MODEL_EXECUTOR_DB_USER

- CAP4C_MODEL_EXECUTOR_DB_PASSWORD

- CAP4C_STREAM_ANALYTICS_DB

- NWDAF_CAP4C_REPORTING_SERVICE_USER

- NWDAF_CAP4C_REPORTING_SERVICE_PASSWORD

- NWDAF_CAP4C_SCHEDULER_SERVICE_DB

- NWDAF_CAP4C_SCHEDULER_SERVICE_DB_USER

- NWDAF_CAP4C_SCHEDULER_SERVICE_DB_PASSWORD

- NWDAF_CONFIGURATION_HOST

- NWDAF_USER

- NWDAF_DB_PASSWORD
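To see which of these defaults are currently overridden, the sketch below prints the listed variables that are set in the current shell and masks any value whose name contains PASSWORD. show_overrides is a hypothetical helper. The installer scripts only see variables that are exported.

```shell
#!/bin/sh
# Hypothetical helper: prints NAME=value for each named variable that is set
# in the current shell, masking values of variables whose name contains PASSWORD.
show_overrides() {
  for name in "$@"; do
    # Reject anything that is not a valid shell variable name before eval.
    case "$name" in
      ''|[0-9]*|*[!A-Za-z0-9_]*)
        echo "invalid variable name: $name" >&2
        continue
        ;;
    esac
    eval "is_set=\${$name+x}"
    [ -n "$is_set" ] || continue
    case "$name" in
      *PASSWORD*) printf '%s=********\n' "$name" ;;
      *) eval "value=\${$name}"; printf '%s=%s\n' "$name" "$value" ;;
    esac
  done
}

# Example:
#   show_overrides MYSQL_HOST MYSQL_PORT KAFKA_BROKERS REDIS_HOST NWDAF_DB_PASSWORD
```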

For example, to change the default values of the MySQL host and port, run:

export MYSQL_HOST=<any-other-mysql-host>

export MYSQL_PORT=<any-other-mysql-port>

Setting up Encrypted Credentials

To encrypt the credentials, run the following commands:

export CAP4C_PASSWORD=cap4c_passwd

export CAP4C_MODEL_CONTROLLER_DB_PASSWORD="'{cipher}$(kubectl -n ${K8_NAMESPACE} exec $(kubectl -n ${K8_NAMESPACE} get pods --no-headers -o custom-columns=":metadata.name" | grep nwdaf-cap4c-spring-cloud-config-server) -- curl -s localhost:8888/encrypt -d ${CAP4C_PASSWORD})'"

export CAP4C_KAFKA_INGESTOR_DB_PASSWORD="'{cipher}$(kubectl -n ${K8_NAMESPACE} exec $(kubectl -n ${K8_NAMESPACE} get pods --no-headers -o custom-columns=":metadata.name" | grep nwdaf-cap4c-spring-cloud-config-server) -- curl -s localhost:8888/encrypt -d ${CAP4C_PASSWORD})'"

export CAP4C_MODEL_EXECUTOR_DB_PASSWORD="'{cipher}$(kubectl -n ${K8_NAMESPACE} exec $(kubectl -n ${K8_NAMESPACE} get pods --no-headers -o custom-columns=":metadata.name" | grep nwdaf-cap4c-spring-cloud-config-server) -- curl -s localhost:8888/encrypt -d ${CAP4C_PASSWORD})'"

export NWDAF_CAP4C_REPORTING_SERVICE_PASSWORD="'{cipher}$(kubectl -n ${K8_NAMESPACE} exec $(kubectl -n ${K8_NAMESPACE} get pods --no-headers -o custom-columns=":metadata.name" | grep nwdaf-cap4c-spring-cloud-config-server) -- curl -s localhost:8888/encrypt -d ${CAP4C_PASSWORD})'"

export NWDAF_CAP4C_SCHEDULER_SERVICE_DB_PASSWORD="'{cipher}$(kubectl -n ${K8_NAMESPACE} exec $(kubectl -n ${K8_NAMESPACE} get pods --no-headers -o custom-columns=":metadata.name" | grep nwdaf-cap4c-spring-cloud-config-server) -- curl -s localhost:8888/encrypt -d ${CAP4C_PASSWORD})'"

export NWDAF_DB_PASSWORD=ocn_nwdaf_user_passwd

export NWDAF_DB_PASSWORD="'{cipher}$(kubectl -n ${K8_NAMESPACE} exec $(kubectl -n ${K8_NAMESPACE} get pods --no-headers -o custom-columns=":metadata.name" | grep nwdaf-cap4c-spring-cloud-config-server) -- curl -s localhost:8888/encrypt -d ${NWDAF_DB_PASSWORD})'"

Note:

By default, the NWDAF_<microservice>_PASSWORD and CAP4C_<microservice>_PASSWORD values are the same as the passwords of the DB users created in Configure the Database. If you change a password in this step, you must also change it in the database before you continue with the installation.
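The export commands above repeat the same pod lookup for every value. The following sketch looks up the config server pod once and reuses it. config_server_pod and encrypt_value are hypothetical names. The pod name pattern, port 8888, and /encrypt endpoint are taken from the commands above. The output keeps the '{cipher}...' wrapping that those commands produce, including the surrounding single quotes.

```shell
#!/bin/sh
# Hypothetical helper: prints the name of the first Spring Cloud Config Server
# pod in ${K8_NAMESPACE}, using the same lookup as the export commands above.
config_server_pod() {
  kubectl -n "${K8_NAMESPACE}" get pods --no-headers -o custom-columns=":metadata.name" \
    | grep nwdaf-cap4c-spring-cloud-config-server | head -n 1
}

# Hypothetical helper: encrypts one plaintext value through the config
# server's /encrypt endpoint and prints it as '{cipher}<ciphertext>'.
encrypt_value() {
  pod="$1"
  plaintext="$2"
  ciphertext=$(kubectl -n "${K8_NAMESPACE}" exec "$pod" -- \
    curl -s localhost:8888/encrypt -d "$plaintext") || return 1
  [ -n "$ciphertext" ] || return 1
  printf "'{cipher}%s'" "$ciphertext"
}

# Example:
#   POD=$(config_server_pod)
#   export CAP4C_KAFKA_INGESTOR_DB_PASSWORD="$(encrypt_value "$POD" "$CAP4C_PASSWORD")"
#   export NWDAF_DB_PASSWORD="$(encrypt_value "$POD" ocn_nwdaf_user_passwd)"
```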

Configuring Dependencies

Run 02-prepare-dependencies.sh as follows:

cd nwdaf-cap4c-installer/scripts

sh 02-prepare-dependencies.sh

Installing CAP4C Images

Run install-applications.sh as follows:

cd ~/nwdaf-release-package/installer/cap4c-installer/scripts

sh install-applications.sh

To verify that all the services are up and running, run the following command:

kubectl get pods --namespace=ocn-nwdaf -o wide

Sample output:

NAME READY STATUS RESTARTS AGE

nwdaf-cap4c-reporting-service-deploy-59565b4b95-cfls9 1/1 Running 0 3m13s

nwdaf-cap4c-scheduler-service-deploy-67ddc89858-29fcr 1/1 Running 0 3m13s

nwdaf-cap4c-scheduler-service-deploy-67ddc89858-s2gr7 1/1 Running 0 3m13s

cap4c-model-controller-deploy-6469bbccd8-wghkm 1/1 Running 0 3m30s

cap4c-model-executor-deploy-579969f887-9tdd2 1/1 Running 0 3m29s

cap4c-stream-analytics-deploy-57c6556865-ddhks 1/1 Running 0 3m29s

cap4c-stream-analytics-deploy-57c6556865-gp2qs 1/1 Running 0 3m29s

cap4c-kafka-ingestor-deploy-596498b677-4bmj8 1/1 Running 0 3m38s

cap4c-kafka-ingestor-deploy-596498b677-xv8lc 1/1 Running 1 3m38s

Install OCNWDAF Images

Run 01-install-applications.sh, as follows:

cd ~/nwdaf-release-package/installer/nwdaf-installer/scripts

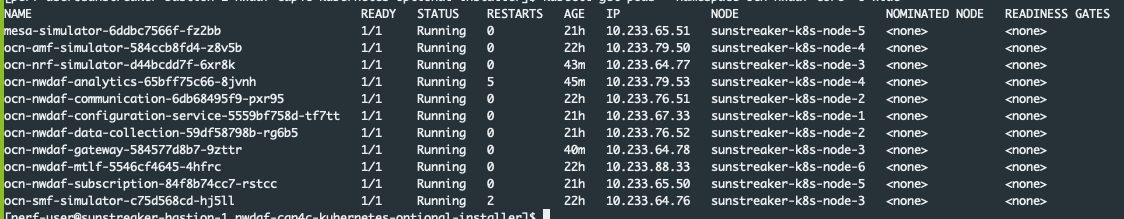

sh install-applications.shVerify if all the services are up and running, run the command:

kubectl get pods --namespace=ocn-nwdaf -o wideFigure 4-1 Sample Output

All micro services running in K8's and each one is a pod. Each microservice will only have one pod.

OCNWDAF Microservices Port Mapping

Table 4-1 Port Mapping

| Service | Port Type | IP Type | Network Type | Service Port | Container Port |
|---|---|---|---|---|---|
| ocn-amf-simulator | Internal | ClusterIP | Internal / K8s | 8085/TCP | 8080/TCP |
| mesa-simulator | Internal | ClusterIP | Internal / K8s | 8097/TCP | 8080/TCP |
| ocn-nrf-simulator | Internal | ClusterIP | Internal / K8s | 8084/TCP | 8080/TCP |
| ocn-nwdaf-analytics | Internal | ClusterIP | Internal / K8s | 8083/TCP | 8080/TCP |
| ocn-nwdaf-communication | Internal | ClusterIP | Internal / K8s | 8082/TCP | 8080/TCP |
| ocn-nwdaf-configuration-service | Internal | ClusterIP | Internal / K8s | 8096/TCP | 8080/TCP |
| ocn-nwdaf-data-collection | Internal | ClusterIP | Internal / K8s | 8081/TCP | 8080/TCP |
| ocn-nwdaf-gateway | Internal | ClusterIP | Internal / K8s | 8088/TCP | 8080/TCP |
| ocn-nwdaf-mtlf | Internal | ClusterIP | Internal / K8s | 8093/TCP | 8080/TCP |
| ocn-nwdaf-subscription | Internal | ClusterIP | Internal / K8s | 8087/TCP | 8080/TCP |
| ocn-smf-simulator | Internal | ClusterIP | Internal / K8s | 8094/TCP | 8080/TCP |
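All the services are ClusterIP services, so one way to reach a service from the bastion host is kubectl port-forward. The lookup below is a sketch built from Table 4-1. nwdaf_service_port is a hypothetical helper, and the namespace in the usage comment is an example.

```shell
#!/bin/sh
# Hypothetical lookup built from Table 4-1: prints the Kubernetes service port
# for an OCNWDAF service name, or fails for an unknown name.
nwdaf_service_port() {
  case "$1" in
    ocn-nwdaf-data-collection)       echo 8081 ;;
    ocn-nwdaf-communication)         echo 8082 ;;
    ocn-nwdaf-analytics)             echo 8083 ;;
    ocn-nrf-simulator)               echo 8084 ;;
    ocn-amf-simulator)               echo 8085 ;;
    ocn-nwdaf-subscription)          echo 8087 ;;
    ocn-nwdaf-gateway)               echo 8088 ;;
    ocn-nwdaf-mtlf)                  echo 8093 ;;
    ocn-smf-simulator)               echo 8094 ;;
    ocn-nwdaf-configuration-service) echo 8096 ;;
    mesa-simulator)                  echo 8097 ;;
    *) echo "unknown service: $1" >&2; return 1 ;;
  esac
}

# Example: forward local port 8088 to the gateway service port.
#   kubectl -n ocn-nwdaf port-forward svc/ocn-nwdaf-gateway "8088:$(nwdaf_service_port ocn-nwdaf-gateway)"
```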

4.2.3 Seeding Slice Load and Geographical Data for Simulation

To make the script easier to run, a Docker image is created with the csv_data and json_data embedded in it.

Verify whether the nwdaf-cap4c-initial-setup-script pod is running in the cluster. If it is not, create the values.yml file as follows:

global:
  projectName: nwdaf-cap4c-initial-setup-script
  imageName: nwdaf-cap4c/nwdaf-cap4c-initial-setup-script
  imageVersion: 2.22.4.0.0
config:
  env:
    APPLICATION_NAME: nwdaf-cap4c-initial-setup-script
    APPLICATION_HOME: /app

Run the following Helm command:

helm install nwdaf-cap4c-initial-setup-script https://artifacthub-phx.oci.oraclecorp.com/artifactory/ocnwdaf-helm/nwdaf-cap4c-deployment-template-22.0.0.tgz -f <path_values_file>/values.yml -n <namespace_name>

A pod is now running inside the Kubernetes cluster. To identify its name, run the following command:

$ kubectl get pods -n <namespace_name>

Sample output:

NAME READY STATUS RESTARTS AGE

nwdaf-cap4c-initial-setup-script-deploy-64b8fbcd9-2vqf9 1/1 Running 0 55s

Find the name and port of the configurator service, which are used in later steps. Run the following command:

$ kubectl get svc -n <namespace_name>

Sample output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ocn-nwdaf-configuration-service ClusterIP 10.233.57.212 <none> 8096/TCP 38d

Run the following command to access the container:

$ kubectl exec -it -n <namespace_name> nwdaf-cap4c-initial-setup-script-deploy-64b8fbcd9-2vqf9 -- bash

Note:

The host name is used instead of the IP address.

To load the slice data, run the following command:

$ python3 cells_loader.py -c /app-data/csv_data -f slices -i <configurator_service_name>.<namespace_name>:<configurator_service_port> -t 2

For example:

python3 cells_loader.py -c /app-data/csv_data -f slices -i ocn-nwdaf-configuration-service-internal:8096 -t 2

To begin loading the cells, run the following command:

$ python3 cells_loader.py -c /app-data/csv_data -j /app-data/json_data -f austin_cells -i <configurator_service_name>.<namespace_name>:<configurator_service_port> -t 1

For example:

python3 cells_loader.py -c /app-data/csv_data -j /app-data/json_data -f austin_cells -i ocn-nwdaf-configuration-service-internal:8096 -t 1

The -f parameter indicates the data source file to use, and -t defines the type of load.

Other optional parameters:

- -c / --csv_directory [full path]: The data directory that contains the .csv file specified with -f. It must be the full system path of the directory containing the .csv data file.

- -j / --json_directory [full path]: The data directory that contains the .json file specified with -f. It must be the full system path of the directory containing the .json data file.

- -i / --hostIp [host IP]: The IP address of the server where the Configurator service is installed. It must be reachable from the machine that runs the script, and it must include the port when appropriate.

- -p / --protocol [protocol]: The protocol used by the Configurator service. Valid values are "http" and "https". The default is "http".
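The -i value can be derived from the kubectl get svc output shown earlier in this section instead of being typed by hand. The sketch below assumes the default kubectl get svc columns (NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE) and uses the first port listed. configurator_endpoint is a hypothetical helper that runs on the bastion host, so you copy the printed value into the cells_loader.py command inside the container.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get svc` output on stdin and prints
# <service>.<namespace>:<port> for the named service. Fails if it is not found.
configurator_endpoint() {
  awk -v svc="$1" -v ns="$2" '
    $1 == svc {
      split($5, ports, ",")      # PORT(S) column, for example 8096/TCP
      split(ports[1], p, "/")    # drop the /TCP suffix
      split(p[1], q, ":")        # drop a NodePort suffix such as 80:30080
      print svc "." ns ":" q[1]
      found = 1
      exit
    }
    END { exit (found ? 0 : 1) }
  '
}

# Example:
#   kubectl get svc -n ocn-nwdaf | configurator_endpoint ocn-nwdaf-configuration-service ocn-nwdaf
```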

4.2.4 Verifying OCNWDAF Installation

This section describes how to verify if OCNWDAF is installed successfully.

To check the installation status, run the following command:

helm3 ls -n <release-namespace> --filter <release_name>

Example:

helm3 ls -n ocnwdaf --filter ocnwdaf

If the deployment is successful, the STATUS of the release is deployed.

To get the status of jobs and pods, run the following command:

kubectl get jobs,pods -n <release-namespace>

Example:

kubectl get jobs,pods -n ocnwdaf

If the deployment is successful, the status of all the pods is Running and Ready.

Run the following command to get the status of services:

kubectl get services -n <release-namespace>

Example:

kubectl get services -n ocnwdaf

Note:

Take a backup of the following files, which are required during disaster recovery:

- Updated ocnwdaf-custom-values.yaml file

- Updated Helm charts

- Secrets, certificates, and keys that are used during installation
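You can script the backup note above as a single archive step. This is a minimal sketch. backup_install_files is a hypothetical helper, and the file names in the usage comment are examples. A secrets file exported with kubectl get secrets -o yaml contains base64-encoded data, not encrypted data, so store the archive securely.

```shell
#!/bin/sh
# Hypothetical helper: bundles the given files and directories into one
# timestamped .tar.gz under DEST_DIR and prints the archive path.
backup_install_files() {
  if [ "$#" -lt 2 ]; then
    echo "usage: backup_install_files DEST_DIR FILE..." >&2
    return 1
  fi
  dest_dir="$1"
  shift
  # Refuse to create a partial backup if any input is missing.
  for f in "$@"; do
    if [ ! -e "$f" ]; then
      echo "missing: $f" >&2
      return 1
    fi
  done
  mkdir -p "$dest_dir" || return 1
  archive="$dest_dir/ocnwdaf-backup-$(date +%Y%m%d%H%M%S).tar.gz"
  tar -czf "$archive" "$@" || return 1
  echo "$archive"
}

# Example:
#   kubectl -n ocnwdaf get secrets -o yaml > ocnwdaf-secrets.yaml
#   backup_install_files ~/ocnwdaf-backups ocnwdaf-custom-values.yaml <helm-chart-dir> ocnwdaf-secrets.yaml
```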

4.2.5 Performing Helm Test

Helm Test is a feature that validates the successful installation of OCNWDAF and determines if the NF is ready to take traffic. The pods are tested based on the namespace and label selector configured for the helm test configurations.

Note:

Helm Test can be performed only with Helm 3.

Prerequisite: To perform the Helm test, you must complete the Helm test configurations under the "Global Parameters" section of the custom_values.yaml file. For more information on the parameters, see Global Parameters.

Run the following command to perform the helm test:

helm3 test <helm-release-name> -n <namespace>

where:

<helm-release-name> is the release name.

<namespace> is the deployment namespace where OCNWDAF is installed.
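To check the result in a script instead of reading it, you can filter the helm3 test output for the STATUS and Phase lines that appear in the sample output in this section. helm_test_passed is a hypothetical helper. The exit status of helm3 test itself is also worth checking.

```shell
#!/bin/sh
# Hypothetical check: reads `helm3 test` output on stdin and succeeds only if
# the release STATUS is deployed and every reported test Phase is Succeeded.
helm_test_passed() {
  awk '
    /^STATUS:/ { status = $2 }
    /^Phase:/  { phases++; if ($2 != "Succeeded") failed = 1 }
    END { exit ((status == "deployed" && phases > 0 && !failed) ? 0 : 1) }
  '
}

# Example:
#   helm3 test ocnwdaf -n ocnwdaf | helm_test_passed && echo "helm test passed"
```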

Example:

helm3 test ocnwdaf -n ocnwdaf

Sample output:

NAME: ocnwdaf

LAST DEPLOYED: Mon Nov 14 11:01:24 2022

NAMESPACE: ocnwdaf

STATUS: deployed

REVISION: 1

TEST SUITE: ocnwdaf-test

Last Started: Mon Nov 14 11:01:45 2022

Last Completed: Mon Nov 14 11:01:53 2022

Phase: Succeeded

NOTES:

# Copyright 2022 (C), Oracle and/or its affiliates. All rights reserved

4.2.6 Configuring OCNWDAF GUI

This section describes how to configure the Oracle Communications Network Data Analytics Function (OCNWDAF) GUI using the following steps:

Configure OCNWDAF GUI in CNC Console

Prerequisite: To configure OCNWDAF GUI in CNC Console, you must have CNC Console installed. For information on how to install CNC Console, refer to Oracle Communications Cloud Native Configuration Console Installation, Upgrade and Fault Recovery Guide.

Before installing CNC Console, ensure that the instance parameters are updated in the occncc_custom_values.yaml file.

If CNC Console is already installed, ensure that all the parameters are updated in the occncc_custom_values.yaml file. For more information, refer to Oracle Communications Cloud Native Configuration Console Installation, Upgrade and Fault Recovery Guide.

Access OCNWDAF GUI

To access OCNWDAF GUI, follow the procedure mentioned in the "Accessing CNC Console" section of Oracle Communications Cloud Native Configuration Console Installation, Upgrade and Fault Recovery Guide.