4 Troubleshooting OCNWDAF

This chapter provides information on troubleshooting common errors that can be encountered during the preinstallation, installation, upgrade, and rollback procedures of OCNWDAF.

4.1 Generic Checklist

The following sections provide a generic checklist for troubleshooting tips.

Deployment related tips

Perform the following checks after the deployment:

- Are OCNWDAF deployment, pods, and services created?

- Are OCNWDAF deployment, pods, and services running and available?

Run the following command:

# kubectl -n <namespace> get deployments,pods,svc

Inspect the output and check the following columns:

- AVAILABLE of deployment

- READY, STATUS, and RESTARTS of a pod

- PORT(S) of service

- Check if the microservices can access each other via REST interface.

Run the following command:

# kubectl -n <namespace> exec <pod name> -- curl <uri>
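The pod checks above can be partly automated. The following is a minimal sketch, assuming the default kubectl get pods column layout (NAME, READY, STATUS, RESTARTS, AGE) with a header line; unhealthy_pods is a hypothetical helper name, not an OCNWDAF tool.

```shell
# unhealthy_pods (hypothetical helper): reads `kubectl get pods` output on
# stdin and prints NAME, READY, and STATUS for each pod that is not Running or
# whose containers are not all ready. Completed pods (finished jobs) are
# skipped. Assumes the default column layout with a header line.
unhealthy_pods() {
  awk 'NR > 1 {
    split($2, ready, "/")            # READY column, for example 0/1
    if ($3 == "Completed") next      # finished hook or test jobs are expected
    if ($3 != "Running" || ready[1] != ready[2]) print $1, $2, $3
  }'
}
```

Usage: kubectl -n <namespace> get pods | unhealthy_pods. Any pod it prints is a candidate for kubectl describe pod and a look at its logs.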

Application related tips

Run the following command to check the application logs and look for exceptions:

# kubectl -n <namespace> logs -f <pod name>

You can use '-f' to follow the logs, or use 'grep' to search for a specific pattern in the log output.
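To get an overview of the exceptions in a log before reading it line by line, the log can be summarized by exception class. This is a sketch that assumes Java-style class names ending in Exception or Error appear in the log lines; summarize_exceptions is a hypothetical helper name.

```shell
# summarize_exceptions (hypothetical helper): reads log lines on stdin, extracts
# Java-style class names ending in Exception or Error, and prints each distinct
# name with the number of occurrences, most frequent first.
summarize_exceptions() {
  grep -oE '[A-Za-z_][A-Za-z0-9_.]*(Exception|Error)' |
    sort | uniq -c | sort -rn
}
```

Usage: kubectl -n <namespace> logs <pod name> | summarize_exceptions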

4.2 Deployment Related Issues

This section describes the most common deployment related issues and their resolution steps. It is recommended to perform the resolution steps provided in this guide. If the issue still persists, then contact My Oracle Support.

4.2.1 Installation

This section describes the most common installation related issues and their resolution steps.

4.2.1.1 Pod Creation Failure

A pod creation can fail due to various reasons. Some of the possible scenarios are as follows:

Verifying Pod Image Correctness

To verify pod image:

- Check whether any of the pods is in the ImagePullBackOff state.

- Check whether the image names used for all the pods are correct. Verify the image names and versions in the OCNWDAF custom values file. For more information about the custom values file, see Oracle Communications Networks Data Analytics Function Installation and Fault Recovery Guide.
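When a pod is in the ImagePullBackOff or ErrImagePull state, its events record the exact image reference that could not be pulled, which can then be compared with the names and tags in the custom values file. A minimal sketch, assuming the kubelet event text has the form Failed to pull image "<image>": ...; failed_images is a hypothetical helper name.

```shell
# failed_images (hypothetical helper): reads `kubectl describe pod` output on
# stdin and prints each distinct image reference from events of the form
#   Failed to pull image "<image>": ...
failed_images() {
  sed -n 's/.*Failed to pull image "\([^"]*\)".*/\1/p' | sort -u
}
```

Usage: kubectl -n <ocnwdaf_namespace> describe pods | failed_images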

Verifying Resource Allocation Failure

To verify any resource allocation failure:

- Run the following command to verify whether any pod is in the Pending state:

kubectl get pods -n <ocnwdaf_namespace>

- Run the following command to describe the pending pod:

kubectl describe pod <pod_name> -n <ocnwdaf_namespace>

- Verify whether any warning about insufficient CPU exists in the describe output of the respective pod. If it exists, there are insufficient CPUs for the pods to start. Address this hardware issue.
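The scheduler reports missing resources in a FailedScheduling event, typically worded like 0/3 nodes are available: 3 Insufficient cpu. The sketch below, which assumes that wording, extracts the resource names from the describe output; insufficient_resources is a hypothetical helper name.

```shell
# insufficient_resources (hypothetical helper): reads `kubectl describe pod`
# output on stdin and prints each resource that the scheduler reported as
# insufficient, for example cpu or memory, once. The pattern accepts dotted
# names such as nvidia.com/gpu but drops a sentence-ending period.
insufficient_resources() {
  grep -oE 'Insufficient [A-Za-z0-9/_-]+([.][A-Za-z0-9/_-]+)*' |
    awk '{print $2}' | sort -u
}
```

Usage: kubectl describe pod <pod_name> -n <ocnwdaf_namespace> | insufficient_resources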

Verifying Resource Allocation Issues on Webscale Environment

The Webscale environment has OpenShift installed. The following cases can occur:

- Pods do not scale after you run the installation command, and the installation fails with a timeout error. In this case, check for preinstall hook failures. Run the oc get job command to list the jobs. Describe the job for which the pods are not getting scaled and check whether there are quota limit exceeded errors for CPU or memory.

- Any of the microservice pods do not scale after the hooks complete. In this case, run the oc get rs command to get the list of ReplicaSets created for the NF deployment. Then, describe the ReplicaSet for which the pods are not getting scaled and check for resource quota limit exceeded errors for CPU or memory.

- The installation times out after all the microservice pods are scaled with the expected number of replicas. In this case, check for post-install hook failures. Run the oc get job command to get the post-install jobs, describe the job for which the pods are not getting scaled, and check whether there are quota limit exceeded errors for CPU or memory.

- Resource quotas are exceeded.
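On OpenShift, a pod creation blocked by a ResourceQuota usually appears in the job or ReplicaSet events as a message containing exceeded quota: followed by the quota name and the requested, used, and limited amounts. A minimal sketch, assuming that wording; quota_errors is a hypothetical helper name.

```shell
# quota_errors (hypothetical helper): reads `oc describe job` or
# `oc describe rs` output on stdin and prints each distinct
# "exceeded quota: ..." message, which names the ResourceQuota and the
# requested, used, and limited amounts.
quota_errors() {
  grep -o 'exceeded quota: .*' | sort -u
}
```

Usage: oc describe job <job_name> -n <namespace> | quota_errors, or oc describe rs <replicaset_name> -n <namespace> | quota_errors. To compare the current usage with the hard limits of each quota in the namespace, run oc describe quota -n <namespace>.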

Verifying Resources Assigned to Previous Installation

If the uninstall procedure of a previous installation was not successful and the uninstallation was forced, some resources might still be assigned to the previous installation. To detect this, run the following command:

kubectl -n <namespace> describe pod <podname>

While searching for events, if you detect messages similar to the following message, resources are still assigned to the previous installation and should be purged:

0/n nodes are available: n pod has unbound immediate PersistentVolumeClaims

4.2.1.2 Pod Startup Failure
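Pods that wait on a dependency, as in the following cases, show an Init status such as Init:0/1 in the STATUS column of kubectl get pods. A minimal sketch for listing them, assuming the default column layout with a header line; init_pods is a hypothetical helper name.

```shell
# init_pods (hypothetical helper): reads `kubectl get pods` output on stdin and
# prints NAME and STATUS for pods whose STATUS starts with "Init:", that is,
# pods whose init containers have not completed (for example, Init:0/1 or
# Init:CrashLoopBackOff). Assumes the default column layout with a header line.
init_pods() {
  awk 'NR > 1 && $3 ~ /^Init:/ {print $1, $3}'
}
```

Usage: kubectl -n <namespace> get pods | init_pods. To read the log of a specific init container, run kubectl -n <namespace> logs <pod_name> -c <init_container_name>; the init container names can be listed with kubectl -n <namespace> get pod <pod_name> -o jsonpath='{.spec.initContainers[*].name}'.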

- If the dr-service, diameter-proxy, and diam-gateway services are stuck in the Init state, the config-server might not be up yet. A sample log on these services is as follows:

"Config Server is Not yet Up, Wait For config server to be up."

To resolve this, check why the config-server is not up or, if the config-server is not required, disable it.

- If the notify and on-demand migration services are stuck in the Init state, the dr-service might not be up yet. A sample log on these services is as follows:

"DR Service is Not yet Up, Wait For dr service to be up."

To resolve this, check for failures on the dr-service.

4.2.1.3 NRF Registration Failure

- Confirm from the nrf-client-service pod whether the registration was successful.

- Check the ocnwdaf-nrf-client-nfmanagement logs. If the log contains "OCNWDAF is Unregistered", then:

- Check whether all the services mentioned under allorudr/slf (depending on the OCNWDAF mode) in the installation file have the same spelling as the service names and are enabled.

- Once all services are up, OCNWDAF must register with NRF.

- If you see a log for SERVICE_UNAVAILABLE(503), check whether the primary and secondary NRF configurations (primaryNrfApiRoot/secondaryNrfApiRoot) are correct and whether these NRFs are up and running.
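The two log patterns above can be checked in one pass. A minimal sketch that only looks for the exact strings quoted in this section; nrf_log_hints and the hint texts it prints are hypothetical.

```shell
# nrf_log_hints (hypothetical helper): reads NRF client log lines on stdin and
# prints one hint per failure pattern described in this section. The whole log
# is held in memory, so pipe in a bounded log (for example, with --since).
nrf_log_hints() {
  log=$(cat)
  case $log in
    *"OCNWDAF is Unregistered"*)
      echo "unregistered: verify that the required services are enabled and correctly named" ;;
  esac
  case $log in
    *SERVICE_UNAVAILABLE*)
      echo "nrf-503: verify primaryNrfApiRoot/secondaryNrfApiRoot and that the NRFs are up" ;;
  esac
}
```

Usage: kubectl -n <namespace> logs <nrf_client_pod> | nrf_log_hints, where <nrf_client_pod> is the pod that writes the ocnwdaf-nrf-client-nfmanagement logs.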

4.2.1.4 Helm Install Failure

This section describes various scenarios in which helm install might fail:

4.2.1.4.1 Incorrect image name in ocnwdaf-custom-values file

Problem

helm install might fail if an incorrect image name is provided in the ocnwdaf-custom-values.yaml file.

Error Code/Error Message

When kubectl get pods -n <ocnwdaf_namespace> is performed, the status of the pods might be ImagePullBackOff or ErrImagePull.

For example:

NAME READY STATUS RESTARTS AGE
cap4c-model-controller-deploy-779cbdcf8f-wscf9 1/1 Running 0 28d
cap4c-model-executor-deploy-68b498c765-rpwz8 0/1 ImagePullBackOff 0 27d
cap4c-stream-analytics-deploy-744878569-xn4wb 0/1 ImagePullBackOff 0 27d
kafka-sts-0 1/1 Running 1 95d
kafka-sts-1 1/1 Running 1 95d
kafka-sts-2 1/1 Running 1 95d
keycloak-pod 1/1 Running 0 3d19h
mysql-pod 1/1 Running 1 95d

Solution

- Check whether the ocnwdaf-custom-values.yaml file has the release-specific image names and tags.

For OCNWDAF image details, see "Customizing OCNWDAF" in Oracle Communications Cloud Native Core Networks Data Analytics Function Installation Guide.

- Edit the ocnwdaf-custom-values file if the release-specific image names and tags must be modified:

vi ocnwdaf-custom-values-<release-number>

- Save the file.

- Run the following command to delete the deployment:

helm delete --purge <release_name>

Sample command:

helm delete --purge ocnwdaf

With Helm 3, where the --purge option no longer exists, use helm uninstall <release_name> -n <ocnwdaf_namespace> instead.

- To verify the deletion, see the "Verifying Uninstallation" section in Oracle Communications Networks Data Analytics Function Installation and Fault Recovery Guide.

- Run the helm install command. For the helm install command, see the "Customizing OCNWDAF" section in Oracle Communications Networks Data Analytics Function Installation and Fault Recovery Guide.

- Run the following command to verify that all the pods are in the Running state:

kubectl get pods -n <ocnwdaf_namespace>

For example:

$ kubectl get pods -n ocnwdaf
NAME READY STATUS RESTARTS AGE
cap4c-model-controller-deploy-b5f8b48d7-6h58w 1/1 Running 0 21h
cap4c-model-executor-deploy-575b448467-j8tdd 1/1 Running 1 (4d ago) 6d15h
cap4c-stream-analytics-deploy-79ffd7fb65-5lzr5 1/1 Running 0 17h
kafka-sts-0 1/1 Running 0 60d
keycloak-pod 1/1 Running 0 3d17h
mysql-pod 1/1 Running 0 60d
nwdaf-cap4c-kafka-ui-pod 1/1 Running 0 57d
nwdaf-cap4c-scheduler-service-deploy-548c7948d4-64s85 1/1 Running 0 6d14h
nwdaf-cap4c-spring-cloud-config-server-deploy-565dd8f7d6-cxdwh 1/1 Running 0 19d
nwdaf-portal-deploy-55488c885-rgq77 1/1 Running 0 20h
nwdaf-portal-service-deploy-8dc89dd9f-z2964 1/1 Running 0 20h
ocn-nwdaf-analytics-info-deploy-f4585c4b-zbf5d 1/1 Running 0 3d16h
ocn-nwdaf-communication-service-deploy-7bf75fbb7c-4qx9s 1/1 Running 3 (3d15h ago) 3d15h
ocn-nwdaf-configuration-service-deploy-d87b66c55-7ttcc 1/1 Running 0 3d16h
ocn-nwdaf-data-collection-service-deploy-5ffcb86488-l9r9l 1/1 Running 0 24h
ocn-nwdaf-gateway-service-deploy-654cbc6475-h95tw 1/1 Running 0 3d15h
ocn-nwdaf-mtlf-service-deploy-545c8b445d-kqzfz 1/1 Running 0 3d15h
ocn-nwdaf-subscription-service-deploy-f7959fc76-wfcxm 1/1 Running 0 19h
redis-master-pod 1/1 Running 0 60d
redis-slave-sts-0 1/1 Running 0 60d
zookeper-sts-0 1/1 Running 0 60d

4.2.1.4.2 Docker registry is configured incorrectly

Problem

helm install might fail if the docker registry is not configured in all primary and secondary nodes.

Error Code/Error Message

When kubectl get pods -n <ocnwdaf_namespace> is performed, the status of the pods might be ImagePullBackOff or ErrImagePull.

For example:

$ kubectl get pods -n ocnwdaf

Solution

Configure docker registry on all primary and secondary nodes. For more information on configuring the docker registry, see Oracle Communications Cloud Native Environment Installation Guide.

4.2.1.4.3 Continuous Restart of Pods

Problem

helm install might fail if the MySQL primary and secondary hosts are not configured properly in ocnwdaf-custom-values.yaml.

Error Code/Error Message

When kubectl get pods -n <ocnwdaf_namespace> is performed, the restart count of the pods increases continuously.

For example:

$ kubectl get pods -n ocnwdaf

Solution

The MySQL server(s) may not be configured properly according to the preinstallation steps. For configuring MySQL servers, see the "Configuring Database, Creating Users, and Granting Permissions" section in Oracle Communications Cloud Native Core Networks Data Analytics Function Installation Guide.
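Running kubectl get pods a few times and comparing the RESTARTS column shows whether the count keeps growing. A minimal sketch that prints pods above a restart threshold, assuming the default column layout; restarting_pods and its default threshold of 3 are hypothetical choices.

```shell
# restarting_pods (hypothetical helper): reads `kubectl get pods` output on
# stdin and prints NAME and RESTARTS for pods restarted more than $1 times
# (default 3). Newer kubectl versions append "(<age> ago)" after the count;
# awk splits that into extra fields, so $4 is still the restart count.
restarting_pods() {
  awk -v max="${1:-3}" 'NR > 1 && $4 + 0 > max + 0 {print $1, $4}'
}
```

Usage: kubectl -n <ocnwdaf_namespace> get pods | restarting_pods 3. For a pod that keeps restarting, kubectl -n <ocnwdaf_namespace> logs <pod_name> --previous shows the log of the previous, crashed container, which may reveal database connection errors.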

4.2.1.5 Custom Value File Parse Failure

This section describes the failure to parse the ocnwdaf-custom-values.yaml file.

Problem

The ocnwdaf-custom-values-x.x.x.yaml file cannot be parsed while running helm install.

Error Code/Error Message

Error: failed to parse ocnwdaf-custom-values-x.x.x.yaml: error converting YAML to JSON: yaml

Symptom

If the aforementioned error is received after creating the ocnwdaf-custom-values-x.x.x.yaml file, the file was not created properly. The tree structure may not have been followed, or the file may contain tab characters.
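YAML does not allow tab characters for indentation, so locating tabs is a quick first check. A minimal sketch; yaml_tab_lines is a hypothetical helper name.

```shell
# yaml_tab_lines (hypothetical helper): prints the line numbers of file $1
# that contain a tab character.
yaml_tab_lines() {
  tab=$(printf '\t')
  grep -n "$tab" "$1" | cut -d: -f1
}
```

Usage: yaml_tab_lines ocnwdaf-custom-values-x.x.x.yaml prints the line numbers to fix; replace each tab with spaces that match the surrounding indentation.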

Solution

- Download the latest OCNWDAF templates zip file from MOS. For more information, see the "Downloading OCNWDAF Package" section in Oracle Communications Cloud Native Core Networks Data Analytics Function Installation Guide.

- Follow the steps mentioned in the "Installation Tasks" section in Oracle Communications Cloud Native Core Networks Data Analytics Function Installation Guide.

4.2.2 Post Installation

4.2.2.1 Helm Test Error Scenario

The following steps help identify error scenarios using helm test:

- Run the following command to get the Helm Test pod name:

kubectl get pods -n <deployment-namespace>

- When a helm test is performed, a new Helm Test pod is created. Check for the Helm Test pod that is in an error state.

- Get the logs using the following command:

kubectl logs <podname> -n <namespace>

Example:

kubectl logs <helm_test_pod> -n ocnwdaf

For further assistance, collect the logs and contact My Oracle Support (MOS).

4.2.2.2 Purge Kafka Topics for New Installation

If Kafka topics contained messages in a previous OCNWDAF installation, the topics should be retained in the new installation, but the messages should not. Follow the procedure below to purge the Kafka topics:

- Connect to the Kafka pod in your Kubernetes environment by running the following command:

kubectl -n <namespace> exec -it <podname> -- bash

- Move to the directory that contains the binary files:

cd kafka_2.13-3.1.0/bin/

- Obtain the list of topics by running the following command:

kafka-topics.sh --list --bootstrap-server localhost:9092

- Delete each topic (repeat this step for each topic):

kafka-topics.sh --bootstrap-server localhost:9092 --delete --topic <topicname>

On completion of this procedure, the Kafka topics exist, but the messages do not exist.
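The per-topic delete step can be looped over the output of the list command. A minimal sketch to paste into the bash session inside the Kafka pod, assuming kafka-topics.sh can be invoked as in the commands above (set KAFKA_TOPICS, for example to ./kafka-topics.sh, if it is not on the PATH); purge_topics, KAFKA_TOPICS, and BOOTSTRAP are hypothetical names. Topics whose names begin with __, such as __consumer_offsets, are skipped, because Kafka refuses to delete its internal topics.

```shell
# purge_topics (hypothetical helper): reads topic names, one per line, on stdin
# and deletes each topic with kafka-topics.sh. Blank lines and names starting
# with "__" (Kafka internal topics such as __consumer_offsets) are skipped.
# KAFKA_TOPICS and BOOTSTRAP are hypothetical overrides; the defaults match the
# commands in this section.
purge_topics() {
  kafka_topics=${KAFKA_TOPICS:-kafka-topics.sh}
  bootstrap=${BOOTSTRAP:-localhost:9092}
  while IFS= read -r topic; do
    case $topic in
      '' | __*) continue ;;
    esac
    echo "Deleting topic: $topic"
    # </dev/null keeps the command from consuming the remaining topic names.
    "$kafka_topics" --bootstrap-server "$bootstrap" --delete --topic "$topic" </dev/null
  done
}
```

Usage: kafka-topics.sh --list --bootstrap-server localhost:9092 | purge_topics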

Note:

After every installation, it is recommended to purge the topics before uninstalling.

4.3 Database Related Issues

This section describes the most common database related issues and their resolution steps. It is recommended to perform the resolution steps provided in this guide. If the issue still persists, then contact My Oracle Support.

4.3.1 Debugging MySQL DB Errors

If you are facing issues related to subscription creation, follow the procedure below to log in to the MySQL DB:

Note:

Once the MySQL cluster is created, the cndbtier_install container generates the password and stores it in the occne-mysqlndb-root-secret secret.

- Retrieve the MySQL root password from the occne-mysqlndb-root-secret secret by running the following command:

$ kubectl -n occne-cndbtier get secret occne-mysqlndb-root-secret -o jsonpath='{.data}'
map[mysql_root_password:TmV4dEdlbkNuZQ==]

- Decode the encoded output received in the previous step to get the actual password:

$ echo TmV4dEdlbkNuZQ== | base64 --decode
NextGenCne

- Log in to the MySQL pod by running the following command:

$ kubectl -n occnepsa exec -it ndbmysqld-0 -- bash

Note:

The default container name is mysqlndbcluster. Run the following command to see all the containers in this pod:

kubectl describe pod/ndbmysqld-0 -n occnepsa

- Log in using the MySQL client as the root user by running the following command:

$ mysql -h 127.0.0.1 -uroot -p

- Enter the root password for the MySQL root user decoded in the previous steps.

- To debug each microservice, perform the following steps:

- For the ocn-nwdaf-subscription service, run the following SQL commands:

use <dbName>;
use nwdaf_subscription;
select * from nwdaf_subscription;
select * from amf_ue_event_subscription;
select * from smf_ue_event_subscription;

- For the ocn-nrf-simulator service, run the following SQL commands:

use <dbName>;
use nrf;
select * from profile;

- For the ocn-smf-simulator service, run the following SQL commands:

use <dbName>;
use nrf;
select * from smf_event_subscription;

- For the ocn-amf-simulator service, run the following SQL commands:

use <dbName>;
use nrf;
select * from amf_event_subscription;

- For the ocn-nwdaf-data-collection service, run the following SQL commands:

use <dbName>;
use nwdaf_data_collection;
select * from amf_event_notification_report_list;
select * from amf_ue_event_report;
select * from cap4c_ue_notification;
select * from slice_load_level_notification;
select * from smf_event_notification_report_list;
select * from smf_ue_event_report;
select * from ue_mobility_notification;

- For the ocn-nwdaf-configuration-service service, run the following SQL commands:

use <dbName>;
use nwdaf_configuration_service;
select * from slice;
select * from tracking_area;
select * from slice_tracking_area;
select * from cell;

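Instead of running select * on every table, a row count per table gives a faster first impression of which tables are being populated. A minimal sketch that only generates the SQL; count_rows_sql is a hypothetical helper name, and the database and table names passed to it are the ones listed above.

```shell
# count_rows_sql (hypothetical helper): prints a SQL script that switches to
# database $1 and selects the row count of every table named in the remaining
# arguments.
count_rows_sql() {
  db=$1
  shift
  printf 'USE %s;\n' "$db"
  for table in "$@"; do
    printf "SELECT '%s' AS table_name, COUNT(*) AS row_count FROM %s;\n" "$table" "$table"
  done
}
```

Usage inside the ndbmysqld-0 pod: count_rows_sql nwdaf_data_collection amf_ue_event_report smf_ue_event_report ue_mobility_notification | mysql -h 127.0.0.1 -uroot -p, then enter the root password when prompted.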

4.4 Apache Kafka Related Issues

To debug issues related to Apache Kafka pipelines (for example, when messages cannot be read from or written to the pipeline), perform the following steps:

- Get the Kafka pods by running the following command:

kubectl -n performance-ns get pods -o wide | grep "kafka"

- Select any pod and access it by running the following command:

kubectl -n performance-ns exec -it kafka-sts-0 -- bash

- Move to the directory containing the binary files by running the following command:

cd kafka_2.13-3.1.0/bin/

- Obtain the list of topics by running the following command:

kafka-topics.sh --list --bootstrap-server localhost:9092

- For each topic, run the following command:

kafka-console-consumer.sh --bootstrap-server 127.0.0.1:9092 --topic <topic-name>
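By default, kafka-console-consumer.sh prints only messages that arrive after it starts and runs until interrupted. The sketch below instead reads a few messages from the beginning of each topic and moves on after a timeout, using the --from-beginning, --max-messages, and --timeout-ms options of the console consumer. It assumes the script can be invoked as in the commands above; peek_topics, KAFKA_CONSOLE_CONSUMER, and BOOTSTRAP are hypothetical names.

```shell
# peek_topics (hypothetical helper): reads topic names on stdin and, for each
# one, prints up to $1 messages (default 5) from the beginning of the topic.
# The consumer gives up on a topic after 10 seconds without a new message.
# KAFKA_CONSOLE_CONSUMER and BOOTSTRAP are hypothetical overrides.
peek_topics() {
  consumer=${KAFKA_CONSOLE_CONSUMER:-kafka-console-consumer.sh}
  bootstrap=${BOOTSTRAP:-127.0.0.1:9092}
  max=${1:-5}
  while IFS= read -r topic; do
    [ -n "$topic" ] || continue
    echo "=== $topic ==="
    "$consumer" --bootstrap-server "$bootstrap" --topic "$topic" \
      --from-beginning --max-messages "$max" --timeout-ms 10000 </dev/null
  done
}
```

Usage: kafka-topics.sh --list --bootstrap-server localhost:9092 | peek_topics 5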

4.5 CAP4C Related Issues

The following services are related to CAP4C:

- cap4c-model-controller

- cap4c-model-executor

- kafka

- mysql-pod

To obtain more information on the service pods, follow the steps listed below:

- Each of these services is deployed as a pod in Kubernetes. To find the status of the pods in Kubernetes, run the following command:

$ kubectl get pods -n <namespace>

Sample output:

NAME READY STATUS RESTARTS AGE
cap4c-model-controller-deploy-779cbdcf8f-w2pfh 1/1 Running 0 4d8h
cap4c-model-executor-deploy-f9c96db54-ttnhd 1/1 Running 0 4d5h
cap4c-stream-analytics-deploy-744878569-5xr2w 1/1 Running 0 4d8h

- To verify the pod information, print the details of each pod:

$ kubectl describe pod cap4c-model-controller-deploy-779cbdcf8f-w2pfh -n <namespace>

Sample output:

Name:         cap4c-model-controller-deploy-779cbdcf8f-w2pfh
Namespace:    performance-ns
Priority:     0
Node:         sunstreaker-k8s-node-2/192.168.200.197
Start Time:   Fri, 26 Aug 2022 15:31:39 +0000
Labels:       app=cap4c-model-controller
              pod-template-hash=779cbdcf8f
Annotations:  cni.projectcalico.org/containerID: 480ca581a828184ccf6fabf7ec7cfb68920624f48d57148f6d93db4512bc5335
              cni.projectcalico.org/podIP: 10.233.76.134/32
              cni.projectcalico.org/podIPs: 10.233.76.134/32
              kubernetes.io/psp: restricted
              seccomp.security.alpha.kubernetes.io/pod: runtime/default
Status:       Running

- To list the service configuration for the pods, run the following command:

$ kubectl get svc -n <namespace>

Sample output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
cap4c-executor ClusterIP 10.233.5.218 <none> 8888:32767/TCP 4d8h
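The status check can be narrowed to the services listed at the start of this section. A minimal sketch, assuming the default kubectl get pods column layout and that each pod name starts with the service name, as in the sample output; check_cap4c_pods is a hypothetical helper name.

```shell
# check_cap4c_pods (hypothetical helper): reads `kubectl get pods` output on
# stdin and, for each CAP4C-related service listed in this section, reports
# whether at least one pod whose name starts with the service name is Running.
check_cap4c_pods() {
  pods=$(awk 'NR > 1 {print $1, $3}')   # keep only NAME and STATUS
  for svc in cap4c-model-controller cap4c-model-executor kafka mysql-pod; do
    if printf '%s\n' "$pods" |
      awk -v s="$svc" 'index($1, s) == 1 && $2 == "Running" {found = 1}
                       END {exit (found ? 0 : 1)}'; then
      echo "$svc: Running"
    else
      echo "$svc: NOT Running"
    fi
  done
}
```

Usage: kubectl get pods -n <namespace> | check_cap4c_pods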

4.6 Service Related Issues

This section describes the most common service related issues and their resolution steps. It is recommended to perform the resolution steps provided in this guide. If the issue still persists, then contact My Oracle Support.

4.6.1 Errors from Microservices

The OCNWDAF microservices are listed below:

- ocn-nwdaf-subscription

- ocn-nwdaf-data-collection

- ocn-nwdaf-communication

- ocn-nwdaf-configuration-service

- ocn-nwdaf-analytics

- ocn-nwdaf-gateway

- ocn-nwdaf-mtlf

- ocn-nrf-simulator

- ocn-smf-simulator

- ocn-amf-simulator

- mesa-simulator

To debug microservice-related errors, obtain the logs of the pods that are facing issues by running the following commands for each microservice:

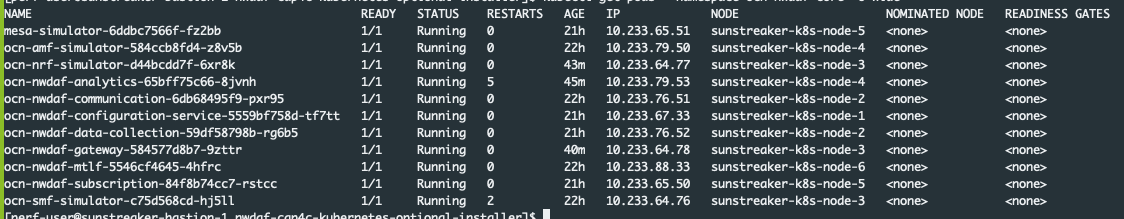

- Obtain the pod information by running the following command:

kubectl get pods -n <nameSpace> -o wide

Sample output:

Figure 4-1 Sample Output

- Obtain the log information for the pods by running the following command:

kubectl logs <podName> -n <nameSpace>

For example:

kubectl logs ocn-nwdaf-subscription-84f8b74cc7-d7lk9 -n performance-ns
kubectl logs ocn-nwdaf-data-collection-57b948989c-xs7dq -n performance-ns
kubectl logs ocn-nwdaf-gateway-584577d8b7-f2xvd -n performance-ns
kubectl logs ocn-amf-simulator-584ccb8fd4-pcdn6 -n performance-ns
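The per-pod log commands can be combined into one loop that saves a log file for each matching pod, which is convenient when collecting logs for My Oracle Support. A minimal sketch; collect_logs, its default pattern ocn-, the default output directory, and the KUBECTL override are hypothetical choices.

```shell
# collect_logs (hypothetical helper): saves the log of every pod in namespace
# $1 whose name contains $2 (default ocn-) to <dir>/<pod>.log, where <dir> is
# $3 (default ./nwdaf-logs). KUBECTL is a hypothetical override for kubectl.
collect_logs() {
  ns=$1
  pattern=${2:-ocn-}
  outdir=${3:-./nwdaf-logs}
  kubectl_cmd=${KUBECTL:-kubectl}
  mkdir -p "$outdir"
  "$kubectl_cmd" get pods -n "$ns" --no-headers -o custom-columns=NAME:.metadata.name |
    grep -- "$pattern" |
    while IFS= read -r pod; do
      "$kubectl_cmd" logs "$pod" -n "$ns" >"$outdir/$pod.log" 2>&1 </dev/null
      echo "saved $outdir/$pod.log"
    done
}
```

Usage: collect_logs performance-ns ocn-nwdaf- ./nwdaf-logs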