3 OCNWDAF Features

This section explains the OCNWDAF features.

3.1 Capex Optimization

In a network, subscribers are distributed unevenly, so some areas have a higher density of subscribers than others. Areas with a high density of subscribers require additional resources. The Capex Optimization service identifies such regions based on information about preferred subscribers and network activity in each area. To determine severely congested tracking areas and derive Capex metrics about the preferred subscribers in a group for a tracking area, OCNWDAF collects information about the active UEs per cell, the aggregated tracking area, the user plane resources serving the tracking area, and, optionally, the load on the NFs (UPF, AMF, or SMF) serving the tracking area.
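The following Python sketch illustrates the kind of aggregation described above: per-cell active UE counts are rolled up to the tracking area, and a tracking area is flagged as congested when its UE count exceeds a threshold and, if NF load is reported, that load also exceeds a threshold. The input format, the function name, and both thresholds are hypothetical; this is not the OCNWDAF Capex algorithm.

```python
from collections import defaultdict


def congested_tracking_areas(cell_ue_counts, nf_load_pct, ue_threshold, load_threshold):
    """Return tracking areas whose aggregated active UEs (and NF load, if known) exceed thresholds.

    cell_ue_counts: iterable of (tracking_area, cell_id, active_ues) tuples (hypothetical format).
    nf_load_pct: dict of tracking_area -> load (0-100) of the NFs serving it; entries are optional.
    """
    # Roll active UE counts up from the cell level to the tracking area level.
    ues_per_ta = defaultdict(int)
    for tracking_area, _cell_id, active_ues in cell_ue_counts:
        ues_per_ta[tracking_area] += active_ues

    congested = []
    for tracking_area, total_ues in sorted(ues_per_ta.items()):
        load = nf_load_pct.get(tracking_area)
        # NF load is optional: when it is not reported, decide on UE density alone.
        nf_overloaded = load is None or load > load_threshold
        if total_ues > ue_threshold and nf_overloaded:
            congested.append(tracking_area)
    return congested


cells = [("TA1", "c1", 400), ("TA1", "c2", 700), ("TA2", "c3", 300), ("TA3", "c4", 1200)]
print(congested_tracking_areas(cells, {"TA1": 85, "TA2": 95}, ue_threshold=1000, load_threshold=80))
# ['TA1', 'TA3']
```

TA3 is flagged on UE density alone because no NF load is reported for it, which mirrors the optional NF load input described above.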

For information on the Capex Optimization microservice, see unresolvable-reference.html#GUID-29F0DDCB-79A9-4503-98AF-91B834454F2B.

Users can create Capex groups and view Capex Analytics on the OCNWDAF GUI. For more information, see unresolvable-reference.html#GUID-DCE0061F-A8B1-4228-B9A0-1ECEBEF21F4E.

3.2 IPv6 Support

OCNWDAF supports the Internet Protocol version 6 (IPv6) standard. IPv6 simplifies address configuration through stateless address autoconfiguration: devices generate their own IPv6 addresses, reducing the need for DHCP (Dynamic Host Configuration Protocol) servers. Some benefits of using IPv6 are:

- IPv6 incorporates several built-in security features, such as IPsec (Internet Protocol Security). IPsec is optional in IPv4, whereas it was designed as an integral part of IPv6, supporting secure end-to-end communication.

- IPv6 is designed for efficient routing and network management. Its simplified header structure reduces processing overhead on routers, enabling faster routing.

- The extensive address space in IPv6 eliminates the need for complex NAT setups, simplifying network configuration and improving end-to-end connectivity.

- IPv6 can improve network performance, especially for latency-sensitive applications, because it streamlines the routing process and can reduce network congestion.

- Certain regions and industries mandate using IPv6 to comply with emerging standards and regulations.

OCNWDAF supports both single-stack and dual-stack IPv6 environments. The default format is IPv4. Users can choose to configure either IPv4 or IPv6 during deployment through the Helm configuration. IPv6 is supported on all ingress and egress interfaces, including SBA, Kafka, and HTTPS. To ensure seamless internal and external communication when IPv6 is configured, it is recommended that all services have both a cluster IP and an external IP in IPv6 format. To configure IPv6 support, see Oracle Communications Networks Data Analytics Function Installation, Upgrade, and Fault Recovery Guide.

3.3 Data Director (OCNADD) Integration

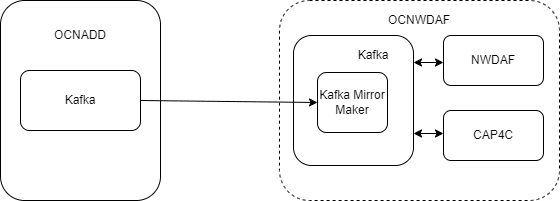

The OCNWDAF supports the Oracle Communications Network Analytics Data Director (OCNADD) as a data source. The Kafka Mirror Maker replication utility replicates Kafka topics between OCNADD and OCNWDAF. The Helm parameter dataSource in the values.yaml file enables or disables OCNADD as a data source.

Note:

The Slice Load Level Analytics, UE Mobility Analytics, and UE Abnormal Behavior Analytics can be derived when OCNADD is configured as a data source.

Figure 3-1 Kafka Replication

For information about the prerequisites for enabling this feature, see the "Environment Setup Requirements" section in Oracle Communications Networks Data Analytics Function Installation and Fault Recovery Guide.

To enable OCNADD as a data source, see the "Configure Data Director" and "Installing OCNWDAF Package" sections in Oracle Communications Networks Data Analytics Function Installation and Fault Recovery Guide.

3.4 Machine Learning (ML) Model Evaluation and Selection

Machine Learning (ML) models are programs trained to identify patterns within data sets. Data sets contain historical data and vary based on region, use case, and service provider. ML algorithms analyze and detect patterns within a data set to train ML models. Trained ML models predict analytics based on the input data.

Different algorithms are effective in different scenarios, so algorithms are selected based on the data set and the use case. To obtain accurate predictions, users must retrain the ML models frequently. Users have flexibility in selecting, optimizing, testing, and training ML models.

The OCNWDAF provides a GUI dashboard to select, train, and optimize one or more ML models for a given analytics category. Users can select among the multiple algorithms supported by each analytics category, including several algorithms at once, and run experiments to determine the best-suited ML model for each data set. Each experiment evaluates the ML models and generates metrics, which are displayed on the dashboard. Users can then select an ML model based on these metrics.

To access the ML Model Selector page and perform ML model evaluation on the OCNWDAF GUI, see Machine Learning (ML) Model Selector.

3.5 Network Performance Visualization on the OCNWDAF Dashboard

The OCNWDAF provides network performance analytics to consumers. The analytics information primarily includes resource consumption by the gNodeB (gNB) and mobility performance indicators in the Area of Interest (AoI). Users can access the following network performance analytics on the OCNWDAF GUI:

- All available cells in a selected tracking area

- For each cell, the user can view the following information:

  - gNB Computing, Memory, and Disk Usage

  - Session Success Ratio

  - HO (handover) Success Ratio

- The user can set a threshold value and select a tracking area and a time interval in the GUI. The GUI then displays all the available cells in the selected tracking area that match the specified threshold value within the selected time interval.
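The following Python sketch shows one way the tracking area, time interval, and threshold selection could be applied to a single KPI, the HO success ratio (successful handovers divided by handover attempts). The record format, the function name, and the reading that a cell matches the threshold when its ratio falls below it are all assumptions, because the source does not state how the threshold is applied.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class CellSample:
    """Hypothetical per-cell handover (HO) measurement; field names are illustrative only."""
    tracking_area: str
    cell_id: str
    timestamp: datetime
    ho_attempts: int
    ho_successes: int


def cells_below_threshold(samples, tracking_area, start, end, threshold):
    """Return {cell_id: HO success ratio} for cells in tracking_area whose ratio over [start, end) is below threshold."""
    attempts, successes = {}, {}
    for s in samples:
        if s.tracking_area == tracking_area and start <= s.timestamp < end:
            attempts[s.cell_id] = attempts.get(s.cell_id, 0) + s.ho_attempts
            successes[s.cell_id] = successes.get(s.cell_id, 0) + s.ho_successes
    result = {}
    for cell_id in sorted(attempts):
        if attempts[cell_id] == 0:
            continue  # no handover attempts, so no ratio can be computed
        ratio = successes[cell_id] / attempts[cell_id]
        if ratio < threshold:
            result[cell_id] = ratio
    return result


samples = [
    CellSample("TA1", "c1", datetime(2024, 1, 1, 10, 5), 100, 90),
    CellSample("TA1", "c1", datetime(2024, 1, 1, 10, 20), 100, 80),
    CellSample("TA1", "c2", datetime(2024, 1, 1, 10, 10), 50, 49),
    CellSample("TA1", "c2", datetime(2024, 1, 1, 12, 0), 100, 0),   # outside the interval
    CellSample("TA2", "c3", datetime(2024, 1, 1, 10, 10), 10, 1),   # other tracking area
]
print(cells_below_threshold(samples, "TA1", datetime(2024, 1, 1, 10), datetime(2024, 1, 1, 11), 0.95))
# {'c1': 0.85}
```

Aggregating attempts and successes before dividing weights each sample by its traffic volume, rather than averaging per-sample ratios.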

For more information, see Monitoring page in the OCNWDAF GUI.

3.6 Service Mesh for Intra-NF Communication

Note:

For service mesh integration, the service mesh must be available in the cluster in which OCNWDAF is installed.

3.7 Georedundancy and Data Replication

Overview

A network includes multiple sites, which can be located in different data centers spread across geographic locations. A network failure can occur for reasons such as network outages, software defects, and hardware issues. These failures impact the continuity of network services. Georedundancy mitigates such failures and ensures service continuity in a network. In a georedundant deployment, when a failure occurs at one site, an alternate site takes ownership of all the subscriptions and activities of the failed site. The alternate site ensures consistent data flow, service continuity, and minimal performance loss. Georedundancy includes replicating the data of each site across multiple sites to handle failure scenarios efficiently and ensure High Availability (HA).

Georedundant Deployment Architecture

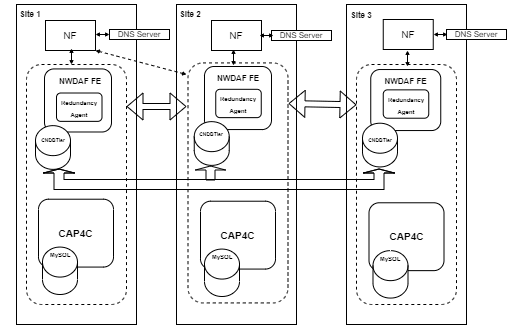

The OCNWDAF supports both 2-site and 3-site georedundant deployments. The following diagram depicts a 3-site georedundant deployment.

Figure 3-2 Georedundant Architecture

Redundancy Agent

The Redundancy Agent is a microservice that maintains communication and enables site transfer between mated sites. It uses a heartbeat mechanism to broadcast the liveness of its site and to receive liveness updates from the mated sites.

Site transfer is based on the configured priority of the mated sites. The Redundancy Agent of every site maintains a priority list of the mated sites, which is configured using the Helm chart. A site can read the priority lists of the other sites from the database and build the ownership matrix. When the primary site fails, ownership is transferred to the secondary site. If the secondary site also fails, ownership is transferred to the tertiary site, and so on. A site is identified as failed based on a quorum: a majority of the active sites must agree that the site has failed.
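The quorum rule above can be expressed as a small Python function. This is a sketch under stated assumptions: each reporting site casts a boolean vote on whether the suspect site has failed, only active sites other than the suspect vote, and "majority" means more than half of those voters. The function name and the vote format are hypothetical.

```python
def is_declared_failed(suspect_site, votes, active_sites):
    """Return True if a strict majority of the active voters report suspect_site as failed.

    votes: dict of reporting site -> True if that site considers suspect_site failed.
    active_sites: sites currently considered active; the suspect never votes on itself.
    """
    voters = [site for site in active_sites if site != suspect_site]
    if not voters:
        return False  # no independent observer, so no quorum can be formed
    agreeing = sum(1 for site in voters if votes.get(site, False))
    return agreeing * 2 > len(voters)


# Three-site deployment: Site2 and Site3 vote on whether Site1 has failed.
print(is_declared_failed("Site1", {"Site2": True, "Site3": True}, ["Site2", "Site3"]))   # True
print(is_declared_failed("Site1", {"Site2": True, "Site3": False}, ["Site2", "Site3"]))  # False
```

With two voters, both must agree; in a two-site deployment, the single remaining site forms the majority on its own.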

An NF sends a subscription request to the Analytics Subscription Service. The subscription service checks whether the subscription already exists; if it is a new subscription, the service stores the subscription data and the site ID. The Redundancy Agent responds to the subscription service with the site status. If the status is ACTIVE, the Analytics Subscription Service continues processing the subscription request. For any other status, it responds to the subscription request with an HTTP 5XX response. The subscription service updates and maintains the site status based on the responses received from the Redundancy Agent.

If an NF sends a subscription request while the site is down, the Redundancy Agents transfer responsibility to a mated site, and the request is forwarded to the site that is currently handling all the subscription requests of the failed site.

If a core component failure occurs, the Redundancy Agent notifies the Subscription Service. The Subscription Service caches the data sent by the Redundancy Agent and refers to it when accepting new subscriptions. The Analytics Information Service operates in the same way as the Analytics Subscription Service. When the status of a core component changes, the Redundancy Agent provides the site liveness data to the Data Collection Service, which caches the data and refers to it when accepting new data collection requests.
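The status gate described above can be sketched in Python as follows. This is a simplified, hypothetical model, not the OCNWDAF implementation: the class and method names, the initial DISCOVERY status, the use of 503 as the example 5XX code, and the 200/201 responses for existing and new subscriptions are all assumptions made for illustration.

```python
from http import HTTPStatus


class SubscriptionService:
    """Hypothetical model of how the Analytics Subscription Service gates requests on site status."""

    def __init__(self, site_id):
        self.site_id = site_id
        self.site_status = "DISCOVERY"  # assumed initial value until the Redundancy Agent reports
        self.subscriptions = {}          # subscription_id -> (payload, owning site ID)

    def on_redundancy_agent_update(self, status):
        """Cache the site status pushed by the Redundancy Agent."""
        self.site_status = status

    def subscribe(self, subscription_id, payload):
        """Accept a subscription only while the site is ACTIVE; otherwise answer with a 5XX status."""
        if self.site_status != "ACTIVE":
            return HTTPStatus.SERVICE_UNAVAILABLE  # 503 chosen as the example 5XX code
        if subscription_id in self.subscriptions:
            return HTTPStatus.OK  # subscription already exists; nothing new to store
        # New subscription: store the data together with this site's ID.
        self.subscriptions[subscription_id] = (payload, self.site_id)
        return HTTPStatus.CREATED


service = SubscriptionService("OCNWDAF-XX-1")
print(int(service.subscribe("sub-1", {"event": "example"})))  # 503
service.on_redundancy_agent_update("ACTIVE")
print(int(service.subscribe("sub-1", {"event": "example"})))  # 201
```

Caching the status pushed by the Redundancy Agent lets the service answer each request without a round trip to the agent, which matches the caching behavior described above.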

Data Replication

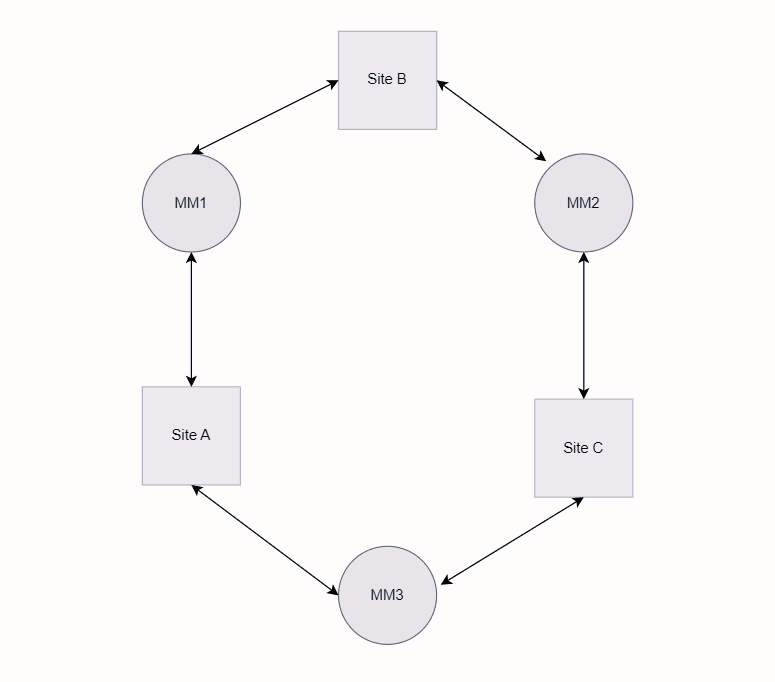

In a georedundant deployment, High Availability (HA) can be achieved by replicating data across all sites. Data topics are replicated across all sites by "Mirror Maker 2 (MM2)", the replication tool for Kafka topics. A three-site georedundant deployment requires three MM2 instances operating in a circular topology, with one instance located in each site's environment. The following diagram depicts the recommended circular topology for a three-site georedundant deployment:

Figure 3-3 Circular Topology

In the above diagram:

- The Mirror Maker “MM1” of Site A handles the replication for both Sites A and B.

- The Mirror Maker “MM2” of Site B handles the replication for both Sites B and C.

- The Mirror Maker “MM3” of Site C handles replication for Sites C and A.
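The following Python sketch reproduces the assignment above and checks that every pair of sites is directly linked by a Mirror Maker. It also shows the name a replicated topic receives, assuming that MM2's DefaultReplicationPolicy (source cluster alias, then the separator ".", then the topic name) is in effect; the source does not state which replication policy OCNWDAF configures. The site aliases and function names are hypothetical.

```python
from itertools import combinations


def circular_mirror_assignments(sites):
    """Assign Mirror Makers to site pairs: one per site in a ring, or a single one for two sites."""
    if len(sites) == 2:
        return {"MM1": (sites[0], sites[1])}
    return {f"MM{i + 1}": (site, sites[(i + 1) % len(sites)]) for i, site in enumerate(sites)}


def covers_all_pairs(sites, assignments):
    """True if every pair of sites is directly connected by some Mirror Maker."""
    linked = {frozenset(pair) for pair in assignments.values()}
    return all(frozenset(pair) in linked for pair in combinations(sites, 2))


def remote_topic_name(source_alias, topic, separator="."):
    """Name of a replicated topic under MM2's DefaultReplicationPolicy."""
    return f"{source_alias}{separator}{topic}"


ring = circular_mirror_assignments(["A", "B", "C"])
print(ring)  # {'MM1': ('A', 'B'), 'MM2': ('B', 'C'), 'MM3': ('C', 'A')}
print(covers_all_pairs(["A", "B", "C"], ring))  # True
```

With three sites, the ring happens to link every pair of sites directly; with four or more sites, a ring alone would not.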

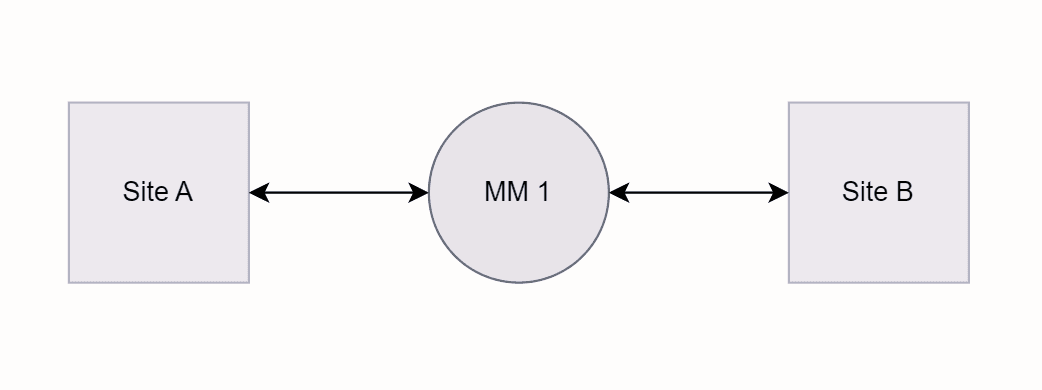

A two-site georedundant deployment requires only one MM2 connecting the sites. The MM2 can be located in either site's environment. The following diagram depicts the recommended topology for a two-site georedundant deployment:

Figure 3-4 Two-Site Deployment

Prerequisites

- Each site must be configured with the remote OCNWDAF sites with which it is georedundant.

- The data replication service must be healthy.

- Georedundant sites must be time-synchronized.

- Georedundant OCNWDAF sites must be reachable from the NFs at all sites.

- The NFs register their services and maintain heartbeats with the OCNWDAF. Data is replicated across the georedundant OCNWDAFs, allowing the NFs to move seamlessly between the OCNWDAFs in case of failure.

- The configuration must be identical at each site so that the OCNWDAFs at all sites handle the subscriber NFs in the same manner.

- This feature is configured using Helm.

- For a three-site georedundant deployment, use a circular topology with active/active connections between the three sites.

- The recommended latency between sites is less than 100 ms.

Georedundant Deployment Failure Scenarios

Listed below are possible failure scenarios in georedundant deployments and the respective recovery mechanisms to prevent network failures:

Table 3-1 Failure Scenarios

| Failure Scenario | Recovery Mechanisms |
|---|---|
| One of the mated OCNWDAF sites is down, and the heartbeat is not exchanged. | The Redundancy Agent updates the ownership matrix and transfers site ownership to the active site based on the existing ownership matrix. |
| The mated OCNWDAF site is up, but the heartbeat exchange is missed. | The Redundancy Agent marks the unresponsive mated site as UNAVAILABLE. After the number of missed heartbeats crosses the configured threshold, the Redundancy Agent marks the site as INACTIVE. Ownership transfer is then initiated based on the priority list. |
| Heartbeats are exchanged, but the OCNWDAF site is experiencing a core component failure. | The Redundancy Agent uses the Kubernetes (K8s) client to identify the failure of core components. It then updates the ownership matrix and transfers site ownership to the active site based on the existing ownership matrix. |
| cnDBTier primary replication channel failure, cnDBTier secondary replication channel failure, or failure of both cnDBTier replication channels | cnDBTier takes the appropriate action. For more information, see Oracle Communications Cloud Native Core cnDBTier Installation, Upgrade, and Fault Recovery Guide. Note: Transferring ownership in this case may result in some data loss. |
| Complete cnDBTier failure or DB connection failure with OCNWDAF | The Redundancy Agent updates the current status of the OCNWDAF site to unavailable (down), updates the ownership matrix, and sends the heartbeat to the mated sites. The Redundancy Agent determines the status of the DB and takes the appropriate action. For more information on cnDBTier recovery, see Oracle Communications Cloud Native Core cnDBTier Installation, Upgrade, and Fault Recovery Guide. |
| The NF is unable to communicate with the local OCNWDAF. | The NF uses DNS (or SCP Model-D) to obtain the IP address of the secondary site, which is now responsible for the current site. |

Note:

- In case of cnDBTier replication channel failures or a complete cnDBTier failure, see Oracle Communications Cloud Native Core cnDBTier Installation, Upgrade, and Fault Recovery Guide.

- When one or more core components of an OCNWDAF instance fail, the OCNWDAF instance marks itself as INACTIVE and broadcasts this status to the other mated sites. The mated sites then initiate the ownership transfer process.
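The missed-heartbeat behavior in Table 3-1 can be modeled as a small state machine. The following Python sketch is illustrative only: the class and function names are hypothetical, it treats reaching the threshold as crossing it, and it returns a recovered site straight to ACTIVE because the actual recovery path is not described here. The defaults mirror GEO_RED_AGENT_HEARTBEAT_INTERVAL_MS (10000) and GEO_RED_AGENT_HEARTBEAT_THRESHOLD (5) from Table 3-2, assuming that the threshold counts missed heartbeats.

```python
class MatedSiteMonitor:
    """Tracks the status of one mated site from successive heartbeat results (illustrative model)."""

    def __init__(self, threshold=5):
        self.threshold = threshold  # cf. GEO_RED_AGENT_HEARTBEAT_THRESHOLD, default 5
        self.missed = 0
        self.status = "ACTIVE"

    def record(self, heartbeat_received):
        """Update and return the site status after one heartbeat interval."""
        if heartbeat_received:
            # Simplification: the real recovery path back to ACTIVE is not modeled.
            self.missed = 0
            self.status = "ACTIVE"
        else:
            self.missed += 1
            # Assumption: reaching the threshold counts as crossing it.
            self.status = "INACTIVE" if self.missed >= self.threshold else "UNAVAILABLE"
        return self.status


def time_to_inactive_ms(interval_ms=10000, threshold=5):
    """Approximate time before a silent mated site is marked INACTIVE."""
    return interval_ms * threshold


monitor = MatedSiteMonitor(threshold=3)
print([monitor.record(False) for _ in range(3)])  # ['UNAVAILABLE', 'UNAVAILABLE', 'INACTIVE']
print(time_to_inactive_ms())  # 50000
```

Under these assumptions, with the default values a silent mated site is marked INACTIVE after roughly 50 seconds, and only then does ownership transfer begin.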

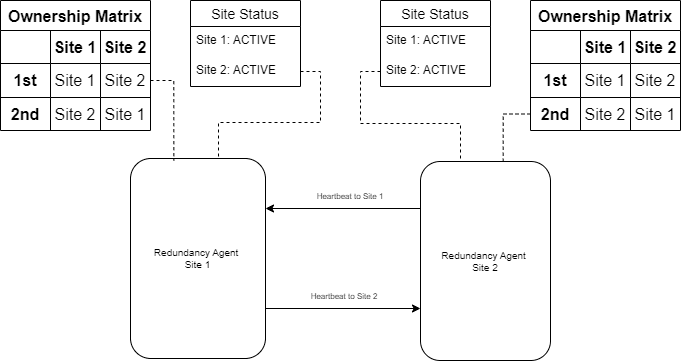

Two-Site Georedundancy

The following diagram depicts a two-site georedundant deployment:

Figure 3-5 Two-Site Georedundancy

The ownership matrix determines how ownership is transferred when a site fails. Site failure is determined by site status, which can be ACTIVE, INACTIVE, SUSPENDED, DISCOVERY, or UNAVAILABLE. Ownership is transferred according to the ownership matrix when a site is INACTIVE, SUSPENDED, or UNAVAILABLE.

For example, when Site 2 is INACTIVE, based on the ownership matrix Site 1 becomes the owner of all subscriptions owned by Site 2.

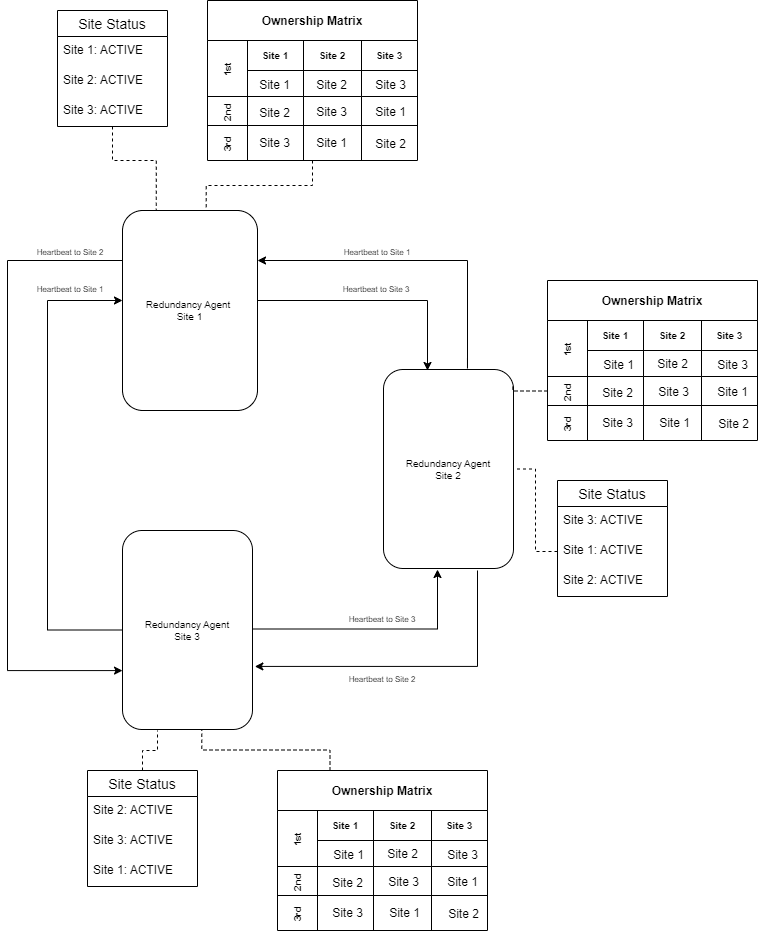

Three-Site Georedundancy

The following diagram depicts a three-site georedundant deployment:

Figure 3-6 Three-Site Georedundancy

The ownership matrix determines how ownership is transferred when a site fails. Site failure is determined by site status, which can be ACTIVE, INACTIVE, SUSPENDED, DISCOVERY, or UNAVAILABLE. Ownership is transferred according to the ownership matrix when a site is INACTIVE, SUSPENDED, or UNAVAILABLE.

For example, when Site 1 is down, Site 2 takes ownership of the subscriptions at Site 1. If Site 2 is also down, Site 3 assumes ownership of the subscriptions at Site 1. The transfer of ownership is based on the priority configured in the ownership matrix.
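The ownership rules above can be sketched in Python as a function that maps each site to the site that currently owns its subscriptions. It is a minimal sketch that assumes each site's takeover order is available as a list (secondary first, then tertiary) and that only ACTIVE sites can take over; the priority lists for Site 2 and Site 3 are hypothetical.

```python
TRANSFER_STATUSES = {"INACTIVE", "SUSPENDED", "UNAVAILABLE"}


def build_ownership(priority_lists, statuses):
    """Map each site to the site that currently owns its subscriptions.

    priority_lists: site -> mated sites in takeover order (secondary, tertiary, ...).
    statuses: site -> ACTIVE, INACTIVE, SUSPENDED, DISCOVERY, or UNAVAILABLE.
    """
    ownership = {}
    for site, takeover_order in priority_lists.items():
        if statuses[site] not in TRANSFER_STATUSES:
            ownership[site] = site  # the site keeps its own subscriptions
            continue
        # The first ACTIVE mated site in priority order takes over; None if no site is available.
        ownership[site] = next((mate for mate in takeover_order if statuses[mate] == "ACTIVE"), None)
    return ownership


priorities = {"Site1": ["Site2", "Site3"], "Site2": ["Site3", "Site1"], "Site3": ["Site1", "Site2"]}
print(build_ownership(priorities, {"Site1": "INACTIVE", "Site2": "ACTIVE", "Site3": "ACTIVE"}))
# {'Site1': 'Site2', 'Site2': 'Site2', 'Site3': 'Site3'}
print(build_ownership(priorities, {"Site1": "INACTIVE", "Site2": "UNAVAILABLE", "Site3": "ACTIVE"}))
# {'Site1': 'Site3', 'Site2': 'Site3', 'Site3': 'Site3'}
```

The second call reproduces the example above: with Site 1 and Site 2 both down, Site 3 owns the subscriptions of Site 1.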

Managing Georedundancy

Deploy

To deploy OCNWDAF in a georedundant environment:

- Install cnDBTier version 22.4.0 or later on each configured site, and ensure that the DB replication channels between the sites are up. For information about installing cnDBTier, see "Installing cnDBTier" in Oracle Communications Cloud Native Core cnDBTier Installation, Upgrade, and Fault Recovery Guide.

- Deploy OCNWDAF over the replicated cnDBTier sites, and ensure that each site has a unique name. For information about installing and deploying OCNWDAF, see Oracle Communications Cloud Native Core Networks Data Analytics Function Installation Guide and Fault Recovery Guide.

- Ensure that the redundancy agent details are added to the common gateway routing rules.

To install the Mirror Maker for data replication, see Oracle Communications Cloud Native Core Networks Data Analytics Function Installation Guide and Fault Recovery Guide.

Configure Georedundancy

To configure the Redundancy Agent, see the "Configure Redundancy Agent" section in Oracle Communications Cloud Native Core Networks Data Analytics Function Installation Guide and Fault Recovery Guide.

Configure the georedundancy-specific parameters in the OCNWDAF instances on the replicated sites. To enable and configure georedundancy in the deployed sites, configure the following parameters in the Redundancy Agent's properties file:

- Set the GEO_REDUNDANCY_ENABLE parameter to true.

- Ensure that the following parameters are configured correctly, based on the number of georedundant sites in the deployment:

  - GEO_RED_AGENT_SITE_SECONDARY_SITEID
  - GEO_RED_AGENT_SITE_SECONDARY_ADDRESS
  - GEO_RED_AGENT_SITE_TERTIARY_SITEID
  - GEO_RED_AGENT_SITE_TERTIARY_ADDRESS
  - GEO_RED_SITE_SELF_PRIORITY
  - GEO_RED_AGENT_SITE_ID
  - GEO_RED_AGENT_SELF_ADDRESS
  - GEO_RED_AGENT_NUMBER_OF_MATED_SITE

  For example:

  ```
  GEO_RED_AGENT_NUMBER_OF_MATED_SITE: 2
  GEO_RED_AGENT_SITE_SECONDARY_SITEID: OCNWDAF-XX-2
  GEO_RED_AGENT_SITE_SECONDARY_ADDRESS: http://ocn-nwdaf-gateway-service:8088
  GEO_RED_AGENT_SITE_TERTIARY_SITEID: OCNWDAF-XX-3
  GEO_RED_AGENT_SITE_TERTIARY_ADDRESS: http://ocn-nwdaf-gateway-service:8088
  ```

- In a two-site georedundant deployment, the tertiary site is not part of the deployment. Set the tertiary site parameters to placeholder values; they must not be empty.

- After the OCNWDAF instances are deployed over the replicated cnDBTier sites, run the following command:

  ```shell
  helm install {releasename} {chartlocation} -n {namespace}
  ```

  For example:

  ```shell
  helm install grdagent charts -n nwdaf
  ```
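Because a missing or empty value, such as an empty tertiary site entry in a two-site deployment, is a configuration error, a pre-deployment check can help. The following Python sketch parses `KEY: value` lines like those in the example above and reports required keys that are missing or empty. The parser, the function names, the integer check on GEO_RED_AGENT_NUMBER_OF_MATED_SITE, and the GEO_RED_SITE_SELF_PRIORITY value used in the sample are illustrative assumptions, not an OCNWDAF tool.

```python
REQUIRED_KEYS = (
    "GEO_RED_AGENT_SITE_SECONDARY_SITEID",
    "GEO_RED_AGENT_SITE_SECONDARY_ADDRESS",
    "GEO_RED_AGENT_SITE_TERTIARY_SITEID",
    "GEO_RED_AGENT_SITE_TERTIARY_ADDRESS",
    "GEO_RED_SITE_SELF_PRIORITY",
    "GEO_RED_AGENT_SITE_ID",
    "GEO_RED_AGENT_SELF_ADDRESS",
    "GEO_RED_AGENT_NUMBER_OF_MATED_SITE",
)


def parse_properties(text):
    """Parse 'KEY: value' lines; only the first colon separates key and value, so URLs stay intact."""
    props = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition(":")
        if sep:
            props[key.strip()] = value.strip()
    return props


def validate_georedundancy(props):
    """Return a list of problems; an empty list means every required key has a non-empty value."""
    problems = [f"{key} is missing or empty" for key in REQUIRED_KEYS if not props.get(key)]
    mated = props.get("GEO_RED_AGENT_NUMBER_OF_MATED_SITE", "")
    if mated and not mated.isdigit():
        problems.append("GEO_RED_AGENT_NUMBER_OF_MATED_SITE must be an integer")
    return problems


two_site = parse_properties("""
GEO_RED_AGENT_NUMBER_OF_MATED_SITE: 1
GEO_RED_AGENT_SITE_ID: OCNWDAF-XX-1
GEO_RED_AGENT_SELF_ADDRESS: http://ocn-nwdaf-gateway-service:8088
GEO_RED_SITE_SELF_PRIORITY: 1
GEO_RED_AGENT_SITE_SECONDARY_SITEID: OCNWDAF-XX-2
GEO_RED_AGENT_SITE_SECONDARY_ADDRESS: http://ocn-nwdaf-gateway-service:8088
GEO_RED_AGENT_SITE_TERTIARY_SITEID:
GEO_RED_AGENT_SITE_TERTIARY_ADDRESS:
""")
print(validate_georedundancy(two_site))  # reports both empty tertiary parameters
```

Filling the two tertiary parameters with placeholder values, as the instructions above require, makes the check return an empty list.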

Configure the following parameters to enable and configure georedundancy in the custom values file for OCNWDAF:

Table 3-2 Redundancy Agent Configuration

| Parameter | Description | Default Value |
|---|---|---|
| ocnwdaf.cluster.namespace | Namespace of the deployment. Note: Change this to the namespace of the OCNWDAF deployment. | ocn-nwdaf |
| global.ocnNwdafGeoredagent | Enables the georedundancy feature, which is turned off by default. Set this parameter to "True" to enable georedundancy. | False |
| global.siteVariables.OCNWDAF_SITE_ID | Name of the site. It is used by the Redundancy Agent, the scheduler service, and the subscription service. | OCNWDAF-XX-1 |
| ocnnwdaf.geored.hooks.database | Database information for the hook | nwdaf_subscription |
| ocnnwdaf.geored.hooks.table | Table information for the hook | nwdaf_subscription |
| ocnnwdaf.geored.hooks.column1 | Column1 information for the hook | record_owner |
| ocnnwdaf.geored.hooks.column2 | Column2 information for the hook | current_owner |
| ocnnwdaf.geored.hooks.image | Image information for the hook | ocnwdaf-docker.dockerhub-phx.oci.oraclecorp.com/nwdaf-cap4c/nwdaf-cap4c-mysql:8.0.30 |
| ocnnwdaf.geored.agent.name | Name of the deployment | ocn-nwdaf_georedagent |
| ocnnwdaf.geored.agent.replicas | Number of replicas | 1 |
| ocnnwdaf.geored.agent.image.source | Image for the GRD agent. Note: Modify this value if the image is in a different repository. | occne-repo-host:5000/occne/redagent-ms-dev:1.0.31 |
| ocnnwdaf.geored.agent.image.pullPolicy | Image pull policy | IfNotPresent |
| ocnnwdaf.geored.agent.resources.limits.cpu | CPU limit | 1 |
| ocnnwdaf.geored.agent.resources.limits.memory | Memory limit | 1Gi |
| ocnnwdaf.geored.agent.resources.request.cpu | CPU request | 1 |
| ocnnwdaf.geored.agent.resources.request.memory | Memory request | 1Gi |
| ocnnwdaf.geored.agent.service.type | Service type of the deployment | ClusterIP |
| ocnnwdaf.geored.agent.service.port.containerPort | Container port of the deployment | 9181 |
| ocnnwdaf.geored.agent.service.port.targetPort | Target port of the deployment | 9181 |
| ocnnwdaf.geored.agent.service.port.name | Name of the service port | ocnwdafgeoredagentport |
| ocnnwdaf.geored.agent.service.prometheusport.containerPort | Container port for Prometheus | 9000 |
| ocnnwdaf.geored.agent.service.prometheusport.targetPort | Target port for Prometheus | 9000 |
| ocnnwdaf.geored.agent.service.prometheusport.name | Name of the Prometheus port. Note: Modify the port name based on the Prometheus configuration of the deployed setup. | http-cnc-metrics |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_SERVER_HTTP2_ENABLED | Enables or disables HTTP/2 | TRUE |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_HEARTBEAT_INTERVAL_MS | Interval between heartbeat checks, in milliseconds | 10000 |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_HEARTBEAT_THRESHOLD | Number of times to check the heartbeat | 5 |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_CORE_COMP_THRESHOLD | Number of times to check the heartbeat of the core components | 5 |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_SITE_NUMBER | Current site number | 1 |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_SITE_ID | Current site ID | OCNWDAF-XX-1 |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_NUMBER_OF_MATED_SITE | Number of mated sites. It is updated based on the GRD sites in sync. | 1 |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_SELF_ADDRESS | Current agent address: the resolvable URL of the OCNWDAF Gateway service. This address must be reachable from outside the cluster. | |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_MICROSERVICE_LIVELINESS_MS | Check interval for OCNWDAF microservices, in milliseconds | 10000 |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_OCNWDAF_CORE_COMPONENT_LIST | List of OCNWDAF microservices that need to be verified | ocn-nwdaf-subscription |
| ocnnwdaf.geored.agent.env.GEO_RED_SITE_SUBSCRIPTION_OWNERSHIP_TRANSFER_URL | Subscription API for ownership transfer | http://ocn-nwdaf-subscription-service-internal:8087/nnwdaf-eventssubscription/v1/subscriptions/updateServingOwner |
| ocnnwdaf.geored.agent.env.GEO_RED_SITE_DATA_COLLECTION_URL | Data Collection API for ownership check | http://ocn-nwdaf-data-collection-service-internal:8081/ra/notify |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_DBTIER_REPLICATION_STATUS_URL | cnDBTier monitor service URL for replication status | Use the monitor service that is reachable in the namespace where cnDBTier is deployed. For example: http://mysql-cluster-db-monitor-svc.{cndbnamspace}.svc.{domainname}:8080/db-tier/status/replication |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_DBTIER_STATUS_URL | cnDBTier monitor service URL for local status | Use the monitor service that is reachable in the namespace where cnDBTier is deployed. For example: http://mysql-cluster-db-monitor-svc.{cndbnamspace}.svc.{domainname}:8080/db-tier/status/local |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_SITE_SECONDARY_SITEID | Secondary site ID | OCNWDAF-XX-2 |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_SITE_SECONDARY_ADDRESS | Secondary site address | The resolvable URL of the OCNWDAF Gateway of the secondary site. This address must be reachable from outside the cluster. |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_SITE_TERTIARY_SITEID | Tertiary site ID | OCNWDAF-XX-3 |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_SITE_TERTIARY_ADDRESS | Tertiary site address | The resolvable URL of the OCNWDAF Gateway of the tertiary site. This address must be reachable from outside the cluster. |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_DB_URL | IP address of the site's cnDBTier | The cluster IP or external IP of the cnDBTier |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_USERNAME | Name of the DB user. The user must have privileges on both the GRD and Subscription databases. | occneuser |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_PASSWORD | Password for the DB user | password |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_ENABLE | Enables or disables GRD | false |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_DB_PORT | Port of the DB | 3306 |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_DB_NAME | Name of the GRD database | georedagent |
| ocnnwdaf.geored.agent.env.GEO_RED_AGENT_CONFIG_SERVER | Config server URL for the site's OCNWDAF | http://nwdaf-cap4c-spring-cloud-config-server:8888 |

To customize the Mirror Maker configuration for data replication, see Oracle Communications Cloud Native Core Networks Data Analytics Function Installation Guide and Fault Recovery Guide.

Listed below are the Mirror Maker configuration parameters:

Table 3-3 Mirror Maker Parameters

| Parameter | Description |
|---|---|
| clusters | The names (aliases) of the clusters. |
| clusterX.bootstrap.servers | The brokers (host and port) that belong to the cluster. Separate multiple brokers with commas. Replace clusterX with each cluster name. |
| clusterX.config.storage.replication.factor | The replication factor used when Kafka Connect creates the topic that stores connector and task configuration data. For a production system, this value should be at least 3. It cannot exceed the number of Kafka brokers in the cluster. Set this parameter to -1 to use the Kafka broker's default replication factor. |
| clusterX.offset.storage.replication.factor | The replication factor used when Kafka Connect creates the topic that stores connector offsets. For a production system, this value should be at least 3. It cannot exceed the number of Kafka brokers in the cluster. Set this parameter to -1 to use the Kafka broker's default replication factor. |
| clusterX.status.storage.replication.factor | The replication factor used when Kafka Connect creates the topic that stores connector and task status updates. For a production system, this value should be at least 3. It cannot exceed the number of Kafka brokers in the cluster. Set this parameter to -1 to use the Kafka broker's default replication factor. |
| replication.factor | The replication factor for the replicated (remote) topics that Mirror Maker creates. It cannot exceed the number of brokers available. |
| nwdafDataReplication.config.serviceN.kafkaService | The Kafka service name used to build the Kafka service's FQDN. |
| nwdafDataReplication.config.serviceN.namespace | The namespace used to build the Kafka service's FQDN. |
| nwdafDataReplication.config.serviceN.cluster | The cluster used to build the Kafka service's FQDN. |
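The following Python sketch shows how the parameters in Table 3-3 fit together in a minimal MM2 properties file and applies the replication factor rules from the table. The keys `clusters`, `<alias>.bootstrap.servers`, `<alias>.<kind>.storage.replication.factor`, and `<source>-><target>.enabled` are standard MM2 properties; the cluster aliases, service names, port 9092, and the reading of the `cluster` parameter as the Kubernetes cluster DNS domain in `<service>.<namespace>.svc.<domain>` are assumptions. This is not the OCNWDAF Helm chart's rendering logic.

```python
def kafka_fqdn(kafka_service, namespace, cluster_domain, port=9092):
    """Build '<service>.<namespace>.svc.<cluster domain>:<port>' (Kubernetes Service DNS form)."""
    return f"{kafka_service}.{namespace}.svc.{cluster_domain}:{port}"


def check_storage_replication_factor(value, broker_count, production=True):
    """Apply the Table 3-3 rules to a clusterX.*.storage.replication.factor value."""
    if value == -1:
        return  # -1 defers to the Kafka broker's default replication factor
    if value < 1 or value > broker_count:
        raise ValueError(f"replication factor {value} must be between 1 and {broker_count} (broker count)")
    if production and value < 3:
        raise ValueError("production systems should use a replication factor of at least 3")


def mm2_properties(clusters, broker_counts, flows, storage_rf=3):
    """Render a minimal MM2 properties file.

    clusters: alias -> list of bootstrap servers; broker_counts: alias -> number of brokers;
    flows: (source, target) alias pairs to enable.
    """
    lines = [f"clusters = {', '.join(clusters)}"]
    for alias, servers in clusters.items():
        check_storage_replication_factor(storage_rf, broker_counts[alias])
        lines.append(f"{alias}.bootstrap.servers = {','.join(servers)}")
        for kind in ("config", "offset", "status"):
            lines.append(f"{alias}.{kind}.storage.replication.factor = {storage_rf}")
    lines += [f"{src}->{dst}.enabled = true" for src, dst in flows]
    return "\n".join(lines)


print(mm2_properties(
    {"siteA": [kafka_fqdn("kafka-broker", "nwdaf-a", "cluster.local")],
     "siteB": [kafka_fqdn("kafka-broker", "nwdaf-b", "cluster.local")]},
    broker_counts={"siteA": 3, "siteB": 3},
    flows=[("siteA", "siteB"), ("siteB", "siteA")],
))
```

Validating the replication factor before rendering catches the "cannot exceed the number of Kafka brokers" case at configuration time rather than when Kafka Connect tries to create its internal topics.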

Procedure to Migrate From Two-Site Georedundancy to Three-Site Georedundancy

To deploy a tertiary site in a two-site georedundant environment, update the tertiary site parameters in the Redundancy Agent's properties file:

- On all sites, including the existing sites and the new site, update the GEO_RED_AGENT_SITE_TERTIARY_SITEID, GEO_RED_AGENT_SITE_TERTIARY_ADDRESS, and GEO_RED_SITE_SELF_PRIORITY parameters to include the new site in the priority list and the address list.

- Update the GEO_RED_AGENT_NUMBER_OF_MATED_SITE parameter in the Helm chart to increase the number of mated sites to 3 for all sites.

- Configure and deploy the georedundancy service on each site by running the following command:

  ```shell
  helm install {releasename} {chartlocation} -n {namespace}
  ```

Disable Georedundancy

To disable georedundancy, set the ENABLE_GEO_REDUNDANCY parameter to false in the Redundancy Agent's properties file.

Uninstall Mirror Maker

To uninstall Mirror Maker, run the following command:

```shell
helm uninstall nwdaf-data-replication
```

Remove an OCNWDAF Site from a Georedundant Deployment

Prerequisites

Disable replication on the cnDBTier MySQL cluster. For more information, see the procedure to gracefully stop geo-replication in the Oracle Communications Cloud Native Core cnDBTier User Guide.

Perform the following steps:

- Remove the OCNWDAF site from the DNS Service List.

- Delete the georedundancy service from the site, and remove its database from the site's cnDBTier.

- Remove the site references from the other sites and upgrade their Redundancy Agents.

For example, if Site 3 is being removed, remove the references to Site 3 from the Redundancy Agents of both Site 1 and Site 2, and upgrade the services.

3.8 Automated Test Suite Support

OCNWDAF provides an Automated Test Suite (ATS) for validating its functionality. ATS runs OCNWDAF test cases using an automated testing tool and compares the actual results with the expected results, with minimal user intervention.

For more information on installing and configuring ATS, see Oracle Communications Network Analytics Suite Automated Test Suite Guide.