5 Planning Infrastructure

In "Creating a Basic OSM Cloud Native Instance", you learned how to create a basic OSM instance in your cloud native environment. This chapter provides details about setting up infrastructure and structuring OSM instances for your organization. However, if you want to continue in your sandbox environment learning about more OSM cloud native capabilities, then proceed to "Creating Your Own OSM Cloud Native Instance".

See the following topics:

- Sizing Considerations

- Managing Configuration as Code

- Setting Up Automation

- Securing Operations in Kubernetes Cluster

Sizing Considerations

The hardware utilization for an OSM cloud native deployment is approximately the same as that of an OSM traditional deployment.

Consider the following when sizing for your cloud native deployment:

- For OSM cloud native, ensure that the database is sized to account for the WLS Persistent Store workload residing in the database. For details, see the "Persistent Store Configuration & Operational Considerations for JMS, SAF & WebLogic tlogs in OSM (Doc ID 2469767.1)" knowledge article on My Oracle Support.

- Oracle recommends sizing using a given production shape as a building block, adjusting the OSM cluster size to meet target order volumes.

- In addition to planning hardware for a production instance, Oracle recommends planning capacity for a Disaster Recovery instance and key non-production instances to support functional, integration, and performance tests. The Disaster Recovery instance can be created against an Active Data Guard Standby database when needed and terminated when no longer needed to improve hardware utilization.

- Non-production instances can likewise be created when needed, either against new or existing database instances.

Contact Oracle Support for further assistance with sizing.

Managing Configuration as Code

Managing Configuration as Code involves the following tasks:

- Creating Source Control Repository

- Managing OSM Instances

- Deciding on the Scope

- Deployment Considerations

- Creating an Instance Using the Repository

Creating Source Control Repository

Managing Configuration as Code (CAC) is a central tenet of using OSM cloud native. You must create a source control repository to store all configuration that is necessary to re-create an OSM instance (or PDB) if it is lost. This does not include the toolkit scripts.

You must also set up a Docker repository for the OSM and OSM DB Installer images, as well as any custom versions of the OSM image for your use cases. For example, custom images are required to deploy a custom application .ear file. For more details on custom images, see "Extending the WebLogic Server Deploy Tooling (WDT) Model".

Managing OSM Instances

To extract the full benefits of OSM cloud native, it is imperative that you consider the management of the OSM instances before making potential configuration changes. The sections that follow describe how to structure your repositories to group project level artifacts, while allowing for other artifacts to be re-used (if needed) by the multiple OSM instances within a project.

Example Scenario

This section describes a scenario to help illustrate the concepts.

Let us assume that an organization uses OSM for two business purposes, each handled by a separate team. The first team uses OSM to orchestrate wireline (triple play) orders for residential customers, and the second team uses OSM to process mobile orders for business customers.

Deciding on the Scope

You must first decide on the scope of the project including how many instances are required. Choose meaningful names for your project and instance.

The organization in our example will have two projects named resiwireline and bizwireless. We can assume that each project team has a predefined "pre-production" instance for final validation of production changes, a geo-redundant production instance for disaster recovery, a final User Acceptance Testing (UAT) instance for business testing, a few small Quality Assurance (QA) systems, and many small development instances.

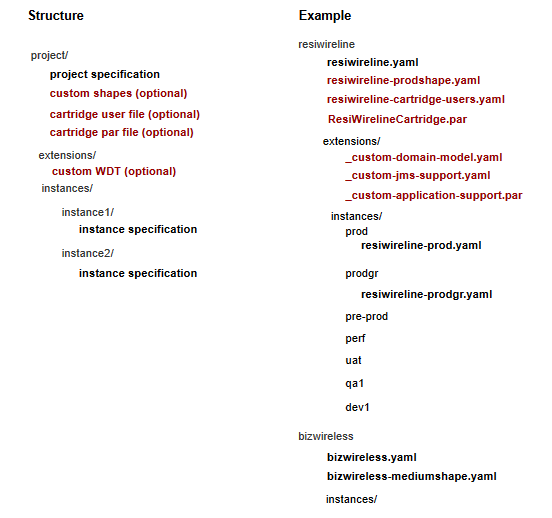

The directory structure for your configuration repository should reflect the hierarchical relationship between projects and instances, and should isolate different projects from each other.

About the Repository Directory Structure

The project directory includes the project specification as well as configuration that is common to all instances, whereas instance specifications reside in a specific instance directory.

- Each project requires its own project specifications (YAML files).

- Optional artifacts such as the list of users and credentials used by the cartridges are also located under the top level project directory.

- All artifacts under the project are shared across the instances. Instance directories contain the instance specification.

Note:

While cartridge par files are shown to reside in this repository, you may consider using a separate repository for cartridges as described in "Working with Cartridges".

The following illustration shows the structure and hierarchy of the project directory with an example.
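As a rough sketch of such a layout, the following script scaffolds a repository for the resiwireline project. The repo root and the instances subdirectory follow the paths used later in this chapter. The instance names (prod, preprod, uat, qa, dev1) and the specification file names are illustrative assumptions, not names required by the toolkit.

```shell
#!/bin/sh
# Sketch only: scaffold a configuration repository for one project.
# REPO_ROOT, the instance names, and the file names are assumptions.
REPO_ROOT="${REPO_ROOT:-repo}"
PROJECT="resiwireline"

# Project-level content is shared by all instances of the project.
mkdir -p "$REPO_ROOT/$PROJECT"
touch "$REPO_ROOT/$PROJECT/$PROJECT.yaml"        # project specification

# Each instance gets its own directory holding its instance specification.
for INSTANCE in prod preprod uat qa dev1; do
  mkdir -p "$REPO_ROOT/$PROJECT/instances/$INSTANCE"
  touch "$REPO_ROOT/$PROJECT/instances/$INSTANCE/$PROJECT-$INSTANCE.yaml"
done
```

A second project such as bizwireless would get its own sibling directory under the same root, which keeps the two projects isolated from each other.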

Deployment Considerations

As the scenario shows, there will be many bits of configuration that may mix and match in different ways to produce a specific OSM instance. While all of these instances are pre-defined in the source control repository, they need not be deployed all the time.

Consider the following:

- For each project, one or more production instances may be deployed.

- It would be reasonable for pre-production to be deployed only when needed, by first cloning the production DB.

- Likewise, the performance instance could also be deployed only when needed. Its PDB could be cloned from a specially generated PDB with synthetic test data, providing a consistent starting point.

- Likewise, the UAT instance could be deployed when needed, starting from a similarly saved UAT PDB.

- The GR instance application would not be pre-deployed, but its database would be created in a DR site and synchronized from production via Active Data Guard.

Setting the Repository Path During Instance Creation

To offer flexibility in how the repository directory structure develops, the create-instance script takes the path to the specification files as input.

The -s specPath parameter is mandatory in create-instance.sh and can point to several directories at once (directories are separated by a colon).

specPath lists all the directories that contain specification files used for creating an instance. For example:

- repo/resiwireline

- repo/resiwireline:repo/resiwireline/instances/qa (This includes all specification files at the resiwireline project level, as well as the specification files in the qa instance directory.)

Additionally, a separate parameter is used to point to the directory where custom extensions are found.

The -m customExtPath parameter is an optional parameter that can be passed into the create-instance.sh script.

customExtPath points to all the directories where custom template files reside for the instance being created, for example: fileRepo/resiwireline/extensions
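The two parameters can be composed as in the following sketch, which uses the resiwireline project and qa instance from the example paths above. The variable names are illustrative. The script prints the resulting command instead of running it, so that the paths can be reviewed first.

```shell
#!/bin/sh
# Sketch only: compose the -s and -m arguments for create-instance.sh.
PROJECT="resiwireline"
INSTANCE="qa"
REPO="repo"   # assumed root of the specification repository

# Project-level directory first, then the instance directory, separated by a colon.
SPEC_PATH="$REPO/$PROJECT:$REPO/$PROJECT/instances/$INSTANCE"

# Optional directory holding custom template files.
CUSTOM_EXT_PATH="fileRepo/$PROJECT/extensions"

# Print the command for review; \$OSM_CNTK is left unexpanded on purpose.
CREATE_CMD="\$OSM_CNTK/scripts/create-instance.sh -p $PROJECT -i $INSTANCE -s $SPEC_PATH -m $CUSTOM_EXT_PATH"
echo "$CREATE_CMD"
```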

Setting Up Automation

This section describes the complete sequence of activities for setting up an OSM environment, with the aim of grouping repeatable steps into high-level categories. You should start to plan the steps that you can automate to some degree. This section does not include details on the changes that must be made to the specification files, which are described in "Creating a Basic OSM Instance".

Note:

These steps exclude any one-time setup activities. For details on one-time setup activities, see the tasks you must do before creating an OSM instance in "Creating a Basic OSM Cloud Native Instance".

Where pre-requisite secrets are required, the toolkit provides sample scripts for this activity. However, the scripts are not pipeline-friendly. Use them to stand up an instance manually and quickly, not in any automated process for creating instances. The scripts are also important because they illustrate both the naming of each secret and the layout of the data within the secret that OSM cloud native requires. In your DevOps process, you must replace references to the toolkit scripts for creating secrets with your own mechanism.

Configuring Code for Creating an OSM Instance

To configure code for creating an instance, you assemble the configuration at the project and the instance levels. While some of these activities could be automated, much of the work is manual in nature.

- Assemble the configuration.

  To assemble the configuration at the project level:

  Note:

  These steps should be performed once per project and then re-used for each instance.

  - Copy $OSM_CNTK/samples/project.yaml to your file repository and rename it to align with the project naming decisions you made earlier (for example, project.yaml).

  - Assemble the optional configuration files as needed. These files include custom WDT fragments, custom shapes, cartridge user files, and par files for deployment.

  To assemble the configuration at the instance level, copy $OSM_CNTK/samples/instance.yaml to your file repository and rename it to align with the project naming decisions you made earlier (for example, project-instance.yaml).

- Create pre-requisite secrets for OSM DB access, RCU DB access, OSM system users, OPSS, Introspector, and the WLS Admin credentials used when creating the domain.

      $OSM_CNTK/scripts/manage-instance-credentials.sh -p project -i instance \
        create \
        osmdb,rcudb,wlsadmin,osmldap,opssWP,wlsRTE

  Note:

  Passwords and other secret input must adhere to the rules specified by the corresponding component.

- Create custom secrets as required by your OSM solution cartridges.

      $OSM_CNTK/samples/credentials/manage-cartridge-credentials.sh -p project -i instance \
        -c create \
        -f user_information_file

  For help, run $OSM_CNTK/samples/credentials/manage-cartridge-credentials.sh -h.

- Create other custom secrets as required by optional configuration.

- Populate the embedded LDAP with all the cartridge users (only those from prefix/map name osm) under the cartridgeUsers section in the project.yaml file. During the creation of the OSM server instance, for all the users listed, an account is created in embedded LDAP with the same username and password as the Kubernetes secret:

      cartridgeUsers:
        - osm
        - osmoe
        - osmde
        - osmfallout
        - osmoelf
        - osmlfaop
        - osmlf
        - tomadmin

After the configuration and the input are available, the remaining activities are focused on Continuous Delivery, which can be automated.

- Register a namespace per project:

      $OSM_CNTK/scripts/register-namespace.sh -p project -t namespaces
      # For example, $OSM_CNTK/scripts/register-namespace.sh -p sr -t wlsko,traefik
      # where the namespaces are separated by a comma without spaces

  Note:

  wlsko and traefik are examples of namespaces. Do not provide details about Traefik if you are not using it.

- Create one OSM PDB per instance:

  - If the master OSM PDB exists in the CDB, clone the PDB. In this scenario, a master PDB is created by cloning a seed PDB, deploying the OSM/RCU schema, and then optionally deploying cartridges. This master is only valid for a specific OSM schema version.

  - If the master CDB does not have the schema provisioned, do the following:

    - Clone the seed PDB and then run the DB installer to create OSM and the RCU schema:

          $OSM_CNTK/scripts/install-osmdb.sh -p sr -i quick -s $SPEC_PATH -c 1   # OSM schema
          $OSM_CNTK/scripts/install-osmdb.sh -p sr -i quick -s $SPEC_PATH -c 7   # RCU schema

    - Deploy the cartridges:

          ./scripts/manage-cartridges.sh -p project_name -i instance_name -s $SPEC_PATH -f par_file -c parDeploy

  - If you want to use a PDB from another instance in order to reuse the OSM data, do the following:

    - Clone the existing PDB.

    - Drop the existing RCU:

          $OSM_CNTK/scripts/install-osmdb.sh -p project -i instance -s $SPEC_PATH -c 8

    - Recreate the RCU:

          $OSM_CNTK/scripts/install-osmdb.sh -p project -i instance -s $SPEC_PATH -c 7

- Create the Ingress:

      $OSM_CNTK/scripts/create-ingress.sh -p project -i instance -s $SPEC_PATH

- Create the instance:

      $OSM_CNTK/scripts/create-instance.sh -p project -i instance -s $SPEC_PATH
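As one possible starting point for automating the Continuous Delivery portion, the following sketch chains the schema, ingress, and instance steps for a PDB that was freshly cloned from the seed PDB. The run and deploy_instance helpers, the DRY_RUN switch, and the default OSM_CNTK path are illustrative assumptions. The script names and options are the ones shown in this section. Namespace registration, PDB cloning, secret creation, and cartridge deployment are assumed to be handled elsewhere in your pipeline.

```shell
#!/bin/sh
# Sketch only: chain the delivery steps for one instance.
# DRY_RUN=1 (the default here) prints each command instead of running it.
DRY_RUN="${DRY_RUN:-1}"
OSM_CNTK="${OSM_CNTK:-/path/to/osm-cntk}"   # placeholder toolkit location

# Print the command in dry-run mode; otherwise run it and stop on failure.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@" || { echo "step failed: $*" >&2; exit 1; }
  fi
}

deploy_instance() {
  project="$1"; instance="$2"; spec_path="$3"
  # Create the OSM schema (-c 1), then the RCU schema (-c 7).
  run "$OSM_CNTK/scripts/install-osmdb.sh" -p "$project" -i "$instance" -s "$spec_path" -c 1
  run "$OSM_CNTK/scripts/install-osmdb.sh" -p "$project" -i "$instance" -s "$spec_path" -c 7
  # Create the ingress, then the instance itself.
  run "$OSM_CNTK/scripts/create-ingress.sh" -p "$project" -i "$instance" -s "$spec_path"
  run "$OSM_CNTK/scripts/create-instance.sh" -p "$project" -i "$instance" -s "$spec_path"
}

deploy_instance sr quick "repo/sr:repo/sr/instances/quick"
```

Running the script with DRY_RUN=0 executes the toolkit scripts in order and stops at the first failure, which is usually the behavior a pipeline stage needs.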

Deleting an Ingress

$OSM_CNTK/scripts/delete-ingress.sh -p project -i instance

Deleting an Instance

This section describes the sequence of activities for deleting and cleaning up various aspects of the OSM environment.

- Run the following command:

      $OSM_CNTK/scripts/delete-instance.sh -p project -i instance

- Remove the instance content manually from the LDAP server using your LDAP Admin client. Specify ou=project-instance.

- To clean up the PDB, drop it.

- Delete the OSM instance and the database instance specification files.

- Delete the secrets:

      $OSM_CNTK/scripts/manage-instance-credentials.sh -p project -i instance \
        delete \
        osmldap,osmdb,rcudb,wlsadmin,opssWP,wlsRTE

- Delete any additional custom secrets using kubectl.

Streamlining these processes, and identifying when to omit certain activities and where other activities must be repeated, can be challenging. For instance, dropping the OSM RCU schema is independent of deleting an instance; the two happen through different script invocations. While the life-cycle of the OSM instance and the PDB should be aligned, there are also use cases where the business data in a PDB (cartridges or orders) is required for re-use by a different OSM instance. For details on specific use cases, see "Reusing the Database State".

Securing Operations in Kubernetes Cluster

This section describes how to secure the operations of OSM cloud native users in a Kubernetes cluster. A well-organized deployment of OSM cloud native ensures that individual users have specific privileges that are limited to the requirements for their approved actions. The Kubernetes objects concerned are service accounts and RBAC objects.

All OSM cloud native users fall into the following three categories:

- Infrastructure Administrator

- Project Administrator

- OSM User

Infrastructure Administrator

Infrastructure Administrators perform the following operations:

- Install WebLogic Kubernetes Operator in its own namespace

- Create a project for OSM cloud native and configure it

- After creating a new project, run the register-namespace.sh script provided with the OSM cloud native toolkit

- Before deleting an OSM cloud native project, run the unregister-namespace.sh script

- Delete an OSM cloud native project

- Manage the lifecycle of WebLogic Kubernetes Operator (restarting, upgrading, and so on)

Project Administrator

Project Administrators can perform all the tasks related to an instance-level OSM cloud native deployment within a given project. This includes creating, updating, and deleting secrets, OSM cloud native instances, OSM cloud native DB Installer, and so on. A Project Administrator can work on one specific project. However, a given human user may be assigned Project Administrator privileges on more than one project.

OSM User

This class of users corresponds to the users described in the context of traditionally deployed OSM. These users can log into the user interfaces (UI) of OSM and can call the OSM APIs. These users are not Kubernetes users and have no privileges beyond those granted to them within the OSM application. For details about user management, see the OSM Cloud Native System Administrator's Guide and "Setting Up Authentication" in this guide.

About Service Accounts

Installing the WebLogic Kubernetes Operator (WKO) requires the presence of a service account that is set up appropriately. The install-operator.sh script requires a service account called wlsko-ns-sa in the wlsko-ns namespace, where wlsko-ns stands for the name of the namespace in which WKO is installed. For example, if the namespace where WKO is to be installed is called wlsko, then the expected service account is wlsko-sa. If a service account is found with the correct name, the script uses it. Otherwise, the script creates a service account by that name. The WKO pods need to be installed by the Infrastructure Administrator with cluster-admin privileges, but at runtime, they use this service account and its associated privileges as described in the WKO documentation.

The pods that comprise each OSM cloud native instance (including the transient OSM DB Installer pod and the transient WKO Introspector pod) within a given project namespace use the "default" service account in that namespace. This is created at the time of namespace creation, but can be modified by the Infrastructure Administrator later.
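If you prefer to pre-create the WKO service account rather than let install-operator.sh create it, the naming convention can be captured in a small helper, sketched below. The function names are hypothetical. The kubectl get serviceaccount and kubectl create serviceaccount commands are standard kubectl, and running them requires cluster access with sufficient privileges.

```shell
#!/bin/sh
# Sketch only: derive the service account name that install-operator.sh expects.
wko_service_account_name() {
  # Convention from the text: <WKO namespace>-sa
  echo "$1-sa"
}

# Create the service account only if it does not already exist.
# Requires kubectl and access to the cluster; it is defined here but not called.
ensure_wko_service_account() {
  ns="$1"
  sa="$(wko_service_account_name "$ns")"
  kubectl get serviceaccount "$sa" -n "$ns" >/dev/null 2>&1 ||
    kubectl create serviceaccount "$sa" -n "$ns"
}

wko_service_account_name wlsko   # prints: wlsko-sa
```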

RBAC Requirements

The RBAC requirements for the WebLogic Kubernetes Operator are documented in its user guide. The privileges of the Infrastructure Administrator have to include these. In addition, the Infrastructure Administrator must be able to create and delete namespaces, operate on the WebLogic Kubernetes Operator's namespace and also on the Traefik namespace (if Traefik is used as the ingress controller). Depending on the specifics of your Kubernetes cluster and RBAC environment, this may require cluster-admin privileges.

The Project Administrator's RBAC can be much more limited. For a start, it would be limited to only that project's namespace. Further, it would be limited to the set of actions and objects that the instance-related scripts manipulate when run by the Project Administrator. This set of actions and objects is documented in the OSM cloud native toolkit sample located in the samples/rbac directory.

Structuring Permissions Using the RBAC Sample Files

There are many ways to structure permissions within a Kubernetes cluster. There are clustering applications and platforms that add their own management and control of these permissions. Given this, the OSM cloud native toolkit provides a set of RBAC files as a sample. You will have to translate this sample into a configuration that is appropriate for your environment. These samples are in the samples/rbac directory within the toolkit.

The key files are project-admin-role.yaml and project-admin-rolebinding.yaml. These files govern the basic RBAC for a Project Administrator.

Do the following with these files:

- Make a copy of both these files for each particular project, renaming them with the project/namespace name in place of "project". For example, for a project called "biz", these files would be biz-admin-role.yaml and biz-admin-rolebinding.yaml.

- Edit both the files, replacing all occurrences of project with the actual project/namespace name.

  For the project-admin-rolebinding.yaml file, replace the contents of the "subjects" section with the list of users who will act as Project Administrators for this particular project.

  Alternatively, replace the contents with reference to a group that contains all users who will act as Project Administrators for this project.

- Once both files are ready, they can be activated in the Kubernetes cluster by the cluster administrator using kubectl apply -f filename.

It is strongly recommended that these files be version controlled as they form part of the overall OSM cloud native configuration.
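The copy-and-rename step can be scripted, as in the following sketch. It assumes that the sample files use the literal token project as the placeholder, as the steps above describe. The make_project_rbac function and the output directory are hypothetical. The "subjects" section of the role binding still needs to be edited by hand before the files are applied.

```shell
#!/bin/sh
# Sketch only: derive per-project RBAC files from the toolkit samples.
# Assumes the samples use the literal token "project" as the placeholder.
make_project_rbac() {
  project="$1"; src_dir="$2"; out_dir="$3"
  mkdir -p "$out_dir" || return 1
  for kind in role rolebinding; do
    # Replace every occurrence of "project" and write a renamed copy.
    sed "s/project/$project/g" "$src_dir/project-admin-$kind.yaml" \
      > "$out_dir/$project-admin-$kind.yaml" || return 1
  done
}

# Example usage (paths are assumptions):
#   make_project_rbac biz "$OSM_CNTK/samples/rbac" rbac/biz
#   # edit the "subjects" section of rbac/biz/biz-admin-rolebinding.yaml, then:
#   kubectl apply -f rbac/biz/biz-admin-role.yaml
#   kubectl apply -f rbac/biz/biz-admin-rolebinding.yaml
```

Writing the generated files to a directory inside your configuration repository keeps them under version control alongside the project specifications.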

The Project Administrator role specification contains the pods/exec resource. This is required for only one specific scenario: using the OSM DB Installer to deploy a cartridge from the local file system (where the install-osmdb.sh script is being run). This particular resource can be removed, forcing cartridge deployment to happen only from a repository. It is highly recommended to remove this resource for production environments. The resource may be retained for development environments, as it eases the code-build-deploy-test cycle for OSM cartridge development.

In addition to the main Project Administrator role and its binding, the samples contain two additional and optional role-rolebinding sets:

- project-admin-addon-role.yaml and project-admin-addon-rolebinding.yaml: This role is per project and is an optional adjunct to the main Project Administrator role. It contains authorization for resources and actions in the project namespace that are not required by the toolkit, but might be of some use to the Project Administrator for debugging purposes.

- wko-read-role.yaml and wko-read-rolebinding.yaml: This role is available in the WebLogic Kubernetes Operator's namespace, and is an optional add-on to the Project Administrator's capabilities. It lets the user list the WKO pods and view their logs, which can be useful to debug issues related to instance startup and upgrade failures. This is suitable only for sandbox or development environments. It is strongly recommended that, even in these environments, WKO logs be exposed via a logs toolchain. The WebLogic Kubernetes Operator's Helm chart comes with the capability to interface with an ELK stack. For details, see https://oracle.github.io/weblogic-kubernetes-operator/userguide/managing-operators/#optional-elastic-stack-elasticsearch-logstash-and-kibana-integration.