8 Exploring Configuration Options

The OSM cloud native toolkit gives you options to set up your configuration based on your requirements. This chapter describes the configurations you can explore, allowing you to decide how best to configure your OSM cloud native environment to suit your needs.

You can choose configuration options for the following:

- Manage LDAP Providers in WLS via OSM

- Working with Shapes

- Injecting Custom Configuration Files

- Choosing Worker Nodes for Running OSM Cloud Native

- Working with Ingress, Ingress Controller, and External Load Balancer

- Using an Alternate Ingress Controller

- Reusing the Database State

- Setting Up Persistent Storage

- Setting Up Database Optimizer Statistics

- Leveraging Oracle WebLogic Server Active GridLink

- Managing Logs

- Managing OSM Cloud Native Metrics

The sections that follow provide instructions for working with these configuration options.

Manage LDAP Providers in WLS via OSM

- OSM no longer supports managing the LDAP server (such as managing accounts, users, and groups in the LDAP server) through OSM CNTK scripts.

- Out-of-the-box support for the OpenLDAP authentication provider through OSM is deprecated.

- Support for Generic LDAP has been introduced to accommodate all directory services supported by WebLogic that use LDAP, including OpenLDAP.

- OSM supports other directory services through model extensions.

OSM provides two ways to integrate the LDAP service:

- Generic LDAP: It supports many of the LDAP vendors.

- Model Extensions: If a specific LDAP vendor is not compatible with Generic LDAP provider, a custom model extension can be used to configure a vendor-specific LDAP provider.

Secret Creation

ldap-secret-creation

$OSM_CNTK/samples/credentials/manage-osm-ldap-credentials.sh -p project -i instance -c create -l ldap

- After you run the above command, the script prompts for the LDAP host, the domain nodes from which to get users and groups, and the user domain node and password used to access the LDAP server.

- After you provide all the credentials, the script creates the project-instance-ldap-credentials secret, which is used while creating an instance to import users from the LDAP server and assign their respective groups.

Enabling LDAP in Project Specification

<project>.yaml

#External authentication
authentication:
  # When enabled, kubernetes secret "project-instance-ldap-credentials"
  # must exist
  ldap:
    enabled: true

After completing the above configuration, create the OSM cloud native instance. If it is already running, delete it and create it again.

Other LDAP providers listed by WebLogic can be used through model extensions.

- Add the custom model tpl file, which has the configuration for the LDAP provider, to $OSM_CNTK/samples/_custom-domain-model.tpl.

custom-ldapprovider.tpl

{{- if .Values.custom.jms }}

custom-jms-support.74.yaml: |+

{{- include "osm.custom-jms-support" $root | nindent 2 }}

{{- end }}

custom-<ldap-provider>-support.76.yaml: |+

{{- include "osm.custom-ldap-provider-support" $root | nindent 2}}

- Enable the custom flag and add both custom-domain-model.tpl and custom-ldap-provider-support.tpl to wdtFiles in the project specification file.

project.yaml

# Sample WDT extensions can be enabled here. When enabled is true, then

# _custom-domain-model.tpl needs to be un-commented. Custom template files can

# also be added.

custom:

enabled: true

application: false

jdbc: false

jms: false

#wdtFiles: [] # This empty declaration should be removed if adding items here.

wdtFiles:

# - _myWDTFile1.tpl

# - _myWDTFile2.tpl

- _custom-domain-model.tpl

- _custom-ldap-provider-support.tpl

- Create the OSM cloud native instance. If it is already running, delete it and create it again. Here, custom-models-path refers to the directory where the wdtFiles exist.

create-instance

$OSM_CNTK/scripts/create-instance.sh -p project -i instance -s specpath -m custom-models-path

Sample LDAP Provider Model File

If you decide to use a vendor-specific LDAP provider instead of the Generic LDAP provider, a sample model extension for Active Directory is provided at $OSM_CNTK/samples/customExtensions/_custom-active_directory-support.tpl to illustrate the model extension required. Such extensions can be populated with direct values for the fields or by referencing a custom secret. If you reference a custom secret, the secret must contain the referenced fields and must be specified in the custom secrets section of the project specification.

<project>.yaml

#Custom secrets at Project level

# Any custom secrets that can be used within WDT metadata fragments specified

# above. Secrets used in instance specific WDT should be listed in the instance

# specification. More than one secret name can be provided

project:

customSecrets:

#secretNames: {} # This empty declaration should be removed if adding items here.

secretNames:

- ldap-provider-secret

To configure a vendor-specific LDAP provider, refer to the "WebLogic Server MBean Reference Document" for the WebLogic version in your image manifest file, under Configuration MBeans → Security MBeans. Alternatively, use the method described in the section "Displaying Valid Attributes and Child Attributes of a WDT Model".

Generic LDAP also supports OpenLDAP. If you still want to use the OpenLDAP Authentication Provider, follow the steps for "Using Generic LDAP" with minor changes.

To create the secret, replace the ldap value provided with the -l option in the secret creation command with openldap.

openldap-secret-creation

$OSM_CNTK/samples/credentials/manage-osm-ldap-credentials.sh -p project -i instance -c create -l openldap

Enable "openldap" in project specification.

Note:

You cannot enable LDAP and OpenLDAP at the same time.

project.yaml

# When enabled, kubernetes secret "project-instance-openldap-credentials"
# must exist
openldap: # Deprecated
  enabled: true

Working with Shapes

The OSM cloud native toolkit provides the following pre-configured shapes:

- charts/osm/shapes/dev.yaml. This can be used for development, QA and user acceptance testing (UAT) instances.

- charts/osm/shapes/devsmall.yaml. This can be used to reduce CPU requirements for small development instances.

- charts/osm/shapes/prod.yaml. This can be used for production, pre-production, and disaster recovery (DR) instances.

- charts/osm/shapes/prodlarge.yaml. This can be used for production, pre-production and disaster recovery (DR) instances that require more memory for OSM cartridges and order caches.

- charts/osm/shapes/prodsmall.yaml. This can be used to reduce CPU requirements for production, pre-production and disaster recovery (DR) instances. For example, it can be used to deploy a small production cluster with two managed servers when the order rate does not justify two managed servers configured with a prod or prodlarge shape. For production instances, Oracle recommends two or more managed servers. This provides increased resiliency to a single point of failure and can allow order processing to continue while failed managed servers are being recovered.

- charts/osm/shapes/prodxsmall.yaml. This shape is similar to prodsmall with the exception that the CPU resources are scaled down to accommodate instances with lower order workloads.

You can create custom shapes using the pre-configured shapes. See "Creating Custom Shapes" for details.

The pre-defined shapes come in standard sizes, which enables you to plan your Kubernetes cluster resource requirements. They are available in the charts/osm/shapes/ directory inside the toolkit.

The pre-defined shapes specify sizing requirements for the WebLogic pods (admin server and managed server), the microservice pods (OSM Gateway and RTUX), the init containers (such as osm-gateway-init), and the sidecar containers (wme, fluentd).

Based on the values (resource "request" and "limit") defined in the pre-defined shapes (or your custom shape), the Kubernetes scheduler attempts to find space for each pod in the worker nodes of the Kubernetes cluster.

To plan the cluster capacity requirement, consider the following:

- Number of development instances required to be running in parallel: D

- Number of managed servers expected across all the development instances: Md (Md will be equal to D if all the development instances are 1 MS instances)

- Number of OSM gateway microservices across all the development instances: Md (The number of gateway microservices will be always equal to the number of managed servers since we have 1:1 relationship)

- Number of RTUX microservices across all the development instances: D (RTUX microservice is always one per instance)

- Number of Fluentd containers across all the development instances (if enabled): D + Md

- Number of WME containers across all the development instances (unless disabled): D + Md

- Number of production (and production-like) instances required to be running in parallel: P

- Number of managed servers expected across all production instances: Mp

- Number of OSM gateway microservices across all the production instances: Mp

- Number of RTUX microservices across all the production instances: P

- Number of Fluentd containers across all the production instances (if enabled): P + Mp

- Number of WME containers across all the production instances (unless disabled): P + Mp

- Assume use of "dev" and "prod" shapes. The tables shown below are for example only. The real values will always come from the toolkit pre-defined shapes (or your custom shape).

- CPU requirement (CPUs) = D * 1 + Md * 2 + Md * 1 + D * 0.5 + D * 0.5 + Md * 0.5 + D * 0.5 + Md * 0.5 + P * 2 + Mp * 15 + Mp * 6 + P * 2 + P * 0.5 + Mp * 0.5 + P * 0.5 + Mp * 0.5 = D * 2.5 + Md * 4 + P * 5 + Mp * 22

- Memory requirement (GB) = D * 4 + Md * 8 + Md * 2 + D * 1 + D * 1 + Md * 1 + D * 1 + Md * 1 + P * 8 + Mp * 48 + Mp * 12 + P * 4 + P * 1 + Mp * 1 + P * 1 + Mp * 1 = D * 7 + Md * 12 + P * 14 + Mp * 62

Table 8-1 Sample Sizing Requirements of "dev" Shapes

| Component | Kube Request | Kube Limit |

|---|---|---|

| Admin Server | 3 GB RAM, 1 CPU | 4 GB RAM, 1 CPU |

| Managed Server | 8 GB RAM, 2 CPU | 8 GB RAM, 2 CPU |

| OSM Gateway | 0.5 GB RAM, 0.5 CPU | 2 GB RAM, 1 CPU |

| OSM RTUX | 1 GB RAM, 0.5 CPU | 1 GB RAM, 0.5 CPU |

| Fluentd | 1 GB RAM, 0.5 CPU | 1 GB RAM, 0.5 CPU |

| WME | 1 GB RAM, 0.5 CPU | 1 GB RAM, 0.5 CPU |

Table 8-2 Sample Sizing Requirements of "prod" Shapes

| Component | Kube Request | Kube Limit |

|---|---|---|

| Admin Server | 8 GB RAM, 2 CPU | 8 GB RAM, 2 CPU |

| Managed Server | 48 GB RAM, 15 CPU | 48 GB RAM, 15 CPU |

| OSM Gateway | 12 GB RAM, 6 CPU | 12 GB RAM, 6 CPU |

| OSM RTUX | 4 GB RAM, 2 CPU | 4 GB RAM, 2 CPU |

| Fluentd | 1 GB RAM, 0.5 CPU | 1 GB RAM, 0.5 CPU |

| WME | 1 GB RAM, 0.5 CPU | 1 GB RAM, 0.5 CPU |
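The capacity formulas in the planning list can be checked with a short calculation. The following is a minimal Python sketch, not part of the toolkit: it uses the sample "Kube Limit" figures from Tables 8-1 and 8-2 (the figures that the formula totals correspond to), and the function and dictionary names are hypothetical. Real values come from the toolkit shapes or your custom shape.

```python
# Per-pod limits as (CPUs, memory in GB), copied from the sample
# "Kube Limit" columns of Tables 8-1 and 8-2. Illustrative values only.
DEV = {"admin": (1, 4), "ms": (2, 8), "gateway": (1, 2),
       "rtux": (0.5, 1), "fluentd": (0.5, 1), "wme": (0.5, 1)}
PROD = {"admin": (2, 8), "ms": (15, 48), "gateway": (6, 12),
        "rtux": (2, 4), "fluentd": (0.5, 1), "wme": (0.5, 1)}


def shape_totals(shape, instances, managed_servers):
    """Return (cpus, memory_gb) for all instances that use one shape."""
    # Counts follow the planning list: one admin server and one RTUX per
    # instance, one gateway per managed server, and (instances + managed
    # servers) Fluentd and WME containers.
    counts = {
        "admin": instances,
        "ms": managed_servers,
        "gateway": managed_servers,
        "rtux": instances,
        "fluentd": instances + managed_servers,
        "wme": instances + managed_servers,
    }
    cpus = sum(counts[name] * shape[name][0] for name in counts)
    memory_gb = sum(counts[name] * shape[name][1] for name in counts)
    return cpus, memory_gb


def cluster_requirement(d, md, p, mp):
    """Total (cpus, memory_gb) for D dev and P prod instances."""
    dev_cpu, dev_mem = shape_totals(DEV, d, md)
    prod_cpu, prod_mem = shape_totals(PROD, p, mp)
    return dev_cpu + prod_cpu, dev_mem + prod_mem


# Example: 3 single-MS dev instances and 1 prod instance with 2 managed servers.
cpus, memory_gb = cluster_requirement(d=3, md=3, p=1, mp=2)
print(f"CPUs: {cpus}, memory (GB): {memory_gb}")
```

For this example the result agrees with the closed forms D * 2.5 + Md * 4 + P * 5 + Mp * 22 and D * 7 + Md * 12 + P * 14 + Mp * 62. The total is only a lower bound on cluster size, because each pod must also fit within a single worker node.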

Note:

The production managed servers take their memory and CPU in large chunks. The Kubernetes scheduler requires the capacity of each pod to be satisfied within a single worker node and does not schedule the pod if that capacity is fragmented across worker nodes.

The shapes are pre-tuned for generic development and production environments. You can create an OSM instance with any of these shapes by specifying the preferred one in the instance specification.

# Name of the shape. The OSM cloud native shapes are devsmall, dev, prodsmall, prod, and prodlarge.
# Alternatively, custom shape name can be specified (as the filename without the extension)

Init and Sidecar Containers Resourcing

# Sets resources to the wme sidecar container when enabled.

wme:

resources:

requests:

cpu: "500m"

memory: "1G"

limits:

cpu: "500m"

memory: "1G"

Creating Custom Shapes

You create custom shapes by copying the provided shapes and then specifying the desired tuning parameters. Do not edit the values in the shapes provided with the toolkit. A custom shape can tailor tuning parameters such as the following:

- The number of threads allocated to OSM work managers

- OSM connection pool parameters

- Order cache sizes and inactivity timeouts

To create a custom shape:

- Copy one of the pre-configured shapes and save it to your source repository.

- Rename the shape and update the tuning parameters as required.

- In the instance specification, specify the name of the shape you copied and renamed:

shape: custom

- Create the domain, ensuring that the location of your custom shape is included in the colon-separated list of directories passed with -s.

$OSM_CNTK/scripts/create-instance.sh -p project -i instance -s spec_Path

Note:

While copying a pre-configured shape or editing your custom shape, ensure that you preserve any configuration that has comments indicating that it must not be deleted.

Injecting Custom Configuration Files

Sometimes, a solution cartridge may require access to a file on disk. A common example is reading property files or mapping rules.

A solution may also need to provide configuration files for reference via parameters in the oms-config.xml file for OSM use (for example, for operational order jeopardies and OACC runtime configuration).

- Make a copy of the $OSM_CNTK/samples/customExtensions/custom-file-support.yaml file.

- Edit it so that it contains the contents of the files. See the comments in the file for specific instructions.

- Save it (retaining its name) into the directory where you save all extension files, for example, extension_directory. See "Extending the WebLogic Server Deploy Tooling (WDT) Model" for details.

- Edit your project specification to reference the desired files in the customFiles element:

#customFiles:
# - mountPath: /some/path/1
#   configMapSuffix: "path1"
# - mountPath: /some/other/path/2
#   configMapSuffix: "path2"

When you run create-instance.sh or upgrade-instance.sh, provide the extension_directory in the "-m" command-line argument. In your oms-config.xml file or in your cartridge code, you can refer to these custom files as mountPath/filename, where mountPath comes from your project specification and filename comes from your custom-file-support.yaml contents. For example, if your custom-file-support.yaml file contains a file called properties.txt and you have a mount path of /mycompany/mysolution/config, then you can refer to this file in your cartridge or in the oms-config.xml file as /mycompany/mysolution/config/properties.txt.

- The files created are read-only for OSM and for the cartridge code.

- The mountPath parameter provided in the project specification should point to a new directory location. If the location already exists, all of its existing content is occluded by the files you are injecting.

- Do not provide the same mountPath more than once in a project specification.

- The custom-file-support.yaml file in your extension_directory is part of your configuration-as-code, and must be version controlled as with other extensions and specifications.
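The path rule and the uniqueness constraint on mountPath can be illustrated with a minimal Python sketch. This is not toolkit code: the helper names are hypothetical, and the customFiles entries are assumed to have already been loaded from the project specification into a list of dictionaries.

```python
from pathlib import PurePosixPath


def check_mount_paths(custom_files):
    """Return the mount paths, rejecting any mountPath that appears twice."""
    paths = [entry["mountPath"] for entry in custom_files]
    duplicates = sorted({p for p in paths if paths.count(p) > 1})
    if duplicates:
        raise ValueError(f"mountPath used more than once: {duplicates}")
    return paths


def custom_file_path(mount_path, filename):
    """Build the mountPath/filename path used in oms-config.xml or cartridge code."""
    return str(PurePosixPath(mount_path) / filename)


# Hypothetical customFiles content, mirroring the example in the text.
custom_files = [
    {"mountPath": "/mycompany/mysolution/config", "configMapSuffix": "config"},
]
check_mount_paths(custom_files)
print(custom_file_path(custom_files[0]["mountPath"], "properties.txt"))
# prints /mycompany/mysolution/config/properties.txt
```

A check like this could run in a pipeline before create-instance.sh or upgrade-instance.sh, so that a duplicated mountPath is caught before the specification is applied.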

To modify the contents of a custom file, update your custom-file-support.yaml file in your extension_directory and invoke upgrade-instance.sh. Changes to the contents of the existing files are immediately visible to the OSM pods. However, you may need to perform additional actions in order for these changes to take effect. For example, if you changed a property value in your custom file, that will only be read the next time your cartridge runs the appropriate logic.

- Update the instance specification to set the size to 0 and then run upgrade-instance.sh.

- Update the instance specification to set the size to the initial value and remove the file from your custom-file-support.yaml file.

- Update the customFiles parameter in your project specification and run upgrade-instance.sh.

Choosing Worker Nodes for Running OSM Cloud Native

By default, OSM cloud native has its pods scheduled on all worker nodes in the Kubernetes cluster in which it is installed. However, in some situations, you may want to choose a subset of nodes where pods are scheduled.

- Licensing restrictions: Coherence could be limited to be deployed on specific shapes. Also, there could be a limit on the number of CPUs where Coherence is deployed.

- Non-license restrictions: Limitations on deploying OSM on specific worker nodes per team, for reasons such as capacity management, chargeback, and budget.

# If OSM cloud native instances must be targeted to a subset of worker nodes in the

# Kubernetes cluster, tag those nodes with a label name and value, and choose

# that label+value here.

# key : any node label key

# values : list of values to choose the node.

# If any of the values is found for the above label key, then that

# node is included in the pod scheduling algorithm.

#

# This can be overridden in instance specification if required.

# osmWLSTargetNodes, if defined, restricts all OSM cloud native WebLogic pods and DB

# Installer pods to worker nodes that match the label conditions and for these

# pods, will take precedence over osmcnTargetNodes.

osmWLSTargetNodes: {} # This empty declaration should be removed if adding items here.

#osmWLSTargetNodes:

# nodeLabel:

# example.com/licensed-for-coherence is just an indicative example; any label and its values can be used for choosing nodes.

# key: example.com/licensed-for-coherence

# values:

# - true

# osmcnTargetNodes, if defined, restricts all OSM cloud native pods to worker nodes

# that match the label conditions. This value will be ignored for OSM cloud native

# WebLogic pods and DB Installer pods if osmWLSTargetNodes is also specified.

osmcnTargetNodes: {} # This empty declaration should be removed if adding items here.

#osmcnTargetNodes:

# nodeLabel:

# # example.com/use-for-osm is just an indicative example; any label and its values can be used for choosing nodes.

# key: oracle.com/use-for-osm

# values:

# - true

- There is no restriction on the node label key. Any valid node label can be used.

- There can be multiple valid values for a key.

- You can override this configuration in the instance specification yaml file, if required.
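The precedence between osmWLSTargetNodes and osmcnTargetNodes described in the specification comments can be sketched as follows. This is a simplified Python illustration under stated assumptions, not how the toolkit or the Kubernetes scheduler is implemented: the pod kind names ("weblogic", "db-installer", "osm-gateway") are hypothetical labels, and label values are treated as strings.

```python
# Pod kinds for which osmWLSTargetNodes takes precedence (names are
# hypothetical; the text says WebLogic pods and DB Installer pods).
WLS_POD_KINDS = {"weblogic", "db-installer"}


def effective_node_label(pod_kind, osm_wls_target_nodes=None, osmcn_target_nodes=None):
    """Pick the nodeLabel condition that applies to a given kind of pod."""
    if pod_kind in WLS_POD_KINDS and osm_wls_target_nodes:
        return osm_wls_target_nodes["nodeLabel"]
    if osmcn_target_nodes:
        return osmcn_target_nodes["nodeLabel"]
    return None  # no restriction: any worker node is a candidate


def node_matches(node_labels, node_label):
    """A node qualifies if its value for the key is one of the listed values."""
    if node_label is None:
        return True
    return node_labels.get(node_label["key"]) in node_label["values"]


wls = {"nodeLabel": {"key": "example.com/licensed-for-coherence", "values": ["true"]}}
cn = {"nodeLabel": {"key": "example.com/use-for-osm", "values": ["true"]}}

node = {"example.com/use-for-osm": "true"}
print(node_matches(node, effective_node_label("osm-gateway", wls, cn)))  # True
print(node_matches(node, effective_node_label("weblogic", wls, cn)))     # False
```

In the example, the node carries only the general OSM label, so it is a candidate for microservice pods but not for WebLogic pods, which are restricted by the more specific setting.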

Working with Ingress, Ingress Controller, and External Load Balancer

A Kubernetes ingress is responsible for establishing access to back-end services. However, creating an ingress is not sufficient. An Ingress controller allows for the configurable exposure of back-end services to clients outside the Kubernetes cluster, via edge objects like NodePort services, Load Balancers, and so on. In OSM cloud native, an ingress controller can be selected and configured in the project specification.

OSM cloud native supports annotation-based "generic ingress" creation, which means the use of the standard Kubernetes Ingress API (as opposed to a proprietary ingress Custom Resource Definition), as verified by Kubernetes Conformance tests. The benefit of this is that it works for any Kubernetes certified ingress controller, provided that the ingress controller offers annotations (which are generally proprietary to the ingress controller) required for proper functioning of OSM.

Annotations applied to an Ingress resource allow you to use advanced features (like connection timeout, URL rewrite, retry, additional headers, redirects, sticky cookie services, and so on) and to fine-tune behavior for that Ingress resource. Different Ingress controllers support different annotations. For information about different Ingress controllers, see Kubernetes documentation at: https://kubernetes.io/docs/concepts/services-networking/ingress-controllers/ Review this documentation for your Ingress controller to confirm which annotations are supported.

Any Ingress controller that conforms to the standard Kubernetes ingress API and supports the annotations needed by OSM should work, although Oracle does not certify individual Ingress controllers to confirm this "generic" compatibility.

See the documentation about Ingress NGINX Controller at: https://github.com/kubernetes/ingress-nginx/blob/main/README.md#readme

Also, make sure the Ingress has an annotation to handle large client request bodies, such as large order payloads (during regular processing) or large cartridge par files (while deploying from Design Studio). For example:

nginx.ingress.kubernetes.io/proxy-body-size: "50m"

The configurations required in your project specification are as follows:

# valid values are TRAEFIK, GENERIC, OTHER

ingressController: "GENERIC"

# When ingressController is set to GENERIC, the actual ingress controller might require some annotations to be added to the Kubernetes

# Ingress object. Place annotations that are must-have for such a controller and/or common to all instances here. Instance specific

# annotations can be placed in the instance spec file.

ingress:

# This annotation is required for nginx ingress controller.

annotations:

kubernetes.io/ingress.class: nginx

nginx.ingress.kubernetes.io/proxy-body-size: "50m"

nginx.ingress.kubernetes.io/affinity: 'cookie'

nginx.ingress.kubernetes.io/session-cookie-name: 'sticky'

nginx.ingress.kubernetes.io/affinity-mode: 'persistent'

osmgw:

# This annotation is required for nginx ingress controller for osm gateway.

annotations:

nginx.ingress.kubernetes.io/use-regex: "true"

nginx.ingress.kubernetes.io/rewrite-target: /$1

rtux:

# This annotation is required for nginx ingress controller for rtux.

annotations:

nginx.ingress.kubernetes.io/use-regex: "true"

nginx.ingress.kubernetes.io/rewrite-target: /orchestration-operations/$1

# SSL Configuration

ssl:

incoming: true

ingress:

# These annotations are required if project spec ingressController is "GENERIC" and SSL enabled.

# Different Ingress controller can have implementation specific annotations and can be added here.

# Provided annotations below for nginx and openshift.

# These annotations are required if project spec ingressController is "GENERIC"

# and the actual ingress controller is nginx with wls custom request headers.

annotations:

nginx.ingress.kubernetes.io/configuration-snippet: |

more_clear_input_headers "WL-Proxy-Client-IP" "WL-Proxy-SSL";

more_set_input_headers "X-Forwarded-Proto: https";

more_set_input_headers "WL-Proxy-SSL: true";

nginx.ingress.kubernetes.io/ingress.allow-http: "false"

The nginx controller works by creating an operator in its own "nginx" (or other specified) namespace, and exposing this as a service outside of the Kubernetes cluster (NodePort, LoadBalancer, and so on). To accommodate all types of ingress controllers and exposure options, the instance.yaml file requires inboundGateway.host and inboundGateway.port to be specified.

Populate the values in the instance.yaml before invoking the create-instance.sh command to create an instance:

inboundGateway:

# FQDN (recommended) or IP address of the actual ingress point/loadbalancer

host:

# uncomment and provide if different from default http/https ports

port:

$OSM_CNTK/scripts/create-ingress.sh -p project -i instance -s $SPEC_PATH

$OSM_CNTK/scripts/delete-ingress.sh -p project -i instance

Oracle recommends leveraging the standard Kubernetes ingress API, with any Ingress Controller that supports annotations for the configurations described here.

# valid values are TRAEFIK, GENERIC, OTHER

ingressController: "TRAEFIK"

Using an Alternate Ingress Controller

By default, OSM cloud native supports standard Kubernetes ingress API and provides sample files for integration. If your desired ingress controller does not support one or more configurations via annotations on generic ingress, or you wish to use your ingress controller's CRD instead, you can choose "OTHER".

By choosing this option, OSM cloud native does not create or manage any ingress required for accessing the OSM cloud native services. However, you may choose to create your own ingress objects based on the service and port details mentioned in the tables that follow. The toolkit uses an ingress Helm chart ($OSM_CNTK/samples/charts/ingress-per-domain/templates/generic-ingress.yaml) and scripts for creating the ingress objects. These samples can be used as a reference to make copies and customize as necessary.

The actual ingress controller might require some annotations to be added to the kubernetes ingress object. The toolkit has provided all those annotations in the sample project and instance spec files in the CNTK. These can be used as a reference to make copies and customize as necessary.

#

ingress:

annotations: {} # This empty declaration should be removed if adding items here.

# This annotation is required for nginx ingress controller.

#annotations:

# kubernetes.io/ingress.class: nginx

# nginx.ingress.kubernetes.io/proxy-body-size: "50m"

# nginx.ingress.kubernetes.io/affinity: 'cookie'

# nginx.ingress.kubernetes.io/session-cookie-name: 'sticky'

# nginx.ingress.kubernetes.io/affinity-mode: 'persistent'

For SSL with terminate-at-ingress, refer to the NGINX ingress-specific annotations provided in $OSM_CNTK/samples/instance.yaml. Similar annotations must be added for other ingress controllers. The WebLogic custom request headers "X-Forwarded-Proto: https" and "WL-Proxy-SSL: true" need to be added as annotations.

Ensure that the ingress removes any client-supplied WL-Proxy-SSL header. This protects you from a malicious user sending in a request to appear to WebLogic as secure when it isn't. Add the following annotations in the NGINX ingress configuration to block WL-Proxy headers coming from the client. In the following example, the ingress resource will eliminate the client headers WL-Proxy-Client-IP and WL-Proxy-SSL.

ingress:

# These annotations are required if project spec ingressController is "GENERIC" and SSL enabled.

# Different Ingress controller can have implementation specific annotations and can be added here.

# Provided annotations below for nginx and openshift.

annotations: {} # This empty declaration should be removed if adding items here.

# These annotations are required if project spec ingressController is "GENERIC"

# and the actual ingress controller is nginx with wls custom request headers.

#annotations:

# nginx.ingress.kubernetes.io/configuration-snippet: |

# more_clear_input_headers "WL-Proxy-Client-IP" "WL-Proxy-SSL";

# more_set_input_headers "X-Forwarded-Proto: https";

# more_set_input_headers "WL-Proxy-SSL: true";

# nginx.ingress.kubernetes.io/ingress.allow-http: "false"

The host-based rules and the corresponding back-end Kubernetes service mapping are provided using the clusterName definition, which is the name of the cluster in lowercase. Replace any hyphens with underscores. The default, unless overridden, is c1.

The following table lists the service name and service ports for Ingress rules. All services require ingress session stickiness to be turned on so that once authenticated, all subsequent requests reach the same endpoint. All these services can be addressed within the Kubernetes cluster using the standard Kubernetes DNS for services.

Table 8-3 Service Name and Service Ports for Host-based Ingress Rules

| Rule (host) | Service Name | Service Port | Purpose |

|---|---|---|---|

| instance.project.loadBalancerDomainName | project-instance-cluster-clusterName | 8001 | For access to OSM through UI, XMLAPI, Web Services, and so on. |

| t3.instance.project.loadBalancerDomainName | project-instance-cluster-clusterName | 30303 | OSM T3 Channel access for WLST, JMS, and SAF clients. |

| admin.instance.project.loadBalancerDomainName | project-instance-admin | 7001 | For access to OSM WebLogic Admin Console UI. |
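The naming pattern in Table 8-3 can be expressed as a small helper when you create your own ingress objects. The following Python sketch is illustrative only: the function name is hypothetical, the example project, instance, and domain values are made up, and clusterName is assumed to be passed in already normalized as described above.

```python
def host_based_rules(project, instance, lb_domain, cluster_name="c1"):
    """Return (host, service name, service port) tuples following Table 8-3."""
    base_host = f"{instance}.{project}.{lb_domain}"
    cluster_service = f"{project}-{instance}-cluster-{cluster_name}"
    admin_service = f"{project}-{instance}-admin"
    return [
        (base_host, cluster_service, 8001),            # UI, XMLAPI, Web Services
        (f"t3.{base_host}", cluster_service, 30303),   # T3 channel: WLST, JMS, SAF
        (f"admin.{base_host}", admin_service, 7001),   # WebLogic Admin Console
    ]


# Hypothetical values for illustration.
for host, service, port in host_based_rules("sr", "quick", "osm.org"):
    print(f"{host} -> {service}:{port}")
```

Each tuple corresponds to one host rule in the ingress you create. Session stickiness still has to be configured through your ingress controller's own mechanism.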

The path-based rules and the corresponding back-end Kubernetes service mapping are provided using the following definitions. All these services can be addressed within the Kubernetes cluster using the standard Kubernetes DNS for services.

The following table lists the service name and service ports for Ingress rules:

Table 8-4 Service Name and Service Ports for Path-based Ingress Rules

| Rule (path) | rewrite-target | Service Name | Service Port | Purpose |

|---|---|---|---|---|

| /orchestration/project/instance/tmf-api/(.*) | /$1 | project-instance-osm-gateway | 8080 | For access to OSM TMF REST APIs. |

| /orchestration/project/instance/fallout/(.*) | /$1 | project-instance-osm-gateway | 8080 | For access to OSM Fallout Exception REST APIs. |

| /orchestration/project/instance/orchestration-operations/(.*) | /orchestration-operations/$1 | project-instance-osm-runtime-ux-server | 8080 | For user interface access to instance data. |
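To see what the path rules and rewrite targets in Table 8-4 do to an incoming request, the following Python sketch applies the same regular expressions to a request path. It is a simplified model of regex path matching with a rewrite target, not the behavior of any particular ingress controller, and the function name and example values are hypothetical.

```python
import re


def route(path, project, instance):
    """Return (service name, rewritten path, port) for a request path, or None."""
    prefix = f"/orchestration/{re.escape(project)}/{re.escape(instance)}"
    gateway = f"{project}-{instance}-osm-gateway"
    rtux = f"{project}-{instance}-osm-runtime-ux-server"
    # (path rule, rewrite target, back-end service), as listed in Table 8-4.
    # The table's $1 capture reference is written as \1 in Python.
    rules = [
        (rf"^{prefix}/tmf-api/(.*)$", r"/\1", gateway),
        (rf"^{prefix}/fallout/(.*)$", r"/\1", gateway),
        (rf"^{prefix}/orchestration-operations/(.*)$",
         r"/orchestration-operations/\1", rtux),
    ]
    for pattern, target, service in rules:
        match = re.match(pattern, path)
        if match:
            return service, match.expand(target), 8080
    return None


print(route("/orchestration/sr/quick/tmf-api/some/resource", "sr", "quick"))
# ('sr-quick-osm-gateway', '/some/resource', 8080)
```

The project and instance prefix is stripped before the request reaches the OSM Gateway, while requests to the RTUX service keep the /orchestration-operations/ segment.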

Ingresses need to be created for each of the above rules per the following guidelines:

- Before running create-instance.sh, ingress must be created.

- After running delete-instance.sh, ingress must be deleted.

You can develop your own code to handle your ingress controller or copy the sample ingress-per-domain chart and add additional template files for your ingress controller with a new value for the type.

- The reference sample for creation is: $OSM_CNTK/scripts/create-ingress.sh

- The reference sample for deletion is: $OSM_CNTK/scripts/delete-ingress.sh

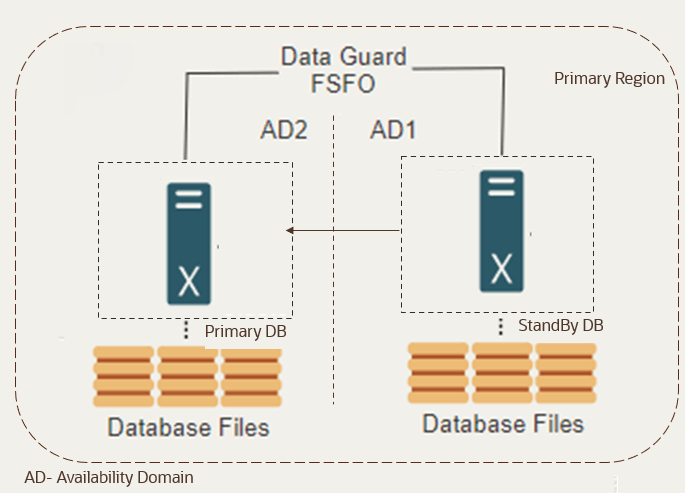

Preconfiguration on Primary and Standby Database

To achieve a highly available database service with automatic failover and role interchange, you must adopt a role-based approach that uses both primary and standby databases. This configuration ensures that the service always connects to the active database. In the event of a failover or switchover, roles are automatically interchanged and the connection is rerouted to the new active database.

Preconfigure the service at the PDB level using the command below. The default service created during PDB creation cannot be used.

srvctl add service -d database_Uniquename -s servicename -pdb pdb_name -l PRIMARY -e SESSION -m BASIC -w 10 -z 10

Example: srvctl add service -d database_name -s service_name -pdb pdbname -l PRIMARY -e SESSION -m BASIC -w failover_delay -z failback_delay

For more information, refer to Appendix F of Oracle Database Clusterware Administration and Deployment Guide.

Note:

The service name must be identical on both servers.

Configuring the OSM Application for High Availability

You can configure OSM to recognize both the primary and standby databases, so all OSM components and microservices interact with these databases and benefit from high availability and data protection.

In the instance specification, add the secondary database details as shown below.

For more information about adding a secondary database in a standalone setup, refer to Planning and Validating Your Cloud Native Environment.

db:

type: "STANDARD"

# datasourcesPrimary section is applicable only for STANDARD DB. For ADB, values will be used from Autonomous Database Serverless secrets+configMap.

datasourcesPrimary:

port: 1521

host: dbserverPrimary-ip

datasourcesSecondary:

port: 1521

host: dbserverSecondary-ip

- Always ensure that there are at least two managed servers when configuring the secondary database in OSM, that is, clusterSize >= 2.

- Standby database configuration in OSM is applicable only for a single-instance database and not for a RAC database.

- db.type should be STANDARD in instance.yaml.

Data Guard Setup on OCI

Oracle Data Guard setup on OCI allows for the configuration of a standby database that automatically syncs with the primary Oracle database. OCI can handle both the set up and monitoring of Data Guard as a service. To set up Oracle Data Guard on OCI, do the following:

- Provision Primary and Standby Databases: Use the OCI console to provision the primary and standby databases, ensuring they are in the same region.

- Enable Data Guard Configuration: In the OCI console, navigate to the database service and configure Data Guard by creating a standby database from the existing primary database.

For more information, refer to the Oracle Cloud Infrastructure documentation and the Oracle Database Data Guard Broker documentation.

Note:

It's recommended that you select the Protection Mode as high availability.

Reusing the Database State

When an OSM instance is deleted, the state of the database remains unaffected, which makes it available for re-use. This is common in the following scenarios:

- When an instance is deleted and the same instance is re-created using the same project and the instance names, the database state is unaffected. For example, consider a performance instance that does not need to be up and running all the time, consuming resources. When it is no longer actively being used, its specification files and PDB can be saved and the instance can be deleted. When it is needed again, the instance can be rebuilt using the saved specifications and the saved PDB. Another common scenario is when developers delete and re-create the same instance multiple times while configuration is being developed and tested.

- When a new instance is created to point to the data of another instance with new project and instance names, the database state is unaffected. A developer who wants to create a development instance with the data from a test instance in order to investigate a reported issue is likely to use their own instance specification and the OSM data from the PDB of the test instance.

- The OSM DB (schema and data)

- The RCU DB (schema and data)

Recreating an Instance

You can re-create an OSM instance with the same project and instance names, pointing to the same database. In this case, both the OSM DB and the RCU DB are re-used, making the sequence of events for instance re-creation relatively straightforward.

- PDB

- The project and instance specification files

Reusing the OSM Schema

To reuse the OSM DB, the secret for the PDB must still exist:

project-instance-database-credentials

This is the osmdb credential in the manage-instance-credentials.sh script.
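The secret names used throughout this section follow a project-instance-suffix convention. As a minimal sketch, the hypothetical helper below (not part of the toolkit) builds the expected secret name for each credential type named in this section, which can help when checking that the secrets for a re-created or cloned instance exist under the right names.

```python
# Suffixes taken from the secret names listed in this section, keyed by the
# credential type passed to manage-instance-credentials.sh.
SECRET_SUFFIXES = {
    "osmdb": "database-credentials",
    "rcudb": "rcudb-credentials",
    "opssWP": "opss-wallet-password-secret",
    "opssWF": "opss-walletfile-secret",
}


def secret_name(project, instance, credential):
    """Return the Kubernetes secret name expected for a credential type."""
    return f"{project}-{instance}-{SECRET_SUFFIXES[credential]}"


for credential in SECRET_SUFFIXES:
    print(credential, "->", secret_name("sr", "quick", credential))
```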

Reusing the RCU

- project-instance-rcudb-credentials. This is the rcudb credential.

- project-instance-opss-wallet-password-secret. This is the opssWP credential.

- project-instance-opss-walletfile-secret. This is the opssWF credential.

If the opssWP and opssWF secrets no longer exist and cannot be re-created from offline data, then drop the RCU schema and re-create it using the OSM DB Installer.

$OSM_CNTK/scripts/create-instance.sh -p sr -i quick -s $SPEC_PATH

Creating a New Instance

If the original instance does not need to be retained, then the original PDB can be re-used directly by a new instance. If, however, the original instance needs to be retained, then you must create a clone of the PDB of the original instance. This section describes using a newly cloned PDB for the new instance.

If possible, ensure that the images specified in the project specification (project.yaml) match the images in the specification files of the original instance.

Reusing the OSM Schema

Create the secret that serves as the osmdb credential in manage-instance-credentials.sh and points to your cloned PDB:

project-instance-database-credentials

If your new instance must reference a newer OSM DB installer image in its specification files than the original instance, it is recommended to invoke an in-place upgrade of the OSM schema before creating the new instance.

# Upgrade the OSM schema to match new instance's specification files

# Do nothing if schema already matches

$OSM_CNTK/scripts/install-osmdb.sh -p project -i instance -s spec_path -c 1

- Create a new RCU

- Reuse RCU

Creating a New RCU

If you only wish to retain the OSM schema data (cartridges and orders), then you can create a new RCU schema.

The following steps provide a consolidated view of RCU creation described in "Managing Configuration as Code".

- project-instance-rcudb-credentials. This is the rcudb credential and describes the new RCU schema you want in the clone.

- project-instance-opss-wallet-password-secret. This is the opssWP credential unique to your new instance.

# Create a fresh RCU DB schema while preserving OSM schema data

$OSM_CNTK/scripts/install-osmdb.sh -p project -i instance -s spec_path -c 7

Reusing the RCU

Using the manage-instance-credentials.sh script, create the following secret using your new project and instance names:

project-instance-rcudb-credentials

The secret should describe the old RCU schema, but with new PDB details.

Reusing RCU Schema Prefix

Over time, if PDBs are cloned multiple times, it may be desirable to avoid the proliferation of defunct RCU schemas by re-using the schema prefix and re-initializing the data. There is no OSM metadata or order data stored in the RCU DB so the data can be safely re-initialized.

project-instance-opss-wallet-password-secret. This is the opssWP credential unique to your new instance.

To re-install the RCU, invoke DB Installer:

$OSM_CNTK/scripts/install-osmdb.sh -p project -i instance -s spec_path -c 5

Reusing RCU Schema and Data

To reuse the full RCU DB from another instance, the original opssWF and opssWP must be copied to the new environment and renamed following the convention: project-instance-opss-wallet-password-secret and project-instance-opss-walletfile-secret.

This directs Fusion Middleware OPSS to access the data using the secrets.

$OSM_CNTK/scripts/create-instance.sh -p project -i instance -s spec_path

Setting Up Persistent Storage

OSM cloud native can be configured to use a Kubernetes Persistent Volume to store data that needs to be retained even after a pod is terminated. This data includes application logs, JFR recordings and DB Installer logs, but does not include any sort of OSM state data. When an instance is re-created, the same persistent volume need not be available. When persistent storage is enabled in the instance specification, these data files, which are written inside a pod are re-directed to the persistent volume.

Data from all instances in a project may be persisted, but each instance does not need a unique location for logging. Data is written to a project-instance folder, so multiple instances can share the same end location without destroying data from other instances.

The final location for this data should be one that is directly visible to the users of OSM cloud native. Development instances may simply direct data to a shared file system for analysis and debugging by cartridge developers. Formal test and production instances, in contrast, may need the data to be scraped by a logging toolchain such as EFK, which can then process the data and make it available in various forms. The recommendation, therefore, is to create a PV-PVC pair for each class of destination within a project. In this example, that means one pair for developers to access and one that feeds into a toolchain.

A PV-PVC pair would be created for each of these "destinations", that multiple instances can then share. A single PVC can be used by multiple OSM domains. The management of the PV and PVC lifecycles is beyond the scope of OSM cloud native.

The OSM cloud native infrastructure administrator is responsible for creating and deleting PVs or for setting up dynamic volume provisioning.

The OSM cloud native project administrator is responsible for creating and deleting PVCs as per the standard documentation in a manner such that they consume the pre-created PVs or trigger the dynamic volume provisioning. The specific technology supporting the PV is also beyond the scope of OSM cloud native. However, samples for PV supported by NFS are provided.

Creating a PV-PVC Pair

The technology supporting the Kubernetes PV-PVC is not dictated by OSM cloud native. Samples have been provided for NFS and can either be used as is, or as a reference for other implementations.

To create a PV-PVC pair supported by NFS:

- Edit the sample PV and PVC yaml files and update entries with enclosing brackets.

Note:

PVCs need to be ReadWriteMany.

vi $OSM_CNTK/samples/nfs/pv.yaml

vi $OSM_CNTK/samples/nfs/pvc.yaml

- Create the Kubernetes PV and PVC.

kubectl create -f $OSM_CNTK/samples/nfs/pv.yaml

kubectl create -f $OSM_CNTK/samples/nfs/pvc.yaml

# The storage volume must specify the PVC to be used for persistent storage.

storageVolume:

  enabled: true

  pvc: storage-pvc

[oracle@localhost project-instance]$ dir

db-installer logs performance

Setting Up Database Optimizer Statistics

As part of the setup of a highly performant database for OSM, it is necessary to set up database optimizer statistics. OSM DB Installer can be used to set up the database partition statistics, which ensures a consistent source of statistics for new partitions so that the database generates optimal execution plans for queries in those partitions.

About the Default Partition Statistics

The OSM DB Installer comes with a set of default partition statistics. These statistics come from an OSM system running a large number of orders (over 400,000) for a cartridge of reasonable complexity. These partition statistics are usable as-is for production.

Setting Up Database Partition Statistics

To use the provided default partition statistics, no additional input, in terms of specification files, secrets or other runtime aspects, is required for the OSM cloud native DB Installer.

The OSM cloud native DB Installer is invoked during the OSM instance creation, to either create or update the OSM schema. The installer is configured to automatically populate the default partition statistics (to all partitions) for a newly created OSM schema when the "prod", "prodsmall", or "prodlarge" (Production) shape is declared in the instance specification. The statistics.loadPartitionStatistics field within these shape files is set to true to enable the loading.

If you want to load partition statistics for a non-production shape, or if you want to reload statistics due to a DB or schema upgrade, run the following command with -c 11 to load the statistics to all existing partitions in the OSM schema:

$OSM_CNTK/scripts/install-osmdb.sh -p project -i instance -s $SPEC_PATH -c 11

Note:

The partition name is specified in the -b parameter as a comma-delimited list of partition names.

$OSM_CNTK/scripts/install-osmdb.sh -p project -i instance -s $SPEC_PATH -b the_newly_created_partition_1,the_newly_created_partition_2 -c 11

$OSM_CNTK/scripts/install-osmdb.sh -p project -i instance -s $SPEC_PATH -a existing_partition_name -b the_newly_created_partition_1,the_newly_created_partition_2 -c 11

Leveraging Oracle WebLogic Server Active GridLink

If you are using a RAC database for your OSM cloud native instance, by default, OSM uses WebLogic Multi-DataSource (MDS) configurations to connect to the database.

If you are licensed to use Oracle WebLogic Server Active GridLink (AGL) separately from your OSM license (consult any additional WebLogic licenses you possess that may apply), you can configure OSM cloud native to use AGL configurations where possible. This will better distribute load across RAC nodes.

db:

  aglLicensed: true

Managing Logs

OSM cloud native generates traditional textual logs. By default, these log files are generated in the managed server pod, but can be re-directed to a Persistent Volume Claim (PVC) supported by the underlying technology that you choose. See "Setting Up Persistent Storage" for details.

# The storage volume must specify the PVC to be used for persistent storage. If enabled, the log, metric and JFR data will be directed here.

storageVolume:

  enabled: true

  pvc: storage-pvc

- The OSM application logs can be found at: pv_directory/project-instance/logs

- The OSM DB Installer logs can be found at: pv_directory/project-instance/db-installer

- The OSM Gateway logs can be found at: pv_directory/project-instance/osm-gateway

- The OSM Runtime UX logs can be found at: pv_directory/project-instance/osm-runtime-ux-server

- Each log file gets rolled over daily and will be retained for 30 days.

- The size of each log file is limited to 10 MB.
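The log locations listed above all share the pv_directory/project-instance prefix. As a minimal sketch, the hypothetical helper below (not part of the toolkit) derives each location from the mount point of the persistent volume, the project name, and the instance name; the mount point `/mnt/osm` in the usage lines is an assumed example value.

```python
from pathlib import PurePosixPath

# Sub-directory used by each log producer, as listed in this section.
LOG_SUBDIRS = {
    "application": "logs",
    "db-installer": "db-installer",
    "gateway": "osm-gateway",
    "runtime-ux": "osm-runtime-ux-server",
}


def log_dir(pv_directory, project, instance, kind):
    """Return the directory on the persistent volume that holds one kind of log."""
    return PurePosixPath(pv_directory) / f"{project}-{instance}" / LOG_SUBDIRS[kind]


for kind in LOG_SUBDIRS:
    print(kind, "->", log_dir("/mnt/osm", "sr", "quick", kind))
```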

Configuring Fluentd Logging

- The WebLogic pods (admin server and managed server) and DB Installer pods log to Fluentd via a sidecar. For the pods covered by a sidecar, you configure Fluentd using Helm charts.

- The microservice pods (OSM Gateway and RTUX) write to container logs. Fluentd has to be configured externally to process these logs.

Configuring Fluentd Logging for OSM Core Pods

OSM supports integration of Fluentd as a sidecar container to read the log entries from WebLogic pods and DB Installer pods. Fluentd can be integrated with ElasticSearch, OpenSearch or other equivalent upstream components and Kibana, OpenSearch Dashboard or other equivalent visualization components.

- Fluentd, ElasticSearch, and Kibana

- Fluentd, OpenSearch and OpenSearch Dashboards

- In the instance.yaml file, enable fluentdLogging.

fluentdLogging:

  enabled: true

  outputType: elasticsearch # Acceptable values are elasticsearch and opensearch, Default is elasticsearch

  outputProtocol: http # Acceptable values are http and https, Default is http

  # image: fluent/fluentd-kubernetes-daemonset:v1.14.5-debian-elasticsearch7-1.1 # default if none specified

  # imagePullPolicy: IfNotPresent

- Example for enabling Fluentd logging with ElasticSearch

fluentdLogging:

  enabled: true

  outputType: elasticsearch

  outputProtocol: http # Protocol of elasticsearch

  # image: fluent/fluentd-kubernetes-daemonset:v1.14.5-debian-elasticsearch7-1.1 # default if none specified

  # imagePullPolicy: IfNotPresent

- Example for enabling Fluentd logging with OpenSearch

fluentdLogging:

  enabled: true

  outputType: opensearch

  outputProtocol: http # Protocol of opensearch

  image: fluent/fluentd-kubernetes-daemonset:v1-debian-opensearch

  # imagePullPolicy: IfNotPresent

Note:

If you are using OpenSearch, then you must provide a Fluentd-OpenSearch image, because the default image is for Fluentd-ElasticSearch. You can use any Fluentd image that is mandated by your organization, or use the Fluentd image from Docker Hub or an equivalent.

- Get the IP address or host name and port details of ElasticSearch, OpenSearch or other equivalent upstream component for Fluentd.

- Create a secret for Fluentd credentials:

$OSM_CNTK/scripts/manage-instance-credentials.sh -p sr -i quick -s $SPEC_PATH create fluentd

Provide 'elasticsearch' credentials for 'sr-quick' ...

Host: host_IP_address

Port: port

Username: username

Password: password

secret/sr-quick-fluentd-credentials configured

- Create or upgrade the OSM instance. Use the Kibana user interface, OpenSearch Dashboard or other equivalent visualization tools to view the resulting logs.

By default, OSM populates some default fields, which you can use to filter the log messages in a visualization tool such as Kibana or OpenSearch Dashboards.

The following table lists some fields for filtering logs.

Table 8-5 Fields for Filtering Logs

| Field | Description | Example |
|---|---|---|
| tag | Retrieves the log events related to a particular log file. The value should be in the following pattern: | |
| -index | Retrieves the log messages related to a particular instance. The value should be in the following pattern: | sr-quick |
| servername | Retrieves the log messages related to a particular server. The value should be in the following pattern: | sr-quick-admin, sr-quick-ms1 |
| level | Retrieves the log messages of specific log level. The value should be in the following pattern: | |
| logger | Retrieves the log messages generated by a class. The value should be the logger name. For example, if you want to see the status of automation plugins, enter oracle.communications.ordermanagement.automation.plugin.AutomationPluginManager | oracle.communications.ordermanagement.automation.plugin.AutomationPluginManager |

Configuring Fluentd Logging for Microservices

For the microservice pods, you must configure Fluentd externally to process the logs. The Fluentd configuration to interpret OSM logs is provided in the cloud native toolkit's samples/fluentd/fluentd.conf sample file. Refer to Fluentd documentation for information about setting up Fluentd and importing this configuration into it.

All OSM microservices logs are written to stdout/stderr and then appear in container logs. You can parse the OSM microservices logs using the tool of your choice, for example Fluentd deployed as a daemonset within your Kubernetes cluster. To interpret the OSM microservices logs, use the log format provided.

All OSM microservices logs are written in the following format:

%date{yyyy-MM-dd HH:mm:ss.SSS} [%t] [%-5level] [%logger] - %msg%n

The log messages start with a date in the yyyy-MM-dd HH:mm:ss.SSS format. This is followed by the thread id and severity, after which the logger and the log message can be seen. Parse all OSM microservices logs using this pattern.
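The layout described above maps directly onto a regular expression. The following is a minimal sketch of a parser for single-line messages, written in Python for illustration only; `LOG_LINE` and `parse_line` are hypothetical names, and lines that do not match the layout (for example, stack trace continuation lines) are returned as `None` rather than merged into the previous message.

```python
import re
from datetime import datetime

# One capture group per field of the documented layout:
# date, [thread], [level], [logger], then " - " and the message.
LOG_LINE = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}) "
    r"\[(?P<thread>[^\]]*)\] "
    r"\[(?P<level>[^\]]*)\] "
    r"\[(?P<logger>[^\]]*)\] - "
    r"(?P<msg>.*)$"
)


def parse_line(line):
    """Split one log line into its fields, or return None if it does not match."""
    match = LOG_LINE.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["date"] = datetime.strptime(record["date"], "%Y-%m-%d %H:%M:%S.%f")
    # The level is padded to five characters in the layout, so strip the padding.
    record["level"] = record["level"].strip()
    return record


sample = (
    "2023-07-14 14:13:02.842 [pool-3-thread-32] [INFO ] "
    "[oracle.comms.ordermanagement.noa.cloudevent.HttpNOAEventProcessor] - "
    "path:productOrderingManagement/v4.0.0.1.0/listener/productOrderStateChangeEvent"
)
record = parse_line(sample)
print(record["level"], record["thread"], record["logger"])
```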

For parsing OSM microservices logs, use Fluentd deployed as a daemonset within your Kubernetes cluster.

The following is an example of the OSM Gateway microservice log:

2023-07-14 14:13:02.842 [pool-3-thread-32] [INFO ] [oracle.comms.ordermanagement.noa.cloudevent.HttpNOAEventProcessor] - path:productOrderingManagement/v4.0.0.1.0/listener/productOrderStateChangeEvent\n

The following is an example of the RTUX microservice log:

2023-07-14 14:13:02.842 [pool-3-thread-32] [INFO ] [oracle.comms.ordermanagement.noa.cloudevent.HttpNOAEventProcessor] - path:productOrderingManagement/v4.0.0.1.0/listener/productOrderStateChangeEvent\n

Note:

Ensure that all OSM microservices logs originating in the same Kubernetes cluster are either in JSON or Text format. Do not generate them in both formats.

Obfuscating Sensitive Data in Logs

You can mask sensitive data (personal information) that is logged to files, the terminal (stdout and stderr), or sent to a log monitor. Sensitive data includes details such as names of persons, addresses, and account numbers.

The masking of sensitive data applies only to the DEBUG log level. Cartridge developers are not expected to expose potentially sensitive data unless they do it via DEBUG logs. OSM itself does not expose potentially sensitive data except in DEBUG logs. OSM masks such exposed sensitive data only in DEBUG logs.

- Cartridge developers have to provide a draft of the Personal Information (PI) regex configuration required to identify sensitive data, as part of the cartridge development process.

- Testers have to monitor log outputs and adjust the PI regex configuration as required to ensure all sensitive information is masked.

- Administrators have to ensure the tested PI regex configuration is added to the instance specification when creating or upgrading an OSM cloud native instance.

Prerequisite Configuration

...

log:

  # handlerLevel filters the logs lower than its level.

  # Here log level TRACE takes a numeric value between 1(highest severity) and 32(lowest severity) e.g. TRACE:1

  handlerLevel: "TRACE:1"

  # This is to optionally control logging level for specific classes. Uncomment to add the entries.

  # 'class' will have full ClassName e.g. com.mslv.oms.poller.EventPoller

  # 'level' should have same possible values as above e.g. TRACE:1

  # Give class as "root" to set level for all classes.

  #loggers:

  # - class:

  #   level:

  loggers:

    - class: "root"

      level: "TRACE:1"

...

The OSM cloud native loggers.level property TRACE is equivalent to DEBUG.

...

osm-gateway:

  log:

    level: FINE

osmRuntimeUX:

  log:

    level: FINE

...

The osm-gateway and osmRuntimeUX log.level properties determine the logging level of the logs and must be set to FINE to enable log masking. FINE is equivalent to DEBUG.

Configuring Log Masking

Sensitive data is identified and masked based on Java regular expression (regex) patterns defined in the instance specification as a list of entries under "logMaskingCustomRegexes". Each entry describes one item of PI data and how to recognize it using a regex. Any entries provided here are added to predefined entries. To see the full list of predefined entries for your version of the toolkit, review the contents of the charts/osm/templates/osm-gdpr-regex-json.yaml file in the toolkit. Predefined entries include patterns for email addresses and for phone numbers contained in an element called "phoneNumber".

Once PI data is identified in a log message using one of these regexes, the masking is done by substituting the sensitive data with a string of 4 stars "****".

Custom Regex Patterns

Depending on the logs emitted by the cartridge code, additional regex patterns can be configured to mask PI data that may become exposed. This is done by adding entries to the "logMaskingCustomRegexes" element in the instance specification:

...

logMaskingCustomRegexes:

  - description: "account number"

    type: partial

    regex: "\"(?i)accountNumber\"\\s*:\\s*\"(.*?)\""

  - description: "ssn"

    type: exact

    regex: "\\d{3}-\\d{2}-\\d{4}"

...

- description is a human readable description of the field targeted by this regex entry.

- type is the type of the regex pattern. Possible values are either partial or exact.

- regex is the Java regex pattern for the value to be recognized.

Partial Regex Patterns

Partial regexes provide a pattern that matches only some part of the target field's values. These regex patterns are applied to XML and JSON documents or fragments in log messages. The regex pattern should begin with the field name in it and encompass the rest of the value, using wildcards as necessary. If this regex matches an XML or JSON line in a log message, the value part of the matching field is masked.
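The effect of a partial pattern can be approximated outside OSM to try out a regex before adding it to the instance specification. The following is a minimal sketch in Python, not the OSM implementation: `mask_partial` is a hypothetical helper, and the pattern is an adaptation of the Java regex for a field called "accountNumber", with the inline `(?i)` flag replaced by `re.IGNORECASE` because recent Python versions reject inline global flags that are not at the start of the expression. Java and Python regex dialects differ in other details as well, so the final pattern should still be verified against OSM itself.

```python
import re

# Python adaptation of the Java pattern "\"(?i)accountNumber\"\\s*:\\s*\"(.*?)\"".
PARTIAL_ACCOUNT = re.compile(r'"accountNumber"\s*:\s*"(.*?)"', re.IGNORECASE)


def mask_partial(text, pattern=PARTIAL_ACCOUNT, mask="****"):
    """Replace only the captured value (group 1) of each match with the mask."""

    def _mask_value(match):
        whole = match.group(0)
        # Offsets of the captured value relative to the start of the match.
        start = match.start(1) - match.start(0)
        end = match.end(1) - match.start(0)
        return whole[:start] + mask + whole[end:]

    return pattern.sub(_mask_value, text)


print(mask_partial('"accountNumber": "1234321",'))
```

The field name stays visible and only the value is replaced, which is the behavior described for partial patterns.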

For example, the following entry masks the account number contained in a JSON field called "accountNumber":

...

logMaskingCustomRegexes:

  - description: "account number"

    type: partial

    regex: "\"(?i)accountNumber\"\\s*:\\s*\"(.*?)\""

...

The following block shows a sample log message as generated by the cartridge:

...

"ownerAccount": {

  "accountNumber": "1234321",

  "id": "0CX-1XYHGQ",

  "@type": "AccountRef",

}

...

The following block shows a sample output log message:

...

"ownerAccount": {

  "accountNumber": "****",

  "id": "0CX-1XYHGQ",

  "@type": "AccountRef",

}

...

Exact Regex Patterns

Exact regex patterns provide a complete match mechanism to identify the PI data to mask. OSM looks through all log messages (not just XML and JSON portions) for such regex matches.

Everything in the log message that matches an exact regex pattern will be masked. Field names or other situating strings cannot be part of an exact regex pattern as otherwise, they too will get masked.

For example, given the below exact regex pattern, OSM will look for any string composed of digits in the format xxx-xx-xxxx in any log message. If found, that string is masked. To illustrate the scope of application of exact regex patterns, in the example below, even though the intention was to mask US Social Security Numbers, the masking feature will apply suppression to any string or sub-string that has digits in the format xxx-xx-xxxx.
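The breadth of an exact pattern is easy to underestimate. The following is a minimal sketch in Python, not the OSM implementation, that applies the xxx-xx-xxxx digit pattern to arbitrary text; `mask_exact` is a hypothetical helper, and the pattern happens to be valid in both Java and Python.

```python
import re

# Same pattern as the "\\d{3}-\\d{2}-\\d{4}" entry, without YAML escaping.
EXACT_SSN = re.compile(r"\d{3}-\d{2}-\d{4}")


def mask_exact(text, pattern=EXACT_SSN, mask="****"):
    """Replace every full match of the pattern, wherever it occurs in the text."""
    return pattern.sub(mask, text)


print(mask_exact('"US Social Security Number": "232-45-3434",'))
print(mask_exact('"orderReference": "987-87-8765"'))
```

Both values are masked, although only the first one is a Social Security Number, because an exact pattern carries no information about the field it appears in.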

The following block shows the Exact regex pattern in the instance specification:

...

logMaskingCustomRegexes:

  - description: "US Social Security Number"

    type: exact

    regex: "\\d{3}-\\d{2}-\\d{4}"

...

The following block shows a sample log as generated by a cartridge:

...

"US Social Security Number": "232-45-3434",

"orderReference": "987-87-8765"

...

The following block shows a sample output log message:

...

"US Social Security Number": "****",

"orderReference": "****"

...

Configuring Logging and Log Rotation

OSM cloud native provides a way to configure Oracle Diagnostic Logging (ODL) logging level to debug logs in an efficient manner.

This configuration is defined via the instance specification as follows:

# The valid log levels in descending order are:

# INCIDENT_ERROR (highest value)

# ERROR

# WARNING

# NOTIFICATION

# TRACE (lowest value)

# Each log level also takes a numeric value between 1(highest severity) and 32(lowest severity) e.g. ERROR:1

log: [] # This empty declaration should be removed if adding items here.

#log:

# handlerLevel filters the logs lower than its level.

# Set the handlerLevel lower or equal to class level.

# handlerLevel: ""

# This is to optionally control logging level for specific classes. Uncomment to add the entries.

# 'class' will have full ClassName e.g. com.mslv.oms.poller.EventPoller

# 'level' will have same possible values as above e.g. ERROR:1

# Give class as "root" to set level for all classes.

# loggers:

# - class:

#   level:

Valid ODL Log Levels

For ODL log levels, refer to the "About Log Severity Levels" section in OSM Cloud Native System Administrator's Guide. Each message type can also take a numeric value between 1 (highest severity) and 32 (lowest severity) that you can use to further restrict log output (for example ERROR:1).

When you specify a level, ODL returns all log messages of that type, as well as the messages that have a higher severity. For example, if you set the level to WARNING, ODL also returns log messages of type INCIDENT_ERROR and ERROR.
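The threshold behavior described above can be sketched as follows. This is an illustration only, written in Python: `visible_levels` is a hypothetical helper, the level order is taken from the comment block in the instance specification, and the numeric suffix (such as `:1`) is parsed off but deliberately ignored.

```python
# Message types from highest to lowest severity, as listed in the specification.
ODL_LEVELS = ["INCIDENT_ERROR", "ERROR", "WARNING", "NOTIFICATION", "TRACE"]


def visible_levels(configured):
    """Return the message types that are logged for a configured level.

    A value such as "ERROR:1" is accepted; the numeric part is not modeled here.
    """
    name = configured.split(":", 1)[0]
    return ODL_LEVELS[: ODL_LEVELS.index(name) + 1]


print(visible_levels("WARNING"))
```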

Configure ODL Handlers Logging Level

To configure the logging level for the ODL handlers (odl-handler, console-handler and wls-domain), set log.handlerLevel to an appropriate value (for example, ERROR:1). An empty value applies the default setting (WARNING) to the handlers.

Configure Logging Level for Specific Class

To enable logging for a specific class or package, log.loggers[] can also be configured as follows. It can have multiple entries for different classes.

The log level for class should be of equal or higher level compared to log handlerLevel. Logs of lower level than the handlerLevel do not appear in the logs.

log:

  handlerLevel: ""

  loggers:

    - class: "root"

      level: "NOTIFICATION:1"

    - class: "com.mslv.oms.poller.EventPoller"

      level: "ERROR:1"

Log Files Rotation

OSM pods use ephemeral storage to store log files, GC logs, and JFR data. All of these have to be managed so that worker nodes do not fail because they run out of ephemeral/container storage. For all the logs, GC logs, and JFR data, OSM cloud native provides log rotation and retention mechanisms to put an upper limit on the space they take. These are defined via specifications as described in the following table:

Table 8-6 Log Files Rotation in Specification Files

| Data | Specification | Example | Log Location: PVC Enabled | Log Location: PVC Disabled |
|---|---|---|---|---|
| OSM logs | Shape specification | Dev shape has file count 7 and size 500k | Admin server: /logMount/${DOMAIN_ID}/logs/admin.log; Managed server: /logMount/${DOMAIN_ID}/logs/ms1.log | Admin server: /u01/oracle/user_projects/domains/domain/servers/admin/logs/admin.log; Managed server: /u01/oracle/user_projects/domains/domain/servers/ms1/logs/ms1.log |
| GC logs | Shape specification | Dev shape has file count 7 and size 500k | Admin server: /logMount/$(DOMAIN_UID)/logs/admin-gc-%t.log; Managed server: /logMount/$(DOMAIN_UID)/logs/gc-$(SERVER_NAME)-%t.log | Admin server: /u01/oracle/user_projects/domains/domain/gc-$(SERVER_NAME)-%t.log; Managed server: /u01/oracle/user_projects/domains/domain/gc-$(SERVER_NAME)-%t.log |
| JFR data | Instance specification | Default maximum age is 4 hours, and the maximum size is 100 MB | /logMount/$(DOMAIN_UID)/performance/$(SERVER_NAME) | /logMount/$(DOMAIN_UID)/performance/$(SERVER_NAME)/ |

Managing OSM Cloud Native Metrics

All managed server pods running OSM cloud native carry annotations added by WebLogic Operator and an additional annotation by OSM cloud native.

osmcn.metricspath: /OrderManagement/metrics

osmcn.metricsport: 8001

prometheus.io/scrape: true

By default, the OSM Gateway pod and the RTUX pod expose metrics for Prometheus (or any other compliant tool) to scrape. This is controlled by the instance specification as follows:

# # Prometheus monitoring is enabled by default.

# prometheus:

#   enabled: true

To disable this behavior, set prometheus.enabled to false.

Configuring Prometheus for OSM Cloud Native Metrics

For OSM cloud native metrics, configure the scrape job in Prometheus as follows:

Note:

During the installation, the OSM installer creates a user who is authorized to view OSM and WebLogic Server metrics.

- job_name: 'osmcn'

  # HTTP basic authentication information

  basic_auth:

    username: oms-metrics

    password: password

  kubernetes_sd_configs:

    - role: pod

  relabel_configs:

    - source_labels: ['__meta_kubernetes_pod_annotationpresent_osmcn_metricspath']

      action: 'keep'

      regex: 'true'

    - source_labels: [__meta_kubernetes_pod_annotation_osmcn_metricspath]

      action: replace

      target_label: __metrics_path__

      regex: (.+)

    - source_labels: ['__meta_kubernetes_pod_annotation_prometheus_io_scrape']

      action: 'drop'

      regex: 'false'

    - source_labels: [__address__, __meta_kubernetes_pod_annotation_osmcn_metricsport]

      action: replace

      regex: ([^:]+)(?::\d+)?;(\d+)

      replacement: $1:$2

      target_label: __address__

    - action: labelmap

      regex: __meta_kubernetes_pod_label_(.+)

    - source_labels: [__meta_kubernetes_pod_name]

      action: replace

      target_label: pod_name

    - source_labels: [__meta_kubernetes_namespace]

      action: replace

      target_label: namespace

- job_name: osmgateway

  oauth2:

    client_id: client-id

    client_secret: client-secret

    scopes:

      - scope

    token_url: OIDC_token_URL

  kubernetes_sd_configs:

    - role: pod

  relabel_configs:

    - source_labels:

        - __meta_kubernetes_pod_annotation_prometheus_io_scrape

      action: keep

      regex: true

    - source_labels:

        - __meta_kubernetes_pod_label_app

      action: keep

      regex: (^.+osm-gateway$)

    - source_labels:

        - __meta_kubernetes_pod_container_port_number

      action: keep

      regex: (8080)

    - source_labels:

        - __meta_kubernetes_pod_annotation_prometheus_io_path

      action: replace

      target_label: __metrics_path__

      regex: (.+)

    - source_labels:

        - __address__

        - __meta_kubernetes_pod_annotation_prometheus_io_port

      action: replace

      regex: '([^:]+)(?::\d+)?;(\d+)'

      replacement: '$1:$2'

      target_label: __address__

    - action: labelmap

      regex: __meta_kubernetes_pod_label_(.+)

    - source_labels:

        - __meta_kubernetes_namespace

      action: replace

      target_label: kubernetes_namespace

    - source_labels:

        - __meta_kubernetes_pod_name

      action: replace

      target_label: kubernetes_pod_name

Note:

OSM cloud native has been tested with Prometheus and Grafana installed and configured using the Helm chart prometheus-community/kube-prometheus-stack available at: https://prometheus-community.github.io/helm-charts. Use Prometheus chart version v14.1.2.0+.

The endpoint for the OSM Gateway and RTUX microservices metrics is secured using OAuth2 credentials. Hence, you must configure the scrape jobs for these pods with the same OAuth2 credentials.
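Both scrape jobs above rewrite `__address__` with the same relabel rule. The following is a minimal sketch, in Python, of what that rule does to a target address; `rewrite_address` is a hypothetical helper written for illustration, and it relies on two Prometheus behaviors: source label values are joined with `;` by default, and the relabel regex must match the whole joined string.

```python
import re

# Same expression as the relabel rule: host, optional existing port, ";", new port.
ADDRESS_RULE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def rewrite_address(address, metrics_port):
    """Mimic the 'replace' rule that points __address__ at the metrics port."""
    joined = f"{address};{metrics_port}"
    match = ADDRESS_RULE.fullmatch(joined)
    if match is None:
        # As with a non-matching 'replace' rule, the address is left unchanged.
        return address
    return f"{match.group(1)}:{match.group(2)}"


print(rewrite_address("10.244.1.5:8080", "8001"))
print(rewrite_address("10.244.1.5", "8001"))
```

The IP addresses and ports in the usage lines are example values only. When the port annotation is missing, the joined string does not match and the discovered address is kept as is.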

Viewing Metrics Without Using Prometheus

- Find the internal IP address of the pod by running the following command:

kubectl get pod pod-name -n namespace -o yaml

- Log in to any of the worker nodes of the Kubernetes cluster.

- Do the following:

  - For microservices, curl to http://pod_ip:port/metrics using the OAuth2 token.

  - For OSM, curl to http://pod_ip:port/OrderManagement/metrics using basic authorization. Access OSM metrics either as the oms-metrics user or, if you are using another user, make sure that the role omsMetricsGroup is assigned to that user.

For more details, see Kubernetes documentation at: https://kubernetes.io/docs/tutorials/services/connect-applications-service/#exposing-pods-to-the-cluster.
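As an alternative to curl, the OSM metrics request can be prepared with the Python standard library. The following is a minimal sketch: `osm_metrics_request` is a hypothetical helper, and the IP address, port, and password in the usage lines are placeholder values. Building the request does not contact the pod; only `urlopen` does, and it must be run from a host that can reach the pod IP address, such as a worker node.

```python
import base64
import urllib.request


def osm_metrics_request(pod_ip, port, username, password):
    """Build a request for the OSM metrics endpoint using HTTP basic authorization."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"http://{pod_ip}:{port}/OrderManagement/metrics",
        headers={"Authorization": f"Basic {token}"},
    )


request = osm_metrics_request("10.244.1.5", 8001, "oms-metrics", "example-password")
print(request.full_url)
# To fetch the metrics from a worker node:
# body = urllib.request.urlopen(request, timeout=10).read().decode("utf-8")
```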

Viewing OSM Cloud Native Metrics in Grafana

OSM cloud native metrics scraped by Prometheus can be made available for further processing and visualization. The OSM SDK comes with sample Grafana dashboards to get you started with visualizations.

Import the dashboard JSON files from the location SDK/Samples/Grafana into your Grafana environment.

- OSM by Instance: Provides a view of OSM cloud native metrics for one or more instances in the selected project namespace.

- OSM by Server: Provides a view of OSM cloud native metrics for one or more managed servers for a given instance in the selected project namespace.

- OSM by Order Type: Provides a view of OSM cloud native metrics for one or more order types for a given cartridge version in the selected instance and project namespace.

- OSM and WebLogic by Server: Provides a view of OSM cloud native metrics and WebLogic Monitoring Exporter metrics for one or more managed servers for a given instance in the selected project namespace.

- TMF and SI Messaging by Instance: Provides a view of the TMF API and System Interaction messaging metrics for one or more instances in the selected project namespace.

These are provided as samples. You can import them as-is into a Grafana environment. They can also be used as a pattern to create a set of dashboards for specific requirements.

You can use the following global filters in the sample dashboard:

- Project: Displays the available projects and the default. This filter does not support multi-selection.

- Instance: Displays the filter value based on the selected project.

- Pod: Displays the filter value based on the selected instance.

- API Category: Displays the filter value based on the selected pod.

- API: Displays the API names such as serviceOrdering.

- API Version: Displays the API version based on the selected API.

- Target System: Displays the target system based on the selected API and version.

- Message Status: Displays the status of request or events.

You can use the following detail panels in the sample dashboard:

- Incoming REST Requests per Hour: Displays the rate at which the incoming REST calls are processed per hour. This reflects the incoming traffic to the OSM Gateway. This detail panel displays the consolidated view of successful and failed requests. It also displays the failed request rate per hour. You can use this to check for bottlenecks during the processing of a REST request.

- Outgoing REST Requests per Hour: Displays the rate at which the outgoing messages are processed per hour. This reflects the outgoing traffic from the OSM Gateway. This detail panel displays the consolidated view of successful and failed outgoing REST requests. It also displays the failed outgoing REST request rate per hour. Any spike in failed REST request rate indicates an issue in delivering the events to the target system.

- Average Incoming REST Request Duration: Displays the average time taken for processing the incoming REST request to OSM. It reflects the efficiency of request processing of OSM.

- CPU Load: Displays the current usage of CPU.

- Heap Usage: Displays the amount of heap space used out of the available heap space.

Exposed OSM Order Metrics

The following OSM metrics are exposed via Prometheus APIs.

Note:

- All metrics are per managed server. Prometheus Query Language can be used to combine or aggregate metrics across all managed servers.

- All metric values are short-lived and indicate the number of orders (or tasks) in a particular state since the managed server was last restarted.

- When a managed server restarts, all the metrics are reset to 0. The metrics therefore do not represent exact totals. Exact values can be queried via OSM APIs such as Web Services and XML API.
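As an illustration of the notes above, the following Python sketch builds Prometheus Query Language expressions that aggregate a metric across managed servers, and the URL for the Prometheus HTTP API instant query endpoint. The helper names and the Prometheus address are hypothetical. Wrapping a counter in increase() is one common way to tolerate the resets caused by managed server restarts. It is shown here as an option, not as the method used by the sample dashboards.

```python
from urllib.parse import urlencode


def aggregate_query(metric, by_labels, window=None):
    """Return a PromQL expression that sums a metric across managed servers.

    When a window such as "1h" is given, the metric is wrapped in increase(),
    which compensates for counter resets within that window.
    """
    inner = f"increase({metric}[{window}])" if window else metric
    return f"sum by ({', '.join(by_labels)}) ({inner})"


def instant_query_url(prometheus_base_url, promql):
    """Build the URL for the Prometheus HTTP API instant query endpoint."""
    base = prometheus_base_url.rstrip("/")
    return f"{base}/api/v1/query?{urlencode({'query': promql})}"


if __name__ == "__main__":
    # Orders completed in the last hour, per order type, across all managed servers.
    query = aggregate_query("osm_orders_completed", ["namespace", "order_type"], window="1h")
    print(query)
    # http://localhost:9090 is a placeholder for your Prometheus server address.
    print(instant_query_url("http://localhost:9090", query))
```

For a gauge such as osm_orders_in_progress, omit the window so that the current values are summed directly.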

Order Metrics

The following table lists order metrics exposed via Prometheus APIs.

Table 8-7 Order Metrics Exposed via Prometheus APIs

| Name | Type | Help Text | Notes |

|---|---|---|---|

| osm_orders_created | Counter | Counter for the number of orders in the Created state. | N/A |

| osm_orders_completed | Counter | Counter for the number of orders in the Completed state. | N/A |

| osm_orders_failed | Counter | Counter for the number of orders in the Failed state. | N/A |

| osm_orders_cancelled | Counter | Counter for the number of orders in the Canceled state. | N/A |

| osm_orders_aborted | Counter | Counter for the number of orders in the Aborted state. | N/A |

| osm_orders_in_progress | Gauge | Gauge for the number of orders currently in the In Progress state. | N/A |

| osm_order_items | Histogram | Histogram that tracks the number of order items in an order with buckets for 0, 10, 25, 50, 100, 250, 1000, and 5000 order items. | N/A |

| osm_orders_amending | Gauge | Gauge for the number of orders currently in the Amending state. | N/A |

| osm_short_lived_orders | Histogram | Histogram that tracks the duration of all orders in seconds with buckets for 1 second, 3 seconds, 5 seconds, 10 seconds, 1 minute, 3 minutes, 5 minutes, and 15 minutes. Enables focus on short-lived orders. | Buckets for 1 second, 3 seconds, 5 seconds, 10 seconds, 1 minute, 3 minutes, 5 minutes, and 15 minutes. |

| osm_medium_lived_orders | Histogram | Histogram that tracks the duration of all orders in minutes with buckets for 5 minutes, 15 minutes, 1 hour, 12 hours, 1 day, 3 days, 1 week, and 2 weeks. Enables focus on medium-lived orders. | Buckets for 5 minutes, 15 minutes, 1 hour, 12 hours, 1 day, 3 days, 7 days, and 14 days. |

| osm_long_lived_orders | Histogram | Histogram that tracks the duration of all orders in days with buckets for 1 week, 2 weeks, 1 month, 2 months, 3 months, 6 months, 1 year and 2 years. Enables focus on long-lived orders. | Buckets for 7 days, 14 days, 30 days, 60 days, 90 days, 180 days, 365 days, and 730 days. |

| osm_order_cache_entries_total | Gauge | Gauge for the number of entries in the cache of type order, orchestration, historical order, closed order, and redo order. | N/A |

| osm_order_cache_max_entries_total | Gauge | Gauge for the maximum number of entries in the cache of type order, orchestration, historical order, closed order, and redo order. | N/A |
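To show how the duration histograms in Table 8-7 can be read, the following Python sketch estimates a quantile from cumulative bucket counts by linear interpolation inside the matching bucket. This is similar in spirit to the PromQL histogram_quantile() function, which would normally do this work on the Prometheus side. The bucket boundaries are the osm_short_lived_orders boundaries from the table, expressed in seconds. The counts are invented purely for illustration, and the assumption that the buckets are exposed as cumulative counts is not confirmed by this guide.

```python
import math


def histogram_quantile(q, buckets):
    """Estimate the q-quantile (0 <= q <= 1) from cumulative histogram buckets.

    buckets is a list of (upper_bound, cumulative_count) pairs sorted by upper
    bound and ending with the +Inf bucket. Observations are assumed to be
    spread evenly inside each bucket.
    """
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    lower_bound, lower_count = 0.0, 0.0
    for upper_bound, count in buckets:
        if count >= rank:
            if math.isinf(upper_bound):
                # The quantile lies beyond the last finite bucket boundary.
                return lower_bound
            in_bucket = count - lower_count
            if in_bucket == 0:
                return upper_bound
            return lower_bound + (upper_bound - lower_bound) * (rank - lower_count) / in_bucket
        lower_bound, lower_count = upper_bound, count
    return lower_bound


# Boundaries in seconds from Table 8-7; the cumulative counts are hypothetical.
SHORT_LIVED_SAMPLE = [
    (1, 10), (3, 40), (5, 70), (10, 90), (60, 98),
    (180, 100), (300, 100), (900, 100), (math.inf, 100),
]

if __name__ == "__main__":
    print(histogram_quantile(0.5, SHORT_LIVED_SAMPLE))
    print(histogram_quantile(0.95, SHORT_LIVED_SAMPLE))
```

With these invented counts, the median works out to about 3.67 seconds and the 95th percentile to 41.25 seconds. The estimate is only as precise as the bucket widths, which is why the three histograms cover different duration ranges.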

Labels for All Order Metrics

The following table lists labels for all order metrics.

Table 8-8 Labels for All Order Metrics

| Label Name | Sample Value | Notes | Source of the Label |

|---|---|---|---|

| cartridge_name_version | SimpleRabbits_1.7.0.1.0 | Combined Cartridge Name and Version | OSM Metric Label Name/Value |

| order_type | SimpleRabbitsOrder | OSM Order Type | OSM Metric Label Name/Value |

| server_name | ms1 | Name of the Managed Server | OSM Metric Label Name/Value |

| instance | 10.244.0.198:8081 | Indicates the Pod IP and Pod port from which this metric is being scraped. | Prometheus Kubernetes SD |

| job | omscn | Job name in Prometheus configuration which scraped this metric. | Prometheus Kubernetes SD |

| namespace | quick | Project Namespace | Prometheus Kubernetes SD |

| pod_name | quick-sr-ms1 | Name of the Managed Server Pod | Prometheus Kubernetes SD |

| weblogic_clusterName | c1 | OSM Cloud Native WebLogic Cluster Name | WebLogic Operator Pod Label |

| weblogic_clusterRestartVersion | v1 | OSM Cloud Native WebLogic Operator Cluster Restart Version | WebLogic Operator Pod Label |

| weblogic_createdByOperator | true | WebLogic Operator Pod Label to identify operator created pods | WebLogic Operator Pod Label |

| weblogic_domainName | domain | WebLogic Operator Pod Label | WebLogic Operator Pod Label |

| weblogic_domainRestartVersion | v1 | OSM Cloud Native WebLogic Operator Domain Restart Version | WebLogic Operator Pod Label |

| weblogic_domainUID | quick-sr | OSM Cloud Native WebLogic Operator Domain UID | WebLogic Operator Pod Label |

| weblogic_modelInImageDomainZipHash | md5.3d1b561138f3ae3238d67a023771cf45.md5 | Image md5 hash | WebLogic Operator Pod Label |

| weblogic_serverName | ms1 | WebLogic Operator Pod Label for Name of the Managed Server | WebLogic Operator Pod Label |
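The labels in Table 8-8 can be used to narrow a query to a project, instance, or managed server. The following Python sketch builds a PromQL selector with exact-match label filters. The helper names are hypothetical, and the label values are the sample values from the table.

```python
def _escape(value):
    """Escape backslashes and double quotes for use inside a PromQL string."""
    return str(value).replace("\\", "\\\\").replace('"', '\\"')


def selector(metric, **labels):
    """Return a PromQL instant vector selector with exact-match label filters."""
    if not labels:
        return metric
    matchers = ",".join(f'{name}="{_escape(value)}"' for name, value in sorted(labels.items()))
    return f"{metric}{{{matchers}}}"


if __name__ == "__main__":
    # Failed orders for one instance in the project namespace "quick".
    print(selector("osm_orders_failed", namespace="quick", weblogic_domainUID="quick-sr"))
```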

Task Metrics

The following metrics are captured for Manual and Automated task types only. Other task types are not currently captured.

Table 8-9 Task Metrics Captured for Manual or Automated Task Types Only

| Name | Type | Help Text |

|---|---|---|

| osm_tasks_created | Counter | Counter for the number of Tasks Created |

| osm_tasks_completed | Counter | Counter for the number of Tasks Completed |

Labels for All Task Metrics

A task metric has all the labels that an order metric has. In addition, a task metric has two more labels.

Table 8-10 Labels for All Task Metrics

| Label | Sample Value | Notes | Source of Label |

|---|---|---|---|

| task_name | RabbitRunTask | Task Name | OSM Metric Label Name/Value |

| task_type | A | A for Automated, M for Manual | OSM Metric Label Name/Value |

TMF and System Interaction Messaging Metrics

The following table lists the OSM Gateway metrics that are exposed via Prometheus APIs in addition to the standard Helidon metrics.

Table 8-11 TMF and System Interaction Messaging Metrics

| Name | Type | Label Name | Description |

|---|---|---|---|

| application_osmgw_outgoing_messages_pending | Gauge | targetSystem, cn_project, cn_instance, pod_name, api_name, api_version, and spec_usage_type. | Count of outgoing messages waiting to be sent. |

| application_osmgw_incoming_messages | Counter | cn_project, cn_instance, pod_name, api_name, api_version, spec_usage_type, managed_server_names, and status (for example, successful). | Count of incoming messages processed. |

| application_osmgw_outgoing_messages_processed | Counter | targetSystem, cn_project, cn_instance, pod_name, api_name, api_version, spec_usage_type, and status (for example, successful). | Count of outgoing messages processed. |

| application_osmgw_incoming_rest_duration | SimpleTimer | cn_project, cn_instance, pod_name, api_name, api_version, spec_usage_type, managed_server_names, and status (for example, successful). | Cumulative time taken to process incoming REST messages. |

| vendor_cpu_processCpuLoad | Gauge | cn_project, cn_instance, pod_name, and managed_server_name. | Displays the recent CPU usage for the Java Virtual Machine process. |
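Because Table 8-11 exposes both a cumulative processing time and a count of processed messages, an average duration over an interval can be derived by dividing the change in one by the change in the other. The following Python sketch shows that arithmetic on two scrapes. Pairing these two values in this way, and the unit of the result, are assumptions. The function name and the numbers are hypothetical.

```python
def average_duration(elapsed_start, count_start, elapsed_end, count_end):
    """Average time per request between two scrapes of cumulative values.

    elapsed_* are cumulative processing times and count_* are cumulative
    request counts, sampled at the start and end of the interval. Returns
    None when no requests were processed or when a value went backwards,
    for example after a pod restart.
    """
    delta_count = count_end - count_start
    delta_elapsed = elapsed_end - elapsed_start
    if delta_count <= 0 or delta_elapsed < 0:
        return None
    return delta_elapsed / delta_count


if __name__ == "__main__":
    # Hypothetical scrapes: 100 more requests and 30 more time units between them.
    print(average_duration(120.0, 400, 150.0, 500))
```

The result is expressed in whatever time unit the timer reports.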

Labels for TMF and System Interaction Messaging Metrics

The following table lists the labels for TMF and System Interaction Messaging Metrics.

Table 8-12 Labels for TMF and System Interaction Messaging Metrics

| Label Name | Sample Value | Notes | Source of the Label |

|---|---|---|---|

| cn_project | quick | Project Namespace | Gateway Metric Label Name/Value |

| cn_instance | sr | Instance name within the project | Gateway Metric Label Name/Value |

| pod_name | quick-sr-gateway-0 | Name of the OSM Gateway Pod | Gateway Metric Label Name/Value |

| api_name | serviceOrdering | TMF API name | Gateway Metric Label Name/Value |

| api_version | 4.1.0 | TMF API version | Gateway Metric Label Name/Value |

| spec_usage_type | Hosted | Roles of TMF API in OSM: either hosted or SI | Gateway Metric Label Name/Value |

| managed_server_names | ms1 | Name of the Managed Server pinned to Gateway pod | Gateway Metric Label Name/Value |

| status | successful | Status of REST request or SI message | Gateway Metric Label Name/Value |

| targetSystem | Billing | Downstream or upstream system | Gateway Metric Label Name/Value |

Helidon provides the standard Helidon base and vendor metrics for OSM Gateway and RTUX microservices.

The response for the metrics endpoint contains the standard Helidon application and vendor metrics. The following sample shows some of the metrics in the response:

# TYPE base_classloader_loadedClasses_count gauge

# HELP base_classloader_loadedClasses_count Displays the number of classes that are currently loaded in the Java virtual machine.

base_classloader_loadedClasses_count 9667

# TYPE base_classloader_loadedClasses_total counter

# HELP base_classloader_loadedClasses_total Displays the total number of classes that have been loaded since the Java virtual machine has started execution.

base_classloader_loadedClasses_total 9672

# TYPE base_classloader_unloadedClasses_total counter

# HELP base_classloader_unloadedClasses_total Displays the total number of classes unloaded since the Java virtual machine has started execution.

base_classloader_unloadedClasses_total 5

# TYPE base_cpu_availableProcessors gauge