C Leveraging OSM Cloud Native SAF Connectivity Patterns for Your Use-Case

This appendix provides details about leveraging OSM cloud native SAF connectivity patterns for your use-case. The topics below cover the common integration patterns and the hostname resolution that each one requires.

About SAF Connectivity Patterns

The integration of OSM with upstream, downstream and peer systems is an essential part of the role it plays at a service provider. These integrations typically use SAF. While the specific integrations are dependent on the service provider's solution, some common patterns can be laid out for OSM cloud native and are described in the rest of this document. These can be used to plan, capture and communicate the connectivity.

When laying out OSM cloud native integration for a project, Oracle recommends that you carefully review the chapter Integrating OSM. You can then use the common patterns below to identify which one best fits each integration. Prepare an overall integration diagram, or set of diagrams, that use the pattern diagrams here, annotated for the specific solution being implemented. Such an artifact is very useful to control the integration, and drive content into the project and instance specifications. Additionally, if an OSM cloud native integration issue is referred to Oracle Support, the service request will require such an integration diagram to describe your use-case.

The diagrams in this document were prepared using draw.io, leveraging its built-in palette of standard Kubernetes icons (as per https://github.com/kubernetes/community/tree/master/icons).

The draw.io sources for all diagrams are available in the OSM SDK, under the ConnectivityDiagrams folder.

Common Integration Patterns

JMS and SAF messages use the T3 or T3S protocols. While WebLogic itself can process this protocol and perform any necessary routing within its cluster, the components involved in exposing OSM cloud native to the outside world cannot perform T3 or T3S routing. Such components could be Kubernetes-based Ingress Controllers or Load Balancers, or similar reverse-proxy functions. Kubernetes services themselves do not care about the protocol and can handle T3 or T3S.

This means that if OSM cloud native and the other system are in the same Kubernetes cluster, they must use T3 or T3S protocols. The underlying infrastructure supports it, and direct use of these protocols removes any packaging overhead.

However, if the other system is outside OSM cloud native's Kubernetes cluster, JMS and SAF traffic has to use the tunneling feature of WebLogic. For more information about this feature, see "Setting Up WebLogic Server for HTTP Tunneling" in Administering Server Environments for Oracle WebLogic Server. With tunneling, the T3 messages are carried in an http or https envelope and can therefore be routed by Ingress Controllers and Load Balancers. OSM cloud native supports this capability. It is automatically triggered if the JMS or SAF URI begins with "http://" or "https://". The other system needs similar configuration. See the WebLogic documentation for more details.
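The scheme rule above can be illustrated with a short sketch. This is not OSM or WebLogic code. The function name `classify_saf_uri` and the labels "tunneled" and "native" are hypothetical and serve only to show how the URI scheme decides whether tunneling applies.

```python
from urllib.parse import urlsplit

# Schemes that trigger WebLogic HTTP tunneling, per the text above.
TUNNELED_SCHEMES = {"http", "https"}
# Schemes that carry JMS/SAF traffic directly.
NATIVE_SCHEMES = {"t3", "t3s"}


def classify_saf_uri(uri: str) -> str:
    """Return "tunneled" or "native" based only on the URI scheme.

    Hypothetical helper for illustration; it does not contact any server.
    """
    scheme = urlsplit(uri).scheme.lower()
    if scheme in TUNNELED_SCHEMES:
        return "tunneled"
    if scheme in NATIVE_SCHEMES:
        return "native"
    raise ValueError(f"unsupported JMS/SAF URI scheme: {scheme!r}")
```

A check like this can help when reviewing an integration diagram: every URI that crosses the Kubernetes cluster boundary should classify as "tunneled".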

There are two aspects to consider regarding hostname resolution in the context of OSM cloud native integration:

- Accessing an OSM cloud native environment from outside the Kubernetes cluster requires the use of a hostname derived from the instance name and project name. For example, an environment with the project name "com" and instance name "prod" requires the use of the hostname "prod.com.osm.org". Configuration can override the "osm.org" portion as required. Suppose this results in "prod.com.csp.net". All external systems (browsers trying to access OSM UIs, peer systems trying to invoke OSM web services, peer systems wanting to interact via JMS or SAF, etc.) need to use this hostname and have it mapped to the IP address of the Load Balancer or Ingress Controller that exposes OSM cloud native. Ideally, this is done using wildcards in the corporate DNS as described in the OSM Cloud Native Deployment Guide. An alternative is to add this mapping locally to each system (for instance, via /etc/hosts on Linux-based systems).

- Resolving hostnames from within OSM cloud native itself requires the equivalent of /etc/hosts mappings to be injected into the OSM pods if no DNS is configured to resolve the hostnames in question. This is done by specifying the mapping in the instance specification's "hostAliases" list.
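The hostname derivation in the first point can be sketched as follows. The function name `external_hostname` is hypothetical. The default domain "osm.org" and the override "csp.net" come from the example above.

```python
def external_hostname(instance: str, project: str, domain: str = "osm.org") -> str:
    """Build the externally used hostname as instance.project.domain.

    Hypothetical helper: it only formats the name and performs no DNS lookup.
    """
    return f"{instance}.{project}.{domain}"


if __name__ == "__main__":
    # Default domain, then the overridden domain from the example.
    print(external_hostname("prod", "com"))
    print(external_hostname("prod", "com", domain="csp.net"))
```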

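When an external system needs to establish SAF or JMS connectivity with OSM cloud native, the protocol, hostname and port it uses depend on whether it runs in the same Kubernetes cluster, as summarized in Table C-1.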

Table C-1 Remotes Within the Same Kubernetes Cluster and Outside the Kubernetes Cluster

| Setting | Remote in Same Kubernetes Cluster | Remote Outside Kubernetes Cluster |
|---|---|---|
| Protocol | t3 | http or https |
| Hostname | project-instance-cluster-c1.project.svc.cluster.local | instance.project.domainname |
| Port | 31313 | 30303 |

When OSM cloud native needs to talk to an external system, it can do so via T3, http or https, depending on what that external system accepts. Other details, like hostname and port, also depend on that external system.

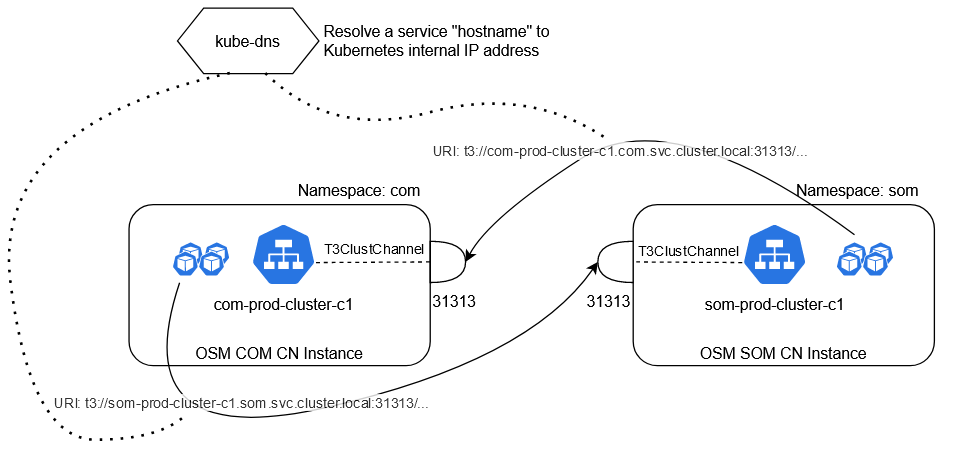

OSM Cloud Native Colocated SAF

This section describes how an OSM cloud native instance can have bidirectional SAF with another WLS based component (WLS Peer Component) which is also cloud native and running in the same Kubernetes cluster. This other component can be another instance of OSM cloud native or an instance of UIM cloud native, for example.

OSM cloud native has a service named project-instance-cluster-c1 for each instance. This service includes a "T3ClustChannel", which allows it to ingest t3 messages and route them to the OSM pods. The channel is exposed at port 31313 with the "hostname" project-instance-cluster-c1.namespace.svc.cluster.local.

The above image, Same Cluster, can be accessed as a draw.io file from the OSM SDK. See the Raw Sources section for more details.

Note that the URIs are both still t3. Since we are in the same Kubernetes cluster, we do not need to involve any ingresses or load balancers. Also note that the URIs take the form t3://service.namespace.svc.cluster.local:31313/...
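As an illustration of the URI form just described, the sketch below assembles the in-cluster address from the project, instance and namespace names. The function name `in_cluster_t3_uri` is hypothetical. The service naming pattern and port 31313 are taken from this section, and the path portion after the port is left out because the text elides it.

```python
def in_cluster_t3_uri(project: str, instance: str, namespace: str, port: int = 31313) -> str:
    """Build the t3 address of an instance's cluster service.

    Hypothetical helper: the service name follows the
    project-instance-cluster-c1 pattern described in this section.
    """
    service = f"{project}-{instance}-cluster-c1"
    return f"t3://{service}.{namespace}.svc.cluster.local:{port}"


if __name__ == "__main__":
    # Project "com", instance "prod", running in namespace "com".
    print(in_cluster_t3_uri("com", "prod", "com"))
```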

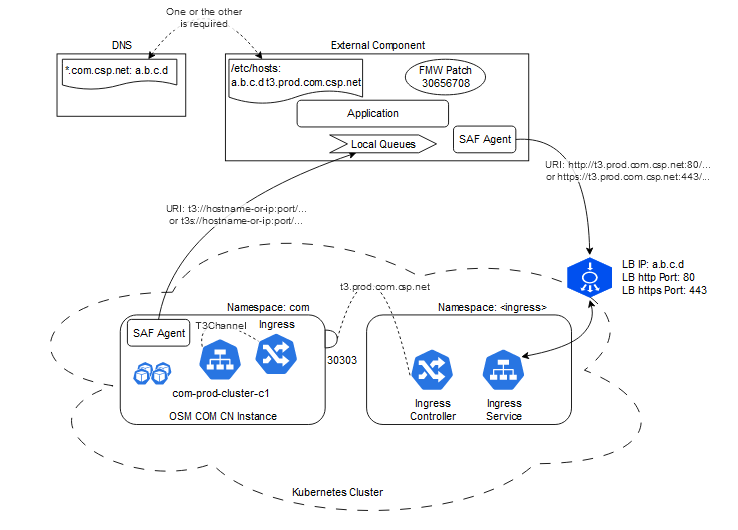

OSM Remote SAF

If the WLS Peer Component is not hosted on the same Kubernetes cluster as OSM cloud native, then the SAF connectivity is done using URIs that reference DNS resolvable hostnames, externally exposed ports and the http/https protocol, as opposed to Kubernetes resolvable hostnames, internally exposed ports and the t3 protocol.

Internally, OSM cloud native exposes this as a "T3Channel" in its project-instance-cluster-c1 service at port 30303, with the "hostname" instance.project.domainname. This channel is meant to be accessed via an ingress route over http or https. This channel is different from the "T3ClustChannel" described above.

The above image, Remote SAF, can be accessed as a draw.io file from the OSM SDK. See the Raw Sources section for more details.

Here, an OSM cloud native instance called "prod" is running in the namespace "com", with a configured domain name of "csp.net". It exposes its endpoints to the outside world (outside Kubernetes) using an Ingress. For the purpose of SAF configuration, in this example, the Ingress contains a rule to recognize http or https traffic addressed to "t3.prod.com.csp.net". This rule routes the http or https traffic to the com-prod-cluster-c1 service's T3Channel (port 30303). Elsewhere in the cluster, an Ingress Controller monitors Ingress rules and implements them. It exposes its endpoint to the outside world via an Ingress Service, which is typically of type LoadBalancer. This results in a load balancer on the cluster boundary. Details of the Ingress Service and the Load Balancer are specific to each Ingress Controller (like Traefik, nginx, etc.) and to each deployment environment (like Oracle OCI, Google GKE, etc.).

The Load Balancer is routable from the outside world in some controlled fashion via its own IP address and hostname. In the above example, the Load Balancer is exposing http ingress rules via port 80 and https ingress rules via port 443.
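The address that an external system would use in this example can be sketched as below. The function name `remote_saf_url` is hypothetical. The "t3." hostname prefix and the ports 80 and 443 are taken from the example above and may differ in other deployments.

```python
from typing import Optional


def remote_saf_url(instance: str, project: str, domain: str,
                   secure: bool = False, port: Optional[int] = None) -> str:
    """Build the tunneled address that the Ingress rule in the example matches.

    Hypothetical helper: the default ports mirror the example Load Balancer
    (80 for http, 443 for https).
    """
    scheme = "https" if secure else "http"
    if port is None:
        port = 443 if secure else 80
    return f"{scheme}://t3.{instance}.{project}.{domain}:{port}"


if __name__ == "__main__":
    print(remote_saf_url("prod", "com", "csp.net"))
    print(remote_saf_url("prod", "com", "csp.net", secure=True))
```

Note that the external address uses the Load Balancer port, not port 30303. The latter is the internal service port that the Ingress rule routes to.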

DNS Considerations

If the external system is addressed via a hostname instead of IP address by OSM cloud native, this hostname must be resolvable by the DNS configured for the Kubernetes cluster. If this is not the case, the OSM cloud native instance specification must have its "hostAliases" list updated to include this hostname. An example of this is in the next section.

The external system must ensure its SAF traffic hits the Load Balancer using http or https. However, for the Ingress Controller to apply the instance's routing rule internally, the SAF traffic has to be addressed to t3.prod.com.csp.net. To satisfy both these requirements, two options exist:

- The external WebLogic system has its /etc/hosts file updated on each machine to include a line that maps the Load Balancer IP to the t3.prod.com.csp.net hostname.

- An external DNS used by the external WebLogic system (for example, a corporate DNS) is updated to return the Load Balancer IP for all hostnames with the pattern "*.com.csp.net" or "*.csp.net".
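Both options can be illustrated with a small sketch. The helper names are hypothetical, and 203.0.113.10 is a placeholder address from the documentation range, not a real Load Balancer IP. The wildcard check uses shell-style matching as a rough approximation of DNS wildcard behavior and is meant only as a planning aid.

```python
from fnmatch import fnmatchcase


def hosts_line(load_balancer_ip: str, hostname: str) -> str:
    """Format an /etc/hosts line mapping the hostname to the Load Balancer IP."""
    return f"{load_balancer_ip}\t{hostname}"


def covered_by_wildcard(hostname: str, pattern: str) -> bool:
    """Check whether a wildcard pattern such as "*.csp.net" covers a hostname.

    Approximation only: shell-style matching, compared case-insensitively.
    """
    return fnmatchcase(hostname.lower(), pattern.lower())


if __name__ == "__main__":
    # Option 1: local hosts file entry (placeholder IP).
    print(hosts_line("203.0.113.10", "t3.prod.com.csp.net"))
    # Option 2: confirm the corporate DNS wildcard would cover the hostname.
    print(covered_by_wildcard("t3.prod.com.csp.net", "*.com.csp.net"))
```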

Regardless of the DNS approach chosen, the external WebLogic system must ensure it has WebLogic patch 30656708 or its equivalent for the WebLogic version or patch-level in use.

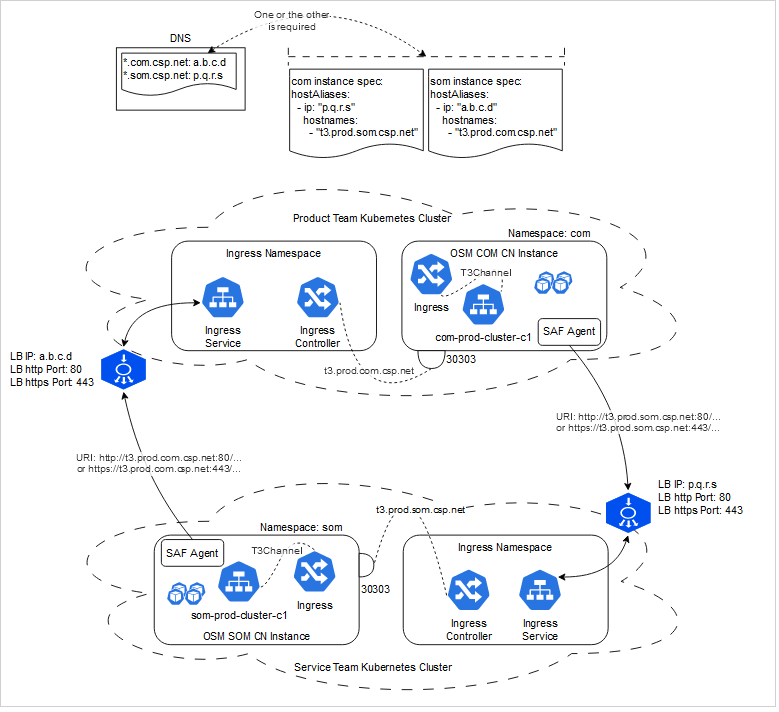

Cloud Native to Cloud Native Remote SAF

A special case of the above is when an OSM cloud native instance running in one Kubernetes cluster needs to have SAF communication with another OSM cloud native instance running in a different Kubernetes cluster. An example might be an OSM COM instance running in one organization's cluster while needing to send service orders to an OSM SOM instance running in another organization's cluster. This would include the need for OSM SOM to send events back to OSM COM as well.

The above image, CN to CN Remote SAF, can be accessed as a draw.io file from the OSM SDK. See the Raw Sources section for more details.

This is an extrapolation or doubling of the Remote SAF scenario. The main difference is that in the absence of a ubiquitous DNS mechanism, the integrator must leverage the OSM cloud native capability to inject Linux /etc/hosts based name resolution via the instance specification's "hostAliases" list.
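A sketch of the doubled mapping follows. Each instance needs an alias that points the peer's t3 hostname at the peer cluster's Load Balancer. The exact schema of the instance specification is not shown in this appendix, so the dictionary shape here (keys `ip` and `hostnames`) is an assumption that mirrors the Kubernetes pod `hostAliases` fields. All project names, domains and IP addresses are hypothetical placeholders.

```python
def host_alias(peer_lb_ip: str, peer_instance: str, peer_project: str, peer_domain: str) -> dict:
    """Build one alias entry mapping the peer's t3 hostname to its Load Balancer IP.

    Assumed shape: mirrors Kubernetes pod hostAliases (ip, hostnames).
    """
    hostname = f"t3.{peer_instance}.{peer_project}.{peer_domain}"
    return {"ip": peer_lb_ip, "hostnames": [hostname]}


if __name__ == "__main__":
    # Entry for the COM instance so that it can reach SOM (placeholder values).
    com_side = [host_alias("198.51.100.20", "prod", "som", "partner.net")]
    # Entry for the SOM instance so that it can send events back to COM.
    som_side = [host_alias("203.0.113.10", "prod", "com", "csp.net")]
    print(com_side)
    print(som_side)
```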