3.1.6.2 Configuration

The PySpark interpreter can be configured through the Spark interpreter, with

the only exception being the Python version used. By default, the Python version is set

to 3 that can be changed either in the interpreter JSON files before the startup or from

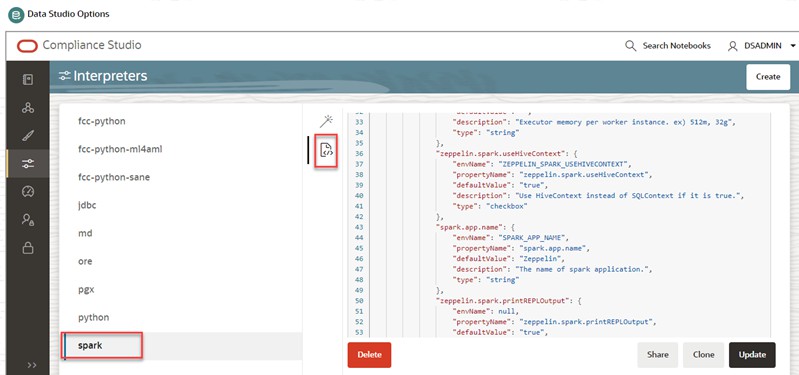

the Interpreters page of the Compliance Studio application UI during runtime by changing

the following properties:

To change the value of the spark.pyspark.python property before installing Compliance Studio, follow these steps:

- Navigate to the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/mmg-home/mmgstudio/server/builtin/interpreters directory.
- Update the value of the spark.pyspark.python property in the spark.json file.
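The manual edit described in these steps can also be scripted. The Python sketch below is illustrative only and rests on assumptions. It assumes that spark.json is valid JSON. It also assumes that the spark.pyspark.python entry is either a plain string or an object with a value or defaultValue field, which is the layout Apache Zeppelin uses for interpreter settings. This section does not document the real layout of the Compliance Studio file, so inspect it before using the script. The function names are hypothetical, and the interpreter path in the demo is a placeholder.

```python
import json
import tempfile
from pathlib import Path
from typing import Any

# Property named in this section; the JSON layout around it is an ASSUMPTION.
PROPERTY = "spark.pyspark.python"


def set_pyspark_python(node: Any, new_value: str) -> int:
    """Hypothetical helper: recursively set every PROPERTY entry in a JSON tree.

    Assumed shapes:
      {"spark.pyspark.python": "python3"}                        (plain value)
      {"spark.pyspark.python": {"defaultValue": "python3", ...}} (Zeppelin-style)
    Returns the number of fields updated.
    """
    count = 0
    if isinstance(node, dict):
        for key, value in node.items():
            if key == PROPERTY:
                if isinstance(value, dict):
                    # Update whichever value field the entry carries (assumed names).
                    for field in ("value", "defaultValue"):
                        if field in value:
                            value[field] = new_value
                            count += 1
                else:
                    # Reassigning an existing key is safe while iterating.
                    node[key] = new_value
                    count += 1
            else:
                count += set_pyspark_python(value, new_value)
    elif isinstance(node, list):
        for item in node:
            count += set_pyspark_python(item, new_value)
    return count


def update_spark_json(path: Path, new_value: str) -> int:
    """Hypothetical helper: rewrite spark.json in place with a new Python executable."""
    data = json.loads(path.read_text(encoding="utf-8"))
    updated = set_pyspark_python(data, new_value)
    if updated == 0:
        raise KeyError(f"{PROPERTY} not found in {path}")
    path.write_text(json.dumps(data, indent=2) + "\n", encoding="utf-8")
    return updated


if __name__ == "__main__":
    # Demo on a throwaway file; the real file lives under the interpreters directory.
    sample = {"properties": {PROPERTY: {"defaultValue": "python3", "type": "string"}}}
    with tempfile.TemporaryDirectory() as tmp:
        demo = Path(tmp) / "spark.json"
        demo.write_text(json.dumps(sample), encoding="utf-8")
        update_spark_json(demo, "/usr/bin/python3.8")  # placeholder path
        print(json.loads(demo.read_text())["properties"][PROPERTY]["defaultValue"])
```

Running the script on its own prints /usr/bin/python3.8. The script rewrites the whole file with json.dumps. Key order is preserved, but the original whitespace is not, so back up spark.json before running the script against the real file.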

To change the value of the spark.pyspark.python property after installing Compliance Studio, follow these steps: