- User Guide

- Workspaces

- Populate a Workspace

Populate a Workspace

- Navigate to the Workspace Summary page. The page displays Workspace records in a table.

- Click Action next to corresponding Workspace and select Populate

to populate the Workspace with data from a dataset in the

Populate Workspace window.

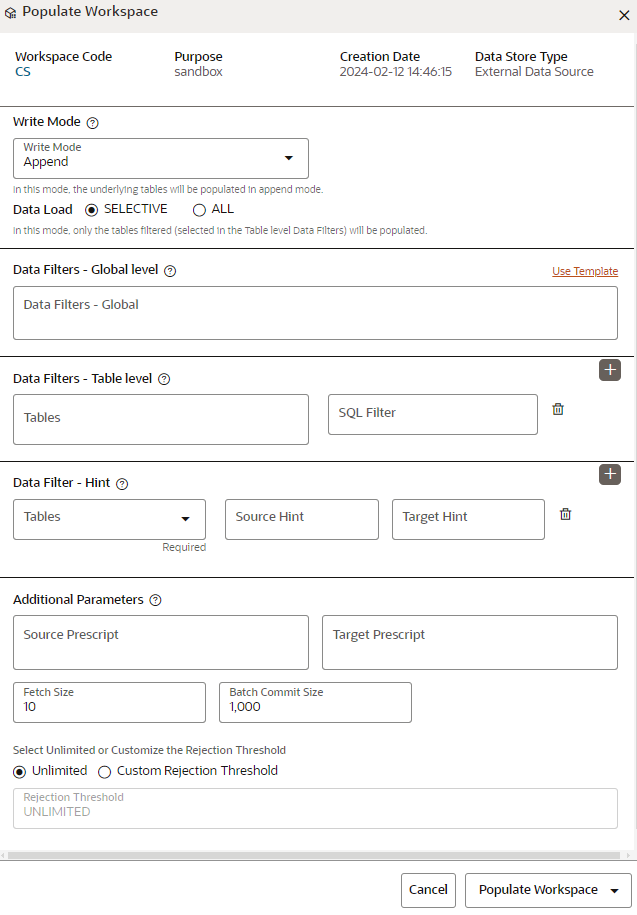

Figure 6-11 Populate Workspace window

The following table provides descriptions for the fields in the Populate Workspace window.

The following table provides descriptions for the fields in the Populate Workspace window.Table 6-2 Details for the Populate Sandbox Window

Field Description Workspace Code The code of the Workspace. Purpose The description for the Workspace. Creation Date The date and time on which the Workspace was created. Data Source Type The source of data. The value can be the OFSAA Data Schema or an external data source. Write Mode You can either overwrite the existing data (truncate and insert) or to append to the existing data. - Overwrite: In this mode, the underlying tables will be populated in truncate and followed by insert operation.

- Append: In this mode, the underlying tables

will be populated in append mode.

- Selective: In this mode, only the tables filtered (selected in the Table level Data Filters) will be populated.

- ALL: In this mode, all the underlying tables mapped to the workspace will be populated along with the filters mentioned below for specific tables.

Data Filter- Global Level Enter the data filter that needs to be applied on all the tables selected for data sourcing. For example: If MISDATE is equal to Today, then it is applied to all tables (wherever it is available) for selected Data Sources during population. If this field is not found (MISDATE) in the tables, it is not updated. OR

Click Use Template to select a library.

On selecting the template, any pre-filled values will be overridden with the template provided values.Data Filter- Table level You can provide the data filters individually on the tables here. Select the table and enter the SQL filter. NOTE: You can provide multiple table names for the same SQL filter.

For example, there are two tables called Student and Employee in the target data source, and below filters are applied

MISDATE as Today for Student and Employee tables

ID as 1 for Student table

Then, Student table will be populated with MISDATE and ID filters and Employee table will be populated with only MISDATE filter.

Global Filters will not be applicable for those tables on which filters have been applied individually.

If the same table name is provided in more than one rows here, then filter condition is generated as a conjunction of all the provided filters.

Data Filter - Hint You can provide database Hints at table-level and SQL Prescripts at schema level for data load performance improvement during Workspace Population. Select the table and enter source and target hint filters. You can add multiple filters by clicking on Add icon.

Note:

You can also pass runtime values in workspace population for the user-defined parameters in Data Filter – Global/Table.Example: Table Filter /Global Filter

[{"id":1,"filter":"CUSTOMER_ID =$CUSTID and INCOME =$income and CUSTOMER_NAME =$customerName","tables":["CUSTOMERS"]}]Additional Parameters:

{"fetch_size" :10, "batch_commit_size" :1000, "rejection_threshold" :"UNLIMITED", "write_mode" :"APPEND", "dynamic_parameters": [\{"key":"$CUSTID","value":"125"} ,{"key":"$income$","value":"30000"},{"key":"$customerName","value":"Cust125"}]}The Runtime parameters can be passed as part of additional parameters json .Key = "dynamic_parameters" .It will replace all the $ values in Table filter /Global Filters.

Source Prescript Enter the source prescript of JDBC properties for data upload. Target Prescript Enter the target prescript of JDBC properties for data upload. Fetch Size Enter the Fetch size of JDBC properties for data upload Batch Commit Size Enter the Batch Commit size of JDBC properties for data upload Write Mode You can either overwrite the existing data (truncate and insert) or to append to the existing data. You can choose to either overwrite the data or append to the existing data. Rejection Threshold Following two options are available: - Custom Rejection Threshold

Enter the maximum of number of inserts that may fail for any of the selected tables. You can provide the maximum number of inserts that can fail while loading data to a given table from all the sources. In case of threshold breach, all the inserts into the particular target schema will be rolled back. However, it will continue with populating the next target schema.

- Unlimited

Here, all the errors will be ignored during the data population.

- Click Populate Workspace to start the process. Here, you can create the batch using Create Batch, or create and execute using Create and Execute Batch option. On selecting either of these options, a workspace population task gets added to the batch.

- When you select Create and Execute Batch option, it allows you to create batch and triggers the batch as well.

- When you select 'Create Batch' option, it allows you to prepare the

batch and then execute or schedule the batch at a later time through

Scheduler Service window.

The Workspace population task execution can be tracked in the 'Monitor Batch' window in the Scheduler Service

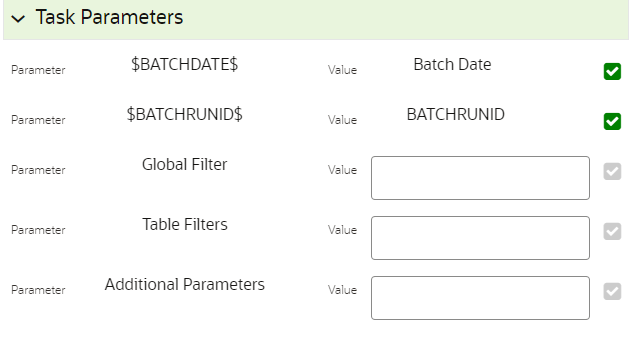

Figure 6-12 Task Parameters

- Enter the following parameters for workspace population. This step is optional.

- Additional Parameters Enter the Additional

Parameters in following format:

{"fetch_size" :10, "batch_commit_size" :1000, "rejection_threshold" :"UNLIMITED", "write_mode" :"OVERWRITE"}

- Global Filter

Provided input will be applied as a data filter on all the tables selected for data sourcing.

- Table Filter

You can provide data filters individually on the tables here. You must provide multiple table names for the same SQL filter. Global Filters will not be applicable for those tables on which filters have been applied individually. In case the same table name is provided in more than one rows here, the filter condition will be generated as a conjunction of all the provided filters.

Enter the Table filters in following format:

[{"id":1,"filter":"<filter condition>","tables":["TABLE1",

"TABLE2"]}, {"id":2,"filter":"<filter condition>","tables":["TABLE2"]}]

Note:

You can run workspace population for a given workspace any number of times. New tables may be added to the definition. Any new table added to the definition, that is not present in the target schema will be physicalized on update of the workspace. Also, user can add new sources if required.Any table that is deselected from the data sourcing definition will NOTbe dropped.

- Additional Parameters Enter the Additional

Parameters in following format: