13 Control and Tactical Center

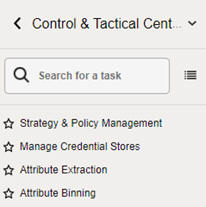

This chapter describes the Control and Tactical Center, where an administrative user can access and manage configurations for different applications.

Under the Control and Tactical Center, a user can access Strategy and Policy Management, which is the central place for managing the configurations of different applications and for setting up and configuring demand forecasting runs. The modules for managing product attributes (Attribute Extraction and Attribute Binning) and the link for managing web service-related credentials can also be found under the Control and Tactical Center.

Depending on their role, users see one or more of the following links. Typically, an administrative user has access to all of them.

-

Strategy and Policy Management

-

Manage Credential Stores

-

Attribute Extraction

-

Attribute Binning

Strategy and Policy Management

In Strategy and Policy Management, a user can edit the configurations of different applications by using the Manage System Configurations screen. Only editable tables can be accessed from this screen. Within each table, one or more columns are editable, and a user can override the values in those columns.

The Manage Forecast Configurations screen is used to set up, manage, and configure the demand forecasting runs for applications such as Lifecycle Pricing Optimization (LPO), Inventory Planning Optimization-Inventory Optimization (IPO-IO), Inventory Planning Optimization-Demand Forecasting (IPO-DF), Inventory Planning Optimization-Lifecycle Allocation and Replenishment (IPO-LAR), Assortment Planning (AP), and Merchandise Financial Planning (MFP).

Forecast Configuration for MFP and AP

To configure the forecast process for MFP and AP, complete the following two steps:

-

Use the Manage System Configurations screen to review and modify the global configurations in RSE_CONFIG. For further details, see "Configuration Updates". The relevant configurations in RSE_CONFIG that must be reviewed and edited as required are listed in the following table.

Table 13-1 Configurations

| APPL_CODE | PARAM_NAME | PARAM_VALUE | DESCR |
|---|---|---|---|
| RSE | EXTENDED_HIERARCHY_SRC | Default value is NON-RMS. | Data source providing extended hierarchy data (RMS or NON-RMS). |
| RSE | LOAD_EXTENDED_PROD_HIER | Default value is N. If the product hierarchy data has 9 levels, set this value to Y. If it has 7 levels, keep this value as N. | Y/N value. This parameter is used by the product hierarchy ETL to determine whether the extended product hierarchy is required. |
| PMO | PMO_PROD_HIER_TYPE | Default value is 3. If the product hierarchy data has 9 levels (that is, it has an extended hierarchy), keep this value as 3. If it has 7 levels, change this value to 1. | The hierarchy ID to use for the product (installation configuration). |
| RSE | PROD_HIER_SLSTXN_HIER_LEVEL_ID | Default value is 9. | Identifies the hierarchy level at which sales transactions are provided (7 = Style, 8 = Style/Color, or 9 = Style/Color/Size). It must match the extended hierarchy leaf level. |
| RSE | FCST_PURGE_RETENTION_DAYS | Default value is 180. | Number of days to wait before permanently deleting run data. |
| RSE | FCST_PURGE_ALL_RUNS | Default value is N. | Y/N flag indicating whether all run data should be deleted. |
| RSE | FCST_TY_PURGE_RETENTION_DAYS | Default value is 180. | Number of days to wait before permanently deleting run type data. |
| RSE | FCST_TY_PURGE_ALL_RUNS | Default value is N. | Y/N flag indicating whether all run type data should be deleted. |

-

Use the Manage Forecast Configurations screen to set up the forecast runs for MFP and AP, as follows.

In Manage Forecast Configurations, start by setting up a run type in the Setup train stop. Click the + icon above the table and fill in the fields in the pop-up. For MFP and AP forecasting, the preferred forecast method is Automatic Exponential Smoothing. However, the Sales & Promo and Life Cycle forecast methods are also supported. You must create a run type for each forecast measure/forecast intersection combination that is required for MFP and/or AP.

After you finish setting up the run types, click Start Data Aggregation in the Setup train stop, select all the run types that were created, and click Submit. When the aggregation is complete, the aggregation status changes to Complete. At this point, ad hoc test runs and batch runs can be created and executed to generate a forecast.

To create an ad hoc run, go to the Test train stop. First, click a run type in the top table, and then click the + icon in the bottom table to create a run. In the Create Run pop-up, you can change the configuration parameters for the estimation and forecast processes in their respective tabs. For example, to test a run that uses the Bayesian method, edit the Estimation Method parameter in the Estimation tab by using the Edit icon above the table. After reviewing and modifying the configuration parameters, click Submit to start the run. When the run is complete, its status changes to Forecast Generation Complete.

Test runs are optional. However, you must review and modify the configurations of the run type, activate the run type, enable auto-approve, and map the run type to the downstream application (in this case, MFP or AP). In the Manage train stop, select a row, click Edit Configurations Parameters, and edit the estimation and forecast parameters as necessary. When you are done, go to the Review tab, click Validate, and then close the tab.

Note:

If the run type is active, you can only view its parameters. To edit the parameters, the run type must be inactive.

To activate the run type and enable auto-approve, select a run type in the table and click the corresponding buttons above the table. Finally, to map the run type to MFP or AP, go to the Map train stop and click the + icon to create a new mapping.
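The hierarchy-related rows of Table 13-1 depend on one another: the number of levels in the product hierarchy data determines both LOAD_EXTENDED_PROD_HIER and PMO_PROD_HIER_TYPE, and PROD_HIER_SLSTXN_HIER_LEVEL_ID must name one of the supported sales transaction levels. The following Python sketch encodes those rules as a pre-check you might run before editing RSE_CONFIG in the Manage System Configurations screen. It is illustrative only: the function name and its return format are hypothetical, values are returned as strings on the assumption that they are entered as PARAM_VALUE text, and the code neither reads nor writes RSE_CONFIG.

```python
from typing import Dict

# Sales transaction levels listed for PROD_HIER_SLSTXN_HIER_LEVEL_ID in Table 13-1.
SLSTXN_LEVELS = {7: "Style", 8: "Style/Color", 9: "Style/Color/Size"}


def recommended_hierarchy_config(hier_levels: int, slstxn_level: int) -> Dict[str, str]:
    """Suggest RSE_CONFIG values for a product hierarchy (hypothetical helper).

    hier_levels: number of levels in the product hierarchy data (7 or 9).
    slstxn_level: level at which sales transactions are provided (7, 8, or 9).
    """
    if hier_levels not in (7, 9):
        raise ValueError("Table 13-1 only covers 7-level or 9-level hierarchies")
    if slstxn_level not in SLSTXN_LEVELS:
        raise ValueError(f"unsupported sales transaction level: {slstxn_level}")
    extended = hier_levels == 9
    return {
        # APPL_CODE RSE: Y only when the extended (9-level) hierarchy is loaded.
        "LOAD_EXTENDED_PROD_HIER": "Y" if extended else "N",
        # APPL_CODE PMO: keep 3 for 9 levels, change to 1 for 7 levels.
        "PMO_PROD_HIER_TYPE": "3" if extended else "1",
        # APPL_CODE RSE: must match the extended hierarchy leaf level.
        "PROD_HIER_SLSTXN_HIER_LEVEL_ID": str(slstxn_level),
    }
```

Note that the two defaults in Table 13-1 (LOAD_EXTENDED_PROD_HIER = N and PMO_PROD_HIER_TYPE = 3) correspond to different hierarchy depths, so whichever depth your data has, exactly one of those two values needs to change.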

Run Type Configurations for MFP and AP to Set Up GA Runs

To set up GA runs, create one run type for each forecast intersection/measure combination using the Automatic Exponential Smoothing method. The following table shows the configurations for each run type.

Table 13-2 Run Type Configurations

| Plan (Application) | Forecast Level (Merchandise/Location/Calendar) | Forecast Measure | Forecast Method | Data Source |
|---|---|---|---|---|
| Location Plan (MFP) | Department/Location/Week | Total Gross Sales Units | Automatic Exponential Smoothing for all run types | Store Sales for all run types |
| | | Total Gross Sales Amount | | |
| | | Total Returns Units | | |
| | | Total Returns Amount | | |
| Location Target (MFP) | Company/Location/Week | Total Gross Sales Units | | |
| | | Total Gross Sales Amount | | |
| | | Total Returns Units | | |
| | | Total Returns Amount | | |
| Merchandise Plan (MFP) | Subclass/Area/Week | Clearance Gross Sales Units | | |
| | | Clearance Gross Sales Amount | | |
| | | Regular and Promotion Gross Sales Units | | |
| | | Regular and Promotion Gross Sales Amount | | |
| | | Total Returns Units | | |
| | | Total Returns Amount | | |
| | | Other Gross Sales Units | | |
| | | Other Gross Sales Amount | | |
| Merchandise Target (MFP) | Department/Area/Week | Clearance Gross Sales Units | | |
| | | Clearance Gross Sales Amount | | |
| | | Regular and Promotion Gross Sales Units | | |
| | | Regular and Promotion Gross Sales Amount | | |
| | | Total Returns Units | | |
| | | Total Returns Amount | | |
| | | Other Gross Sales Units | | |
| | | Other Gross Sales Amount | | |
| Assortment Planning (AP) | Subclass/Location/Week | Clearance Gross Sales Units | | |
| | | Clearance Gross Sales Amount | | |
| | | Regular and Promotion Gross Sales Units | | |
| | | Regular and Promotion Gross Sales Amount | | |
| Item Planning (AP) | SKU/Location/Week | Clearance Gross Sales Units | | |
| | | Clearance Gross Sales Amount | | |
| | | Regular and Promotion Gross Sales Units | | |
| | | Regular and Promotion Gross Sales Amount | | |
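Because each GA run type covers exactly one forecast intersection/measure combination, the run types to create can be listed mechanically from Table 13-2. The following Python sketch does this for the four MFP plans only. The data layout and the RunTypeSpec name are illustrative assumptions, not an AIF interface; the run types themselves are still created in the Setup train stop.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Measures shared by the Location Plan and Location Target rows of Table 13-2.
LOCATION_MEASURES = [
    "Total Gross Sales Units",
    "Total Gross Sales Amount",
    "Total Returns Units",
    "Total Returns Amount",
]

# Measures shared by the Merchandise Plan and Merchandise Target rows.
MERCH_MEASURES = [
    "Clearance Gross Sales Units",
    "Clearance Gross Sales Amount",
    "Regular and Promotion Gross Sales Units",
    "Regular and Promotion Gross Sales Amount",
    "Total Returns Units",
    "Total Returns Amount",
    "Other Gross Sales Units",
    "Other Gross Sales Amount",
]

# Plan -> (forecast level, measures), transcribed from the MFP rows of Table 13-2.
MFP_PLANS: Dict[str, Tuple[str, List[str]]] = {
    "Location Plan": ("Department/Location/Week", LOCATION_MEASURES),
    "Location Target": ("Company/Location/Week", LOCATION_MEASURES),
    "Merchandise Plan": ("Subclass/Area/Week", MERCH_MEASURES),
    "Merchandise Target": ("Department/Area/Week", MERCH_MEASURES),
}


@dataclass(frozen=True)
class RunTypeSpec:
    """Hypothetical description of one run type to create."""
    plan: str
    forecast_level: str
    forecast_measure: str
    forecast_method: str = "Automatic Exponential Smoothing"
    data_source: str = "Store Sales"


def mfp_run_types() -> List[RunTypeSpec]:
    """One run type per forecast intersection/measure combination."""
    return [
        RunTypeSpec(plan, level, measure)
        for plan, (level, measures) in MFP_PLANS.items()
        for measure in measures
    ]
```

The sketch yields 24 MFP run types. Because the four MFP forecast levels are all different, no run type can be shared between plans, even where two plans forecast the same measure.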

Note:

For all batch runs, the Bayesian forecast is generated by default in addition to the pre-season forecast. In the Manage train stop, you only need to set the method for the pre-season forecast (either Automatic Exponential Smoothing or Seasonal Exponential Smoothing).

Batch and Ad Hoc Jobs for MFP and AP Forecasting

The Batch and Ad Hoc jobs listed in the following table are used for loading foundation data (product hierarchy, location hierarchy, calendar, product attributes, and so on).

Table 13-3 Configuration and Main Data Load Jobs

| JobName | Description | RmsBatch |
|---|---|---|
| ORASE_START_BATCH_JOB | ORASE_START_BATCH_JOB | rse_process_state_update.ksh |
| ORASE_START_BATCH_SET_ACTIVE_JOB | ORASE_START_BATCH_SET_ACTIVE_JOB | rse_batch_type_active.ksh |
| ORASE_START_BATCH_REFRESH_RESTR_JOB | ORASE_START_BATCH_REFRESH_RESTR_JOB | rse_batch_freq_restriction.ksh |
| ORASE_START_BATCH_END_JOB | ORASE_START_BATCH_END_JOB | rse_process_state_update.ksh |
| RSE_WEEKLY_INPUT_FILES_START_JOB | RSE_WEEKLY_INPUT_FILES_START_JOB | rse_process_state_update.ksh |
| WEEKLY_INPUT_FILES_WAIT_JOB | WEEKLY_INPUT_FILES_WAIT_JOB | rse_batch_zip_file_wait.ksh |
| WEEKLY_INPUT_FILES_VAL_JOB | WEEKLY_INPUT_FILES_VAL_JOB | rse_batch_zip_file_extract.ksh |
| WEEKLY_INPUT_FILES_COPY_JOB | WEEKLY_INPUT_FILES_COPY_JOB | rse_batch_inbound_file_copy.ksh |
| RSE_WEEKLY_INPUT_FILES_END_JOB | RSE_WEEKLY_INPUT_FILES_END_JOB | rse_process_state_update.ksh |
| RSE_PROD_HIER_ETL_START_JOB | RSE_PROD_HIER_ETL_START_JOB | rse_process_state_update.ksh |
| RSE_PROD_SRC_XREF_LOAD_JOB | RSE_PROD_SRC_XREF_LOAD_JOB | rse_prod_src_xref_load.ksh |
| RSE_PROD_HIER_LOAD_JOB | RSE_PROD_HIER_LOAD_JOB | rse_prod_hier_load.ksh |
| RSE_PROD_TC_LOAD_JOB | RSE_PROD_TC_LOAD_JOB | rse_prod_tc_load.ksh |
| RSE_PROD_DH_LOAD_JOB | RSE_PROD_DH_LOAD_JOB | rse_prod_dh_load.ksh |
| RSE_PROD_GROUP_LOAD_JOB | RSE_PROD_GROUP_LOAD_JOB | rse_load_prod_group.ksh |
| RSE_PROD_HIER_ETL_END_JOB | RSE_PROD_HIER_ETL_END_JOB | rse_process_state_update.ksh |
| RSE_LOC_HIER_ETL_START_JOB | RSE_LOC_HIER_ETL_START_JOB | rse_process_state_update.ksh |
| RSE_LOC_SRC_XREF_LOAD_JOB | RSE_LOC_SRC_XREF_LOAD_JOB | rse_loc_src_xref_load.ksh |
| RSE_LOC_HIER_LOAD_JOB | RSE_LOC_HIER_LOAD_JOB | rse_loc_hier_load.ksh |
| RSE_LOC_HIER_TC_LOAD_JOB | RSE_LOC_HIER_TC_LOAD_JOB | rse_loc_hier_tc_load.ksh |
| RSE_LOC_HIER_DH_LOAD_JOB | RSE_LOC_HIER_DH_LOAD_JOB | rse_loc_hier_dh_load.ksh |
| RSE_LOC_HIER_ETL_END_JOB | RSE_LOC_HIER_ETL_END_JOB | rse_process_state_update.ksh |
| RSE_LOC_ATTR_LOAD_START_JOB | RSE_LOC_ATTR_LOAD_START_JOB | rse_process_state_update.ksh |
| RSE_CDA_ETL_LOAD_LOC_JOB | RSE_CDA_ETL_LOAD_LOC_JOB | rse_cda_etl_load_location.ksh |
| RSE_LOC_ATTR_LOAD_END_JOB | RSE_LOC_ATTR_LOAD_END_JOB | rse_process_state_update.ksh |
| RSE_LIKE_LOC_LOAD_START_JOB | RSE_LIKE_LOC_LOAD_START_JOB | rse_process_state_update.ksh |
| RSE_LIKE_LOC_STG_JOB | RSE_LIKE_LOC_STG_JOB | rse_like_loc_stg.ksh |
| RSE_LIKE_LOC_COPY_JOB | RSE_LIKE_LOC_COPY_JOB | |
| RSE_LIKE_LOC_STG_CNE_JOB | RSE_LIKE_LOC_STG_CNE_JOB | |
| RSE_LIKE_LOC_LOAD_JOB | RSE_LIKE_LOC_LOAD_JOB | rse_like_loc_load.ksh |
| RSE_LIKE_LOC_LOAD_END_JOB | RSE_LIKE_LOC_LOAD_END_JOB | rse_process_state_update.ksh |
| RSE_LIKE_PROD_LOAD_START_JOB | RSE_LIKE_PROD_LOAD_START_JOB | rse_process_state_update.ksh |
| RSE_LIKE_PROD_STG_JOB | RSE_LIKE_PROD_STG_JOB | rse_like_prod_stg.ksh |
| RSE_LIKE_PROD_COPY_JOB | RSE_LIKE_PROD_COPY_JOB | |
| RSE_LIKE_PROD_STG_CNE_JOB | RSE_LIKE_PROD_STG_CNE_JOB | |
| RSE_LIKE_PROD_LOAD_JOB | RSE_LIKE_PROD_LOAD_JOB | rse_like_prod_load.ksh |
| RSE_LIKE_PROD_LOAD_END_JOB | RSE_LIKE_PROD_LOAD_END_JOB | rse_process_state_update.ksh |
| RSE_DATA_STAGING_START_JOB | RSE_DATA_STAGING_START_JOB | rse_process_state_update.ksh |
| RSE_MD_CDA_VALUES_STG_JOB | RSE_MD_CDA_VALUES_STG_JOB | rse_md_cda_values_stg.ksh |
| RSE_MD_CDA_VALUES_COPY_JOB | RSE_MD_CDA_VALUES_COPY_JOB | |
| RSE_MD_CDA_VALUES_STG_CNE_JOB | RSE_MD_CDA_VALUES_STG_CNE_JOB | |
| RSE_PR_LC_CDA_STG_JOB | RSE_PR_LC_CDA_STG_JOB | rse_pr_lc_cda_stg.ksh |
| RSE_PR_LC_CDA_COPY_JOB | RSE_PR_LC_CDA_COPY_JOB | |
| RSE_PR_LC_CDA_STG_CNE_JOB | RSE_PR_LC_CDA_STG_CNE_JOB | |
| RSE_PR_LC_CAL_CDA_JOB | RSE_PR_LC_CAL_CDA_JOB | rse_pr_lc_cal_cda_stg.ksh |
| RSE_PR_LC_CAL_CDA_COPY_JOB | RSE_PR_LC_CAL_CDA_COPY_JOB | |
| RSE_PR_LC_CAL_CDA_STG_CNE_JOB | RSE_PR_LC_CAL_CDA_STG_CNE_JOB | |
| RSE_MD_CDA_STG_JOB | RSE_MD_CDA_STG_JOB | rse_md_cda_stg.ksh |
| RSE_MD_CDA_COPY_JOB | RSE_MD_CDA_COPY_JOB | |
| RSE_MD_CDA_STG_CNE_JOB | RSE_MD_CDA_STG_CNE_JOB | |
| RSE_MD_CDA_LOAD_JOB | RSE_MD_CDA_LOAD_JOB | rse_md_cda_load.ksh |
| RSE_MD_CDA_VALUES_LOAD_JOB | RSE_MD_CDA_VALUES_LOAD_JOB | rse_md_cda_values_load.ksh |
| RSE_DATA_STAGING_END_JOB | RSE_DATA_STAGING_END_JOB | rse_process_state_update.ksh |
| RSE_PROD_ATTR_LOAD_START_JOB | RSE_PROD_ATTR_LOAD_START_JOB | rse_process_state_update.ksh |
| RSE_CDA_ETL_LOAD_PROD_JOB | RSE_CDA_ETL_LOAD_PROD_JOB | rse_cda_etl_load_product.ksh |
| RSE_PROD_ATTR_GRP_VALUE_STG_JOB | RSE_PROD_ATTR_GRP_VALUE_STG_JOB | rse_prod_attr_grp_value_stg.ksh |
| RSE_PROD_ATTR_GRP_VALUE_COPY_JOB | RSE_PROD_ATTR_GRP_VALUE_COPY_JOB | |
| RSE_PROD_ATTR_GRP_VALUE_STG_CNE_JOB | RSE_PROD_ATTR_GRP_VALUE_STG_CNE_JOB | |
| RSE_PROD_ATTR_GRP_VALUE_LOAD_JOB | RSE_PROD_ATTR_GRP_VALUE_LOAD_JOB | rse_prod_attr_grp_value_load.ksh |
| RSE_PROD_ATTR_VALUE_XREF_STG_JOB | RSE_PROD_ATTR_VALUE_XREF_STG_JOB | rse_prod_attr_value_xref_stg.ksh |
| RSE_PROD_ATTR_VALUE_XREF_COPY_JOB | RSE_PROD_ATTR_VALUE_XREF_COPY_JOB | |
| RSE_PROD_ATTR_VALUE_XREF_STG_CNE_JOB | RSE_PROD_ATTR_VALUE_XREF_STG_CNE_JOB | |
| RSE_PROD_ATTR_VALUE_XREF_LOAD_JOB | RSE_PROD_ATTR_VALUE_XREF_LOAD_JOB | rse_prod_attr_value_xref_load.ksh |
| RSE_PROD_ATTR_LOAD_END_JOB | RSE_PROD_ATTR_LOAD_END_JOB | rse_process_state_update.ksh |
| RSE_CM_GRP_HIER_LOAD_START_JOB | RSE_CM_GRP_HIER_LOAD_START_JOB | rse_process_state_update.ksh |
| RSE_CM_GRP_XREF_LOAD_JOB | RSE_CM_GRP_XREF_LOAD_JOB | rse_cm_grp_xref_load.ksh |
| RSE_CM_GRP_HIER_LOAD_JOB | RSE_CM_GRP_HIER_LOAD_JOB | rse_cm_grp_hier_load.ksh |
| RSE_CM_GRP_TC_LOAD_JOB | RSE_CM_GRP_TC_LOAD_JOB | rse_cm_grp_tc_load.ksh |
| RSE_CM_GRP_DH_LOAD_JOB | RSE_CM_GRP_DH_LOAD_JOB | rse_cm_grp_dh_load.ksh |
| RSE_CM_GRP_HIER_LOAD_END_JOB | RSE_CM_GRP_HIER_LOAD_END_JOB | rse_process_state_update.ksh |
| RSE_TRADE_AREA_HIER_LOAD_START_JOB | RSE_TRADE_AREA_HIER_LOAD_START_JOB | rse_process_state_update.ksh |
| RSE_TRADE_AREA_SRC_XREF_LOAD_JOB | RSE_TRADE_AREA_SRC_XREF_LOAD_JOB | rse_trade_area_src_xref_load.ksh |
| RSE_TRADE_AREA_HIER_LOAD_JOB | RSE_TRADE_AREA_HIER_LOAD_JOB | rse_trade_area_hier_load.ksh |
| RSE_TRADE_AREA_TC_LOAD_JOB | RSE_TRADE_AREA_TC_LOAD_JOB | rse_trade_area_tc_load.ksh |
| RSE_TRADE_AREA_DH_LOAD_JOB | RSE_TRADE_AREA_DH_LOAD_JOB | rse_trade_area_dh_load.ksh |
| RSE_TRADE_AREA_HIER_LOAD_END_JOB | RSE_TRADE_AREA_HIER_LOAD_END_JOB | rse_process_state_update.ksh |
| RSE_CUST_CONS_SEG_HIER_ETL_START_JOB | RSE_CUST_CONS_SEG_HIER_ETL_START_JOB | rse_process_state_update.ksh |
| RSE_CUSTSEG_SRC_XREF_LOAD_JOB | RSE_CUSTSEG_SRC_XREF_LOAD_JOB | rse_custseg_src_xref_load.ksh |
| RSE_CUSTSEG_HIER_LOAD_JOB | RSE_CUSTSEG_HIER_LOAD_JOB | rse_custseg_hier_load.ksh |
| RSE_CUSTSEG_HIER_TC_LOAD_JOB | RSE_CUSTSEG_HIER_TC_LOAD_JOB | rse_custseg_hier_tc_load.ksh |
| RSE_CUSTSEG_CUST_XREF_LOAD_JOB | RSE_CUSTSEG_CUST_XREF_LOAD_JOB | rse_custseg_cust_xref_load.ksh |
| RSE_CONSEG_LOAD_JOB | RSE_CONSEG_LOAD_JOB | rse_conseg_load.ksh |
| RSE_CONSEG_ALLOC_LOAD_JOB | RSE_CONSEG_ALLOC_LOAD_JOB | rse_conseg_alloc_load.ksh |
| RSE_CUSTSEG_ALLOC_LOAD_JOB | RSE_CUSTSEG_ALLOC_LOAD_JOB | rse_custseg_alloc_load.ksh |
| RSE_CUST_CONS_SEG_HIER_ETL_END_JOB | RSE_CUST_CONS_SEG_HIER_ETL_END_JOB | rse_process_state_update.ksh |
| RSE_CAL_HIER_ETL_START_JOB | RSE_CAL_HIER_ETL_START_JOB | rse_process_state_update.ksh |
| RSE_REGULAR_MAIN_LOAD_JOB | RSE_REGULAR_MAIN_LOAD_JOB | rse_regular_main_load.ksh |
| RSE_FISCAL_MAIN_LOAD_JOB | RSE_FISCAL_MAIN_LOAD_JOB | rse_fiscal_main_load.ksh |
| RSE_CAL_HIER_ETL_END_JOB | RSE_CAL_HIER_ETL_END_JOB | rse_process_state_update.ksh |
| RSE_DIMENSION_LOAD_END_START_JOB | RSE_DIMENSION_LOAD_END_START_JOB | rse_process_state_update.ksh |
| RSE_DIMENSION_LOAD_END_END_JOB | RSE_DIMENSION_LOAD_END_END_JOB | rse_process_state_update.ksh |
| RSE_DIMENSION_LOAD_START_START_JOB | RSE_DIMENSION_LOAD_START_START_JOB | rse_process_state_update.ksh |
| RSE_DIMENSION_LOAD_START_END_JOB | RSE_DIMENSION_LOAD_START_END_JOB | rse_process_state_update.ksh |
| RSE_SLS_START_START_JOB | RSE_SLS_START_START_JOB | rse_process_state_update.ksh |
| RSE_SLS_START_END_JOB | RSE_SLS_START_END_JOB | rse_process_state_update.ksh |
| RSE_SLS_END_START_JOB | RSE_SLS_END_START_JOB | rse_process_state_update.ksh |
| RSE_SLS_END_END_JOB | RSE_SLS_END_END_JOB | rse_process_state_update.ksh |
| ORASE_END_START_JOB | ORASE_END_START_JOB | rse_process_state_update.ksh |
| ORASE_END_RUN_DATE_UPDT_JOB | ORASE_END_RUN_DATE_UPDT_JOB | rse_batch_run_date_update.ksh |
| ORASE_END_END_JOB | ORASE_END_END_JOB | rse_process_state_update.ksh |
| RSE_MASTER_ADHOC_JOB | Run RSE master script | rse_master.ksh |
| PMO_MASTER_ADHOC_JOB | Run PMO master script | pmo_master.ksh |
| PMO_CUMUL_SLS_SETUP_ADHOC_JOB | Ad hoc setup job for cumulative sales calculation | pmo_cum_sls_load_setup.ksh |
| PMO_CUMUL_SLS_PROCESS_ADHOC_JOB | Ad hoc process job for cumulative sales calculation | pmo_cum_sls_load_process.ksh |
| PMO_ACTIVITY_STG_ADHOC_JOB | Ad hoc PMO Activity load setup job | pmo_activity_load_setup.ksh |
| PMO_ACTIVITY_LOAD_ADHOC_JOB | Ad hoc PMO Activity load process job | pmo_activity_load_process.ksh |
| PMO_ACTIVITY_WH_LOAD_SETUP_ADHOC_JOB | Ad hoc PMO Activity setup job to aggregate sales data for warehouse transfers | pmo_activity_wh_load_setup.ksh |
| PMO_ACTIVITY_WH_LOAD_PROCESS_ADHOC_JOB | Ad hoc PMO Activity process job to aggregate sales data for warehouse transfers | pmo_activity_wh_load_process.ksh |
| PMO_ACTIVITY_SHIPMENT_LOAD_SETUP_ADHOC_JOB | Ad hoc PMO Activity setup job to aggregate sales data for warehouse shipments | pmo_activity_shipment_load_setup.ksh |
| PMO_ACTIVITY_SHIPMENT_LOAD_PROCESS_ADHOC_JOB | Ad hoc PMO Activity process job to aggregate sales data for warehouse shipments | pmo_activity_shipment_load_process.ksh |
| PMO_RETURN_DATAPREP_SETUP_ADHOC_JOB | Ad hoc PMO Returns data load preparation setup job | pmo_return_dataprep_setup.ksh |
| PMO_RETURN_DATAPREP_PROCESS_ADHOC_JOB | Ad hoc PMO Returns data load preparation processing job | pmo_return_dataprep_process.ksh |
| PMO_RETURN_CALC_PROCESS_ADHOC_JOB | Ad hoc PMO Returns calculations processing job | pmo_return_calculation.ksh |
| PMO_HOLIDAY_LOAD_ADHOC_JOB | Ad hoc PMO Holiday load job | pmo_holiday_load.ksh |
| PMO_ACTIVITY_LOAD_START_JOB | PMO Activity load start job | rse_process_state_update.ksh |
| PMO_ACTIVITY_STG_JOB | PMO Activity data staging | pmo_activity_load_setup.ksh |
| PMO_ACTIVITY_LOAD_JOB | PMO Activity load job | pmo_activity_load_process.ksh |
| PMO_ACTIVITY_LOAD_END_JOB | PMO Activity load end job | rse_process_state_update.ksh |
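Most groups in Table 13-3 follow the same bracketing convention: a *_START_JOB opens the group, a *_END_JOB with the same prefix closes it, and both run rse_process_state_update.ksh. The Python sketch below groups an ordered list of job names by that convention, which can help when reviewing a customized batch schedule. The convention is inferred from the job names in the table rather than documented as a rule, and it has exceptions: ORASE_START_BATCH_JOB opens its group without a _START_JOB suffix, and the ad hoc jobs are not bracketed at all. The function name group_jobs is hypothetical.

```python
from typing import List, Optional, Tuple

START_SUFFIX = "_START_JOB"
END_SUFFIX = "_END_JOB"


def group_jobs(job_names: List[str]) -> List[Tuple[str, List[str]]]:
    """Split an ordered job list into (prefix, inner_jobs) groups.

    Each group must open with <prefix>_START_JOB and close with
    <prefix>_END_JOB; the jobs in between are returned as inner jobs.
    Raises ValueError when the list does not follow that convention.
    """
    groups: List[Tuple[str, List[str]]] = []
    prefix: Optional[str] = None
    inner: List[str] = []
    for name in job_names:
        if prefix is None:
            # Outside a group, only a *_START_JOB is allowed.
            if not name.endswith(START_SUFFIX):
                raise ValueError(f"expected a *{START_SUFFIX} job, got {name}")
            prefix = name[: -len(START_SUFFIX)]
            inner = []
        elif name == prefix + END_SUFFIX:
            # Matching END job closes the current group.
            groups.append((prefix, inner))
            prefix = None
        else:
            inner.append(name)
    if prefix is not None:
        raise ValueError(f"group {prefix} is never closed by {prefix}{END_SUFFIX}")
    return groups


# Two groups taken from Table 13-3: product hierarchy ETL and calendar hierarchy ETL.
jobs = [
    "RSE_PROD_HIER_ETL_START_JOB",
    "RSE_PROD_SRC_XREF_LOAD_JOB",
    "RSE_PROD_HIER_LOAD_JOB",
    "RSE_PROD_TC_LOAD_JOB",
    "RSE_PROD_DH_LOAD_JOB",
    "RSE_PROD_GROUP_LOAD_JOB",
    "RSE_PROD_HIER_ETL_END_JOB",
    "RSE_CAL_HIER_ETL_START_JOB",
    "RSE_REGULAR_MAIN_LOAD_JOB",
    "RSE_FISCAL_MAIN_LOAD_JOB",
    "RSE_CAL_HIER_ETL_END_JOB",
]
for group_prefix, inner_jobs in group_jobs(jobs):
    print(group_prefix, len(inner_jobs))
# RSE_PROD_HIER_ETL 5
# RSE_CAL_HIER_ETL 2
```

Empty groups such as RSE_SLS_START_START_JOB followed by RSE_SLS_START_END_JOB are accepted and reported with no inner jobs.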

The Batch and Ad hoc jobs listed in the following table are used to prepare weekly data and to run weekly batches for MFP and AP.

Table 13-4 Batch and Ad Hoc Jobs for MFP and AP Forecasting

| JobName | Description | RmsBatch |
|---|---|---|
| RSE_FCST_SALES_PLAN_START_JOB | Start job for sales plan data load for Bayesian method | rse_process_state_update.ksh |
| RSE_FCST_SALES_PLAN_LOAD_JOB | Load job for sales plan data for Bayesian method | rse_fcst_sales_plan_load.ksh |
| RSE_FCST_SALES_PLAN_END_JOB | End job for sales plan data load for Bayesian method | rse_process_state_update.ksh |
| RSE_ASSORT_PLAN_LOAD_START_JOB | Loading assortment plan through interface for AP | rse_process_state_update.ksh |
| RSE_ASSORT_PLAN_LOAD_STG_JOB | Loading assortment plan through interface for AP | rse_assort_plan_per_stg.ksh |
| RSE_ASSORT_PLAN_LOAD_COPY_JOB | Loading assortment plan through interface for AP | |
| RSE_ASSORT_PLAN_LOAD_STG_CNE_JOB | Loading assortment plan through interface for AP | |
| RSE_RDX_ASSORT_PLAN_IMPORT_JOB | Importing assortment plan from RDX to AIF for AP | rse_rdx_assort_plan_import.ksh |
| RSE_ASSORT_PLAN_LOAD_JOB | Loading assortment plan through interface for AP | rse_assort_plan_per_load.ksh |
| RSE_ASSORT_PLAN_LOAD_END_JOB | Loading assortment plan through interface for AP | rse_process_state_update.ksh |
| RSE_PLANNING_PERIOD_LOAD_START_JOB | Loading assortment period through interface for AP | rse_process_state_update.ksh |
| RSE_PLANNING_PERIOD_STG_JOB | Loading assortment period through interface for AP | rse_planning_period_stg.ksh |
| RSE_PLANNING_PERIOD_COPY_JOB | Loading assortment period through interface for AP | |
| RSE_PLANNING_PERIOD_STG_CNE_JOB | Loading assortment period through interface for AP | |
| RSE_RDX_ASSORT_PERIOD_IMPORT_JOB | Importing assortment period from RDX to AIF for AP | rse_rdx_assort_period_import.ksh |
| RSE_PLANNING_PERIOD_LOAD_JOB | Loading assortment period through interface for AP | rse_planning_period_load.ksh |
| RSE_PLANNING_PERIOD_LOAD_END_JOB | Loading assortment period through interface for AP | rse_process_state_update.ksh |
| RSE_MFP_FCST_BATCH_RUN_START_JOB | Start job for executing MFP and AP forecast batch runs | rse_process_state_update.ksh |
| RSE_CREATE_MFP_BATCH_RUN_PROC_JOB | Create MFP and AP batch runs | rse_create_mfp_batch_run_proc.ksh |
| RSE_MFP_FCST_BATCH_PROCESS_JOB | Execute MFP and AP forecast batch | rse_fcst_mfp_batch_process.ksh |
| RSE_MFP_FCST_BATCH_RUN_END_JOB | End job for executing MFP and AP forecast batch runs | rse_process_state_update.ksh |
| RSE_CREATE_MFP_BATCH_RUN_PROC_ADHOC_JOB | Ad hoc job to create MFP and AP batch runs | rse_create_mfp_batch_run_proc.ksh |
| RSE_MFP_FCST_BATCH_PROCESS_ADHOC_JOB | Ad hoc job to execute MFP and AP forecast batch | rse_fcst_mfp_batch_process.ksh |

The forecast values generated by runs that are associated with active run types are exported to the RDX schema. The jobs for exporting the outputs to the RDX schema are listed in the following table.

Table 13-5 Export Jobs for MFP and AP from AIF to RDX

| JobName | Description | RmsBatch |
|---|---|---|
| RSE_FCST_EXPORT_START_JOB | Start job for MFP and AP forecast export | rse_process_state_update.ksh |
| RSE_MFP_FCST_EXPORT_JOB | Export MFP forecast | rse_mfp_export.ksh |
| RSE_AP_FCST_EXPORT_JOB | Export AP forecast | rse_ap_export.ksh |
| RSE_FCST_EXPORT_END_JOB | End job for MFP and AP forecast export | rse_process_state_update.ksh |
| RSE_MFP_FCST_EXPORT_ADHOC_JOB | Ad hoc job to export MFP forecast | rse_mfp_export.ksh |
| RSE_AP_FCST_EXPORT_ADHOC_JOB | Ad hoc job to export AP forecast | rse_ap_export.ksh |
| RSE_RDX_ASSORT_ELASTICITY_EXPORT_JOB | Export assortment elasticity for AP | rse_rdx_assort_elasticity_export.ksh |
| RSE_RDX_ASSORT_ELASTICITY_EXPORT_ADHOC_JOB | Ad hoc job to export assortment elasticity for AP | rse_rdx_assort_elasticity_export.ksh |
| RSE_RDX_LOC_CLUSTER_EXPORT_JOB | Export store clusters for AP | rse_rdx_loc_cluster_export.ksh |
| RSE_RDX_LOC_CLUSTER_EXPORT_ADHOC_JOB | Ad hoc job to export store clusters for AP | rse_rdx_loc_cluster_export.ksh |
| RSE_RDX_SIZE_PROFILE_EXPORT_JOB | Export size profiles for AP | rse_rdx_size_profile_export.ksh |
| RSE_RDX_SIZE_PROFILE_EXPORT_ADHOC_JOB | Ad hoc job to export size profiles for AP | rse_rdx_size_profile_export.ksh |

Forecast Configuration for IPO (DF, IO, and LAR) and LPO

To configure the forecast process for IPO and LPO, complete the following two steps:

-

Use the Manage System Configurations screen to review and modify the global configurations in RSE_CONFIG. For further details, see "Configuration Updates". The relevant configurations in RSE_CONFIG that must be reviewed and edited as required are listed in the following table.

Table 13-6 RSE Configurations

| APPL_CODE | PARAM_NAME | PARAM_VALUE | DESCR |
|---|---|---|---|
| RSE | EXTENDED_HIERARCHY_SRC | Default value is NON-RMS. | Data source providing extended hierarchy data (RMS or NON-RMS). |
| RSE | LOAD_EXTENDED_PROD_HIER | Default value is N. If the product hierarchy data has 9 levels, set this value to Y. If it has 7 levels, keep this value as N. | Y/N value. This parameter is used by the product hierarchy ETL to determine whether the extended product hierarchy is needed. |
| PMO | PMO_PROD_HIER_TYPE | Default value is 3. If the product hierarchy data has 9 levels (that is, it has an extended hierarchy), keep this value as 3. If it has 7 levels, change this value to 1. | The hierarchy ID to use for the product (installation configuration). |
| RSE | PROD_HIER_SLSTXN_HIER_LEVEL_ID | Default value is 9. | Identifies the hierarchy level at which sales transactions are provided (7 = Style, 8 = Style/Color, or 9 = Style/Color/Size). It must match the extended hierarchy leaf level. |
| PMO | PMO_AGGR_INVENTORY_DATA_FLG | Default value is Y. | Specifies whether inventory data is present and whether it should be used when aggregating activities data. Set this value to N if inventory data is not loaded. (Inventory data is not required for MFP and AP, but it may be required for other applications, such as LPO and IPO.) |
| RSE | SLS_TXN_EXCLUDE_LIABILITY_SLS | Default value is N. | Y/N flag indicating whether liability sales columns should be excluded when retrieving sales transaction data. |
| RSE | RSE_LOC_HIER_EXCL_FRANCHISE | Default value is N. | Y/N flag indicating whether franchise locations should be excluded from the location hierarchy. |
| RSE | PROMOTIONAL_SALES_AVAIL | Default value is Y. | Y/N flag indicating whether promotion data is available for Sales & Promo run types. Set this value to N if promotion data is not yet loaded into AIF. |
| RSE | DFLT_LIFE_CYCLE | Default value is SLC. | Identifies the primary forecast method for the environment. Keep this value as SLC if the primary forecast method is Life Cycle; change it to LLC if the primary forecast method is Sales & Promo. |
| PMO | PMO_OUTLIER_CALCULATION_FLG | Default value is Y. | Y/N flag indicating whether the outlier flag calculation should be performed internally in AIF. |
| RSE | FCST_PURGE_RETENTION_DAYS | Default value is 180. | Number of days to wait before permanently deleting run data. |
| RSE | FCST_PURGE_ALL_RUNS | Default value is N. | Y/N flag indicating whether all run data should be deleted. |
| RSE | FCST_TY_PURGE_RETENTION_DAYS | Default value is 180. | Number of days to wait before permanently deleting run type data. |
| RSE | FCST_TY_PURGE_ALL_RUNS | Default value is N. | Y/N flag indicating whether all run type data should be deleted. |
| RSE | RSE_FIRST_INV_RECEIPT_HIST_MAX_WEEKS | Default value is 106. | Records with an inventory first receipt date older than this many weeks (counted back from the latest week with inventory) are excluded from the model start date calculation. |
| RSE | INV_SRC_RI | Default value is Y. | Flag indicating whether inventory is obtained from the AIF data warehouse (Y) or loaded directly to AIF applications (N). |
| RSE | INV_RC_DT_SRC_RI | Default value is Y. | Flag indicating whether receipt dates are obtained from the AIF data warehouse (Y) or loaded directly to AIF applications (N). |
| RSE | RSE_FCST_NEW_ITEM_STRATEGY | Default value is RDX. | Allowed values are RDX (populated from RDX), RI (populated through the RI interface), and RSP (populated programmatically using RSE_FCST_NEW_ITEM_AGE_THRESH). |
| RSE | RSE_FCST_NEW_ITEM_AGE_THRESH | Default value is 28. | When RSE_FCST_NEW_ITEM_STRATEGY is RSP, this threshold is used to identify new items. If the number of days since the item's prod_created_dt is less than or equal to this value, the item is considered new. |
| RSE | RSE_BD_ENABLE_POISSON | Default value is Y. | Y/N flag indicating whether to also use the Poisson method for calculating base demand for Sales & Promo run types. |
| RSE | SWITCH_TO_TXN_LOAD_DATE | Default value is SYSDATE. | For customers who provide FACT data at an aggregate level, this date indicates the stop point for loading aggregate data. If SYSDATE is greater than or equal to this date, the data load from RI FACT tables to AIF does not run. Date format: YYYYMMDD. |

-

Use the Manage Forecast Configurations screen to set up the forecast runs, as follows.

In Manage Forecast Configurations, start by setting up a run type in the Setup train stop. Click the + icon above the table and fill in the fields in the pop-up. The customer should decide the primary forecast method for the environment and set the DFLT_LIFE_CYCLE parameter in the RSE_CONFIG table (APPL_CODE = RSE) accordingly, using the Manage System Configurations screen. All items that are not to be forecast by the primary forecast method must be stored in the RSE_FCST_LIFE_CYCLE_CLSF table, which is populated through an interface. To forecast the items in RSE_FCST_LIFE_CYCLE_CLSF separately from the remaining items, create both Sales & Promo and Life Cycle run types. Depending on the run type's forecast method and the DFLT_LIFE_CYCLE parameter, each run type uses either the items in RSE_FCST_LIFE_CYCLE_CLSF or the remaining items. You must create a run type for each required forecast intersection combination.

After you finish setting up the run types, click Start Data Aggregation in the Setup train stop, select all the run types that were created, and click Submit. When the aggregation is complete, the aggregation status changes to Complete. At this point, ad hoc test runs and batch runs can be created and executed to generate a forecast.

To create an ad hoc run, go to the Test train stop. First, click a run type in the top table, and then click the + icon in the bottom table to create a run. In the Create Run pop-up, you can change the configuration parameters for the estimation process, base demand, and forecast process in their respective tabs. After reviewing and modifying the configuration parameters, click Submit to start the run. On submission, a validation process checks the configuration parameter values; if any errors are reported, correct the corresponding parameters and submit again. When the run is complete, its status changes to Forecast Generation Complete.

When you are done testing, you must review and modify the configurations of the run type, activate the run type, enable auto-approve, and map the run type to the downstream application (for example, IPO-DF). In the Manage train stop, select a row, click Edit Configurations Parameters, and edit the estimation and forecast parameters as necessary. When you are done, go to the Review tab, click Validate, and then close the tab.

Note:

If the run type is active, you can only view its parameters. To edit the parameters, the run type must be inactive.

To activate the run type and enable auto-approve, select a run type in the table and click the corresponding buttons above the table. Finally, to map the run type to IPO-DF, go to the Map train stop and click the + icon to create a new mapping.
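Two rows of Table 13-6 define comparisons whose boundary cases are easy to misread: with RSE_FCST_NEW_ITEM_STRATEGY set to RSP, an item is new when the days elapsed since its prod_created_dt are less than or equal to RSE_FCST_NEW_ITEM_AGE_THRESH, and once SYSDATE is on or after SWITCH_TO_TXN_LOAD_DATE, the aggregate load from RI FACT tables no longer runs. The Python sketch below restates both comparisons. The function names are hypothetical, measuring item age as a plain calendar-day difference is an assumption, and the sketch does not read RSE_CONFIG.

```python
from datetime import date, datetime


def is_new_item(prod_created_dt: date, as_of: date, age_thresh_days: int = 28) -> bool:
    """RSP strategy: an item is new if its age in days is <= the threshold.

    age_thresh_days stands for RSE_FCST_NEW_ITEM_AGE_THRESH (default 28).
    Age is assumed to be the calendar-day difference between the dates.
    """
    age_days = (as_of - prod_created_dt).days
    return age_days <= age_thresh_days


def aggregate_load_runs(sysdate: date, switch_to_txn_load_date: str) -> bool:
    """Return False once SYSDATE has reached SWITCH_TO_TXN_LOAD_DATE.

    switch_to_txn_load_date uses the YYYYMMDD format given in Table 13-6.
    """
    switch_date = datetime.strptime(switch_to_txn_load_date, "%Y%m%d").date()
    # If SYSDATE >= the switch date, the load from RI FACT tables does not run.
    return sysdate < switch_date
```

For example, with the default threshold of 28, an item created on 2024-01-01 is still new on 2024-01-29 (28 days later) but not on 2024-01-30.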

Note:

For IPO-DF, you can map one or more run types to the same "external key" run type. Run types mapped to the same external key must have the same forecast intersection, forecast measure, price zone and customer segment flag, and data source, but a different forecast method.
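The constraint in the note above can be checked before creating a mapping. The Python sketch below assumes a hypothetical RunType record whose fields mirror the attributes named in the note; it treats "price zone and customer segment flag" as two separate flags and reads "different forecast method" as every mapped run type using a distinct method. Both readings are assumptions, and the code is not an AIF API.

```python
from dataclasses import dataclass
from typing import List

# Attributes that must match across run types sharing one IPO-DF external key.
SHARED_FIELDS = (
    "forecast_intersection",
    "forecast_measure",
    "price_zone_flag",
    "customer_segment_flag",
    "data_source",
)


@dataclass(frozen=True)
class RunType:
    """Hypothetical run type record; field names are illustrative."""
    name: str
    forecast_intersection: str
    forecast_measure: str
    price_zone_flag: bool
    customer_segment_flag: bool
    data_source: str
    forecast_method: str


def external_key_mapping_errors(run_types: List[RunType]) -> List[str]:
    """Return rule violations for run types mapped to one external key."""
    errors: List[str] = []
    if not run_types:
        return errors
    reference = run_types[0]
    # Every shared attribute must equal the first run type's value.
    for rt in run_types[1:]:
        for attr in SHARED_FIELDS:
            if getattr(rt, attr) != getattr(reference, attr):
                errors.append(f"{rt.name}: {attr} differs from {reference.name}")
    # Forecast methods must all be distinct.
    methods = [rt.forecast_method for rt in run_types]
    if len(set(methods)) != len(methods):
        errors.append("forecast methods must differ across mapped run types")
    return errors
```

A Sales & Promo run type and a Life Cycle run type that agree on every shared attribute produce no errors; two Sales & Promo run types are always rejected.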

By default, the approved forecast source for a run type is AIF. However, when a run type is mapped to IPO-DF, regardless of which other applications it is mapped to, the approved forecast source changes to RDX. The source changes back to AIF when the run type's mapping to IPO-DF is deleted. The approved forecast source is therefore determined per run type and follows its current mappings. In addition, when a run type is mapped to RI/RMS, the approved forecast from that run type (taken directly from AIF, or via IPO-DF if a mapping to IPO-DF also exists) is pushed to RI for BI reporting and/or to RMS for merchandising. Finally, when a Life Cycle method run type is mapped to LPO-Promotion/Markdown, that run type treats the value of the DFLT_LIFE_CYCLE parameter as SLC. This allows an environment to forecast all items using both the Sales & Promo and Life Cycle methods, provided that the Life Cycle method run type is mapped to LPO-Promotion/Markdown, the RSE_FCST_LIFE_CYCLE_CLSF table is empty, and the DFLT_LIFE_CYCLE parameter is set to LLC.
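The mapping rules in the preceding paragraph can be summarized as a few pure functions. This is an illustrative sketch, not AIF code: the `RunType` class and the application labels ("IPO-DF", "RI/RMS", "LPO-Promotion/Markdown") are hypothetical strings chosen to mirror the text.

```python
from dataclasses import dataclass, field


@dataclass
class RunType:
    # Hypothetical model: a run type plus the set of applications it is mapped to.
    name: str
    forecast_method: str  # "Sales & Promo" or "Life Cycle"
    mapped_apps: set[str] = field(default_factory=set)


def approved_forecast_source(rt: RunType) -> str:
    # A mapping to IPO-DF switches the source to RDX, whatever else the run type is mapped to.
    return "RDX" if "IPO-DF" in rt.mapped_apps else "AIF"


def pushes_to_ri_rms(rt: RunType) -> bool:
    # The approved forecast (from whichever source applies) is pushed only when mapped to RI/RMS.
    return "RI/RMS" in rt.mapped_apps


def effective_default_life_cycle(rt: RunType, dflt_life_cycle: str) -> str:
    # A Life Cycle run type mapped to LPO-Promotion/Markdown treats DFLT_LIFE_CYCLE as SLC.
    if rt.forecast_method == "Life Cycle" and "LPO-Promotion/Markdown" in rt.mapped_apps:
        return "SLC"
    return dflt_life_cycle
```

Because the source is derived from the current mappings rather than stored separately, deleting the IPO-DF mapping automatically reverts the result to AIF, which matches the behavior described above.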

Batch and Ad-Hoc Jobs for IPO-DF Forecasting

The configuration and main data load jobs listed in Table 13-3 are used for loading foundation data (product hierarchy, location hierarchy, calendar, product attributes, and so on).

The Batch and Ad-Hoc jobs listed in the following table are used for preparing weekly data and running weekly batches for IPO-DF.

Table 13-7 Batch and Ad Hoc Jobs for IPO-DF Forecasting

| JobName | Description | RmsBatch |
|---|---|---|
| RSE_PROMO_HIER_ETL_START_JOB | Start job for loading promotion hierarchy data | rse_process_state_update.ksh |
| RSE_PROMO_SRC_XREF_LOAD_JOB | Job for loading promotion hierarchy data | rse_promo_src_xref_load.ksh |
| RSE_PROMO_HIER_LOAD_JOB | Job for loading promotion hierarchy data | rse_promo_hier_load.ksh |
| RSE_PROMO_HIER_DH_LOAD_JOB | Job for loading promotion hierarchy data | rse_promo_hier_dh_load.ksh |
| RSE_PROMO_HIER_ETL_END_JOB | End job for loading promotion hierarchy data | rse_process_state_update.ksh |
| PMO_ACTIVITY_LOAD_PL_LLC_START_JOB | Start job for loading promotion lift data | rse_process_state_update.ksh |
| PMO_ACTIVITY_LOAD_PL_LLC_SETUP_JOB | Setup job for loading promotion lift data | pmo_activity_load_llc_pl_setup.ksh |
| PMO_ACTIVITY_LOAD_PL_LLC_PROCESS_JOB | Process job for loading promotion lift data | pmo_activity_load_llc_pl_process.ksh |
| PMO_ACTIVITY_LOAD_PL_LLC_END_JOB | End job for loading promotion lift data | rse_process_state_update.ksh |
| PMO_ACTIVITY_LOAD_PL_LLC_SETUP_ADHOC_JOB | Ad-hoc setup job for loading promotion lift data | pmo_activity_load_llc_pl_setup.ksh |
| PMO_ACTIVITY_LOAD_PL_LLC_PROCESS_ADHOC_JOB | Ad-hoc process job for loading promotion lift data | pmo_activity_load_llc_pl_process.ksh |
| PMO_ACTIVITY_LOAD_OFFERS_INITIAL_ADHOC_JOB | Initial one-time job for loading promotion offers data | pmo_activity_load_offers_initial.ksh |
| PMO_ACTIVITY_LOAD_OFFERS_START_JOB | Start job for loading promotion offers data | rse_process_state_update.ksh |
| PMO_ACTIVITY_LOAD_OFFERS_LOAD_JOB | Job for loading promotion offers data | pmo_activity_load_offers_weekly.ksh |
| PMO_ACTIVITY_LOAD_OFFERS_END_JOB | End job for loading promotion offers data | rse_process_state_update.ksh |
| PMO_ACTIVITY_LOAD_OFFERS_LOAD_ADHOC_JOB | Ad-hoc job for loading promotion offers data | pmo_activity_load_offers_weekly.ksh |
| RSE_FLEX_GROUP_LOAD_START_JOB | Loading flex group data through interface | rse_process_state_update.ksh |
| RSE_FLEX_GROUP_DTL_STG_JOB | Loading flex group data through interface (populates rse_flex_group_dtl_stg) | rse_flex_group_dtl_stg.ksh |
| RSE_FLEX_GROUP_DTL_COPY_JOB | Loading flex group data through interface | |
| RSE_FLEX_GROUP_DTL_STG_CNE_JOB | Loading flex group data through interface | |
| RSE_FLEX_GROUP_DTL_LOAD_JOB | Loading flex group data through interface (populates rse_flex_group and rse_flex_group_dtl based on rse_flex_group_set and rse_flex_group_dtl_stg) | rse_flex_group_dtl_load.ksh |
| RSE_FLEX_GROUP_LOAD_END_JOB | Loading flex group data through interface | rse_process_state_update.ksh |
| RSE_FCST_SPREAD_PROFILE_LOAD_START_JOB | Loading spread profile data through interface | rse_process_state_update.ksh |
| RSE_FCST_SPREAD_PROFILE_STG_JOB | Loading spread profile data through interface (populates rse_fcst_spread_profile_stg) | rse_fcst_spread_profile_stg.ksh |
| RSE_FCST_SPREAD_PROFILE_COPY_JOB | Loading spread profile data through interface | |
| RSE_FCST_SPREAD_PROFILE_STG_CNE_JOB | Loading spread profile data through interface | |
| RSE_FCST_SPREAD_PROFILE_LOAD_JOB | Loading spread profile data through interface (populates rse_fcst_spread_profile based on rse_fcst_spread_profile_stg) | rse_fcst_spread_profile_load.ksh |
| RSE_FCST_SPREAD_PROFILE_LOAD_END_JOB | Loading spread profile data through interface | rse_process_state_update.ksh |
| RSE_FCST_LIFE_CYCLE_CLSF_LOAD_START_JOB | Loading exception life cycle items through interface | rse_process_state_update.ksh |
| RSE_FCST_LIFE_CYCLE_CLSF_STG_JOB | Loading exception life cycle items through interface (populates rse_fcst_life_cycle_clsf_stg) | rse_fcst_life_cycle_clsf_stg.ksh |
| RSE_FCST_LIFE_CYCLE_CLSF_COPY_JOB | Loading exception life cycle items through interface | |
| RSE_FCST_LIFE_CYCLE_CLSF_STG_CNE_JOB | Loading exception life cycle items through interface | |
| RSE_FCST_LIFE_CYCLE_CLSF_LOAD_JOB | Loading exception life cycle items through interface (populates rse_fcst_life_cycle_clsf based on rse_fcst_life_cycle_clsf_stg) | rse_fcst_life_cycle_clsf_load.ksh |
| RSE_FCST_LIFE_CYCLE_CLSF_LOAD_END_JOB | Loading exception life cycle items through interface | rse_process_state_update.ksh |
| RSE_INV_LOAD_START_JOB | Inventory Load Start Job | rse_process_state_update.ksh |
| RSE_INV_PR_LC_WK_STG_JOB | Inventory price location week file stage (populates rse_inv_pr_lc_wk_a_stg) | rse_inv_pr_lc_wk_stg.ksh |
| RSE_INV_PR_LC_WK_COPY_JOB | Loading price location week file | |
| RSE_INV_PR_LC_WK_STG_CNE_JOB | Loading price location week file | |
| RSE_INV_PR_LC_WK_LOAD_JOB | Inventory price location week file load (populates rse_inv_pr_lc_wk_a and rse_inv_pr_lc_hist based on rse_inv_pr_lc_wk_a_stg) | rse_inv_pr_lc_wk_load.ksh |
| RSE_INV_PR_LC_WK_SETUP_JOB | Inventory price location week AIF Data load setup | rse_inv_pr_lc_wk_setup.ksh |
| RSE_INV_PR_LC_WK_PROCESS_JOB | Inventory price location week AIF Data load process (populates rse_inv_pr_lc_wk_a and rse_inv_pr_lc_hist based on w_rtl_inv_it_lc_wk_a and w_rtl_invu_it_lc_wk_a) | rse_inv_pr_lc_wk_process.ksh |
| RSE_INV_LOAD_END_JOB | Inventory Load End Job | rse_process_state_update.ksh |
| RSE_EXIT_DATE_LOAD_START_JOB | Start job for exit dates | rse_process_state_update.ksh |
| RSE_EXIT_DATE_STG_JOB | Staging job for exit dates (populates rse_exit_date_stg) | rse_exit_date_stg.ksh |
| RSE_EXIT_DATE_COPY_JOB | CNE copy job for exit dates | |
| RSE_EXIT_DATE_STG_CNE_JOB | CNE staging job for exit dates | |
| RSE_EXIT_DATE_LOAD_JOB | Load job for exit dates (populates rse_exit_date based on rse_exit_date_stg) | rse_exit_date_load.ksh |
| RSE_EXIT_DATE_LOAD_END_JOB | End job for exit dates | rse_process_state_update.ksh |
| PMO_RUN_EXEC_START_JOB | Start job for PMO run execution | rse_process_state_update.ksh |
| PMO_CREATE_BATCH_RUN_JOB | Create batch run for PMO execution | rse_create_pmo_batch_run_proc.ksh |
| PMO_RUN_EXEC_SETUP_JOB | Setup job for PMO run execution | pmo_run_exec_setup.ksh |
| PMO_RUN_EXEC_PROCESS_JOB | Process job for PMO run execution | pmo_run_exec_process.ksh |
| PMO_RUN_EXEC_END_JOB | End job for PMO run execution | rse_process_state_update.ksh |
| PMO_RUN_EXEC_ADHOC_JOB | Ad-hoc job for PMO run execution | pmo_run_execution.ksh |
| RSE_FCST_BATCH_RUN_START_JOB | Start job for forecast batch run | rse_process_state_update.ksh |
| RSE_CREATE_FCST_BATCH_RUN_JOB | Create forecast batch run | rse_create_fcst_batch_run_proc.ksh |
| RSE_FCST_BATCH_PROCESS_JOB | Execute Base Demand and Demand Forecast for forecast run | rse_fcst_batch_process.ksh |
| RSE_FCST_BATCH_RUN_END_JOB | End job for creating forecast batch run | rse_process_state_update.ksh |
| RSE_CREATE_FCST_BATCH_RUN_ADHOC_JOB | Ad-hoc job to create forecast batch run | rse_create_fcst_batch_run_proc.ksh |
| RSE_FCST_BATCH_PROCESS_ADHOC_JOB | Ad-hoc job to execute Base Demand and Demand Forecast for forecast run | rse_fcst_batch_process.ksh |

Forecasts generated by approved runs of active run types that are mapped to IPO-DF are exported to the RDX schema. The following table lists the jobs that export the main forecast data.

Table 13-8 Jobs for Exporting Forecast Outputs from AIF to RDX

| JobName | Description | RMSBatch |
|---|---|---|
| RSE_RDF_FCST_EXPORT_JOB | Export IPO-DF forecast | rse_rdf_export.ksh |
| RSE_RDF_FCST_EXPORT_ADHOC_JOB | Ad hoc job to export IPO-DF forecast | rse_rdf_export.ksh |

The following table lists additional jobs for exporting other data, such as promotions and run type configuration parameters.

Table 13-9 Additional Jobs for Exporting Data from AIF to RDX

| JobName | Description | RmsBatch |
|---|---|---|
| RSE_HIST_PROMO_OFFER_SALES_EXPORT_ADHOC_JOB | Initial one-time job for exporting promotion offer sales figures | rse_rdf_offer_sales_hist_exp.ksh |
| RSE_PROMO_OFFER_EXPORT_START_JOB | Start job for exporting promotion offer | rse_process_state_update.ksh |
| RSE_PROMO_OFFER_EXPORT_JOB | Export promotion offers | rse_rdf_offers_hier_exp.ksh |
| RSE_PROMO_OFFER_SALES_EXPORT_JOB | Export promotion offer sales figures | rse_rdf_offer_sales_exp.ksh |
| RSE_PROMO_OFFER_EXPORT_END_JOB | End job for exporting promotion offer | rse_process_state_update.ksh |
| RSE_PROMO_OFFER_EXPORT_ADHOC_JOB | Ad hoc job to export promotion offers | rse_rdf_offers_hier_exp.ksh |
| RSE_PROMO_OFFER_SALES_EXPORT_ADHOC_JOB | Ad hoc job to export promotion offer sales figures | rse_rdf_offer_sales_exp.ksh |
| RSE_FCST_RUN_TYPE_CONF_EXPORT_START_JOB | Start job for exporting runtype config | rse_process_state_update.ksh |
| RSE_FCST_RUN_TYPE_CONF_EXPORT_SETUP_JOB | Setup job for exporting runtype config | rse_fcst_run_type_conf_exp_setup.ksh |
| RSE_FCST_RUN_TYPE_CONF_EXPORT_PROCESS_JOB | Process job for exporting runtype config | rse_fcst_run_type_conf_exp_process.ksh |
| RSE_FCST_RUN_TYPE_CONF_EXPORT_END_JOB | End job for exporting runtype config | rse_process_state_update.ksh |
| RSE_FCST_RUN_TYPE_CONF_EXPORT_SETUP_ADHOC_JOB | Ad-hoc setup job for exporting runtype config | rse_fcst_run_type_conf_exp_setup.ksh |
| RSE_FCST_RUN_TYPE_CONF_EXPORT_PROCESS_ADHOC_JOB | Ad-hoc process job for exporting runtype config | rse_fcst_run_type_conf_exp_process.ksh |

The jobs for importing forecast parameters from the RDX schema are listed in the following table.

Table 13-10 Jobs for Importing Forecast Parameters from RDX to AIF

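| JobName | Description | RMSBatch |
|---|---|---|
| RSE_RDX_FCST_PARAM_START_JOB | Start job for importing forecast parameters | rse_process_state_update.ksh |
| RSE_RDX_FCST_PARAM_SETUP_JOB | Setup job for importing forecast parameters | rse_rdx_fcst_param_setup.ksh |
| RSE_RDX_FCST_PARAM_PROCESS_JOB | Process job for importing forecast parameters | rse_rdx_fcst_param_process.ksh |
| RSE_RDX_FCST_PARAM_END_JOB | End job for importing forecast parameters | rse_process_state_update.ksh |
| RSE_RDX_FCST_PARAM_SETUP_ADHOC_JOB | Ad-hoc setup job for importing forecast parameters | rse_rdx_fcst_param_setup.ksh |
| RSE_RDX_FCST_PARAM_PROCESS_ADHOC_JOB | Ad-hoc process job for importing forecast parameters | rse_rdx_fcst_param_process.ksh |
| RSE_FCST_RDX_NEW_ITEM_ENABLE_START_JOB | Start job for importing enablement flags for new item forecast | rse_process_state_update.ksh |
| RSE_FCST_RDX_NEW_ITEM_ENABLE_SETUP_JOB | Setup job for importing enablement flags for new item forecast | rse_fcst_rdx_new_item_enable_setup.ksh |
| RSE_FCST_RDX_NEW_ITEM_ENABLE_PROCESS_JOB | Process job for importing enablement flags for new item forecast | rse_fcst_rdx_new_item_enable_process.ksh |
| RSE_FCST_RDX_NEW_ITEM_ENABLE_END_JOB | End job for importing enablement flags for new item forecast | rse_process_state_update.ksh |
| RSE_FCST_RDX_NEW_ITEM_ENABLE_SETUP_ADHOC_JOB | Ad-hoc setup job for importing enablement flags for new item forecast | rse_fcst_rdx_new_item_enable_setup.ksh |
| RSE_FCST_RDX_NEW_ITEM_ENABLE_PROCESS_ADHOC_JOB | Ad-hoc process job for importing enablement flags for new item forecast | rse_fcst_rdx_new_item_enable_process.ksh |
| PMO_EVENT_IND_RDF_START_JOB | Start job for importing event indicators for preprocessing | rse_process_state_update.ksh |
| PMO_EVENT_IND_RDF_SETUP_JOB | Setup job for importing event indicators for preprocessing | pmo_event_ind_rdf_setup.ksh |
| PMO_EVENT_IND_RDF_PROCESS_JOB | Process job for importing event indicators for preprocessing | pmo_event_ind_rdf_process.ksh |
| PMO_EVENT_IND_RDF_END_JOB | End job for importing event indicators for preprocessing | rse_process_state_update.ksh |
| PMO_EVENT_IND_RDF_SETUP_ADHOC_JOB | Ad-hoc setup job for importing event indicators for preprocessing | pmo_event_ind_rdf_setup.ksh |
| PMO_EVENT_IND_RDF_PROCESS_ADHOC_JOB | Ad-hoc process job for importing event indicators for preprocessing | pmo_event_ind_rdf_process.ksh |
| RSE_LIKE_RDX_RSE_START_JOB | Start job for importing new item parameters | rse_process_state_update.ksh |
| RSE_LIKE_RDX_RSE_SETUP_JOB | Setup job for importing new item parameters | rse_like_rdx_rse_setup.ksh |
| RSE_LIKE_RDX_RSE_PROCESS_JOB | Process job for importing new item parameters | rse_like_rdx_rse_process.ksh |
| RSE_LIKE_RDX_RSE_END_JOB | End job for importing new item parameters | rse_process_state_update.ksh |
| RSE_LIKE_RDX_RSE_SETUP_ADHOC_JOB | Ad-hoc setup job for importing new item parameters | rse_like_rdx_rse_setup.ksh |
| RSE_LIKE_RDX_RSE_PROCESS_ADHOC_JOB | Ad-hoc process job for importing new item parameters | rse_like_rdx_rse_process.ksh |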

|

RSE_FCST_RDX_NEW_ITEM_ENABLE_START_JOB |

Start job for importing enablement flags for new item forecast |

rse_process_state_update.ksh |

|

RSE_FCST_RDX_NEW_ITEM_ENABLE_SETUP_JOB |

Setup job for importing enablement flags for new item forecast |

rse_fcst_rdx_new_item_enable_setup.ksh |

|

RSE_FCST_RDX_NEW_ITEM_ENABLE_PROCESS_JOB |

Process job for importing enablement flags for new item forecast |

rse_fcst_rdx_new_item_enable_process.ksh |

|

RSE_FCST_RDX_NEW_ITEM_ENABLE_END_JOB |

End job for importing enablement flags for new item forecast |

rse_process_state_update.ksh |

|

RSE_FCST_RDX_NEW_ITEM_ENABLE_SETUP_ADHOC_JOB |

Ad-hoc setup job for importing enablement flags for new item forecast |

rse_fcst_rdx_new_item_enable_setup.ksh |

|

RSE_FCST_RDX_NEW_ITEM_ENABLE_PROCESS_ADHOC_JOB |

Ad-hoc process job for importing enablement flags for new item forecast |

rse_fcst_rdx_new_item_enable_process.ksh |

|

PMO_EVENT_IND_RDF_START_JOB |

Start job for importing event indicators for preprocessing |

rse_process_state_update.ksh |

|

PMO_EVENT_IND_RDF_SETUP_JOB |

Setup job for importing event indicators for preprocessing |

pmo_event_ind_rdf_setup.ksh |

|

PMO_EVENT_IND_RDF_PROCESS_JOB |

Process job for importing event indicators for preprocessing |

pmo_event_ind_rdf_process.ksh |

|

PMO_EVENT_IND_RDF_END_JOB |

End job for importing event indicators for preprocessing |

rse_process_state_update.ksh |

|

PMO_EVENT_IND_RDF_SETUP_ADHOC_JOB |

Ad-hoc setup job for importing event indicators for preprocessing |

pmo_event_ind_rdf_setup.ksh |

|

PMO_EVENT_IND_RDF_PROCESS_ADHOC_JOB |

Ad-hoc process job for importing event indicators for preprocessing |

pmo_event_ind_rdf_process.ksh |

|

RSE_LIKE_RDX_RSE_START_JOB |

Start job for importing new item parameters |

rse_process_state_update.ksh |

|

RSE_LIKE_RDX_RSE_SETUP_JOB |

Setup job for importing new item parameters |

rse_like_rdx_rse_setup.ksh |

|

RSE_LIKE_RDX_RSE_PROCESS_JOB |

Process job for importing new item parameters |

rse_like_rdx_rse_process.ksh |

|

RSE_LIKE_RDX_RSE_END_JOB |

End job for importing new item parameters |

rse_process_state_update.ksh |

|

RSE_LIKE_RDX_RSE_SETUP_ADHOC_JOB |

Ad-hoc setup job for importing new item parameters |

rse_like_rdx_rse_setup.ksh |

|

RSE_LIKE_RDX_RSE_PROCESS_ADHOC_JOB |

Ad-hoc process job for importing new item parameters |

rse_like_rdx_rse_process.ksh |

The approved forecast is exported from RDX into AIF, and from AIF it is sent to RMS as flat files. The jobs are listed in the following table. Note that the approved forecast source for a run type depends on whether the run type is mapped to the IPO-DF application: if this mapping exists, the approved forecast source is RDX; otherwise, it is AIF.

Table 13-11 Approved Forecast Export Jobs

| JobName | Description | RMSBatch |
|---|---|---|
| RSE_RDX_APPD_FCST_START_JOB | Start job for exporting approved forecast data | rse_process_state_update.ksh |
| RSE_RDX_APPD_FCST_SETUP_JOB | Setup job for exporting approved forecast data | rse_rdx_appd_fcst_setup.ksh |
| RSE_RDX_APPD_FCST_PROCESS_JOB | Process job for exporting approved forecast data | rse_rdx_appd_fcst_process.ksh |
| RSE_RDF_APPR_FCST_EXPORT_JOB | Export approved weekly forecast to a flat file | rse_rdf_appr_fcst_export.ksh |
| RSE_RDF_APPR_FCST_DAY_EXPORT_JOB | Export approved daily forecast to a flat file | rse_rdf_appr_fcst_day_export.ksh |
| RSE_RDX_APPD_FCST_END_JOB | End job for exporting approved forecast data | rse_process_state_update.ksh |
| RSE_RDX_APPD_FCST_SETUP_ADHOC_JOB | Ad-hoc setup job for exporting approved forecast data | rse_rdx_appd_fcst_setup.ksh |
| RSE_RDX_APPD_FCST_PROCESS_ADHOC_JOB | Ad-hoc process job for exporting approved forecast data | rse_rdx_appd_fcst_process.ksh |
| RSE_RDF_APPR_FCST_EXPORT_ADHOC_JOB | Ad hoc job to export approved weekly forecast to a flat file | rse_rdf_appr_fcst_export.ksh |
| RSE_RDF_APPR_FCST_DAY_EXPORT_ADHOC_JOB | Ad-hoc job to export approved daily forecast to a flat file | rse_rdf_appr_fcst_day_export.ksh |

Loading and Calculating Event Flags in AIF

The Out-of-Stock and Outlier indicators are important for the estimation stage of forecasting. These two flags are stored in the AIF table PMO_EVENT_INDICATOR, which is used by the Sales & Promo forecast method.

Loading Out-of-Stock and Outlier Indicators Through the RAP Interface

Customers can load Out-of-Stock and Outlier indicators at the SKU/Store/Week level using the RAP interface (staging table: W_RTL_INVOOS_IT_LC_WK_FS; target table: W_RTL_INVOOS_IT_LC_WK_F). The indicators then flow into AIF, where they are used in forecasting.

Loading Out-of-Stock and Outlier Indicators Through the RDX Interface

This is implemented through the RAP_INTF_SUPPORT_UTIL package, which is also granted to Innovation Workbench (IW), and through DML permissions granted on all the interface tables that reside in RDX01. Before publishing data for an interface, the implementer must look up the configuration of the interface being published. For this use case, the interface name is RDF_FCST_PARM_CAL_EXP:

```sql
SELECT * FROM RAP_INTF_CFG WHERE INTF_NAME = 'RDF_FCST_PARM_CAL_EXP';
```

In the output of this query, pay attention to two columns. PUBLISH_APP_CODE identifies the publishing application (in this example, the value is PDS). CUSTOMER_PUBLISHABLE_FLG must be Y for any interface that IW is going to populate; otherwise, data cannot be published to it. For customer implementations, this requires asking AMS to update the value to Y, for example:

```sql
UPDATE RAP_INTF_CFG SET CUSTOMER_PUBLISHABLE_FLG = 'Y' WHERE INTF_NAME = 'RDF_FCST_PARM_CAL_EXP';
COMMIT;
```

After the flag has been set, the data can be staged and published from IW with a PL/SQL block such as the following:

```sql
DECLARE
  v_run_id         NUMBER;
  v_partition_name VARCHAR2(30);
BEGIN
  -- 'PDS' is the PUBLISH_APP_CODE value obtained from the RAP_INTF_CFG query above.
  RAP_INTF_SUPPORT_UTIL.PREP_INTF_RUN('PDS', 'RDF_FCST_PARM_CAL_EXP', v_run_id, v_partition_name);

  -- Replace the .... with the actual list of columns and an appropriate SELECT statement
  -- that provides the values. The RUN_ID column must be populated with the value
  -- returned into the v_run_id variable above.
  EXECUTE IMMEDIATE 'INSERT /*+ append */ INTO RDF_FCST_PARM_CAL_EXP partition ( '||v_partition_name|| ') .... ';

  -- After the data has been populated into the table, make it available for retrieval
  -- by the consuming application modules.
  RAP_INTF_SUPPORT_UTIL.PUBLISH_DATA('PDS', v_run_id, 'RDF_FCST_PARM_CAL_EXP', 'Ready');
END;
```

After these steps are complete, the data is ready to be consumed by the application modules that use this interface.

Note:

If you call any of the routines in RAP_INTF_SUPPORT_UTIL for an interface whose CUSTOMER_PUBLISHABLE_FLG has not been updated to Y, the call fails with an error stating "No Interfaces for [<values they provided to the procedure>] are allowed/available to be published from custom code."

Calculating Outlier Indicators in AIF

Outlier indicators can also be calculated directly in AIF at any Product/Location/Week level. A historical sale for a Product/Location/Week is flagged as an outlier if it exceeds the Product/Location's average rate of sales over the last 52 weeks multiplied by a threshold. The threshold is set with the PP_LLC_OUTLIER_THRESHOLD parameter in the Preprocessing tab when you set up a Sales & Promo forecast run in the Manage Forecast Configurations screen. This feature can be turned on or off with the PMO_OUTLIER_CALCULATION_FLG parameter in the RSE_CONFIG table (APPL_CODE = PMO) in the Manage System Configurations screen. An environment is expected to either load outlier indicators through the RAP/RDX interface or calculate them in AIF.
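The outlier rule above can be sketched for a single product/location as follows. This is not the AIF implementation. It assumes that the rate of sales is the plain mean of the most recent 52 weeks of history (or of all weeks, if the history is shorter) and that "more than" means strictly greater; `flag_outliers` is a hypothetical name, and `threshold` plays the role of PP_LLC_OUTLIER_THRESHOLD.

```python
from statistics import fmean


def flag_outliers(weekly_sales: list[float], threshold: float, window: int = 52) -> list[bool]:
    """Return one outlier flag per week for a single product/location.

    Assumptions: the rate of sales is the mean of the most recent `window` weeks,
    and a week is an outlier when its sales are strictly above threshold * that mean.
    """
    if not weekly_sales:
        return []
    recent = weekly_sales[-window:]   # last 52 weeks, or fewer if history is shorter
    avg_ros = fmean(recent)           # average weekly rate of sales
    limit = threshold * avg_ros
    return [sales > limit for sales in weekly_sales]
```

With 51 weeks of 10 units followed by one week of 100 units and a threshold of 3, the average is about 11.7 units, the limit is about 35.2 units, and only the last week is flagged.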

Workflow for IPO-DF Implementation

The AIF-IPO-DF workflow can be implemented in two ways.

The main dependency in the first approach is that parameters must be imported from RDX to AIF before the first round of forecasts is exported from AIF to RDX. This is because IPO-DF prefers to receive forecasts for only a subset of the product/locations for which AIF generates forecasts. For the parameter import to work properly, run types must be mapped to IPO-DF and assigned an external key. Here are the steps for the first approach:

1. Create run types in AIF in the Setup train stop.
2. Create test runs in AIF in the Test train stop to verify that the setup is correct.
3. Map run types to IPO-DF and assign external keys in the Map train stop.
4. Import parameters from RDX to AIF (IPO-DF sends parameters using the same external keys assigned in Step 3).
5. Run forecasts again in the Test train stop (this time, the forecasts use the imported parameters).
6. Approve the estimation and forecast runs in the Test train stop.
7. Activate the run types in the Manage train stop.
8. Export forecasts from AIF to RDX.
9. The cycle continues:
   1. Import parameters from RDX to AIF.
   2. Run forecasts.
   3. Approve runs.
   4. Export forecasts from AIF to RDX.

In the second approach, parameters do not have to be imported from RDX to AIF before forecasts are exported from AIF to RDX. This simplifies the workflow and saves time during implementation; however, the batch processes still have this dependency. Here are the steps for the second approach:

1. Create run types in AIF in the Setup train stop.
2. Create test runs in AIF in the Test train stop to verify that the setup is correct.
3. Approve the estimation and forecast runs in the Test train stop.
4. Activate the run types in the Manage train stop.
5. Map run types to IPO-DF and assign external keys in the Map train stop.
6. Export forecasts from AIF to RDX. This exports all product/locations for which AIF has generated a forecast, so the export takes longer because of the large data volume; this is the tradeoff between the two implementation approaches.
7. The cycle continues as in Step 9 of the first approach.

Internally, the two approaches differ in AIF as follows: when the AIF table that stores parameters imported from RDX is empty, AIF exports forecasts for all product/locations (second approach); otherwise, AIF exports forecasts for only the subset of product/locations imported from RDX (first approach).
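The scope decision described above reduces to a single branch on whether any parameters have been imported. The sketch below is illustrative only: `export_scope` and the `(product, location)` tuples are hypothetical, and it assumes the first-approach subset is the intersection of what AIF forecast and what IPO-DF sent back, since AIF can only export product/locations it has forecast.

```python
ProdLoc = tuple[str, str]  # (product, location); illustrative representation


def export_scope(forecast_prod_locs: set[ProdLoc], imported_prod_locs: set[ProdLoc]) -> set[ProdLoc]:
    """Product/locations whose forecasts would be exported from AIF to RDX."""
    if not imported_prod_locs:
        # Second approach: nothing imported from RDX yet, so export everything AIF forecast.
        return set(forecast_prod_locs)
    # First approach: restrict the export to the subset that IPO-DF sent back.
    return forecast_prod_locs & imported_prod_locs
```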

Note that once a forecast run has been exported from AIF to RDX, it can't be exported again. For an active run type, the export code finds the latest forecast run that has completed, has been approved, and has not been exported before.
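The run-selection rule, combined with the earlier requirement that exported runs belong to active run types mapped to IPO-DF, can be sketched as a filter followed by a "latest" pick. The `ForecastRun` record and `next_run_to_export` are hypothetical, and "latest" is assumed here to mean the most recent completion time.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class ForecastRun:
    # Hypothetical record for one forecast run of a run type.
    run_id: int
    completed_at: Optional[datetime]  # None while the run has not completed
    approved: bool
    exported: bool


def next_run_to_export(runs: list[ForecastRun], run_type_active: bool,
                       mapped_to_ipo_df: bool) -> Optional[ForecastRun]:
    """Pick the latest completed, approved, not-yet-exported run, or None."""
    if not (run_type_active and mapped_to_ipo_df):
        return None
    candidates = [r for r in runs
                  if r.completed_at is not None and r.approved and not r.exported]
    # "Latest" is assumed to mean the most recent completion time.
    return max(candidates, key=lambda r: r.completed_at, default=None)
```

Once a run is exported, its `exported` flag would be set, so the same run is never selected twice; if no newer approved run exists, nothing is exported for that run type.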

AIF-IPO-DF Workflow Example

Here is a set of sample steps using the first approach.

1. In AIF Manage Forecast Configurations, train stop 1 (Setup), create a run type.
   - For forecasts used by IPO-DF, choose Sales & Promo or Life Cycle as the Forecast Method.
   - If any run type has an aggregation status of Not Started, click the Start Data Aggregation button for the newly created run type. This process can take a few hours to complete.

2. In AIF Manage Forecast Configurations, train stop 2 (Test):
   - After aggregation completes, create a new run under the selected run type and choose to run Estimation and Base Demand in this step. You do not have to toggle Generate Forecast initially.
   - Approve the estimation parameters by clicking Approve Demand Parameters.
   - Tables to check:
     - Seasonality (from estimation):
       - For both methods: RSE_SEAS_CURVE_DIM (using pmo run id)
       - For both methods: RSE_SEAS_CURVE_WK (using pmo run id)
     - Base Demand:
       - For Life Cycle: RSE_BASE_DEMAND (using fcst run id)
       - For Sales & Promo: RSE_LLC_BD_RES (using fcst run id)
     - Forecast (when Generate Forecast is selected):
       - For Life Cycle: RSE_DMD_FCST (using fcst run id)
       - For Sales & Promo: RSE_LLC_FCST_BLINE (using fcst run id)

3. In AIF Manage Forecast Configurations, train stop 4 (Map):
   - Map the forecast run type to IPO-DF.
   - Make sure to assign the external application key for IPO-DF, which is usually Weekly Forecast. This name must match the name used on the IPO-DF side.

4. In IPO-DF:
   - Perform your initial IPO-DF workflow tasks to set up forecast parameters (History Length, Forecast Start Date, Forecast End Date), new items, and so on.
   - Run the IPO-DF Pre-Forecast and Export Forecast Parameters batches using the IPO_PRE_BATCH_W_JOB and IPO_PRE_EXP_RDX_W_JOB processes:
     - POM job IPO_PRE_BATCH_W_JOB triggers the Pre-Forecast Batch task in IPO-DF OAT.
     - POM job IPO_PRE_EXP_RDX_W_JOB triggers the Export Forecast Parameters task in IPO-DF OAT.
   - Check the following tables after the batches complete:
     - RDF_FCST_PARM_HDR_EXP
       - rdf_rdfruntype01:RDF_RUN_TYPE_KEY
     - RDF_RUN_TYPE_PARM_EXP
       - rdf_rdfruntype01:RDF_RUN_TYPE_KEY
       - rdf_runnewitem01:ENABLE_NEW_ITEM
       - rdf_runfrcst01:ENABLE_FORECAST
     - RDF_FCST_PARM_EXP
       - rdf_hislen01:FCST_HIST_LENGTH
       - rdf_frcststartdt01:FCST_START_DATE
       - rdf_frcstenddtlw01:FCST_END_DATE
     - RDF_FCST_PARM_CAL_EXP
       - rdf_ppsbopdate01:BOP_DATE
       - rdf_totadjslsovr01:USER_OVERRIDE
       - rdf_outageind01:PPS_OUTAGE_IND
       - rdf_outlierind01:PPS_OUTLIER_IND
     - RDF_NIT_PARM_EXP
       - PROD_HIER_LEVEL:SKU
       - LOC_HIER_LEVEL:STOR
       - rdf_fcpsubm:NIT_SUB_METHOD
       - rdf_fcpros:NIT_ROS_USER_IN
       - rdf_nitapplkitm1:NIT_LIKE_ITEM
       - rdf_nstapplkstr1:NIT_LIKE_STOR
       - rdf_fcpadj:ADJUSTMENT_FACTOR

5. Run jobs in POM to import parameters from RDX to AIF:
   - RSE_RDX_FCST_PARAM_ADHOC_PROCESS
     - RSE_RDX_FCST_PARAM_SETUP_ADHOC_JOB
     - RSE_RDX_FCST_PARAM_PROCESS_ADHOC_JOB
     - Table to check: RSE_RDF_FCST_RUN_TYPE_PRM (from RDF_FCST_PARM_HDR_EXP and RDF_FCST_PARM_EXP)
   - RSE_FCST_RDX_NEW_ITEM_ENABLE_ADHOC_PROCESS
     - RSE_FCST_RDX_NEW_ITEM_ENABLE_SETUP_ADHOC_JOB
     - RSE_FCST_RDX_NEW_ITEM_ENABLE_PROCESS_ADHOC_JOB
     - Table to check: RSE_FCST_RDX_NEW_ITEM_ENABLE (from RDF_RUN_TYPE_PARM_EXP)
   - RSE_LIKE_RDX_RSE_ADHOC_PROCESS
     - RSE_LIKE_RDX_RSE_SETUP_ADHOC_JOB
     - RSE_LIKE_RDX_RSE_PROCESS_ADHOC_JOB
     - Table to check: RSE_FCST_NEW_ITEM_PARAM (from RDF_NIT_PARM_EXP)
   - PMO_EVENT_IND_RDF_ADHOC_PROCESS
     - PMO_EVENT_IND_RDF_SETUP_ADHOC_JOB
     - PMO_EVENT_IND_RDF_PROCESS_ADHOC_JOB
     - Table to check: PMO_EVENT_INDICATOR (from RDF_FCST_PARM_CAL_EXP)

6. In AIF Manage Forecast Configurations, train stop 2 (Test):
   - Create a new run under the same run type you have been working with. If Estimation does not need to be run again, choose to run only Base Demand; toggle Generate Forecast this time.
   - If estimation has been run, approve the estimation parameters by clicking Approve Demand Parameters.
   - Approve the base demand and forecast by clicking Approve Base Demand and Forecast.
   - Tables to check:
     - Seasonality (when estimation is run):
       - For both methods: RSE_SEAS_CURVE_DIM (using pmo run id)
       - For both methods: RSE_SEAS_CURVE_WK (using pmo run id)
     - Base Demand:
       - For Life Cycle: RSE_BASE_DEMAND (using fcst run id)
       - For Sales & Promo: RSE_LLC_BD_RES (using fcst run id)
     - Forecast:
       - For Life Cycle: RSE_DMD_FCST (using fcst run id)
       - For Sales & Promo: RSE_LLC_FCST_BLINE (using fcst run id)

7. In AIF Manage Forecast Configurations, train stop 3 (Manage):
   - Use View/Edit Configuration Parameters to view or apply the parameters from the previously approved run. These parameters are used for batch runs; this step is not needed for forecast runs created from the AIF UI.
   - Activate the forecast run type.
   - Use Manage Auto-Approve to enable auto-approve for the run type before starting batch cycles (not needed for forecast runs created from the AIF UI).

8. Run jobs in POM to export forecasts from AIF to RDX:
   - RSE_FCST_RUN_TYPE_CONF_EXPORT_ADHOC_PROCESS
     - RSE_FCST_RUN_TYPE_CONF_EXPORT_SETUP_ADHOC_JOB
     - RSE_FCST_RUN_TYPE_CONF_EXPORT_PROCESS_ADHOC_JOB
   - RSE_FCST_EXPORT_ADHOC_PROCESS (do not run this whole process, because it also includes the AP and MFP forecast exports; run only the following job from it)
     - RSE_RDF_FCST_EXPORT_ADHOC_JOB

9. After the jobs complete, check the following tables in Innovation Workbench for a quick validation:
   - rdx01.rse_fcst_run_type_config (populated by RSE_FCST_RUN_TYPE_CONF_EXPORT_ADHOC_PROCESS; based on an independent export run ID)
   - rdx01.rse_fcst_run_hdr_exp (populated by RSE_RDF_FCST_EXPORT_ADHOC_JOB; this table associates the export run ID with the forecast run ID approved in Step 6)
   - rdx01.rse_fcst_run_config (populated by RSE_RDF_FCST_EXPORT_ADHOC_JOB; based on the export run ID from rdx01.rse_fcst_run_hdr_exp)
   - rdx01.rse_fcst_appr_base_dmd_exp (populated by RSE_RDF_FCST_EXPORT_ADHOC_JOB; based on the export run ID from rdx01.rse_fcst_run_hdr_exp)
   - rdx01.rse_fcst_demand_dtl_exp (populated by RSE_RDF_FCST_EXPORT_ADHOC_JOB; based on the export run ID from rdx01.rse_fcst_run_hdr_exp)
   - rdx01.rse_fcst_demand_src_exp (populated by RSE_RDF_FCST_EXPORT_ADHOC_JOB; based on the export run ID from rdx01.rse_fcst_run_hdr_exp)
   - rdx01.rse_fcst_demand_dtl_cal_exp (populated by RSE_RDF_FCST_EXPORT_ADHOC_JOB; based on the export run ID from rdx01.rse_fcst_run_hdr_exp)

10. In IPO-DF:
    - Import the forecasts into IPO-DF using IPO_POST_DATA_IMP_PROCESS, which runs the following two OAT tasks in IPO-DF:
      - Import Forecast and Preprocessing Component task in IPO-DF OAT
        - RSE_FCST_RUN_HDR_EXP
          - rdf_rserunid01:RUN_ID
        - RSE_FCST_DEMAND_DTL_CAL_EXP
          - rdf_sysbaseline01:BASELINE_FCST_QTY
          - rdf_sysfrcst01:DEMAND_FCST_QTY
          - rdf_syspeak01:EVENT_PEAK_QTY
          - rdf_eventfut01:EVENT_CLND
          - rdf_prcdiscfut01:PRICE_DISC_PERCENT
        - RSE_FCST_DEMAND_DTL_EXP
          - rdf_basedemand01:BASE_DEMAND_QTY
          - rdf_priceelas01:PRICE_ELASTICITY
        - RSE_FCST_APPR_BASE_DMD_EXP
          - rdf_appchosenlevel01:APPR_BASE_DEMAND_QTY
        - RSE_FCST_DEMAND_SRC_EXP
          - rdf_outageind01:STOCKOUT_IND
          - rdf_outageadj01:LOST_SLS_QTY
          - rdf_outlierind01:OUTLIER_IND
          - rdf_outlieradj01:OUTLIER_SLS_QTY
          - rdf_depromoadj01:PROMO_SLS_QTY
          - rdf_deprice01:CLR_SLS_QTY
          - rdf_totadjsls01:PREPROCESSED_SLS_QTY
      - Post Forecast Batch task in IPO-DF OAT
    - Build the Forecast Review workspace to review, update, and approve forecasts.
    - Export approved forecasts by running the IPO_POST_EXP_RDX POM job:
      - Export Approved Forecast OAT task
        - RDF_APPR_FCST_HDR_EXP
          - rdf_apprserunid01:RSE_RUN_ID
        - RDF_APPR_FCST_CAL_EXP
          - rdf_bopdate01:BOP_DATE
          - rdf_appbaseline01:APPR_BASELINE_FCST
          - rdf_appfrcst01:APPR_DEMAND_FCST
          - rdf_appcumint01:APPR_CUMINT

11. To push the approved forecast from RDX to RMS/object store (as a flat file) via AIF:
    - Run RSE_RDX_APPD_FCST_ADHOC_PROCESS in POM to import the approved forecast from RDX to AIF:
      - RSE_RDX_APPD_FCST_SETUP_ADHOC_JOB
      - RSE_RDX_APPD_FCST_PROCESS_ADHOC_JOB
      - Table to check: RSE_RDF_APPR_FCST (from RDF_APPR_FCST_HDR_EXP and RDF_APPR_FCST_CAL_EXP)
    - Run RSE_RDF_APPR_FCST_EXPORT_ADHOC_PROCESS (RSE_RDF_APPR_FCST_EXPORT_ADHOC_JOB) in POM to export the data from RSE_RDF_APPR_FCST_VW (week-level forecast) to RMS/object store as a flat file.
    - If a day-level forecast is generated, run RSE_RDF_APPR_FCST_DAY_EXPORT_ADHOC_PROCESS (RSE_RDF_APPR_FCST_DAY_EXPORT_ADHOC_JOB) in POM to export the data from RSE_RDF_APPR_FCST_DAY_VW (day-level forecast) to RMS/object store as a flat file.

12. Run the W_RTL_PLANFC_PROD2_LC2_T2_F_JOB job in POM to import approved forecasts into RI (this job is in RI_FORECAST_CYCLE Jobs).
    - Note that W_RTL_PLANFC_PROD2_LC2_T2_F gets its source data from RSE_RDF_APPR_FCST_VW.
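The "Tables to check" lists in the Test train stop steps of this example can be captured as a small lookup when scripting post-run validation. This is a sketch under stated assumptions: the table names and run ID kinds are transcribed from the steps above, while `tables_to_check` and its arguments are hypothetical, and it assumes base demand is produced by every run (as in both Test steps).

```python
# Transcribed from the "Tables to check" lists; the run ID kind says which ID filters each table.
SEASONALITY = [("RSE_SEAS_CURVE_DIM", "pmo run id"), ("RSE_SEAS_CURVE_WK", "pmo run id")]
BASE_DEMAND = {
    "Life Cycle": ("RSE_BASE_DEMAND", "fcst run id"),
    "Sales & Promo": ("RSE_LLC_BD_RES", "fcst run id"),
}
FORECAST = {
    "Life Cycle": ("RSE_DMD_FCST", "fcst run id"),
    "Sales & Promo": ("RSE_LLC_FCST_BLINE", "fcst run id"),
}


def tables_to_check(method: str, estimation_run: bool, forecast_generated: bool) -> list[tuple[str, str]]:
    """Return (table, run id kind) pairs to inspect after a Test train stop run."""
    if method not in BASE_DEMAND:
        raise ValueError(f"unsupported forecast method: {method!r}")
    # Seasonality tables are both-method outputs of estimation.
    checks = list(SEASONALITY) if estimation_run else []
    checks.append(BASE_DEMAND[method])
    if forecast_generated:
        checks.append(FORECAST[method])
    return checks
```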

Note:

For Steps 11 and 12 to work, the run types must also be mapped to the RI/RMS application in AIF Manage Forecast Configurations, train stop 4 (Map).

Using the Add Multiple Run Types Feature

An "Add Multiple Run Types" feature is available in the Setup train stop of the Manage Forecast Configurations screen. To add, edit, or delete the rows shown in the "Add Multiple Run Types" table, edit the two tables RSE_CUSTOM_PROCESS_HDR and RSE_CUSTOM_PROCESS_DTL, which are available in the Manage System Configurations screen.

Building an Alternate Hierarchy in AIF Applications

AIF applications can build alternate location and alternate product hierarchies. Alternate hierarchy information should be available in the AIF data warehouse tables (also used by Retail Insights). RSE_ALT_HIER_TYPE_STG and RSE_ALT_HIER_LEVEL_STG are the two relevant tables available in the Manage System Configurations screen, and you can add, edit, and delete rows in them. Provide information about the alternate hierarchy types and levels through these tables. Then run the alternate hierarchy jobs to generate the alternate hierarchies in AIF. The following table lists the relevant jobs.

Table 13-12 Alternate Hierarchy Jobs

| Job Name | Description | RMS Batch |
|---|---|---|
| RSE_ALT_HIER_SETUP_START_JOB | Start job for alternate hierarchy type setup | rse_process_state_update.ksh |
| RSE_ALT_HIER_LOAD_JOB | Set up alternate hierarchy types by loading data from the RSE_ALT_HIER_TYPE_STG table | rse_alt_hier_load.ksh |
| RSE_ALT_HIER_SETUP_END_JOB | End job for alternate hierarchy type setup | rse_process_state_update.ksh |
| RSE_ALT_HIER_LOAD_ADHOC_JOB | Ad hoc job to set up alternate hierarchy types by loading data from the RSE_ALT_HIER_TYPE_STG table | rse_alt_hier_load.ksh |
| RSE_ALT_LOC_HIER_START_JOB | Start job for alternate location hierarchy load | rse_process_state_update.ksh |
| RSE_ALT_LOC_HIER_SRC_XREF_LOAD_JOB | Load the RSE_LOC_SRC_XREF table with alternate location hierarchy data | rse_alt_loc_hier_process.ksh |
| RSE_ALT_LOC_SRC_XREF_DUP_CHECK_JOB | Check for RSE_ALT_LOC_SRC_XREF column values that were either modified or for which rows were bypassed to avoid duplicate key on index errors | rse_alt_loc_src_xref_dup_check.ksh |
| RSE_ALT_LOC_HIER_LOAD_JOB | Load the RSE_LOC_HIER table with alternate location hierarchy data | rse_alt_loc_hier_load.ksh |
| RSE_ALT_LOC_HIER_TC_LOAD_JOB | Load the RSE_LOC_HIER_TC table with alternate location hierarchy data | rse_alt_loc_hier_tc_load.ksh |
| RSE_ALT_LOC_HIER_DH_LOAD_JOB | Load the RSE_LOC_HIER_DH table with alternate location hierarchy data | rse_alt_loc_hier_dh_load.ksh |
| RSE_ALT_LOC_HIER_END_JOB | End job for alternate location hierarchy load | rse_process_state_update.ksh |
| RSE_ALT_LOC_HIER_SRC_XREF_LOAD_ADHOC_JOB | Ad hoc job to load the RSE_LOC_SRC_XREF table with alternate location hierarchy data | rse_alt_loc_hier_process.ksh |
| RSE_ALT_LOC_SRC_XREF_DUP_CHECK_ADHOC_JOB | Ad hoc job to check for RSE_ALT_LOC_SRC_XREF column values that were either modified or for which rows were bypassed to avoid duplicate key on index errors | rse_alt_loc_src_xref_dup_check.ksh |
| RSE_ALT_LOC_HIER_LOAD_ADHOC_JOB | Ad hoc job to load the RSE_LOC_HIER table with alternate location hierarchy data | rse_alt_loc_hier_load.ksh |
| RSE_ALT_LOC_HIER_TC_LOAD_ADHOC_JOB | Ad hoc job to load the RSE_LOC_HIER_TC table with alternate location hierarchy data | rse_alt_loc_hier_tc_load.ksh |
| RSE_ALT_LOC_HIER_DH_LOAD_ADHOC_JOB | Ad hoc job to load the RSE_LOC_HIER_DH table with alternate location hierarchy data | rse_alt_loc_hier_dh_load.ksh |
| RSE_ALT_PROD_HIER_START_JOB | Start job for alternate product hierarchy load | rse_process_state_update.ksh |
| RSE_ALT_PROD_HIER_SRC_XREF_LOAD_JOB | Load the RSE_PROD_SRC_XREF table with alternate product hierarchy data | rse_alt_prod_hier_process.ksh |
| RSE_ALT_PROD_SRC_XREF_DUP_CHECK_JOB | Check for RSE_ALT_PROD_SRC_XREF column values that were either modified or for which rows were bypassed to avoid duplicate key on index errors | rse_alt_prod_src_xref_dup_check.ksh |
| RSE_ALT_PROD_HIER_LOAD_JOB | Load the RSE_PROD_HIER table with alternate product hierarchy data | rse_alt_prod_hier_load.ksh |
| RSE_ALT_PROD_HIER_TC_LOAD_JOB | Load the RSE_PROD_HIER_TC table with alternate product hierarchy data | rse_alt_prod_hier_tc_load.ksh |
| RSE_ALT_PROD_HIER_DH_LOAD_JOB | Load the RSE_PROD_HIER_DH table with alternate product hierarchy data | rse_alt_prod_hier_dh_load.ksh |
| RSE_ALT_PROD_HIER_END_JOB | End job for alternate product hierarchy load | rse_process_state_update.ksh |
| RSE_ALT_PROD_HIER_SRC_XREF_LOAD_ADHOC_JOB | Ad hoc job to load the RSE_PROD_SRC_XREF table with alternate product hierarchy data | rse_alt_prod_hier_process.ksh |
| RSE_ALT_PROD_SRC_XREF_DUP_CHECK_ADHOC_JOB | Ad hoc job to check for RSE_ALT_PROD_SRC_XREF column values that were either modified or for which rows were bypassed to avoid duplicate key on index errors | rse_alt_prod_src_xref_dup_check.ksh |
| RSE_ALT_PROD_HIER_LOAD_ADHOC_JOB | Ad hoc job to load the RSE_PROD_HIER table with alternate product hierarchy data | rse_alt_prod_hier_load.ksh |
| RSE_ALT_PROD_HIER_TC_LOAD_ADHOC_JOB | Ad hoc job to load the RSE_PROD_HIER_TC table with alternate product hierarchy data | rse_alt_prod_hier_tc_load.ksh |
| RSE_ALT_PROD_HIER_DH_LOAD_ADHOC_JOB | Ad hoc job to load the RSE_PROD_HIER_DH table with alternate product hierarchy data | rse_alt_prod_hier_dh_load.ksh |
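Table 13-12 lists the batch jobs for each hierarchy as a start job, the load jobs, and an end job. The sketch below assumes that this listed order is also the required run order. Under that assumption, it checks an executed job sequence (for example, one transcribed by hand from POM) against the location or product chain. The `check_run` helper and the chain constants are hypothetical and do not query POM.

```python
# Batch (non-ad hoc) job chains, in the order Table 13-12 lists them.
# Assumption: the listed order is the order in which the jobs must run.
TYPE_SETUP_CHAIN = [
    "RSE_ALT_HIER_SETUP_START_JOB",
    "RSE_ALT_HIER_LOAD_JOB",
    "RSE_ALT_HIER_SETUP_END_JOB",
]
LOC_CHAIN = [
    "RSE_ALT_LOC_HIER_START_JOB",
    "RSE_ALT_LOC_HIER_SRC_XREF_LOAD_JOB",
    "RSE_ALT_LOC_SRC_XREF_DUP_CHECK_JOB",
    "RSE_ALT_LOC_HIER_LOAD_JOB",
    "RSE_ALT_LOC_HIER_TC_LOAD_JOB",
    "RSE_ALT_LOC_HIER_DH_LOAD_JOB",
    "RSE_ALT_LOC_HIER_END_JOB",
]
# The product chain uses the same job names with PROD in place of LOC.
PROD_CHAIN = [job.replace("_LOC_", "_PROD_") for job in LOC_CHAIN]


def check_run(executed: list[str], chain: list[str]) -> list[str]:
    """Hypothetical helper: report chain jobs that are missing or ran out of order."""
    rank = {job: i for i, job in enumerate(chain)}
    problems = [f"missing: {job}" for job in chain if job not in executed]
    # Keep only this chain's jobs, in the order they actually ran.
    ran = [job for job in executed if job in rank]
    for earlier, later in zip(ran, ran[1:]):
        if rank[earlier] > rank[later]:
            problems.append(f"out of order: {earlier} ran before {later}")
    return problems
```

Jobs from other chains in `executed` are ignored. This lets you pass a full cycle log and check each chain separately.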

Note:

Before changing the location and/or product hierarchy type for the environment, delete all existing forecast run types. Create new run types after the hierarchy type changes are complete.

To delete an alternate hierarchy, complete the following steps:

- Update the delete flag to Y on the row in the RSE_ALT_HIER_TYPE_STG table that corresponds to the alternate hierarchy being deleted.

- Delete all the level rows from the RSE_ALT_HIER_LEVEL_STG table that correspond to the alternate hierarchy being deleted.

- Run the processes/jobs to rebuild the alternate hierarchies, in the same way you previously built them.

  If you are working with standalone jobs, either use RSE_MASTER_ADHOC_JOB to run the hierarchy loads again (using the -X flag for the alternate hierarchy load, and other flags as needed) or use the ad hoc jobs listed in Table 13-12. For batch runs, the jobs listed in Table 13-12 handle the deletion. You cannot delete and rebuild the same hierarchy in the same batch cycle or standalone process.

When the jobs process the deletion, they make the following changes:

- The delete flag in the RSE_ALT_HIER_TYPE table is set to Y.
- The corresponding row in the RSE_HIER_TYPE table is deleted.
- The corresponding rows in the RSE_ALT_HIER_LEVEL table are deleted.
- The corresponding rows in the RSE_HIER_LEVEL table are deleted.
- The corresponding partition in the RSE_LOC_HIER and/or RSE_PROD_HIER tables is dropped.
- The corresponding partition in the RSE_LOC_HIER_DH and/or RSE_PROD_HIER_DH tables is dropped.
- The corresponding partition in the RSE_LOC_HIER_TC and/or RSE_PROD_HIER_TC tables is dropped.
- The corresponding partition in the RSE_LOC_SRC_XREF and/or RSE_PROD_SRC_XREF tables is dropped.
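Before you rerun the hierarchy jobs, you may want to confirm three things: the staging rows match the first two deletion steps, each active hierarchy still has levels, and no hierarchy is scheduled for both deletion and rebuild in one cycle. The sketch below uses hypothetical dataclasses in place of RSE_ALT_HIER_TYPE_STG and RSE_ALT_HIER_LEVEL_STG rows. The field names and sample IDs are illustrative, not the real column names. The check that an active type has at least one level row is an assumption, not a documented rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AltHierTypeRow:
    """Hypothetical stand-in for an RSE_ALT_HIER_TYPE_STG row."""
    hier_type_id: str
    delete_flg: str  # "Y" marks the alternate hierarchy for deletion


@dataclass(frozen=True)
class AltHierLevelRow:
    """Hypothetical stand-in for an RSE_ALT_HIER_LEVEL_STG row."""
    hier_type_id: str
    level_name: str


def staging_problems(types: list[AltHierTypeRow], levels: list[AltHierLevelRow]) -> list[str]:
    """Flag staging rows that are inconsistent with the deletion steps."""
    ids_with_levels = {lvl.hier_type_id for lvl in levels}
    problems = []
    for row in types:
        if row.delete_flg == "Y" and row.hier_type_id in ids_with_levels:
            # The second deletion step removes all level rows for the hierarchy.
            problems.append(f"{row.hier_type_id}: delete flag is Y but level rows remain")
        elif row.delete_flg != "Y" and row.hier_type_id not in ids_with_levels:
            # Assumption: an active alternate hierarchy needs at least one level.
            problems.append(f"{row.hier_type_id}: active type has no level rows")
    return problems


def same_cycle_conflicts(deleted: list[str], rebuilt: list[str]) -> list[str]:
    """Return hierarchies scheduled for both deletion and rebuild in one cycle."""
    return sorted(set(deleted) & set(rebuilt))
```

For example, a type row for a hypothetical "ALT_PROD_1" with the delete flag set to Y that still has a level row would be reported by `staging_problems`.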