5 Batch Orchestration

This chapter describes the tools, processes, and implementation considerations for configuring and maintaining the batch schedules used by the Retail Analytics and Planning (RAP) platform. This includes nightly, weekly, and ad hoc batch cycles added in the Process Orchestration and Monitoring (POM) tool for each of the RAP applications.

Overview

All applications on the Retail Analytics and Planning platform have either a nightly or weekly batch schedule. Periodic batches allow the applications to move large amounts of data during off-peak hours. They can perform long-running calculations and analytical processes that cannot be completed while users are in the system, and close out the prior business day in preparation for the next one.

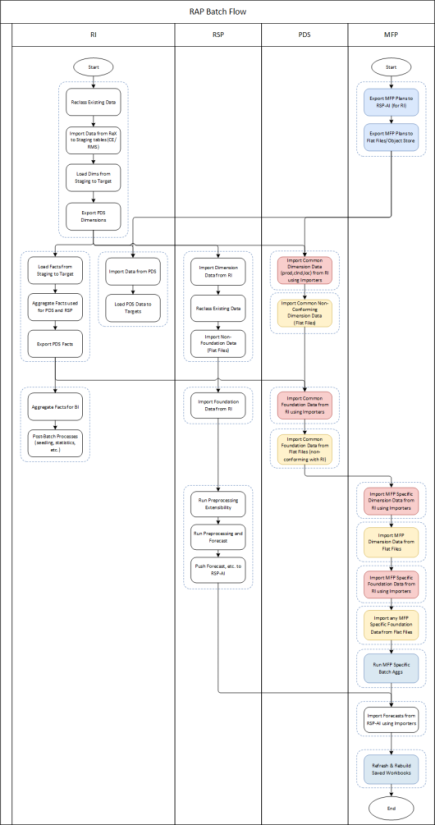

To ensure consistency across the platform, all batch schedules have some level of interdependencies established, where jobs of one application require processes from another schedule to complete successfully before they can begin. The flow diagram below provides a high-level view of schedule dependencies and process flows across RAP modules.

Figure 5-1 Batch Process High-Level Flow

The frequency of batches will vary by application. However, the core data flow through the platform must execute nightly. This includes data extraction from RMS by way of RDE (if used) and data loads into Retail Insights and AI Foundation.

Downstream applications from Retail Insights, such as Merchandise Financial Planning, may only execute the bulk of their jobs on a weekly basis. This does not mean the schedule itself can run weekly (as the MFP batch did in previous versions); those end-of-week processes now rely on the consumption and transformation of data that happens in the nightly batches. For example, Retail Insights consumes sales and inventory data on a daily basis. However, the exports to Planning (and subsequent imports in those applications) are only run at the end of the week, and are cumulative for all the days of data up to that point in the week.

For this reason, assume that most of the data flow and processing that is happening within the platform will happen every day and plan your file uploads and integrations with non-Oracle systems accordingly.
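
The daily-load, weekly-export pattern described above can be sketched as follows. This is only an illustration of the cumulative behavior: the function name, dates, and values are hypothetical and do not correspond to any RAP job, table, or interface.

```python
from datetime import date, timedelta


def weekly_export(daily_sales, week_ending):
    """Sum the daily values for the 7 days ending on week_ending (inclusive)."""
    week_start = week_ending - timedelta(days=6)
    return sum(
        amount
        for day, amount in daily_sales.items()
        if week_start <= day <= week_ending
    )


# Hypothetical daily loads: one value arrives with each nightly batch.
daily_sales = {date(2024, 1, 1) + timedelta(days=i): 100.0 + i for i in range(7)}

# The end-of-week export covers every day loaded so far in that week.
total = weekly_export(daily_sales, date(2024, 1, 7))
print(total)
```

If a daily file is skipped, the week-end total is short by that day's data, which is why uploads should be planned for every day even when the consuming application runs weekly.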

While much of the batch process has been automated and pre-configured in POM, there are still several activities that need to be performed which are specific to each implementation. Table 5-1 summarizes these activities and the reasons for doing them. Additional details are provided in subsequent sections of this document.

Table 5-1 Common Batch Orchestration Activities

| Activity | Description |
|---|---|
| Initial Batch Setup | By default, most batch processes are enabled for all of the applications. It is the implementer’s responsibility to disable batches that will not be used by leveraging the Customer Modules Management screen in Retail Home. |
| Configure POM Integrations | The POM application supports external integration methods including external dependencies and process callbacks. Customers that leverage non-Oracle schedulers or batch processing tools may want to integrate POM with their existing processes. |
| Schedule the Batches | Schedules in POM must be given a start time to run automatically. Once started, you have a fixed window of time to provide all the necessary file uploads, after which time the batch will fail due to missing data files. |
| Batch Flow Details | It is possible to export the batch schedules from POM to review process/job mappings, job dependencies, inter-schedule dependencies, and other details. This can be very useful when deciding which processes to enable in a flow, or when debugging failures at specific steps in the process and assessing how they impact downstream processing. |

Initial Batch Setup

As discussed in Implementation Tools, setting up the initial batches requires access to two tools: POM and Retail Home. Refer to that chapter if you have not yet configured your user accounts to enable batch management activities.

When using POM and Customer Modules Management, you must enable or disable the application components based on your implementation needs. The sections below describe which modules are needed to leverage the core integrations between applications on the platform. If a module is not present in the lists below, then it is only useful if you are implementing that specific application and require that specific functionality. For example, most modules within Retail Insights are not required for the platform as a whole and can be disabled if you do not plan to implement RI.

After you make changes to the modules, make sure to synchronize your batch schedule in POM following the steps in Implementation Tools. If you are unsure whether a module should be enabled or not, you can initially leave it enabled and then disable jobs individually from POM as needed.

Common Modules

The following table lists the modules that are used across all platform implementations. These modules process core foundation data, generate important internal datasets, and move data downstream for other applications to use. Verify in your environment that these are visible in Customer Modules Management and are enabled.

Table 5-2 RAP Common Batch Modules

| Application | Module | Usage Notes |
|---|---|---|
| RAP | COMMON > BATCH | Contains the minimum set of batch processes needed to load data into the platform and prepare it for one or more downstream applications (such as MFP or AI Foundation) when RI is not being implemented. This allows implementers to disable RI modules entirely to minimize the number of active batch jobs. |
| RAP | COMMON > RDXBATCH | Contains the batch processes for extracting data from RI to send to one or more Planning applications. |
| RAP | COMMON > ZIP_FILES | Choose which ZIP packages must be present before the nightly batch process begins. The batch only looks for the enabled files when starting and fails if any are not present. |
| RAP | COMMON > SIBATCH | Choose which input interfaces will be executed for loading flat files into RAP. Disabled interfaces will not look for or load the associated file even if it is provided. |
| RAP | COMMON > SICONTROLFILES | Choose which input files must be present before the nightly batch process begins. Active control files mark a file as required, and the batch fails if it is not provided. Inactive control files are treated as optional and are loaded if their associated job is enabled, but the batch will not fail if they are not provided. |
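
The required-versus-optional behavior of control files can be sketched as below. This is a simplified model for planning purposes, not the platform's implementation: the function and file names are hypothetical, and it assumes the load job associated with every file is enabled.

```python
def check_input_files(control_files, uploaded):
    """Return (files_to_load, missing_required).

    control_files maps a file name to True (active control file, required)
    or False (inactive control file, optional). uploaded is the set of file
    names actually received.
    """
    missing_required = sorted(
        name
        for name, active in control_files.items()
        if active and name not in uploaded
    )
    # Any configured file that was received is loaded, required or not.
    files_to_load = sorted(name for name in control_files if name in uploaded)
    return files_to_load, missing_required


# Hypothetical file names, for illustration only.
control_files = {"PRODUCT.csv": True, "SALES.csv": True, "RECEIPT.csv": False}
to_load, missing = check_input_files(control_files, {"PRODUCT.csv", "RECEIPT.csv"})

# A missing required file fails the batch; a missing optional file does not.
batch_fails = bool(missing)
print(to_load, missing, batch_fails)
```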

After setting up the common modules and syncing with POM, ensure that certain critical batch processes in the RI schedule (which is used by all of RAP) are enabled in Batch Monitoring. This can be used as a check to validate the POM sync occurred:

- RESET_ETL_THREAD_VAL_STG_JOB
- TRUNCATE_STAGE_TABLES_JOB
- DELETE_STATS_JOB
- RI_UPDATE_TENANT_JOB

Some of these jobs begin in a disabled state in POM (depending on the product version) so the POM sync should ensure they are enabled. If they are not enabled after the POM sync, be sure to enable them before attempting any batch runs.
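
If you keep a record of job statuses (for example, copied by hand from Batch Monitoring), a check like the following can flag which of the critical jobs still need to be enabled. The job names come from the list above; the dictionary format and the function name are assumptions made for this sketch and are not a POM export format or API.

```python
CRITICAL_JOBS = [
    "RESET_ETL_THREAD_VAL_STG_JOB",
    "TRUNCATE_STAGE_TABLES_JOB",
    "DELETE_STATS_JOB",
    "RI_UPDATE_TENANT_JOB",
]


def jobs_needing_enable(job_status):
    """Return the critical jobs that are missing from job_status or disabled."""
    return [job for job in CRITICAL_JOBS if not job_status.get(job, False)]


# Hypothetical statuses recorded after a POM sync (True means enabled).
job_status = {
    "RESET_ETL_THREAD_VAL_STG_JOB": True,
    "TRUNCATE_STAGE_TABLES_JOB": True,
    "DELETE_STATS_JOB": False,
}

to_enable = jobs_needing_enable(job_status)
print(to_enable)
```

A job that is absent from the record is treated the same as a disabled one, so an incomplete sync is reported rather than silently passed.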

RI Modules

The following table lists modules within the Retail Insights application which may be used by one or more RAP applications, in addition to the common modules from the prior section.

Table 5-3 RI Shared Batch Modules

| Application | Module | Usage Notes |
|---|---|---|
| RI | COMMON | Use this section to choose your ZIP files for all flat file integrations. The same ZIP files can be selected from other application modules and they only need to be enabled in one place to become active. |
| RI | RSP > CONTROLFILES | Enable only the files you plan to send based on your AI Foundation implementation plan. Refer to the Interfaces Guide for details. This is only used for non-foundation or legacy DAT files. This directly affects what files are required to be in the zip files, when an RI Daily Batch is executed. Required files that are missing will fail the batch. |
| RI | RSP > BATCH | Enable |

AI Foundation Modules

The following table lists modules within the AI Foundation applications which may be used by one or more other RAP applications, in addition to the common modules from the prior section. This primarily covers forecasting and integration needs for Planning application usage. It is important to note that the underlying process for generating forecasts leverages jobs from the Promotion and Markdown Optimization (PMO) application, so you will see references to that product throughout the POM batch flows and in Retail Home modules.

Table 5-4 AI Foundation Shared Batch Modules

| Application | Module | Usage Notes |
|---|---|---|
| AI Foundation | FCST > Batch | Collection of batch jobs associated with foundation loads, demand estimation, and forecast generation/export. The modules under this structure are needed to get forecasts for Planning. |

To initialize data for the forecasting program, use the ad hoc POM process RSE_MASTER_ADHOC_JOB described in Sending Data to AI Foundation. After the platform is initialized, you may use the Forecast Configuration user interface to set up and run your initial forecasts. For complete details on the requirements and implementation process for forecasting, refer to the Retail AI Foundation Cloud Services Implementation Guide.

For reference, the set of batch programs that should be enabled for forecasting are listed below (not including foundation data loads common to all of AI Foundation). Enable these by syncing POM with MDF modules, though it is best to validate that the expected programs are enabled after the sync.

Note:

Jobs with PMO in the name are also used for Promotion & Markdown Optimization and are shared with the forecasting module.

| Job Name |
|---|
| PMO_ACTIVITY_LOAD_START_JOB |
| PMO_ACTIVITY_STG_JOB |
| PMO_ACTIVITY_LOAD_JOB |
| PMO_ACTIVITY_LOAD_END_JOB |
| PMO_CREATE_BATCH_RUN_JOB |
| PMO_RUN_EXEC_SETUP_JOB |
| PMO_RUN_EXEC_START_JOB |
| PMO_RUN_EXEC_PROCESS_JOB |
| PMO_RUN_EXEC_END_JOB |
| RSE_CREATE_FCST_BATCH_RUN_JOB |
| RSE_FCST_BATCH_PROCESS_JOB |
| RSE_FCST_BATCH_RUN_END_JOB |
| RSE_CREATE_FCST_BATCH_RUN_ADHOC_JOB |
| RSE_FCST_BATCH_PROCESS_ADHOC_JOB |

There are also two processes involved in forecast exports to MFP, one as part of weekly batch and the other as an ad hoc job which you can run during implementation.

| Job Name |
|---|
| RSE_MFP_FCST_EXPORT_JOB |
| RSE_MFP_FCST_EXPORT_ADHOC_JOB |

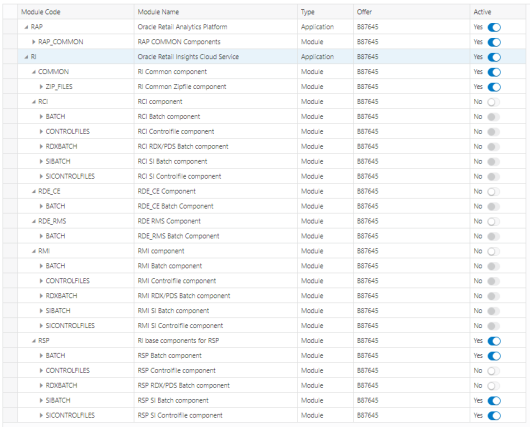

Module Setup Example

For a basic implementation of AI Foundation, your modules in Retail Home might be set up as shown below. The RAP common components are enabled, as well as some parts of the RI>RSP modules. Within the RI>RSP>BATCH component, RSP_DAT_STAGE is enabled to support the loading of DAT files, RSP_REQUIRED is enabled for basic foundation jobs, and other components can be turned on or off depending on your use cases. In this case the CONTROLFILES section is disabled because there are no DAT files being loaded to the platform, but SICONTROLFILES is enabled because there are CSV files being sent.

If you have multiple applications running on AI Foundation (such as Profile Science and Offer Optimization) then there will be additional Module Codes listed in the screen that should also be enabled. Planning applications do not use Customer Modules at this time, so there are no additional sets of modules for those applications. If the modules are set up for AI Foundation + Forecasting, that would also be sufficient for Planning usage.

Once all changes are made, make sure to Save the updates using the button below the table. Only after saving the changes will they be available to sync with POM.

Adjustments in POM

While the bulk of your batch setup should be done in Retail Home, it may be necessary to fine-tune your schedule in POM after the initial configuration is complete. You may need to disable specific jobs in the nightly schedules (usually at Oracle’s recommendation) or reconfigure the ad hoc processes to use different programs. The general steps to perform this activity are:

- From Retail Home, click the link to navigate to POM or go to the POM URL directly if known. Log in as a batch administrator user.
- Navigate to the Batch Administration screen.
- Select the desired application tile, and then select the schedule type from the nightly, recurring, or standalone options.
- Optionally, search for specific job names, and then use the Enabled option to turn the program on or off.

Note:

If you sync with MDF again in the future, it may re-enable jobs that you turned off inside a module that is turned on in Retail Home. For that reason, the module configuration is typically used only during implementation, then POM is used once you are live in production.

Self Service Features

The RI POM schedule includes jobs that support self-service actions where corrective action can be taken without waiting for assistance from Oracle. It is important to ensure the jobs below are enabled as part of your schedule, and you leverage the ad hoc jobs through the Standalone Jobs section of POM as needed:

- CTXFILE_VALIDATOR_JOB – This ad hoc job verifies the contents of your context files to ensure all non-nullable and required columns are being sent in the data files. The job will fail if it detects that any columns are missing, allowing you to take corrective action on the files you sent in the most recent upload.
- EXPSQLLDRLOGS_JOB – This ad hoc job will package and export any SQL Loader logs relating to invalid data which was rejected by the flat file load process and place it in an outgoing directory for download. The POM logs will generally show all summary information relating to these failures, but it can sometimes be useful to see the bad records and logs output by the SQL Loader script itself. By default it will export files for the last 1 day but an input parameter can specify the number of days to extract.
- LOWVOLUME_VALIDATOR_JOB – This ad hoc job provides an implementation method for loading a small set of records from your input files for initial validation purposes, before loading the entire history file. You can specify a number of rows to load as a parameter in C_ODI_PARAM.
- RUN_PARTITION_VALIDATOR_JOB – This nightly batch job will verify the current state of your table partitions, check whether data is being loaded into the MAX partitions (which should not occur), and auto-create partitions if possible.
- DIM_*_VALIDATOR_JOB – Jobs with this naming format perform validations on some staging table data prior to loading it to the final target tables. These jobs will detect common mistakes and problems in the data and throw errors or warnings depending on the severity of the issue. Details on the validation architecture can be found in the AI Foundation Operations Guide.
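
The low-volume idea behind LOWVOLUME_VALIDATOR_JOB (validate a small sample before committing to a full history load) can also be applied on your side before a file is uploaded. The sketch below is not how the job itself works; it is a plain Python illustration, and the column names and file contents are hypothetical.

```python
import csv
import io
from itertools import islice


def sample_rows(stream, max_rows):
    """Return the header and at most max_rows data rows from a CSV stream."""
    reader = csv.reader(stream)
    header = next(reader)
    return header, list(islice(reader, max_rows))


# Hypothetical history file; real files have many more rows and columns.
history = io.StringIO(
    "ITEM,LOC,DAY,QTY\n"
    "A1,10,20240101,5\n"
    "A2,10,20240101,3\n"
    "A3,11,20240102,7\n"
)

header, rows = sample_rows(history, max_rows=2)
print(header)
print(rows)
```

Because `islice` stops reading after the requested number of rows, the same function works on very large files without loading them into memory.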

Additionally, there is an intraday process named RI_MAINTENANCE_CYCLE that can be scheduled from the RI POM intraday scheduler administration screen. This process should be scheduled to run once per day before the nightly batch and all jobs within it should be enabled. This process performs important upkeep activities such as database defragmentation and partition maintenance. Schedule it several hours before nightly batch is going to start, as the defragmentation and partition processes sometimes need a longer time to run, based on the amount of recently changed data in the database.

Configure POM Integrations

Retailers often have external applications that manage batch execution and automation of periodic processes. They might also require other downstream applications to be triggered automatically based on the status of programs in the Retail Analytics and Planning platform. POM supports the following integrations that should be considered as part of your implementation plan.

Table 5-5 POM Integrations

| Activity | References |
|---|---|
| Trigger RAP batches from an external program | POM Implementation Guide > Integration > Invoking Cycles in POM |
| Trigger external processes based on RAP batch statuses | POM Implementation Guide > Integration > External Status Update |
| Add external dependencies into the RAP batch to pause execution at specific points | POM Implementation Guide > Integration > External Dependency |

Schedule the Batches

Once you are ready to begin regularly scheduled batch processing, you must log in to POM to enable each batch cycle and provide a start time for the earliest one in the batch sequence. The general steps are:

- Log in to POM as an administrator user.
- Access the Scheduler Administration screen.
- Select each available tile representing a batch schedule and select the Nightly batch option.
- Edit the rows in the table to enable each batch and enter a start time. You only need to set a start time for the first batch in a linked sequence. For example, if you enter a start time for Retail Insights, then the AI Foundation and MFP schedules will trigger automatically at the appropriate times, based on their dependencies and batch links.
- For each batch after RI that must execute, you must also verify the inter-schedule dependencies are enabled for those batches. You can find the dependencies and enable them by going to the Batch Monitoring screen and selecting each batch’s Nightly set of jobs. Click the number in front of Inter-Schedule Dependencies, and click Enable to the right of each displayed row if the current status is Disabled. This should change the status of the row to Pending.

Refer to the POM User Guide for additional information about the Scheduler screens and functionality. Scheduling the batch to run automatically is not required if you are using an external process or scheduler to trigger the batch instead.

For the Retail Insights nightly batch (which consumes the foundation input files for platform data), the batch will first look for all the required input ZIP packages configured through Customer Modules Management. The batch will wait for 4 hours for all required files, after which it will fail with an error in POM. When choosing a start time for the batch, it is best to start the 4-hour window an hour before you expect to have all the files uploaded for it to use. The batch performs reclassification of aggregate tables as its first step, which can take the full hour to complete in the case of very large reclassifications. This still provides 3+ hours during which you can schedule all file uploads and non-Oracle integration processes to occur.
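
The timing guidance above reduces to simple arithmetic, sketched here. The 4-hour wait and the 1-hour lead come from the paragraph above; the function name and the example upload time are assumptions made for illustration.

```python
from datetime import datetime, timedelta

FILE_WAIT_WINDOW = timedelta(hours=4)  # how long the batch waits for required files
LEAD_TIME = timedelta(hours=1)         # start one hour before files are expected


def plan_batch_window(files_ready_at):
    """Return (start_time, file_deadline) for the nightly batch."""
    start_time = files_ready_at - LEAD_TIME
    file_deadline = start_time + FILE_WAIT_WINDOW
    return start_time, file_deadline


# Hypothetical example: all uploads are expected to be complete by 01:00.
start, deadline = plan_batch_window(datetime(2024, 1, 2, 1, 0))
print(start.strftime("%H:%M"), deadline.strftime("%H:%M"))
```

With uploads expected at 01:00, the batch would be scheduled for 00:00 and would fail if required files were still missing at 04:00, leaving three hours of slack after the expected upload time.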

The other application batches, such as those for the AI Foundation modules, begin automatically once the required Retail Insights batch jobs are complete (assuming you have enabled those batches). Batch dependencies have been pre-configured to ensure each downstream process begins as soon as all required data is present.

Batch Flow Details

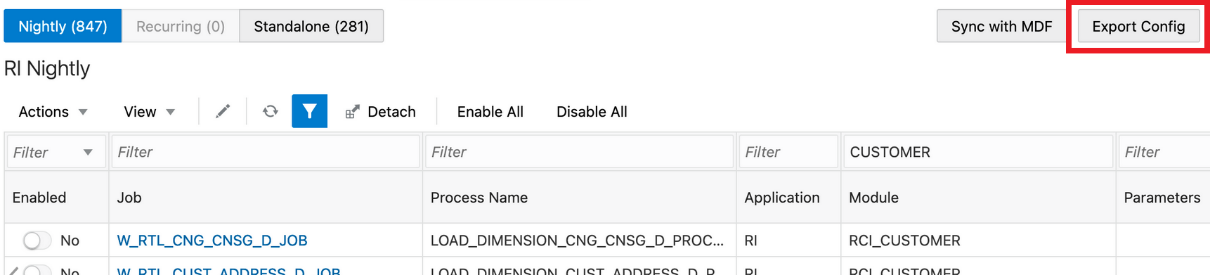

POM allows you to export the full batch schedule configuration to a spreadsheet for review. This is an easier way to see all of the jobs, processes, and dependencies that exist across all batch schedules that you are working with on the platform. Perform the following steps to access batch configuration details:

-

From Retail Home, click the link to navigate to POM, or go to the POM URL directly if known. Log in as a batch administrator user.

-

Navigate to the Batch Administration screen.

-

Select the desired application tile, and then select the schedule type from the nightly, recurring, or standalone options.

-

Click the Export Config button to download the schedule data.

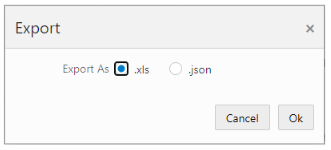

-

Choose your preferred format (XLS or JSON).

-

XLS is meant for reviewing it visually.

-

JSON is used for importing the schedule to another environment.

-

-

Save the file to disk and open it to review the contents.

For more details about the tabs in the resulting XLS file, refer to the POM User Guide, “Export/Import Schedule Configuration”.

Planning Applications Job Details

Planning applications such as Merchandise Financial Planning schedule jobs through POM and run ad hoc tasks directly using Online Administration Tasks (OAT) within the application. POM is the preferred approach for scheduling jobs in RAP because it manages interdependencies with jobs from RI and AI Foundation. Refer to the application Administration Guides for more details on scheduling tasks through OAT.

Planning applications also use a special Batch Framework that controls jobs using batch control files. You may configure these batch control files for various supported changes within the application. The POM schedule for Planning internally calls an OAT task controlled by the batch control entries. A standard set of nightly and weekly jobs for Planning is defined so that they can be scheduled in POM. You also have the option to disable or enable the jobs either directly through POM, or by controlling the entries in batch control files. Refer to the Planning application-specific Implementation Guides for details about the list of jobs and how jobs can be controlled by making changes to batch control files.