3 Data Loads and Initial Batch Processing

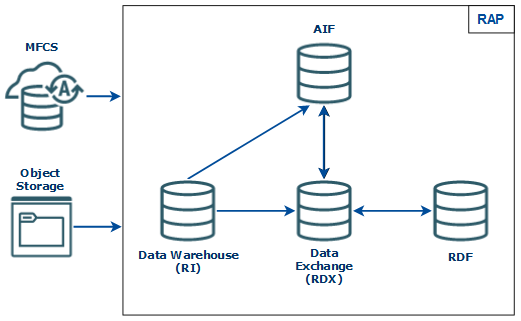

This chapter describes the common data requirements for implementing any of the Retail Analytics and Planning modules, where to get additional information for optional or application-specific data interfaces, and how to load an initial dataset into the cloud environments and distribute it across your desired applications.

Data Requirements

Preparing data for one or more Retail Analytics and Planning modules can consume a significant amount of project time, so it is crucial to identify the minimum data requirements for the platform first, followed by additional requirements that are specific to your implementation plan. Data requirements that are called out for the platform are typically shared across all modules, meaning you only need to provide the inputs once to leverage them everywhere. This is the case for foundational data elements, such as your product and location hierarchies. Foundation data must be provided for any module of the platform to be implemented. Foundation data can come from different sources depending on your current software landscape, including the on-premise Oracle Retail Merchandising System (RMS) or third-party applications.

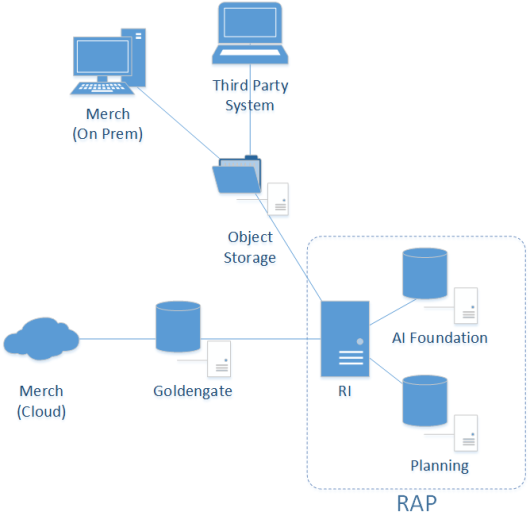

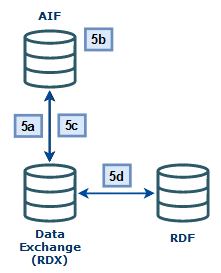

Figure 3-1 Inbound Foundation Data Flows

Retail Insights is used as the foundational data warehouse that collects and coordinates data on the platform. You do not need to purchase Retail Insights Cloud Service to leverage the data warehouse for storage and integration; it is included as part of any RAP solution. Regardless of which RAP solutions you are implementing, the integration flows shown above are used.

Application-specific data requirements are in addition to the shared foundation data, and may only be used by one particular module of the platform. These application data requirements may have different formats and data structures from the core platform-level dataset, so pay close attention to those additional interface specifications. References and links are provided later in this chapter to guide you to the relevant materials for application-specific inputs and data files.

If you are using RMS as your primary data source, then you may not need to produce some or all of these foundation files, as they will be created by other Oracle Retail processes for you. However, it is often the case that historical data requires a different set of foundation files from your future post-implementation needs. If you are loading manually-generated history files, or you are not using an Oracle Retail data source for foundation data, then review the rest of this section for details.

Platform Data Requirements

There is a subset of core platform data files that can be created and loaded once and then used across some or all application modules. These files use a specific format as detailed below.

Note:

Every application included with Retail Analytics and Planning has additional data needs beyond this foundation data. But this common set of files can be used to initialize the system before moving on to those specific requirements.

The first table defines the minimum dimensional data. A dimension is a collection of descriptive elements, attributes, or hierarchical structures that provide context to your business data. Dimensions tell the platform what your business looks like and how it operates. This is not the entire list of possible dimension files, just the main ones needed to use the platform. Refer to Legacy Foundation File Reference for a complete list of available platform foundation files, along with a cross-reference to the legacy interfaces they most closely align with. A complete interface specification document is also available in My Oracle Support to assist you in planning your application-specific interface needs.

Table 3-1 Common Foundation Dimensions

| Dimension | Filename(s) | Usage |
|---|---|---|
| Product | PRODUCT.csv | The product foundation data includes the items you sell, their core merchandise attributes, and their hierarchical structure in your source systems. |
| Product | PRODUCT_ALT.csv | Alternate product attributes and hierarchy levels intended for downstream Planning application extensions. |
| Organization | ORGANIZATION.csv | The organization foundation data includes all of your business entities involved in the movement or sale of merchandise. This includes stores, warehouses, partner/finisher locations, web stores, and virtual warehouses. It also includes your organizational hierarchy and core location attributes. |
| Organization | ORGANIZATION_ALT.csv | Alternate location attributes and hierarchy levels intended for downstream Planning application extensions. |
| Calendar | CALENDAR.csv | The calendar foundation data defines your business (or fiscal) calendar. This is the calendar that you operate in when making critical business decisions, reporting on financial results, and planning for the future. The most common calendar used by all modules of the platform is a 4-5-4 fiscal calendar. |
| Exchange Rates | EXCH_RATE.csv | Exchange rates define the conversion of monetary values from the currency they are recorded in to your primary operating currency. Most data is provided to the platform in the local currency of the data source, and it is converted to your primary currency during the load process. |
| Product Attributes | ATTR.csv, PROD_ATTR.csv | Product attributes describe the physical and operational characteristics of your merchandise and are a critical piece of information for many AI Foundation modules, such as Demand Transference and Size Profile Optimization. They are not required as an initial input to start data loads but will eventually be needed for most applications to function. |
| System Parameters | RA_SRC_CURR_PARAM_G.dat | Parameters file that supports certain batch processes, such as the ability to load multiple ZIP files and run batches in sequence. Include this file with nightly batch uploads to specify the current business date, which enables the system to run multiple batches in sequence without customer input. Required once you begin nightly or weekly batch uploads. |

The other set of foundation files is referred to as facts. Fact data covers all of the actual events, transactions, and activities occurring throughout the day in your business. Each module in the platform has specific fact data needs, but the most common ones are listed below. At a minimum, you should expect to provide Sales, Inventory, and Receipts data for use in most platform modules. The intersection of all data (meaning which dimensional values are used) is at a common level of item/location/date. Additional identifiers may be needed on some files; for example, the sales data should be at the transaction level, the inventory file has a clearance indicator, and the adjustments file has type codes and reason codes.

Table 3-2 Common Foundation Facts

| Fact | Filename(s) | Usage |
|---|---|---|
| Sales | SALES.csv | Transaction-level records for customer sales (wholesale data provided separately). Used across all modules. |
| Inventory | INVENTORY.csv | Physical inventory levels for owned merchandise as well as consignment and concession items. Used across all modules. |
| Receipts | RECEIPT.csv | Inventory receipts into any location, including purchase order and transfer receipts. Used by Insights, Planning, and the AI Foundation modules to identify the movement of inventory into a location and to track first/last receipt date attributes. |
| Adjustments | ADJUSTMENT.csv | Inventory adjustments made at a location, including shrink, wastage, theft, stock counts, and other reasons. Used by Insights and Planning modules. |
| Purchase Orders | ORDER_HEAD.csv, ORDER_DETAIL.csv | The purchase order data for all orders placed with suppliers. Held at a level of order number, supplier, item, location, and date. Separate files are needed for the order attributes and order quantities/amounts. Used by Insights and Planning modules. |
| Markdowns | MARKDOWN.csv | The currency amount above or below the listed price of an item when that item's price is modified for any reason (planned markdown, POS discount, promotional sale, and so on). Used by Insights and Planning modules. |
| Transfers | TRANSFER.csv | The movement of inventory between two locations (both physical and virtual). Used by Insights and Planning modules. |
| Returns to Vendor | RTV.csv | The return of owned inventory to a supplier or vendor. Used by Insights and Planning modules. |
| Prices | PRICE.csv | The current selling retail value of an item at a location. Used by Insights and AI Foundation modules. |
| Costs | COST.csv | The base unit cost and derived net costs of an item at a location. Used by Retail Insights only. |
| Wholesale/Franchise | SALES_WF.csv | Sales and markdown data from wholesale and franchise operations. Used by all modules. |
| Deal Income | DEAL_INCOME.csv | Income associated with deals made with suppliers and vendors. Used by Insights and Planning modules. |

Details on which application modules make use of specific files (or columns within a file) can be found in the Interfaces Guide on My Oracle Support. Make sure you have a full understanding of the data needs for each application you are implementing before moving on to later steps in the process. If it is your first time creating these files, read Data File Generation for important information about key file structures and business rules that must be followed for each foundation file.

File Upload Samples

When you first upload foundation data into the platform, you will likely provide a small subset of the overall set of files required by your applications. The following examples show a possible series of initial file uploads to help you verify that you are providing the correct sets of data. All dimension files in initialization and history loads must be full snapshots of data; never send partial or incremental files.

Example #1: Calendar Initialization

When you first configure the system you must upload and process the CALENDAR.csv file. You will generate the file following the specifications, and also provide a context (ctx) file, as described in Data File Generation.

File to upload: RAP_DATA_HIST.zip

Zip file contents:

- CALENDAR.csv
- CALENDAR.csv.ctx

Example #2: Product and Location Setup

You have finalized the calendar and want to initialize your core product and organization dimensions. You must provide the PRODUCT.csv and ORGANIZATION.csv files along with their context files, as described in Data File Generation.

File to upload: RAP_DATA_HIST.zip

Zip file contents:

- PRODUCT.csv
- PRODUCT.csv.ctx
- ORGANIZATION.csv
- ORGANIZATION.csv.ctx

Example #3: Full Dimension Load

You have a set of dimension files you want to process using the initial dimension load ad hoc processes in POM.

File to upload: RAP_DATA_HIST.zip

Zip file contents:

- PRODUCT.csv
- PRODUCT.csv.ctx
- ORGANIZATION.csv
- ORGANIZATION.csv.ctx
- ATTR.csv
- ATTR.csv.ctx
- PROD_ATTR.csv
- PROD_ATTR.csv.ctx
- EXCH_RATE.csv
- EXCH_RATE.csv.ctx

Example #4: Sales Data Load

You have finished the dimensions and you are ready to start processing sales history files.

File to upload: RAP_DATA_HIST.zip

Zip file contents:

- SALES.csv
- SALES.csv.ctx

Example #5: Multi-File Fact Data Load

Once you are confident in your data file format and contents, you may send multiple files as separate ZIP uploads for sequential loading in the same run. This process uses a numerical sequence on the end of the ZIP file name. You should still include the base ZIP file to start the process. The files are processed in a loop by the intraday cycle in the RI POM schedule, which executes the fact history load once per cycle, for up to 12 cycles per day.

Files to upload: RAP_DATA_HIST.zip, RAP_DATA_HIST.zip.1, RAP_DATA_HIST.zip.2, RAP_DATA_HIST.zip.3

Zip file contents (in each uploaded zip):

- SALES.csv
- SALES.csv.ctx (The CTX is only required in the first ZIP file, but it’s best to always include it so you can refer to it later in archived files, if needed.)

In this example you are loading sales month by month iteratively, but the intraday process supports all other fact loads as well. You can also combine multiple fact files (for different facts covering the same period of time) in each ZIP file upload.

Track the status of the files in the C_HIST_FILES_LOAD_STATUS table after each cycle execution; it shows whether the file was loaded successfully and how many more files are available to process.
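The naming pattern for sequential uploads is easy to script. The following Python sketch is illustrative only: it assumes each batch is a local list of data and .ctx files, that files belong at the root of each archive, and that the default history name RAP_DATA_HIST.zip is in use. The helper names `hist_zip_names` and `build_hist_zips` are hypothetical, and the upload itself still goes through File Transfer Services.

```python
from __future__ import annotations

import zipfile
from pathlib import Path

BASE_NAME = "RAP_DATA_HIST.zip"  # default history ZIP name (configurable via HIST_ZIP_FILE)


def hist_zip_names(count: int) -> list[str]:
    """Return the base ZIP name followed by .1, .2, ... sequence names.

    Assumption: the base ZIP carries the first batch and the numbered
    ZIPs carry the rest, as in RAP_DATA_HIST.zip, RAP_DATA_HIST.zip.1, ...
    """
    if count < 1:
        raise ValueError("need at least one batch")
    return [BASE_NAME] + [f"{BASE_NAME}.{i}" for i in range(1, count)]


def build_hist_zips(batches: list[list[Path]], out_dir: Path) -> list[Path]:
    """Write one ZIP per batch; each batch lists its data and .ctx files.

    The .ctx file is kept in every ZIP (only strictly needed in the first)
    so that archived uploads stay self-describing.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for name, files in zip(hist_zip_names(len(batches)), batches):
        target = out_dir / name
        with zipfile.ZipFile(target, "w", compression=zipfile.ZIP_DEFLATED) as zf:
            for f in files:
                zf.write(f, arcname=f.name)  # store each file at the archive root
        written.append(target)
    return written
```

Calling `build_hist_zips` with four monthly batches would produce RAP_DATA_HIST.zip through RAP_DATA_HIST.zip.3, matching the upload list in this example.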

Uploading ZIP Packages

When providing data to the platform, push the compressed files into Object Storage using a ZIP file format. Review the File Transfer Services section for details on how to interact with Object Storage. The ZIP file you use will depend on the data you are attempting to load.

The default ZIP file packages are below, but the history ZIP file name is configurable in C_ODI_PARAM using parameter name HIST_ZIP_FILE if a different one is desired.

Table 3-3 Platform ZIP File Usage

| Filenames | Frequency | Notes |
|---|---|---|
| RAP_DATA_HIST.zip | Ad Hoc | Used for calendar initialization, initial dimension loads, and history fact loads, as in the file upload samples above. |
| RAP_DATA_HIST.zip.1, RAP_DATA_HIST.zip.2, …, RAP_DATA_HIST.zip.N | Ad Hoc / Intraday | Multiple zip uploads are supported for sending historical fact data which should be loaded sequentially. Append a sequence number on the ZIP files starting from 1 and increasing to N, where N is the number of files you are loading. Track the status of the files in the C_HIST_FILES_LOAD_STATUS table. |
| RAP_DATA.zip, RI_RMS_DATA.zip, RI_CE_DATA.zip, RI_MFP_DATA.zip, RI_EXT_DATA.zip | Daily | Can be used for daily ongoing loads into the platform (for RI and foundation common inputs), and for any daily data going to downstream applications through RI’s nightly batch. Different files can be used for different source systems. |
| RI_REPROCESS_DATA.zip | Ad Hoc | Used to upload individual files which will be appended into an existing nightly batch file set. |
| ORASE_WEEKLY_ADHOC.zip | Ad Hoc | Used for loading AI Foundation files with ad hoc processes. |
| ORASE_WEEKLY.zip | Weekly | Used for weekly batch files sent directly to AI Foundation. |
| ORASE_INTRADAY.zip | Intraday | Used for intraday batch files sent directly to AI Foundation. |

Other supported file packages, such as output files and optional input files, are detailed in each module’s implementation guides. Except for Planning-specific integrations and customizations (which support additional integration paths and formats), it is expected that all files will be communicated to the platform using one of the filenames above.

Preparing to Load Data

Implementations can follow this general outline for the data load process:

- Initialize the business and system calendars and perform table partitioning, which prepares the database for loading fact data.
- Load initial dimension data into the dimension and hierarchy tables and perform validation checks on the data from DV/APEX or using RI reports.
- If implementing any AI Foundation or Planning module, load the dimension data to those systems now. Data might work fine on the input tables but have issues only visible after processing in those systems. Don’t start loading history data if your dimensions are not working with all target applications.
- Load the first set of history files (for example, one month of sales or inventory) and validate the results using DV/APEX.
- If implementing any AI Foundation or Planning module, stop here and load the history data to those systems as well. Validate that the history data in those systems is complete and accurate per your business requirements.
- Continue loading history data into RAP until you are finished with all data. You can stop at any time to move some of the data into downstream modules for validation purposes.
- After history loads are complete, all positional tables, such as Inventory Position, need to be seeded with a full snapshot of source data before they can be loaded using regular nightly batches. This seeding process is used to create a starting position in the database which can be incremented by daily delta extracts. These full-snapshot files can be included in the first nightly batch you run, if you want to avoid manually loading each seed file through one-off executions.
- When all history and seeding loads are completed and downstream systems are also populated with that data, nightly batches can be started.

Before you begin this process, prepare your working environment by identifying the tools and connections you need to interact with the platform across all your Oracle cloud services, as detailed in Implementation Tools and Data File Generation.

Prerequisites for loading files and running POM processes include:

| Prerequisite | Tool / Process |
|---|---|
| Upload ZIPs to Object Storage | File Transfer Service (FTS) scripts |
| Invoke ad hoc jobs to unpack and load the data | Postman (or similar REST API tool) |
| Monitor job progress after invoking POM commands | POM UI (Batch Monitoring tab) |
| Monitoring data loads | APEX / DV (direct SQL queries) |

Users must also have the necessary permissions in Oracle Cloud Infrastructure Identity and Access Management (OCI IAM) to perform all the implementation tasks. Before you begin, ensure that your user has at least the following groups:

| Access Needed | Groups Needed |
|---|---|
| Batch Job Execution | BATCH_ADMINISTRATOR_JOB, PROCESS_SERVICE_ADMIN_JOB |
| Database Monitoring | <tenant ID>-DVContentAuthor (DV), DATA_SCIENCE_ADMINISTRATOR_JOB (APEX) |
| Retail Home | RETAIL_HOME_ADMIN, PLATFORM_SERVICES_ADMINISTRATOR, PLATFORM_SERVICES_ADMINISTRATOR_ABSTRACT |
| RI and AI Foundation Configurations | ADMINISTRATOR_JOB |
| MFP Configurations | MFP_ADMIN_STAGE / PROD |
| RDF Configurations | RDF_ADMIN_STAGE / PROD |
| AP Configurations | AP_ADMIN_STAGE / PROD |
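A quick way to double-check a user against the table is a set difference. This Python sketch assumes you have already collected the user's OCI IAM group names as plain strings (for example, by copying them from the console); it does not call any OCI API. It covers only the rows whose group names are fixed strings, leaving out the tenant-specific DV group and the STAGE/PROD application groups, and the helper name `missing_groups` is hypothetical.

```python
from __future__ import annotations

# Rows from the access table whose group names are fixed strings.
REQUIRED_GROUPS: dict[str, set[str]] = {
    "Batch Job Execution": {"BATCH_ADMINISTRATOR_JOB", "PROCESS_SERVICE_ADMIN_JOB"},
    "Retail Home": {
        "RETAIL_HOME_ADMIN",
        "PLATFORM_SERVICES_ADMINISTRATOR",
        "PLATFORM_SERVICES_ADMINISTRATOR_ABSTRACT",
    },
    "RI and AI Foundation Configurations": {"ADMINISTRATOR_JOB"},
}


def missing_groups(user_groups: set[str], areas: list[str]) -> dict[str, list[str]]:
    """Return, per access area, the required groups the user does not have."""
    gaps: dict[str, list[str]] = {}
    for area in areas:
        missing = REQUIRED_GROUPS[area] - user_groups
        if missing:
            gaps[area] = sorted(missing)
    return gaps
```

An empty result means the user holds every fixed-name group for the areas you asked about.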

Calendar and Partition Setup

This is the first step that must be performed in all new environments, including projects that will implement only AI Foundation or Planning solutions and not RI. Before beginning this step, ensure your configurations are complete per the initial configuration sections in the prior chapter. Your START_DT and END_DT variables must be set correctly for your calendar range (START_DT at least 12 months before the start of history data), and the C_MODULE_ARTIFACT table must have all of the required tables enabled for partitioning. C_MODULE_EXACT_TABLE must be configured if you need PLAN partitions for planning data loads.

1. Upload the calendar file CALENDAR.csv (and associated context file) through Object Storage or SFTP (packaged using the RAP_DATA_HIST.zip file).

2. Execute the HIST_ZIP_FILE_LOAD_ADHOC process. Example Postman message body:

   ```json
   {
     "cycleName": "Adhoc",
     "flowName": "Adhoc",
     "processName": "HIST_ZIP_FILE_LOAD_ADHOC"
   }
   ```

3. Verify that the jobs in the ZIP file load process completed successfully using the POM Monitoring screen. Download logs for the tasks as needed for review.

4. Execute the CALENDAR_LOAD_ADHOC process. This transforms the data and moves it into all internal RI tables. It also performs table partitioning based on your input date range. Sample Postman message body:

   ```json
   {
     "cycleName": "Adhoc",
     "flowName": "Adhoc",
     "processName": "CALENDAR_LOAD_ADHOC",
     "requestParameters": "jobParams.CREATE_PARTITION_PRESETUP_JOB=2018-12-30,jobParams.ETL_BUSINESS_DATE_JOB=2021-02-06"
   }
   ```

   There are two date parameters provided for this command:

   - The first date value (CREATE_PARTITION_PRESETUP_JOB) specifies the first day of partitioning. It must be some time before the first actual day of data being loaded. The recommendation is 1-6 months prior to the planned start of the history so that you have room for back-posted data and changes to start dates. However, do not create excessive partitions for years of data you won’t be loading, as this can impact system performance. The date should also be >= the START_DT value set in C_ODI_PARAM_VW, because RAP cannot partition dates that don’t exist in the system; but you don’t need to partition your entire calendar range.
   - The second date (ETL_BUSINESS_DATE_JOB) specifies the target business date, which is typically the day the system should be at after loading all history data and starting daily batches. It is okay to guess some date in the future for this value, but note that the partition process automatically extends 4 months past the date you specified. Your fiscal calendar must have enough periods in it to cover the 4 months after this date or this job will fail. This date can be changed later if needed, and partitioning can be re-executed multiple times for different timeframes.

5. If this is your first time loading a calendar file, check the RI_DIM_VALIDATION_V view to confirm no warnings or errors are detected. Refer to the AI Foundation Operations Guide for more details on the validations performed. The validation job will fail if it doesn’t detect data moved to the final table (W_MCAL_PERIOD_D). Refer to Sample Validation SQLs for sample queries you can use to check the data.

6. If you need to reload the same file multiple times due to errors, you must Restart the Schedule in POM and then run the ad hoc process C_LOAD_DATES_CLEANUP_ADHOC before repeating these steps. This will remove any load statuses from the prior run and give you a clean start on the next execution.

Note:

If any job having STG in the name fails during the run, review the POM logs; they should provide the name of an external LOG or BAD table with more information. These error tables can be accessed from APEX using a support utility. Refer to the AI Foundation Operations Guide section on “External Table Load Logs” for the utility syntax and examples.
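The request bodies above share one shape, and the date rules for CALENDAR_LOAD_ADHOC can be checked before you submit them. This Python sketch works under stated assumptions: the helper names are hypothetical, the 1-6 month window is the recommendation from the text rather than an enforced rule, and the "4 months past the ETL date" extension is approximated with plain calendar-month arithmetic, not RAP's fiscal periods. Sending the body to POM (for example, from Postman) is outside the sketch.

```python
import calendar
import json
from datetime import date
from typing import Dict, List, Optional


def adhoc_body(process_name: str, job_params: Optional[Dict[str, str]] = None) -> str:
    """Build a POM ad hoc request body in the same shape as the samples."""
    body = {"cycleName": "Adhoc", "flowName": "Adhoc", "processName": process_name}
    if job_params:
        # Mirror the sample: comma-separated jobParams.<JOB>=<value> pairs.
        body["requestParameters"] = ",".join(
            f"jobParams.{job}={value}" for job, value in job_params.items()
        )
    return json.dumps(body)


def add_months(d: date, months: int) -> date:
    """Shift by calendar months, clamping the day to the target month's length."""
    index = d.month - 1 + months
    year, month = d.year + index // 12, index % 12 + 1
    return date(year, month, min(d.day, calendar.monthrange(year, month)[1]))


def check_calendar_dates(partition_start: date, etl_date: date, start_dt: date,
                         history_start: date, calendar_end: date) -> List[str]:
    """Flag the date rules described for CALENDAR_LOAD_ADHOC."""
    issues = []
    # RAP cannot partition dates before START_DT.
    if partition_start < start_dt:
        issues.append("error: partition start is before START_DT")
    # Recommended window: 1-6 months before the first day of history.
    if not add_months(history_start, -6) <= partition_start <= add_months(history_start, -1):
        issues.append("warning: partition start is not 1-6 months before history")
    # Partitioning extends about 4 months past the ETL date; the calendar must cover it.
    if calendar_end < add_months(etl_date, 4):
        issues.append("error: fiscal calendar ends too soon after the ETL date")
    return issues
```

For example, `adhoc_body("CALENDAR_LOAD_ADHOC", {"CREATE_PARTITION_PRESETUP_JOB": "2018-12-30", "ETL_BUSINESS_DATE_JOB": "2021-02-06"})` reproduces the sample body shown in the steps above.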

You can monitor the partitioning process while it’s running by querying the RI_LOG_MSG table from APEX. This table captures the detailed partitioning steps being performed by the script in real time (whereas POM logs are only refreshed at the end of execution). If the process fails in POM after exactly 4 hours, this is only a POM process timeout; the partitioning may still be running in the background, so check for new inserts to the RI_LOG_MSG table.

The partitioning process will take some time (~5 hours per 100k partitions) to complete if you are loading multiple years of history, as this may require 100,000+ partitions to be created across the data model. This process must be completed successfully before continuing with the data load process. Contact Oracle Support if there are any questions or concerns. Partitioning can be performed after some data has been loaded; however, it will take significantly longer to execute, as it has to move all of the loaded data into the proper partitions.

You can also estimate the number of partitions needed based on the details below:

- RAP needs to partition around 120 week-level tables if all functional areas are enabled, so multiply the number of weeks in your history time window by this number of tables.
- RAP needs to partition around 160 day-level tables if all functional areas are enabled, so multiply the number of days in your history time window by this number of tables.

For a 3-year history window, this results in: 120 × 52 × 3 + 160 × 365 × 3 = 193,920 partitions. If you wish to confirm your final counts before proceeding to the next data load steps, you can execute these queries from APEX:

```sql
select count(*) cnt from dba_tab_partitions where table_owner = 'RADM01' and table_name like 'W_RTL_%';

select table_name, count(*) cnt from dba_tab_partitions where table_owner = 'RADM01' and table_name like 'W_RTL_%' group by table_name;
```

The queries should return a count roughly equal to your expected totals (it will not be exact, as the data model will add/remove tables over time and some tables come with pre-built partitions or default MAXVALUE partitions).
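The partition estimate is simple arithmetic, so a small Python helper makes it easy to try other history windows. The table counts (about 120 week-level and 160 day-level tables) and the rate of roughly 5 hours per 100k partitions come from the guidance above and are approximations; the helper names are hypothetical, and actual counts will differ from the estimate.

```python
WEEK_LEVEL_TABLES = 120  # approximate, with all functional areas enabled
DAY_LEVEL_TABLES = 160   # approximate, with all functional areas enabled


def estimate_partitions(weeks: int, days: int) -> int:
    """Rough partition count for a history window of the given length."""
    return WEEK_LEVEL_TABLES * weeks + DAY_LEVEL_TABLES * days


def estimate_hours(partitions: int, hours_per_100k: float = 5.0) -> float:
    """Rough runtime using the ~5 hours per 100k partitions guideline."""
    return partitions / 100_000 * hours_per_100k


years = 3
total = estimate_partitions(weeks=52 * years, days=365 * years)
print(total)                           # 193920
print(f"{estimate_hours(total):.1f}")  # 9.7
```

A 3-year window therefore suggests planning for close to ten hours of partitioning.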

Loading Data from Files

When history and initial seed data comes from flat files, use the following tasks to upload them into RAP:

Table 3-4 Flat File Load Overview

| Activity | Description |
|---|---|
| | Initialize dimensional data (products, locations, and so on) to provide a starting point for historical records to join with. Separate initial load processes are provided for this task. |
| | Run history loads in one or multiple cycles depending on the data volume, starting from the earliest date in history and loading forward to today. |
| | Reload dimensional data as needed throughout the process to maintain correct key values for all fact data. Dimensional files can be provided in the same package with history files and ad hoc processes run in sequence when loading. |
| | Seed initial values for positional facts using full snapshots of all active item/locations in the source system. This must be loaded for the date prior to the start of nightly batches to avoid gaps in ongoing data. |
| | Nightly batches must be started from the business date after the initial seeding was performed. |

Completing these steps will load all of your data into the Retail Insights data model, which is required for all implementations. From there, proceed with moving data downstream to other applications as needed, such as AI Foundation modules and Merchandise Financial Planning.

Note:

All steps are provided sequentially, but can be executed in parallel. For example, you may load dimensions into RI, then on to AI Foundation and Planning applications before loading any historical fact data. While historical fact data is loaded, other activities can occur in Planning such as the domain build and configuration updates.

Initialize Dimensions

Loading Dimensions into RI

You cannot load any fact data into the platform until the related dimensions have been processed and verified. The processes in this section are provided to initialize the core dimensions needed to begin fact data loads and verify file formats and data completeness. Some dimensions which are not used in history loads are not part of the initialization process, as they are expected to come in the nightly batches at a later time.

For the complete list of dimension files and their file specifications, refer to the AI Foundation Interfaces Guide on My Oracle Support. The steps below assume you have enabled or disabled the appropriate dimension loaders in POM per your requirements. The process flow examples also assume CSV file usage; different programs are available for legacy DAT files. The AI Foundation Operations Guide provides a list of all the job and process flows used by foundation data files, so you can identify the jobs required for your files and disable unused programs in POM.

When you are using RDE jobs to source dimension data from RMFCS and you are not providing any flat files like PRODUCT.csv, it is necessary to disable all file-based loaders in the LOAD_DIM_INITIAL_ADHOC process flow from POM.

Any job name starting with the following text can be disabled, because RDE jobs will bypass these steps and insert directly to staging tables:

- COPY_SI_
- STG_SI_
- SI_
- STAGING_SI_
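If you have the job list of the LOAD_DIM_INITIAL_ADHOC flow as plain text, a prefix filter identifies the candidates to disable. This Python sketch assumes you already have the job names as strings (exporting them from POM is not covered here); the `*_SI_PRODUCT_JOB` names are hypothetical placeholders, not actual POM job names, and the helper `jobs_to_disable` is likewise illustrative. Review the result before disabling anything in POM.

```python
# Prefixes of file-based loader jobs that RDE-sourced implementations can disable.
FILE_LOADER_PREFIXES = ("COPY_SI_", "STG_SI_", "SI_", "STAGING_SI_")


def jobs_to_disable(job_names):
    """Return the job names that start with any file-loader prefix, sorted."""
    # str.startswith accepts a tuple and matches if any prefix matches.
    return sorted(name for name in job_names if name.startswith(FILE_LOADER_PREFIXES))


# Hypothetical job names for illustration only.
flow = ["COPY_SI_PRODUCT_JOB", "STG_SI_PRODUCT_JOB", "SI_PRODUCT_JOB",
        "STAGING_SI_PRODUCT_JOB", "W_PROD_CAT_DH_CLOSE_JOB"]
print(jobs_to_disable(flow))
```

Jobs that do not match any prefix, such as W_PROD_CAT_DH_CLOSE_JOB, are left in place.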

1. Provide your dimension files and context files through File Transfer Services (packaged using the RAP_DATA_HIST.zip file). All files should be included in a single zip file upload. If your prior upload included dimensions along with the calendar file, skip to the third step.

2. Execute the HIST_ZIP_FILE_LOAD_ADHOC process if you need to unpack a new ZIP file.

3. Execute the LOAD_DIM_INITIAL_ADHOC process to stage, transform, and load your dimension data from the files. The ETL date on the command should be at least one day before the start of your history load timeframe, but 3-6 months before is ideal. It is best to give yourself a few months of space for reprocessing dimension loads on different dates prior to the start of history. The date format is YYYY-MM-DD; any other format will not be processed. After running the process, you can verify the dates are correct in the W_RTL_CURR_MCAL_G table. If the business date was not set correctly, your data may not load properly. Sample Postman message body:

   ```json
   {
     "cycleName": "Adhoc",
     "flowName": "Adhoc",
     "processName": "LOAD_DIM_INITIAL_ADHOC",
     "requestParameters": "jobParams.ETL_BUSINESS_DATE_JOB=2017-12-31"
   }
   ```

Note:

If any job having STG in the name fails during the run, review the POM logs; they should provide the name of an external LOG or BAD table with more information. These error tables can be accessed from APEX using a support utility. Refer to the AI Foundation Operations Guide section on “External Table Load Logs” for the utility syntax and examples.
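Because an incorrect business date can keep data from loading properly, it can help to check the ETL date before calling LOAD_DIM_INITIAL_ADHOC. This Python sketch encodes the rules stated above (YYYY-MM-DD only, at least one day before the history start, 3-6 months ideal), treating 3 months as roughly 90 days; the function names are hypothetical, and RAP performs its own validation. Confirm the result afterward in W_RTL_CURR_MCAL_G.

```python
import re
from datetime import date, datetime, timedelta

# Exactly four-digit year, two-digit month, two-digit day.
ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def parse_etl_date(text: str) -> date:
    """Accept only YYYY-MM-DD; strptime alone would also accept '2017-1-5'."""
    if not ISO_DATE.fullmatch(text):
        raise ValueError(f"ETL date must be YYYY-MM-DD, got {text!r}")
    return datetime.strptime(text, "%Y-%m-%d").date()


def review_dim_etl_date(text: str, history_start: date) -> str:
    """Compare the dimension ETL date against the first day of history."""
    gap = history_start - parse_etl_date(text)
    if gap < timedelta(days=1):
        return "invalid: must be at least one day before history start"
    if gap < timedelta(days=90):  # ~3 months, a rough approximation
        return "allowed: but 3-6 months of lead time is ideal"
    return "ok"
```

With a history start of 2018-06-03, the sample date 2017-12-31 leaves about five months of room and returns "ok".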

If this is your first dimension load, you will want to validate the core dimensions such as product and location hierarchies using APEX. Refer to Sample Validation SQLs for sample queries you can use for this.

If any jobs fail during this load process, you may need to alter one or more dimension data files, re-send them in a new zip file upload, and re-execute the programs. Only after all core dimension files have been loaded (CALENDAR, PRODUCT, ORGANIZATION, and EXCH_RATE) can you proceed to history loads for fact data. Make sure to query the RI_DIM_VALIDATION_V view for any warnings/errors after the run. Refer to the AI Foundation Operations Guide for more details on the validation messages that may occur. This view primarily uses the table C_DIM_VALIDATE_RESULT, which can be separately queried instead of the view to see all the columns available on it.

If you need to reload the same file multiple times due to errors, you must Restart the Schedule in POM and then run the ad hoc process C_LOAD_DATES_CLEANUP_ADHOC before repeating these steps. This will remove any load statuses from the prior run and give you a clean start on the next execution.

Note:

Starting with version 23.1.101.0, the product and organization file loaders have been redesigned specifically for the initial ad hoc loads. In prior versions, do not reload multiple product or organization files for the same ETL business date, because the loaders treat any changes as reclassifications, which can cause data issues while loading history. In version 23.x, the dimensions are handled as “Type 1” slowly changing dimensions, meaning the programs do not look for reclasses and instead perform simple merge logic to apply the latest hierarchy data to the existing records, even if levels have changed.

As a best practice, you should disable all POM jobs in the LOAD_DIM_INITIAL_ADHOC process except the ones you are providing new files for. For example, if you are loading the PRODUCT, ORGANIZATION, and EXCH_RATE files as your dimension data for AI Foundation, then you could execute only the set of jobs for those files and disable the others. Refer to the AI Foundation Operations Guide for a list of the POM jobs involved in loading each foundation file, if you wish to disable jobs you do not plan to use to streamline the load process.

Hierarchy Deactivation

Beginning in version 23, foundation dimension ad hoc loads use Type 1 slowly changing dimension (SCD) behavior. This means the system no longer creates new records every time a parent/child relationship changes. Instead, it performs a simple merge on top of existing data to maintain as-is hierarchy definitions. The foundation data model holds hierarchy records separately from product data, so hierarchies also need maintenance to keep a single active set of records for propagation downstream to other RAP applications. This maintenance is performed by the program W_PROD_CAT_DH_CLOSE_JOB in the LOAD_DIM_INITIAL_ADHOC process. After the latest data has been loaded into W_PROD_CAT_DH, the program detects unused hierarchy nodes that have no children and closes them (sets CURRENT_FLG=N). This is required because the data model stores each hierarchy level as a separate record, even if that level is not used by any products on other tables. Without this cleanup, unused hierarchy levels would accumulate in W_PROD_CAT_DH and be available in AI Foundation, which is generally not desired.
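The cleanup rule can be illustrated with a small Python model. This is a simplified, hypothetical model of the behavior described above, not the actual W_PROD_CAT_DH_CLOSE_JOB logic. The levels are reduced to three, and the lowest level (SUBCLASS here) counts as used only if some product references it. Closing repeats until nothing changes, because closing a subclass can leave its parent class without children.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HierNode:
    # Hypothetical stand-in for one W_PROD_CAT_DH record (one record per level).
    node_id: str
    level: str                # "DEPT", "CLASS", or "SUBCLASS" in this sketch
    parent_id: Optional[str]
    current_flg: str = "Y"


def close_unused_nodes(nodes: list[HierNode], used_subclasses: set[str]) -> list[str]:
    """Set CURRENT_FLG='N' on nodes with no active children; return the closed IDs."""
    closed: list[str] = []
    changed = True
    while changed:  # repeat: closing a child can leave its parent unused
        changed = False
        active = [n for n in nodes if n.current_flg == "Y"]
        parents_with_children = {n.parent_id for n in active if n.parent_id}
        for n in active:
            if n.level == "SUBCLASS":
                in_use = n.node_id in used_subclasses  # referenced by a product
            else:
                in_use = n.node_id in parents_with_children
            if not in_use:
                n.current_flg = "N"
                closed.append(n.node_id)
                changed = True
    return closed


nodes = [
    HierNode("D1", "DEPT", None),
    HierNode("C1", "CLASS", "D1"),
    HierNode("C2", "CLASS", "D1"),
    HierNode("S1", "SUBCLASS", "C1"),
    HierNode("S2", "SUBCLASS", "C2"),
]
print(close_unused_nodes(nodes, used_subclasses={"S1"}))  # ['S2', 'C2']
```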

There are some scenarios where you may want to disable this program. For example, if you know the hierarchy is going to change significantly over a period of time and you don’t want levels to be closed and re-created every time a new file is loaded, disable W_PROD_CAT_DH_CLOSE_JOB. You can re-enable it later, and it will close any unused levels that remain after all your changes are processed. Also be aware that the program is part of the nightly batch process. Once you switch from historical to nightly loads, this job will be enabled and will close unused hierarchy levels unless you intentionally disable it.

Loading Dimensions to Other Applications

Once you have successfully loaded dimension data, pause the data load process and push the dimensions to AI Foundation Cloud Services and the Planning Data Store (if applicable). This allows parallel data validation and domain build activities to occur while you continue loading data. Review the sections Sending Data to AI Foundation and Sending Data to Planning for details on the POM jobs you may execute for this.

The main benefits of this order of execution are:

- Validating the hierarchy structure from the AI Foundation interface gives the customer an early view of some application screens with their data.
- Planning apps can perform the domain build activity without waiting for history file loads to complete, and can start other planning implementation activities in parallel with the history loads.
- Data can be made available for custom development or validations in Innovation Workbench.

Do not start history loads for facts until you are confident all dimensions are working throughout your solutions. Once you begin loading facts, it becomes much harder to reload dimension data without impacts to other areas. For example, historical fact data already loaded will not be automatically re-associated with hierarchy changes loaded later in the process.

Load History Data

Historical fact data is a core foundational element to all solutions in Retail Analytics and Planning. As such, this phase of the implementation can take the longest amount of time during the project, depending on the volumes of data, the source of the data, and the amount of transformation and validation that needs to be completed before and after loading it into the Oracle database.

It is important to know where in the RAP database you can look to find what data has been processed, what data may have been rejected or dropped due to issues, and how far along in the overall load process you are. The following tables provide critical pieces of information throughout the history load process and can be queried from APEX.

Table 3-5 Inbound Load Status Tables

| Table | Usage |
|---|---|
| C_HIST_LOAD_STATUS | Tracks the progress of historical ad hoc load programs for inventory and pricing facts. This table tells you which Retail Insights tables are being populated with historical data, the most recent status of the job executions, and the most recently completed period of historical data for each table. Use APEX or Data Visualizer to query this table after historical data load runs to ensure the programs are completing successfully and processing the expected historical time periods. |
| C_HIST_FILES_LOAD_STATUS | Tracks the progress of ZIP file processing when loading multiple files in sequence using scheduled intraday cycles. |
| C_LOAD_DATES | Contains detailed statuses of historical load jobs. This is the only place that tracks this information at the individual ETL thread level. For example, a historical load using 8 threads may complete 7 threads successfully but fail on one thread due to data issues. The job itself may just return as Failed in POM, so knowing which thread failed helps identify the records that may need correcting and which thread should be reprocessed. |
| W_ETL_REJECTED_RECORDS | Summary table of rejected fact record counts, that is, records that were not processed into their target tables in Retail Insights. Use it to identify which detail tables hold rejected data to analyze. Does not apply to dimensions, which do not have rejected record support at this time. |
| E$_W_RTL_SLS_TRX_IT_LC_DY_TMP | Example of a rejected record detail table for Sales Transactions. All rejected record tables start with the E$_ prefix. |

When loading data from flat files for the first time, it is common to have bad records that the RAP load procedures cannot process, such as records whose identifiers are not present in the associated dimension tables. The foundation data loads capture all such data in rejected record tables, so you can see what a specific data load dropped and what needs to be corrected and reloaded. These tables do not exist until rejected records occur during program execution. Periodically monitor these tables for rejected data that may require reloading.

The overall sequence of files to load depends on your specific data sources and conversion activities, but the following order is recommended as a guideline:

- Sales – Sales transaction data is usually loaded first, because it is critical to running most applications and needs the least amount of conversion.
- Inventory Receipts – If you need receipt dates for downstream usage, such as in Offer Optimization, load receipt transactions in parallel with inventory positions. For each file of receipts loaded, also load the associated inventory positions afterwards.
- Inventory Position – The main stock-on-hand positions file is loaded next. This history load also calculates and stores data using the receipts file, so INVENTORY.csv and RECEIPT.csv must be loaded at the same time, for the same periods.
- Pricing – The price history file is loaded after sales and inventory are complete, because many applications need only the first two datasets for processing. Price history may also be the largest volume of data, so it is good to be working within your other applications in parallel with loading price data.
- All other facts – There is no specific order for loading other facts such as transfers, adjustments, markdowns, and costs. Load them based on your downstream application needs and the availability of the data files.

Automated History Loads

Once you have performed your history loads manually a couple of times (following all steps in later sections) and validated the data is correct, you may wish to automate the remaining file loads. An intraday cycle is available in POM that can run the fact history loads multiple times using your specified start times. Follow the steps below to enable this process:

- Upload multiple ZIP files using FTS, each containing one set of files for the same historical period. Name the files RAP_DATA_HIST.zip, RAP_DATA_HIST.zip.1, RAP_DATA_HIST.zip.2, and so on, incrementing the index at the end of the ZIP file name after the first one.
- In the POM batch administration screen, ensure all of the jobs in the RI_INTRADAY_CYCLE are enabled, matching your initial ad hoc runs. Schedule the intraday cycles from Scheduler Administration to occur at various intervals throughout the day. Space out the cycles based on how long it took to process your first file.
- Monitor the load progress from the Batch Monitoring screen to see the results from each run cycle. Periodically validate that data is being loaded successfully into your database throughout the intraday runs. If an intraday run fails for any reason, no further runs will proceed until the issue is resolved.
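Preparing the numbered uploads can be scripted. The Python sketch below applies the naming rule above: no suffix on the first file, then .1, .2, and so on. It writes one ZIP per historical period with the standard `zipfile` module. The folder layout, storing files at the archive root, and the helper names are assumptions for illustration. The FTS upload itself is not shown.

```python
import zipfile
from pathlib import Path

BASE_NAME = "RAP_DATA_HIST.zip"


def hist_zip_name(index: int) -> str:
    """Name of the index-th upload: RAP_DATA_HIST.zip, then .1, .2, ..."""
    return BASE_NAME if index == 0 else f"{BASE_NAME}.{index}"


def package_periods(periods: list[list[Path]], out_dir: Path) -> list[Path]:
    """Write one ZIP per historical period, in load order, and return the paths."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written: list[Path] = []
    for index, files in enumerate(periods):
        target = out_dir / hist_zip_name(index)
        with zipfile.ZipFile(target, "w", compression=zipfile.ZIP_DEFLATED) as zf:
            for f in files:
                # Assumption: files sit at the archive root, without folder prefixes.
                zf.write(f, arcname=f.name)
        written.append(target)
    return written


print([hist_zip_name(i) for i in range(3)])
# ['RAP_DATA_HIST.zip', 'RAP_DATA_HIST.zip.1', 'RAP_DATA_HIST.zip.2']
```

Passing the periods in chronological order keeps the index suffixes aligned with the order in which the intraday cycles should process them.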

Sales History Load

RAP supports the loading of sales transaction history using actual transaction data or daily/weekly sales totals. If loading data at an aggregate level, all key columns (such as the transaction ID) are still required to have some value. The sales data may be provided for a range of dates in a single file. The data should be loaded sequentially from the earliest week to the latest week but, unlike inventory position, you may have gaps or out-of-order loads, because the data is not stored positionally. Refer to Data File Generation for more details on the file requirements.

Note:

Many parts of AI Foundation require transactional data for sales, so do not load aggregate data unless you have no better alternative.

If you are not loading sales history specifically for Retail Insights, you can disable many aggregation programs in the POM standalone process. These programs populate additional tables that are used only in BI reporting. If you are not using RI, you can disable the following jobs (effectively, all aggregate “A” tables and jobs except those at the item/location/week level can be turned off):

-

W_RTL_SLS_IT_LC_DY_A_JOB

-

W_RTL_SLS_SC_LC_WK_A_JOB

-

W_RTL_SLS_CL_LC_DY_A_JOB

-

W_RTL_SLS_CL_LC_WK_A_JOB

-

W_RTL_SLS_DP_LC_DY_A_JOB

-

W_RTL_SLS_DP_LC_WK_A_JOB

-

W_RTL_SLS_IT_DY_A_JOB

-

W_RTL_SLS_IT_WK_A_JOB

-

W_RTL_SLS_SC_DY_A_JOB

-

W_RTL_SLS_SC_WK_A_JOB

-

W_RTL_SLS_LC_DY_A_JOB

-

W_RTL_SLS_LC_WK_A_JOB

-

W_RTL_SLS_IT_LC_DY_SN_TMP_JOB

-

W_RTL_SLS_IT_LC_DY_SN_A_JOB

-

W_RTL_SLS_IT_LC_WK_SN_A_JOB

-

W_RTL_SLS_IT_DY_SN_A_JOB

-

W_RTL_SLS_IT_WK_SN_A_JOB

-

W_RTL_SLS_SC_LC_DY_CUR_A_JOB

-

W_RTL_SLS_SC_LC_WK_CUR_A_JOB

-

W_RTL_SLS_CL_LC_DY_CUR_A_JOB

-

W_RTL_SLS_DP_LC_DY_CUR_A_JOB

-

W_RTL_SLS_CL_LC_WK_CUR_A_JOB

-

W_RTL_SLS_DP_LC_WK_CUR_A_JOB

-

W_RTL_SLS_SC_DY_CUR_A_JOB

-

W_RTL_SLS_CL_DY_CUR_A_JOB

-

W_RTL_SLS_DP_DY_CUR_A_JOB

-

W_RTL_SLS_SC_WK_CUR_A_JOB

-

W_RTL_SLS_CL_WK_CUR_A_JOB

-

W_RTL_SLS_DP_WK_CUR_A_JOB

-

W_RTL_SLSPR_PC_IT_LC_DY_A_JOB

-

W_RTL_SLSPR_PP_IT_LC_DY_A_JOB

-

W_RTL_SLSPR_PE_IT_LC_DY_A_JOB

-

W_RTL_SLSPR_PP_CUST_LC_DY_A_JOB

-

W_RTL_SLSPR_PC_CS_IT_LC_DY_A_JOB

-

W_RTL_SLSPR_PC_HH_WK_A_JOB

-

W_RTL_SLS_CNT_LC_DY_A_JOB

-

W_RTL_SLS_CNT_IT_LC_DY_A_JOB

-

W_RTL_SLS_CNT_SC_LC_DY_A_JOB

-

W_RTL_SLS_CNT_CL_LC_DY_A_JOB

-

W_RTL_SLS_CNT_DP_LC_DY_A_JOB

-

W_RTL_SLS_CNT_SC_LC_DY_CUR_A_JOB

-

W_RTL_SLS_CNT_CL_LC_DY_CUR_A_JOB

-

W_RTL_SLS_CNT_DP_LC_DY_CUR_A_JOB

After confirming the list of enabled sales jobs, perform the following steps:

- Create the file SALES.csv containing one or more days of sales data, along with a CTX file defining the populated columns. Optionally include the SALES_PACK.csv file as well.
- Upload the history files to Object Storage using the RAP_DATA_HIST.zip file.
- Execute the HIST_ZIP_FILE_LOAD_ADHOC process.
- Execute the HIST_STG_CSV_SALES_LOAD_ADHOC process to stage the data in the database. Validate your data before proceeding. Refer to Sample Validation SQLs for sample queries you can use for this.
- Execute the HIST_SALES_LOAD_ADHOC batch process to load the data. If no data is available for certain dimensions used by sales, the load process can seed the dimension from the history file automatically. Enable seeding for all of the dimensions according to the initial configuration guidelines. Providing the data in other files is optional.

Several supplemental dimensions are involved in this load process, and they may or may not be provided depending on the data requirements. For example, sales history data has promotion identifiers, which would require data on the promotion dimension.

Sample Postman message bodies:

{ "cycleName":"Adhoc", "flowName":"Adhoc", "processName":"HIST_STG_CSV_SALES_LOAD_ADHOC" }

{ "cycleName":"Adhoc", "flowName":"Adhoc", "processName":"HIST_SALES_LOAD_ADHOC" }

Note:

If any job with STG in its name fails during the run, review the POM logs. They should provide the name of an external LOG or BAD table with more information. These error tables can be accessed from APEX using a support utility. Refer to the AI Foundation Operations Guide section on “External Table Load Logs” for the utility syntax and examples.
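If you send many of these requests, a small helper can keep the message bodies consistent. The Python sketch below only builds JSON bodies like the samples in this chapter. It can optionally add a single `requestParameters` value in the `jobParams.<JOB>=<value>` form that the samples use. Submitting the body (the POM endpoint and authentication) is outside the scope of this sketch, and the function name is hypothetical.

```python
import json
from typing import Optional


def adhoc_message(process_name: str, job_name: Optional[str] = None,
                  job_value: Optional[str] = None) -> str:
    """Return a POM ad hoc message body matching this chapter's Postman samples."""
    body = {"cycleName": "Adhoc", "flowName": "Adhoc", "processName": process_name}
    if job_name is not None:
        # One job parameter, written as jobParams.<JOB NAME>=<value>.
        body["requestParameters"] = f"jobParams.{job_name}={job_value}"
    return json.dumps(body)


for process in ("HIST_STG_CSV_SALES_LOAD_ADHOC", "HIST_SALES_LOAD_ADHOC"):
    print(adhoc_message(process))
```

For example, `adhoc_message("REJECT_DATA_CLEANUP_ADHOC", "REJECT_DATA_CLEANUP_JOB", "INV")` produces the same fields as the rejected-record cleanup sample used for inventory.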

After the load is complete, check for rejected records. Rejections do not cause the job to fail, but they mean that not all data was loaded successfully. Query the table W_ETL_REJECTED_RECORDS from IW to see a summary of rejections. The root cause may not be obvious (for example, missing products or locations that cause the data load to skip the records). In that case, the utility job W_RTL_REJECT_DIMENSION_TMP_JOB lets you analyze the rejections for common reject reasons. If you have not used this job before, refer to the AIF Operations Guide for details on configuring and running it for the first time.

This process can be repeated as many times as needed to load all history files for the sales transaction data. If you are sending data to multiple RAP applications, do not wait until all data files are processed to start using those applications. Instead, load a month or two of data files and process them into all apps to verify the flows before continuing.

Note:

Data cannot be reloaded for the same records multiple times, because sales data is treated as additive. If data needs correction, you have two options. You can post only the delta records (for example, send -5 to reduce a value by 5 units). Alternatively, you can erase the table and restart the load process using RI_SUPPORT_UTIL procedures in APEX. Raise a Service Request with Oracle if neither of these options resolves your issue.

Once you have performed the load and validated the data one time, you may wish to automate the remaining file loads. An intraday cycle is available in POM that can run the sales history load multiple times at your specified start times. Follow the steps below to use this process:

- Upload multiple ZIP files, each containing one SALES.csv, and name them RAP_DATA_HIST.zip, RAP_DATA_HIST.zip.1, RAP_DATA_HIST.zip.2, and so on, incrementing the index at the end of the ZIP file name. Once the files are uploaded and at least one execution of the HIST_ZIP_FILE_UNLOAD_JOB process has been run, track their status in the C_HIST_FILES_LOAD_STATUS table.
- In the POM batch administration screen, ensure all of the jobs in the RI_INTRADAY_CYCLE are enabled, matching your initial ad hoc run. Schedule the intraday cycles from Scheduler Administration to occur at various intervals throughout the day. Space out the cycles based on how long it took to process your first file.
- Monitor the load progress from the Batch Monitoring screen to see the results from each run cycle.
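Sales history is additive, so correcting a value that is already loaded means posting the difference rather than the corrected value, as described in the note earlier in this section. The Python sketch below computes those delta records from the loaded totals and the corrected totals. The keys are hypothetical (item, location, date) tuples, and the values are quantities. Writing the deltas out as a SALES.csv file is not shown.

```python
Key = tuple[str, str, str]  # hypothetical (item, location, date) key


def delta_records(loaded: dict[Key, int], corrected: dict[Key, int]) -> dict[Key, int]:
    """Return the additive adjustments that turn `loaded` into `corrected`."""
    deltas: dict[Key, int] = {}
    for key in loaded.keys() | corrected.keys():
        diff = corrected.get(key, 0) - loaded.get(key, 0)
        # Rows that are already correct must not be sent again: re-posting
        # them would add the same quantity a second time.
        if diff != 0:
            deltas[key] = diff
    return deltas


loaded = {("ITEM1", "STORE1", "2023-01-07"): 12, ("ITEM2", "STORE1", "2023-01-07"): 4}
corrected = {("ITEM1", "STORE1", "2023-01-07"): 7, ("ITEM2", "STORE1", "2023-01-07"): 4}
print(delta_records(loaded, corrected))  # {('ITEM1', 'STORE1', '2023-01-07'): -5}
```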

Inventory Position History Load

RAP supports the loading of inventory position history using full, end-of-week snapshots. These weekly snapshots may be provided one week at a time or as multiple weeks in a single file. The data must be loaded sequentially from the earliest week to the latest week with no gaps or out-of-order periods. For example, you cannot start with the most recent inventory file and go backward; you must start from the first week of history. Refer to Data File Generation for more details on the file requirements.

A variety of C_ODI_PARAM_VW settings are available in the Control Center to disable inventory features that your implementation does not require. You can set all of the following parameters to N during the history load and enable them later for daily batches. Doing so greatly improves load times:

- RI_INVAGE_REQ_IND – Disables calculation of first/last receipt dates and inventory age measures. Receipt date calculation is used in RI and is required for Offer Optimization (as a method of determining entry/exit dates for items). It is also required for the Short Lifecycle (SLC) methods in Forecasting. Set to Y if you use any of these applications.
- RA_CLR_LEVEL – Disables the mapping of clearance event IDs to clearance inventory updates. Used only in RI reporting.
- RI_PRES_STOCK_IND – Disables the use of replenishment data for presentation stock to calculate inventory availability measures. Used only in RI reporting.
- RI_BOH_SEEDING_IND – Disables the creation of initial beginning-on-hand records, which give analytics a non-null starting value in the first week. Used only in RI reporting.
- RI_MOVE_TO_CLR_IND – Disables calculation of move-to-clearance inventory measures when an item/location goes into or out of clearance status. Used only in RI reporting.
- RI_MULTI_CURRENCY_IND – Disables recalculation of primary currency amounts when you use only a single currency. Enable it for multi-currency; disable it otherwise.
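The decision rules in the list above can be summarized in code. The Python sketch below suggests history-load values based on which applications you use. It illustrates the guidance in this section and is not an Oracle-provided tool. You still set the actual values in C_ODI_PARAM_VW through the Control Center. The sketch assumes the RI-only features stay at N unless you need those measures for RI reporting in history, because they can be enabled later for daily batches.

```python
def suggested_inventory_params(ri_history_reporting: bool, offer_optimization: bool,
                               slc_forecasting: bool, multi_currency: bool) -> dict[str, str]:
    """Suggest C_ODI_PARAM_VW values for the inventory history load."""

    def yn(flag: bool) -> str:
        return "Y" if flag else "N"

    # Features used only in RI reporting: N unless RI needs them in history.
    ri_only = yn(ri_history_reporting)
    return {
        # Receipt dates/inventory age: used in RI, required by OO and SLC forecasting.
        "RI_INVAGE_REQ_IND": yn(ri_history_reporting or offer_optimization or slc_forecasting),
        "RA_CLR_LEVEL": ri_only,
        "RI_PRES_STOCK_IND": ri_only,
        "RI_BOH_SEEDING_IND": ri_only,
        "RI_MOVE_TO_CLR_IND": ri_only,
        # Only needed when more than one currency is in use.
        "RI_MULTI_CURRENCY_IND": yn(multi_currency),
    }


print(suggested_inventory_params(ri_history_reporting=False, offer_optimization=True,
                                 slc_forecasting=False, multi_currency=False))
```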

The following steps describe the process for loading inventory history:

- If you need inventory to track first/last receipt dates for use in Offer Optimization or Forecasting (SLC), you must first load a RECEIPT.csv file for the same historical period as your inventory file. Receipt dates are used in SLC forecasting, so this file may also be required for your Inventory Optimization loads if you plan to use SLC forecasting. You must also set RI_INVAGE_REQ_IND to Y. Receipts are loaded using the process HIST_CSV_INVRECEIPTS_LOAD_ADHOC and may be provided at day or week level, depending on your history needs.
- Create the file INVENTORY.csv containing one or more weeks of inventory snapshots in chronological order, along with your CTX file to define the populated columns. The DAY_DT value on every record must be an end-of-week date (Saturday by default). The only exception is the final week of history, which may end in the middle of the week as long as you perform initial seeding loads on the last day of that week.
- Upload the history file and its context file to Object Storage using the RAP_DATA_HIST.zip file.
- Update the column HIST_LOAD_LAST_DATE on the table C_HIST_LOAD_STATUS to the last day of your overall history load (this date will be later than the dates in the current file). This can be done from the Control & Tactical Center. If you are loading history after your nightly batches have already started, you must set this date to the last week-ending date before your first daily/weekly batch. No other date value can be used in this case.
- Execute the HIST_ZIP_FILE_LOAD_ADHOC process.
- If you are providing RECEIPT.csv for tracking receipt dates in history, run HIST_CSV_INVRECEIPTS_LOAD_ADHOC at this time.
- Execute the HIST_STG_CSV_INV_LOAD_ADHOC process to stage your data into the database. Validate your data before proceeding. Refer to Sample Validation SQLs for sample queries you can use for this.
- Execute the HIST_INV_LOAD_ADHOC batch process to load the file data. The process loops over the file one week at a time until all weeks are loaded. It records its progress in the C_HIST_LOAD_STATUS table, which you can monitor from APEX or DV.

Sample Postman message bodies:

{ "cycleName":"Adhoc", "flowName":"Adhoc", "processName":"HIST_STG_CSV_INV_LOAD_ADHOC" }

{ "cycleName":"Adhoc", "flowName":"Adhoc", "processName":"HIST_INV_LOAD_ADHOC" }
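Before staging an INVENTORY.csv file, it can help to check the week-ending dates locally. The Python sketch below checks a sequence of DAY_DT values against the rules in this section:

- dates appear in chronological order;
- every date is an end-of-week date (Saturday by default, which is Python weekday 5);
- there are no gaps between weeks.

The final week may end mid-week when `allow_partial_last` is set. Use that setting only for the last file of your history. The ISO date format and the function name are assumptions, so adjust them to match your file and calendar.

```python
import datetime as dt


def check_week_ending_dates(day_dts: list[str], week_end_weekday: int = 5,
                            allow_partial_last: bool = False,
                            date_fmt: str = "%Y-%m-%d") -> list[str]:
    """Return a list of problems found in the DAY_DT values of an inventory file."""
    problems: list[str] = []
    weeks: list[dt.date] = []  # distinct dates, in file order
    for text in day_dts:
        day = dt.datetime.strptime(text, date_fmt).date()
        if weeks and day < weeks[-1]:
            problems.append(f"{text}: out of chronological order")
        elif not weeks or day != weeks[-1]:
            weeks.append(day)
    for i, day in enumerate(weeks):
        # Only the final week of the overall history may end mid-week.
        exempt = allow_partial_last and i == len(weeks) - 1
        if not exempt and day.weekday() != week_end_weekday:
            problems.append(f"{day}: not a week-ending date")
        if i > 0:
            gap = (day - weeks[i - 1]).days
            if gap > 7 or (gap < 7 and not exempt):
                problems.append(f"{day}: {gap}-day gap after {weeks[i - 1]}")
    return problems


print(check_week_ending_dates(["2023-01-07", "2023-01-07", "2023-01-14"]))  # []
```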

This process can be repeated as many times as needed to load all history files for the inventory position. Remember that inventory cannot be loaded out of order, and you cannot go back in time to reload files after you have processed them. If you load a set of inventory files and then find issues during validation, erase the tables in the database and restart the load with corrected files.

If you finish the entire history load and need to test downstream systems (such as Inventory Optimization), first populate the table W_RTL_INV_IT_LC_G, because the history load skips this table. You may execute the separate standalone job HIST_LOAD_INVENTORY_GENERAL_JOB to copy the final week of inventory from the fact table to this table.

If your inventory history has invalid data, you may get rejected records, and the batch process will fail with a message that rejects exist in the data. If this occurs, you cannot proceed until you resolve your input data, because rejections on positional data MUST be resolved for one date before moving on to the next. If you move on to the next date without reprocessing any rejected data, that data is lost and cannot be loaded at a later time without starting over. When this occurs:

- The inventory history load automatically populates the table W_RTL_REJECT_DIMENSION_TMP with a list of the invalid dimensions it has identified. If you are running jobs other than the history load, you can also run the process W_RTL_REJECT_DIMENSION_TMP_ADHOC to populate that table manually. You can then choose either to fix the data and reload new files or to proceed with the current file.
- After reviewing the rejected records, run REJECT_DATA_CLEANUP_ADHOC. This process erases the E$ table and moves all rejected dimensions into a skip list. You must pass the module code of the data you want to clean up as a parameter on the POM job (in this case, the module code is INV). The skip list is loaded to the table C_DISCARD_DIMM. Skipped identifiers are ignored for the current file load and then reset at the start of the next run. Example Postman message body:

  { "cycleName": "Adhoc", "flowName":"Adhoc", "processName":"REJECT_DATA_CLEANUP_ADHOC", "requestParameters":"jobParams.REJECT_DATA_CLEANUP_JOB=INV" }
- If you want to fix your files instead of continuing the current load, stop here and reload your dimensions and/or fact data following the normal process flows.
- If you are resuming the current file with the intent to skip all data in C_DISCARD_DIMM, restart the failed POM job now. The skipped records are permanently lost and cannot be reloaded unless you erase your inventory data and start loading files from the beginning.

Log a Service Request with Oracle Support for assistance with any of the above steps if you are having difficulties with loading inventory history or dealing with rejected records.

Price History Load

Certain applications, such as Promotion and Markdown Optimization, require price history to perform their calculations. Price history is similar to inventory in that it is positional, but it can be loaded in a more compressed manner due to the extremely high data volumes involved. The required approach for price history is as follows:

- Update C_HIST_LOAD_STATUS for the PRICE records in the table, specifying the last date of the history load, just as you did for inventory. If you are loading history after your nightly batches have already started, you must set this date to the last week-ending date before your first daily/weekly batch. No other date value can be used in this case.
- If you are loading prices specifically for PMO/OO applications, go to C_ODI_PARAM_VW in the Control Center and change the parameter RI_LAST_MKDN_HIST_IND to a value of Y. This populates some required fields for PMO markdown price history.
- Create an initial, full snapshot of price data in PRICE.csv for the first day of history and load this file into the platform using the history processes in this section. All initial price records must have a type code of 0.
- Create additional PRICE files containing only the price changes for a period of time (such as a month), with the appropriate price change type codes and effective day dates for those changes. Load each file one at a time using the history processes.
- The history procedure iterates over the provided files day by day, from the first day of history up to the last historical load date specified in C_HIST_LOAD_STATUS for the pricing fact. For each date, the procedure checks the staging data for effective price change records and loads them, then moves on to the next date.

The process to perform price history loads is similar to the inventory load steps. It uses the PRICE.csv file and the HIST_CSV_PRICE_LOAD_ADHOC process. Unlike sales and inventory, the price load has only one load process instead of two. Just as with inventory, you must load the data sequentially, so you cannot back-post price changes to dates earlier than those you have already loaded. Refer to Data File Generation for complete details on how to build this file.
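The positional, change-only design works like a running snapshot that is carried forward one day at a time. The Python sketch below is a simplified model of the behavior described above, not the actual HIST_CSV_PRICE_LOAD_ADHOC logic. It works as follows:

- it starts from the full type-0 snapshot;
- it applies the changes effective on each day from `start` to `end`;
- it refuses changes dated before the first day still to be processed, which mirrors the rule that price changes cannot be back-posted.

The data shapes are hypothetical. In this sketch, changes dated after `end` are simply not applied.

```python
import datetime as dt

ItemLoc = tuple[str, str]                # hypothetical (item, location) key
Change = tuple[dt.date, ItemLoc, float]  # (effective date, key, new price)


def roll_forward(snapshot: dict[ItemLoc, float], changes: list[Change],
                 start: dt.date, end: dt.date) -> dict[ItemLoc, float]:
    """Apply price changes day by day from start to end; return the end-of-period prices."""
    by_day: dict[dt.date, list[tuple[ItemLoc, float]]] = {}
    for effective, key, price in changes:
        if effective < start:
            # Earlier dates are already loaded; positional data cannot be back-posted.
            raise ValueError(f"change for {key} on {effective} precedes {start}")
        by_day.setdefault(effective, []).append((key, price))

    state = dict(snapshot)  # every item/location keeps a price on every day
    day = start
    while day <= end:
        for key, price in by_day.get(day, []):
            state[key] = price
        day += dt.timedelta(days=1)
    return state


initial = {("I1", "S1"): 10.0, ("I2", "S1"): 5.0}  # type code 0 snapshot
changes = [(dt.date(2023, 1, 3), ("I1", "S1"), 8.0),
           (dt.date(2023, 1, 5), ("I1", "S1"), 9.0)]
print(roll_forward(initial, changes, dt.date(2023, 1, 2), dt.date(2023, 1, 4)))
# {('I1', 'S1'): 8.0, ('I2', 'S1'): 5.0}
```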

As with inventory, the REJECT_DATA_CLEANUP_ADHOC process may be used when records are rejected during the load. Price loads cannot continue until you review and clear the rejections. Example Postman message body:

{ "cycleName": "Adhoc", "flowName":"Adhoc", "processName":"REJECT_DATA_CLEANUP_ADHOC", "requestParameters":"jobParams.REJECT_DATA_CLEANUP_JOB=PRICE" }

Other History Loads

While sales and inventory are the most common facts to load history for, you may also want to load history for other areas such as receipts and transfers. Separate ad hoc history load processes are available for the following fact areas:

- HIST_CSV_ADJUSTMENTS_LOAD_ADHOC
- HIST_CSV_INVRECEIPTS_LOAD_ADHOC
- HIST_CSV_MARKDOWN_LOAD_ADHOC
- HIST_CSV_INVRTV_LOAD_ADHOC
- HIST_CSV_TRANSFER_LOAD_ADHOC
- HIST_CSV_DEAL_INCOME_LOAD_ADHOC
- HIST_CSV_ICMARGIN_LOAD_ADHOC
- HIST_CSV_INVRECLASS_LOAD_ADHOC

All of these interfaces deal with transactional (not positional) data, so you may use them at any time to load history files in each area.

Note:

These processes are intended to support history data for downstream applications such as AI Foundation and Planning. By default, the tables that each process populates should satisfy the data needs of those applications. Jobs that those apps do not need are not included in these processes.

Some data files used by AIF and Planning applications do not have a history load process, because the data is used only from the current business date forward. For Purchase Order data (ORDER_DETAIL.csv), refer to the section below on Seed Positional Facts if you need to load the file before starting your nightly batch processing. Other areas, such as the transfers/allocations used by Inventory Optimization, are included only in the nightly batch schedule and do not require any history to be loaded.

Modifying Staged Data

If you find problems in the data you’ve staged in the RAP database (specific to RI/AIF input interfaces), you can update those tables directly from APEX. This lets you reprocess the records without uploading new files through FTS. You have the privileges to insert, delete, or update records in tables where data is staged before being loaded into the core data model, such as W_RTL_INV_IT_LC_DY_FTS for inventory data.

Directly updating the staging table data can be useful for quickly debugging load failures and correcting minor issues. For example, suppose you are loading PRODUCT.csv for the first time and discover that some required fields are missing data for some rows. You may directly update the W_PRODUCT_DTS table to put values in those fields and rerun the POM job. This allows you to progress with your data load and find any additional issues before generating a new file. Similarly, after staging an inventory receipts file, you may discover that data was written to the wrong column (INVRC_QTY contains the AMT values and vice versa). You can update the fields and continue loading the data to the target tables to verify it, and then correct your source data from the next run forward.

These privileges extend only to staging tables, such as tables whose names end in FTS, DTS, FS, or DS. You cannot modify internal tables holding the final fact or dimension data. You cannot modify configuration tables, because they must be updated from the Control & Tactical Center. The privileges do not apply to objects in the RDX or PDS database schemas.

Reloading Dimensions

It is common to reload dimensions at various points throughout the history load, or even in sync with every history batch run. Ensure that your core dimensions, such as the product and location hierarchies, are up to date and aligned with the historical data being processed. To reload dimensions, follow the same process as described in the Initial Dimension Load steps. Make sure the current business load date in the system is on or before the date in history when the dimensions will be required. For example, if you are loading history files on a monthly cadence, load the new product and location data required for the next month no later than the first day of that month, so it is effective for all dates in the history data files. Do not reload dimensions for the same business date multiple times. Instead, advance the date past the prior load each time you want to load a new set of changed dimension files.
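For a monthly history cadence, the rule above amounts to reloading dimensions on the first day of each month, with each reload on a strictly later business date than the previous one. The Python sketch below derives such a schedule from a list of (year, month) history periods. It is a planning aid under that assumption only. The actual business date is still set with the ad hoc processes described in this chapter.

```python
import datetime as dt


def dimension_load_dates(history_months: list[tuple[int, int]]) -> list[dt.date]:
    """First-of-month business dates for reloading dimensions before each monthly file."""
    dates = [dt.date(year, month, 1) for year, month in history_months]
    for earlier, later in zip(dates, dates[1:]):
        # Each reload must advance past the previous one; never reuse a business date.
        if later <= earlier:
            raise ValueError(f"{later} does not advance past {earlier}")
    return dates


print(dimension_load_dates([(2022, 11), (2022, 12), (2023, 1)]))
```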

It is also very important to understand that history load procedures are unable to handle reclassifications that have occurred in source systems when you are loading history files. For example, if you are using current dimension files from the source system to process historical data, and the customer has reclassified products so they are no longer correct for the historical time periods, then your next history load may place sales or inventory under the new classifications, not the ones that were relevant in history. For this reason, reclassifications should be avoided if at all possible during history load activities, unless you can maintain historical dimension snapshots that will accurately reflect historical data needs.

Seed Positional Facts

Once sales and inventory history have been processed, you will need to perform seeding of the positional facts you wish to use. Seeding a fact means to load a full snapshot of the data for all active item/locations, thus establishing a baseline position for every possible record before nightly batches start loading incremental updates to those values. Seeding of positional facts should only occur once history data is complete and daily batch processing is ready to begin. Seed loads should also be done for a week-ending date, so that you do not have a partial week of daily data in the system when you start daily batches.

Instead of doing separate seed loads, you also have the option of just providing full snapshots of all positional data in your first nightly batch run. This will make the first nightly batch take a long time to complete (potentially 8+ hours) but it allows you to skip all of the steps documented below. This method of seeding the positional facts is generally the preferred approach for implementers, but if you want to perform manual seeding as a separate activity, review the rest of this section. If you are also implementing RMFCS, you can leverage Retail Data Extractor (RDE) programs for the initial seed load as part of your first nightly batch run, following the steps in that chapter instead.

If you did not previously disable the optional inventory features in C_ODI_PARAM (parameters RI_INVAGE_REQ_IND, RA_CLR_LEVEL, RI_PRES_STOCK_IND, RI_BOH_SEEDING_IND, RI_MOVE_TO_CLR_IND, and RI_MULTI_CURRENCY_IND) then you should review these settings now and set all parameters to N if the functionality is not required. Once this is done, follow the steps below to perform positional seeding:

- Create the files containing your initial full snapshots of positional data. These may include one or more of the following:

  - PRICE.csv
  - COST.csv (used for both the BCOST and NCOST data interfaces)
  - INVENTORY.csv
  - ORDER_DETAIL.csv (ORDER_HEAD.csv should already be loaded using the dimension process)
  - W_RTL_INVU_IT_LC_DY_FS.dat

- Upload the files to Object Storage using the RAP_DATA_HIST.zip file.

- Execute the LOAD_CURRENT_BUSINESS_DATE_ADHOC process to set the load date to the next week-ending date after the final date in your history load:

  ```json
  {
    "cycleName": "Adhoc",
    "flowName": "Adhoc",
    "processName": "LOAD_CURRENT_BUSINESS_DATE_ADHOC",
    "requestParameters": "jobParams.ETL_BUSINESS_DATE_JOB=2017-12-31"
  }
  ```

- Execute the ad hoc seeding batch processes that correspond to the files you provided. Sample Postman messages:

  ```json
  { "cycleName": "Adhoc", "flowName": "Adhoc", "processName": "SEED_CSV_W_RTL_PRICE_IT_LC_DY_F_PROCESS_ADHOC" }
  { "cycleName": "Adhoc", "flowName": "Adhoc", "processName": "SEED_CSV_W_RTL_NCOST_IT_LC_DY_F_PROCESS_ADHOC" }
  { "cycleName": "Adhoc", "flowName": "Adhoc", "processName": "SEED_CSV_W_RTL_BCOST_IT_LC_DY_F_PROCESS_ADHOC" }
  { "cycleName": "Adhoc", "flowName": "Adhoc", "processName": "SEED_CSV_W_RTL_INV_IT_LC_DY_F_PROCESS_ADHOC" }
  { "cycleName": "Adhoc", "flowName": "Adhoc", "processName": "SEED_CSV_W_RTL_INVU_IT_LC_DY_F_PROCESS_ADHOC" }
  { "cycleName": "Adhoc", "flowName": "Adhoc", "processName": "SEED_CSV_W_RTL_PO_ONORD_IT_LC_DY_F_PROCESS_ADHOC" }
  ```
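If you script these calls instead of pasting them into Postman, the request bodies can be generated from the list of files you actually uploaded. The sketch below is a hypothetical Python helper, not an Oracle-provided tool. The file-to-process mapping is inferred from the file names and process names in the seeding steps (for example, COST.csv drives both the NCOST and BCOST seed processes), and submitting the bodies to POM is deliberately left out.

```python
import json

# Mapping inferred from the seeding steps: each uploaded file drives one or
# more SEED_* ad hoc processes. COST.csv feeds both NCOST and BCOST.
SEED_PROCESSES = {
    "PRICE.csv": ["SEED_CSV_W_RTL_PRICE_IT_LC_DY_F_PROCESS_ADHOC"],
    "COST.csv": [
        "SEED_CSV_W_RTL_NCOST_IT_LC_DY_F_PROCESS_ADHOC",
        "SEED_CSV_W_RTL_BCOST_IT_LC_DY_F_PROCESS_ADHOC",
    ],
    "INVENTORY.csv": ["SEED_CSV_W_RTL_INV_IT_LC_DY_F_PROCESS_ADHOC"],
    "W_RTL_INVU_IT_LC_DY_FS.dat": ["SEED_CSV_W_RTL_INVU_IT_LC_DY_F_PROCESS_ADHOC"],
    "ORDER_DETAIL.csv": ["SEED_CSV_W_RTL_PO_ONORD_IT_LC_DY_F_PROCESS_ADHOC"],
}


def adhoc_request(process_name, request_parameters=None):
    """Build one ad hoc request body in the same shape as the sample messages."""
    body = {"cycleName": "Adhoc", "flowName": "Adhoc", "processName": process_name}
    if request_parameters is not None:
        body["requestParameters"] = request_parameters
    return body


def seeding_requests(business_date, provided_files):
    """Return the business-date request followed by one request per seed process."""
    requests = [
        adhoc_request(
            "LOAD_CURRENT_BUSINESS_DATE_ADHOC",
            f"jobParams.ETL_BUSINESS_DATE_JOB={business_date}",
        )
    ]
    for file_name in provided_files:
        if file_name not in SEED_PROCESSES:
            # Catches typos and files (such as ORDER_HEAD.csv) that are not seeded.
            raise ValueError(f"No seed process is known for {file_name}")
        requests.extend(adhoc_request(p) for p in SEED_PROCESSES[file_name])
    return requests


if __name__ == "__main__":
    for body in seeding_requests("2017-12-31", ["PRICE.csv", "COST.csv"]):
        print(json.dumps(body))
```

Raising on an unknown file name is a design choice: it stops the script before any seeding runs if a file was misnamed, rather than silently skipping it.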

Once all initial seeding is complete and the data has been validated, you are ready to perform a regular batch run. Provide the data files expected for a full batch, such as RAP_DATA.zip or RI_RMS_DATA.zip for foundation data, RI_MFP_DATA.zip for externally sourced planning data (for RI reporting and AI Foundation forecasting), and any AI Foundation Cloud Services files using the ORASE_WEEKLY.zip file. If you are sourcing daily data from RMFCS, ensure that the RDE batch flow is configured to run nightly along with the RAP batch schedule.

Batch dependencies between RDE and RI should be checked and enabled if they are not already turned on.

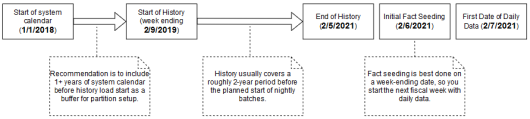

From this point on, the nightly batch takes care of advancing the business date and loading all files, assuming that you want the first load of nightly data to occur the day after seeding. The following diagram summarizes a potential set of dates and activities using the history and seeding steps described in this chapter:

Note:

The sequential nature of this flow of events must be followed for positional facts (for example, inventory) but not for transactional facts (such as sales). Transactional data supports posting for dates other than the current system date, so you can choose to load sales history at any point in this process.

Run Nightly Batches

As soon as initial seeding is performed (or instead of initial seeding), you need to start nightly batch runs. If you are using the nightly batch to seed positional facts, ensure your first ZIP file upload for the batch has those full snapshots included. Once those full snapshots are loaded through seeding or the first full batch, then you can send incremental files rather than full snapshots.

Nightly batch schedules can be configured in parallel with the history load processes using a combination of the Customer Modules Management (in Retail Home) and the POM administration screens. It is not recommended to configure the nightly jobs manually in POM, as there are over 500 batch programs; choosing which to enable can be a time-intensive and error-prone activity. Customer Modules Management greatly simplifies this process and preserves dependencies and required process flows. Batch Orchestration describes the batch orchestration process and how you can configure batch schedules for nightly execution.

Once you move to nightly batches, you may also want to switch dimension interfaces from Full to Incremental loading of data. Several interfaces, such as the Product dimension, can be loaded incrementally, sending only the changed records every day instead of a full snapshot. These options are controlled by the IS_INCREMENTAL flag in the C_ODI_PARAM_VW table, which can be accessed from the Control & Tactical Center. If you are unsure which flags you want to change, refer to the Retail Insights Implementation Guide for detailed descriptions of all parameters.
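Conceptually, an incremental feed is the subset of a full snapshot that is new or changed since the previous day. The Python sketch below only illustrates that idea for a source system preparing such a feed where incremental loads are supported; it is not how RI processes IS_INCREMENTAL loads. The record layout (a dictionary keyed by a hypothetical item ID) is an assumption, and deleted records are not handled.

```python
def changed_records(previous, current):
    """Return records from `current` that are new or differ from `previous`.

    Both arguments map a record key (such as an item ID) to a tuple of
    attribute values. Keys missing from `current` (deletions) are ignored.
    """
    return {key: row for key, row in current.items() if previous.get(key) != row}


if __name__ == "__main__":
    yesterday = {"100": ("Shirt", "Blue"), "101": ("Pants", "Black")}
    today = {"100": ("Shirt", "Blue"), "101": ("Pants", "Grey"), "102": ("Hat", "Red")}
    # Item 100 is unchanged, so only items 101 and 102 are sent.
    print(changed_records(yesterday, today))
```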

Note:

At this time, incremental product and location loads are supported only when using RDE for integration or when using legacy DAT files. CSV files should be provided as full snapshots.

As part of nightly batch uploads, also ensure that the parameter file RA_SRC_CURR_PARAM_G.dat is included in each ZIP package and that it is automatically updated with the current business date for that set of files. This file is used for business date validation, so that incorrect files are not processed. It also helps Oracle Support identify the current business date of a particular set of files if they need to intervene in the batch run or retrieve files from the archives for past dates. Refer to the System Parameters File section for file format details.
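Automating the business date update can be as small as rewriting one line of the file before it is zipped. The following Python sketch assumes the file consists of pipe-delimited NAME|VALUE lines with the business date held in a VDATE line in YYYYMMDD format. That layout is an assumption of this sketch, so confirm it against the System Parameters File section before relying on it. All lines other than VDATE are passed through unchanged, and the function name is hypothetical.

```python
from datetime import date


def set_vdate(param_text: str, business_date: date) -> str:
    """Return the parameter file text with VDATE set to business_date.

    Assumes pipe-delimited NAME|VALUE lines and a YYYYMMDD date value.
    If no VDATE line exists, one is added at the top of the file.
    """
    new_value = business_date.strftime("%Y%m%d")
    out_lines = []
    found = False
    for line in param_text.splitlines():
        name, sep, _old_value = line.partition("|")
        if sep and name.strip().upper() == "VDATE":
            out_lines.append(f"{name.strip()}|{new_value}")
            found = True
        else:
            out_lines.append(line)
    if not found:
        out_lines.insert(0, f"VDATE|{new_value}")
    return "\n".join(out_lines) + "\n"


if __name__ == "__main__":
    print(set_vdate("VDATE|20171231\n", date(2018, 1, 1)), end="")
```

Editing the existing file in place, rather than regenerating it, keeps any other parameters in the file exactly as you configured them.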

In summary, here are the main steps that must be completed to move from history loads to nightly batches:

- All files must be bundled into a supported ZIP package, such as RAP_DATA.zip, for the nightly uploads, and this process should be automated to occur every night.

- Include the system parameter file RA_SRC_CURR_PARAM_G.dat in each nightly upload ZIP and automate the setting of the vdate parameter in that file (not applicable if RDE jobs are used).

- Sync POM schedules with the Customer Module configuration using the Sync with MDF button in the Batch Administration screen, restart the POM schedules to reflect the changes, and then review the enabled/disabled jobs to ensure the necessary data will be processed in the batch.

- Move the RI ETL business date up to the date one day before the current nightly load (using LOAD_CURRENT_BUSINESS_DATE_ADHOC). The nightly load takes care of advancing the date from this point forward.

- Close and re-open the batch schedules in POM as needed to align the POM business date with the date used in the data (all POM schedules should be open for the current business date before running the nightly batch).

- Schedule the start time from the Scheduler Administration screen > RI schedule > Nightly tab. Enable it and set a start time, then restart your schedule to pick up the new start time.
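The packaging step and the ETL business date calculation in this checklist lend themselves to a small script. This Python sketch is an illustration only, with hypothetical function names. It assumes the data files sit in a local directory and should be stored at the root of the archive with no subfolders, and it refuses to build the package if RA_SRC_CURR_PARAM_G.dat is missing. Uploading the ZIP to Object Storage and calling LOAD_CURRENT_BUSINESS_DATE_ADHOC are left out.

```python
import zipfile
from datetime import date, timedelta
from pathlib import Path

PARAM_FILE = "RA_SRC_CURR_PARAM_G.dat"


def build_nightly_zip(data_files, zip_path):
    """Bundle the nightly files into one ZIP archive.

    Refuses to build the package unless the system parameter file is present.
    Files are stored at the root of the archive (an assumption of this sketch).
    """
    paths = [Path(p) for p in data_files]
    if PARAM_FILE not in {p.name for p in paths}:
        raise ValueError(f"{PARAM_FILE} must be included in every nightly ZIP")
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in paths:
            archive.write(path, arcname=path.name)
    return Path(zip_path)


def initial_etl_business_date(first_nightly_load: date) -> date:
    """ETL business date to set before the first nightly run: one day earlier."""
    return first_nightly_load - timedelta(days=1)


if __name__ == "__main__":
    print(initial_etl_business_date(date(2018, 1, 1)).isoformat())
```

Checking for the parameter file before anything is written means a missing file fails the nightly job locally, instead of surfacing later as a business date validation failure in the batch.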

Sending Data to AI Foundation

All AI Foundation modules leverage a common batch infrastructure to initialize the core dataset, followed by ad hoc, application-specific programs to generate additional data as needed. Before loading any data into an AI Foundation module, it is necessary to complete initial dimension loads into RI and validate that core structures (calendar, products, locations) match what you expect to see. Once you are comfortable with the data that has been loaded in, leverage the following jobs to move data into one or more AI Foundation applications.

Table 3-6 Extracts for AI Foundation

| POM Process Name | Usage Details |
|---|---|
| INPUT_FILES_ADHOC_PROCESS | Receive inbound zip files intended for AI Foundation, archive and extract the files. This process looks for the |
| RSE_MASTER_ADHOC_PROCESS | Foundation data movement from RI to AI Foundation, including core hierarchies and dimensional data. Accepts many different parameters to run specific steps in the load process. |
| <app>_MASTER_ADHOC_PROCESS | Each AI Foundation module, such as SPO or IO, has a master job for extracting and loading data that is required for that application, in addition to the |

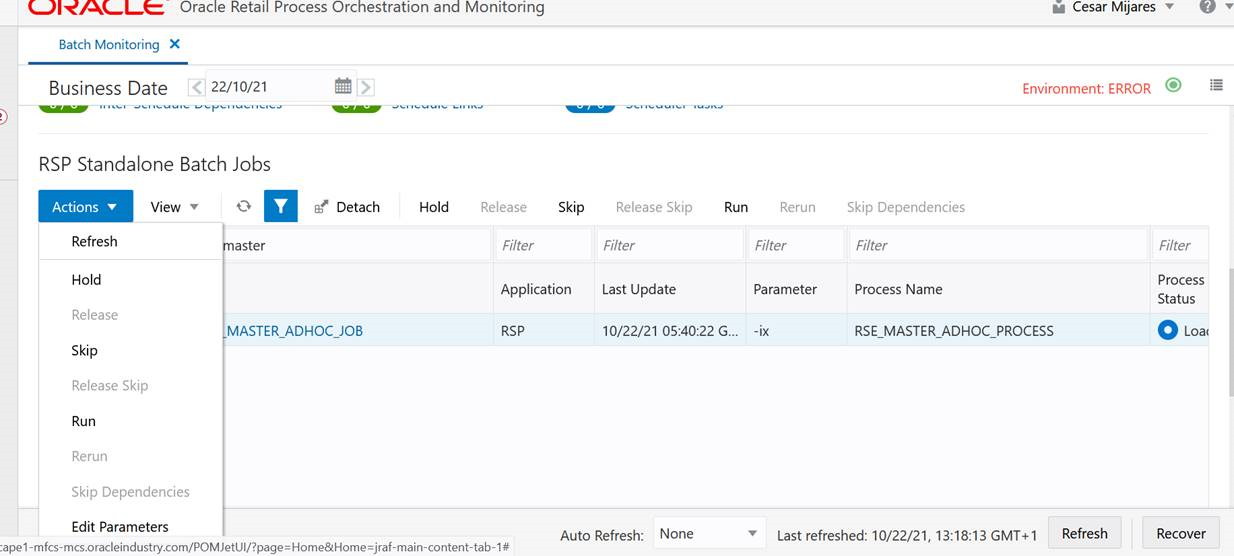

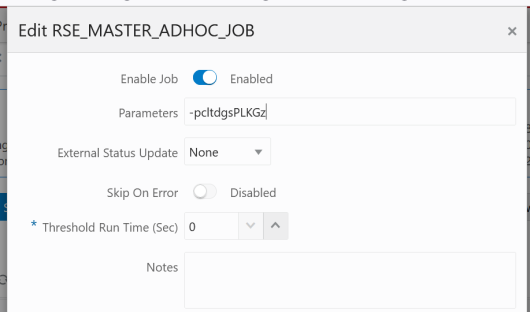

Because AI Foundation Cloud Services ad hoc procedures are exposed through only one job in POM, they are not triggered the same way as RI procedures. Instead, AI Foundation programs accept a number of single-character codes representing different steps in the data loading process. You can provide these codes directly in POM by editing the Parameters of the job in the Batch Monitoring screen and then executing the job through the user interface.

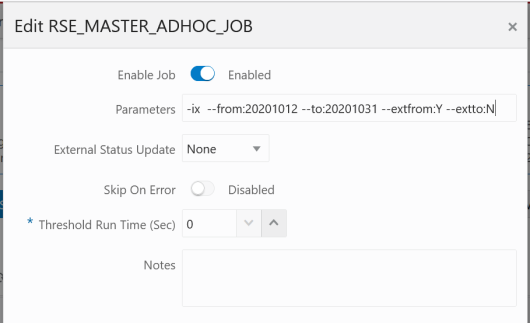

For example, this string of parameters will move all dimension data from RI to AI Foundation:

Additional parameters are available when moving periods of historical data, such as inventory and sales:

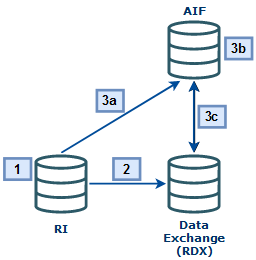

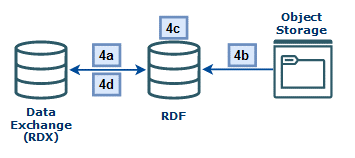

A typical workflow for moving core foundation data into AI Foundation is:

- Load the core foundation files (like Calendar, Product, and Organization) into RI.

- Use the RSE_MASTER_ADHOC_PROCESS to move those same datasets to AI Foundation, providing specific flag values to run only the needed steps.

- Load some of your history files for Sales to validate the inputs.

- Load the same range of sales to AI Foundation using the sales load with optional from/to date parameters.

- Repeat the previous two steps until all sales data is loaded into both RI and AI Foundation.

Performing the process iteratively provides you early opportunities to find issues in the data before you’ve loaded everything, but it is not required. You can load all the data into AI Foundation at one time.
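If you take the iterative route, it helps to fix the from/to windows up front so that RI and AI Foundation receive exactly the same date ranges. A minimal Python sketch, assuming inclusive windows of a fixed number of days (the window size and the function name are your choice and hypothetical). How the from/to dates are passed to the AI Foundation sales load is covered in the AI Foundation Operations Guide and is not shown here.

```python
from datetime import date, timedelta


def date_windows(start: date, end: date, days_per_window: int):
    """Split the inclusive range [start, end] into consecutive inclusive windows."""
    if days_per_window < 1:
        raise ValueError("days_per_window must be at least 1")
    windows = []
    current = start
    while current <= end:
        window_end = min(current + timedelta(days=days_per_window - 1), end)
        windows.append((current, window_end))
        current = window_end + timedelta(days=1)
    return windows


if __name__ == "__main__":
    # 13-week (91-day) windows over roughly two years of sales history.
    for date_from, date_to in date_windows(date(2016, 1, 3), date(2017, 12, 30), 91):
        print(date_from.isoformat(), date_to.isoformat())
```

The final window is shortened to end exactly on the last history date, so no dates are skipped or loaded twice.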

Follow the same general flow for the other application-specific, ad hoc flows into the AI Foundation modules. For a complete list of parameters in each program, refer to the AI Foundation Operations Guide.

Sending Data to Planning

If a Planning module is being implemented, then additional RI jobs should be executed as part of the initial and nightly batch runs. These jobs are available through ad hoc calls, and the nightly jobs are included in the RI nightly schedule. Review the list below for more details on the core Planning extracts available.

Table 3-7 Extracts for Planning

| POM Job Name | Usage Details |
|---|---|
| W_PDS_PRODUCT_D_JOB | Exports a full snapshot of Product master data (for non-pack items only) and associated hierarchy levels. |
| W_PDS_ORGANIZATION_D_JOB | Exports a full snapshot of Location master data (for stores and warehouses only) and associated hierarchy levels. |
| W_PDS_CALENDAR_D_JOB | Exports a full snapshot of Calendar data at the day level and associated hierarchy levels. Note: While RI exports the entire calendar, PDS will only import 5 years around the RPAS_TODAY date (current year +/- 2 years). |
| W_PDS_EXCH_RATE_G_JOB | Exports a full snapshot of exchange rates. |
| W_PDS_PRODUCT_ATTR_D_JOB | Exports a full snapshot of item-attribute relationships. This is inclusive of both diffs and UDAs. |
| W_PDS_DIFF_D_JOB | Exports a full snapshot of Differentiators such as Color and Size. |
| W_PDS_DIFF_GRP_D_JOB | Exports a full snapshot of differentiator groups (most commonly size groups used by SPO and AP). |
| W_PDS_UDA_D_JOB | Exports a full snapshot of User-Defined Attributes. |
| W_PDS_BRAND_D_JOB | Exports a full snapshot of Brand data (regardless of whether they are currently linked to any items). |
| W_PDS_SUPPLIER_D_JOB | Exports a full snapshot of Supplier data (regardless of whether they are currently linked to any items). |
| W_PDS_REPL_ATTR_IT_LC_D_JOB | Exports a full snapshot of Replenishment Item/Location Attribute data (equivalent to |
| W_PDS_DEALINC_IT_LC_WK_A_JOB | Incremental extract of deal income data (transaction codes 6 and 7 from RMFCS) posted in the current business week. |
| W_PDS_PO_ONORD_IT_LC_WK_A_JOB | Incremental extract of future on-order amounts for the current business week, based on the expected OTB date. |
| W_PDS_INV_IT_LC_WK_A_JOB | Incremental extract of inventory positions for the current business week. Inventory is always posted to the current week; there are no back-posted records. |
| W_PDS_SLS_IT_LC_WK_A_JOB | Incremental extract of sales transactions posted in the current business week (includes back-posted transactions to prior transaction dates). |
| W_PDS_INVTSF_IT_LC_WK_A_JOB | Incremental extract of inventory transfers posted in the current business week (transaction codes 30, 31, 32, 33, 37, 38 from RMFCS). |
| W_PDS_INVRC_IT_LC_WK_A_JOB | Incremental extract of inventory receipts posted in the current business week. Only includes purchase order receipts (transaction code 20 from RMFCS). |
| W_PDS_INVRTV_IT_LC_WK_A_JOB | Incremental extract of returns to vendor posted in the current business week (transaction code 24 from RMFCS). |
| W_PDS_INVADJ_IT_LC_WK_A_JOB | Incremental extract of inventory adjustments posted in the current business week (transaction codes 22 and 23 from RMFCS). |
| W_PDS_SLSWF_IT_LC_WK_A_JOB | Incremental extract of wholesale and franchise transactions posted in the current business week (transaction codes 82, 83, 84, 85, 86, 88 from RMFCS). |

|