9 Extensibility

The Retail Analytics and Planning (RAP) suite of applications can be extended and customized to fit the needs of your implementation.

Custom applications, services and interfaces can be developed for AI Foundation using the Innovation Workbench module. Innovation Workbench is also the first choice for programmatic extensibility within RAP applications and provides access to data from both PDS and AIF.

Planning application configurations can be extended using the native RPASCE platform functionality, and further extended using Innovation Workbench.

Retail Insights can be extended with custom datasets brought into the application using Data Visualizer. This chapter will provide an overview of the RAP extensibility capabilities with links and references to find more information.

Note:

Before continuing with this section, please read the application-specific implementation/user guides.This chapter includes the following sections:

AI Foundation Extensibility

The Innovation Workbench as a part of the AI Foundation module consists primarily of Application Express (APEX) and Data Studio. These tools provide significant extensibility features for custom analytical applications, advanced data science processes, 3rd party integrations, and much more. Some examples of IW capabilities for AI Foundation include:

-

Custom database schema with full read/write access allows you to store data, run queries, perform custom calculations, and debug integrations across the RAP platform

-

Use advanced Oracle database features like Oracle Data Mining (ODM) and other machine-learning models

-

Use Notebooks in Data Studio to create custom Python scripts for analytics, data mining, or machine learning

-

Notebooks and APEX jobs can be scheduled to run automatically to refresh data and calculations

-

Create Restful API services both to request data from IW out to other systems and to consume non-Oracle data into the platform

-

Build flat file integrations into and out of IW for large data movements and custom dataset extensions

-

Build custom monitoring and utilities to manage integrations and science models with business IT processes

More details on Innovation Workbench features and examples of custom extensions can be found in the AI Foundation Implementation Guide chapter on Innovation Workbench.

Planning Applications Extensibility

Planning applications have a number of paths for extensibility. The configuration itself is extensively customizable in terms of business logic and rules, fact definition, data integration, and the user interface.

Provided that the application extensibility framework is followed, any customizations will be preserved in future patch and service upgrades.

Additionally, there is programmatic extensibility provided through the incorporation of PDS into Innovation Workbench. This allows the customer to extend the features provided by planning applications and build their own novel functionality. In addition, they can leverage the rich abilities and data already provided by IW.

Supported Application Configuration Customization

The following sections list the customizations that are allowed for application configuration. The following configuration components can be customized:

Note:

These customizations must be made through RPASCE Configuration Tools.-

Solution

-

Measures

-

Rules and Rule groups

-

Workbooks and worksheets

-

Hierarchy

-

Taskflow

-

Styles

For the customizations to be recognized, all names of custom-realized measures, rule sets, rule groups, rules, workbooks,

worksheets, and styles should begin with the prefix c_ or C_.

Custom worksheets may only be added into existing workbook tabs for plug-in generated solutions.

Rules for Customizing Hierarchy

The following hierarchy customizations can be made to the application configurations:

-

New hierarchies may be added, and/or new dimensions added to the existing hierarchy. However, no dimension may be added to a calendar hierarchy that is lower than “day”. Finally, no change can be made to internal hierarchies.

-

Changes are permitted to the labeling of existing hierarchies or dimensions.

-

All dimension and roll-up orders in the product, RHS product, location, and RHS location hierarchy must be preserved in the custom configuration.

Rules for Adding Measures

The following rules apply when adding measures to the application configurations:

-

Customers may add new custom measures into the custom solution and reference them as an external measure in the extensible solutions.

-

Customers can also add new custom metrics as a major component in the extensible solutions. It is strongly recommended to not mix custom metrics with application metrics.

-

Custom measures should follow the naming convention and should begin with a

C_orc_prefix. -

Only the published GA measures can be used in custom rules and custom workbooks. Only writable GA measures can be used on the left-hand side of a rule expression. The read-only GA measures can only be used on the right-hand side of the rule expression.

Publishing Measures

The published GA measures can be divided into the following categories:

Read only—can only be used on the right-hand side of the expression

Writable—can be used on both the left-hand side and right-hand side of the expression

RuleGroupOnlyWritable—a specific measure that can be read/written in the specified rule group

Loadable—measures that can be loaded using OAT and can be present in the custom load batch control file

WorkbookMeasureOverride—measures which property can be overridden in the associated workbook

ReadableExecutionSet—list of GA batch control execution set names that can be called from within a custom batch control execution file

The list of published measures will change based upon configuration. Therefore, the list is dynamically generated at each configuration regeneration.

The contents of the list are saved in a file named: publishedMeasures.properties.

The file is located under [config]/plugins. Before writing custom rules, regenerate your application configuration

and then open the file to search for published application measures.

Custom Measure Characteristics:

Sample Custom Measure

-

Each line of the file contains multiple fields that are pipe (“|”) separated.

-

The first field is one of the category names previously listed.

-

The second field is the name of the measure or execution set.

-

The third field is the measure label.

-

For

RuleGroupOnlyWritable, the fourth field is the rule group name. -

For

WorkbookMeasureOverride, the fourth field is the name of the workbook in which this measure is allowed to be overridden.

ReadOnly|PreSeaProf|Seasonal Profile

ReadOnly|activefcstitem01|Active Forecast Items

ReadOnly|activefcstitem07|Active Forecast ItemsGenerally, forecasting parameter overrides such as Forecast Method Override, Custom Exception, Custom Exception Metric, auxiliary inputs to applications such as Promotion Aggregation Profile, and Grouping Membership are writable because an implementer may set them up through customized rules.

Rules for Adding Custom Rules

The following rules apply when adding custom rules to the Application configuration:

-

Custom rule sets, rule groups and rule names should begin with the

C_orc_prefix. -

Custom rule groups should not include any GA rules.

-

Custom rules can use the published read-only GA measures listed in the

publishedMeasures.propertiesfile. However, the custom rules cannot modify the value of the read-only GA measure. Hence the read-only GA measure cannot appear on the LHS of a custom rule. -

Custom Rules can be added to custom rule group. They can also be added to the plug-in generated GA workbook rule groups such as load rule group, calc rule group, refresh rule group, commit rule group and custom menu rule. However, Custom Rules cannot be added to a plug-in generated batch rule group.

Rules for Workbooks and Worksheets Extensibility

The following rules apply when adding custom rules to the workbooks and worksheets extensibility:

-

New Custom workbook and worksheets names should begin with the

C_orc_prefix. -

Apart from the Custom Solution, custom workbooks can also be added to the extensible GA solutions.

Workbook Measure Override Extensibility

Certain GA measures can be overridden in the GA workbook. These measures are listed in the WorkbookMeasureOverride section of the published<app>Measures.properties file.

For example:

WorkbookMeasureOverride|<measure name>|<measure label>|<workbook template>

This indicates that the measure can be overridden in the workbook.

The following rules apply to override measure properties:

-

Base State and Agg State can be overridden.

-

Range property of static picklists can be overridden.

Note:

Options can only be removed; new options cannot be added.

Elapsed Lock Override

Elapsed Lock Override on RPASCE is supported in the following scenarios:

-

Custom measures in a workbook can have the Elapsed Lock Override set to

true. -

Custom workbooks can have this field set to

truefor GA measures that are in the Writable list of the published measures.Note:

If a GA measure has not been enabled as Elapsed Lock Override, the following steps can achieve the same behavior:

-

Make sure the GA measure is writable.

-

Register a custom measure and load it from the GA measure.

-

Set the custom measure as Elapsed Lock Override.

-

Edit the custom measure in the workbook.

-

Commit the custom measure back into the GA measure.

-

Rules for Adding Custom Real-time Alerts into Existing Workbooks

Perform the following steps when adding custom real-time alerts into existing workbooks.

Note:

These steps must be performed using RPASCE Configuration Tools. Copying, pasting or direct editing of XML files is unsupported.-

To add custom real-time alert into existing workbooks, all measures related to the custom real-time alert need to be added to the workbook.

-

Create a style for the custom real-time alert in the configuration.

-

Create a custom real-time alert in a workbook using the measures and style created from the previous steps.

-

If a real-time alert defined in custom solution will be used in a GA workbook, the real-time alert measure should be imported as an external measure in the corresponding GA solution.

-

We must ensure that the rule group consistency is maintained while adding any custom rules that might be needed to calculate an alert measure.

The application plug-in will preserve a custom real-time alert during regeneration

Adding a Custom Solution

A custom solution is a separate solution within the configuration. It can be used to accommodate custom workbooks, rules, alerts to do custom reporting, custom logic, and threshold alerts by using GA measures (based on the extensible GA measures in Table 9-1). In addition, measures and alerts defined in the custom solution can be plugged into existing workbooks in GA solution based on the contexts defined. Clients are allowed to create their own custom solutions by following the rules mentioned above. To use a GA measure in custom workbooks, the GA measure should be imported as an external measure in custom solution.

Adding Custom Styles

New styles can be added in the Style Definition window of Configuration Tools. The custom style name should be prefixed

with either c_ or C_. Style names that do not adhere to the naming convention will be caught

during the configuration validation. Any new style added will be retained during upgrades and patches.

Validating the Customized Configuration

A script, ra_config_validation.sh, has been provided to allow the customer or implementer to validate

that the customizations conform to the rules outlined above. For details of the script, refer to Configuration Validation.

This script can be run on Windows with the application starter kit. To do this, the implementer will need to make sure that they have a pristine copy of the GA configuration as well as the custom configuration.

For example, if the GA configuration has been copied to C:\Oracle\configurations\GA\RDF and the custom

configuration is in C:\Oracle\configurations\RDF, then the script can be called from a Cygwin zsh shell:

$RPAS_HOME/bin/ra_config_validation.sh -n RDF -d /cygdrive/c/Oracle/configurations -c /cygdrive/c/Oracle/configurations/GA/RDF/RDF.xmlSuccessful Run of the Validation Script

If all the validations pass, it will output the following message:

Message for Successful Run of Validation Script

09:04:47 : INFORMATION : ra_config_validation.sh[0] - ra_config_validation.sh completed.

09:04:47 : INFORMATION : ra_config_validation.sh[0] - Program completed successfully.

09:04:47 : INFORMATION : ra_config_validation.sh[0] - Exiting script with code: 0Unsuccessful Run of the Validation Script

If all the validations do not pass, it will output the following message:

Note:

The bold line shows where the details of the validation failure are in the log. (In the actual log, this line is not bold.)Message for Unsuccessful Run of Validation Script

09:15:12 : INFORMATION : ra_config_validation.sh[0] - For details of validation, look in '/cygdrive/d/retek/logs/2017-07-18/rdf_config_validation.091506.1/rdf_config_validation.log'.

09:15:12 : INFORMATION : ra_config_validation.sh[0] - _call executing command 'execplug-inTask.sh RDF:com.retek.labs.rdf.plug-in.installer.RDFConfigurationValidation /cygdrive/c/Oracle/configurations/GA/RDF/RDF.xml /cygdrive/c/Oracle/configurations RDF'

09:15:17 : INFORMATION : ra_config_validation.sh[0] - _call of command 'execplug-inTask.sh RDF:com.retek.labs.rdf.plug-in.installer.RDFConfigurationValidation /cygdrive/c/Oracle/configurations/GA/RDF/RDF.xml /cygdrive/c/Oracle/configurations RDF' complete

09:15:17 : ERROR : ra_config_validation.sh[0] - Nonzero exit status code.

09:15:17 : INFORMATION : ra_config_validation.sh[0] - Exiting script with code: 9Taskflow Extensibility

The application taskflow is extensible, the implementer can add custom taskflow components such as activities, tasks, steps,

tabs, and worksheets. Any custom taskflow component added to a GA taskflow component will be retained after plug-in automation.

As part of extensibility, applications provide a mechanism wherein the implementor can hide certain components of the GA configuration

and taskflow by editing a property file. The property file is a simple text file named extend_app.properties and is located inside the plug-in directory of the configuration. A sample file is included in the plug-ins directory of

the GA configuration for reference.

For example, <App>\plug-ins\extend_app.properties

The format of the file is shown as:

Stage|Component|Action|ValueAn example entry is:

Customization | Worksheet | Hide | activity_ni.task_niattmaint.NITREVSht1Each line consists of four fields separated by the | character. The value field can contain a comma-separated

list of values. Note that the value field should specify the fully qualified name of the taskflow component. Refer to the

sample file. Any line that begins with a # character is considered a comment line and is ignored.

The names of the Taskflow entities can be found in the taskflow.xml file located in the configuration

directory.

The various GA configuration components that can be hidden are listed in the following table:

| Component | Description |

|---|---|

|

Activity |

Hides the specified taskflow activity. The value field is the taskflow activity name. |

|

Task |

Hides the specified Taskflow task. The value field is the taskflow task name. |

|

Step |

Hides the specified Taskflow step. The value field is the taskflow step name. |

|

Tab |

Hides the specified Taskflow tab. The value field is the taskflow tab name. |

|

Worksheet |

Hides the specified worksheet. The value field is the worksheet name. |

|

Realtime Alert |

Hides the specified Real-time Alert. The value field is the real-time alert name. |

Customizing the Batch Process

This section describes how to customize the GA batch process to meet the business needs of the retailer. Details on the GA batch process are described in the Oracle Retail Demand Forecasting Administration Guide. The Configured Batch tasks have the following tasks related to batch control:

-

Retrieve Batch Control File – allows the current batch control files to be retrieved for inspection and modification.

-

Update Batch Control File – After inspecting the current batch control files, the implementor can edit the batch control files to customize the batch process.

Details on the previous two tasks are described in the Oracle Retail Predictive Application Server Cloud Edition Implementation Guide.

The application batch process is based on the RPASCE Enterprise Edition Batch Framework, which makes use of a set of control files. Table 9-1 lists the batch control files that can be customized. For detailed information on the RPASCE Batch Framework, refer to the Oracle Retail Predictive Application Server Implementation Guide.

Table 9-1 Customizable Batch Control Files

| Control File | Description |

|---|---|

|

batch_exec_list.txt |

This is the controller and entry point for all the other services, specifying groups of services to be run in a specific order. |

|

batch_calc_list.txt |

This control file groups all the calc services that need to run using mace. |

|

batch_refresh_list.txt |

This control file groups all Workbook refresh rule groups |

|

batch_loadmeas_list.txt |

This control file groups measures that need to be loaded into domain using the measure load service |

|

batch_exportmeas_list.txt |

This control file groups measures that need to be exported out of the domain using export measure service. |

|

batch_xform_list.txt |

This control file handles the transform file service to perform file transformations to support simple integration capabilities. |

|

batch_oat_list.txt |

This file lists the configured batch tasks that appear in the OAT drop down list. |

Custom Hooks and Boolean Scalar Measures for Flow Control

There are two ways to customize the batch control files:

Boolean Scalar Measures for Flow Control

The custom hooks are an optional batch set executed by GA batch control files. The implementer can define the contents of these batch sets in the customized batch control files that can be uploaded. If these hooks are not defined, then the batch process skips these hooks; otherwise, its contents are executed.

The application also defines a list of Boolean Scalar Measures in the domain to control whether certain GA-defined batch sets can be skipped or not. The following tables list the hooks and Boolean Scalar Measures.

Please refer to Application Specific Batch Control Information for details and examples.

Batch Control File Customization Guidelines

Follow these guidelines for Batch Control File Customization:

-

The file

batch_oat_list.txtis the only batch control file in which customers can overwrite the label for GA set names listed in OAT. -

For all other batch control files, avoid overwriting GA set names. GA batch control files have provided various hooks for the batch process. For additional custom steps, try to put them into the hooks.

-

GA batch control files have provided a mechanism to skip certain GA steps using boolean scalar measure that can be set in the domain.

-

For a GA hierarchy that is unused in your implementation (such as attribute hierarchy), provide an empty hierarchy file. For unused GA measures, there is no requirement to provide the data file. RPASCE will be able to skip it if no files are provided.

-

Do not remove any GA

clndhierarchy reorder steps; these steps are essential to the proper functioning of the application. -

For ease of maintenance, all custom batch set name or step names should be prefixed with

c_

Examples

The following is an example of custom batch_exec_list.txt, batch_calc_list.txt, batch_loadmeas_list.txt, and batch_exportmeas_list.txt.

In this example, the following

modification were added to the batch _weekly process:

-

Hierarchy and measure data file were unpacked.

-

Custom measures were loaded after GA measure load.

-

Outlier indicators for preprocessing were calculated use custom rules

-

Custom approval alerts were run after GA alerts and before approval

-

Promotion effects were exported after GA exports

Custom Batch Control Validation

The extensible / custom batch control files need to follow the guidelines previously to futureproof the retailer. That means the retailer should receive software updates without breaking the existing customizations. To ensure that the batch control file guidelines are adhered to, a batch control validation module has been added.

The ra_config_validation.sh script has an optional parameter -b <parent directory of batch

control files> which will validate the batch control files.

Batch control validation rules:

-

Apart from

batch_oat_list.txt, none of the set names in the other batch control files can be overridden. That is, GA set names cannot be used in custom batch control files. -

None of the custom batch control files can call the GA set names.

-

The

batch_calc_list.txtfile can only specify custom rule group names. Cannot specify expressions and GA rule group names. -

The

batch_loadmeas.txt_listfile can specify measures that are listed in the Loadable or Writable list of the published measures in thepublished<app>Measures.propertiesfile -

The

batch_exportmeas_list.txtfile can specify measures that are listed in the ReadOnly or Writable list of the published measures in thepublished<app>Measures.propertiesfile. -

All custom set names should have a prefix of

c_.

Note:

The batch control validation is called automatically during domain build or patch. It is also called when the batch control files are uploaded using the Upload Batch Control files from OAT.Dashboard Extensibility

Currently, RDF supports Dashboard Extensibility by allowing the Dashboard Settings configuration file to be customized. The other planning applications, such as MFP and AP, support customizing the dashboard, but these are not extensible (please refer to Customizing the MFP/AP Dashboard).

RDF Dashboard Extensibility

For detailed information on Dashboard components, please refer to the chapter, “Configuring Dashboards in RPASCE EE” in the Oracle Retail Predictive Application Server Cloud Edition (RPASCE) Configuration Tools User Guide.

As part of extensible dashboard, the following are supported:

-

Adding custom Metric and Exception profiles.

-

Adding a custom tile to GA Metric and Exception profiles.

-

Removing GA tiles and profiles.

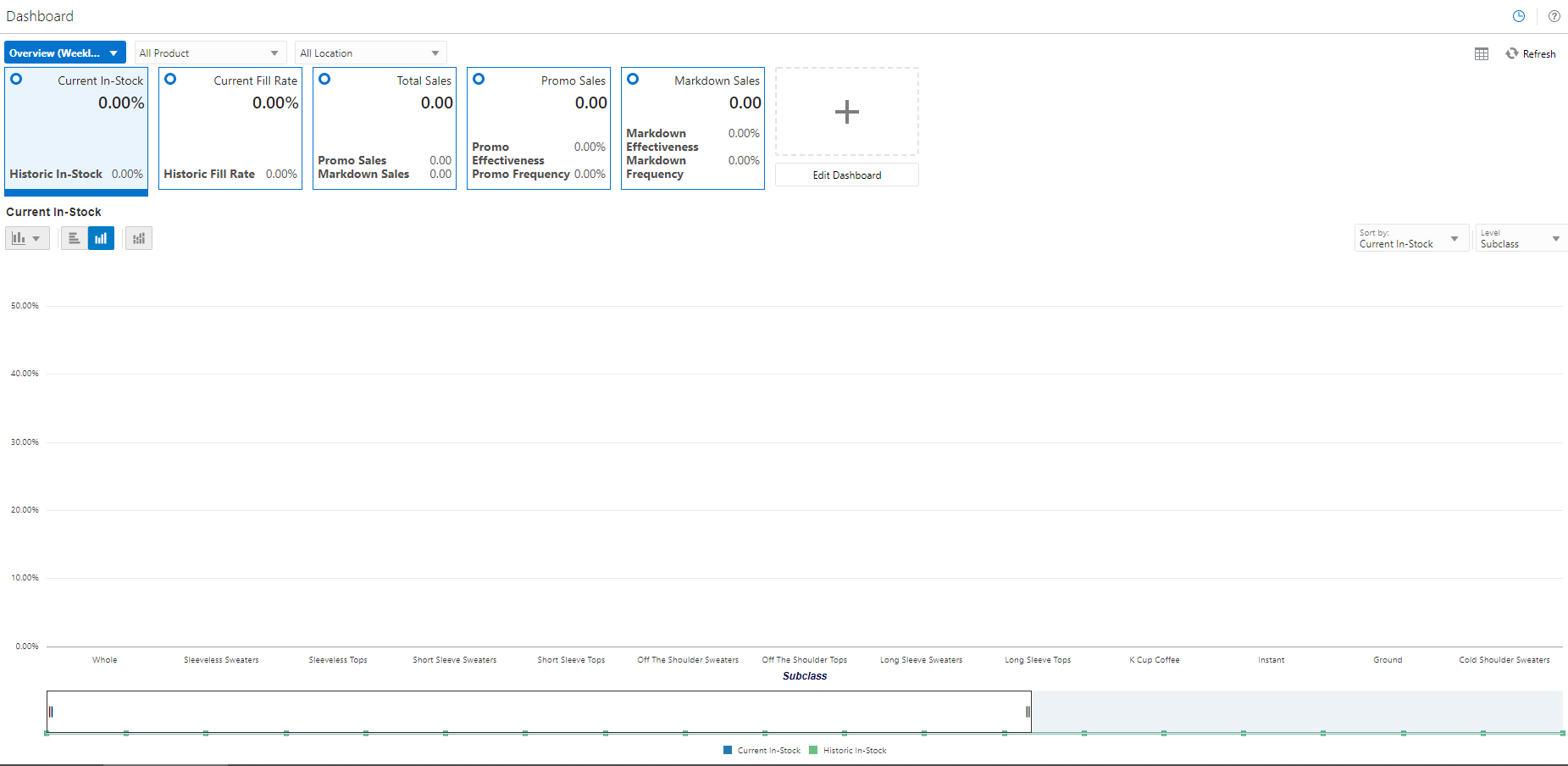

Figure 9-1 shows the RDF Dashboard as seen in the UI. It consists of two Metric profiles and two Exception profiles.

Figure 9-1 RDF CS Dashboard

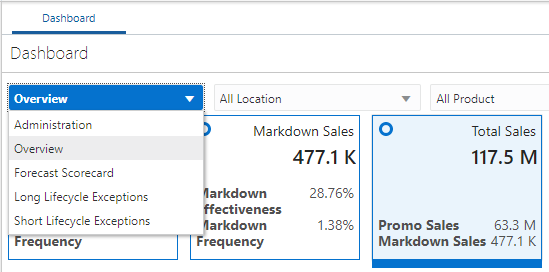

In Figure 9-2,the Overview Metric profile is selected, and the Total Sales tile is highlighted with two sub-measures: Promo Sales and Markdown Sales.

Figure 9-2 RDF CS Dashboard Selection

Note:

The Exception profiles consist of Exception Tiles, and the Metric Profile consists of metric tiles of the type Comparison Tile. Currently, RDF does not support the Variance Metric tile.Dashboard Intersection

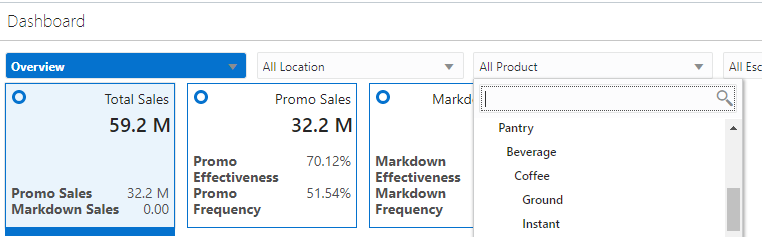

The RDF GA Dashboard workbook is built at the Sub-class, District level which is controlled by the Dashboard Intersection specified in the RDF plug-in. Refer to the "Configuring the RDF CS Solution" section in the Retail Demand Forecasting Cloud Service Implementation Guide. The Dashboard intersection also defines the level to which we can drill down the Product and Location filters in the Dashboard.

Figure 9-3 Product / Location Filters in the Dashboard

Process to Customize the Dashboard

Dashboard profiles correspond to a worksheet in the Dashboard workbook template in the configuration; and the measures displayed in the tiles are measures present in the worksheet corresponding to that profile. Customizing the dashboard is a three-step process:

In the Configuration, add the worksheet, measures, and rules to the Dashboard workbook template.

Regenerate the configuration by running the plug-in automation and then validate the configuration by running the ra_config_validation.sh script. Refer to the section, Validating the Customized Configuration for more information.

Customize the GA Dashboard Settings file in the Deployment Tool.

Note:

The Deployment Tool is a utility within the Configuration Tools. Refer to the section, Deployment Tool – Dashboard Settings Resource in the Oracle Retail Predictive Application Server Cloud Edition (RPASCE) Configuration Tools User Guide.The RDF GA Dashboard Settings configuration file is found within the configuration: RDF\plugins\dashboardSettings.json

Steps to add a custom profile:

-

In the Configuration Tool, add custom worksheet and measures to the worksheet in the dashboard workbook template in the configuration. Also add load/calc rules for the measures.

-

In the Deployment Tool, open the GA Dashboard Settings configuration file.

-

Add the custom profile (Exception or Metric) to the Dashboard Settings configuration file.

-

Save the file in the Deployment Tool.

Steps to add a custom tile:

-

Identify the profile and worksheet to which the custom tiles need to be added.

-

In the Configuration Tool, add the custom measures to the corresponding worksheet. Also add load/calc rules for the measures.

-

In the Deployment Tool, open the GA Dashboard Settings configuration file.

-

Based on whether Exception or Metric profile, add the Exception tile or Comparison Metric Tile.

-

Save the file in the Deployment Tool.

Steps to remove GA tiles and profiles:

Note:

Do not remove the GA measures or worksheet from the Dashboard workbook template in the configuration.-

In the Deployment Tool, open the GA Dashboard Settings configuration file.

-

Delete the GA profile or tile.

-

Save the file in the Deployment Tool.

Save the Dashboard Settings Configuration file in the same location in the configuration, that is: RDF\plugins\dashboardSettings.json. Because this file is stored inside the configuration, whenever the customer uploads the configuration to the Object

Store, the customized Dashboard Configuration file will be used by the application during the domain build or patch process.

Once the domain is built or patched, if minor changes need to be made to the Dashboard that do not require a configuration change, then RPASCE provides a mechanism to Upload and Retrieve JSON files from the application.

This is supported through the Configured Batch OAT task -> Manage JSON File option. Refer to the Oracle Retail Predictive Application Server Cloud Edition (RPASCE) Administration Guide for detailed information on the OAT tasks.

Steps to Retrieve/Upload the Dashboard Configuration File:

-

Go to the Configured Batch OAT task -> Manage JSON Files -> Retrieve option.

-

The dashboard settings file will be downloaded into the Object Store as

RDF_json.tar.gz -

Un-tar the file and open it in the Deployment Tools.

-

Edit the file. Note that only minor updates that do not require a configuration change can be made at this time.

-

Save the file and zip it up as

RDF_json.tar.gzand then upload it to the Object Store -

Then go to the Configured Batch OAT task -> Manage JSON Files -> Upload option.

-

Log out and log in to the client.

-

The Dashboard should be updated with the changes

Customizing the MFP/AP Dashboard

The application Dashboard gets data from the regular dashboard workbook template like any other workbook segments to define

the measures used in metric tiles that are shown in the dashboard. This Dashboard can be customized using the same extensibility

rules for regular workbooks for adding new measures into that dashboard workbook (pl_db). The customer can

then update the MFP/AP Dashboard JSON file to include the newly added custom measures to show as tiles in the Dashboard.

Following are the steps for customizing the Dashboard:

-

Update application Configuration to include the required new custom measures and rules to include those measures in the existing dashboard template (

pl_db) in the application Configuration within regular extensibility framework. Patch the domain with the new updated configuration. -

Download the application dashboard JSON file (

dashboardSettings.json) from the Starter kit or directly from the customer-provisioned environment by running the Online Administration Tools task Patch Application Task -> Manage JSON Files -> Retrieve JSON files to Object Storage. This will download the JSON file into the Object Storage location atoutgoing/dashboardSettings.json. -

Open the downloaded dashboard JSON file using the RPASCE Configuration Tools -> Utilities -> Deployment Tool and selecting the Open option under

dashdoardSettings.json. -

It should open the dashboard JSON file in edit mode. The customer can then edit the dashboard to add the newly added measures into their required profiles. They can also add new profiles or change profiles but can only use the measures available in the dashboard workbook. For more information on working with the JSON file using RPASCE Configuration Tools, see the Oracle Retail Predictive Application Server Cloud Edition Configuration Tools User Guide.

-

Once the JSON file is updated, it can be uploaded into the MFP environment by uploading the file to the Object Storage location as

incoming/config/dashboardSettings.json, and running the Online Administration Tool task Patch Application Task -> Manage JSON Files > Update JSON files from Object Storage. Successful completion of the task will copy the file to the required location under the application domain. -

After uploading, rebuild the dashboard to view the updated dashboard.

-

The entire process can be validated in the Virtual machine before trying to upload the completed JSON file into the customer environment.

RAP Integration Interface Extensibility

interface.cfg is a PDS configuration file that defines the bidirectional exchange of hierarchy and fact

data between AIF and PDS, through RDX. For detailed information about the interface configuration file, please refer to the Oracle Retail Predictive Application Server Cloud Edition Implementation Guide.

The extensibility of interface.cfg is supported provided that the below guidelines are followed.

Note:

The permissible and restricted interface customization is published in the filepublishedMeasures.properties located in the [config]/plugins directory.

| Type | Rule | Comments/Sample Entries |

|---|---|---|

|

Hierarchy |

A new hierarchy interface can be defined provided the table already exists in RDX. |

|

|

Fact |

A new fact interface can be defined provided the table already exists in RDX. |

Note that the interface parameter (second field) must be different than the GA interface. |

|

Views |

Custom views cannot be defined. GA interfaces that are views can be customized. |

|

|

Hierarchy |

The dimension mapping for a GA hierarchy interface can be modified, provided it is allowed in the published property file. |

|

|

Hierarchy |

Custom dimensions can be added to a GA interface and mapped to existing RDX fields. |

For

example, if adding custom dimension 'area' to the

Note that custom dimensions should have IDs >= 50 |

|

Hierarchy |

Custom (New) hierarchy can be added, provided table exists in RDX.. |

Note that custom dimensions should have IDs >= 50 |

|

Facts (Import) |

Existing GA facts can be imported from a different source field (controlled by an extensibility property file). |

GA entry:

Custom entry:

|

|

Facts (Import) |

Custom facts can import from existing fields from a source table in RDX. |

|

|

Facts (Export) |

GA facts can be exported to another external field alongside the GA entry. |

|

|

Facts (Export) |

The external field can be populated by a different fact. Delete the GA entry and add the custom entry. |

|

|

Facts (default value) |

The default value can be customized for only facts allowed in property file. |

|

|

InterfaceFilters (Allow) |

Filters for an interface can be customized, provided it is allowed and not restricted in the property file. Note that the GA filter entry needs to be marked as deleted and then a custom entry added. |

means the above interfaces can have the filters customized. |

|

InterfaceFilters (Restrict) |

Although an interface is allowed for filters to be customized, some filters can be marked as restricted and cannot be customized. |

In this case, the |

Note:

-

Any customization in the

interface.cfgfile should be marked with acustom_???keyword in the 7th field. -

Note the keywords used to extend the GA

interface.cfg:-

custom_mod: to indicate an existing GA entry is being modified -

custom_del: to indicate an existing GA entry is being removed -

custom_add: to indicate a custom entry is being added.

-

-

If we are deleting and adding an entry, make sure they are consecutive entries.

Validations for a custom interface.cfg file:

-

Custom entries cannot have more than seven (7) fields.

-

Filter entries can only have

custom_addorcustom_delkeywords. -

Entries cannot be deleted from the Hierarchy interface. Therefore,

custom_delentries are not valid for the Hierarchy interface. -

Dimensions specified in the Hierarchy interface must be valid dimensions in the configuration.

-

Custom dimensions added to the Hierarchy interface should have a dimension ID greater than or equal to 50.

-

For Fact/Data interfaces, the dimensions/IDs cannot be modified.

-

Only Hierarchy dimensions published in the property file can be modified.

-

Only Interface and Facts published in the property file can be modified.

-

Only Interface Filters published and not restricted in the property file can be edited.

Follow this process to update the interface.cfg file:

-

Download the Application interface configuration from OAT

-

Update the

interface.cfgusing the previously listed guidelines. -

Upload the updated

interface.cfgto object store and then patch or build the application.

Application Specific Batch Control Information

Below sections describes Batch Control details that are specific to RDF:

Table 9-2 Custom Hooks

| Hook | Description |

|---|---|

|

hook_calc_attb_CF_ |

This hook is executed right after GA attributes exception

|

|

hook_frcst_adjust_CF_ |

This hook is provided to add custom forecast adjustment calculations. This hook is before the business rule group related calculation, approval, and navigation logic.

|

|

hook_frcst_alert_CF_ |

This hook is provided to merge the user specified parameters associated with approval business rule group before running exceptions. After merging the user specified parameters, the custom approval exceptions and exception metric should be executed.

|

|

hook_frcst_approval_CF_ |

This hook is provided to perform any post-processing to approval forecast after GA approval step.

|

|

hook_navi_attb_CF_ |

This hook is provided so that implementor can calculate the custom calculated attributes used in the navigation business rule groups.

|

|

hook_populate_aprvrulg_eligiblemask_CF_ |

This hook is for populate rulgeligmask_CF measure using custom logic. This measure is the eligible mask at sku/store/rulegroup. It can be populated with custom logic to calculate eligible items for approval business rule groups.

|

|

hook_post_export |

This hook is after export. |

|

hook_post_forecast |

This hook is between forecast and export. |

|

hook_post_preprocess |

This hook is after the preprocessing phase and before generating the forecasts. |

|

hook_pre_forecast |

This hook is after New Item calculation and before the forecast generation step. |

|

hook_pre_post_data_load |

This hook is between GA measure load and |

|

hook_RDF_COM_DATA_IMP_OBS_D hook_RDF_COM_DATA_IMP_OBS_W hook_RDF_COM_DATA_IMP_RDX_D hook_RDF_COM_DATA_IMP_RDX_W |

This hook is for the calling steps using any import of common data interfaces. |

|

hook_RDF_COM_HIER_IMP_OBS_D hook_RDF_COM_HIER_IMP_OBS_W hook_RDF_COM_HIER_IMP_RDX_D hook_RDF_COM_HIER_IMP_RDX_W |

This hook is for the calling steps using any import of common hierarchies. |

|

hook_RDF_HIER_IMP_OBS_D hook_RDF_HIER_IMP_OBS_W hook_RDF_HIER_IMP_RDX_D hook_RDF_HIER_IMP_RDX_W |

This hook is for the calling steps using any import of application-specific hierarchies. |

|

hook_RDF_INIT_EXP_OBS_D hook_RDF_INIT_EXP_OBS_W hook_RDF_INIT_EXP_RDX_D hook_RDF_INIT_EXP_RDX_W |

This hook is for calling steps for initial batch exports. |

|

hook_RDF_POST_BATCH_D hook_RDF_POST_BATCH_W |

This hook is for calling steps after the batch has run. |

|

hook_RDF_POST_DATA_IMP_OBS_D hook_RDF_POST_DATA_IMP_OBS_W hook_RDF_POST_DATA_IMP_RDX_D hook_RDF_POST_DATA_IMP_RDX_W |

This hook is for the calling steps using any import of application-specific data interfaces after the calc steps. |

|

hook_RDF_POST_EXP_OBS_D hook_RDF_POST_EXP_OBS_W hook_RDF_POST_EXP_RDX_D hook_RDF_POST_EXP_RDX_W |

This hook is for the calling steps using any exports after the batch aggregations. |

|

hook_RDF_PRE_BATCH_D hook_RDF_PRE_BATCH_W |

This hook is for calling steps prior to the batch being run. |

|

hook_RDF_PRE_DATA_IMP_OBS_D hook_RDF_PRE_DATA_IMP_OBS_W hook_RDF_PRE_DATA_IMP_RDX_D hook_RDF_PRE_DATA_IMP_RDX_W |

This hook is for the calling steps using any import of application-specific data interfaces. |

|

hook_RDF_PRE_EXP_OBS_D hook_RDF_PRE_EXP_OBS_W hook_RDF_PRE_EXP_RDX_D hook_RDF_PRE_EXP_RDX_W |

This hook is for calling steps prior to exports. |

|

hook_RDF_WB_BUILD_D hook_RDF_WB_BUILD_W |

This hook is for the calling steps specific to workbook refresh or build. |

Table 9-3 Boolean Scalar Measures

| Boolean Scalar Measure | Description |

|---|---|

|

appfalrton_CF_ |

This measure is set by the plug-in only.

|

|

cslpeakalrton_CF_ |

This measure is set by the plug-in only.

|

|

flysalrton_CF_ |

This measure is set by the plug-in only.

|

|

fralrton_CF_ |

This measure is set by the plug-in only.

|

|

runnewitembatch |

This measure is defaulted to true. Set it to false if new item is not configured or user would like to skip new item batch for pre-forecast batch. |

|

runfrcst_CF_ |

This measure is defaulted to true. Set it to false if customer would like to avoid running forecast on certain final level.

|

|

runnewitem_CF_ |

This measure is defaulted to true. Set it to false if customer would like to avoid incorporate new item forecast on certain final level.

|

|

runrulgeligga_CF_ |

This measure is defaulted to false. If enabled, this makes sure that the only forecastable items are handled by the business rule engine.

|

Batch Control Samples

The following sections list samples of batch control processes.

batch_exec_list.txt

# unpack data file before data load

hook_pre_load | unpack | rdf_hier.tar.gz

hook_pre_load | unpack | rdf_meas.tar.gz# load custom measures after GA hier and measure load

hook_pre_post_data_load | measload | c_weeklyLoad# calculate outlier indicator used in preprocess using custom rules

hook_ppsindicator | calc | c_outlier_calc# calculate custom approval alerts after GA approval alerts

hook_frcst_alert07 | exec | c_calc_cust_alerts# custom export

hook_post_export | measexport | c_export_promoeffects

c_calc_cust_alerts | calc |c_custalert1

c_calc_cust_alerts | calc |c_custalert2batch_calc_list.txt

#outlier calculation

c_outlier_calc | G | GROUP | c_HBICalcTodayIdx

c_outlier_calc | G | GROUP | c_dataprocess

c_outlier_calc | G | GROUP | c_calc_outlier#custom approval alerts calculation

c_custalert1 | G | GROUP | c_custalert1

c_custalert2 | G | GROUP | c_custalert2batch_loadmeas_list.txt

# load custom measure

c_weeklyLoad | M | c_ActiveItem

c_weeklyLoad | M | c_DisContinuebatch_exportmeas_list.txt

# export custom measure

c_export_promoeffects|O|promoeffects.csv.dat

c_export_promoeffects|X|storsku_lprm

c_export_promoeffects|F|c_ExportMask

c_export_promoeffects|S|ftp

c_export_promoeffects|M|prmbldeff07Below sections describe Batch Control details that are specific to MFP:

The following table describes the Custom Hooks available in the batch process if the customer is scheduling jobs directly through the OAT.

Table 9-4 Custom Hooks in the Batch Process to Directly Run from OAT

| Hook | Description |

|---|---|

|

hook_postbuild |

This hook is added at the end of the |

|

hook_postpatch |

This hook is added at the end of the service patch process, which runs after the service patch. |

|

hook_batch_daily_pre |

This hook is added before the daily batch process. |

|

hook_batch_daily_post |

This hook is added at the end of the daily batch process before the dashboard build. |

|

hook_batch_weekly_pre |

This hook is added before the weekly batch process. |

|

hook_batch_weekly_post |

This hook is added at the end of the weekly batch process before the workbook refresh and segment build. |

If the customer is using the JOS/POM flow schedule to schedule jobs in MFP, then the following hooks can be used.

The MFP JOS/POM job flow is connected to use the same set names, like the hooks shown in the following table without hook_* in it and in turn calls each of the corresponding hooks. So the customer can easily customize their MFP batch

flow based on their needs by simply changing the hooks or adding additional steps to the existing, pre-configured hooks.

The naming convention followed is:

-

_RDXis used for any integration step using RDX. -

_OBSis used for any steps using Object Storage. -

_Dis for jobs that run daily. -

_Wis for jobs that run weekly.

Table 9-5 Custom Hooks in the Batch Process if JOS/POM is Used to Schedule the Flow

| Hook | Description |

|---|---|

|

hook_MFP_PRE_EXP_RDX_D |

This hook is for the calling steps using the Daily Export Interfaces to RDX as soon as the batch starts. |

|

hook_MFP_PRE_EXP_OBS_D |

This hook is for the calling steps using the Daily Export Interfaces to Object Storage as soon as the batch starts. |

|

hook_MFP_PRE_EXP_RDX_W |

This hook is for calling steps using the Weekly Export Interfaces to RDX as soon as the batch starts. |

|

hook_MFP_PRE_EXP_OBS_W |

This hook is for the calling steps using the Weekly Export Interfaces to Object Storage as soon as the batch starts. |

|

hook_MFP_COM_HIER_IM P_RDX_D |

This hook is for the calling steps using any Daily Import of common hierarchies from RDX. |

|

hook_MFP_COM_HIER_IM P_OBS_D |

This hook is for the calling steps using any Daily Import of common hierarchies from Object Storage. |

|

hook_MFP_COM_HIER_IM P_RDX_W |

This hook is for the calling steps using any Weekly Import of common hierarchies from RDX. |

|

hook_MFP_COM_HIER_IM P_OBS_W |

This hook is for the calling steps using any Weekly Import of common hierarchies from Object Storage. |

|

hook_MFP_COM_DATA_IM P_RDX_D |

This hook is for the calling steps using any Daily Import of common data interfaces from RDX. |

|

hook_MFP_COM_DATA_IM P_OBS_D |

This hook is for the calling steps using any Daily Import of common data interfaces from Object Storage. |

|

hook_MFP_COM_DATA_IM P_RDX_W |

This hook is for the calling steps using any Weekly Import of common data interfaces from RDX. |

|

hook_MFP_COM_DATA_IM P_OBS_W |

This hook is for the calling steps using any Weekly Import of common data interfaces from Object Storage. |

|

hook_MFP_HIER_IMP_RD X_D |

This hook is for the calling steps using any Daily Import of application-specific hierarchies from RDX. |

|

hook_MFP_HIER_IMP_OB S_D |

This hook is for the calling steps using any Daily Import of application-specific hierarchies from Object Storage. |

|

hook_MFP_HIER_IMP_RD X_W |

This hook is for the calling steps using any Weekly Import of application-specific hierarchies from RDX. |

|

hook_MFP_HIER_IMP_OB S_W |

This hook is for the calling steps using any Weekly Import of application-specific hierarchies from Object Storage. |

|

hook_MFP_PRE_DATA_IMP_RDX_D |

This hook is for the calling steps using any Daily Import of application-specific data interfaces from RDX. |

|

hook_MFP_PRE_DATA_IMP_OBS_D |

This hook is for the calling steps using any Daily Import of application-specific data interfaces from Object Storage. |

|

hook_MFP_PRE_DATA_IMP_RDX_W |

This hook is for the calling steps using any Weekly Import of application-specific data interfaces from RDX. |

|

hook_MFP_PRE_DATA_IMP_OBS_W |

This hook is for the calling steps using any Weekly Import of application-specific data interfaces from Object Storage. |

|

hook_MFP_BATCH_AGG_D |

This hook is for the calling steps doing any regular daily batch aggregation after hierarchy and data loads. |

|

hook_MFP_BATCH_AGG_ W |

This hook is for the calling steps doing any regular weekly batch aggregation after hierarchy and data loads. |

|

hook_MFP_POST_DATA_IM P_RDX_D |

This hook is for the calling steps using any Daily Import of application-specific data interfaces from RDX after the calc steps. |

|

hook_MFP_POST_DATA_IM P_OBS_D |

This hook is for the calling steps using any Daily Import of application-specific data interfaces from Object Storage after the calc steps. |

|

hook_MFP_POST_DATA_IM P_RDX_W |

This hook is for the calling steps using any Weekly Import of application-specific data interfaces from RDX after the calc steps. |

|

hook_MFP_POST_DATA_IM P_OBS_W |

This hook is for the calling steps using any Weekly Import of application-specific data interfaces from Object Storage after the calc steps. |

|

hook_MFP_POST_EXP_RD X_D |

This hook is for the calling steps using any Daily Exports to RDX after the batch aggs. |

|

hook_MFP_POST_EXP_OB S_D |

This hook is for the calling steps using any Daily Exports to Object Storage after the batch aggs. |

|

hook_MFP_POST_EXP_RD X_W |

This hook is for the calling steps using any Weekly Exports to RDX after the batch aggs. |

|

hook_MFP_POST_EXP_OB S_W |

This hook is for the calling steps using any Weekly Exports to Object Storage after the batch aggs. |

|

hook_MFP_WB_BUILD_D |

This hook is for the calling steps specific to workbook refresh or build in the daily cycle. |

|

hook_MFP_WB_BUILD_W |

This hook is for the calling steps specific to workbook refresh or build in the weekly cycle. |

Boolean Scalar Measures for Flow Control

The following table describes the Boolean Scalar measures.

Table 9-6 Boolean Scalar Measures

| Boolean Scalar Measure | Description |

|---|---|

|

drdvrmsb |

This measure is defaulted to true. Set it to true if MFP is integrated with RMF CS. |

|

drdvrdxb |

This measure is defaulted to false. Set it to true enable RAP integration for hierarchy and transaction data. |

|

drdvexpdb |

This measure is defaulted to true. If set to false, it will skip exporting the standard exports in the daily batch. |

|

drdvexpwb |

This measure is defaulted to true. If set to false, it will skip exporting the standard exports in the weekly batch. |

Batch Control Samples

The following sections show samples of the batch control processes.

batch_exec_list.txt

# Load a custom hierarchy, measure before weekly

batch hook_batch_weekly_pre |hierload |suph~0~N

hook_batch_weekly_pre |measload |c_load_vndr

# Run Batch calc and new custom exports after end of weekly batch

hook_batch_weekly_post |calc |c_calc_vndr

hook_batch_weekly_post |exportmeasure |c_exp_vndrbatch_calc_list.txt

# Run newly added custom calc rule group in

batch c_calc_vndr | G | GROUP | c_batch_agg_vndrbatch_loadmeas.txt

# Load custom measure

c_load_vndr | M |c_drtyvndrfndrbatch_exportmeas.txt

# Export custom measure

c_exp_vndr|O|vendo_plan.csv.dat

c_exp_vndr|X|storsclsweek

c_exp_vndr|F|c_exportmask

c_exp_vndr|M|c_mpcpvndrplanBelow sections describes Batch Control details that are specific to AP:

The following table describes the Custom Hooks available in the batch process.

Table 9-7 Custom Hooks in the Batch Process

| Hook | Description |

|---|---|

|

hook_postbuild_pre |

This hook is added at the beginning of the postbuild batch which runs after the initial domain build. |

|

hook_postbuild_post |

This hook is added at the end of the postbuild batch which runs after the initial domain build. |

|

hook_postpatch |

This hook is added at the end of the service patch process which runs after the service patch. |

|

hook_batch_daily_pre |

This hook is added before the daily batch process. |

|

hook_batch_daily_post |

This hook is added at the end of daily batch process before the dashboard build. |

|

hook_batch_weekly_pre |

This hook is added before the weekly batch process. |

|

hook_batch_weekly_post |

This hook is added at the end of the weekly batch process before the workbook refresh and segment build. |

If the customer is using the JOS/POM flow schedule to schedule jobs in AP, then the following hooks can be used.

The AP JOS/POM job flow is connected to use the same set names similar to the hooks shown in the following table without hook_* in it and in turn calls each of the corresponding hooks. So the customer can easily customize their AP batch

flow based on their needs by simply changing the hooks or adding additional steps to the existing pre-configured hooks.

The naming convention followed is:

-

_RDXthat is used for any integration step using RDX. -

_OBSis used for any steps using Object Storage. -

_Dis for jobs that runs daily. -

_Wis for jobs that runs weekly.

Table 9-8 Custom Hooks in the Batch Process

| Hook | Description |

|---|---|

|

hook_AP_PRE_EXP_RDX_D |

This hook is for the calling steps using the Daily Export Interfaces to RDX as soon as the batch starts. |

|

hook_AP_PRE_EXP_OBS_D |

This hook is for the calling steps using the Daily Export Interfaces to Object Storage as soon as the batch starts. |

|

hook_AP_PRE_EXP_RDX_W |

This hook is for calling steps using the Weekly Export Interfaces to RDX as soon as the batch starts. |

|

hook_AP_PRE_EXP_OBS_W |

This hook is for the calling steps using the Weekly Export Interfaces to Object Storage as soon as the batch starts. |

|

hook_AP_COM_HIER_IMP_RDX_D |

This hook is for the calling steps using any Daily Import of common hierarchies from RDX. |

|

hook_AP_COM_HIER_IMP_OBS_D |

This hook is for the calling steps using any Daily Import of common hierarchies from Object Storage. |

|

hook_AP_COM_HIER_IMP_RDX_W |

This hook is for the calling steps using any Weekly Import of common hierarchies from RDX. |

|

hook_AP_COM_HIER_IMP_OBS_W |

This hook is for the calling steps using any Weekly Import of common hierarchies from Object Storage. |

|

hook_AP_COM_DATA_IMP_RDX_D |

This hook is for the calling steps using any Daily Import of common data interfaces from RDX. |

|

hook_AP_COM_DATA_IMP_OBS_D |

This hook is for the calling steps using any Daily Import of common data interfaces from Object Storage. |

|

hook_AP_COM_DATA_IMP_RDX_W |

This hook is for the calling steps using any Weekly Import of common data interfaces from RDX. |

|

hook_AP_COM_DATA_IMP_OBS_W |

This hook is for the calling steps using any Weekly Import of common data interfaces from Object Storage. |

|

hook_AP_HIER_IMP_RDX_D |

This hook is for the calling steps using any Daily Import of application-specific hierarchies from RDX. |

|

hook_AP_HIER_IMP_OBS_D |

This hook is for the calling steps using any Daily Import of application-specific hierarchies from Object Storage. |

|

hook_AP_HIER_IMP_RDX_W |

This hook is for the calling steps using any Weekly Import of application-specific hierarchies from RDX. |

|

hook_AP_HIER_IMP_OBS_W |

This hook is for the calling steps using any Weekly Import of application-specific hierarchies from Object Storage. |

|

hook_AP_PRE_DATA_IMP_RDX_D |

This hook is for the calling steps using any Daily Import of application-specific data interfaces from RDX. |

|

hook_AP_PRE_DATA_IMP_OBS_D |

This hook is for the calling steps using any Daily Import of application-specific data interfaces from Object Storage. |

|

hook_AP_PRE_DATA_IMP_RDX_W |

This hook is for the calling steps using any Weekly Import of application-specific data interfaces from RDX. |

|

hook_AP_PRE_DATA_IMP_OBS_W |

This hook is for the calling steps using any Weekly Import of application-specific data interfaces from Object Storage. |

|

hook_AP_BATCH_AGG_D |

This hook is for the calling steps doing any regular daily batch aggregation after hierarchy and data loads. |

|

hook_AP_BATCH_AGG_W |

This hook is for the calling steps doing any regular weekly batch aggregation after hierarchy and data loads. |

|

hook_AP_POST_DATA_IMP_RDX_D |

This hook is for the calling steps using any Daily Import of application-specific data interfaces from RDX after the calc steps. |

|

hook_AP_POST_DATA_IMP_OBS_D |

This hook is for the calling steps using any Daily Import of application-specific data interfaces from Object Storage after the calc steps. |

|

hook_AP_POST_DATA_IMP_RDX_W |

This hook is for the calling steps using any Weekly Import of application-specific data interfaces from RDX after the calc steps. |

|

hook_AP_POST_DATA_IMP_OBS_W |

This hook is for the calling steps using any Weekly Import of application-specific data interfaces from Object Storage after the calc steps. |

|

hook_AP_POST_EXP_RDX_D |

This hook is for the calling steps using any Daily Exports to RDX after the batch aggs. |

|

hook_AP_POST_EXP_OBS_D |

This hook is for the calling steps using any Daily Exports to Object Storage after the batch aggs. |

|

hook_AP_POST_EXP_RDX_W |

This hook is for the calling steps using any Weekly Exports to RDX after the batch aggs. |

|

hook_AP_POST_EXP_OBS_W |

This hook is for the calling steps using any Weekly Exports to Object Storage after the batch aggs. |

|

hook_AP_WB_BUILD_D |

This hook is for the calling steps specific to workbook refresh or build in the daily cycle. |

|

hook_AP_WB_BUILD_W |

This hook is for the calling steps specific to workbook refresh or build in the weekly cycle. |

Boolean Scalar Measures for Flow Control

The following table describes the Boolean Scalar measures.

Table 9-9 Boolean Scalar Measures

| Boolean Scalar Measure | Description |

|---|---|

|

drdvrmsb |

This measure is defaulted to true. Set it to true if AP is integrated with RMF CS. |

|

drdvexpdb |

This measure is defaulted to true. If set to false, it will skip exporting the standard exports in the daily batch. |

|

drdvexpwb |

This measure is defaulted to true. If set to false, it will skip exporting the standard exports in the weekly batch. |

Batch Control Samples

The following sections show samples of the batch control processes.

batch_exec_list.txt

# Load a custom hierarchy, measure before weekly batch

hook_batch_weekly_pre |hierload |suph~0~N

hook_batch_weekly_pre |measload |c_load_vndr

# Run Batch calc and new custom exports after end of weekly batch

hook_batch_weekly_post |calc |c_calc_vndr

hook_batch_weekly_post |exportmeasure |c_exp_vndrbatch_calc_list.txt

# Run newly added custom calc rule group in batch

c_calc_vndr | G | GROUP | c_batch_agg_vndrbatch_loadmeas.txt

# Load custom measure

c_load_vndr | M |c_drtyvndrfndrbatch_exportmeas.txt

# Export custom measure

c_exp_vndr|O|vendo_plan.csv.dat

c_exp_vndr|X|storsclsweek

c_exp_vndr|F|c_expmask

c_exp_vndr|M|c_mpcpvndrpln

Programmatic Extensibility of RPASCE Through Innovation Workbench

Innovation Workbench is the first choice for programmatic extensibility of RAP applications.

This section describes how Innovation Workbench (IW) can be used to extend RPASCE.

IW provides the ability for customers to upload and use custom PL/SQL functions and procedures within the RPASCE framework. These customer-supplied PL/SQL functions and procedures will, by default, have read access to all the metadata tables and fact data that is present across different RPASCE applications within PDS, such as MFP, RDF, AP, and so on.

Facts that are marked as customer-managed in the application configuration will additionally have write access and can be modified by the customer-supplied code. The ability to change fact data is a deliberate opt-in, as any data modification made by the custom PL/SQL must conform to the RPASCE norms to be successful.

RPASCE provides a helper PL/SQL package: rp_g_rpas_helper_pkg. This is the package that the custom PL/SQL

functions and procedures should use. These will help simplify commonly used tasks, such as looking up the fact table name

for a fact or finding the NA value of a FACT.

Finally, the applications will be able to invoke the custom PL/SQL functions and procedures from within the RPASCE rules

and expressions framework using the new special expression execplsql.

Architectural Overview

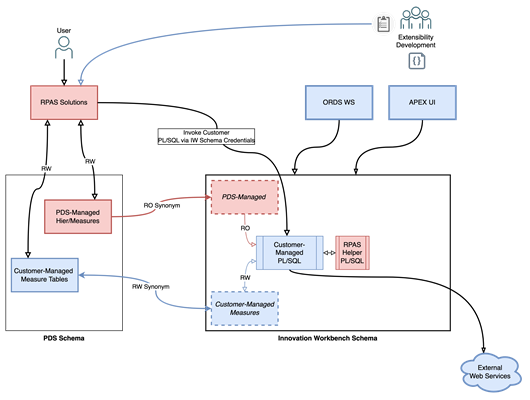

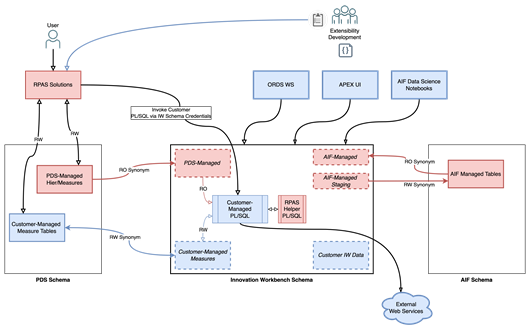

The figures in this section describe how the IW schema fits into the PDS and RAP contexts respectively.

Innovation Workbench from an RPASCE Context

The figure below shows a high-level architecture diagram of the IW schema in an RPASCE context. The interaction between the PDS schema and IW schema and how users interact with the IW schema is captured in this figure.

Innovation Workbench from a RAP Context

The figure below depicts how the IW schema fits into the larger RAP context, including AIF. Within the IW schema, the customer has access to data from both AIF and PDS configurations, which allows for the development of innovative extensions. These can be invoked from RPASCE, APEX, Data Studio notebooks, or an external context through ORDS web services.

RPASCE Configuration Tools Additions

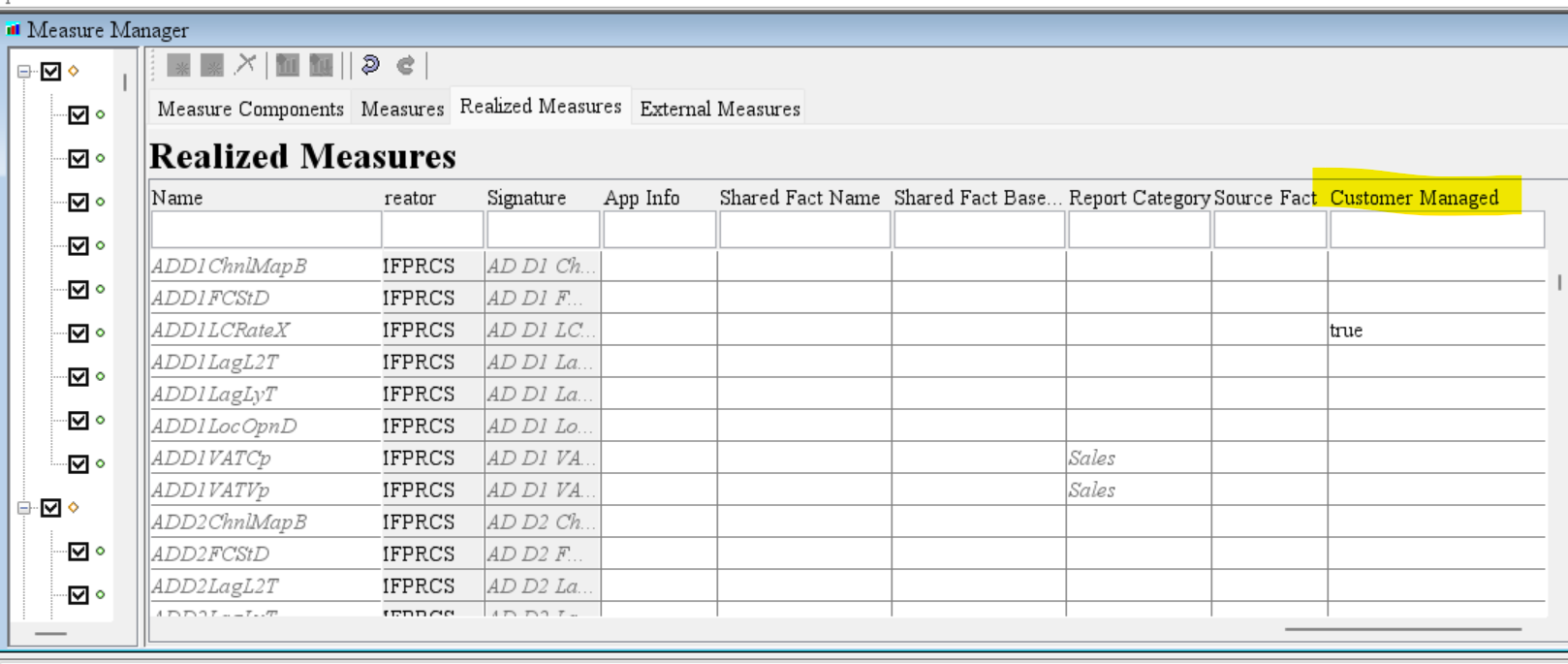

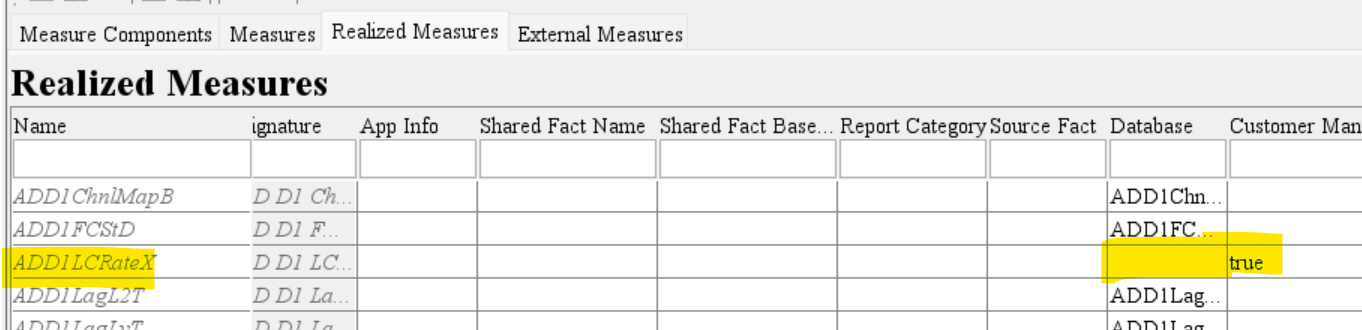

Measure Properties

If the customer-provided PL/SQL functions and procedures require write-access to any RPASCE measures, then they must be marked as "Customer-Managed" in the application configuration.

In ConfigTools Workbench, a new column Customer Managed is added to the Measure Definition Table. This new column is defaulted to empty, which means false.

To mark a measure as customer managed the value should be changed to true.

The "customer-managed" measures must have a database field specified, otherwise an error will be thrown.

Note:

The "customer-managed" measures cannot be used in cycle groups and the left-hand side of special expressions because these measures need to be in the same fact group. Making part of these measures as customer-managed measures/facts will split this fact group because customer-managed measures are assigned to a separate fact group.Rules and Expressions

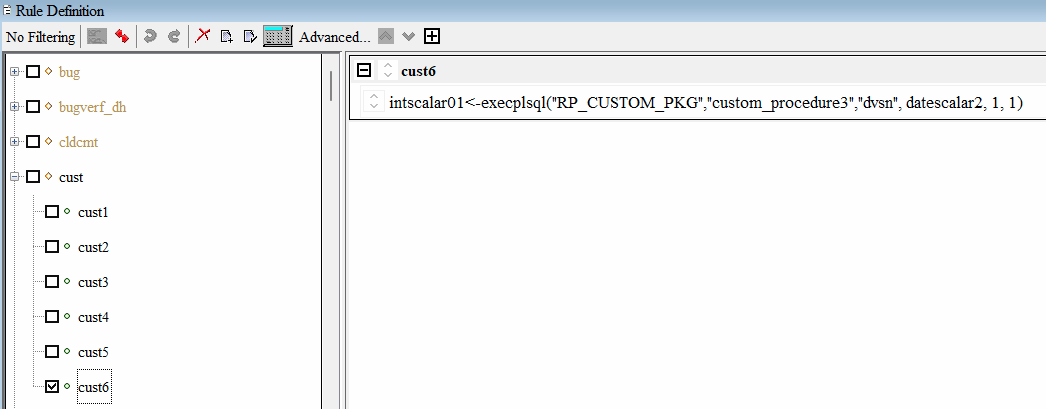

To invoke the uploaded PL/SQL functions and procedures, add rules with the special expression execplsql into the RPASCE rule definitions in the configtools. For more details about the special expression execplsql please refer to the section Special Expression - execplsql in this document.

Example

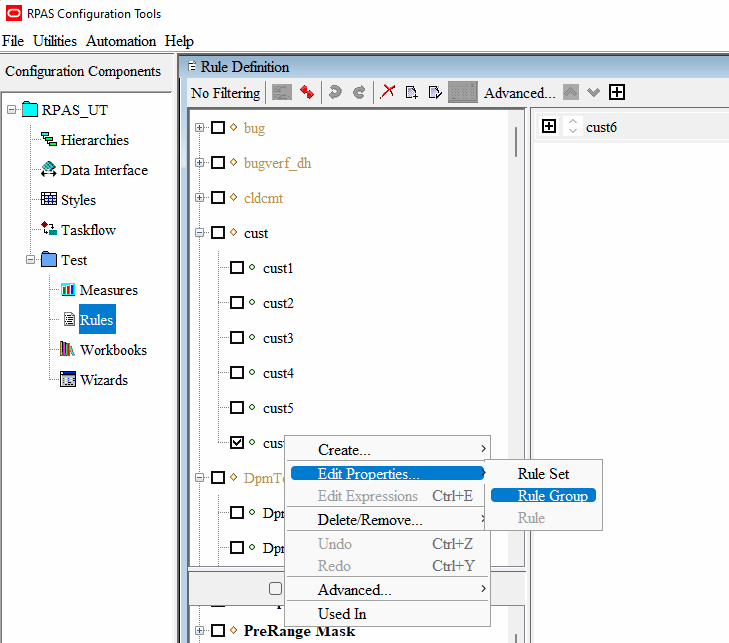

In the example below, a rule containing execplsql is added to rule group cust6.

However, there a couple of extra requirements for configuring execplsql. First the rulegroup

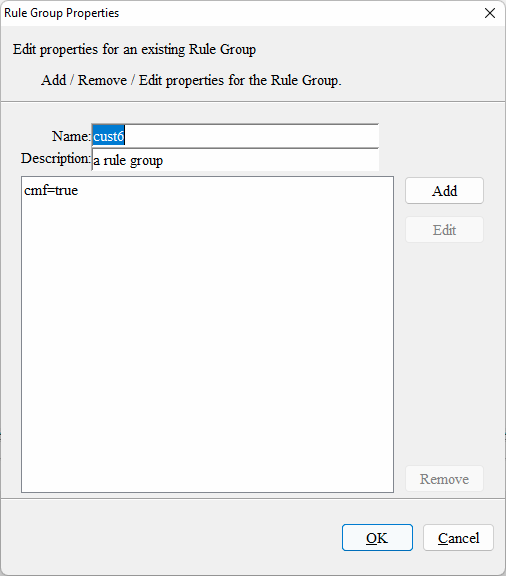

must have the property cmf=true set. See image below on how to access the rulegroup property.

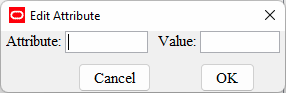

Use the add button

to add cmf as an attribute and set value to true.

The image below shows the cmf property set for a rulegroup.

The second requirement is that a cmf rulegroup must have only plsqlexec rules. It is not possible to mix other kinds of rules with the plsqlexec rule. There can be many plsqlexec rules in the same rulegroup. Also please make sure keep only one expression in each rule.

Integration Configuration

The Integration Configuration Tool will have a new column Customer-Managed for the Integration Map table. The integration configuration is generated internally and is only shown here for information purposes.

<integration_map>

<entry>

<fact>ADDVChWhMapT</fact>

<domain>mfpcs</domain>

<measure>ADDVChWhMapT</measure>

<outbound>N</outbound>

<customer-managed>Y</customer-managed>

</entry>

</integration_map>RPASCE Special Expression - execplsql

The special expression execplsql provides the ability to invoke the customer uploaded PL/SQL

functions and procedures from within the RPASCE rules and expressions framework. Both functions and procedures are supported.

Also execplsql is variadic and can take an arbitrary number of arguments that can be of different

types according to the PL/SQL signature. The number and type depend on the signature of the function or procedures being executed.

The first two arguments to execplsql are reserved to indicate the package name and the function or procedure

name to be executed.

Example

drdvsrcti<-execplsql("RP_CUSTOM_PKG","sum",drdvsrctt, adhdlcratet, add2locopnd)In this example the LHS measure drdvsrcti is a scalar integer measure. It will be set to the

integer value returned by the function named sum in the customer uploaded package RP_CUSTOM_PKG.

Arguments

LHS

The LHS measure must be a scalar integer measure. It will be set to the integer value returned

by the customer-uploaded PL/SQL function or procedure. The integer value is meant to be a return code indicating the result

of the procedure or function execution. In case of exceptions, RPASCE will set the LHS measure to a value of -1 to indicate an error. If there are any exceptions or failures, then the logs will provide further information regarding

the reason for the failure.

RHS

-

First argument:

The type of the first argument is

string. It can either be a string constant or a scalar string measure. The first argument is the name of the customer-uploaded package. For more details regarding uploading custom packages refer to section Uploading Custom PL/SQL Packages. -

Second Argument:

The type of the first argument is

string. It can either be a string constant or a scalar string measure. The second argument is the name of a function or procedure within in the custom package specified as the first argument ofexecplsql. This function or procedure will be executed by theexecplsqlspecial expression when it is evaluated.If a function is being specified, make sure the return type is declared as a number in the PL/SQL function declaration.

If a procedure is being specified, make sure there is exactly 1 out type parameter of type number in the PL/SQL procedure declaration.

Examples

Consider the PL/SQL function SUM present in the package RP_CUSTOM_PKG. To execute the SUM function in the RPASCE application batch, first upload RP_CUSTOM_PKG as described in the section Uploading Custom PL/SQL Packages. The PL/SQL function SUM is declared as below in the package RP_CUSTOM_PKG.

function SUM (lhsMeas IN VARCHAR2, rhsMeas1 IN VARCHAR2, rhsMeas2 IN VARCHAR2) return number; Here is a sample definition of the SUM function that adds 2 measures and writes the result to a third

measure. Note that the measure lhsMeas is an IN type argument although the function SUM updates it. The measure lhsMeas must be marked as a customer managed measure as described

in the Measure Properties subsection of the RPASCE Configuration Tools Additions section.

FUNCTION sum (

lhsmeas IN VARCHAR2,

rhsmeas1 IN VARCHAR2,

rhsmeas2 IN VARCHAR2

) RETURN NUMBER IS

-- EXPR 1: lhsMeas = rhsMeas2 + rhsMeas1

na_ut_lhsmeas BINARY_DOUBLE := cell_dbl(lhsmeas, NULL);

na_ut_rhsmeas1 BINARY_DOUBLE := cell_dbl(rhsmeas1, NULL);

na_ut_rhsmeas2 BINARY_DOUBLE := cell_dbl(rhsmeas2, NULL);

lhsfactgroup VARCHAR2(4000);

rhs1factgroup VARCHAR2(4000);

rhs2factgroup VARCHAR2(4000);

lhsfacttable VARCHAR2(4000);

rhs1facttable VARCHAR2(4000);

rhs2facttable VARCHAR2(4000);

stmt VARCHAR2(8000);

BEGIN

rp_g_common_pkg.clear_facts(varchar2_table(lhsmeas));

SELECT

fact_group

INTO lhsfactgroup

FROM

rp_g_fact_info_md

WHERE

fact_name = lhsmeas;

SELECT

fact_group

INTO rhs1factgroup

FROM

rp_g_fact_info_md

WHERE

fact_name = rhsmeas1;

SELECT

fact_group

INTO rhs2factgroup

FROM

rp_g_fact_info_md

WHERE

fact_name = rhsmeas2;

lhsfacttable := 'rp_g_'

|| lhsfactgroup

|| '_ft';

rhs1facttable := 'rp_g_'

|| rhs1factgroup

|| '_ft';

rhs2facttable := 'rp_g_'

|| rhs2factgroup

|| '_ft';

na_ut_lhsmeas := ( na_ut_rhsmeas2 + na_ut_rhsmeas1 );

stmt := 'MERGE INTO '

|| lhsfacttable

|| ' lhs

USING (

SELECT

( coalesce(rhsft01.partition_id, rhsft02.partition_id) ) AS partition_id,

( coalesce(rhsft01.dept_id, rhsft02.dept_id) ) AS dept_id,

( coalesce(rhsft01.stor_id, rhsft02.stor_id) ) AS stor_id,

( ( coalesce(rhsft02.'

|| rhsmeas2

|| ', '

|| na_ut_rhsmeas2

|| ') + coalesce(rhsft01.'

|| rhsmeas1

|| ', '

|| na_ut_rhsmeas1

|| ') ) ) AS '

|| lhsmeas

|| '

FROM

(

SELECT

partition_id,

dept_id,

stor_id,

'

|| rhsmeas1

|| '

FROM

'

|| rhs1facttable

|| '

) rhsft01

FULL OUTER JOIN (

SELECT

partition_id,

dept_id,

stor_id,

'

|| rhsmeas2

|| '

FROM

'

|| rhs2facttable

|| '

) rhsft02 ON rhsft01.partition_id = rhsft02.partition_id

AND rhsft01.dept_id = rhsft02.dept_id

AND rhsft01.stor_id = rhsft02.stor_id

)

rhs_final ON ( lhs.partition_id = rhs_final.partition_id

AND lhs.dept_id = rhs_final.dept_id

AND lhs.stor_id = rhs_final.stor_id )

WHEN MATCHED THEN UPDATE

SET lhs.'

|| lhsmeas

|| '= nullif(rhs_final.'

|| lhsmeas

|| ', '

|| na_ut_lhsmeas

|| ') DELETE

WHERE

rhs_final.'

|| lhsmeas

|| ' = '

|| na_ut_lhsmeas

|| '

WHEN NOT MATCHED THEN

INSERT (

lhs.partition_id,

lhs.dept_id,

lhs.stor_id,

lhs.'

|| lhsmeas

|| ' )

VALUES

( rhs_final.partition_id,

rhs_final.dept_id,

rhs_final.stor_id,

nullif(rhs_final.'

|| lhsmeas

|| ', '

|| na_ut_lhsmeas

|| ') )

WHERE

rhs_final.'

|| lhsmeas

|| ' != '

|| na_ut_lhsmeas;

dbms_output.put_line(stmt);

EXECUTE IMMEDIATE stmt;

COMMIT;

RETURN 0;

END sum;Now to execute this SUM function from the application batch, add the rule below to application configuration

as described in the Rules and Expressions subsection of RPASCE Configuration Tools Additions section. Add the rule group containing the rule to the batch

control files as described in the section RPASCE Batch Control File Changes. Patch the application with the updated configuration and batch control

files.

drdvsrcti<-execplsql("RP_CUSTOM_PKG","sum",drdvsrctt, adhdlcratet, add2locopnd) Here all 3 measures are placeholder scalar string measures that will point to the actual real measures that are being summed.

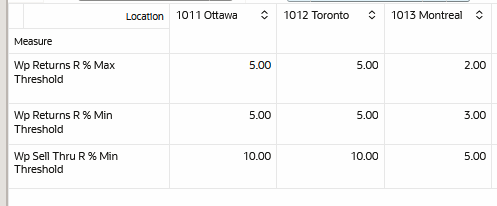

In this example, the input scalar measures are mapped as follows:

-

drdvsrctt: lpwpsellthrmn - dept_stor - customer managed (LHS measure)

Label: Wp Sell Thru R % Min Threshold

-

adhdlcratet: lpwprtnmn - dept_stor (RHS1)

Label: Wp Returns R % Min Threshold

-

add2locopnd: lpwprtnmx - dept_stor (RHS2)

Label: Wp Returns R % Max Threshold

Alternately, the rule could have been configured as below. However, that would mean that it is not possible to change the

input measures as part of the batch. It will need a patch to update the input measures to the SUM procedure.

Note:

The measures are in quotes as they are passed to PL/SQL as string constants. If the quotes are missing, then RPASCE will throw an error indicating that it is not possible to invokeexecplsql using non-scalar measures.

drdvsrcti<-execplsql("RP_CUSTOM_PKG","sum",'lpwpsellthrmn' , 'lpwprtnmn' ,'lpwprtnmx' )Execute this rule group through batch and build a measure analysis workbook with the involved measures. It can then be

verified that the SUM evaluated correctly.

If measure lhsMeas is not specified as a customer-managed measure in the application configuration,

then the error below will be thrown when execplsql is evaluated.

<E OCI_ERROR - (1031):

<E SQL Sid 'rpas_iw_conn' ORA-01031: insufficient privilegesThe following examples demonstrate execplsql and how a special expression can invoke PL/SQL with

a variable number and type of input arguments.

intscalar01<-execplsql("RP_CUSTOM_PKG","custom_procedure1","dvsn", true, 1, 1) intscalar01<-execplsql("RP_CUSTOM_PKG","custom_procedure2","dvsn", 1123.5813, 23, -1) intscalar01<-execplsql("RP_CUSTOM_PKG","custom_function1","dvsn", true, 1) intscalar01<-execplsql("RP_CUSTOM_PKG","custom_function2","dvsn", 1) intscalar01<-execplsql("RP_CUSTOM_PKG","custom_function2",strscalar1, intscalar02) intscalar01<-execplsql("RP_CUSTOM_PKG","custom_procedure3","dvsn", datescalar2, 1, 1)The PL/SQL counterparts are defined, through very simple demonstration code, in the example custom package below.

rp_custom_pdg.pkb

create or replace package body RP_CUSTOM_PKG is

procedure custom_procedure1(arg1 in varchar2, arg2 in CHAR, arg3 in number, ret out number )

is

begin

ret:=23;

end custom_procedure1;

procedure custom_procedure2(arg1 in varchar2, arg2 in BINARY_DOUBLE, arg3 in number, ret out number )

is

begin

ret:=23;

end custom_procedure2;

procedure custom_procedure3(arg1 in varchar2, arg2 in BINARY_DOUBLE, arg3 in timestamp, ret out number )

is

begin

dbms_output.put_line('arg3: ' || arg3);

ret:=23;

end custom_procedure3;

function custom_function1(arg1 in varchar2, arg2 in CHAR, arg3 in number) return number

is

ret integer;

begin

ret:=23;

return ret;

end custom_function1;

function custom_function2(arg1 in varchar2, arg3 in number) return number

is

ret number;

begin

ret:=23;

return ret;

end custom_function2;

function SUM

(lhsMeas IN VARCHAR2,

rhsMeas1 IN VARCHAR2,

rhsMeas2 IN VARCHAR2) return number

is

-- EXPR 1: lhsMeas = rhsMeas2 + rhsMeas1

na_ut_lhsMeas BINARY_DOUBLE := cell_dbl(lhsMeas, NULL);

na_ut_rhsMeas1 BINARY_DOUBLE := cell_dbl(rhsMeas1, NULL);

na_ut_rhsMeas2 BINARY_DOUBLE := cell_dbl(rhsMeas2, NULL);

lhsFactGroup varchar2(4000);

rhs1FactGroup varchar2(4000);

rhs2FactGroup varchar2(4000);

lhsFactTable varchar2(4000);

rhs1FactTable varchar2(4000);

rhs2FactTable varchar2(4000);

stmt varchar2(8000);

BEGIN

rp_g_common_pkg.clear_facts(varchar2_table(lhsMeas));

select fact_group into lhsFactGroup from RP_G_FACT_INFO_MD where FACT_NAME = lhsMeas;

select fact_group into rhs1FactGroup from RP_G_FACT_INFO_MD where FACT_NAME = rhsMeas1;

select fact_group into rhs2FactGroup from RP_G_FACT_INFO_MD where FACT_NAME = rhsMeas2;

lhsFactTable := 'rp_g_' || lhsFactGroup || '_ft';

rhs1FactTable := 'rp_g_' || rhs1FactGroup || '_ft';

rhs2FactTable := 'rp_g_' || rhs2FactGroup || '_ft';

na_ut_lhsMeas := ( na_ut_rhsMeas2 + na_ut_rhsMeas1 );

-- UPDATE rp_g_fact_info_md

-- SET

-- table_na =

-- CASE lower(fact_name)

-- WHEN 'b' THEN

-- to_char(na_ut_lhsMeas)

-- END

-- WHERE

-- lower(fact_name) IN ( lhsMeas );

stmt := 'MERGE INTO ' || lhsFactTable || ' lhs

USING (

SELECT

( coalesce(rhsft01.partition_id, rhsft02.partition_id) ) AS partition_id,

( coalesce(rhsft01.dept_id, rhsft02.dept_id) ) AS dept_id,

( coalesce(rhsft01.stor_id, rhsft02.stor_id) ) AS stor_id,

( ( coalesce(rhsft02.' || rhsMeas2 || ', ' || na_ut_rhsMeas2 || ') + coalesce(rhsft01.' || rhsMeas1 || ', ' || na_ut_rhsMeas1 || ') ) ) AS '|| lhsMeas||'

FROM

(

SELECT

partition_id,

dept_id,

stor_id,

'|| rhsMeas1 ||'

FROM

' || rhs1FactTable || '

) rhsft01

FULL OUTER JOIN (

SELECT

partition_id,

dept_id,

stor_id,

' || rhsMeas2 || '

FROM

' || rhs2FactTable || '

) rhsft02 ON rhsft01.partition_id = rhsft02.partition_id

AND rhsft01.dept_id = rhsft02.dept_id

AND rhsft01.stor_id = rhsft02.stor_id

)

rhs_final ON ( lhs.partition_id = rhs_final.partition_id

AND lhs.dept_id = rhs_final.dept_id

AND lhs.stor_id = rhs_final.stor_id )

WHEN MATCHED THEN UPDATE

SET lhs.' || lhsMeas || '= nullif(rhs_final.' || lhsMeas || ', ' || na_ut_lhsMeas || ') DELETE

WHERE

rhs_final.' || lhsMeas || ' = ' || na_ut_lhsMeas || '

WHEN NOT MATCHED THEN

INSERT (

lhs.partition_id,

lhs.dept_id,

lhs.stor_id,

lhs.' || lhsMeas || ' )

VALUES

( rhs_final.partition_id,

rhs_final.dept_id,

rhs_final.stor_id,

nullif(rhs_final.' || lhsMeas || ', ' || na_ut_lhsMeas || ') )

WHERE

rhs_final.' || lhsMeas || ' != ' || na_ut_lhsMeas ;

DBMS_OUTPUT.PUT_LINE (stmt);

execute immediate stmt;

commit;

return 0;

END SUM;

end RP_CUSTOM_PKG;rp_custom_pkg.pks

create or replace package RP_CUSTOM_PKG is

procedure custom_procedure1(arg1 in varchar2, arg2 in CHAR, arg3 in number, ret out number );

procedure custom_procedure2(arg1 in varchar2, arg2 in BINARY_DOUBLE, arg3 in number, ret out number );

procedure custom_procedure3(arg1 in varchar2, arg2 in BINARY_DOUBLE, arg3 in timestamp, ret out number );

function custom_function1(arg1 in varchar2, arg2 in CHAR, arg3 in number) return number ;

function custom_function2(arg1 in varchar2, arg3 in number) return number;

function SUM (lhsMeas IN VARCHAR2, rhsMeas1 IN VARCHAR2, rhsMeas2 IN VARCHAR2) return number;

end RP_CUSTOM_PKG;Limitations

Boolean arguments must be recast as character types, the PL/SQL function or procedure should declare them as a CHAR type. RPASCE will set the char to T for true and F for false. On the expression