4 Extension

RDS Extension Overview

RDS Architecture Basics

The defining feature of RDS is the data of each participating product resides in a single dedicated read-only schema, e.g., MFCS. Product data is made accessible to the customer in a dedicated companion, writeable schema using synonyms. All custom data objects are created in this companion, writeable schema. Management and retention of custom data objects is wholly the responsibility of the customer.

The product data in RDS is a replica of selected data residing in an operations system such as MFCS. RDS is not part of MFCS, but a repository of MFCS data. Moreover, the data exchange is one way from MFCS to RDS. Any data movement, directly or indirectly, from RDS to MFCS is orchestrated by the customer.

Although data from multiple products reside in RDS, there is only an informal guarantee that if there are no updates to a given set of data items, then eventually all accesses will return temporally consistent results. What this statement means is that after sufficient time has passed, RDS accurately reflects the state of the enterprise at some point in time in the recent past (recent could be measured in seconds, minutes, or hours). What qualifies as sufficient time depends on the temporal consistency of the separate subsystems that make up the enterprise, which depends metaphorically speaking on when each system closes its book. Temporal consistency also depends on the replication lag, which varies depending on system loading. This lag, however, is expected to be minimal under normal operating conditions. Temporal consistency may prove decidedly less relevant than semantic and data model differences between the products that reflect the specific problems each product was devised to solve.

Refer to your product data model to determine what data is available in RDS. Bear in mind, the data is a replica of inserts and updates as well as deletes. The point is, the data retention policy in RDS is effectively replicated from the operations system.

Environment Considerations

When embarking on the customization of a product, it is important to understand how the RDS implementation environment, which is a SaaS offering, differs from PaaS and on-premises. First of all, some or all product customization will be accomplished by making modifications to RDS (the product implementation guide will provide details on product customization). Those modifications are achieved using APEX.

APEX is a low code development environment. As a result it does not anticipate the need for (and does not provide) development life cycle tools. Application user interfaces are composed in an application builder. RESTful services are built in a similar fashion. In fact, one constructs most database objects using a UI rather than by executing code. One can, however, use the SQL Workshop to compose small amounts of PL/SQL (e.g., 100s to 1000s of lines of code). There is no access to SQL*Developer or SQL*Loader. In fact, most consoles are unavailable. It is an ideal environment for most business savvy users, but may be foreign to the skilled PL/SQL, front end, or back end developer. It is important to note that customizations that require coding will use SQL and PL/SQL. Moreover, most data interchange will rely on JSON formatted messages. All the examples in this document will employ JSON.

When using APEX, SQL command line type activities are performed in the SQL Commands tool within the SQL Workshop. For SQL script development (for blocks of code where reuse is anticipated), however, one uses the SQL Scripts tool.

When using APEX, one logs into a workspace and that workspace provides access to a single schema. Specifically, one can have access to the data for a single product within a workspace. In other words, it is not possible to execute a mutli-schema or cross-schema query from within a workspace. If one needs to combine information from multiple products, then one constructs schema specific integrations and then joins that information externally.

Lastly, it is important to remember that since RDS is a SaaS offering, some tools and features may not be available or availability may be provided with some limitations. It is important that one understand the dependencies inherent in customizations that one wishes to migrate. Expect to review these dependencies with an Oracle Representative.

Prerequisites

In order to fully explore the examples below, one will need to meet the following prerequisites:

-

Have access to an APEX workspace within an RDS tenant,

-

IDCS application credentials

-

Have access to a suitable Object Storage instance

APEX is a browser-based application; so, all that is needed is a URL and the necessary authorization to gain access to an APEX workspace. One’s RDS APEX workspace admin will be able to provide the necessary details. IDCS application credentials are needed to generate an access token that can be used to authenticate a RESTful service invocation. An object storage instance is needed as a data export destination.

Implementing a RESTful Service in APEX

Oracle RESTful Data Services play a role in both outbound data service and data export integration patterns. For the data service integration pattern, a RESTful service synchronously returns the requested data in whole or part (i.e., through data pagination). For the data export integration pattern, a RESTful service asynchronously initiates a data export and then returns with a suitable response. Much of the RESTful service implementation is the same regardless of data integration pattern. Ultimately, from the perspective of the RESTful service implementation, the two integration patterns differ only in terms of the actions taken in response to the REST service invocation.

Chapter 7 of the SQL Workshop Guide, Enabling Data Exchange with RESTful Services describes in great detail how one creates a RESTful service in APEX. Bear in mind, the above links are version specific. Although documentation across versions tends to be quite similar, it is generally best to consult the documentation for the version of APEX one is using. In any case, the following paragraphs will only provide an overview of how one creates the necessary RESTful service. Consult the documentation for additional details and example implementations.

The implementation of a RESTful service as two aspects, a URL pattern and a handler. When designing or composing a URL pattern, one is effectively constructing a dispatch mechanism that ties a given URL pattern to a specific action. This pattern-action pairing represents an API through which external systems access customer data residing in RDS.

URL Pattern

The URL pattern consists of three parts: a base path, a module name, and a URI template. The base path is the same for all RESTful services created within a given workspace, namely:

https://<host name>/<tenant_name>/ords/<schema name>where, the host name and tenant name are the same for all a given tenant’s RESTful services. The schema name is the workspace schema (remember, there is a one-to-one relationship between workspace and schema). The module name is just a hierarchical organizing feature. For example, one could have a module called po for RESTful services associated with purchase orders in MFCS. If this were the case, the path for all purchase orders services would begin with the following:

https://<host name>/<tenant_name>/ords/mfcs/po/The last part of the URL pattern is the URI template. The template consists of 0 or more path components followed by 0 or 1 bind variable. If there are 0 path components and no binding variable, then the URL template is blank or an empty string. If this were the case, then the complete URL is:

https://<host name>/<tenant_name>/ords/mfcs/po/In the more typical case, there are one or more path components with and without a bind variable. For example, one might have the following purchase order services:

| Service | Base Path + Module | URI Template | Description |

|---|---|---|---|

|

active |

…/mfcs/po/ |

active |

Return PO numbers for active (not completed or active) purchase orders. |

|

complete |

…/mfcs/po/ |

complete |

Return PO numbers for completed purchase orders. |

|

expired |

…/mfcs/po/ |

expired |

Return PO numbers for purchase orders that have expired with being fulfilled. |

|

summary |

…/mfcs/po/ |

summary/:po_num |

Return a summary for the purchase order with the given number. |

|

detail |

…/mfcs/po/ |

detail/:po_num |

Return the details for the purchase order with the given number. |

If one wanted purchase orders within a given date range, a query string specifying a date range can be added. For example, the following would return PO numbers between May 1st and May 15th, 2022.

https://<host name>/<tenant_name>/ords/mfcs/po/active?from=2022-05-01&to=2022-05-15The from and to become bind variables in a query.

Handler

The second aspect of a RESTful service is the handler. In any case, each URL pattern can be associated with up to four handlers, one for each of the following HTTP methods: GET, POST, PUT, and DELETE.

The source is parameterized using bind variables. Explicit bind variables are part of the URL, e.g.: po_num, from, and to. Consider the implementation of the handler that returns the details for a purchase order. The URL pattern might be:

https://<host name>/<tenant_name>/ords/mfcs/po/details/:po_numThe GET handler for this URI pattern could then be:

select … from purchase_orders where po_num=:po_num;

Ideally, purchase_orders is a view that is identified as part of the public API of the product data model in RDS. In general, accessing a view versus a table is preferable because the product makes stronger guarantees regarding the immutability of views (i.e., they are less likely to change in inconvenient ways).

Query string parameters can also be used to parameterize the RESTful service handler. The query string parameters are used like the :po_num above. For example, one can use the from-to range in the URL above to construct a query that returns only purchase orders within a data range, e.g.:

select … from purchase_orders where

supplier_id=:supplier_id and

order_date >= DATE :from and

order_date < DATE :to;

It is important that RESTful services respect the semantics of the methods used. The GET method strongly suggests a synchronous operation with no side effects. Whereas the semantics of POST, PUT, and DELETE methods are expected to have side effects, but could be synchronous or asynchronous.

RESTful data services can be paged. Paging can be useful for interactive operations that only require a subset of data, but it can also be used to return a multi-part result set. In either case, page data returns a subset of data as well as URLs for the previous and next result set.

Lastly, only explicit bind variables have been described. The term explicit is used because there are also a number of implicit bind variables, see Implicit Parameters. These include parameters that provide access to bind variables that implement pagination. They also provide access to the data portion or payload of an endpoint invocation. There is also a current_user parameter. This parameter, however, is the schema owner, not the user accessing the RESTful service.

Security

Authentication

Authentication is achieved using OAUTH 2. What this means is that a RESTful service invocation is authenticated using an access token. That access token can be obtained, for example, using curl as shown in Listing 1. The response is a JSON object. This access token then becomes the Bearer token that authorizes the RESTful service invocation.

LISTING 1: ACCESS TOKEN GENERATION

curl --location --request POST ‘https://<idcs host>/oauth2/v1/token' \

--header 'Content-Type: application/x-www-form-urlencoded' \

--header 'Authorization: Basic <base64 clientId:clientSecret>’ \

--data-urlencode 'grant_type=client_credentials' \

--data-urlencode 'scope=urn:opc:idm:__myscopes__'There is no implicit data or row level security. Any such security that is in effect in MFCS, for example, will not exist in RDS unless explicitly implemented by the customer. An effective approach to implementing data security will employ the ORDS Pre-hook functionality.

ORDS PRE-HOOK

Oracle REST Data Services (ORDS) provides the ability to use PL/SQL based pre-hook functions that are invoked prior to an ORDS based REST call. These functions can be used for a variety of purposes including auditing, custom authentication and authorization, and metrics gathering.

Each provided RDS workspace comes pre-configured with a simple

pre-hook function named ORDS_PREHOOK, and it has

a default implementation that simply returns true. As such, it has no effect on the REST calls made into custom applications.

It is provided as a starting point for extension to teams that required

additional processing on each REST call. For those teams, replacing

the implementation of the ORDS_PREHOOK function will

enable the additional capabilities they require. For more information

on pre-hook functions, please refer to Oracle REST Data Services Installation, Configuration,

and Development Guide: Overview of Pre-hook Functions.

Invoking a Data Service

A GET data service can be accessed and tested directly from a browser. If one is not already logged into the Oracle Cloud, then one will be redirected to a cloud login screen for authentication. Once authenticated, the data service endpoint will be invoked. The results of the invocation will be displayed in the browser window. If the data service is accessed from Retail Home, for example, the user is already authenticated.

Invoking a POST RESTful service or invoking a RESTful service from an external system is slightly more complicated. Listing 2 illustrates the invocation of a REST service endpoint using curl. Note the use of the access token. The response is dependent on the handler action.

LISTING 2: DATA SERVICE INVOCATION

curl --location --request POST ‘https://<host name>/<tenant id>/ords/mfcs/pom/job_start' \

--header 'content-type: application/json' \

--header 'Authorization: Bearer <access token>' \

--data-raw '{"param1": "value1", "param2": "value2"}'Using RDS to Build Integration

There are two approaches to transferring data between RDS and an external system, use a data service or use object storage to mediate the transfer. For an outbound integration, one uses a GET data service or exports data from ADW to object storage. For an inbound integration, one uses a POST (or DELETE if appropriate) data service or imports data from object storage to ADW. In the case where object storage is mediating the transfer, the external system is responsible for uploading or downloading data from object storage. When deciding which approach is best for a given situation, one should consider the latency tolerance, data volume, and tolerance for complexity.

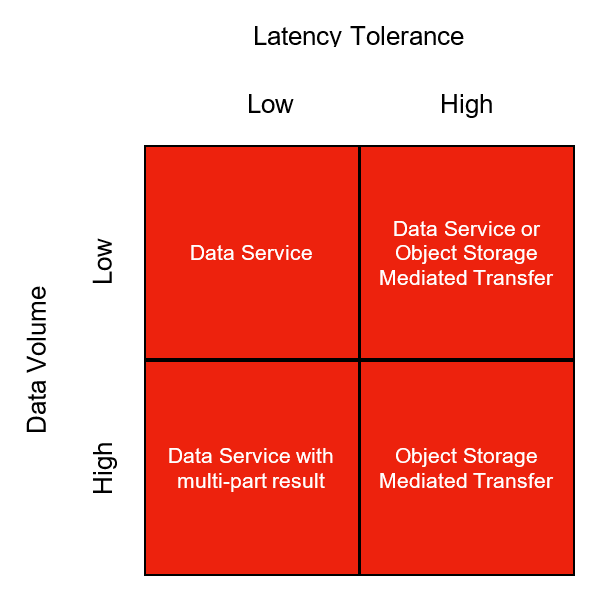

Figure 4-1 Data Integration Option Matrix

Latency tolerance is essentially a measure of how long the consuming process or agent can or will wait for the requested data to be made available. The most obvious low tolerance scenario is an interactive setting where latency tolerances are in the sub-second range. A less obvious scenario is one where the objective is to control some automated process in which responsiveness is critical. On the other hand, the typical retail ecosystem encompasses a multitude of automated processes that have a relatively high latency tolerance so long as the latency does not adversely impact process SLAs.

It is important to note that ORDS has a latency tolerance of 300 seconds. That is, the RESTful service source execution must complete within 300 seconds or a Socket Hung Up error will be returned. The 300 second threshold is a hard limit. Bear in mind that DB execution time jitter, which is the result of DB load variation, can increase typical execution times. Parameterized RESTful services may also exhibit a wide variation in execution time. Building a reliable service may entail introducing a retry component. In some cases, it may be necessary to use an asynchronous execution approach. An asynchronous approach is called for whenever the execution time for representative use cases plus jitter is likely to exceed the 300 second boundary.

The second factor one considers when selecting an integration approach is data volume. Distinctions between high and low volumes are best measured in terms of time rather than bytes. Essentially, the amount of data one can rapidly move from one place to another is ever increasing; however, what one considers long versus short transit times, though subjective, remain somewhat constant. For example, low data volumes are associated with transit times measure in seconds whereas high data volumes are associated with transit times measured in minutes.

Though both latency tolerance and data volume are best measured using time, they are measuring different things and are useful criteria when selecting an integration approach for a given problem. Figure 1 presents a data integration option matrix that provides a conceptual framework for deciding which approach, data service or object store mediated transfer, is best suited to a given problem. Although the figure is for the most part self-explanatory, data service with paged result warrants some discussion. Paged results concern outbound integrations only. These integrations produce JSON formatted results where each page returns one part of a multi-part result as well as URLs for the next and previous page.

The last factor one might consider is complexity. Creating a synchronous data service is relatively simple whereas implementing a data export is quite a bit more complicated. A synchronous data service includes a RESTful service implementation and a query implementation. A data export service (a service is used to initiate the export) includes everything that a data service includes as well as export data, managing credentials, and managing and monitoring export processes. Sometimes, however, complexity is not the most important factor when selecting a data integration pattern.

The remainder of this document will discuss how to implement outbound integration in RDS using a data services pattern and a data export pattern. Bear in mind, the examples provided below are illustrative. They are not robust. They do not implement error handling. The goal is to familiarize the reader with the parts of the problem, the tools one uses, and the relevant Oracle documentation.

Before the general features of the outbound integration problem are introduced, the reader should familiarize themselves with the basics of the RDS architecture and environment as well as prerequisites for implementation.

Outbound Integration using a Data Service

The vast majority of what one needs to know to implement a data integration using a RESTful service is described in the previous section. This short section will discuss some of the finer points of implementing actions. There are several key points to remember:

-

A handler’s PL/SQL source is not compiled when the handler is saved. Handler source compilation errors reveal themselves when the REST service is invoked. So keep the handler source as simple as possible and avoid an approach that frequently changes to handler source. Essentially, do something simple and then call a procedure or function to do the majority of the work.

-

If the result is not returned in the handler source as the direct result of a query, one can compose a JSON object (see APEX_JSON and JSON Data and Oracle Database) and return it using htp.print.

-

A handler’s source is not reusable short of copy and paste. If the handler source is likely to be reusable, then encompass it in a procedure or function.

-

One can compose procedures, functions, test scripts, and such in the SQL Workshop using the SQL Commands and SQL Scripts tools.

Outbound Integration using Object Storage

As mentioned above, it is substantially easier to meet data integration needs using a data service pattern than it is using a object storage pattern. The data service pattern in the simplest case requires just a data service. Whereas the RESTful service in the object storage pattern moves the data only part way to its destination since the service achieved its end when the data arrives in object storage. Moreover, the data export pattern generally calls for an asynchronous start because data exports are likely to be long running. With the asynchronous start comes the requirement that one now manage and monitor the export — if for no other reason than to trigger the process that moves the data from object storage to its final destination. The remainder of this example, however, will begin with the description of a hypothetical, short running, synchronous data export approach in order to avoid introducing all the complexities of an asynchronous start. The section will conclude with a brief overview of how one would manage long running asynchronous exports.

Hypothetical Outbound Integration Problem

Consider the following hypothetical problem. The customer wants to export changes to the item master (i.e., RDS_WV_ITEM_MASTER). The customer will use the csn_nbr column to keep track of what has been exported. Specifically, the customer will select rows whose csn_nbr is greater than the max csn_nbr of the previously exported rows. The export process is assumed to be idempotent.

Exporting changes to the item master is a task well suited to a synchronous data service. The item master changes slowly and the volume of data needed to capture those changes is relatively small. If, however, the export concerned transactions, a synchronous data service may or may not be appropriate depending on the data volumes.

One would begin by creating an item master module — in the SQL Workshop RESTful Services Tool — with a name such as item_master. The presumption is that there may be multiple item master RESTful services and using this approach to naming anticipates that possibility. Next, one creates a URI template with a template of “changes/:last_csn_nbr.” The first part of the template, changes, identifies the function of the endpoint. The last part of the template, :last_csn_nbr, is a bind variable that will be used in a query. The last step is to create the GET handler for the service.

The most common GET handler has a source type of collection query. If the source type is a collection query, then all one needs to do is provide a query as the source and ORDS will take care transforming the query result into a JSON string. In order to get the most recent changes to the item master one would use the following source for the GET handler:

select … from RDS_WV_ITEM_MASTER where csn_nbr > :last_csn_nbr The endpoint one would use to get recent changes is:

https://<host>/<tenant>/ords/mfcs/item_master/changes/26771905065where 26771905065 is the maximum CSN number of the items thus far retrieved. This design makes it the responsibility of the caller to keep track of the maximum CSN number used. With that in mind, one needs to make sure that the query returns the csn_nbr. Without it, it will not be possible to keep track of the maximum CSN number. The initial item master will have items with NULL valued CSN numbers. One could obtain all the changes since the initial load using the following endpoint:

https://<host>/<tenant>/ords/mfcs/item_master/changes/0The final part of the GET handler that will be discussed is pagination. Using the pagination, one can retrieve the query result in pages of a specified number of rows. The default pagination size is set at the module level, but can be overridden at the handler level. All results are paginated; however, one can set the page size such that no more than one page is ever returned. The JSON object that is returned has the following form:

{

"items":[…],

"hasMore":true,

"limit":25,

"offset":0,

“count":25,

“links":[

{“rel”:”self”,”href”:".../item_master/changes/26771905065"},

{“rel”:”describedby”,"href":".../metadata-catalog/item_master/changes/item"},

{“rel”:”first”,”href":".../item_master/changes/26771905065?limit=25"},

{“rel”:”next”,”href”:".../item_master/changes/26771905065?offset=50&limit=25"},

{“rel”:”prev","href":".../item_master/changes/0"}

]

}The items value is a list of JSON objects where the keys are column names in the query result. The hasMore value indicates whether there is more data. The limit specifies the limit used in the query. The offset is the row offset of the first row returned. Count is the number of rows actually returned. The links value provides URLs for the first, next, and previous pages of data. Note that if this is the first query — offset = 0 — there will be no prev link. If hasMore is false, there will be no next link.

If one wanted the items included in the initial item master, then one would need to create a new data service with a URI template of simply “initial”. The get handler source would look like the following:

select … from RDS_WV_ITEM_MASTER where csn_nbr is null An interesting variation would be to also create a POST handler to copy the initial item master into object storage. This approach requires an object storage credential. The creation of a credential is something done infrequently. Bear in mind that credentials do expire and at some point credentials need to be refreshed. Once the credential is created, one implements the data export script. The last part, as implied above, entails creating a RESTful service to initiate the data export. In this case, a POST handler will be added to the “initial” URI template.

The construction of credentials in ADW is described in the CREATE_CREDENTIAL Procedure section of the DBMS_CLOUD Subprograms and REST APIs section. Note that the form of the CREATE_CREDENTIAL Procedure one uses is:

|

DBMS_CLOUD.CREATE_CREDENTIAL ( credential_name IN VARCHAR2, user_ocid IN VARCHAR2, tenancy_ocid IN VARCHAR2, private_key IN VARCHAR2, fingerprint IN VARCHAR2); |

One composes the create_credential script in either the SQL Scripts or the SQL Commands tool in the SQL Workshop. A sample create credential script is shown below.

|

BEGIN DBMS_CLOUD.CREATE_CREDENTIAL ( credential_name =>'OCI_KEY_CRED', user_ocid=>’ocid1.user.oc1...zdyfhw33ozkwuontjceel7fok5nq3bf2vwetkpqsoa', tenancy_ocid=>’ocid1.tenancy.oc1...gnemmoy5r7xvoypicjqqge32ewnrcyx2a', private_key=>'MIIEogIBAAKCAQEAtUnxbmrekwgVac6Fd....pESQPD8NM//JEBg=', fingerprint=> 'f2:db:f9:18:a4:aa:fc:94:f4:f6:6c:39:96:16:aa:27'); END; |

Refer to Required Keys and OCIDs for details on obtaining credential information. The easiest way to obtain the needed credentials is by navigating to one’s My Profile page in the Oracle Cloud (i.e., tap the profile button/image in the upper right corner and select My Profile from the drop down). Next tap the API Keys link in the Resources section on the lower left of the screen. Finally tap the Add API Key button and follow the instructions. Part of the process is downloading one’s private key. The downloaded key is in PEM format. The key will need to be reformatted as a single long string without the leading and trailing dashes. There should be no new lines in the key. These final instructions will make more sense once one goes through the Add API Key process.

The last step is the actual export itself. In order to copy data from ADW to object storage, one uses DBMS_CLOUD.EXPORT_DATA, e.g.:

|

DBMS_CLOUD.EXPORT_DATA ( file_uri_list IN CLOB, format IN CLOB, credential_name IN VARCHAR2 DEFAULT NULL, query IN CLOB); |

A sample export script is shown below. Note that a JSON response is composed using json_object and that response is returned using htp.print. To get started with JSON data see JSON Data and Oracle Database. See HTP for more information on hypertext procedures.

|

declare response varchar2(4000); begin dbms_cloud.export_data( credential_name => 'OCI_KEY_CRED', file_uri_list=>'', query => 'select … from RDS_WV_ITEM_MASTER where csn_nbr is null', format => json_object('type' value 'json', 'compression' value 'gzip')); select json_object('status' VALUE 'success') into response from dual; htp.print(response); END; |

The URI format in file_uri_list is the Native URI format, see DBMS_CLOUD Package File URI Formats.

The name of the exported object would have the following form:

transactions1_<part>_<timestamp>.json.gzSince this export is not multi-part, part is equal to "1." The next step is to consume the export. How this step is accomplished depends on customer requirements. One could use a shell oriented approach with Oracle OCI Command Line Interface, see also Object Storage Service. One could also use a Java or Python API.

Retail Home Integrations

A Retail Home integration is an example of outbound integration with a user interface or portal. Retail Home Metric tiles without charts are quite simple to implement. For example, the following data service source (with a source type of collection query) will populate the 2 Metric Tile below:

select

'PO Receipts' as NAME, 25680 as VALUE,

'N' as "VALUE_FORMAT" from dual

union

select

'In Transit' as name, 112300 as value,

'N' as "VALUE_FORMAT" from dual

This data service response is:

{"items":[

{"name":"In Transit","value":112300,"value_format":"N"},

{"name":"PO Receipts","value":25680,"value_format":"N"}

],

"hasMore":false,

"limit":25,

"offset":0

"count":2,

"links":[...]

}

Producing a 4 Metric Summary, however, is more complicated and requires one use a source type of PL/SQL (the following code only provide values for two of four metrics).

declare

response varchar2(4000);

begin

SELECT json_object (

'items' value

json_array(

json_object ('name' value 'Metric 1',

'value' value 0.5,

'valueFormat' value 'PC'),

json_object ('name' value 'Metric 2',

'value' value 0.25,

'valueFormat' value 'PC')

),

'chart' value

json_object ('type' value 'bar',

'items' value

json_array(json_object('name' value 'FEB',

'value' value 2300),

json_object('name' value 'MAR',

'value' value 3100),

json_object('name' value 'APR',

'value' value 2900)

),

'valueFormat' value 'S',

'seriesName' value 'Sales',

'valueLabel' value 'Amount'

)

)

into response FROM DUAL;

htp.print(response);

end;

Filters, if used, become query string parameters and values in the URL. The query string parameters manifest in the source as bind variables.

An Asynchronous Approach

An asynchronous approach is generally called for when the likely wait time for process completion is high. A data export to object storage is generally a good candidate for an asynchronous start. In the simplest case, one needs to implement three data services: job start, job stop, and job status. The DBMS_SCHEDULER package provides the functionality one would need for these services. There is, of course, the option to schedule an export job to repeat and obviate the need to create a job start service. One could still use a job start service to invoke an unscheduled export. The initial item master export described above, however, is both relatively small and infrequent enough (probably once) that it does not warrant addressing it with an asynchronous approach.

One uses the DBMS_SCHEDULER.create_job procedure to create a job that that can be started asynchronously. A typical approach would be to use create job to wrap a procedure. The create job invokes the procedure immediately (by setting the start_date to SYSTIMESTAMP) upon creation and is then dropped automatically upon completion. The service would return a unique job name or execution id to be used to stop and monitor the job.

Another service is used to monitor the job status using the returned execution id. The monitoring service would be used to poll the status of the job. The job status is obtained by executing a query on the DBMS_SCHEDULER.user_scheduler_job_run_details.

Next Steps

The first step is to familiarize oneself with the above concepts. Start with a simple hello world service. First with a GET handler that is invoked from a browser that handles authentication. Next move on to curl or postman where one has generate access tokens. Lastly, build some simple queries. If a data export is anticipated, begin with a synchronous approach before attempting the more complex asynchronous approach.

Monitoring Resource Consumption in RDS

Although ADW is self-tuning, it cannot ensure that one's business priorities and resource consumption are well aligned. For example, the resource consumption of a new, as yet to be tuned, report may adversely impact higher priority tasks. By monitoring the consumption and performance of RDS, one is able to pinpoint which tasks could benefit from additional attention. In some cases, however, even well tuned tasks are long running and resource intensive. If these tasks are of lower priority, the user would like to run them at a lower priority. Ultimately, monitoring allows the user to both effectively employ compute services and determine if resource consumption matches business priorities. Control, on the other hand, gives the user a means to align resource consumption with priorities. Exerting control will be the subject of another chapter whereas this chapter will focus on monitoring using AWR reports.

Monitoring

AWR reports are used to monitor activity in ADW. This section will discuss how to obtain AWR reports, but it will not discuss how to interpret those reports. Given that each customer's monitoring needs differ, there is no ready to use AWR report access built into RDS. In other words, an AWR report is obtained via a customer implemented data service.

There are two steps to creating an AWR report, obtaining snap ids and generating the report using the appropriate pair of snap ids. Sample code for obtaining snap ids is shown in Listing 1. The code is used as the source for a ORDS GET handler. It illustrates the use of two bind variables. The first is part of the URI template, the begin_interval_time. The second optional query string parameter is the end interval time. The times are given as dates for simplicity, but snap ids are based on timestamps. If the query parameter is not given, the value is null.

Note that a procedure, HTPPRN, is used to output the response. HTP.print, which is ultimately used to output the response, only handles varchar2 args. Varchar2 strings have a maximum size of 4000 characters, which is often insufficient. Hence, the output of the query is put into a CLOB. The CLOB is then output using HTPPRN, which is shown in Listing 2.

The second step is generating the report. I hard code the snap ids for simplicity. Using the code in Listing 3 as the source of a GET handler and the URL of the data service, a browser will render the report. It would not be difficult to add additional bind variables to allow one to create a narrower snap id interval and combine the snap id query and the report generation.

LISTING 1: OBTAINING SNAP IDS

DECLARE

from_begin_interval_time date := to_date(:from, 'YY-MM-DD');

to_end_interval_time date := null;

db_id NUMBER;

inst_id NUMBER;

response clob;

BEGIN

dbms_output.enable(1000000);

if :to is not null then to_end_interval_time := to_date(:to, 'YY-MM-DD') + 1;

end if;

SELECT dbid INTO db_id FROM v$database;

SELECT instance_number INTO inst_id FROM v$instance;

SELECT json_arrayagg(

json_object(

'snap_id' value snap_id,

'begin_interval_time' value begin_interval_time,

'end_interval_time' value end_interval_time

returning clob format json)

returning clob) into response

FROM dba_hist_snapshot

WHERE dbid = db_id

AND instance_number = inst_id

and begin_interval_time >= from_begin_interval_time

and (to_end_interval_time is null or

to_end_interval_time >= end_interval_time)

ORDER BY snap_id DESC;

HTPPRN(response);

END;

LISTING 2: THE HTPPRN PROCEDURE

create or replace PROCEDURE HTPPRN(PCLOB IN OUT NOCOPY CLOB)

IS

V_TEMP VARCHAR2(4000);

V_CLOB CLOB := PCLOB;

V_AMOUNT NUMBER := 3999;

V_OFFSET NUMBER := 1;

V_LENGTH NUMBER := DBMS_LOB.GETLENGTH(PCLOB);

V_RESULT CLOB;

BEGIN

WHILE V_LENGTH >= V_OFFSET LOOP

V_TEMP:= DBMS_LOB.SUBSTR(V_CLOB, V_AMOUNT, V_OFFSET);

HTP.PRN(V_TEMP);

V_OFFSET := V_OFFSET + LENGTH(V_TEMP);

END LOOP;

END;

LISTING 3: GENERATING THE AWR REPORT

DECLARE

db_id NUMBER;

inst_id NUMBER;

start_id NUMBER;

end_id NUMBER;

response clob := null;

BEGIN

dbms_output.enable(1000000);

SELECT dbid INTO db_id FROM v$database;

SELECT instance_number INTO inst_id FROM v$instance;

start_id := 12133;

end_id := 12134;

FOR v_awr IN

(SELECT output FROM

TABLE(DBMS_WORKLOAD_REPOSITORY.AWR_REPORT_HTML(db_id,inst_id,start_id,end_id)))

LOOP

response := response || v_awr.output;

END LOOP;

HTPPRN(response);

END;