E Appendix: Conversion APIs

The conversion APIs are designed to enable conversion of certain entities into a production environment which, if converted through the Merchandising Data Conversion tool, may take longer to complete due to the volume of data being processed and require longer downtime for production systems during the phased data load. As these APIs are run in a production environment, they eliminate the need for the lift & shift activity during go-live, thus reducing the overall cutover period. When using the conversion APIs for a phased conversion, the key assumption is that the Merchandising Data Conversion tool was used for the initial phase of conversion. These conversion APIs are compatible with the existing file layout in the Merchandising Data Conversion tool and are designed to be run in the production environment after you have established the data quality and detailed migration process by performing multiple mock cycles and cutover rehearsal in a non-production environment.

These APIs are invoked through batch jobs scheduled using the Process Orchestration and Monitoring (POM) and go through all phases handled in the Merchandising Data Conversion tool (Import from File, Data Validation, and Business Validation).

Entities Supported

The following entities are supported for conversion into the production environment using conversion APIs.

| Functional Area | Notes |

|---|---|

|

Partner |

As in the Merchandising Data Conversion tool, the conversion API supports creating partner and address information. If you have configured any custom flex attributes for partner or associated addresses, these can be converted as well. Also, if using the Retail Financials Integration (RFI) for integration with PeopleSoft, Oracle Financials, or Cloud Financials, there is a cross-reference that would also need to be maintained and needs to be considered in conversion. |

|

Item HTS |

Only applicable if Import Management functionality is configured on. See Data Entities above for information on conversion of HTS. |

|

Item Expenses |

As in the Merchandising Data Conversion tool, you can choose to convert both expenses and assessments associated with item using the conversion API. |

|

Purchase Order |

As in the Merchandising Data Conversion tool, the conversion API supports create, ship, and receive flows for purchase orders. To convert a closed purchase order from your legacy system to Merchandising, you will need to perform entire flow of create, ship, and receive for purchase orders. Currently, there is no provision to directly load closed purchase orders through the data conversion API. Purchase order receipt conversion updates inventory in the Transactional stock ledger ( |

|

Customer Order |

As in the Merchandising Data Conversion tool, the conversion API supports the create, ship, and receive flow of customer orders. |

|

Non-Sellable Inventory |

To convert any non-sellable inventory for item/location combinations. |

|

Stock On Hand |

The relevant item/location records have already been established at this phase of conversion through the item/location ranging process. If not, then this should be a pre-conversion step for stock-on-hand conversion. |

|

Stock Ledger |

Ensure that the inventory ( |

|

Supplier Inventory Management |

As in the Merchandising Data Conversion tool, the conversion API supports conversion of Inventory Management parameters for supplier and supplier sites including supplier replenishment days. |

|

Supplier Org Units |

As in the Merchandising Data Conversion tool, the conversion API supports conversion of association of Org Units to suppliers and supplier sites. |

|

Item Location History |

To convert Item Location History Information. |

Key Considerations

-

The conversion APIs are managed through the

USE_CONVindicator in theCONV_SYSTEM_OPTIONStable. To use conversion APIs, theUSE_CONVparameter should be set toY. In the non-production environment, you can set this indicator using the APEX Data Viewer. To enable this indicator in the production environment, you are required to log an SR with the Oracle Cloud Operations team. -

You will need to do several mock runs using the Conversion APIs in a non-production environment to reach the optimum number for the thread count, chunk size, and parallel files that is suitable for your data load and machine size. Once benchmarked, to configure these in the production environment, log an SR with the Oracle Cloud Operations team. It should be noted when Golden Gate replication is turned on in the production environment, loading huge volumes of data can cause issues with replication.

-

The task execution engine in the Data Conversion tool is not available in the production environment. Some of the features from the task execution engine, like gathering stats on staging tables and so on, are incorporated in the conversion APIs.

-

The conversion APIs do not require you to turn off the MFQUEUE publishing triggers in the production environment. These APIs are designed in such a manner that these will not publish the MFQUEUE records for converted data. However, data captured for integration to downstream systems through other business transactions (for example, through the MFCS UI) will continue to be published. As part of the conversion APIs, the publication tables will be loaded with published flag set to

Yfor setting up the converted data for future publication to downstream systems. -

If other systems need to be seeded with the converted data, then use other data seeding options.

-

When testing in non-production environments, enable Flashback once the Mock conversion has started. This is to mimic the behavior of loading in the production environment where the flashback is always turned on.

-

In a new instance, the database optimizer may settle into a better execution plan after 2-3 runs of execution, so continue loading the files with smaller volumes for a few mock runs even if performance is not as expected.

-

To achieve optimal performance for data conversion using conversion APIs in your production environment, it is recommended that you avoid running heavy SQL APEX queries, conversion of parallel entities, and item induction/purchase order induction in parallel with conversion jobs.

Key Data File Assumptions

When generating your data files based on the template format, there are few key things to keep in mind:

-

When zipping your files, ensure that there is only one file per table.

-

The same file layouts that are used in the Data Conversion tool are extended for the conversion APIs. See the Download Templates and Key Data File Assumptions section in the Prepare for Conversion section for more details on file layout and assumptions.

-

The input files for entities with the file names having the same starting string such as

HALF_DATAandHALF_DATA_BUDGET, are required to be sent in a sequential manner. The files in the object storage are searched with the file name string and may result in upload errors due to an unexpected file. For example: theHALF_DATAfile should be processed first and then theHALF_DATA_BUDGETfile. -

Only the file extension defined for an entity in the

CONV_ENTITYtables is accepted for processing. -

Once your data files upload to the object storage is completed, sending a

.completefile to indicate end of file upload for an entity is not mandatory. -

The incoming prefix in the object storage for uploading the input files for Conversion APIs is different from the Data Conversion tool. Please reach out to the Oracle Cloud Operations team for instruction on the path.

Use Conversion API

Once your files have been created with the expected file name patterns and format, the Merchandising File Transfer service will be used for uploading the files to the object storage incoming prefix. You will receive instruction from the Oracle Cloud Operations team on file upload through the Merchandising File Transfer service that should be followed here. When this is complete, you will be ready to use the conversion APIs to load data from these files into the production environment. The data conversion through these APIs involves a two step process:

-

Load the data from the input files into the conversion staging tables in the production environment.

-

Invoke data checks and business validations to ensure the correctness of data being migrated.

The records that are successfully validated are moved to the main Merchandising tables.

For both these steps, there are separate conversion jobs for each entity that are supported for conversion in the production environment.

Execute the CONV_ARC_PURGE_STATS_JOB_ADHOC job from the Process Orchestration and Monitoring (POM) solution

to both archive and purge the conversion staging tables and gather stats on the Merchandising main tables for the entities

supported in the conversion APIs. It is highly recommended to truncate staging and error tables at regular intervals for an

entity using this job. This will improve the execution timings for the subsequent runs.

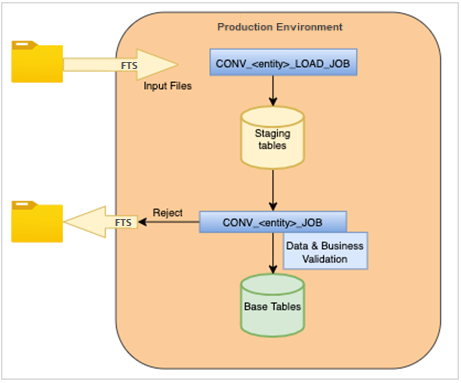

The diagram below shows, at a high level, how the conversion schema is set up in relation to your production environment.

Loading to Staging Tables

The conversion load jobs load the data from input files into the conversion staging tables in the production environment. There is a load job created in POM for each entity supported for the data conversion through the production conversion API. There is no input parameters required for running these jobs.

The common tables that are available for monitoring and troubleshooting any errors reported during data loading to staging tables are described below:

| Table Name | Description |

|---|---|

|

CONV_PROCESS_TRACKER |

Contains information on the data conversion against a process ID. For data loading from input files

to the staging table, it will have a status of |

|

FILE_UPLOAD_STATUS |

Holds the status of the files uploaded during import. It will have status as |

|

FILE_UPLOAD_LOG |

When the |

Loading to Merchandising Main Tables

The conversion job invokes data checks & business validations to ensure the correctness of data being migrated. The

records that are successfully validated will be moved to the main Merchandising tables. The job picks up the process IDs where

the status in the CONV_PROCESS_TRACKER table is END_IMPORT or END_VALIDATE. There is no input parameters required for running this job. The job also invokes the rejection process, which generates

the reject files for any errors and moves them to the OUT directory, so that you can manually correct them

and reload records to trigger a fresh file upload against that data. There is a conversion job created in POM for each entity

supported for the data conversion through the production conversion API.

The common conversion tables that are available for monitoring and troubleshooting any errors reported during data validation and upload to the main tables are described below:

| Table Name | Description |

|---|---|

|

CONV_PROCESS_TRACKER |

Contains information on the data conversion against a process ID. It will hold an intermediate status

of Upon

completion of data upload from staging to the main tables, the process status in the |

|

CONV_VALIDATION_ERROR |

Holds the errors encountered during validation and upload to the Merchandising main table. In case

of validation or upload errors, the record status in the corresponding conversion staging tables will be either |

Other Key Tables

Following is details about few other key tables containing metadata for the solution.

| Table Name | Description |

|---|---|

|

CONV_ENTITY |

Contains information about the entities available for conversion through the conversion API process along with the file format names for the zip files and threading/chunk size information. Configuration changes to this by end users is not supported. |

|

CONV_ENTITY_DETAIL |

Holds information about the staging tables, archive tables and the main tables that are used/loaded. This also contains the file format names for .dat files for each entity supported by the Conversion API Process. Configuration changes to this by end users is not supported. |

|

CONV_ENTITY_GATHER_STATS |

Lists the tables for which statistics have to be gathered prior to conversion process. When the GATHER_STATS_WITH_RUN column is set to ‘Y’, stats are gathered for every conversion cycle while ‘N’ indicates stats are gathered only for archive job. Configuration changes to this by end users is not supported. |

|

CONV_SYSTEM_OPTIONS |

Holds the system level parameters for the conversion process. Configuration changes to this by end users is not supported. |

Stock Ledger Initialization Job

To initialize the starting inventory totals in the stock ledger tables based on the stock-on-hand conversion in your production

environment, run the CONV_SL_INIT_JOB_ADHOC job using POM. There is a parameter named as ACTION_TYPE in the CONV_ENTITY table for entity name SLOPNINIT. This can be configured based on the

business need. The ACTION_TYPE should be set as New if you would like to recalculate the

starting inventory totals after updating the stock on hand to zero. Any other value for this parameter would process only

records with non-zero stock on hand. By default, the ACTION_TYPE would be New and, as needed,

you can reset the parameter value by logging an SR with the Oracle Cloud Operations team. There are no input parameters required

to run these jobs.

For phased rollouts, this job also supports initialization of the stock ledger tables by location and/or Department/Class/Subclass. For this, a filter file needs to be placed in the object storage before running this job.

File naming pattern

stock_ledger_initialize*.zip (which, in turn, contains stock_ledger_initialize*.dat)Filter File Format

Depending on what your rollout is based on, only those values should be passed in the file using comma as separator.

<Location>,<Department>,<Class>,<Subclass>For example:

-

If the rollout is by location (some locations and all department/class/subclass), then only the locations should be provided in the file.

Sample File:

1521,,, 1111,,, 1321,,, -

If rollout is by some locations and some departments/class/subclass, then only those locations/department/class/subclass combinations should be provided in the file.

Sample File:

1521,101,, 1111,102,1, 1321,103,2,3 -

If rollout is by all locations and some departments, then only the departments that are being rolled out should be provided in the file.

Sample File:

,101,, ,102,, ,103,,

Existing data will not be impacted when using filter files as long as the data is not common between the filters provided

in the file and existing ITEM_LOC_SOH data. If no input file is passed, then CONV_SL_INIT_JOB_ADHOC will initialize the stock ledger tables based on the entire ITEM_LOC_SOH table for all locations and department/class/subclass.

This would override the data for existing records as well.

Backup and Purge Job

To archive and purge the conversion staging tables and gather stats on the Merchandising main tables for the entities supported

in the conversion APIs, execute the CONV_ARC_PURGE_STATS_JOB_ADHOC job from POM.

There are two parameters for this job: ENTITY_NAME and GATHER_STATS.

-

GATHER_STATSis an optional parameter and is set toYto gather tables stats. -

ENTITY_NAMEis a required parameter and valid values are:-

CREATE_PURCHASE_ORDERS

-

SHIP_PURCHASE_ORDERS

-

RECEIVE_PURCHASE_ORDERS

-

CLOSE_PURCHASE_ORDERS

-

ITEM_LOC_SOH

-

PARTNER

-

ITEM_HTS

-

ITEM_EXPENSES

-

CREATE_CUSTOMER_ORDERS

-

SHIP_CUSTOMER_ORDERS

-

RECEIVE_CUSTOMER_ORDERS

-

ITEM_LOC_HIST

-

SLOPNINIT

-

PARTNER_ORG_UNIT

-

SUP_INV_MGMT

-

HALF_DATA

-

HALF_DATA_BUDGET

-

INV_STATUS_QTY

-

In addition to archiving and purging the conversion tables for the above entities, this job also archives and purges the

errors captured in the CONV_VALIDATION_ERROR table.

Conversion Process Flow

At a high level, for each entity you wish to convert using the conversion APIs in the production environment, you need to perform the following steps:

-

Place the conversion zip file into the object storage incoming prefix.

-

Run the

CONV_<entity>_LOAD_JOBbatch process using the Process Orchestration and Monitoring (POM). The batch moves the input files from the object storage to an inbound directory for data processing and loading into the conversion staging tables. There is separate load job for each conversion entity. For example, theCONV_PARTNER_LOAD_JOB_ADHOCjob loads the partner data in the related staging table. -

The above batch process will insert a record with a unique process ID into the

CONV_PROCESS_TRACKERtable. You can monitor the load processing by querying this table using the Merchandising APEX Data Viewer link. The statusEND_IMPORTin the table indicates that load was successful. If there are errors during the data load into the staging tables, then the status is recorded asFAILED_IMPORT. -

In case of errors during the data loading into the staging tables, to debug and troubleshoot the load errors, query the

FILE_UPLOAD_STATUSandFILE_UPLOAD_LOGtables. These tables will provide the details of the files loaded and corresponding load errors. -

Run the

CONV_<entity >_JOBbatch process using the Process Orchestration and Monitoring (POM). This job will perform data and business validation on the imported data in the conversion staging tables and upload the successfully validated records to the main Merchandising tables based on the entity being loaded. For example, theCONV_PARTNER_JOB_ADHOCjob validates and uploads the partner data to relevant Merchandising tables. -

After running step 5, the status in the

CONV_PROCESS_TRACKERtable -COMPLETE_NO_REJECTSindicates a successful load into the Merchandising tables andCOMPLETE_WITH_REJECTSindicates the process completed with some rejected records. The statusEND_VALIDATEin theCONV_PROCESS_TRACKERtable is an intermediate status once the job in step 5 completes data validation and is in the process of business validation and loading to Merchandising tables. -

The successful records are inserted into the Merchandising main tables. Any rejected records are written to the

CONV_VALIDATION_ERRORtable. The reject file is generated and moved to the outgoing prefix in the object storage. You can download the file to view the errored data, make relevant corrections, and reprocess the rejects.

The entities below are supported for conversion into the production environment using the conversion APIs.

| ENTITY | CONVERSION LOAD JOB (TO STAGE TABLES) | CONVERSION JOB (TO MAIN TABLES) |

|---|---|---|

|

CREATE_PURCHASE_ORDERS |

CONV_PO_LOAD_JOB_ADHOC |

CONV_PO_CREATE_JOB_ADHOC |

|

SHIP_PURCHASE_ORDERS |

CONV_PO_SHIP_LOAD_JOB_ADHOC |

CONV_PO_SHIP_JOB_ADHOC |

|

RECEIVE_PURCHASE_ORDERS |

CONV_PO_RECEIVE_LOAD_JOB_ADHOC |

CONV_PO_RECEIVE_JOB_ADHOC |

|

CLOSE_PURCHASE_ORDERS |

CONV_PO_CLOSE_LOAD_JOB_ADHOC |

CONV_PO_CLOSE_JOB_ADHOC |

|

ITEM_HTS |

CONV_ITEM_HTS_LOAD_JOB_ADHOC |

CONV_ITEM_HTS_JOB_ADHOC |

|

ITEM_EXPENSES |

CONV_ITEM_EXP_LOAD_JOB_ADHOC |

CONV_ITEM_EXP_JOB_ADHOC |

|

ITEM_LOC_SOH |

CONV_ITEM_LOC_SOH_LOAD_JOB_ADHOC |

CONV_ITEM_LOC_SOH_JOB_ADHOC |

|

PARTNER |

CONV_PARTNER_LOAD_JOB_ADHOC |

CONV_PARTNER_JOB_ADHOC |

|

CREATE_CUSTOMER_ORDERS |

CONV_ORDCUST_LOAD_JOB_ADHOC |

CONV_ORDCUST_CREATE_JOB_ADHOC |

|

SHIP_CUSTOMER_ORDERS |

CONV_ORDCUST_SHIP_LOAD_JOB_ADHOC |

CONV_ORDCUST_SHIP_JOB_ADHOC |

|

RECEIVE_CUSTOMER_ORDERS |

CONV_ORDCUST_RECEIVE_LOAD_JOB_ADHOC |

CONV_ORDCUST_RECEIVE_JOB_ADHOC |

|

HALF_DATA |

CONV_HALF_DATA_LOAD_JOB_ADHOC |

CONV_HALF_DATA_JOB_ADHOC |

|

HALF_DATA_BUDGET |

CONV_HALF_DATA_BUDGET_LOAD_JOB_ADHOC |

CONV_HALF_DATA_BUDGET_JOB_ADHOC |

|

SLOPNINIT |

- |

CONV_SL_INIT_JOB_ADHOC |

|

SUP_INV_MGMT |

CONV_SUP_INV_MGMT_LOAD_JOB_ADHOC |

CONV_SUP_INV_MGMT_JOB_ADHOC |

|

PARTNER_ORG_UNIT |

CONV_PARTNER_ORG_UNIT_LOAD_JOB_ADHOC |

CONV_PARTNER_ORG_UNIT_JOB_ADHOC |

|

INV_STATUS_QTY |

CONV_INV_STATUS_QTY_LOAD_JOB_ADHOC |

CONV_INV_STATUS_QTY_JOB_ADHOC |

|

ITEM_LOC_HIST |

CONV_ITEM_LOC_HIST_LOAD_JOB_ADHOC |

CONV_ITEM_LOC_HIST_JOB_ADHOC |