3 Planning Data Schema Administration

This chapter describes the administration tasks for the Planning Data Schema (PDS).

Introduction

The Planning Data Schema (PDS) consists of multiple database schemas residing on an Oracle Database server: a main schema (internally called a data mart schema) and multiple access schemas. The data mart schema contains all the dimension and fact tables that store all application data. The data mart schema also contains metadata tables, PL/SQL packages, procedures and functions, as well as other database objects. Access schemas are user schemas that are granted necessary privileges to access database objects in the data mart schema.

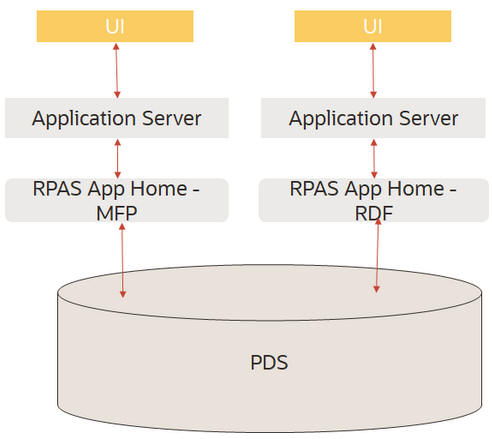

From RPASCE Version 19 to Version 21, the Planning Data Schema (PDS) has evolved from an optional data integration component into a powerful integration/calculation engine that can support multiple applications such as MFP, IPOCS-Demand Forecasting, and ASCS. Figure 3-1 provides a high level graphical description of the system architecture.

The diagram shows two RPASCE applications, MFP and IPOCS-Demand Forecasting, accessing a shared data mart schema. All RPASCE internal processes (except workbook calculations) communicate with PDS to perform their operations. For example, workbooks are built from and committed to PDS, batch calculations run inside PDS, and so on.

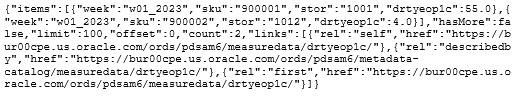

The data of retail business is multi-dimensional by nature, with typical dimensions including Product, Location, Calendar, Supplier, and so on. In the data mart schema, we use the data model called a de-normalized snowflake schema, which is built from the applications' configuration. Figure 3-2 shows a sample Entity Relationship Diagram (ERD) of the data model.

Figure 3-2 PDS Entity Relationship Diagram

Description of "Figure 3-2 PDS Entity Relationship Diagram"

The ERD shows two fact tables and their parent dimension tables (including all the roll-up dimension tables). The data model is a snowflake (as opposed to star) because there is a dimension table for each dimension level. It is de-normalized because each dimension table contains all the higher levels (not just the immediate parent). The data mart schema may contain hundreds of such snowflakes.

Because of the highly configurable nature of RPASCE applications (dimensions, measures, rules, workbook templates, and so on, are configured), the data mart schema is dynamically created, that is, dimension tables and fact tables are dynamically created from the application configuration. The data mart schema also contains a fixed set of metadata tables whose content is dynamically populated with application metadata. Batch calculation rules are dynamically translated into PL/SQL blocks and stored in PDS for IN-DB calculations.

Configuration

In RPASCE Version 21, PDS is built from application configurations. Unlike V19, there is no separate PDS configuration. The users only need to work on application configurations. For details, see Oracle Retail Predictive Application Server Cloud Edition Configuration Tools User Guide. The deployment process internally generates the PDS schema configuration from the application configurations that specify all application dimensions, facts (and mapping between the application measures and facts), and all the necessary metadata required to create all tables, and populates the metadata tables with the application configuration information.

Once an application is deployed, the PDS contains complete information about the application. Unlike V19, the traditional RPASCE application is not required and is no longer built.

When there are changes to the configuration after the application is deployed, the application can be patched. (See details in "Patch PDS".)

Partitioning

In the Planning Data Schema (PDS), fact tables are partitioned. Proper partitioning is crucial for optimal performance. The concept of partitioning PDS is inherited from the previous versions of RPASCE, but in Version 22, it is easier for the administrators. Now, the level DEPT of dimension PROD is the partitioning level. All fact tables with PROD dimension level at or below DEPT are automatically partitioned, with one DEPT in each database partition. With the automatic partitioning, the administrators are not involved in partitioning configuration at all.

A sample partitioninfo.xml is as follows:

<?xml version="1.0" encoding="utf-8"?> <rpas> <partitiondim>pgrp</partitiondim> <rdm-partitions> <partition id="1">9,10</partition> <partition id="2">11</partition> <partition id="3">12</partition> <partition id="4">13</partition> <partition id="5">14</partition> <partition id="6">15</partition> <partition id="6">16</partition> </rdm-partitions> </rpas>

All tags are required. The tag <partitiondim> defines the partitioning level; <partition> defines each partition, with the required id attribute that identifies the partition and a comma-separated list of positions in each partition. The above sample file defines six partitions. New partitions are automatically added when new positions that are not in partitioninfo.xml are loaded, with one partition per new position.

General guidelines for partitioning configuration are as follows:

-

Choose the partitioning dimension level to minimize (or eliminate) aggregation across the partitioning level for batch calculations. This is a limit on how low the partitioning level can be. For example, level CLSS may not be a good choice for MFP because the batch calculations aggregate from below to above CLSS).

-

Try to make the size of partitions as even as possible. The configurator is expected to have some knowledge about the application data volume.

Building PDS

To build PDS for an application, users must have the application configuration and partitioninfo.xml uploaded to the Object Storage. In addition, the input files for dimensions and facts should optionally be loaded. For details, see Oracle Retail Predictive Application Server Cloud Service Implementation Guide.

PDS can be built from the UI by completing the following steps:

-

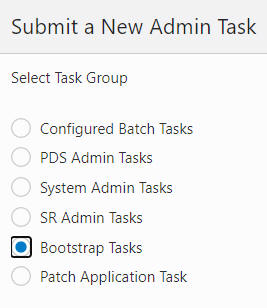

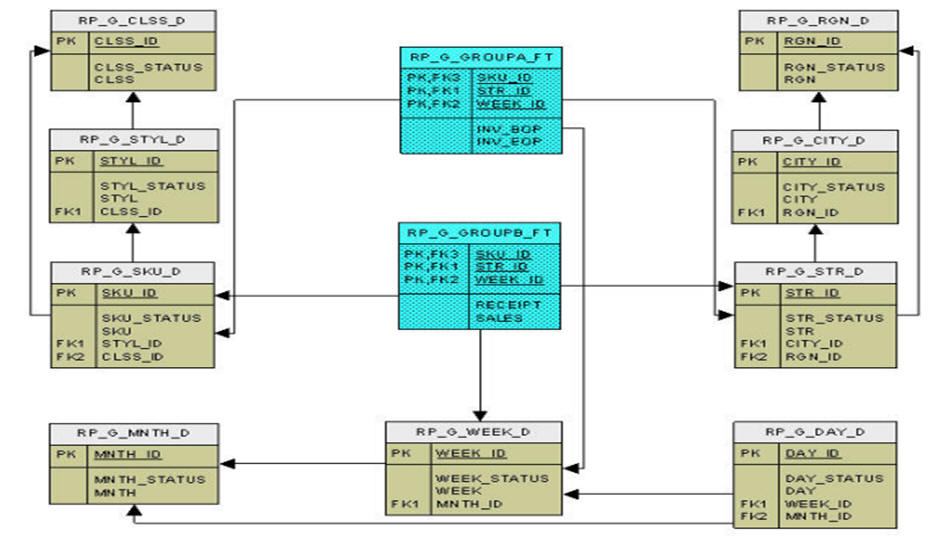

From Online Admin Tools > Submit a New Admin Task, select Bootstrap Tasks, as shown in Figure 3-3.

-

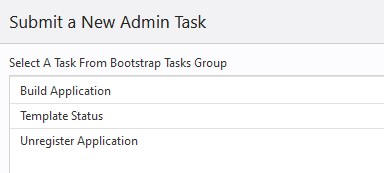

Select Build Application, as shown in Figure 3-4, and click Next.

-

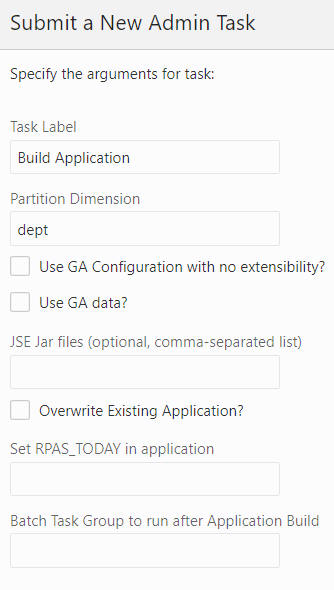

Provide the parameter values, as shown in Figure 3-5, and click Next to schedule or run the task.

-

Select Run ASAP or Run on Schedule and enter the appropriate parameters, as shown in Figure 3-6.

Figure 3-6 Build Application Schedule Parameters

Description of "Figure 3-6 Build Application Schedule Parameters"

Patch PDS

When changes occur in the application configuration, the already-deployed application can be patched. The Patch Application task is designed for this purpose.

To run the Patch Application task, first upload the application configuration <appName>_config.zip, interface.cfg and batch control files to the config folder in the Object Storage.

Then, complete the following steps.

-

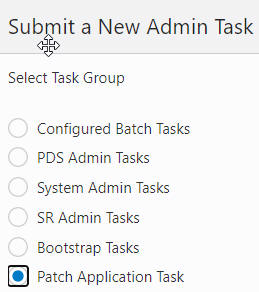

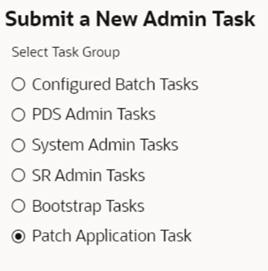

From Online Admin Tools > Submit a New Task, select Patch Application Task, as shown in Figure 3-7, and click Next.

-

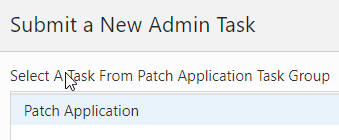

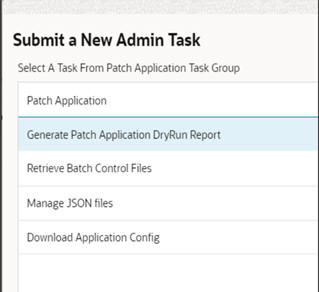

Select Patch Application, as shown in Figure 3-8, and click Next.

-

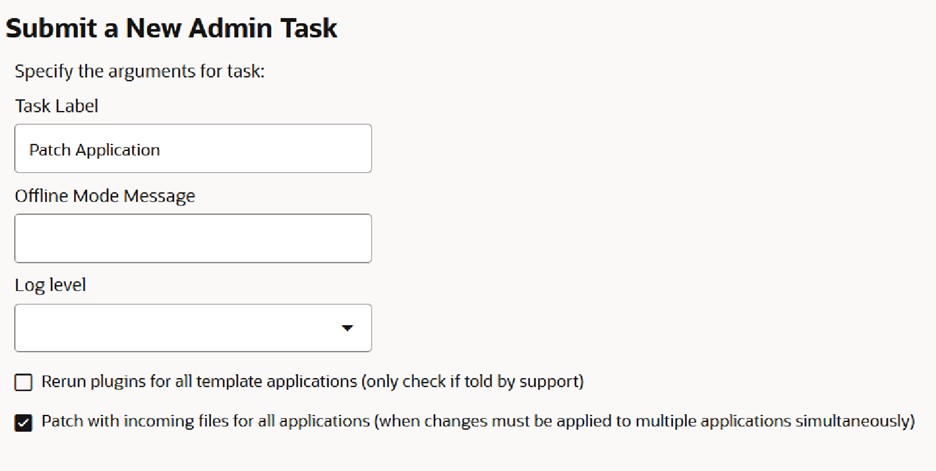

Complete the Patch Application parameters, as shown in Figure 3-9, and click Next to schedule or run the task.

Note:

In a multi-application environment, users have the option of selecting the check box Patch with incoming files for all applications (when changes must be applied to multiple applications simultaneously). When this box is checked, the PDS patching process will check for incoming config.zip, interface.cfg, or batch control files, for all registered applications, and will use any that are found as part of a simultaneous multi-application patch. This supports multiple possible use-cases where a configuration or interface.cfg change must be applied to multiple application configurtions in the same operation to avoid conflicting fact intersection or interfaces, for example. When this check box is unselected, only the current application is patched with its new incoming files.

Patch Dry Run Report Generation

Patch operation can be time consuming and can block a customer environment for an extensive length of time. In some cases, the change of the configuration is not intentional. Therefore, providing a diff report on various stage of the patch before the actual patch operation can help customer to gauge how much time it will take for the operation and help development to identify a problem if it occurs.

The task will generate following report items:

| Type | Detail | Scope |

|---|---|---|

| Configuration change report | Compares the current configuration stored in PDS vs the incoming configuration. A report is provided for each application in multi-application environment. The purpose is to capture any configuration differences either by customer, by code update, or by plug-in update. | Per app |

| interface.cfg diff report | Difference between the interface.cfg stored in PDS vs the incoming interface.cfg. Since an interface change can affect a fact grouping decision, it is important to capture any changse. | Per app |

| Batch control calc diff report | Difference between the batch_calc_list.txt stored in PDS vs the incoming batch_calc_list.txt. Sincea batch rule change can affect a fact grouping decision, it is important to capture any change. | Per app |

| Create integration config | This is a log of the operation that generates the integration configuration from all applications. If an error occurs in the configuration, it will produce an error at this step. | Global |

| Fact grouping report | This is a report on how the facts are grouped. This report is originally generated by the above step and is exposed as an individual item from the task tree. | Global |

| Sandbox verification | A small process to validate the configuration to ensure the application can be built. | Per app |

| Patch PDS dry run report | Lists the operations to convert the current PDS structure to the expecting structure. | Global |

- From Online Admin Tools > Submit a New Task, select Patch Application

Task, as shown in figure below, and click Next.

Figure 3-10 Patch Application Task

- Select the Generate Patch Application DryRun Report Task, as shown

in the figure below, and click Next to submit the task.

Figure 3-11 General Patch Application Dry Run Report

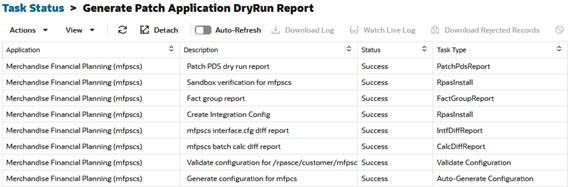

- The following sub-tasks can be seen within the Generate Patch

Application DryRun Report Task.

Figure 3-12 Task Status

Post Build Steps

This section describes the following tasks:

-

Check PDS

-

List PDS Status

-

Create Reporting Views in PDS

-

Load Dimension Data

-

Load Fact Data

-

PDS Manager

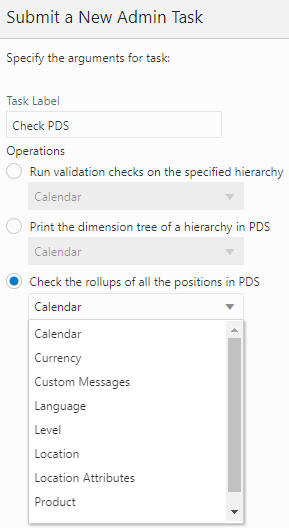

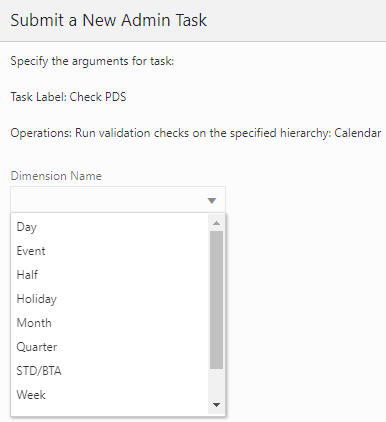

Check PDS

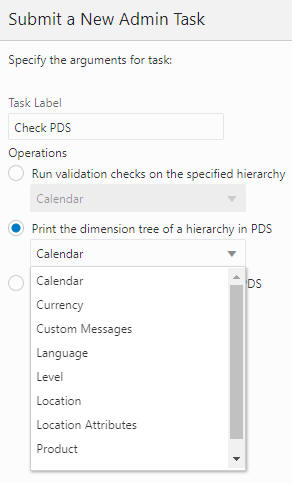

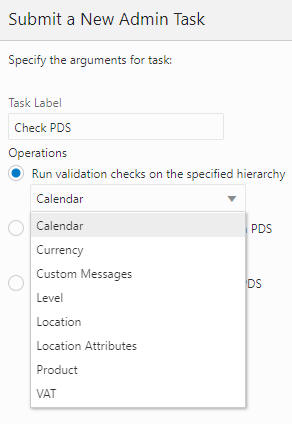

Use the Check PDS task to obtain diagnostic information from PDS. The user can select one of the following operations to perform.

Run validation checks on a specified hierarchy

The user must provide the hierarchy for the validation to be conducted. As shown in Figure 3-13 and Figure 3-14, the user can provide the dimension level to further limit the validation. If the value is left blank, the operation will perform the validation on all dimensions of the hierarchy.

Figure 3-13 Validation Checks on Specified Hierarchy

Description of "Figure 3-13 Validation Checks on Specified Hierarchy"

Figure 3-14 Calendar Arguments for Specified Hierarchy

Description of "Figure 3-14 Calendar Arguments for Specified Hierarchy"

Print the dimension tree of a hierarchy in PDS

This operation prints the dimension tree using the PDS hierarchy information.The user is required to select the hierarchy to be printed, as shown in Figure 3-15.

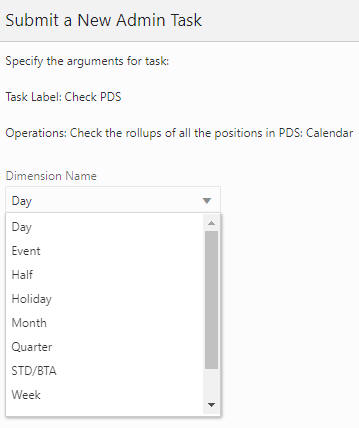

Check the rollups of all the positions in PDS

This operation is used to detect rollup corruption at dimension/level. As shown in Figure 3-16 and Figure 3-17, the user must first provide the hierarchy name and select the dimension for the validation. If the Check All option is enabled, the operation will perform the same rollup validation for all the dimensions that are rollups from the selected dimension. For example, if Day is selected and Check All is enabled, then, in addition to the Day dimension, Month, Quarter, Half, and so on, will also be validated.

List PDS Status

The List PDS Status task, shown in Figure 3-18, does not have any additional parameters. It provides the current state of PDS regarding operation and application consistency.

It has following status codes:

-

1001 - Hierarchy load in one of the applications is in progress.

-

1002 - PDS dimension loading is in progress.

-

1004 - PDS re-partition is in progress.

-

1005 - PDS fact data loading is in progress.

-

1006 - Data is being transfer from one of the applications to PDS. (Obsolete)

-

1007 - Translation loading is in progress.

-

1008 - One of the application registrations is in progress.

-

1009 - One of the application un-registrations is in progress.

-

2001 - There are mismatch positions between one of the applications and the PDS.

-

2002 - PDS repartition is required.

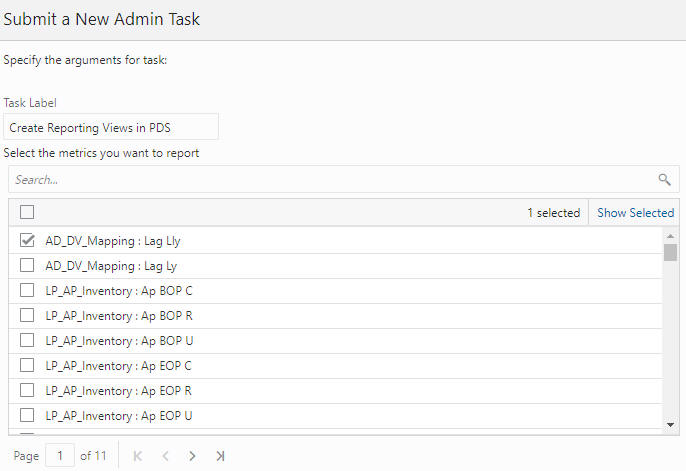

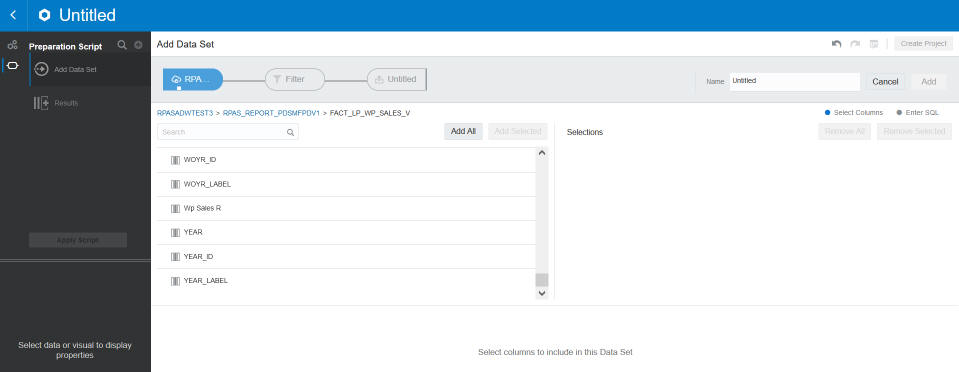

Create Reporting Views in PDS

This operation is used to create database views for facts that can be selected. The views are used by Oracle Analytic Server (OAS) for data visualization/reporting. The facts, as shown in the OAT task Figure 3-19, are configured with Report Categories. (See Oracle Retail Predictive Application Server Cloud Edition Configuration Tools User Guide for details.) Note that the facts in the drop-down list are shown by their labels, which are created at configuration time. Navigation through various metrics is controlled using the Page numbering or Page Navigation options that are available in the bottom left corner of the screen.

There are two types of fact views that can be created using this OAT task.

The first type is for regular facts such as sales or inventory facts. These views have the naming convention fact_<reportCategory>_v. They contain the dimension levels from the facts’ base intersection and all rollup levels.

The second type is for attribute measures/facts. They have the naming convention of attr_<reportCategory>_v. In RPASCE, the attribute measures/facts can be one-dimensional or two-dimensional. Unlike the views for regular facts, the attribute views only contain the base dimension level of the attribute measures. In OAS, the attribute views are meant to be joined with regular fact views so that the report designers can do grouping/aggregation by various attributes (for example, product size, color).

The same fact labels are used as column names in the OAS UI fact views, as shown in Figure 3-20. The fact labels are used as column names in OAS UI with the following restrictions:

-

If the label length is greater than 128 bytes, it will be truncated to 128 bytes (because of column length limit in Oracle DB).

-

Double quotes (") will be replaced with a white space, as they are not allowed in column names.

-

Duplicate labels are not allowed within a fact view. When a duplicate is encountered, it is truncated to 125 bytes, then a unique sequence number (up to three digits) is appended, in order to make the column name unique.

Note:

The columns of the attribute view tables are named after the attribute labels for which those columns contain data. However, the columns names must be altered to the following extent: Oracle does not allow a column name that is identical to a keyword in SQL. As an example, the attribute label Size cannot be used as a column name because Size is an SQL keyword. In cases where an attribute label happens to be identical to a SQL keyword, the underscore character '_' will be added to the end of the column name. For our example attribute Size, the column users of OAS will instead see SIZE_.

Due to the way OAS generates SQL queries on the Oracle Database, in order to pull data for reports, the column names will all be forced to upper case. While this is an inconvenience for users of OAS, it avoids a issue in which the use of mixed case can result in OAS generating bad queries that cause errors when attempting to pull data from the Planning Data Store. For exampl,e, the attribute name Color will appear as a column in OAS as COLOR.

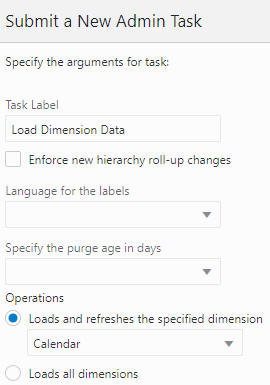

Load Dimension Data

After the PDS is built, the user must load the dimension data and/or fact data to make PDS fully operational. Dimension data can be loaded from flat files.

Loading Dimension Data

Prepare the flat files and place them in the Object Storage under "<SubNamespace>/planning/incoming/input. See Oracle Retail Predictive Application Server Cloud Service Implementation Guide for details regarding uploading and downloading batch files to the Object Storage.

Load Dimension Data, shown in Figure 3-21, is used to load hierarchy information into the PDS from one or more flat files.

Options

-

Language for the labels. Sets the language for the labels provided in the flat file. The default is English.

-

Enforce new hierarchy roll-up changes. When an incoming flat file contains only partial hierarchy data, it is possible that the data has different rollup positions than the records in PDS. Use this option to enforce the rollup from the flat file and apply it to all instances in PDS. If this option is not checked, the task will fail if it detects different rollups in the incoming file and in the records in PDS.

-

Specify the purge age in days. Use to auto purge records that have not been seen from the load file by the number of days specified in this field. If a value is not specified, the purge age defined by the configuration will be used.

-

Operations. Choose between loading a particular dimension (the dimension file must exist) and loading all dimensions. This allows the system to load the dimensions if the corresponded data files have been uploaded.

Note:

When the Load Dimension Data Task is called in dimension/hierarchy load mode, it will look for unarchived data files with the following names in the following order: <dim>.csv.dat.*, <dim>.hdr.csv.dat.*. But if the ALL dimensions option is selected, it will look for an archive with name planning/incoming/input/dimdata.zip from the Object Storage. If it exists, it will be extracted and all files that match the mentioned pattern will be loaded to the designated dimension.

Note:

Virtual dimension/hierarchy cannot be explicitly loaded using the Load Dimension Data task.

Note:

Position names are case insensitive. For example, SKU’s AB1234GH and ab1234gh are considered as the same SKU.

Note:

Records can be rejected from the data source file for various reasons. Some are just warnings, such as invalid position name, missing fields, duplicate records, and so on, and will not stop the loading process. However, if the records are causing rollup conflicts, then the process will stop and the problem records will be reported as rejected records. These rejected records will be available for download on the OAT dashboard. See Appendix A “Troubleshooting”.

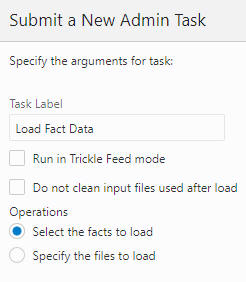

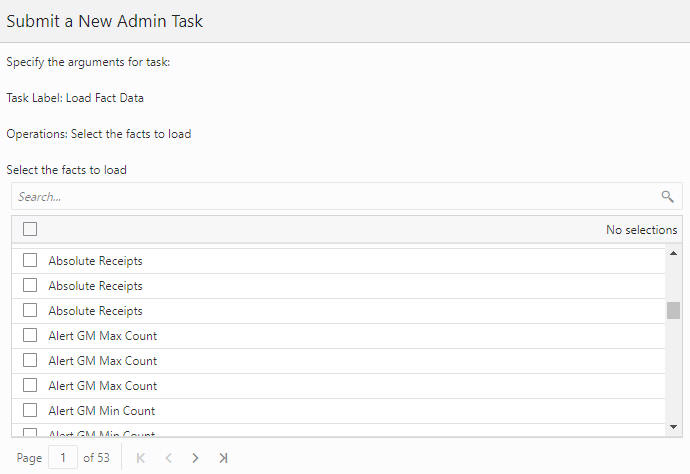

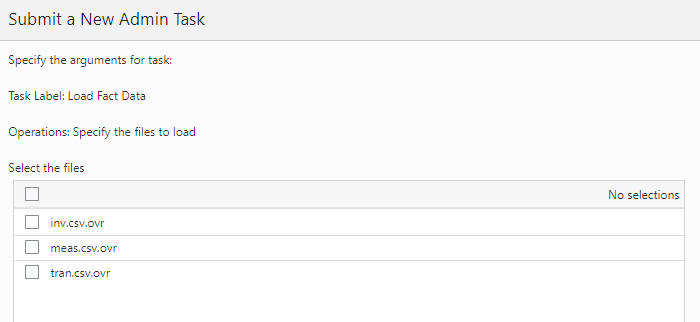

Load Fact Data

Use this task, shown in Figure 3-22, to load measure data into the PDS from flat files. Once present in the PDS, this data is available to any application that shares that measure throughout PDS. Prepare the flat files and place them in the Object Storage under "<SubNamespace>/planning/incoming/input. See Oracle Retail Predictive Application Server Cloud Service Implementation Guide for details regarding uploading and downloading batch files to the Object Storage.

The format of the data file must be CSV with a header line.

Multiple facts in the same fact base intersection, regardless of whether they are shared or non-shared measures, can exist in one data file.

The header line must contain the names of the fact base dimension, and the names of facts. The dimension names are listed in the order of their hierarchies. The non-shared measures must have their names prefixed with the fact prefix override.

sku,str,ut_dh10wd22n,ut_dh10wd22l,ut_dh10wd21n,ut_dh10wd21l,ut_dh10wd31n,ut_dh

10wd31l

sku_22200001,str1000,A01_B01,A01_B01,SK_ST1000,SK_ST1000,WORLD,WORLD

sku_22200001,str0959,A03_B03,A03_B03,SK_ST0959,SK_ST0959,INDIA,INDIA

sku_22200003,str1000,A01_B01,A01_B01,SK_ST1000,SK_ST1000,WORLD,WORLDAdministrators must pay close attention to the extension of the input file. RPASCE supports the following types of loads (identified by file name extension):

-

.ovr (Overlay): Existing values in the measure are overlaid with the values in the input file. Any values not included in the input file are not changed.

Note:

For string type measures, an empty cell in the .ovr file is treated as a valid string; as a result, the Load Fact Data task overwrites the previously loaded string with an empty string. For other measure types, an empty cell in the .ovr file is treated as invalid data. It is discarded and the previously loaded value is retained.

-

.rpl (Replace): The existing data for the facts specified in the data file is cleared, and the values in the input file are taken as the new values for the facts. Existing values for cells that do not exist in the load file are switched to NA. In other words, all data at the base intersection for the facts are removed before cells are populated with the data from the incoming file.

-

.inc (Increment): Increment mode must only be used with numeric measures/facts in which the load file contains incremental values. Therefore, if a cell had a value of 2 and the .inc file provided a value of 3 for the cell, then the new value for the cell is 5 (2 incremented with 3).

The Load Fact Data task allows multiple facts to be loaded from a single file. The multiple facts must have the same base intersection. If the data file contains multiple facts and the option Specify the files to load, all facts (specified in the header line) within the selected data file will be loaded. If a fact is in the header line but does not exist in the application, the corresponding column will be ignored and other facts will be loaded.

If this task skips records for any reason, a warning will be printed out to the log file that can be obtained from the OAT dashboard. The maximum number of skipped records that can be output to the log is 1000. After that, any further reject records will be skipped silently.

Options

-

Run in Trickle Feed mode. When this mode is enabled, loadFactData will use a transactional type of query for inserting and committing. Data will either be all loaded to the PDS or not loaded at all; a partial load is not permitted. This is intended for small data feeds only.

-

Do not clean input files used after the load. By default, data files are removed from the source location. Enable this option to have the file(s) remain at the original location after the load.

-

Operations

-

Select the facts. Select the list of facts to load.

-

Specify the files to load. Select which file to load.

-

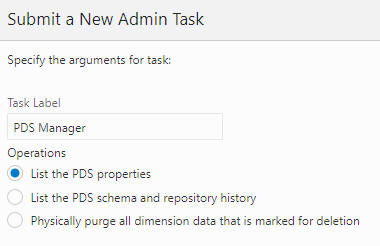

PDS Manager

Use PDS Manager, shown in Figure 3-25 to perform various PDS operations.

-

List the PDS properties. Print the list of properties for PDS.

-

List the PDS schema and repository history. Print the history and the schema.

-

Physically purge all dimension data. Purge all deleted dimension data and associated fact data from the PDS.

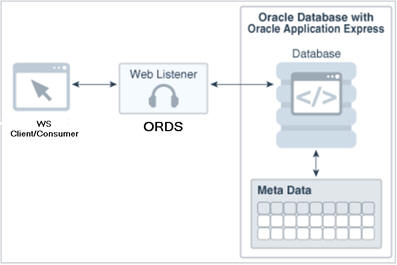

Planning Data Schema Default Web Services (through ORDS)

PDS is a common database platform shared by multiple applications. PDS is built with pre-created web services for measure metadata and measure data. The default web services are automatically available after PDS is built. Web services map to the PDS read-only schema in the database and uses Oracle Restful Services.

Apex User Creation

The types of users in Apex are:

-

Workspace Admin - Responsible for creating workspaces and additional users. This access is only provided to the Oracle/AMS team.

-

RPAS_READ_ONLY_WORKSPACE - Provides viewer access to the workspaces. This user cannot create additional users. These users can access the default web services. If the customer requires a user to be a rest API developer who can log into and build restful services on the PDS read-only schema, the customer must send email to the Oracle/AMS team.

The Oracle/AMS team shares the base url for the web service during provisioning to the customer. This can be accessed by a user in the OCI IAM with the following group association: RPAS_ORDS_GROUP (for example, https://---wts.oracleindustry.com:443/ords).

Any users who are not part of the above group will not be able to access the web service. For more fine-grained control, it is possible to create multiple groups and map groups to specific rest urls.

Note:

In order to control access to the restful services to specific groups in OCI IAM, contact your Oracle/AMS Workspace Admin.

Default Web Services

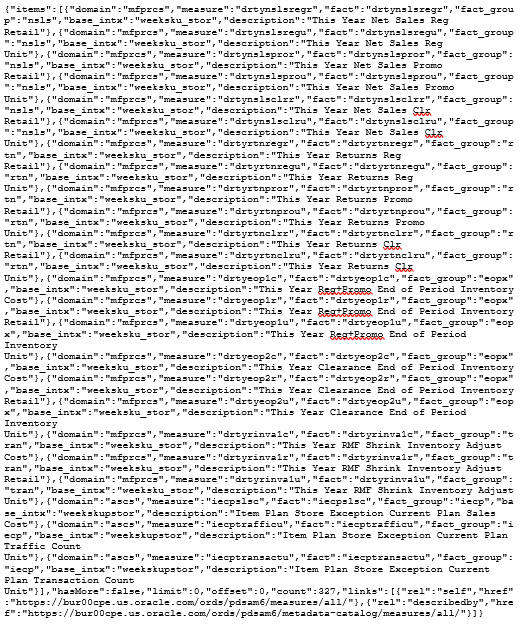

The default web services includes the following data:

-

The metadata web service exposes the following metadata for all measures in PDS: application, measure, (mapped) fact, base intersection, and description.

-

The web services for fact data provide one web service for each fact in PDS. The fact data is retrieved at the fact's base intersection with position names.

Figure 3-26 shows a high level architectural diagram of PDS (Oracle Database) with ORDS. Web services are accessible from any web service client (a web browser is an example of web client) through an ORDS Listener, which is deployed on a Tomcat server. Within the database, an ORDS enabled read-only schema provides access to the necessary database objects.

Web Services URL

All web services are available under the base path: <ords_path>/<schema_alias>, where <ords_path> is determined by the ORDS installation/configuration. In production, this value is https://<cust>-<env>-<app>-wts.oracleindustry.com:443/ords and <schema_alias> is pds.

Get Services

Table 3-1 describes the Web Services URL.

Table 3-1 Web Services URL

| Sl Number | Web Service | Example URL | Description |

|---|---|---|---|

|

1 |

Metadata |

https://<cust>-<env>-<app>-wts.oracleindustry.com:443/ords/pds/measures/all/ |

Web service exposes the following metadata for all measures in PDS: application, measure, (mapped) fact, base intersection, and description. |

|

2 |

Measure |

https://<cust>-<env>-<app>-wts.oracleindustry.com:443/ords/pds/measuredata/<fact_ name>/ |

One web service for each measure in PDS. Measure data is retrieved at the measure's base intersection with position names. |

Example Output:

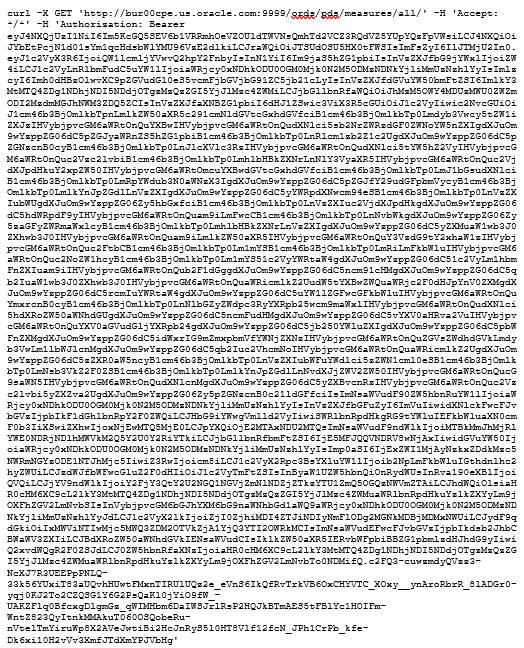

URL for metadata:

https://cust1-prod3-rpasee-wts.oracleindustry.com:443/ords/pds/measures/all

URL for fact data:

https://cust1-prod3-rpasee-wts.oracleindustry.com:443/ords/pds/measuredata/drtynslsregr/

Filtering

Filtering in the web services is supported through ORDS. To add a filter to a web service, append the parameter q=FilterObject, where FilterObject is a JSON object that represents the custom selection and sorting.

For the FilterObject syntax in ORDS version 19.1, see:

Table 3-2 provides examples of the FilterObject.

Table 3-2 Example URLs

| Description | Web Service URL |

|---|---|

|

To select measure metadata for mfp or rdf deployment only. |

https://<cust>-<env>-<app>-wts.oracleindustry.com:443/ords/pds/measures/all/?q={"deploy_id":{"$or": [{"$eq":"ascs"},{"$eq":"rdf"}]}} |

|

To select metadata for deploymnet ID in ("rdf", "mfprcs") and measure name in ("drtyeop1r", "rsal" |

https://<cust>-<env>-<app>-wts.oracleindustry.com:443/ords/pds/measures/all/?q={ "deploy_id": {"$or": [{"$eq": "rdf"},{"$eq": "mfprcs"}]}, "measure": {"$or": [{"$eq":"drtyeop1r"}, {"$eq":"rsal"}]} } |

|

To retrieve measure data for a given week and sku. |

https://<cust>-<env>-<app>-wts.oracleindustry.com:443/ords/pds/measuredata/drtyeop1r/?q={"week":"w01_2017","sku":"1000466"} |

Pagination

Data is returned in pages, with a page size of 100. This means that each web service call returns 100 rows. To retrieve additional data, append ? offset parameter.

For example, the url for the first page (first 100 rows) of rsal data is

https://cust1-prod3-rpasee-wts.oracleindustry.com:443/ords/pds/measuredata/rsal

and the url for the second page is

https://cust1-prod3-rpasee-wts.oracleindustry.com:443/ords/pds/measuredata/rsal/?offset=100

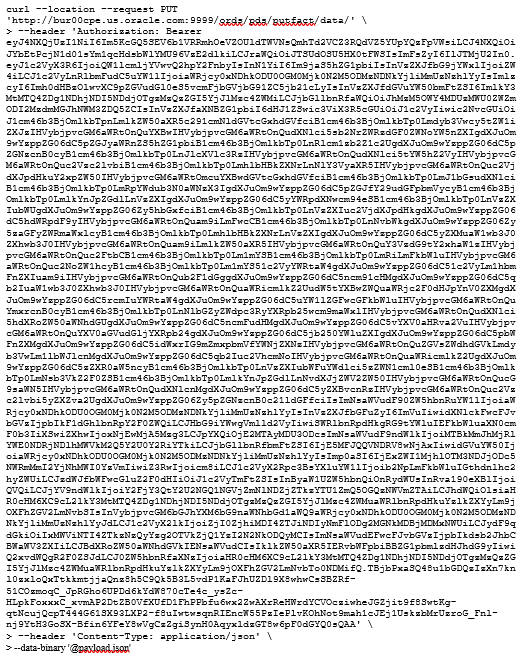

PUT Services

RPASCE also offers the PUT (write) web service that allows customers to write/update source facts in PDS. Source facts are the facts that are usually loaded (not calculated) whose mapping measures are flagged in the application configuration.

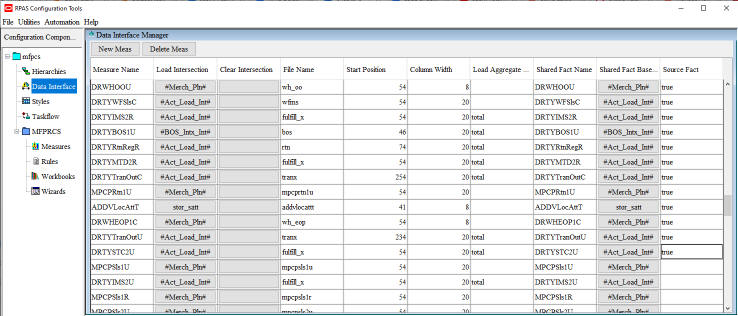

See the last column on the right in Figure 3-27 for source facts.

Example URL for PUT service below.

https://<cust>-<env>-<app>-wts.oracleindustry.com:443/ords/pds/putfact/data/

The payload of the web service will be in a JSON format that can support input data for a fact at any intersection.

Here is a sample JSON file.

{

"fact_names": ["DRTYEOP1C"]

"intersection": ["week","stor","sku"]

"data_rows": [

["w01_2017","900002","1005",1],

["w01_2017","900002","1008",1]

]

}OCI IAM Authorized Access to PDS Web Services in Apex/ORDS

This section provides details about using the REST API call to Oracle Identity Cloud Service to interact with APEX/ORDS to access planning data schema web services. The example shows how the REST API calls are typically executed in Oracle Identity Cloud Service.

The REST APIs provide a way to integrate Oracle Identity Cloud Service with custom applications and clients that support REST, such as Java, C#, Go, Python, or Ruby apps.

Requirements

This tutorial uses Oracle Linux 7.2. It can be executed on other operating systems with a bash terminal (such as Red Hat, Ubuntu, or OSX), or in Windows (using a bash emulator such as git bash). The commands may have small variations on different operating systems. It is recommended that you are familiar with the REST architecture style.

-

Access to Oracle Identity Cloud Service with authorization to manage Applications (Identity Domain Administrator, Security Administrator, or Application Administrator).

-

A Linux machine with cURL utility available.

-

Credentials - Contact an Oracle cloud administrators if you do not have access to the following credentials.

-

IDCS-client-id

-

IDCS-client-secret

-

IDCS-tenant-id

-

IDCS_URL

-

Userid

-

Password

-

Get Bearer Token

The access_token (Bearer) identifies your client access in Oracle Identity Cloud Service and will be used for subsequent REST API calls. This token is encoded following the JSON Web Token (JWT) standard. Replace the following credentials with the correct values for your environment.

curl -i -u "<IDCS-client-id>":"<IDCS-client-secret>" \

https://<idcs-tenant-id>.<IDCS_URL> \

-H 'Content-Type: application/x-www-form-urlencoded' \

-H 'Host: <idcs-tenant-id>.<IDCS_URL>' \

-d 'grant_type=client_credentials' -d 'scope=urn:opc:idm:__myscopes__'

Example Bearer Token Rest Call

curl -i -u "a3399f80531e4eff82637f0ba5c7d49d":"d3e376c2-d5fb-4d3b-b2d0-3bd52df6f5eb" \ https://idcs-148d8548c42947c983343db9b2e378ec.identity.c9dev2.oc9qadev.com/oauth2/v1/token \ -H 'Accept: application/json' \ -H 'Accept-Language: en' \ -H 'Accept-Version: 1.3.0' \ -H 'Content-Type: application/x-www-form-urlencoded' \ -H 'Host: idcs-148d8548c42947c983343db9b2e378ec.identity.c9dev2.oc9qadev.com' \ -d 'username=oci.admin&password=OracleRetail1&grant_type=password&scope=urn%3Aopc%3Aidm%3A__myscopes__%20offline_access' --tlsv1.2

You will see output that contains the following:

-

The Access Token request output in JSON format. The return contains the attributes access_token, token_type, and expires_in.

-

The access_token(Bearer) identifies your client access in Oracle Identity Cloud Service and will be used for subsequent REST API calls. This token is encoded following the JSON Web Token (JWT) standard.

To check the JWT token, you can copy the access_token and verify its value using: https://jwt.io/#debugger-io

-

The token_type identifies the Access Token as a Bearer token type. In future requests, you will use this token type to identify your token in the Authorization header of your request.

-

The expires_in identifies the validity period of the Access Token.

Caution:

These bearer tokens may expire and may have to be regenerated in case a 401 Authorization Required error occurs.

Token

HTTP/1.1 200 OK

Cache-Control: no-store

Content-Length: 4667

Content-Type: application/json;charset=UTF-8

Date: Fri, 08 Jan 2021 20:46:40 GMT

Pragma: no-cache

Server: Oracle Identity Cloud Service

Strict-Transport-Security: max-age=315360000; includeSubDomains

Vary: Accept-Encoding

Via: 1.1 net-idcs-config

X-Content-Type-Options: nosniff

X-Frame-Options: deny

X-Oracle-Dms-Ecid: Roj^J0TA200000000

X-Oracle-Dms-Rid: 0:1

X-Xss-Protection: 1; mode=block

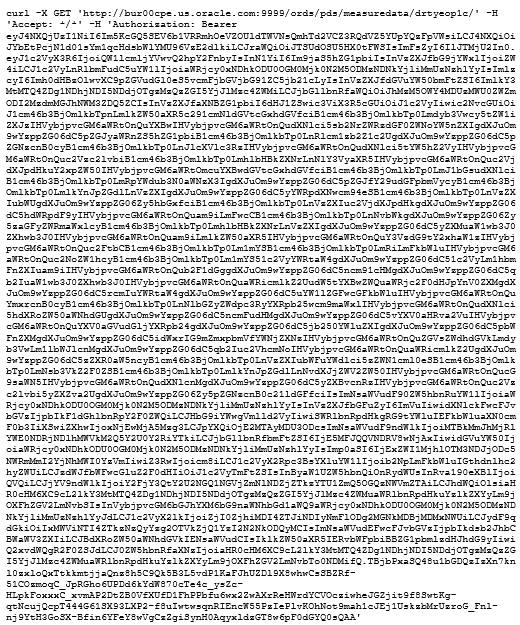

Perform the ORDS Rest API Calls

In this task, you perform your REST API call to Oracle Identity Cloud Service. The objective is to show you how the REST API calls are typically executed in Oracle Identity Cloud Service.To learn more about the application API, refer to the Oracle Identity Cloud Service REST API documentation.

In this example, it is assumed that a publicly accessible ORDS url has been provided to the customer.

Example: http://<hostname>:<port>/ords

RestAPI example url: http://<hostname>:<port>/ords/pds

Replace the bearer token with the value received from Get Bearer TokenNote that these bearer tokens may expire and may have to be regenerated if a "401 Authorization Required" error occurs.

Write PDS Web Service for Facts

Here is a sample payload.json file with the configuration values.

{"rejected_record_threshold_percentage":40, "fact_names": ["drtyeop1c","drtyeop1r","drtyicmkur"], "intersection": ["week","sku","stor"], "data_rows": [["20200208","67150969","1002",1,100,200], ["20200215","67150969","1007",2,400,800] ] }

Invoke the Web Service

Replace the bearer token with the value received inGet Bearer Token

Note that these bearer tokens may expire and may have to be regenerated if a "401 Authorization Required" error occurs.

Write PDS Web Service

PDS web service.

Invoke Read PDS Web Service - Retrieve Data from a Fact

Replace the bearer token with the value received in Get Bearer Token

Note that these bearer tokens may expire and may have to be regenerated if a "401 Authorization Required" error occurs.

Sample Output

PDS Concurrency

PDS Concurrency is a locking mechanism for task exclusivity. In older versions, many tasks were configured to run in offline/exclusive mode. This means, when these tasks were in progress, other submitted/scheduled tasks remained in pending/scheduled state, irrespective of the application. The user access to application was also limited. The PDS concurrency mechanism uses locks in an Oracle database. Tasks acquire read and write locks only when required. Tasks release the locks on exit. This helps to attain high concurrency.

If there are no active locks, a task is granted a lock immediately and can begin its work. If only read locks are active, the task is granted a read lock immediately, but must wait for the write lock. The write lock is granted only once, all previous locks are completed.

| Operation | PDS Property | Default Timeout | |

|---|---|---|---|

| 1 | Wizard | timeout_wb_op | 4 minutes |

| 2 | Workbook build through UI | timeout_wb_main | 1 hour |

| 3 | Workbook build through OAT (wbbatch) | timeout_misc | 12 hours |

| 4 | Workbook refresh through UI | timeout_wb_op | 4 minutes |

| 5 | Workbook refresh through OAT (wbbatch) | timeout_misc | 12 hours |

| 6 | DPM through UI | timeout_wb_op | 4 minutes |

| 7 | DPM through webservice | timeout_misc | 12 hours |

| 8 | Commit |

commit_asap_deadline |

Until deadline, which is 00:01 GMT by default. |

| 9 | Dashboard workbook build through OAT (scheduleDashboard) | timeout_misc | 12 hours |

| 10 | Load Dimension Data & Load Fact Data (OAT or as part of batch) | timeout_misc | 12 hours |

| 11 | System workbook commit | timeout_batch | 24 hours |

| 12 | System workbook build | timeout_batch | 24 hours |

| 13 | Batch Calc | timeout_batch | 24 hours |

The following table explains the lock behavior when two tasks are run in parallel. If there is lock contention, a message "in request_locks: waiting to acquire lock, sleeping 10s" will be displayed in the logs.

| Task 1 | Task 2 | Lock Contention | |

|---|---|---|---|

| 1 | Load Dimension Data through OAT to load CLND hierarchy in MFP. | Load Dimension Data through EE Batch to load PROD hierarchy in MFP. | No |

| 2 | Load Dimension Data through EE Batch to load CLND hierarchy in MFP. | Load Dimension Data through EE Batch to load PROD hierarchy in MFP. | No |

| 3 | Load Dimension Data through OAT to load CLND hierarchy in MFP. | Load Dimension Data through OAT to load PROD hierarchy in MFP. | No |

| 4 | Load Dimension Data through OAT to load CLND hierarchy in MFP. | Load Dimension Data through OAT to load CLND hierarchy in AP. | Yes |

| 5 | Load Dimension Data through OAT to load CLND hierarchy in MFP. | Load Fact Data, whose intersection includes CLND, through EE Batch in MFP. | Yes |

| 6 | Load Dimension Data through OAT to load CLND hierarchy in MFP. | Load Fact Data, whose intersection includes CLND, through EE Batch in AP. | Yes |

| 7 | Load Fact Data through OAT to load measures in MFP. | Load Fact Data through EE Batch to load different measures in MFP. | No |

| 8 | Load Fact Data through EE Batch to load measures in MFP. | Load Fact Data through EE Batch to load different measures in MFP | No |

| 9 | Load Fact Data through OAT to load measures in MFP. |

Load Fact Data through OAT to load different measures in MFP. |

No |

| 10 | Load Fact Data through OAT to load measures in MFP | Load Fact Data through OAT to load same measures in AP. | Yes |

| 11 | Load Dimension Data through OAT to load CLND hierarchy in MFP. |

User goes through CLND wizard in MFP. |

Yes |

| 12 | Load Dimension Data through OAT to load CLND hierarchy in AP. | User goes through CLND wizard in MFP. | Yes |

| 13 | Load Dimension Data through OAT to load CLND hierarchy. | User goes through prod wizard. | No |

| 14 | Load Fact Data through OAT to load mask measure. | User goes through wizard that has pre range mask measure. | Yes |

| 15 | Run batch that loads the mask measure. | User goes through wizard that has pre range mask measure. | Yes |

| 16 | Load Dimension Data through OAT to load CLND hierarchy. | Workbook build that goes through CLND wizard. | Yes |

| 17 | Load Dimension Data through OAT to load CLND hierarchy. | Open existing workbook from recent plans. | No |

| 18 | Load Dimension Data through OAT to load CLND hierarchy. | Perform workbook calc. | No |

| 19 | Load Dimension Data through OAT to load CLND hierarchy. | Create scenario, promote scenario, change rollup, save format, restore format, and so on. | No |

| 20 | Load Dimension Data through OAT to load DPM enabled hierarchy. | DPM via workbook – add informal positions. | Yes |

| 21 | Run custom menu in a workbook that does not do commit. | Load Fact Data through OAT to load measures present in custom menu rules. | No |

| 22 | Run custom menu in a workbook that does a commit. | Load Fact Data through OAT to load measures that are part of custom menu rules. | Yes |

| 23 | Workbook commit. | Load Fact Data through OAT to load facts that are part of commit rules. | Yes |

| 24 | Workbook commit. | Load Fact Data through OAT to load facts that are not part of commit rules. | No |

| 25 | Workbook commit. | Load Dimension Data through OAT to load CLND hierarchy. | Yes |

| 26 | Call DPM webservice that adds informal positions. | Load Dimension Data through OAT to load DPM enabled hierarchy. | Yes |

| 27 | Run batch calc group in MFP. | Load Dimension Data through OAT to load CLND hierarchy in ASCS. | Yes |

| 28 |

Run batch calc group in MFP. |

Load Fact Data to load ASCS only measures. | Yes (if there are shared measures in the batch rule group) |

| 29 |

Run batch calc group in MFP. |

Load Fact Data to load shared measures. |

Yes |

| 30 | Load Dimension Data through OAT to load CLND hierarchy. | Build system workbook. | Yes |

| 31 | Load Dimension Data through OAT to load CLND hierarchy. | Commit system workbook. | Yes |

With the introduction of PDS Concurrency, the number of tasks with an exclusive lock is reduced to three. These tasks are Build application, Patch application, and Unregister application. These tasks still require an exclusive lock because these can change fact tables that are used by all applications.

When these tasks are in progress, other tasks submitted/scheduled from all applications will remain in a pending/scheduled state. For example, when the Patch application is In Progress in AP, tasks submitted from all applications remains in a scheduled/pending state until the AP Patch application completes.

Similarly, these tasks will wait until all tasks In progress are completed. For example, when Run Batch Task Group is In Progress in MFP, the Build application in AP remains in Pending until the batch task completes.

| Scenario | Behavior | |

|---|---|---|

| 1 | Submit Run Batch Task Group task in MFP. While the task is in progress, submit Patch Application in MFP. | Prepatch Patch Report and Run Batch Task Group" executes in parallel. Patch Application remains in Pending until batch task completes. |

| 2 | Submit Run Batch Task Group task in MFP. While the task is in progress, submit "Patch Application" in AP. | Prepatch Patch Report and Run Batch Task Group executes in parallel. Patch Application remains in Pending until batch task completes. |

| 3 | Submit Run Batch Task Group task in MFP. While the task is in progress, submit Build Application in MFP. | Build Application remains in Pending until batch task completes. |

| 4 | Submit Run Batch Task Group task in MFP. While the task is in progress, submit Build Application in AP. | Build Application remains in Pending until batch task completes. |

| 5 | Submit Run Batch Task Group task in MFP. While the task is in progress, submit Unregister Application in MFP. | Unregister Application remains in Pending until batch task completes. |

| 6 | Submit Run Batch Task Group task in MFP. While the task is in progress, submit Unregister Application in AP. | Unregister Application remains in Pending until batch task completes. |

| 7 | Submit Patch Application in AP. When Patch Application is in progress, submit OAT Load Fact Data to load MFP only measures in MFP. | MFP Load Fact Data remains in pending state until AP Patch Application is complete. |

| 8 | Submit Patch Application in AP. When Patch Application is in progress, submit OAT Load Fact Data to load shared measures in MFP. | MFP Load Fact Data remains in pending state until AP Patch Application is complete. |

| 9 | Submit Patch Application task in AP. When Patch Application is in progress, submit Run Batch Calc Group in MFP. | Run Batch Calc Group from mfp remains in pending state until Patch Application is complete. |

| 10 | Submit Patch Application task in AP. When Patch Application is in progress, open any workbook in AP. | Cannot open workbook. DomainExclusiveException: Patch Application. |

| 11 | Submit Patch Application task in AP. When Patch Application is in progress, open any workbook in MFP. | Workbook open successfully. |

| 12 | Submit Patch Application task in AP. When Patch Application is in progress, perform workbook commit in MFP. | Commit submitted. Commit task added to queue. Task remains in pending state until "Patch Application" is complete. |

| 13 | Submit Patch Application task in AP. When Patch Application is in progress, perform workbook commit in AP. | Cannot submit commit. There was an error performing a commit: DomainExclusiveException: Patch Application. |

| 14 | Submit Patch Application task in AP. When Patch Application is in progress, perform workbook refresh in MFP. | Refresh completes successfully. |

| 15 | Submit Patch Application task in AP. When Patch Application is in progress, perform workbook refresh in AP. | Cannot refresh. There was an error refreshing: DomainExclusiveException: Patch Application. |

| 16 | Submit Patch Application in AP. Schedule load dimension data in MFP such that the start time is while Patch Application is in progress. | Load Dimension Data remains in Scheduled state until Patch Application is complete. |

| 17 | While Patch Application is in progress in MFP, perform the workbook operations in MFP. | Restore, Save, or Delete format, Create Scenario failed with DomainExclusiveException: Patch Application. |

| 18 | While Patch Application is in progress in MFP, perform the workbook operations in AP. | Restore, Save, or Delete format completed successfully. For adding scenario, Scenario copy task is added and remains in pending state until patch is completed. On patch completion, scenario copy task also completes successfully. |