Note:

- This tutorial requires access to Oracle Cloud. To sign up for a free account, see Get started with Oracle Cloud Infrastructure Free Tier.

- It uses example values for Oracle Cloud Infrastructure credentials, tenancy, and compartments. When completing your lab, substitute these values with ones specific to your cloud environment.

Connect Alteryx to Oracle Big Data Service Hive Using ODBC and Kerberos

Introduction

Oracle Big Data Service is a cloud-based service provided by Oracle that enables users to create and manage Hadoop clusters, Spark clusters, and other big data services. By default, an Oracle Big Data Service cluster uses simple authentication mechanisms, such as username and password, to authenticate users and services. However, this may not be sufficient for organizations that require higher levels of security.

Kerberos authentication is a widely used mechanism for securing distributed computing systems. It provides a secure way to authenticate users and services, allowing them to communicate securely over a network. In a Kerberos-enabled environment, users and services are issued with cryptographic keys that are used to verify their identity and authorize access to resources.

Kerberos authentication can provide an additional layer of security for an Oracle Big Data Service cluster by enabling strong authentication and encryption between nodes.

Objectives

Explore the process of establishing seamless connectivity between Alteryx and Oracle Big Data Service Hive within a kerberized cluster. By implementing Kerberos authentication, we can ensure a secure environment for data processing and analysis.

- Learn how to kerberize the Oracle Big Data Service cluster

- Learn how to connect Alteryx to Oracle Big Data Service Hive

Prerequisites

An Oracle Big Data Service cluster running on Oracle Cloud Infrastructure (OCI) with required services configured and ports opened.

Note: A highly available (HA) Oracle Big Data Service cluster comes with Kerberos installed. If you want to use non HA cluster you need the following list of Prerequisites.

-

A Kerberos Key Distribution Center (KDC) and Kerberos admin server. You can install and configure the KDC and admin server on a separate server or use the KDC and admin server provided by your organization.

-

The

kadminutility used to create and manage Kerberos principals and keytabs. -

The

kinitutility used to obtain a Kerberos ticket-granting ticket (TGT) for an user or service principal. -

The

klistutility used to list the tickets in an user’s or service principal’s credential cache. -

A Windows machine with Alteryx installed. For the implementation in this tutorial, we have used the trial version of Alteryx.

-

MIT Kerberos.

-

The ODBC driver installed on your machine.

-

The necessary Kerberos principals and keytabs for your Oracle Big Data Service cluster. You will find more details about it in following sections.

-

Access to the ODBC Data Source Administrator tool on your machine.

Note: In this tutorial, we are using an Oracle Big Data Service HA cluster. You should also have a basic understanding of the following concepts and terminologies:

-

Kerberos principals: An entity that is authenticated by the Kerberos system. Principals can be users or services.

-

Keytabs: A file that contains a principal’s secret key. Keytabs are used by services to authenticate to the KDC.

-

Realms: A Kerberos realm is a collection of Kerberos principals that share a common KDC database.

-

Authentication: The process of verifying the identity of an user or service principal.

-

Authorization: The process of determining what resources an user or service principal is allowed to access.

Task 1: Kerberize an Oracle Big Data Service cluster

-

Log in to the Oracle Big Data Service cluster master node either through an

SSHcommand or by utilizing putty with theppkfile using theopcuser credentials. Once logged in, elevate your permissions to therootuser. In this tutorial, we have used putty to login into the nodes. -

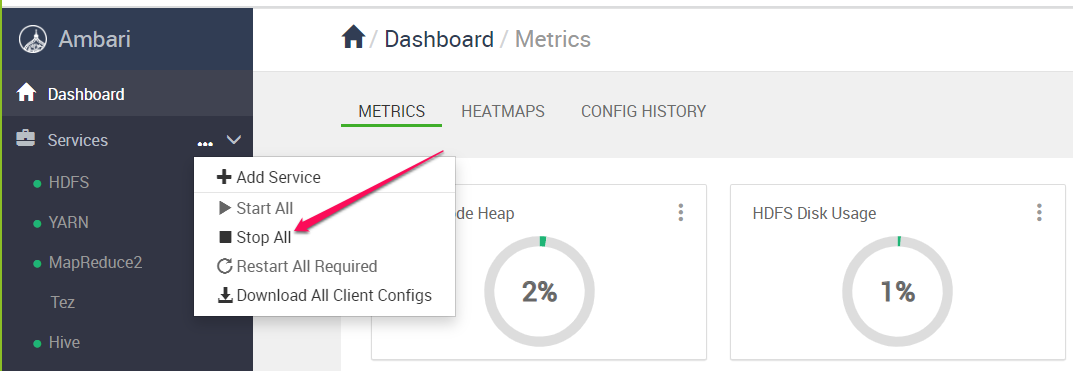

Stop all the running Hadoop and Spark services using Ambari.

-

Create the

bdsuserAdministrator Kerberos Principal by following these steps.-

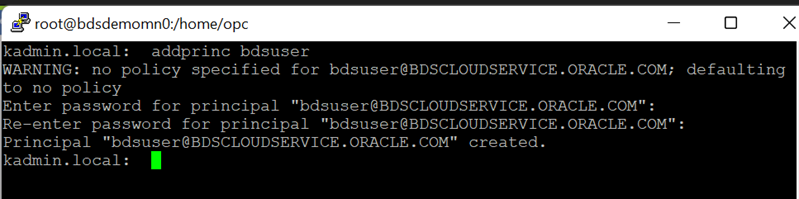

Enter

Kadminprompt using commandKadmin.local. -

At the command line prompt of

kadmin.local, execute theaddprinccommand to create a new Kerberos principal calledbdsuser. When prompted, choose a password of your preference, and confirm it.

-

Enter

exitto return to the original prompt. By default, the realm used here is selected fromkrb5.conf. However, you have the option to customize the realm by updating the configuration.

-

-

Create a group

bdsusergroupby executing commanddcli -C "groupadd bdsusergroup. -

Enter the command

dcli -C "useradd -g bdsusergroup -G hdfs,hadoop,hive bdsuser"at the prompt to create thebdsuseradministrator user and add it to the listed groups on each node in the cluster. -

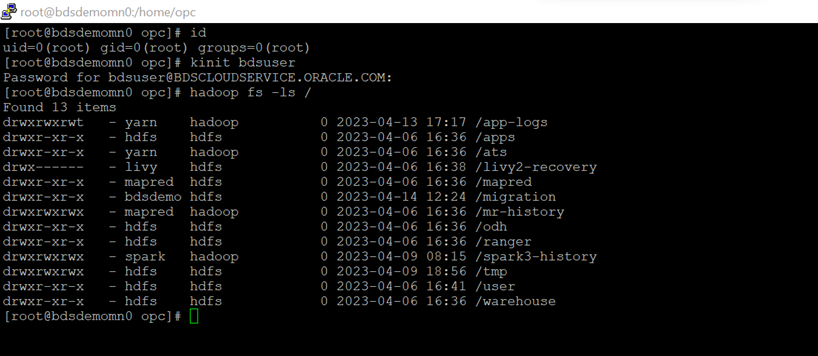

Use the linux

idcommand to confirm the creation of thebdsuserand to list its groups membership. -

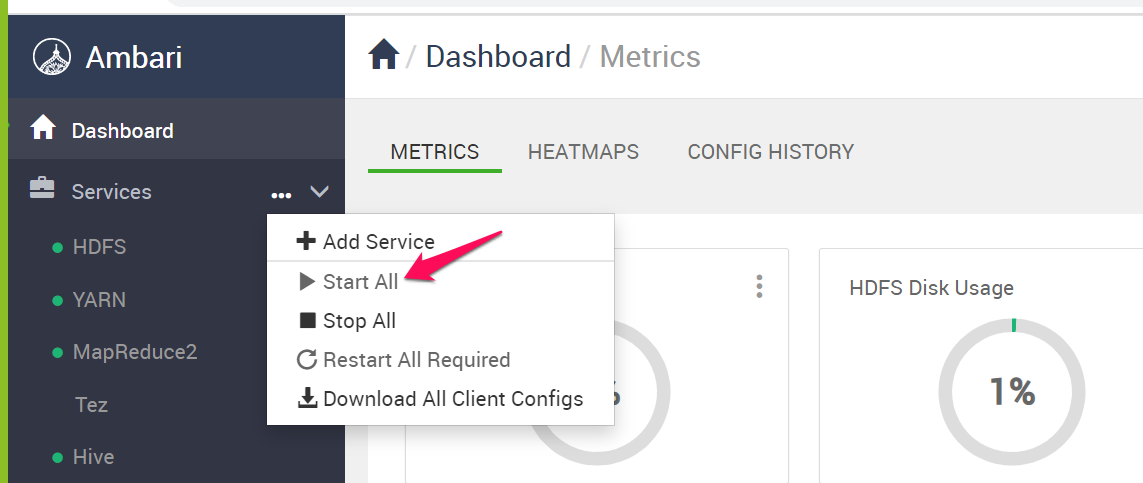

Start all the Oracle Big Data Service services using Ambari.

-

Test

bdsuserby generating Kerberos ticket using commandkinit bdsuserand list files usinghadoop fs -ls /command.

Task 2: Connect Alteryx to Oracle Big Data Service Hive

We explored the kerberization of Oracle Big Data Service cluster, and now we will dive into connecting Alteryx with the Kerberized Oracle Big Data Service Hive using the ODBC driver.

To unleash the full potential of your Oracle Big Data Service Hive data, it is crucial to establish a connection with your preferred tools. In this section, we will guide you through the process of connecting Alteryx to Oracle Big Data Service Hive. To achieve this, we will configure an ODBC connection that utilizes Kerberos authentication.

-

Configure MIT Kerberos by following these steps.

-

Copy

krb5.confandkrb5.keytabfile from cluster master node toC:\Program Files\MIT\Kerberos. -

Change the file extension from

.confto.inifor Windows compatibility. -

Create a new folder

C:/temp(Folder and path can be of your choice). -

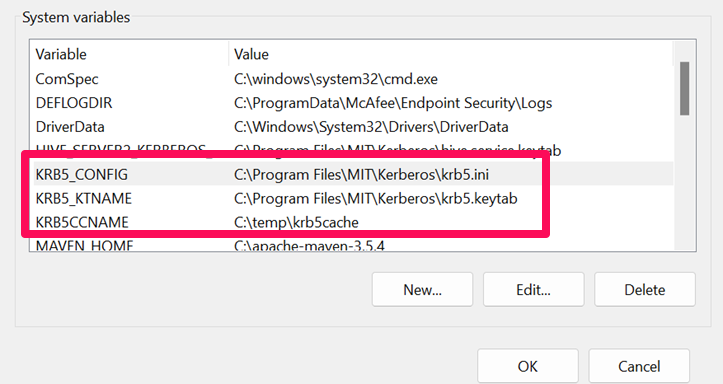

Setup following Environment variable.

-

Run command

Kinit bdsuserfrom command prompt to verify the Kerberos setup and generate Ticket.

-

-

Install the ODBC driver on your Windows machine. Follow the prompts to install the driver.

-

Configure the ODBC driver by following these steps.

-

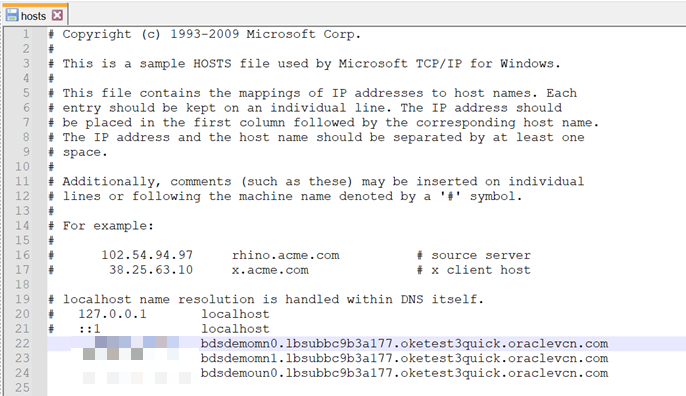

Make DNS and IP entries in hosts file

C:\Windows\System32\drivers\etcfor master and utility node.

-

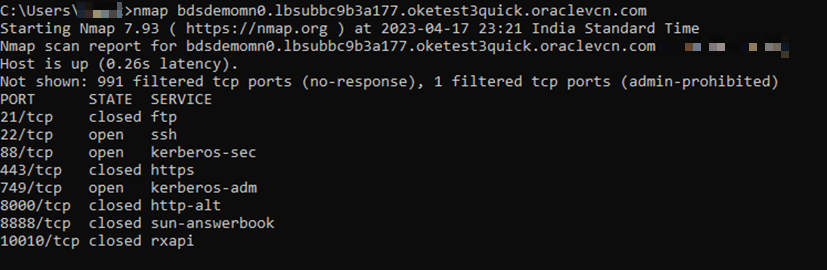

Test the hosts file entries using the

pingcommand.

-

-

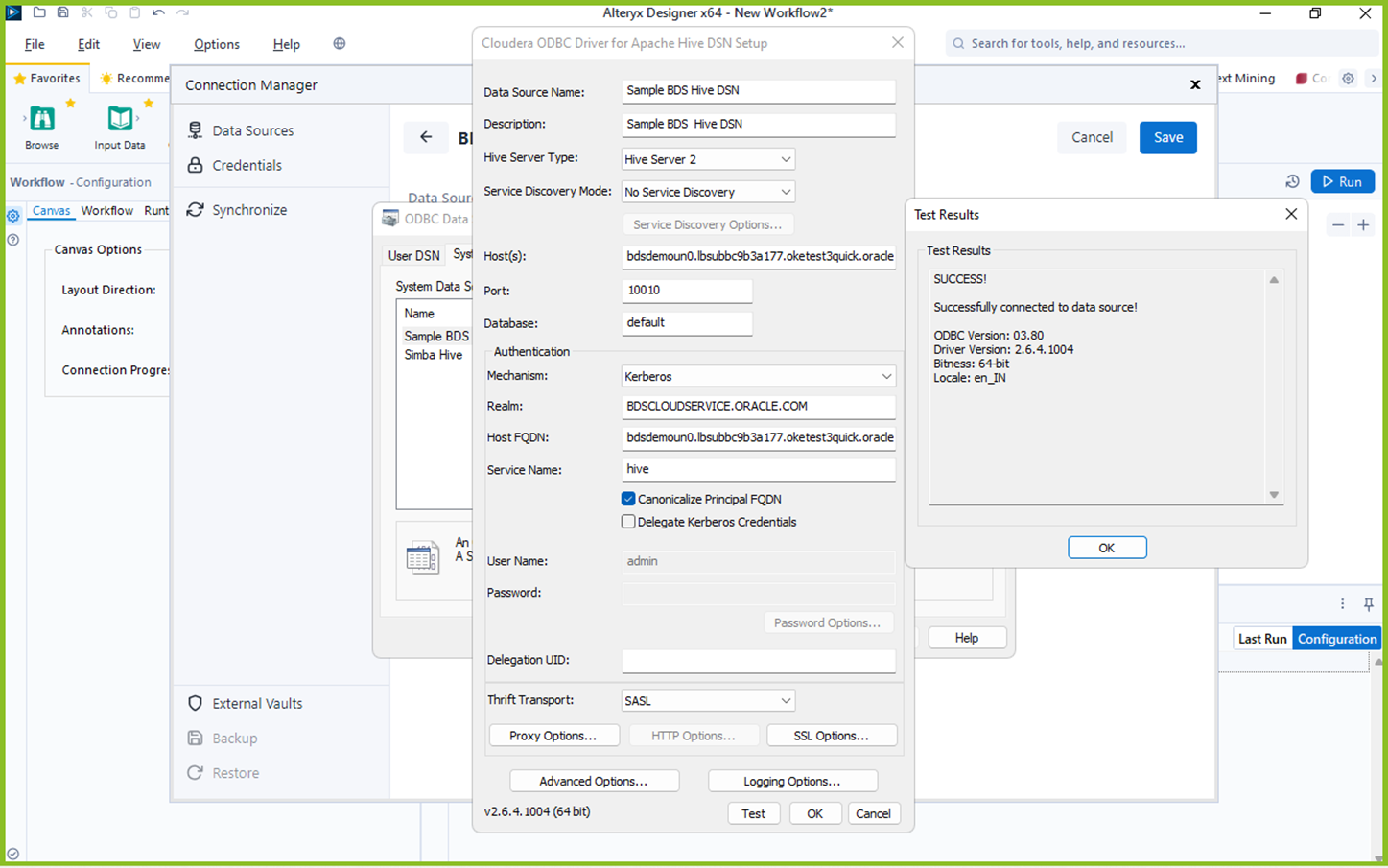

Open the ODBC Data Source Administrator tool on your machine and follow these steps.

-

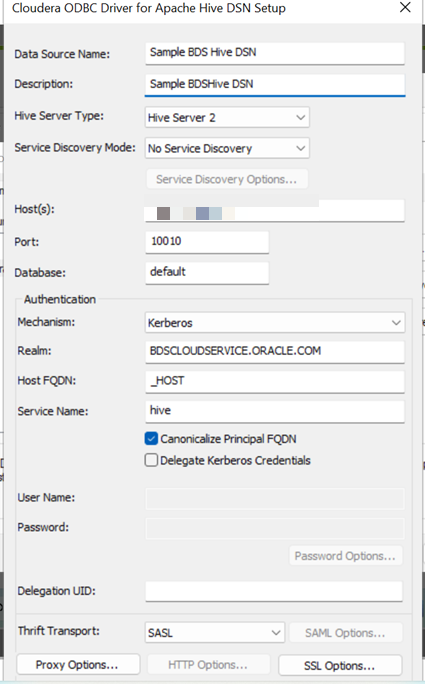

Choose System DSN tab. Click Add and Choose Cloudera ODBC driver for Apache Hive and then click Finish.

-

Follow the screenshot below for the Hive connection configuration.

-

Click Test to check connectivity. If everything is set up correctly, you should see a message indicating that the test succeeded. Click OK to save the setting.

-

-

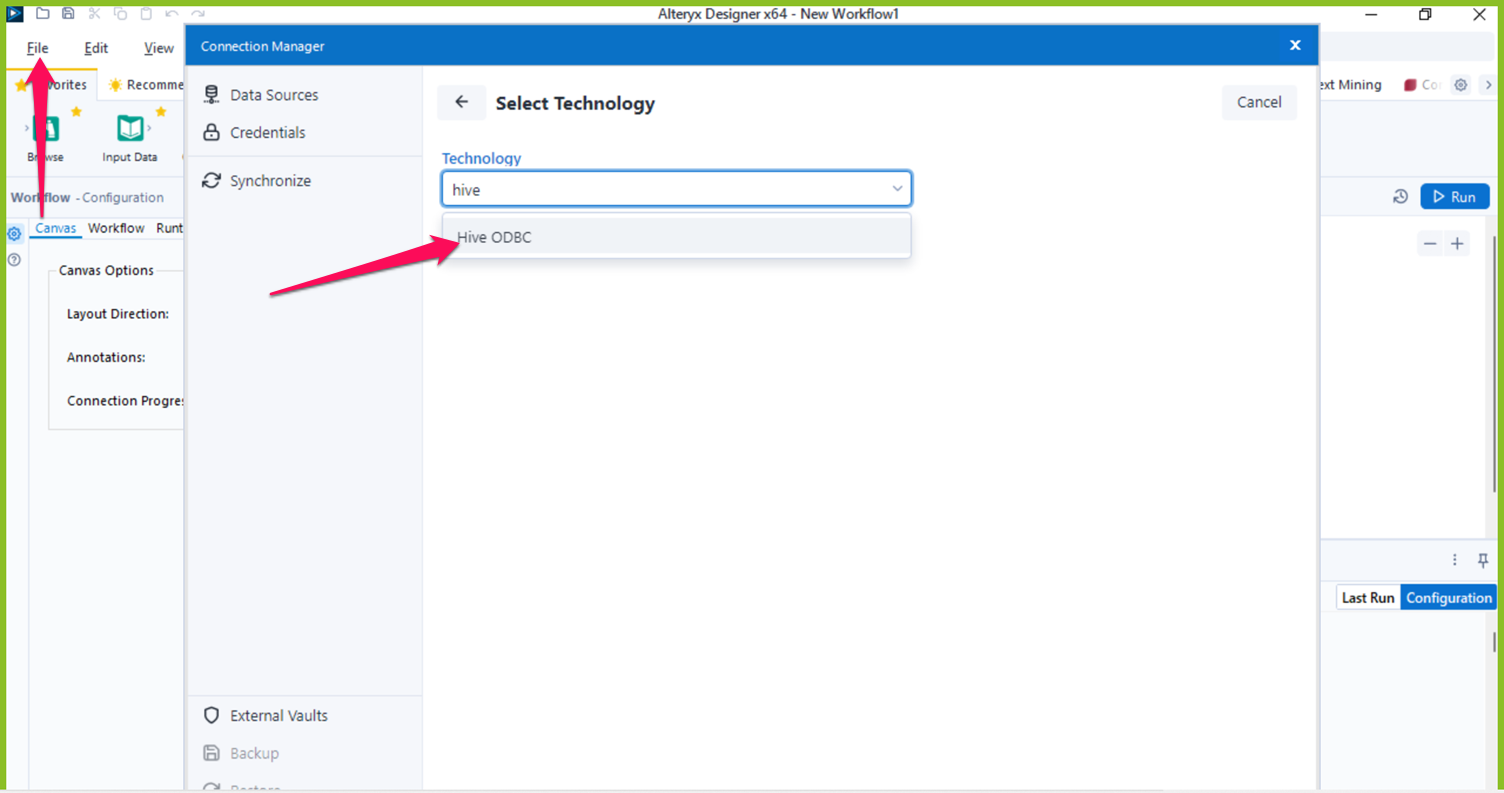

Open Alteryx and follow these steps.

-

Go to File, select Manage Connection and click Add datasource. Choose Hive from the available technology options.

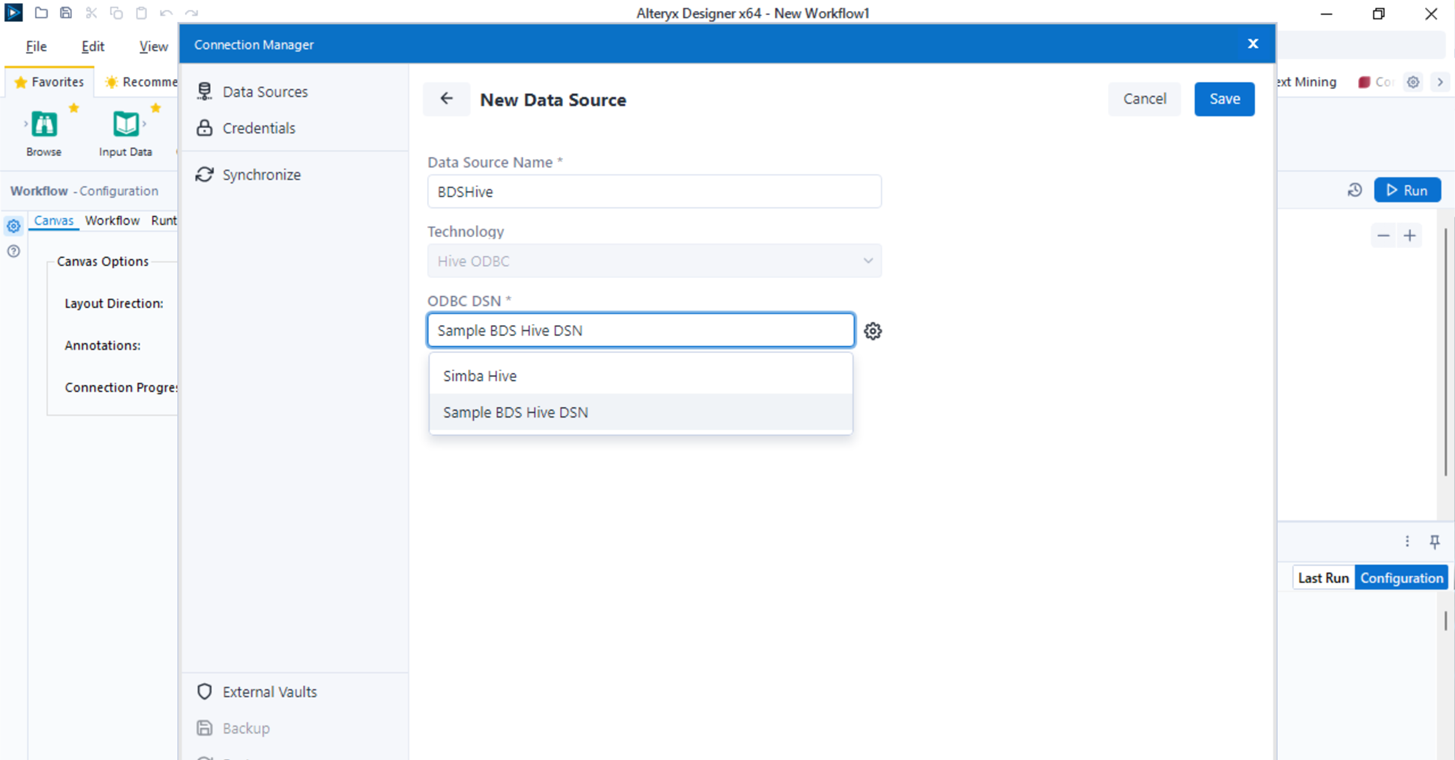

-

Once you have selected the technology option, you will be prompted to the next screen where you need to provide the DSN name. Select the ODBC DSN which you created in the previous step and click Save.

-

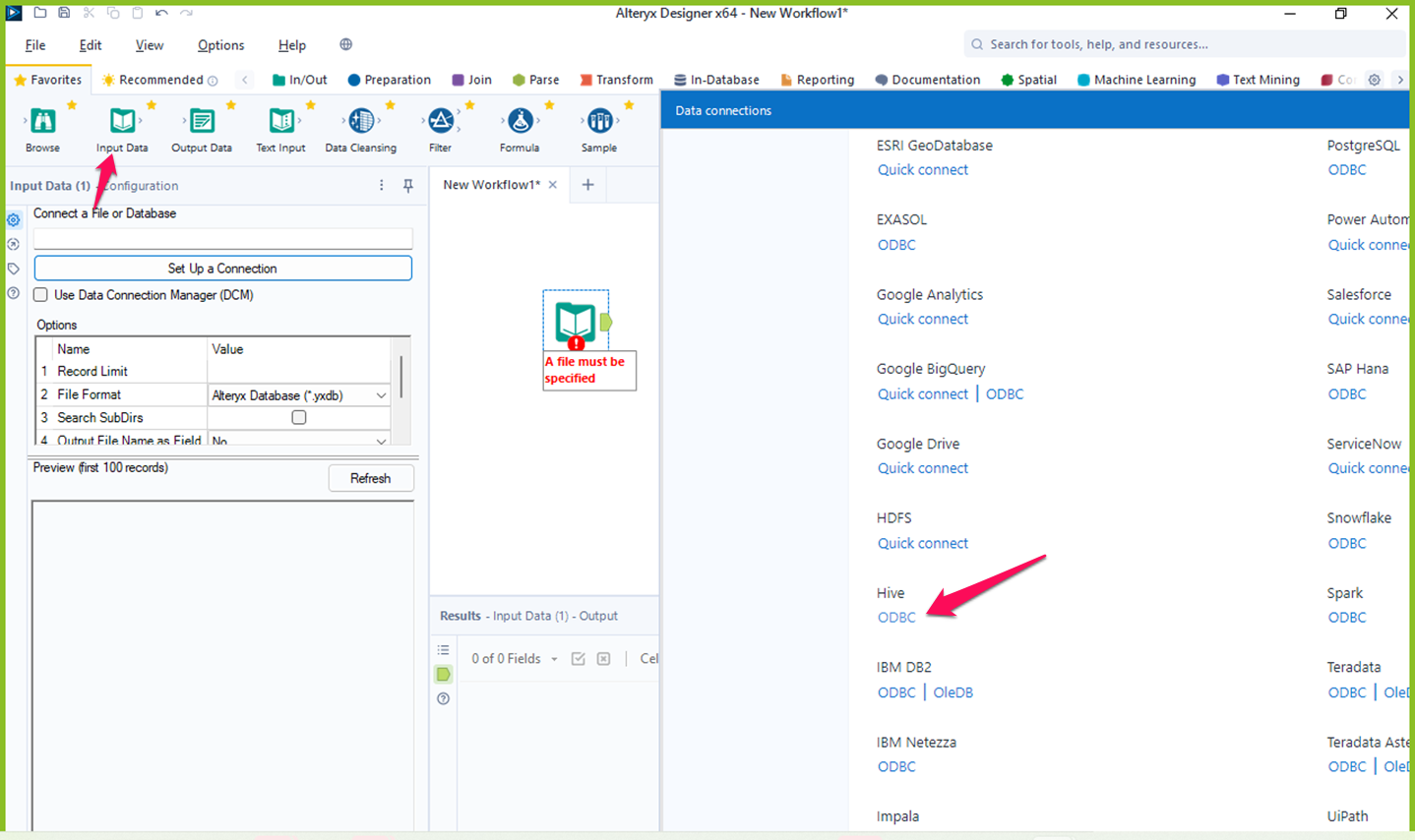

We are done with the connection setup. Now let’s build a sample workflow to read the data from Hive. To create a sample workflow, click New workflow, then drag and drop the input data task.

-

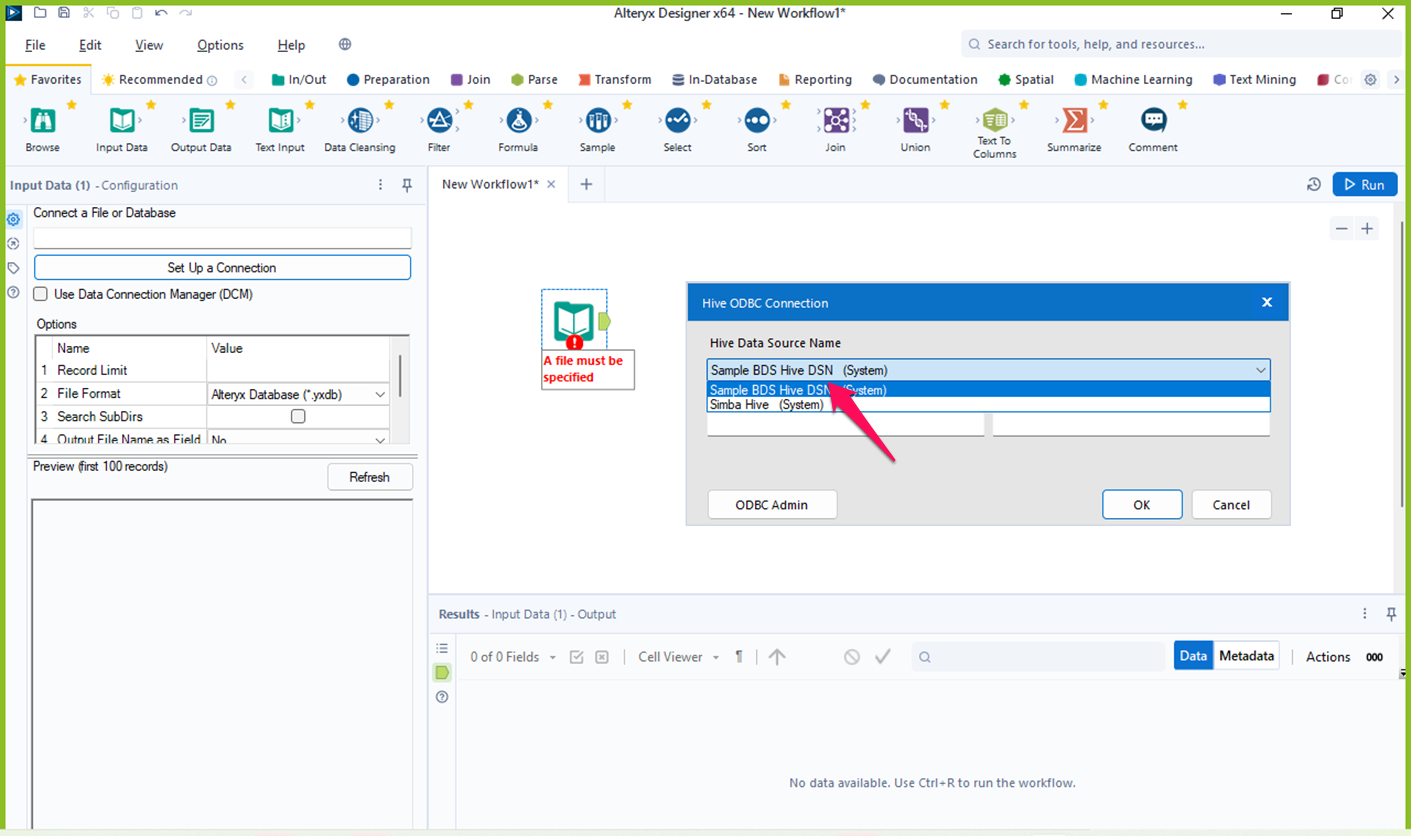

Select Hive ODBC from the connection list.

-

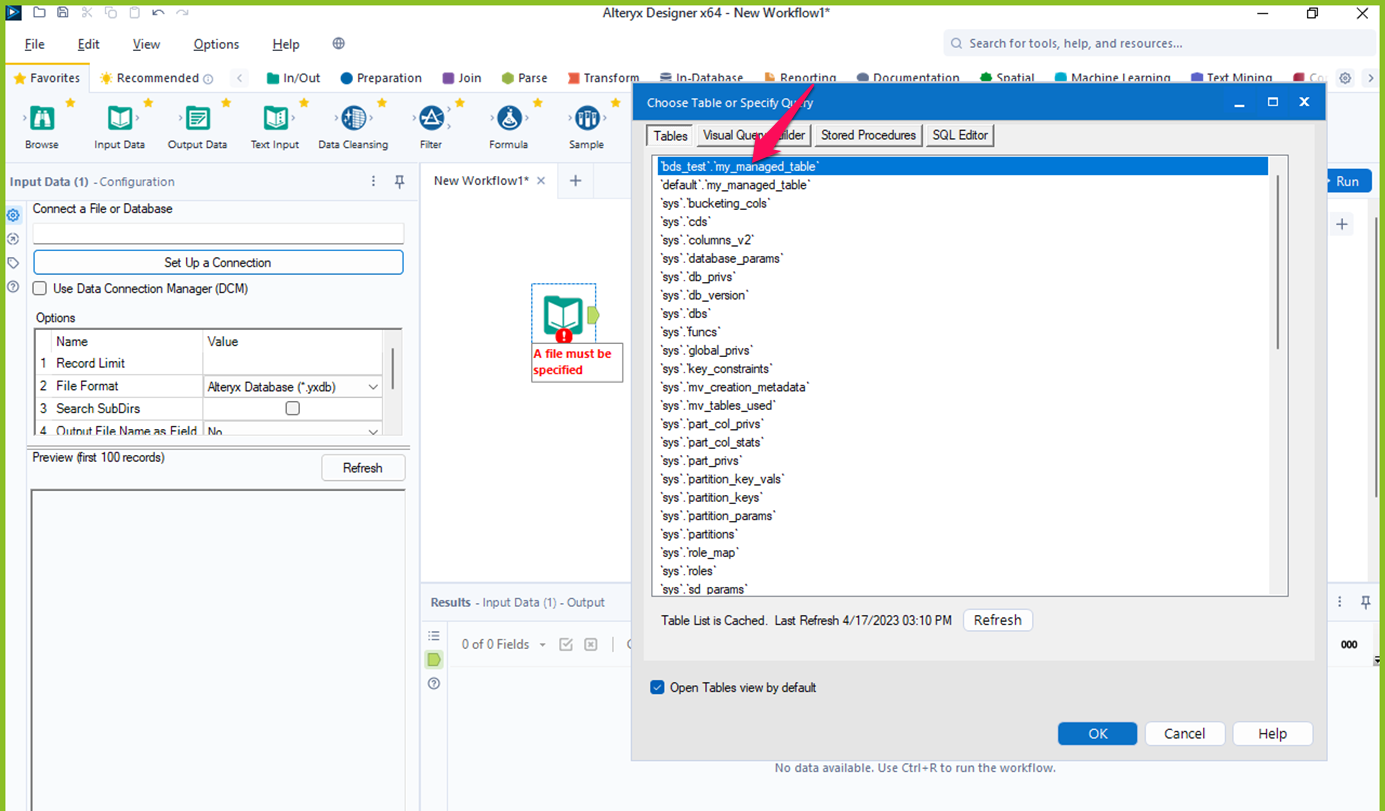

Select the Hive table to fetch data.

-

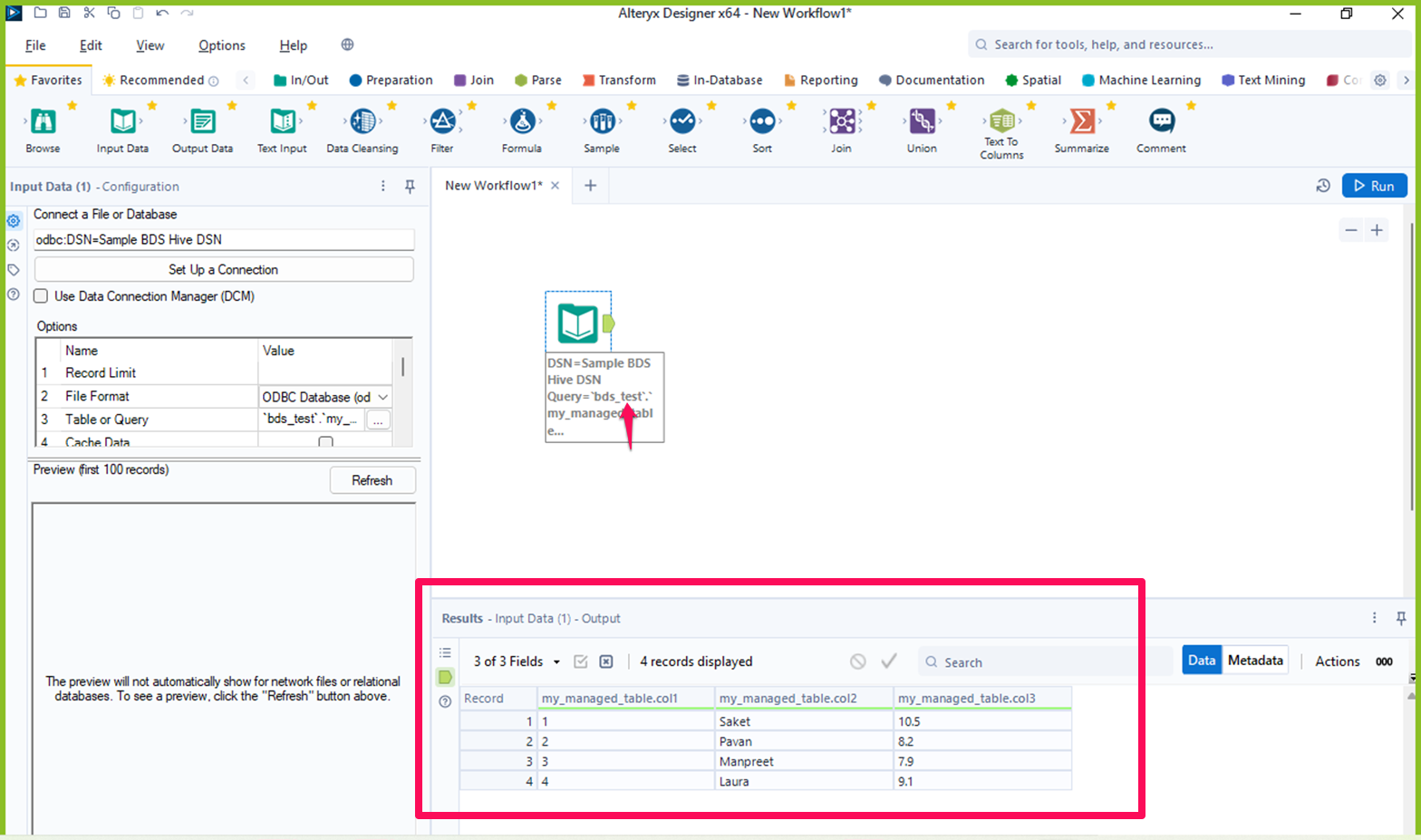

Run the workflow and you can see that Alteryx can successfully retrieve data from Oracle Big Data Service Hive.

-

Next Steps

Kerberos authentication is an essential component of a secure Hadoop cluster. By Kerberizing your Oracle Big Data Service cluster, you can better protect your sensitive data and applications from unauthorized access. While the process of Kerberizing your cluster can be challenging, careful planning and attention to detail can help ensure a successful implementation. Also, connecting Alteryx to Oracle Big Data Service Hive using ODBC and Kerberos authentication can be a bit tricky, but once you’ve got it set up, you’ll have Alteryx for analyzing and visualizing your Oracle Big Data Service data. By following the steps outlined in this tutorial, you should be able to set up the connection easily.

If you do run into issues, you can troubleshoot. With a bit of persistence, you’ll be able to connect Alteryx to Oracle Big Data Service Hive and unlock the full potential of your data.

Related Links

Acknowledgements

- Authors - Pavan Upadhyay (Principal Cloud Engineer), Saket Bihari (Principal Cloud Engineer)

More Learning Resources

Explore other labs on docs.oracle.com/learn or access more free learning content on the Oracle Learning YouTube channel. Additionally, visit education.oracle.com/learning-explorer to become an Oracle Learning Explorer.

For product documentation, visit Oracle Help Center.

Connect Alteryx to Oracle Big Data Service Hive Using ODBC and Kerberos

F85222-01

August 2023

Copyright © 2023, Oracle and/or its affiliates.