Note:

- This tutorial requires access to Oracle Cloud. To sign up for a free account, see Get started with Oracle Cloud Infrastructure Free Tier.

- It uses example values for Oracle Cloud Infrastructure credentials, tenancy, and compartments. When completing your lab, substitute these values with ones specific to your cloud environment.

Deploy NVIDIA NIM on OKE for Inference with the Model Repository Stored on OCI Object Storage

Introduction

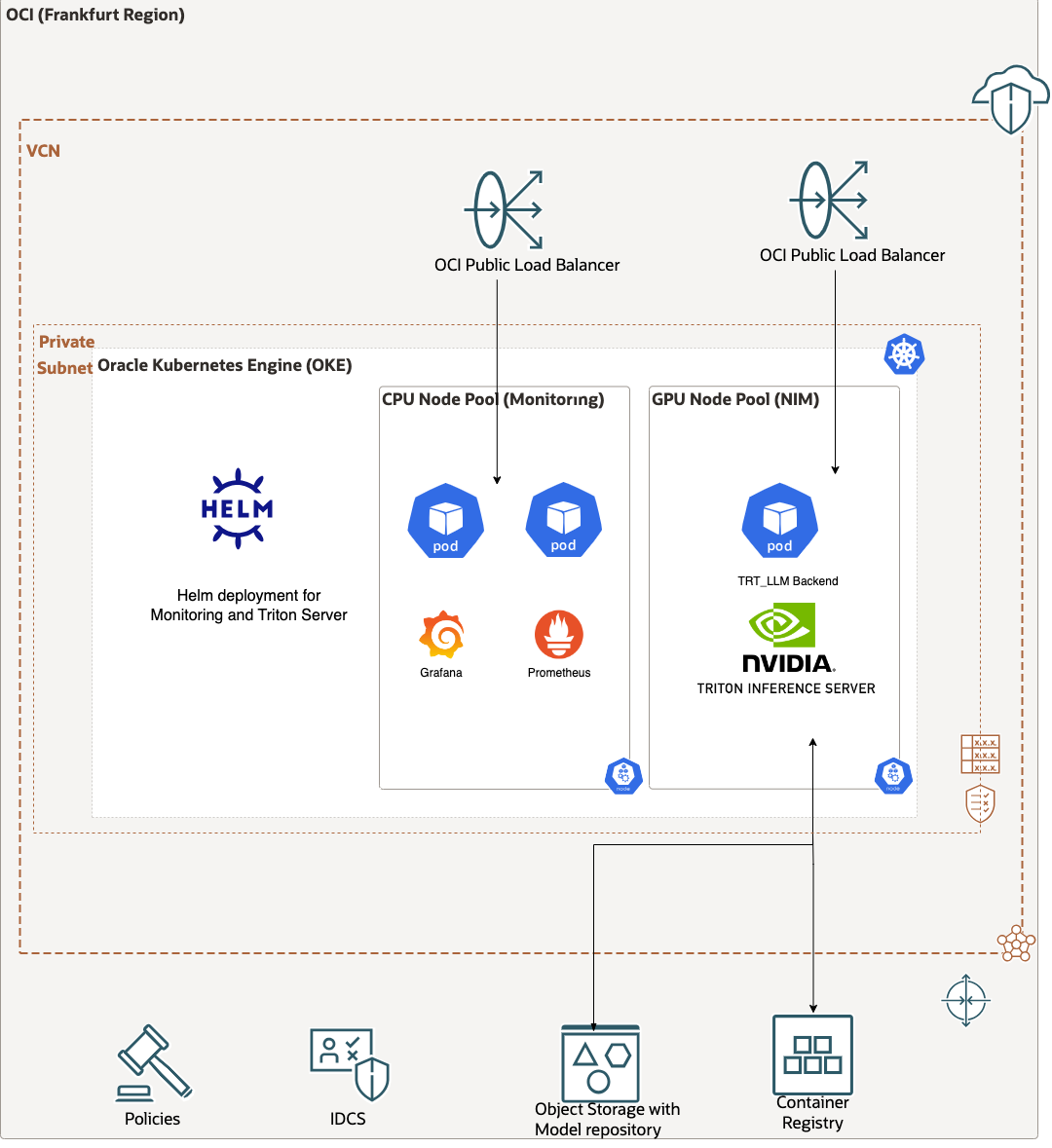

This tutorial demonstrates how to deploy NVIDIA NIM on Oracle Cloud Infrastructure Container Engine for Kubernetes (OKE) with the NVIDIA TensorRT-LLM backend and NVIDIA Triton Inference Server to serve Large Language Models (LLMs) in a Kubernetes architecture. The model used is Llama2-7B-chat, running on an A10 GPU. For scalability, the model repository is hosted in an OCI Object Storage bucket.

Note: All tests in this tutorial were run with an early access version of NVIDIA NIM for LLMs, nemollm-inference-ms:24.02.rc4.

Objectives

- Achieve a scalable deployment of an LLM inference server.

Prerequisites

- Access to an Oracle Cloud Infrastructure (OCI) tenancy.

- Access to shapes with NVIDIA GPUs, such as A10 GPUs (for example, VM.GPU.A10.1). For more information on requests to increase the limit, see Service Limits.

- The ability for your instance to authenticate via instance principal. For more information, see Calling Services from an Instance.

- Access to NVIDIA AI Enterprise to pull the NVIDIA NIM containers. For more information, see NVIDIA AI Enterprise.

- A HuggingFace account with an access token configured to download llama2-7B-chat.

- Knowledge of basic terminology of Kubernetes and Helm.

Task 1: Create a GPU Instance in OCI Compute

- Log in to the OCI Console, navigate to OCI Menu, Compute, Instances and click Create Instance.

- Select VM.GPU.A10.1 with the Oracle Cloud Marketplace image NVIDIA GPU Cloud machine image and a boot volume of 250 GB. For more information, see Using NVIDIA GPU Cloud with Oracle Cloud Infrastructure.

- Once the machine is up, connect to it using your private key and the machine's public IP.

ssh -i <private_key> ubuntu@<public_ip>

- Grow the root partition and file system so that the larger boot volume is actually usable.

df -h   # check the initial disk space
sudo growpart /dev/sda 1
sudo resize2fs /dev/sda1
df -h   # check the disk space after running the commands
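Before you pull multi-gigabyte container images, it is worth confirming that the resize took effect. The following is a minimal sketch that assumes a POSIX `df -P`. The helper names `avail_gb` and `require_free_gb` are hypothetical, and the 200 GB threshold in the usage line is only an illustrative value, not a documented NIM requirement.

```shell
#!/usr/bin/env bash
# Print the space available on the file system holding PATH, in whole gigabytes.
# df -P -k gives one POSIX-format line per file system; column 4 is "Available" in KB.
avail_gb() {
  df -Pk "${1:-/}" | awk 'NR == 2 { print int($4 / 1024 / 1024) }'
}

# Return success if at least MIN_GB gigabytes are free on PATH.
require_free_gb() {
  local path="$1" min_gb="$2" free
  free=$(avail_gb "$path")
  if [ "$free" -lt "$min_gb" ]; then
    echo "only ${free}G free on ${path}, expected at least ${min_gb}G" >&2
    return 1
  fi
}
```

For example, `require_free_gb / 200 && echo "enough space"` prints a warning on stderr and returns non-zero if the root file system has not been grown.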

Task 2: Update the NVIDIA Drivers (Optional)

We recommend updating your drivers to the latest version, following NVIDIA's compatibility matrix between driver and CUDA versions. For more information, see CUDA Compatibility and CUDA Toolkit 12.4 Update 1 Downloads.

sudo apt purge "nvidia*" "libnvidia*"

sudo apt-get install -y cuda-drivers-545

sudo apt-get install -y nvidia-kernel-open-545

sudo apt-get -y install cuda-toolkit-12-3

sudo reboot

Make sure nvidia-container-toolkit is installed and configured as a Docker runtime.

sudo apt-get install -y nvidia-container-toolkit

sudo nvidia-ctk runtime configure --runtime=docker

sudo systemctl restart docker

Run the following commands to check the new driver and CUDA versions.

nvidia-smi

/usr/local/cuda/bin/nvcc --version
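When you repeat this setup on several machines, a scripted version check can replace reading `nvidia-smi` by eye. The sketch below compares the installed driver against the 545 branch installed above. It assumes GNU `sort -V` and the `nvidia-smi --query-gpu` interface, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Succeed if version $1 >= version $2, using GNU sort's version ordering.
version_ge() {
  [ "$(printf '%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Succeed if the first GPU's driver version is at least the given version.
driver_at_least() {
  local current
  current=$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)
  [ -n "$current" ] && version_ge "$current" "$1"
}
```

For example, `driver_at_least 545 || echo "driver older than 545"` flags a machine where the upgrade did not take effect.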

Task 3: Prepare the Model Registry

Pre-built models can be used for some configurations. However, at the time of writing, none was available for Llama2-7B-chat on an A10 GPU, so we build the model repository ourselves.

- Create a bucket named NIM in OCI Object Storage. For more information, see Creating an Object Storage Bucket.

- Go to the terminal window, log in to the NVIDIA container registry with your username and password, and pull the container by running the following commands.

docker login nvcr.io
docker pull nvcr.io/ohlfw0olaadg/ea-participants/nemollm-inference-ms:24.02.rc4

- Clone the HuggingFace model.

curl -s https://packagecloud.io/install/repositories/github/git-lfs/script.deb.sh | sudo bash   # install git-lfs
sudo apt-get install git-lfs
git clone https://huggingface.co/meta-llama/Llama-2-7b-chat-hf   # clone the model from HF

- Create the model config.

Copy the model_config.yaml file and create the directory that hosts the model store. The model repository generator command writes its output to this directory.

mkdir model-store
chmod -R 777 model-store

- Run the model repository generator command.

docker run --rm -it --gpus all -v $(pwd)/model-store:/model-store -v $(pwd)/model_config.yaml:/model_config.yaml -v $(pwd)/Llama-2-7b-chat-hf:/engine_dir nvcr.io/ohlfw0olaadg/ea-participants/nemollm-inference-ms:24.02.rc4 bash -c "model_repo_generator llm --verbose --yaml_config_file=/model_config.yaml"

- Export the model repository to an OCI Object Storage bucket.

The model repository is located in the model-store directory. You can use the Oracle Cloud Infrastructure Command Line Interface (OCI CLI) to bulk upload it to one of your buckets in the region. In this tutorial, the bucket is NIM, and the model store is uploaded under NIM/llama2-7b-hf so that different model configurations can share the same bucket.

cd model-store
oci os object bulk-upload -bn NIM --src-dir . --prefix llama2-7b-hf/ --auth instance_principal
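`bulk-upload` maps each file path relative to `--src-dir` onto an object name under `--prefix`. You can therefore verify the upload by comparing the local file list with the bucket listing. This sketch assumes the OCI CLI with instance principal authentication and `jq`. `expected_objects` and `verify_upload` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# List the object names bulk-upload should create for DIR under PREFIX:
# each file path relative to DIR, with PREFIX prepended, sorted bytewise.
expected_objects() {
  local dir="$1" prefix="$2"
  (cd "$dir" && find . -type f | sed "s|^\./|${prefix}|" | LC_ALL=C sort)
}

# Diff the local model store against the bucket listing; exit status 0 means
# both sides contain exactly the same object names.
verify_upload() {
  local dir="$1" bucket="$2" prefix="$3" tmp rc
  tmp=$(mktemp -d)
  expected_objects "$dir" "$prefix" > "$tmp/local"
  oci os object list -bn "$bucket" --prefix "$prefix" --all --auth instance_principal \
    | jq -r '.data[].name' | LC_ALL=C sort > "$tmp/remote"
  diff "$tmp/local" "$tmp/remote"
  rc=$?
  rm -rf "$tmp"
  return "$rc"
}
```

For example, run `verify_upload model-store NIM llama2-7b-hf/` from the parent directory of model-store. Empty output means every file is in the bucket. Objects left under the same prefix by earlier uploads show up as extra lines.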

Task 4: Submit a Request to the Virtual Machine (IaaS Execution)

The model repository is now uploaded to an OCI Object Storage bucket.

Note: The --model-repository parameter is currently hardcoded in the container, so we cannot simply point it to the bucket when we start the container. One option would be to adapt the Python script within the container, but that requires sudo privileges. The other is to mount the bucket as a file system directly on the machine. For this tutorial, we choose the second method, using rclone. Make sure fuse3 and jq are installed on the machine. On Ubuntu, you can run sudo apt install fuse3 jq.
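A quick way to confirm these prerequisites, along with the other tools used in this task, is to list which commands are missing from `PATH`. This is a sketch. It assumes the Ubuntu fuse3 package provides the `fusermount3` binary, and `missing_cmds` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print each named command that is not found on PATH, one per line.
missing_cmds() {
  local cmd
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}
```

For example, `missing_cmds fusermount3 jq docker oci` prints nothing when everything is installed.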

- Get your namespace, compartment OCID and region, either from the OCI Console or by running the following commands from your compute instance.

echo namespace is: `oci os ns get --auth instance_principal | jq -r .data`   # NAMESPACE
echo compartment OCID is: `curl -s -H "Authorization: Bearer Oracle" -L http://169.254.169.254/opc/v2/instance/ | jq -r .compartmentId`   # COMPARTMENT_OCID
echo region is: `curl -s -H "Authorization: Bearer Oracle" -L http://169.254.169.254/opc/v2/instance/ | jq -r .region`   # REGION

- Download and install rclone.

curl https://rclone.org/install.sh | sudo bash

- Prepare the rclone configuration file. Make sure to replace ##NAMESPACE##, ##COMPARTMENT_OCID## and ##REGION## with your values.

mkdir -p ~/rclone
mkdir -p ~/test_directory/model_bucket_oci
cat << EOF > ~/rclone/rclone.conf
[model_bucket_oci]
type = oracleobjectstorage
provider = instance_principal_auth
namespace = ##NAMESPACE##
compartment = ##COMPARTMENT_OCID##
region = ##REGION##
EOF

- Mount the bucket using rclone.

sudo /usr/bin/rclone mount --config=$HOME/rclone/rclone.conf --tpslimit 50 --vfs-cache-mode writes --allow-non-empty --transfers 10 --allow-other model_bucket_oci:NIM/llama2-7b-hf $HOME/test_directory/model_bucket_oci

- In another terminal window, check that the following command returns the content of the bucket.

ls $HOME/test_directory/model_bucket_oci

- In another terminal window, start the container, mounting the bucket directory as /model-store.

docker run --gpus all -p9999:9999 -p9998:9998 -v $HOME/test_directory/model_bucket_oci:/model-store nvcr.io/ohlfw0olaadg/ea-participants/nemollm-inference-ms:24.02.rc4 nemollm_inference_ms --model llama2-7b-chat --openai_port="9999" --nemo_port="9998" --num_gpus 1

- After about 3 minutes, the inference server should be ready to serve requests. In another terminal window, run the following request.

Note: To run it from your local machine, use the public IP and open port 9999 at both the machine and subnet level.

curl -X "POST" 'http://localhost:9999/v1/completions' -H 'accept: application/json' -H 'Content-Type: application/json' -d '{ "model": "llama2-7b-chat", "prompt": "Can you briefly describe Oracle Cloud?", "max_tokens": 100, "temperature": 0.7, "n": 1, "stream": false, "stop": "string", "frequency_penalty": 0.0 }' | jq ".choices[0].text"
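The manual steps above can be scripted. The following sketch uses hypothetical helper names and does three things:

- It writes the rclone remote from arguments instead of hand-editing the ##NAMESPACE##, ##COMPARTMENT_OCID## and ##REGION## placeholders.
- It waits for the FUSE mount to appear in /proc/mounts.
- It waits until something accepts connections on a port.

The port check uses bash's /dev/tcp and only shows that the port is open. It does not guarantee that the model has finished loading.

```shell
#!/usr/bin/env bash
# Write the rclone remote used above from arguments.
write_rclone_conf() {
  local out="$1" namespace="$2" compartment="$3" region="$4"
  mkdir -p "$(dirname "$out")"
  # Unquoted EOF: the ${...} values are expanded when the file is written.
  cat > "$out" <<EOF
[model_bucket_oci]
type = oracleobjectstorage
provider = instance_principal_auth
namespace = ${namespace}
compartment = ${compartment}
region = ${region}
EOF
}

# Succeed if DIR is listed as a mount point in MOUNTS_FILE (default /proc/mounts).
is_mounted() {
  awk -v d="$1" '$2 == d { found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}

# Poll once per second until DIR is mounted; give up after MAX_TRIES attempts.
wait_for_mount() {
  local dir="$1" max_tries="${2:-30}" i=0
  until is_mounted "$dir"; do
    i=$((i + 1))
    [ "$i" -ge "$max_tries" ] && return 1
    sleep 1
  done
}

# Poll once per second until HOST:PORT accepts a TCP connection.
# Each attempt is capped at 2 seconds so an unreachable host cannot hang the loop.
wait_for_port() {
  local host="$1" port="$2" max_tries="${3:-300}" i=0
  until timeout 2 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null; do
    i=$((i + 1))
    [ "$i" -ge "$max_tries" ] && return 1
    sleep 1
  done
}
```

Example usage:

- Run `write_rclone_conf ~/rclone/rclone.conf "$NAMESPACE" "$COMPARTMENT_OCID" "$REGION"` before mounting.
- After starting the mount, run `wait_for_mount "$HOME/test_directory/model_bucket_oci" 60`.
- After starting the container, run `wait_for_port localhost 9999 600` before sending the curl request.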

Task 5: Update the cloud-init Script

Note: Ideally, a cleaner way of using rclone in Kubernetes would be to run the rclone container as a sidecar before starting the inference server. This works fine locally with Docker. However, rclone needs the --device option to use FUSE, and Kubernetes lacks support for this feature (FUSE volumes, a feature request from 2015 that was still very active as of March 2024). For this tutorial, our workaround is to set up rclone as a service on the host and mount the bucket on startup.

In the cloud-init script, replace the values of ##NAMESPACE##, ##COMPARTMENT_OCID## and ##REGION## on lines 17, 18 and 19 with the values retrieved in Task 4, step 1. You can also update the bucket name on line 57. By default, the bucket is called NIM and contains a directory called llama2-7b-hf.
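If you prefer not to edit the cloud-init script by hand, you can substitute the placeholders with sed. This sketch writes a filled-in copy rather than editing in place, which avoids differences between GNU and BSD `sed -i`. It fails if any `##NAME##`-style token is left. The function name and the file names in the usage line are hypothetical, and the final check is a heuristic that would also flag unrelated text of that form.

```shell
#!/usr/bin/env bash
# Copy SRC to DST, replacing the three placeholders used by the cloud-init script.
# '|' is the sed delimiter: OCIDs, namespaces and region names contain no '|'
# or '&', so the values are inserted literally.
fill_cloud_init() {
  local src="$1" dst="$2" namespace="$3" compartment="$4" region="$5"
  sed -e "s|##NAMESPACE##|${namespace}|g" \
      -e "s|##COMPARTMENT_OCID##|${compartment}|g" \
      -e "s|##REGION##|${region}|g" "$src" > "$dst" || return 1
  # Heuristic: fail if any ##UPPER_CASE## token is still present.
  if grep -q '##[A-Z_][A-Z_]*##' "$dst"; then
    echo "unreplaced placeholders remain in $dst" >&2
    return 1
  fi
}
```

For example: `fill_cloud_init cloud-init.sh cloud-init-filled.sh "$NAMESPACE" "$COMPARTMENT_OCID" "$REGION"`.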

This cloud-init script will be uploaded to the GPU node in your OKE cluster. The first part grows the boot volume to the configured size. The script then downloads rclone, creates the required directories and creates the configuration file, in the same way as we did on the GPU VM. Finally, it starts rclone as a service and mounts the bucket to /opt/mnt/model_bucket_oci.
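To illustrate the last step, the following sketch prints a systemd unit that mounts the bucket on startup. The unit contents, the function name and the config path in the usage note are assumptions, not the author's cloud-init script. The rclone flags mirror the manual `rclone mount` command from Task 4.

```shell
#!/usr/bin/env bash
# Print a systemd unit that runs the rclone mount as a long-lived service.
rclone_mount_unit() {
  local conf="$1" remote="$2" mountpoint="$3"
  cat <<EOF
[Unit]
Description=rclone mount of ${remote}
Wants=network-online.target
After=network-online.target

[Service]
Type=simple
ExecStartPre=/bin/mkdir -p ${mountpoint}
ExecStart=/usr/bin/rclone mount --config=${conf} --tpslimit 50 --vfs-cache-mode writes --allow-non-empty --transfers 10 --allow-other ${remote} ${mountpoint}
ExecStop=/bin/umount ${mountpoint}
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}
```

To install it:

- Pipe the output of `rclone_mount_unit /root/rclone/rclone.conf model_bucket_oci:NIM/llama2-7b-hf /opt/mnt/model_bucket_oci` to `sudo tee /etc/systemd/system/rclone-model.service` (a hypothetical unit name).
- Run `sudo systemctl daemon-reload`.
- Run `sudo systemctl enable --now rclone-model.service`.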

Task 6: Deploy on OKE

The target architecture at the end of the deployment is as shown in the following image.

Now, put everything together in OKE.

Create an OKE cluster with slight adaptations. For more information, see Using the Console to create a Cluster with Default Settings in the ‘Quick Create’ workflow.

- Start by creating 1 node pool called monitoring, used for monitoring, with only 1 node (for example, VM.Standard.E4.Flex with 5 OCPUs and 80 GB RAM) and the default image.

- Once your cluster is up, create another node pool called NIM with 1 GPU node (VM.GPU.A10.1) and the default image with the GPU drivers (Oracle-Linux-8.X-Gen2-GPU-XXXX.XX.XX).

Note: Make sure to increase the boot volume (350 GB) and add the previously modified cloud-init script under Show advanced options, Initialization script.
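Once the NIM node pool is active, you can check from Cloud Shell that the GPU node advertises a GPU to Kubernetes. This sketch assumes the node exposes the `nvidia.com/gpu` resource through the NVIDIA device plugin. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# List each node with its allocatable nvidia.com/gpu count (<none> if absent).
# The dot inside the resource name is escaped in the column expression.
gpu_allocatable() {
  kubectl get nodes --no-headers \
    -o custom-columns='NAME:.metadata.name,GPU:.status.allocatable.nvidia\.com/gpu'
}

# Count nodes that advertise at least one GPU.
count_gpu_nodes() {
  gpu_allocatable | awk '$2 ~ /^[0-9]+$/ && $2 >= 1 { n++ } END { print n + 0 }'
}
```

With the cluster described in this task, `count_gpu_nodes` should print 1.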

Task 7: Deploy using Helm in OCI Cloud Shell

To access OCI Cloud Shell, see To access Cloud Shell via the Console.

- You can find the Helm configuration in the archive oke.zip, where you need to update values.yaml. Upload the archive to your OCI Cloud Shell and unzip it. For more information, see To upload a file to Cloud Shell using the menu.

unzip oke.zip
cd oke

- Review your credentials for the image pull secret in values.yaml. For more information, see Creating Image Pull Secrets.

registry: nvcr.io
username: $oauthtoken
password: <YOUR_KEY_FROM_NVIDIA>
email: someone@host.com
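Helm deploys a chart even if its pull secret still contains the placeholder, and the mistake only shows up later as an image pull error. A small pre-flight check, sketched below with a hypothetical function name, catches it before `helm install`. Note that `$oauthtoken` is the literal username that nvcr.io expects, so leave it as is.

```shell
#!/usr/bin/env bash
# Fail if FILE (default values.yaml) is unreadable or still holds the placeholder key.
check_pull_secret_values() {
  local file="${1:-values.yaml}"
  if [ ! -r "$file" ]; then
    echo "cannot read $file" >&2
    return 1
  fi
  if grep -q '<YOUR_KEY_FROM_NVIDIA>' "$file"; then
    echo "${file}: replace <YOUR_KEY_FROM_NVIDIA> with your NGC API key" >&2
    return 1
  fi
  return 0
}
```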

Task 8: Deploy the Monitoring

The monitoring consists of Grafana and Prometheus pods. The configuration comes from kube-prometheus-stack.

Here we add a public load balancer to reach the Grafana dashboard from the Internet. Use username=admin and password=xxxxxxxxx to log in. The serviceMonitorSelectorNilUsesHelmValues flag is needed so that Prometheus can discover the inference server metrics exposed by the example release.

- Add the prometheus-community Helm repository (skip this if it is already configured in your Cloud Shell), then deploy the monitoring pods.

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update
helm install example-metrics --set prometheus.prometheusSpec.serviceMonitorSelectorNilUsesHelmValues=false --set grafana.service.type=LoadBalancer prometheus-community/kube-prometheus-stack --debug

Note: The default load balancer is created with a fixed shape and a bandwidth of 100 Mbps. If bandwidth becomes a bottleneck, you can switch to a flexible shape and adapt the bandwidth according to your OCI limits. For more information, see Provisioning OCI Load Balancers for Kubernetes Services of Type LoadBalancer.

- An example Grafana dashboard is available in dashboard-review.json, located in oke.zip. Use the import function in Grafana to import and view this dashboard.

- You can see the public IP of your Grafana dashboard by running the following command.

$ kubectl get svc
NAME                      TYPE           CLUSTER-IP    EXTERNAL-IP       PORT(S)                      AGE
alertmanager-operated     ClusterIP      None          <none>            9093/TCP,9094/TCP,9094/UDP   2m33s
example-metrics-grafana   LoadBalancer   10.96.82.33   141.145.220.114   80:31005/TCP                 2m38s
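Instead of reading the table, you can extract the Grafana IP directly and read the admin password from the Secret created by the chart. This sketch assumes the Grafana subchart's default Secret name (`example-metrics-grafana`, derived from the release name used above) and its `admin-password` key. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# External IP assigned to the Grafana LoadBalancer Service.
grafana_ip() {
  kubectl get svc "${1:-example-metrics-grafana}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Decode the Grafana admin password stored (base64-encoded) in the chart's Secret.
grafana_admin_password() {
  kubectl get secret "${1:-example-metrics-grafana}" \
    -o jsonpath='{.data.admin-password}' | base64 -d
}
```

For example, open `http://$(grafana_ip)` in a browser and log in with `admin` and the output of `grafana_admin_password`.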

Task 9: Deploy the Inference Server

- Run the following commands to deploy the inference server using the default configuration.

cd <directory containing Chart.yaml>
helm install example . -f values.yaml --debug

- Use kubectl to check the status and wait until the inference server pods are running. The first image pull might take a few minutes. Once the container is created, loading the model also takes a few minutes. You can monitor the pod with the following commands.

kubectl describe pods <POD_NAME>
kubectl logs <POD_NAME>

- Once the setup is complete, your container should be running.

$ kubectl get pods
NAME                                               READY   STATUS    RESTARTS   AGE
example-triton-inference-server-5f74b55885-n6lt7   1/1     Running   0          2m21s
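Rather than polling `kubectl get pods`, you can block until the rollout completes. The Deployment name is an assumption inferred from the pod name example-triton-inference-server-5f74b55885-n6lt7, and the function name is hypothetical. `kubectl rollout status` returns once the pod is Ready. If the chart defines no readiness probe, that can happen before the model has finished loading.

```shell
#!/usr/bin/env bash
# Block until the inference server Deployment reports its replicas available,
# or fail after TIMEOUT (a kubectl duration such as 20m).
wait_for_inference_server() {
  local deploy="${1:-example-triton-inference-server}" timeout="${2:-20m}"
  kubectl rollout status "deployment/${deploy}" --timeout="${timeout}"
}
```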

Task 10: Use Triton Inference Server on your NVIDIA NIM Container

Now that the inference server is running, you can send HTTP or Google Remote Procedure Call (gRPC) requests to it to perform inference. By default, the inference service is exposed with a LoadBalancer service type. Use the following command to find the external IP of the inference server. In this tutorial, it is 34.83.9.133.

- Get the services to find the public IP of your inference server.

$ kubectl get services
NAME                              TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)                                        AGE
...
example-triton-inference-server   LoadBalancer   10.18.13.28   34.83.9.133   8000:30249/TCP,8001:30068/TCP,8002:32723/TCP   47m

- The inference server exposes an HTTP endpoint on port 8000, a gRPC endpoint on port 8001, and a Prometheus metrics endpoint on port 8002. You can use curl to get the metadata of the inference server from the HTTP endpoint.

$ curl 34.83.9.133:8000/v2

- From your client machine, you can send a request to the public IP on port 9999.

curl -X "POST" 'http://34.83.9.133:9999/v1/completions' -H 'accept: application/json' -H 'Content-Type: application/json' -d '{ "model": "llama2-7b-chat", "prompt": "Can you briefly describe Oracle Cloud?", "max_tokens": 100, "temperature": 0.7, "n": 1, "stream": false, "stop": "string", "frequency_penalty": 0.0 }' | jq ".choices[0].text"

The output should look like:

"\n\nOracle Cloud is a comprehensive cloud computing platform offered by Oracle Corporation. It provides a wide range of cloud services, including Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Oracle Cloud offers a variety of benefits, including:\n\n1. Scalability: Oracle Cloud allows customers to scale their resources up or down as needed, providing the flexibility to handle changes in business demand."
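To script the checks in this task, you can read the external IP from the Service and probe Triton's standard readiness endpoint, /v2/health/ready, on HTTP port 8000. The endpoint answers HTTP 200 once the server is ready. The sketch below uses hypothetical function names and assumes the Service name shown above.

```shell
#!/usr/bin/env bash
# External IP assigned to the inference server's LoadBalancer Service.
inference_ip() {
  kubectl get svc "${1:-example-triton-inference-server}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Succeed if Triton's readiness endpoint on HOST:PORT answers HTTP 200.
triton_ready() {
  local host="$1" port="${2:-8000}" code
  code=$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "http://${host}:${port}/v2/health/ready")
  [ "$code" = "200" ]
}
```

For example, `ip=$(inference_ip) && triton_ready "$ip" && curl "$ip:8000/v2"` only queries the metadata once the server reports ready.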

Task 11: Clean up the Deployment

- Once you have finished using the inference server, use helm to delete the deployment.

$ helm list
NAME              REVISION   UPDATED                    STATUS     CHART                           APP VERSION   NAMESPACE
example           1          Wed Feb 27 22:16:55 2019   DEPLOYED   triton-inference-server-1.0.0   1.0           default
example-metrics   1          Tue Jan 21 12:24:07 2020   DEPLOYED   prometheus-operator-6.18.0      0.32.0        default
$ helm uninstall example --debug
$ helm uninstall example-metrics

- For the Prometheus and Grafana services, you should explicitly delete the CRDs. For more information, see Uninstall Helm Chart.

$ kubectl delete crd alertmanagerconfigs.monitoring.coreos.com alertmanagers.monitoring.coreos.com \
    podmonitors.monitoring.coreos.com probes.monitoring.coreos.com prometheuses.monitoring.coreos.com \
    prometheusrules.monitoring.coreos.com servicemonitors.monitoring.coreos.com thanosrulers.monitoring.coreos.com

- You may also delete the OCI Object Storage bucket created to hold the model repository.

$ oci os bucket delete --bucket-name NIM --empty
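All the CRDs listed above belong to the monitoring.coreos.com API group, so you can also delete them by filtering on that suffix instead of typing each name. This is a sketch with a hypothetical function name. Only run it if no other Prometheus Operator installation in the cluster still relies on these CRDs.

```shell
#!/usr/bin/env bash
# Delete every CRD whose name ends in .monitoring.coreos.com.
delete_monitoring_crds() {
  local crds
  crds=$(kubectl get crd -o name | grep '\.monitoring\.coreos\.com$')
  if [ -z "$crds" ]; then
    echo "no monitoring.coreos.com CRDs found"
    return 0
  fi
  # Word splitting is intended here: CRD resource names contain no whitespace.
  kubectl delete $crds
}
```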


Acknowledgments

- Author - Bruno Garbaccio (AI Infra/GPU Specialist, EMEA)



F96568-01

April 2024