Note:

- This tutorial requires access to Oracle Cloud. To sign up for a free account, see Get started with Oracle Cloud Infrastructure Free Tier.

- It uses example values for Oracle Cloud Infrastructure credentials, tenancy, and compartments. When completing your lab, substitute these values with ones specific to your cloud environment.

Use iPerf to Test the Throughput inside an OCI Hub and Spoke VCN Routing Architecture

Introduction

In today’s rapidly evolving cloud environments, ensuring optimal network performance is crucial for seamless operations. Oracle Cloud Infrastructure (OCI) provides robust networking capabilities, including the Hub and Spoke Virtual Cloud Network (VCN) routing architecture, to facilitate efficient communication and resource management. One essential aspect of maintaining this architecture is regularly testing the network throughput to identify potential bottlenecks and optimize performance.

In this tutorial, we will use iPerf, a powerful network testing tool, to measure and analyze the throughput within an OCI Hub and Spoke VCN routing architecture. By the end of this tutorial, you will be equipped with the knowledge to effectively assess and enhance your OCI network performance, ensuring your applications and services run smoothly.

Note: The test results obtained using iPerf depend highly on various factors, including network conditions, hardware configurations, and software settings specific to your environment. As such, these results may differ significantly from those in other environments. Do not use these results to make any definitive conclusions about the expected performance of your network or equipment. They should be considered as indicative rather than absolute measures of performance.

iPerf Versions

iPerf, iPerf2, and iPerf3 are tools used to measure network bandwidth, performance, and throughput between two endpoints. However, they have some key differences in terms of features, performance, and development status.

Overview:

- iPerf (original)

  - Release: Initially released around 2003.
  - Development: The original iPerf has largely been replaced by its successors (iPerf2 and iPerf3).
  - Features: Basic functionality for testing network bandwidth using TCP and UDP.
  - Limitations: Over time, it became outdated due to a lack of support for modern networking features.

- iPerf2

  - Release: Forked from the original iPerf and maintained independently.
  - Development: Actively maintained as an independent open-source project, separately from iPerf3.
  - Features:
    - Supports both TCP and UDP tests.
    - Multithreading: iPerf2 supports multithreaded testing, which can be useful when testing high-throughput environments.
    - UDP multicast and bidirectional tests.
    - Protocol Flexibility: Better handling of IPv6, multicast, and other advanced networking protocols.
  - Performance: Performs better than the original iPerf for higher throughput due to multithreading support.
  - Use Case: Best for situations where features such as IPv6 and multicast are necessary, or if you require multithreading in testing.

- iPerf3

  - Release: Rewritten from scratch and released by the Energy Sciences Network (ESnet). The rewrite focused on cleaning up the codebase and modernizing the tool.
  - Development: Actively maintained with frequent updates.
  - Features:
    - Supports both TCP and UDP tests.
    - Single-threaded: iPerf3 releases before 3.16 do not support multithreading, which can be a limitation for high throughput in certain environments.
    - Supports reverse mode for testing in both directions, bidirectional tests, and multiple streams for TCP tests.
    - JSON output for easier integration with other tools.
    - Improved error reporting and network statistics.
    - Optimized for modern network interfaces and features like QoS and congestion control.
  - Performance: iPerf3 is optimized for modern networks but lacks multithreaded capabilities, which can sometimes limit its performance on high-bandwidth or multi-core systems.
  - Use Case: Best for most modern networking environments where simpler performance tests are required without the need for multithreading.

Key Differences:

| Feature | iPerf | iPerf2 | iPerf3 |
|---|---|---|---|
| Development | Discontinued | Actively Maintained | Actively Maintained |
| TCP and UDP Tests | Yes | Yes | Yes |
| Multithreading Support | No | Yes | No |
| UDP Multicast | No | Yes | No |
| IPv6 Support | No | Yes | Yes |
| JSON Output | No | No | Yes |
| Reverse Mode | No | Yes | Yes |

Note: We will use iPerf2 where possible in this tutorial.

Best for High Throughput?

For high-throughput environments, iPerf2 is often the best choice due to its multithreading capabilities, which can take full advantage of multiple CPU cores. This is especially important if you are working with network interfaces capable of handling multiple gigabits per second (Gbps) of traffic.

If multithreading is not crucial, iPerf3 is a good choice for simpler setups or modern networks with features like QoS and congestion control. However, in very high-throughput environments, its single-threaded nature might become a bottleneck.

Why is Maximum Segment Size (MSS) Clamping used?

Note: When traffic flows through an Internet Protocol Security (IPSec) tunnel and through the pfSense firewall, pay attention to the MSS.

Maximum Segment Size (MSS) clamping is a technique used in TCP/IP networks to adjust the MSS of a TCP connection during the connection setup process. The MSS defines the largest amount of data that a device can receive in a single TCP segment, and each device advertises its value during the TCP handshake.

MSS clamping is often employed by network devices such as routers, firewalls, or VPNs to avoid issues related to packet fragmentation. Here is how it works:

- Packet Fragmentation Issues: If the MSS is too large, packets may exceed the Maximum Transmission Unit (MTU) of the network path, leading to fragmentation. This can cause inefficiency, increased overhead, or in some cases, packet loss if the network does not handle fragmentation well.
- Reducing the MSS: MSS clamping allows the network device to adjust (or clamp) the MSS value downward during the TCP handshake, making sure that the packet sizes are small enough to traverse the network path without needing fragmentation.
- Use in VPNs: MSS clamping is commonly used in VPN scenarios where the MTU size is reduced due to encryption overhead. Without MSS clamping, packets might get fragmented, reducing performance.

Example of MSS clamping: If a client device sends an MSS value of 1460 bytes during the TCP handshake but the network’s MTU is limited to 1400 bytes due to VPN encapsulation, the network device can clamp the MSS to 1360 bytes (allowing for the extra overhead) to avoid fragmentation issues.
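The arithmetic in this example can be sketched in a few lines of Python. This is a minimal illustration and not how any particular firewall implements clamping. It assumes IPv4 and TCP headers without options (20 bytes each), and the function name `clamp_mss` is hypothetical.

```python
# Assumption: IPv4 and TCP headers carry no options, so each is 20 bytes.
IP_HEADER_BYTES = 20
TCP_HEADER_BYTES = 20


def clamp_mss(advertised_mss: int, path_mtu: int) -> int:
    """Return the MSS a clamping device would leave in the TCP handshake."""
    # Largest TCP payload that still fits in one packet on this path.
    largest_fit = path_mtu - IP_HEADER_BYTES - TCP_HEADER_BYTES
    # Clamping only ever lowers the advertised value, never raises it.
    return min(advertised_mss, largest_fit)


# Values from the example: client advertises 1460, path MTU is 1400.
print(clamp_mss(1460, 1400))  # 1360
# An MSS that already fits is left unchanged.
print(clamp_mss(1200, 1400))  # 1200
```

The 40 bytes subtracted from the MTU are the "extra overhead" the example refers to: one IPv4 header plus one TCP header.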

Important Parameters

- Ports Used

  The default ports used by iPerf2 and iPerf3 for TCP and UDP are:

  | Version | TCP Port | UDP Port |
  |---|---|---|
  | iPerf2 | 5001 | 5001 |
  | iPerf3 | 5201 | 5201 |

  Both versions allow you to specify a different port using the `-p` flag if necessary.

  For testing purposes, we recommend opening all ports between the source and destination IP addresses of the iPerf endpoints.

- MTU Sizes

  iPerf will send data between a specific source and destination that you determine up front.

  When running an iPerf test, understanding the MTU size is crucial because it directly impacts network performance, packet fragmentation, and test accuracy. Here is what you should consider regarding MTU sizes during an iPerf test.

  - Default MTU Size:
    - The default MTU size for Ethernet is 1500 bytes, but this can vary based on the network configuration.
    - Larger or smaller MTU sizes can affect the maximum size of packets sent during the iPerf test. Smaller MTU sizes will require more packets for the same amount of data, while larger MTU sizes can reduce the overhead.
  - Packet Fragmentation:
    - If the MTU size is set too small, or if the iPerf packet size is larger than the network’s MTU, packets may be fragmented. Fragmented packets can lead to higher latency and reduced performance in your test.
    - iPerf can generate packets up to a specific size, and if they exceed the MTU, they will need to be split, introducing extra overhead and making the results less reflective of real-world performance.
  - Jumbo Frames: Some networks support jumbo frames, where the MTU is larger than the standard 1500 bytes, sometimes reaching 9000 bytes. When testing in environments with jumbo frames enabled, configuring iPerf to match this larger MTU can maximize throughput by reducing overhead from headers and fragmentation.

  - MTU Discovery and Path MTU:
    - Path MTU discovery helps ensure that packets do not exceed the MTU of any intermediate network. If iPerf sends packets larger than the path MTU and fragmentation is not allowed, the packets might get dropped.
    - It is important to ensure that ICMP Fragmentation Needed messages are not blocked by firewalls, as these help with path MTU discovery. Without them, larger packets may not be successfully delivered, resulting in performance issues.
  - TCP vs UDP Testing:
    - In TCP mode, iPerf automatically handles packet size and adjusts according to the path MTU.
    - In UDP mode, the packet size is controlled by the user (using the `-l` flag), and the datagram plus its IP and UDP headers must fit within the MTU to avoid fragmentation.
  - Adjusting MTU in iPerf:
    - Use the `-l` option in iPerf to manually set the length of UDP datagrams.
    - For testing with specific MTU sizes, it is useful to ensure that your network and interfaces are configured to match the desired MTU value to avoid mismatches.
  - Consistency Across Network Segments: Ensure the MTU size is consistent across all network devices between the two endpoints. Mismatched MTU settings can cause inefficiency due to fragmentation or dropped packets, leading to inaccurate test results.

  - VPN (Virtual Private Network): When using a VPN, MTU size and network performance become even more significant due to the additional layers of encapsulation and encryption. VPNs introduce extra overhead, which can affect the performance of tools like iPerf.

    Here is a deeper look at VPN connections and their impact on network testing:

    Key Concepts of VPN and MTU:

    - Encapsulation Overhead:
      - VPN protocols, such as IPsec, OpenVPN, WireGuard, PPTP, or L2TP, add extra headers to the original data packet for encryption and tunneling purposes.
      - This extra overhead reduces the effective MTU size because the VPN must accommodate both the original packet and the added VPN headers. For example:
        - IPsec adds around 56 to 73 bytes of overhead.
        - OpenVPN adds about 40-60 bytes, depending on the configuration (for example, UDP vs TCP).
        - WireGuard adds around 60 bytes.
      - If you do not adjust the MTU, packets larger than the adjusted MTU may get fragmented or dropped.
    - MTU and Path MTU Discovery in VPNs:
      - VPNs often create tunnels that span multiple networks, and the path MTU between the two ends of the tunnel can be smaller than what would be used on a direct connection. Path MTU discovery helps VPNs avoid fragmentation, but some networks block ICMP messages, which are essential for this discovery.
      - If ICMP messages like Fragmentation Needed are blocked, the VPN tunnel may send packets that are too large for an intermediate network, causing packet loss or retransmissions.
    - Fragmentation Issues:
      - When an MTU mismatch occurs, the VPN will either fragment the packets at the network level or, if fragmentation is not allowed (the Don't Fragment, or DF, bit is set), drop the packets. Fragmentation introduces additional latency, lowers throughput, and can cause packet loss.
      - VPNs often have a lower effective MTU (for example, 1400 bytes instead of 1500), which accounts for the added headers and prevents fragmentation.
    - Adjusting MTU for VPN Connections: Most VPN clients or routers allow the user to adjust the MTU size to avoid fragmentation. For example, reducing the MTU size on a VPN tunnel to 1400 or 1350 bytes is common to account for VPN overhead.

- Instance Network Speeds

  Within OCI, the speed of the network adapter (vNIC) of your instance is bound to the instance shape and the number of OCPUs you have assigned to that shape.

  In this tutorial, we will use E4.Flex shapes with an Oracle Linux 8 image and 1 OCPU. This means we will get a maximum network bandwidth of 1 Gbps for all iPerf test results.

  - The shape is E4.Flex.
  - The OCPU count is 1.
  - The network bandwidth is 1 Gbps.

  Note: It is possible to increase the network bandwidth by choosing another shape and increasing the number of OCPUs.
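The VPN overhead figures listed in this section can be turned into a rough upper bound for the UDP datagram length passed to iPerf with `-l`. The following sketch is illustrative only. It assumes IPv4 (20-byte header) and UDP (8-byte header), uses the upper end of each overhead range quoted above to stay conservative, and the helper names are hypothetical. Real overhead depends on the cipher and tunnel configuration.

```python
IPV4_HEADER_BYTES = 20
UDP_HEADER_BYTES = 8

# Upper-end overhead figures quoted in this section, in bytes.
TUNNEL_OVERHEAD_BYTES = {"ipsec": 73, "openvpn": 60, "wireguard": 60}


def effective_mtu(link_mtu: int, tunnel: str) -> int:
    """MTU left for the inner packet after the tunnel adds its headers."""
    return link_mtu - TUNNEL_OVERHEAD_BYTES[tunnel]


def max_udp_payload(link_mtu: int, tunnel: str) -> int:
    """Largest UDP payload that avoids fragmentation inside the tunnel."""
    return effective_mtu(link_mtu, tunnel) - IPV4_HEADER_BYTES - UDP_HEADER_BYTES


for tunnel in TUNNEL_OVERHEAD_BYTES:
    print(tunnel, effective_mtu(1500, tunnel), max_udp_payload(1500, tunnel))
```

For a 1500-byte link and IPsec, this gives an effective MTU of 1427 bytes and a maximum UDP payload of 1399 bytes, which is in line with the 1400-byte tunnel MTU mentioned above.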

Objectives

- Use iPerf to test the throughput inside an OCI Hub and Spoke VCN routing architecture.

Task 1: Review the OCI Hub and Spoke VCN Routing Architecture

We will use the following architecture for all the iPerf throughput tests in this tutorial.

This is a full hub and spoke routing architecture with on-premises connected with an IPSec VPN tunnel. To recreate this routing topology, see:

-

Route Hub and Spoke VCN with pfSense Firewall in the Hub VCN

-

Connect On-premises to OCI using an IPSec VPN with Hub and Spoke VCN Routing Architecture.

Task 2: Install iPerf3 on the Hub Instances

Note: In this task, we will install iPerf3, and we will install iPerf2 in the next task.

Task 2.1: Install iPerf3 on Hub Step-stone

The hub step-stone is a Windows server instance. There are different iPerf distributions available for Windows here: windows. For this tutorial, we will download from here: Directory Lister.

- Download the zip file and unpack the file on the hub step-stone.

  - Go to the directory where you have unpacked the iPerf zip file.
  - Verify that the unpacked folder is available.
  - Notice another iPerf folder is there.
  - Go inside the iPerf folder.
  - Verify the files in the iPerf folder.
  - We need the `iPerf.exe` file, which we will use to perform the actual tests.

- Run the `iPerf.exe` command to see if it works.

Task 2.2: Install iPerf3 on a pfSense Firewall

- To install iPerf on pfSense, we need to install a package through the Package Manager.

  - Go to System Menu.
  - Select Package Manager.

- Click Available Packages.

  - Enter iPerf in Search term.
  - Click Search.
  - Note that there will be one result and this is the iPerf package version 3.0.3 (at the time of writing this tutorial).
  - Click +Install.

- Click Confirm.

- Note that the number of packages installed is 2.

  - Go to Diagnostics Menu.
  - Select iPerf.

- Click Client.

- Click Server.

Note: The pfSense firewall does not have the option (by default) to install the iPerf version 2 packages.

Task 3: Install iPerf3 on the Spoke Instances

Install iPerf3 on the Linux Instances inside OCI in our architecture.

Task 3.1: Install iPerf3 on Spoke Instance A1 and Instance A2

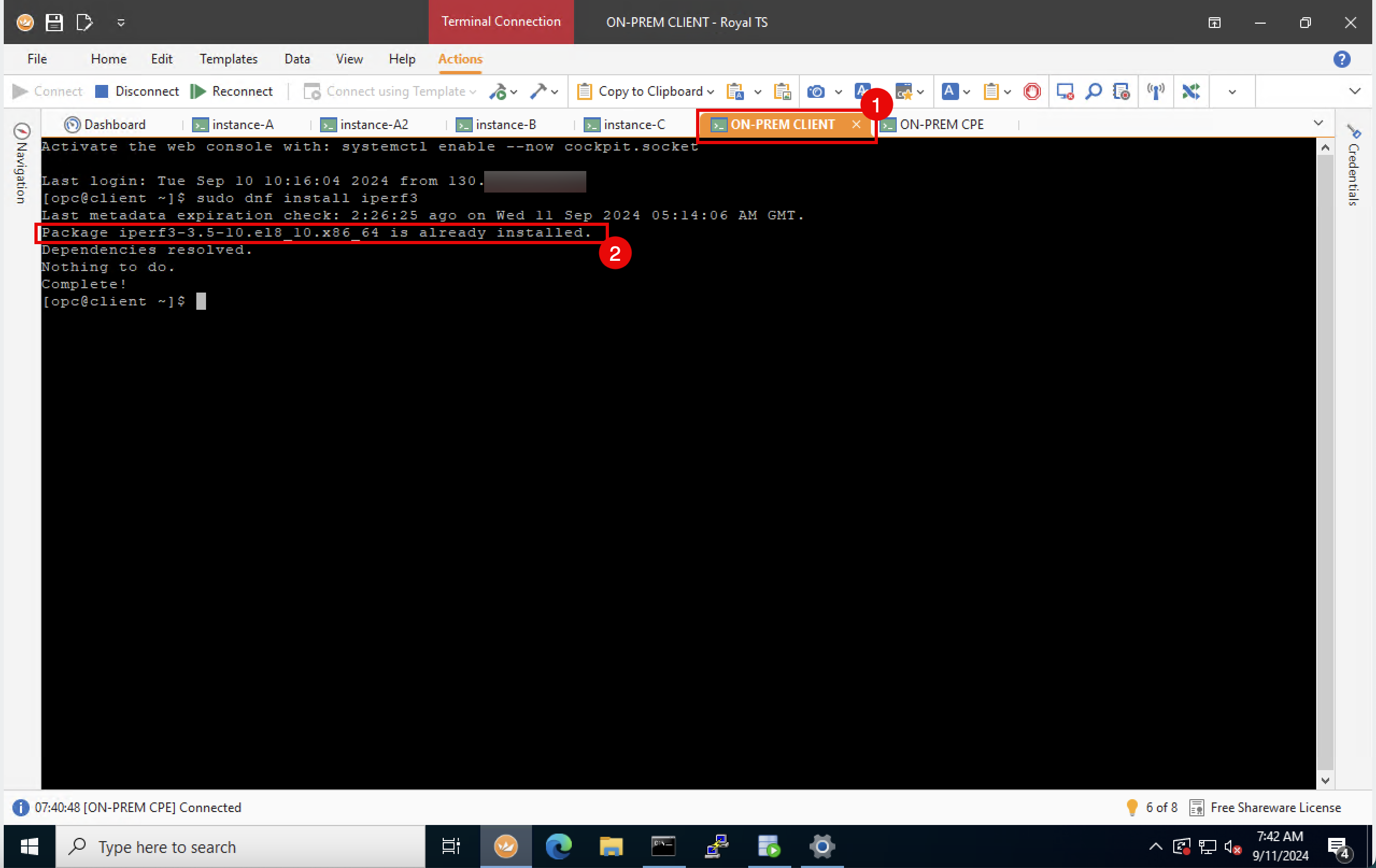

- Instance A1 already has iPerf3 installed.

  - Connect to instance A1.
  - Run the `sudo dnf install iperf3` command.
  - Note that iPerf3 is already installed.

- Run the `iperf3 -v` command to verify the iPerf version that is installed.

- Install iPerf3 on instance A2.

  - Connect to instance A2.
  - Run the `sudo dnf install iperf3` command.
  - Enter `Y`.

- iPerf3 will install. Note that the installation has been completed.

Task 3.2: Install iPerf3 on Spoke Instance B

- Connect to instance B.

- Run the `sudo dnf install iperf3` command to install iPerf3. If iPerf3 is already available, you will get a message that iPerf3 is already installed.

Task 3.3: Install iPerf3 on Spoke Instance C

- Connect to instance C.

- Run the `sudo dnf install iperf3` command to install iPerf3. If iPerf3 is already available, you will get a message that iPerf3 is already installed.

Task 3.4: Install iPerf3 on Instance D

- Connect to instance D.

- Run the `sudo dnf install iperf3` command to install iPerf3. If iPerf3 is already available, you will get a message that iPerf3 is already installed.

Task 4: Install iPerf3 on the On-Premises Instances

Install iPerf3 on the on-premises Linux Instances in our architecture.

Task 4.1: Install iPerf3 on Oracle Linux Client

- Connect to the on-premises Linux client instance.

- Run the `sudo dnf install iperf3` command to install iPerf3. If iPerf3 is already available, you will get a message that iPerf3 is already installed.

Task 4.2: Install iPerf3 on Oracle Linux Client CPE

- Connect to the on-premises Linux CPE instance.

- Run the `sudo dnf install iperf3` command to install iPerf3. If iPerf3 is already available, you will get a message that iPerf3 is already installed.

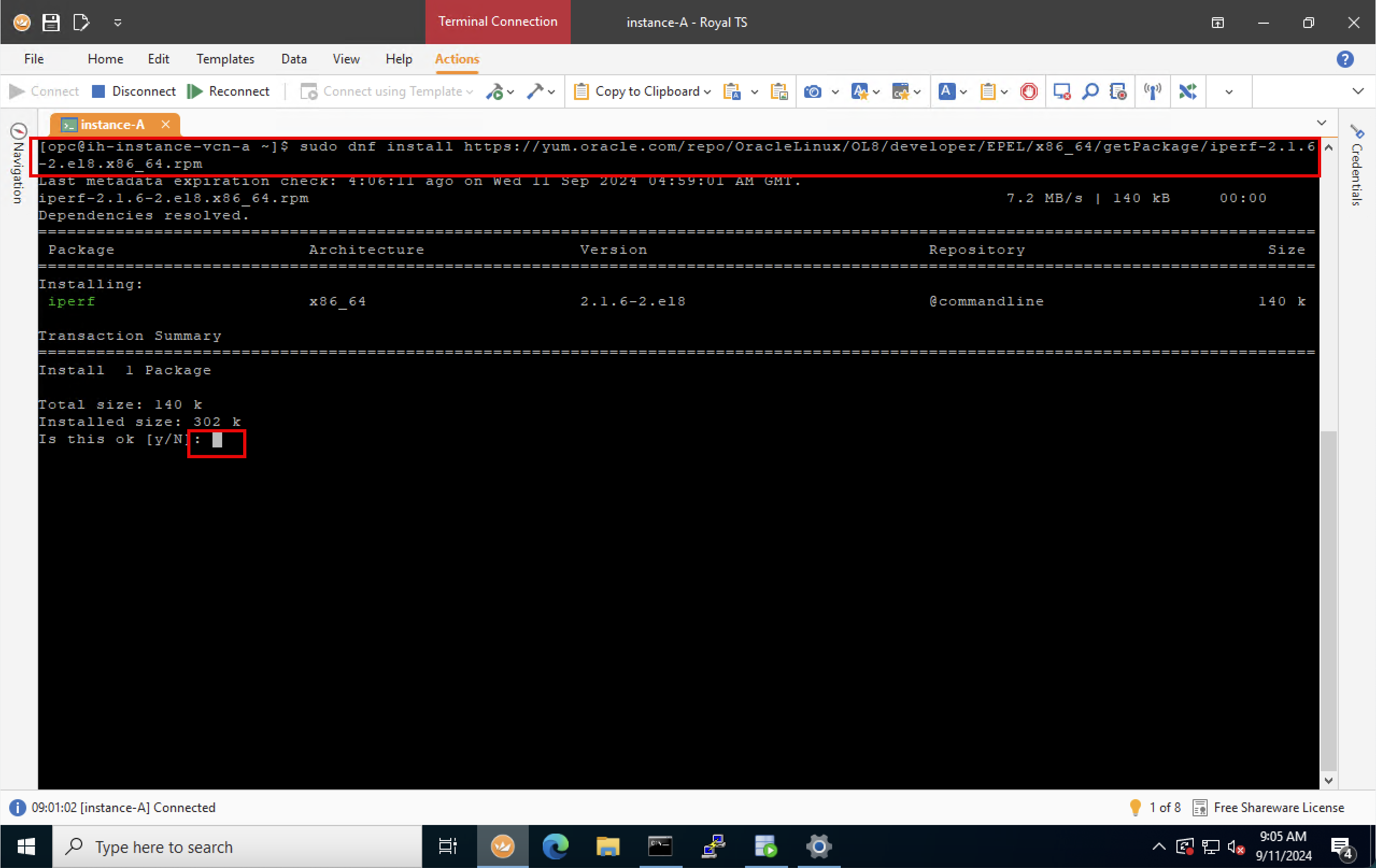

Task 5: Install iPerf2 on all Linux Instances

Now that we have installed iPerf3, we are going to install iPerf2 on all the Linux instances throughout the architecture.

We are using Oracle Linux 8, so we will need the following iPerf2 package: Oracle Linux 8 (x86_64) EPEL. If you are using Oracle Linux 9, use this package: Oracle Linux 9 (x86_64) EPEL. For another OS or Linux distribution, use a package that is compiled for that OS.

- Run the following command to install iPerf2 on all Oracle Linux 8 instances.

  `sudo dnf install https://yum.oracle.com/repo/OracleLinux/OL8/developer/EPEL/x86_64/getPackage/iperf-2.1.6-2.el8.x86_64.rpm`

- Enter `Y` to confirm the installation.

- Note that the installation has been completed.

  - Run the `iperf -v` command to verify the iPerf version that is installed.
  - Note that the iPerf version `2.1.6` is installed.

  Note: Make sure you install iPerf2 on all other instances as well.

- For the Windows based hub step-stone, download from here: iPerf-2.2.n-win64.

  - Run the `iPerf.exe` command to see if it works.
  - Run the `iPerf -v` command to verify the iPerf version that is installed.
  - Note that iPerf version `2.2.n` is installed.

Task 6: Define the iPerf Tests and Prepare the iPerf Commands

In this task, we will provide some iPerf commands with the additional flags and explain what they mean. For more information, see Network Performance.

- Basic iPerf commands for testing with TCP:

  - On the iPerf server side.

    `iperf3 -s`

  - On the iPerf client side.

    `iperf3 -c <server_instance_private_ip_address>`

- iPerf commands that we will use for testing with TCP:

  Note: Bi-directional bandwidth measurement (`-r`) and TCP window size (`-w`). The `-r` flag is an iPerf2 option, so these commands use iPerf2.

  - On the iPerf server side.

    `iperf -s -w 4000`

  - On the iPerf client side.

    `iperf -c <server_instance_private_ip_address> -r -w 2000`

    `iperf -c <server_instance_private_ip_address> -r -w 4000`

- iPerf commands that we will use for testing with UDP:

  Note: UDP tests (`-u`) and bandwidth settings (`-b`).

  - On the iPerf server side.

    `iperf -s -u -i 1`

  - On the iPerf client side.

    `iperf -c <server_instance_private_ip_address> -u -b 10m`

    `iperf -c <server_instance_private_ip_address> -u -b 100m`

    `iperf -c <server_instance_private_ip_address> -u -b 1000m`

    `iperf -c <server_instance_private_ip_address> -u -b 10000m`

    `iperf -c <server_instance_private_ip_address> -u -b 100000m`

- iPerf commands that we will use for testing with TCP (with MSS):

  Note: Print the TCP Maximum Segment Size (`-m`).

  - On the iPerf server side.

    `iperf -s`

  - On the iPerf client side.

    `iperf -c <server_instance_private_ip_address> -m`

- iPerf commands that we will use for testing with TCP (parallel):

  - On the iPerf server side.

    `iperf -s`

  - On the iPerf client side.

    `iperf -c <server_instance_private_ip_address> -P 2`

  Note: For all of the tests we will perform in this tutorial, we will use the following final commands.

- iPerf final command for testing:

  Note: Bandwidth settings (`-b`) and parallel tests (`-P`).

  To test the throughput of a 100 Gbps connection, we set the bandwidth to 9 Gbps with 11 parallel streams (9 Gbps × 11 = 99 Gbps).

  - On the iPerf server side.

    `iperf -s`

  - On the iPerf client side.

    `iperf -c <server_instance_private_ip_address> -b 9G -P 11`
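If you script these tests, the final client command can be assembled programmatically. The sketch below is one possible way to do it and not part of the tutorial's tooling. The helper names are hypothetical, `192.0.2.10` is a documentation placeholder address, and the aggregate calculation assumes (as the 9 Gbps with 11 streams arithmetic above implies) that `-b` applies to each parallel stream.

```python
def build_client_command(server_ip: str, bandwidth: str, streams: int,
                         binary: str = "iperf") -> list[str]:
    """Build the argument list for an iPerf2 client run with -b and -P."""
    return [binary, "-c", server_ip, "-b", bandwidth, "-P", str(streams)]


def aggregate_gbps(per_stream_gbps: float, streams: int) -> float:
    """Total offered load when every stream is limited to the same rate."""
    return per_stream_gbps * streams


# Placeholder address from the documentation range; replace with your server IP.
command = build_client_command("192.0.2.10", "9G", 11)
print(" ".join(command))       # iperf -c 192.0.2.10 -b 9G -P 11
print(aggregate_gbps(9, 11))   # 99
```

On a host where iPerf2 is installed, the resulting list can be passed to `subprocess.run(command)` to start the test.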

Task 7: Perform iPerf Tests within the Same VCN in the Same Subnet

In this task, we are going to perform an iPerf2 throughput test within the same VCN and the same subnet. The following image shows the paths with the arrows between two endpoints where we are going to perform the throughput tests.

Task 7.1: From instance-A1 to instance-A2

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 172.16.1.50 |
| IP of the iPerf client | 172.16.1.93 |
| iPerf command on the server | `iperf -s` |
| iPerf command on the client | `iperf -c 172.16.1.50 -b 9G -P 5` |
| Tested Bandwidth (SUM) | 1.05 Gbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Task 7.2: From instance-A2 to instance-A1

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 172.16.1.93 |
| IP of the iPerf client | 172.16.1.50 |
| iPerf command on the server | `iperf -s` |
| iPerf command on the client | `iperf -c 172.16.1.93 -b 9G -P 5` |
| Tested Bandwidth (SUM) | 1.05 Gbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.
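To compare the SUM values from the result tables against the 1 Gbps limit of the instance shape, it helps to convert them to a common unit. The following is a small sketch under the assumption that the values have the form `<number> <unit>` with the units shown in this tutorial. The function names are hypothetical.

```python
# Conversion factors to Mbits/sec for the units that appear in the result tables.
UNIT_TO_MBITS = {"Kbits/sec": 0.001, "Mbits/sec": 1.0, "Gbits/sec": 1000.0}


def to_mbits(text: str) -> float:
    """Convert a string such as '1.05 Gbits/sec' to Mbits/sec."""
    value, unit = text.split()
    return float(value) * UNIT_TO_MBITS[unit]


def utilization(measured: str, limit_mbits: float = 1000.0) -> float:
    """Fraction of the shape's bandwidth limit that the test reached."""
    return to_mbits(measured) / limit_mbits


# Results from Task 7: both directions reported 1.05 Gbits/sec.
print(f"{utilization('1.05 Gbits/sec'):.0%}")
```

A value slightly above 100% is possible because the reported SUM of parallel streams is a measurement, not the exact shape limit.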

Task 8: Perform iPerf Tests Within the Same VCN Across Different Subnets

In this task, we will perform an iPerf3 throughput test within the same VCN but across two different subnets. The arrows in the following image show the paths between the endpoints where we will perform the throughput tests.

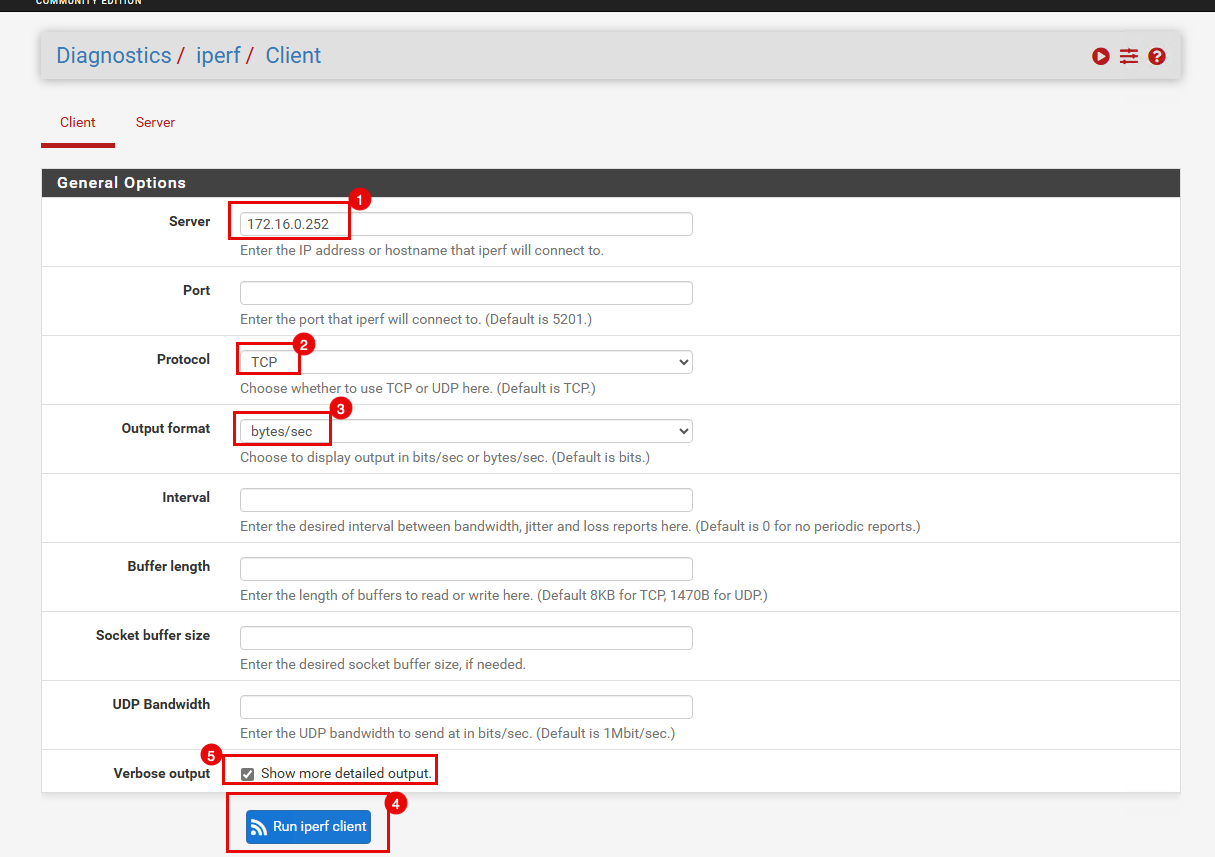

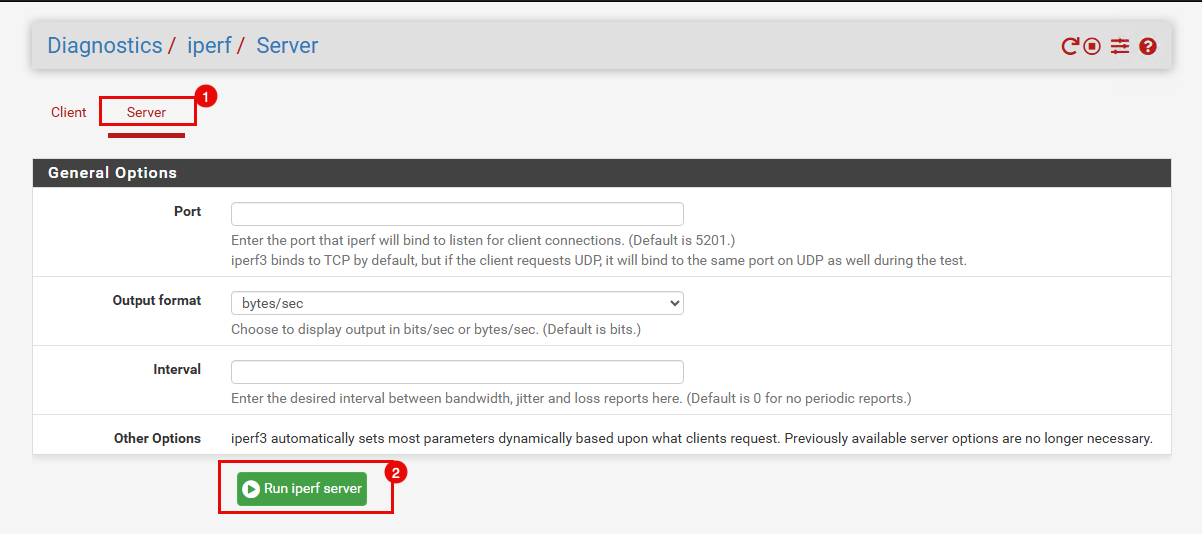

Task 8.1: From pfSense Firewall to Hub Step-Stone

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 172.16.0.252 |
| IP of the iPerf client | 172.16.0.20 |
| iPerf command on the server | `iperf3 -s` |
| iPerf command on the client | `iperf3 -c 172.16.0.252` |
| Tested Bandwidth (SUM) | 958 Mbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

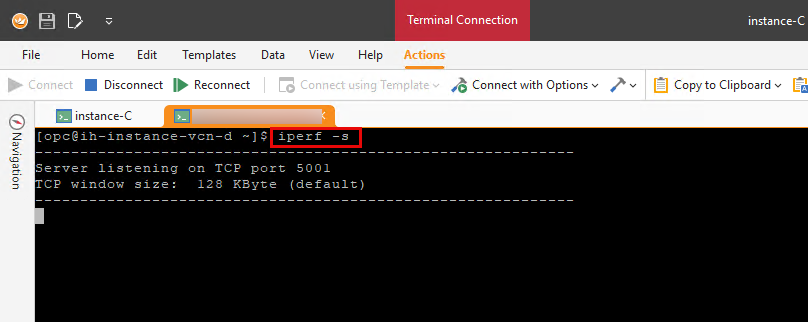

Task 8.2: From Hub Step-Stone to pfSense Firewall

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 172.16.0.20 |
| IP of the iPerf client | 172.16.0.252 |
| iPerf command on the server | `iperf3 -s` |
| iPerf command on the client | `iperf3 -c 172.16.0.20` |
| Tested Bandwidth (SUM) | 1.01 Gbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Task 9: Perform iPerf Tests Between two Different VCNs

In this task, we will perform an iPerf2 throughput test between two different VCNs and two different subnets. Note that the test will go through a firewall that is located in the hub VCN. The arrows in the following image show the paths between the endpoints where we will perform the throughput tests.

Task 9.1: From Instance-A1 to Instance-B

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 172.16.2.88 |
| IP of the iPerf client | 172.16.1.93 |
| iPerf command on the server | `iperf -s` |
| iPerf command on the client | `iperf -c 172.16.2.88 -b 9G -P 5` |
| Tested Bandwidth (SUM) | 1.02 Gbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Task 9.2: From Instance-B to Instance-A1

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 172.16.1.93 |
| IP of the iPerf client | 172.16.2.99 |
| iPerf command on the server | `iperf -s` |
| iPerf command on the client | `iperf -c 172.16.1.93 -b 9G -P 5` |
| Tested Bandwidth (SUM) | 1.02 Gbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Task 10: Perform iPerf Tests Between Different VCNs Bypassing the pfSense Firewall

In this task, we will perform an iPerf2 throughput test between two different VCNs and two different subnets. Note that the test will bypass the firewall that is located in the hub VCN. The arrows in the following image show the paths between the endpoints where we will perform the throughput tests.

Task 10.1: From Instance-C to Instance-D

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 172.16.4.14 |
| IP of the iPerf client | 172.16.3.63 |
| iPerf command on the server | `iperf -s` |
| iPerf command on the client | `iperf -c 172.16.4.14 -b 9G -P 5` |
| Tested Bandwidth (SUM) | 1.04 Gbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Task 10.2: From Instance-D to Instance-C

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 172.16.3.63 |
| IP of the iPerf client | 172.16.4.14 |
| iPerf command on the server | `iperf -s` |
| iPerf command on the client | `iperf -c 172.16.3.63 -b 9G -P 5` |
| Tested Bandwidth (SUM) | 1.05 Gbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Task 11: Perform iPerf Tests Between On-Premises and OCI Hub VCN

In this task, we will perform an iPerf2 throughput test between on-premises and OCI using a Site-to-Site IPSec VPN tunnel. Note that the test will go through the firewall that is located in the hub VCN. The arrows in the following image show the paths between the endpoints where we will perform the throughput tests.

Note:

When you perform throughput tests (with or without iPerf) through an IPSec VPN tunnel and a pfSense firewall, the Maximum Transmission Unit (MTU) and Maximum Segment Size (MSS) are important factors to take into account. If they are configured incorrectly, the throughput results will be invalid and not what you expect.

With iPerf, you can tweak the packet stream so that the packets are sent with a specific MSS. Use this option if you cannot change the MSS settings on the devices in the path between your source and destination.

Maximum Segment Size Clamping

In this tutorial, the on-premises side has an MTU of 9000 and sends packets with an MSS value of 1500 plus IPSec overhead.

The pfSense interface MTU is 1500 … causing fragmentation issues.

Setting the interface MSS to 1300 changes the segment size on the fly. This technique is called Maximum Segment Size clamping.

MSS Change on the pfSense
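Why an MSS of 1300 avoids fragmentation on a 1500-byte interface can be checked with simple arithmetic. The sketch below is an approximation and not a description of pfSense internals. It assumes 20-byte IPv4 and TCP headers without options, and it uses 73 bytes (the upper end of the IPsec overhead range quoted earlier in this tutorial) as the tunnel overhead. The function names are hypothetical.

```python
IPV4_HEADER_BYTES = 20
TCP_HEADER_BYTES = 20
# Assumption: worst-case IPsec overhead taken from the range quoted earlier.
IPSEC_OVERHEAD_BYTES = 73


def tunneled_packet_size(mss: int, tunnel_overhead: int = IPSEC_OVERHEAD_BYTES) -> int:
    """Size of a full TCP segment after the tunnel has encapsulated it."""
    return mss + IPV4_HEADER_BYTES + TCP_HEADER_BYTES + tunnel_overhead


def fits_without_fragmentation(mss: int, interface_mtu: int) -> bool:
    """True if an encapsulated full-size segment fits in the interface MTU."""
    return tunneled_packet_size(mss) <= interface_mtu


for mss in (1460, 1300, 1200):
    print(mss, tunneled_packet_size(mss), fits_without_fragmentation(mss, 1500))
```

Under these assumptions, a 1460-byte MSS produces 1573-byte packets that exceed the 1500-byte MTU, while 1300 and 1200 bytes leave headroom.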

Task 11.1: From VPN Client Instance (On-Premises) to Hub Step-Stone

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 172.16.0.252 |
| IP of the iPerf client | 10.222.10.19 |
| iPerf command on the server | `iperf -s` |
| iPerf command on the client | `iperf -c 172.16.0.252 -b 9G -P 5` |
| Tested Bandwidth (SUM) | 581 Mbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Task 11.2: From Hub Step-Stone to VPN Client Instance (On-Premises)

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 10.222.10.19 |
| IP of the iPerf client | 172.16.0.252 |
| iPerf command on the server | `iperf -s` |
| iPerf command on the client | `iperf -c 10.222.10.19 -b 9G -P 5` |
| Tested Bandwidth (SUM) | 732 Mbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Task 12: Perform iPerf Tests Between On-Premises and OCI Spoke VCN

In this task, we will perform an iPerf2 throughput test between on-premises and OCI using a Site-to-Site IPSec VPN tunnel. Note that the test will go through the firewall that is located in the hub VCN. The arrows in the following image show the paths between the endpoints where we will perform the throughput tests.

Task 12.1: From VPN Client Instance (On-Premises) to Instance-A1

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 172.16.1.93 |
| IP of the iPerf client | 10.222.10.19 |
| iPerf command on the server | `iperf -s` |
| iPerf command on the client | `iperf -c 172.16.1.93 -b 9G -P 5` |
| Tested Bandwidth (SUM) | 501 Mbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

New Tests with MSS in iPerf Command:

Note: With iPerf, you can tweak the packet stream so that the packets are sent with a specific MSS. Use the following commands if you cannot change the MSS settings on the devices in the path between your source and destination.

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 172.16.1.93 |
| IP of the iPerf client | 10.222.10.19 |
| iPerf command on the server | `iperf -s` |
| iPerf command on the client | `iperf -c 172.16.1.93 -b 9G -P 5 -M 1200` |
| Tested Bandwidth (SUM) | 580 Mbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Task 12.2: From Instance-A1 to VPN Client Instance (On-Premises)

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 10.222.10.19 |
| IP of the iPerf client | 172.16.1.93 |
| iPerf command on the server | `iperf -s` |
| iPerf command on the client | `iperf -c 10.222.10.19 -b 9G -P 5` |
| Tested Bandwidth (SUM) | 620 Mbits/sec |

In the next screenshots, you will also find the full testing outputs of the iPerf tests.

New Tests with MSS in iPerf Command:

Note: With iPerf, you can tweak the packet stream so that the packets are sent with a specific MSS. Use the following commands if you cannot change the MSS settings on the devices in the path between your source and destination.

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| Parameter | Value |
|---|---|
| IP of the iPerf server | 10.222.10.19 |
| IP of the iPerf client | 172.16.1.93 |
| iPerf command on the server | `iperf -s` |
| iPerf command on the client | `iperf -c 10.222.10.19 -b 9G -P 5 -M 1200` |
| Tested Bandwidth (SUM) | 805 Mbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.
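The effect of adding `-M 1200` in Task 12 can be expressed as a relative change. The short sketch below uses only the SUM values from the Task 12 tables. The function name and dictionary layout are illustrative choices, and the percentages apply to this test environment only.

```python
# (without -M, with -M 1200) in Mbits/sec, taken from the Task 12 result tables.
TASK_12_RESULTS = {
    "on-premises -> Instance-A1": (501, 580),
    "Instance-A1 -> on-premises": (620, 805),
}


def percent_change(before: float, after: float) -> float:
    """Relative change from 'before' to 'after', in percent."""
    return (after - before) / before * 100


for path, (default_mss, fixed_mss) in TASK_12_RESULTS.items():
    print(f"{path}: {percent_change(default_mss, fixed_mss):+.1f}%")
```

In these runs, setting the MSS explicitly improved the measured throughput by roughly 16% in one direction and 30% in the other.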

Task 13: Perform iPerf Tests Between On-Premises and OCI Spoke VCN bypassing the pfSense Firewall

In this task, we will perform an iPerf2 throughput test between on-premises and OCI using a Site-to-Site IPSec VPN tunnel. Note that the test will bypass the firewall that is located in the hub VCN. The arrows in the following image show the paths between the endpoints where we will perform the throughput tests.

Task 13.1: From VPN Client Instance (On-Premises) to Instance-D

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| IP of the iPerf server | 172.16.4.14 |

| IP of the iPerf client | 10.222.10.19 |

| iPerf command on the server | iPerf -s |

| iPerf command on the client | iPerf -c 172.16.4.14 -b 9G -P 5 |

| Tested Bandwidth (SUM) | 580 Mbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.
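The client commands in these tasks all use the same options: `-c` to connect to the server, `-b` for the target bandwidth, `-P` for the number of parallel streams, and optionally `-M` for the MSS. If you script the tests, the command line can be assembled as shown in the following sketch. The helper name and its defaults are assumptions for illustration, and the binary is assumed to be installed as `iperf`.

```python
import shlex
from typing import List, Optional


def iperf_client_args(server_ip: str, bandwidth: str = "9G",
                      parallel: int = 5,
                      mss: Optional[int] = None) -> List[str]:
    """Build the iPerf2 client argument list used in this tutorial."""
    args = ["iperf", "-c", server_ip, "-b", bandwidth, "-P", str(parallel)]
    if mss is not None:
        # Only add -M when a specific MSS is requested.
        args += ["-M", str(mss)]
    return args


print(shlex.join(iperf_client_args("172.16.4.14")))
# iperf -c 172.16.4.14 -b 9G -P 5
print(shlex.join(iperf_client_args("10.222.10.19", mss=1200)))
# iperf -c 10.222.10.19 -b 9G -P 5 -M 1200
```

The returned list can be passed to `subprocess.run` on the client instance once `iperf -s` is running on the server.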

Task 13.2: From Instance-D to VPN Client Instance (On-Premises)

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| IP of the iPerf server | 10.222.10.19 |

| IP of the iPerf client | 172.16.4.14 |

| iPerf command on the server | iPerf -s |

| iPerf command on the client | iPerf -c 10.222.10.19 -b 9G -P 5 |

| Tested Bandwidth (SUM) | 891 Mbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Task 14: Perform iPerf Tests Between the Internet and the OCI Hub VCN

In this task, we are going to perform an iPerf2 throughput test between a client on the internet and OCI over the public internet. The following image shows the paths, marked with arrows, between the two endpoints where we are going to perform the throughput tests.

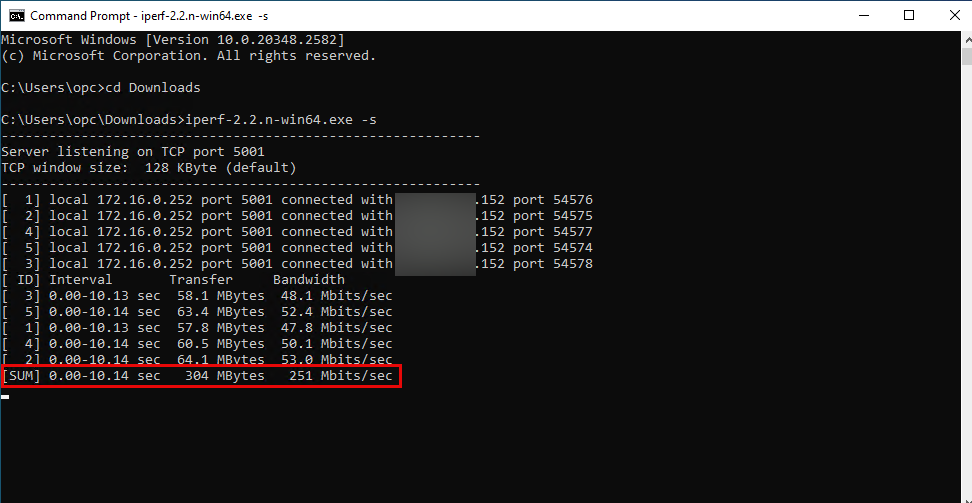

Task 14.1: From Internet to Hub Step-Stone

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| IP of the iPerf server | xxx.xxx.xxx.178 |

| IP of the iPerf client | xxx.xxx.xxx.152 |

| iPerf command on the server | iPerf -s |

| iPerf command on the client | iPerf -c xxx.xxx.xxx.178 -b 9G -P 5 |

| Tested Bandwidth (SUM) | 251 Mbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Task 15: Perform iPerf Tests within the Same Subnet On-Premises

In this task, we are going to perform an iPerf2 throughput test between two on-premises instances. The following image shows the paths, marked with arrows, between the two endpoints where we are going to perform the throughput tests.

Task 15.1: From VPN Client Instance (On-Premises) to StrongSwan CPE Instance (On-Premises)

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| IP of the iPerf server | 10.222.10.70 |

| IP of the iPerf client | 10.222.10.19 |

| iPerf command on the server | iPerf -s |

| iPerf command on the client | iPerf -c 10.222.10.70 -b 9G -P 5 |

| Tested Bandwidth (SUM) | 1.05 Gbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Task 15.2: From StrongSwan CPE Instance (On-Premises) to VPN Client Instance (On-Premises)

In the following table, you will find the IP address of the client and the server (used in this test), and the commands used to perform the iPerf test with the test results.

| IP of the iPerf server | 10.222.10.19 |

| IP of the iPerf client | 10.222.10.70 |

| iPerf command on the server | iPerf -s |

| iPerf command on the client | iPerf -c 10.222.10.19 -b 9G -P 5 |

| Tested Bandwidth (SUM) | 1.05 Gbits/sec |

The following images illustrate the commands and full testing output of the iPerf test.

Results

In this tutorial, we have performed different types of throughput tests using iPerf2 and iPerf3. The tests were performed between various sources and destinations across the full network architecture, using different paths.

In the following table, you can see a summary of the test results that we collected.

| Test Type | Bandwidth Result | Path Type |
|---|---|---|
| Task 7.1: Perform iPerf tests within the same VCN in the same subnet (From Instance-A1 to Instance-A2) | 1.05 Gbits/sec | OCI internal |
| Task 7.2: Perform iPerf tests within the same VCN in the same subnet (From Instance-A2 to Instance-A1) | 1.05 Gbits/sec | OCI internal |
| Task 8.1: Perform iPerf tests within the same VCN across different subnets (From pfSense Firewall to Hub Stepstone) | 958 Mbits/sec | OCI internal |
| Task 8.2: Perform iPerf tests within the same VCN across different subnets (From Hub Stepstone to pfSense Firewall) | 1.01 Gbits/sec | OCI internal |
| Task 9.1: Perform iPerf tests between two different VCNs (From Instance-A1 to Instance-B) | 1.02 Gbits/sec | OCI internal |
| Task 9.2: Perform iPerf tests between two different VCNs (From Instance-B to Instance-A1) | 1.02 Gbits/sec | OCI internal |
| Task 10.1: Perform iPerf tests between different VCNs (bypassing the pfSense Firewall) (From Instance-C to Instance-D) | 1.04 Gbits/sec | OCI internal |
| Task 10.2: Perform iPerf tests between different VCNs (bypassing the pfSense Firewall) (From Instance-D to Instance-C) | 1.05 Gbits/sec | OCI internal |
| Task 11.1: Perform iPerf tests between on-premises and OCI Hub VCN (From VPN Client Instance (on-premises) to Hub Stepstone) | 581 Mbits/sec | On-premises to OCI through firewall |
| Task 11.2: Perform iPerf tests between on-premises and OCI Hub VCN (From Hub Stepstone to VPN Client Instance (on-premises)) | 732 Mbits/sec | On-premises to OCI through firewall |
| Task 12.1: Perform iPerf tests between on-premises and OCI Spoke VCN (From VPN Client Instance (on-premises) to Instance-A1) | 501 Mbits/sec | On-premises to OCI through firewall |
| Task 12.2: Perform iPerf tests between on-premises and OCI Spoke VCN (From Instance-A1 to VPN Client Instance (on-premises)) | 620 Mbits/sec | On-premises to OCI through firewall |
| Task 13.1: Perform iPerf tests between on-premises and OCI Spoke VCN (bypassing the pfSense Firewall) (From VPN Client Instance (on-premises) to Instance-D) | 580 Mbits/sec | On-premises to OCI firewall bypass |
| Task 13.2: Perform iPerf tests between on-premises and OCI Spoke VCN (bypassing the pfSense Firewall) (From Instance-D to VPN Client Instance (on-premises)) | 891 Mbits/sec | On-premises to OCI firewall bypass |
| Task 14.1: Perform iPerf tests between the internet and the OCI Hub VCN (From Internet to Hub Stepstone) | 251 Mbits/sec | Internet to OCI |
| Task 15.1: Perform iPerf tests within the same subnet on-premises (From VPN Client Instance (on-premises) to StrongSwan CPE Instance (on-premises)) | 1.05 Gbits/sec | On-premises to on-premises |
| Task 15.2: Perform iPerf tests within the same subnet on-premises (From StrongSwan CPE Instance (on-premises) to VPN Client Instance (on-premises)) | 1.05 Gbits/sec | On-premises to on-premises |
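To compare the paths at a glance, the results can be converted to a common unit and averaged per path type. The following sketch does this for the Task 11 to Task 15 rows of the summary table; the helper name and data layout are illustrative, and the values are written with a space before the unit so they can be split. The averages only summarize the numbers in this tutorial and are not a prediction for other environments.

```python
from collections import defaultdict
from statistics import mean

UNIT_TO_MBITS = {"Mbits/sec": 1.0, "Gbits/sec": 1000.0}


def to_mbits(result: str) -> float:
    """Convert a result such as '1.05 Gbits/sec' to Mbits/sec."""
    value, unit = result.split()
    return float(value) * UNIT_TO_MBITS[unit]


# (task, bandwidth result, path type) taken from the summary table.
results = [
    ("11.1", "581 Mbits/sec", "On-premises to OCI through firewall"),
    ("11.2", "732 Mbits/sec", "On-premises to OCI through firewall"),
    ("12.1", "501 Mbits/sec", "On-premises to OCI through firewall"),
    ("12.2", "620 Mbits/sec", "On-premises to OCI through firewall"),
    ("13.1", "580 Mbits/sec", "On-premises to OCI firewall bypass"),
    ("13.2", "891 Mbits/sec", "On-premises to OCI firewall bypass"),
    ("14.1", "251 Mbits/sec", "Internet to OCI"),
    ("15.1", "1.05 Gbits/sec", "On-premises to on-premises"),
    ("15.2", "1.05 Gbits/sec", "On-premises to on-premises"),
]

# Group the converted values by path type.
by_path = defaultdict(list)
for _task, bandwidth, path in results:
    by_path[path].append(to_mbits(bandwidth))

averages = {path: mean(values) for path, values in by_path.items()}
for path, avg in averages.items():
    print(f"{path}: {avg:.1f} Mbits/sec")
```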

Acknowledgments

- Author - Iwan Hoogendoorn (OCI Network Specialist)

More Learning Resources

Explore other labs on docs.oracle.com/learn or access more free learning content on the Oracle Learning YouTube channel. Additionally, visit education.oracle.com/learning-explorer to become an Oracle Learning Explorer.

For product documentation, visit Oracle Help Center.

G17011-01

October 2024