Note:

- This tutorial requires access to Oracle Cloud. To sign up for a free account, see Get started with Oracle Cloud Infrastructure Free Tier.

- It uses example values for Oracle Cloud Infrastructure credentials, tenancy, and compartments. When completing your lab, substitute these values with ones specific to your cloud environment.

Build an AI Agent with Multi-Agent Communication Protocol Server for Invoice Resolution

Introduction

Companies that deal with a large volume of products - such as distributors, industries and retail chains - often face the challenge of identifying products based on inaccurate, incomplete or varied textual descriptions. In environments where data is entered manually, typos, abbreviations and different trade names can make it difficult to correctly identify items in systems such as Enterprise Resource Planning (ERP), Customer Relationship Management (CRM) and e-commerce platforms.

In this scenario, there is a common need for tools that can:

-

Interpret informal or incorrect descriptions provided by users.

-

Suggest the most similar products based on semantic similarity.

-

Guarantee a fallback with traditional algorithms (such as fuzzy matching) if the semantic search does not find relevant results.

-

Integrate with APIs and automated flows of intelligent agents.

In this tutorial, we will learn how to create an AI agent specialized in resolving inconsistencies in customer return invoices. The agent is able to interact with an MCP server that provides vector search and invoice retrieval tools, allowing the agent to automatically find the company’s original A/R invoice based on information provided by the customer.

A MCP Server (Model Context Protocol) is a software component responsible for mediating communication between intelligent agents and external services through a standardized protocol of messages and tools. It acts as a bridge between language models (LLMs) and existing APIs, allowing AI agents to access specific functionalities of legacy systems without the need to rewrite or directly adapt them.

How does it work?

The MCP server:

- Exposes a set of named tools that represent actions or services agents can call.

- Each tool can be connected to a database function, REST API, legacy system, or any programmable operation.

- Receives agent commands (in JSON format), executes the corresponding service, and returns a standardized response.

Usage with Legacy Applications

Legacy applications often already provide functionality through databases, files, or internal APIs. With a MCP Server, you can:

-

Encapsulate calls to REST services, stored procedures, or SQL queries.

-

Make these services accessible to an agent through a unified communication interface.

-

Integrate older systems with modern architectures based on conversational AI and RAG.

Advantages

-

Reuse of existing business logic.

-

No need to refactor legacy systems.

-

Enables LLM agents to interact with external systems in a secure and controlled manner.

-

Facilitates testing, versioning, and modularity in the integration between AI and backend services.

This agent is based on an Oracle Cloud Infrastructure (OCI) Generative AI language model and integrates with dynamically declared tools managed by an MCP server.

With these two components integration, the system enables an agent based on Oracle LLM:

- Use remotely hosted tools using MCP.

- Perform intelligent searches for products and EANs.

- Locate corresponding A/R invoices.

- Record everything in observability using Phoenix and OpenTelemetry.

This modular design allows for reusability and easy evolution of the system to domains other than invoices.

Objectives

-

Configure an AI agent with LangGraph and LangChain to work with structured prompts.

-

Integrate this agent with an MCP server using the

stdioprotocol. -

Use remote tools registered on the server to:

- Perform vector searches from product descriptions.

- Identify the most likely EAN code for an item.

- Search for original invoices based on criteria such as customer, state and price.

-

Monitor agent execution in real time using Phoenix and OpenTelemetry.

-

Simulate a real problem resolution based on a JSON input such as:

{ "customer": "Customer 43", "description": "Harry Potter", "price": 139.55, "location": "RJ" }

Prerequisites

-

Access to an OCI tenancy to manage your cloud resources. You can register for free at

oracle.com/cloud/free. -

Install Python

version 3.12or higher. -

Access to an OCI account with the OCI Generative AI service enabled.

-

Install and configure the Python

Langchainlibrary. -

Access to the

cohere.command-r-08-2024model using OCI Generative AI. -

Install auxiliary libraries:

oracledbsentence_transformersnumpymcp-server-fastmcpasynciolangchain_corelangchain_communitymcplanggraphlangchain_mcp_adaptersphoenix(for observability with OpenTelemetry)opentelemetry-sdk,opentelemetry-exporter-otlp

-

A functional MCP server with the tools:

resolve_eansearch_vectorized_productsearch_invoices_by_criteria

-

Configure the

server_invoice_items.pyfile to run as an MCP server simulating an ERP. -

Download and install the

requirements.txtfile usingpip install -r requirements.txtcommand.

Task 1: Create an Oracle Database 23ai (Always Free)

In this task, we will learn how to provision an Oracle Database 23ai in Always Free mode. This version offers a fully managed environment, ideal for development, testing and learning, at no additional cost.

-

Log in to the OCI Console, navigate to Oracle Database, Autonomous Database and click Create Autonomous Database Instance.

-

Enter the following information.

- Database Name: Enter an identifying name for your instance.

- Workload Type: Select Data Warehouse or Transaction Processing, according to your needs.

- Compartment: Select an appropriate compartment to organize your resources.

-

Select Always Free to ensure that the instance is provisioned for free.

-

Create a secure password for the

ADMINuser, which will be used to access the database. -

Review the settings and click Create Autonomous Database. Wait a few minutes for the instance to be provisioned and available for use.

Task 2: Run the Autonomous Database Table Creation Script

Prepare the database for our use case. Download and run the SQL script from here: script.sql that creates three essential tables (PRODUCTS, INVOICE and ITEM_INVOICE) for the scenario of reconciling invoices with AI agents.

-

Go to the OCI Console, navigate to Oracle Database, Autonomous Database and click the name of the newly created instance.

-

Click Database Actions and SQL to open the SQL console in the browser.

-

Download and open the

script.sqlfile locally and paste all the contents into the SQL console editor. -

Click Run or press Ctrl + Enter. Wait for confirmation that the commands have been executed successfully.

-

Run the following commands to verify that the tables have been created.

SELECT table_name FROM user_tables;

Task 3: Insert Example Data into Tables

Insert dummy data that will simulate a real scenario for the application of AI agents. We will use two SQL scripts:

insert_products_books.sql: Inserts a list of books as products, with their respective EANs and descriptions.invoice_data_insert.sql: Inserts mock A/R invoice records, associated with customers, products and prices.

This data will be used by AI agents to resolve inconsistencies in returns invoices.

-

Go to the OCI Console, navigate to Oracle Database, Autonomous Database and click the name of the newly created instance.

-

Click Database Actions and SQL to open the SQL console in the browser.

-

Download the

insert_products_books.sqlfile content from here:insert_products_books.sqland paste it into the SQL editor. -

Click Run or press Ctrl + Enter.

-

Now, download the

invoice_data_insert.sqlfile content from here:invoice_data_insert.sqlfile and paste it into the editor. -

Click Run or press Ctrl + Enter.

-

Run the following command to check the data.

SELECT * FROM PRODUCTS; SELECT * FROM INVOICE; SELECT * FROM ITEM_INVOICE;

Task 4: Create and Compile the Advanced Search Function in the Database

Create a PL/SQL function called fn_advanced_search, which performs intelligent searches for keywords in product descriptions. This function will be used by AI agents as part of the resolve_ean tool, allowing them to find the nearest EAN code based on the description provided by a customer on the returns note.

What does the function do?

-

Tokenization of input terms (Example:

harry poter stonebecomes [harry,poter,stone]) -

Search techniques with scoring:

-

Direct search in descriptions

(LIKE '%term%')→ +3 points. -

Phonetic search using

SOUNDEX→ +2 points. -

Similar spelling search using

UTL_MATCH.EDIT_DISTANCE <= 2→ +1 point.

-

-

Scoring logic:

- Sums points for each product.

- Returns only products with total score > 0.

-

Return format:

Products are returned as

result_producttype objects containing:code(EAN).description(product description).similarity(calculated search score).

Follow the steps:

-

Copy and paste the complete

similarity_search.sqlscript into the autonomous database SQL console.This includes:

- Creating the

productstable (if it has not already been done). - Creating a text index.

- Types

product_resultandproduct_result_tab. - The

fn_advanced_searchfunction. - Optional tests.

- Creating the

-

Run the complete script. The result should be

Function createdandType created. -

Run the following query to test the function with simulated descriptions.

SELECT * FROM TABLE(fn_advanced_search('harry poter askaban')) ORDER BY similarity DESC;

Task 5: Vectorize Products for Semantic Search with AI

In this task, we will complement advanced SQL-based search with a new approach based on semantic vectors. This will be especially useful for AI agents that use embeddings (numerical representations of phrases) to compare similarity between product descriptions - more flexibly and intelligently than word or phonetic searches.

To do this, we will use the Python script (process_vector_products.py), which connects to the Oracle database, extracts the products from the PRODUCTS table, transforms their descriptions into vectors (embeddings), and builds a vector index using the Oracle database itself.

What does the script do?

- Reads the products from the

productstable usingoracledb. - Generates the embeddings using the

all-MiniLM-L6-v2model from thesentence-transformerspackage. - Create the

embeddings_productstable to store the vectors directly in Oracle. - Insert or update the records and save the vector as a binary BLOB (in serialized

float32format).

Note: The embeddings are converted into bytes with

np.float32.tobytes()to be stored as a BLOB. To retrieve the vectors, usenp.frombuffer(blob, dtype=np.float32).

This format allows future similarity searches to be done directly using SQL or by loading the vectors from the database for operations with np.dot, cosine_similarity or integration with LLMs.

This script generates semantic embeddings for products and writes these vectors to the Oracle Database 23ai. The main points are highlighted below:

-

Configure the Connection to Oracle using Wallet.

The code uses the

oracledblibrary in thin mode and configures secure access using an Oracle Wallet.os.environ["TNS_ADMIN"] = WALLET_PATH connection = oracledb.connect( user=USERNAME, password=PASSWORD, dsn=DB_ALIAS, ... ) -

Consultation of the Product Table.

The

productstable contains the original data (ID, code and description). These descriptions are used as the basis for generating the semantic vectors.cursor.execute("SELECT id, code, description FROM products") -

Generate Embeddings with

sentence-transformersThe

all-MiniLM-L6-v2model is used to transform product descriptions into high-dimensional numerical vectors.model = SentenceTransformer('all-MiniLM-L6-v2') embeddings = model.encode(descriptions, convert_to_numpy=True) -

Create the Embeddings Table (if it does not exist).

The

embeddings_productstable is created dynamically with the following fields:id: Product identifier (primary key).code: Product code.description: Original description.vector: BLOB containing the vector serialized infloat32.

CREATE TABLE embeddings_products ( id NUMBER PRIMARY KEY, code VARCHAR2(100), description VARCHAR2(4000), BLOB vector )Note: The creation uses

EXECUTE IMMEDIATEinside aBEGIN...EXCEPTIONto avoid an error if the table already exists. -

Insert or Update using

MERGE.For each product, the vector is converted into bytes (

float32) and inserted or updated in theembeddings_productstable using aMERGE INTO.vector_bytes = vector.astype(np.float32).tobytes()MERGE INTO embeddings_products ...

Run the Script:

Note: You need Oracle Wallet downloaded and configured.

Run it in the terminal.

python process_vector_products.py

Done! The products in the database have been vectorized.

Why is this important?

Vector search is highly effective for finding products even when the description is subjective, imprecise or in natural language.

Understand the Code: LLM Agent with MCP Server

This project is made up of 3 main components:

- ReAct Agent with LangGraph and OCI LLM (

main.py). - MCP Server with Invoice Resolution Tools (

server_invoice_items.py). - Search for Similar Products with OCI Generative AI and FAISS (

product_search.py).

Understand the functionality of each component and detailed highlights the most important parts of the code.

-

ReAct Agent with LangGraph and LLM from OCI:

This component runs the main application, where the user interacts with the agent based on Oracle Cloud’s Large Language Model (LLM). It communicates with the MCP server using a

stdioprotocol.Main features:

-

Telemetry Configuration with Phoenix and OpenTelemetry.

px.launch_app() ... trace.set_tracer_provider(provider) -

Create LLM Model using

ChatOCIGenAI.llm = ChatOCIGenAI( model_id="cohere.command-r-08-2024", ... ) -

Definition of the Task-Oriented Prompt for Reconciling Invoices.

prompt = ChatPromptTemplate.from_messages([ ("system", """You are an agent responsible for resolving inconsistencies in invoices...""), ("placeholder", "{messages}") ]) -

Local MCP Server Execution using

stdio.# Run the client with the MCP server async def main(): async with MultiServerMCPClient( { "InvoiceItemResolver": { "command": "python", "args": ["server_invoice_items.py"], "transport": "stdio", }, } ) as client: -

Main User Interaction Loop.

while True: query = input("You: ") ... result = await agent_executor.ainvoke({"messages": memory_state.messages}) -

Integration with Tools Exposed by the MCP Server.

agent_executor = create_react_agent( model=llm, tools=tools, prompt=prompt, ) -

Multiples MCP Servers.

If you want to consume multiple MCP servers, just include the servers here:

# Run the client with the MCP server async def main(): async with MultiServerMCPClient( { "InvoiceItemResolver": { "command": "python", "args": ["server_invoice_items.py"], "transport": "stdio", }, "InvoiceItemResolver": { "command": "python", "args": ["another_mcp_server.py"], "transport": "stdio", }, ... } ) as client: tools = client.get_tools() if not tools: print("❌ No MCP tools were loaded. Please check if the server is running.") return

Prompt:

The prompt is essential for establishing the process and operating rules for the AI agent.

-

-

MCP Server with Resolution Tools:

This server responds to agent calls by providing tools that access an Oracle Database with product and invoice information.

Main features:

-

Initialization of the MCP Server with the Name

InvoiceItemResolver.mcp = FastMCP("InvoiceItemResolver") -

Connection to Oracle Database using Oracle Wallet.

connection = oracledb.connect( user=USERNAME, password=PASSWORD, dsn=DB_ALIAS, wallet_location=WALLET_PATH, ... ) -

Implementation of MCP Tools.

-

search_vectorized_product:Searches for similar products with embeddings.

@mcp.tool() def search_vectorized_product(description: str) -> dict: """ Searches for a product by description using embeddings. """ return sercher.search_similar_products(description) -

resolve_ean:Resolves an EAN based on description similarity.

@mcp.tool() def resolve_ean(description: str) -> dict: result = execute_search_ean(description) ... return {"ean": result[0]["code"], ...} -

search_invoices_by_criteria:Searches for A/R invoices based on multiple filters.

@mcp.tool() def search_invoices_by_criteria(customer: str = None, state: str = None, price: float = None, ean: str = None, margin: float = 0.05) -> list: """ Searches for outbound invoices based on customer, state, EAN, and approximate price. Allows one or more fields to be omitted. As long as an EAN has not been established, it is not useful to use this service. """ query = """ SELECT nf.no_invoice, nf.name_customer, nf.state, nf.date_print, inf.no_item, inf.code_ean, inf.description_product, inf.value_unitary FROM invoice nf JOIN item_invoice inf ON nf.no_invoice = inf.no_invoice WHERE 1=1 """ params = {} #if customer: query += " AND LOWER(nf.name_customer) LIKE LOWER(:customer)" params["customer"] = f"%{customer}%" #if state: query += " AND LOWER(nf.state) = LOWER(:state)" params["state"] = state #if ean: query += " AND inf.code_ean = :ean" params["ean"] = ean if price is not None: query += " AND inf.value_unitary BETWEEN :price_min AND :price_max" params["price_min"] = price * (1 - margin) params["price_max"] = price * (1 + margin) result = execute_query(query, params) return [ dict(zip( ["no_invoice", "name_customer", "state", "date_print", "no_item", "code_ean", "description_product", "value_unitary"], row )) for row in result ]

-

-

Run the Server in

stdioMode.if __name__ == "__main__": mcp.run(transport="stdio")

-

-

Search for Similar Products with OCI Generative AI and Vector Database:

This module

product_search.pyimplements a Python class that allows you to search for semantically similar products from a textual description, using:- Embeddings from OCI Generative AI.

- Vector indexes with Oracle Database 23ai.

- Fuzzy comparisons with RapidFuzz as fallback.

Task 6: Configure the Model and Embeddings in the MCP Agent

Let us configure the language model and embeddings used by the conversational agent based on the MCP protocol, using OCI Generative AI services.

-

Configure the Language Model (LLM).

The language model is responsible for interpreting messages, generating responses and acting as the agent’s main brain.

-

Configure in the

main.pyfile.from langchain_community.chat_models.oci_generative_ai import ChatOCIGenAI llm = ChatOCIGenAI( model_id="cohere.command-r-08-2024", service_endpoint="https://inference.generativeai.us-chicago-1.oci.oraclecloud.com", compartment_id="ocid1.compartment.oc1..aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa", auth_profile="DEFAULT", model_kwargs={"temperature": 0.1, "top_p": 0.75, "max_tokens": 2000} )Parameter Description model_idGenerative AI model ID, e.g. cohere.command-r-08-2024service_endpointGenerative AI service regional endpoint compartment_idOCID of the OCI compartment auth_profileName of the profile configured in the file ~/.oci/configmodel_kwargsTemperature, top-p and response size -

List available models.

-

Using the OCI CLI:

oci generative-ai model list --compartment-id <seu_compartment_id> -

Using the Python SDK:

from oci.generative_ai import GenerativeAiClient from oci.config import from_file config = from_file(profile_name="DEFAULT") client = GenerativeAiClient(config) models = client.list_models(compartment_id=config["compartment_id"]) for model in models.data: print(model.display_name, model.model_id)

-

-

-

Configure embeddings for semantic search.

Search for similar products or contextual information depends on vector embeddings.

Example of use in the agent:

@mcp.tool() def search_vectorized_product(description: str) -> dict: """ Searches for a product by description using embeddings. """ return sercher.search_similar_products(description)Change the parameters (

product_search.py) as shown below:class SimilarProductSearch: def __init__( self, top_k=5, minimum_distance=1.0, model_id="cohere.embed-english-light-v3.0", service_endpoint="https://inference.generativeai.us-chicago-1.oci.oraclecloud.com", compartment_id="ocid1.compartment.oc1..aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa", auth_profile="DEFAULT", wallet_path="/WALLET_PATH/Wallet_oradb23ai", db_alias="oradb23ai_high", username="USER", password="Password" ):Parameter Description top_kNumber of suggestions returned. minimum_distanceMaximum distance to consider relevant result. model_idID of the embedding model in the OCI (e.g. cohere.embed-english-light-v3.0).service_endpointOCI Generative AI regional endpoint. compartment_idcompartment OCID. auth_profileProfile name in ~/.oci/configfile.wallet_pathPath to the Oracle Database 23ai wallet. db_aliasDatabase alias. usernameDatabase user. passwordDatabase password. Configure the MCP Server:

Just as you did when executing the

process_vector_products.pycode, you will need to configure the Oracle Wallet for the Oracle Database 23ai database.Modify the parameters according to your settings:

import os # Oracle Wallet settings WALLET_PATH = "/path/to/Wallet" DB_ALIAS = "oradb23ai_high" USERNAME = "admin" PASSWORD = "..." # Define the environment variable required for the Oracle client os.environ["TNS_ADMIN"] = WALLET_PATHAnd then execute the

process_vector_products.pyto vectorize the products into the Oracle Database 23ai.python process_vector_products.py

With this, the LLM model and embeddings are ready to be used by the MCP agent with LangGraph and LangChain.

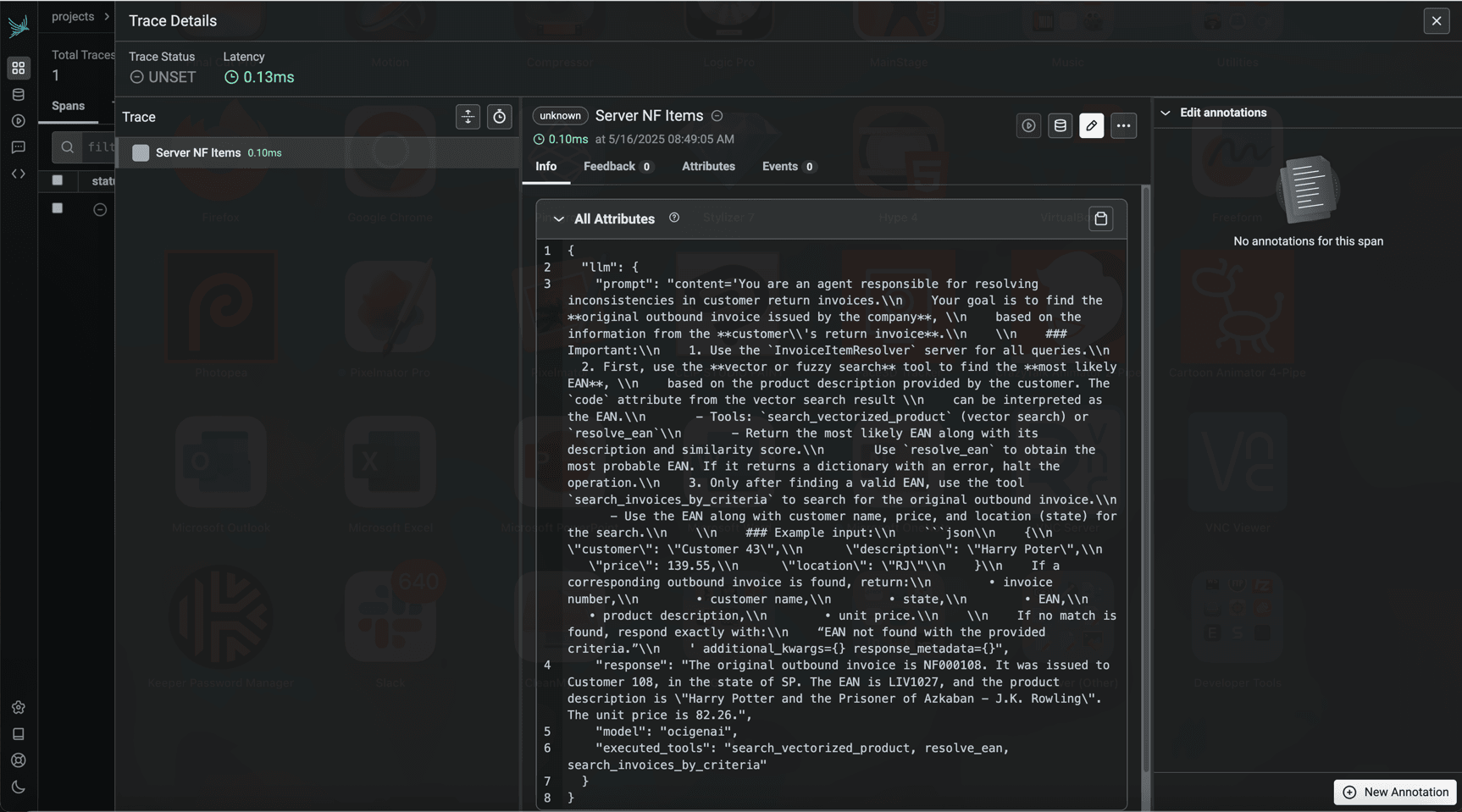

Task 7: Test the Product and Invoice Description Search

-

Run the

main.pyfile using the following command.python main.py -

When the You: prompt appears, enter the following statement.

{ "customer": "Customer 108", "description": "Harry Poter askaban", "price": 82.26, "location": "SP"}Note: The name of the book Harry Potter and Azkaban are spelled wrong, but the engine can find it without any problems.

Note that the services have been executed:

fetch_vectorized_product resolve_ean search_invoices_by_criteria -

Now, enter the following statement.

{ "customer": "Customer 108", "description": "Harry Poter askaban", "price": 82.26}You will see that there was no invoice record found. This is because location is key to finding an invoice.

-

Now, enter the following statement.

{ "customer": "Customer 108", "description": "Harry Poter", "location": "SP"}This time, we enter the location but omit the unit price.

And yet the invoice was found. This is because the price is not fundamental, but it does help to close the gap to be more assertive.

Some examples for testing are:

{ "customer": "Customer 108", "description": "Harry Poter askaban", "price": 82.26, "location": "SP"} { "customer": "Customer 108", "description": "Harry Poter askaban", "price": 82.26} { "customer": "Customer 108", "description": "Harry Poter askaban", "location": "SP"} { "customer": "Customer 108", "description": "Harry Poter askaban", "price": 82.26, "location": "RJ"} { "customer": "Customer 125", "description": "Harry Potter Chamber", "price": 79.16, "location": "SP"} { "customer": "Customer 125", "description": "Harry Potter Chamber", "price": 79.15, "location": "SP"}

Task 8: View the Observability with Phoenix

Enter http://localhost:6006/ in your browser to view the observability with Phoenix.

Related Links

Acknowledgments

- Author - Cristiano Hoshikawa (Oracle LAD A-Team Solution Engineer)

More Learning Resources

Explore other labs on docs.oracle.com/learn or access more free learning content on the Oracle Learning YouTube channel. Additionally, visit education.oracle.com/learning-explorer to become an Oracle Learning Explorer.

For product documentation, visit Oracle Help Center.

Build an AI Agent with Multi-Agent Communication Protocol Server for Invoice Resolution

G35013-06

Copyright ©2025, Oracle and/or its affiliates.