5 About a Multi-Data Center Deployment

This chapter describes two approaches to disaster protection across data centers: multi-data center active-passive (or simply active-passive) disaster protection, and multi-data center active-active (or simply active-active) disaster protection.

Traditional disaster protection systems, also called multi-data center active-passive or active-passive disaster protection, use a model where one site is running while another site is on standby, ready to take over if the running site fails. These disaster protection systems usually incur increased operational and administration costs, while the need for continuous use of resources and increased throughput (that is, avoiding situations where the standby machines are idle) has grown over the years.

The design of IT systems is increasingly driven by capacity utilization and even distribution of load, which has led to the adoption of disaster protection solutions that use as many of the available resources as possible. These disaster protection systems are called multi-data center active-active or active-active disaster protection.

A Multi-Data Center deployment for Oracle Identity and Access Management is explained in the following topics:

- About the Oracle Identity and Access Management Multi-Data Center Deployment

- Administering Oracle Identity and Access Management Multi-Data Center Deployment

- About the Requirements for Multi-Data Center Deployment

- About the Characteristics of a Multi-Data Center Deployment


About the Oracle Identity and Access Management Multi-Data Center Deployment

Oracle Identity and Access Management consists of two products, each of which has different availability requirements:

- Oracle Access Management (OAM), which is used to collect credentials and grant access to other system resources.

- Oracle Identity Governance (OIG), which has the ability to create new accounts and grant access rights to those accounts.

Many organizations use both products, integrating them to provide a complete solution. However, the approach to achieving an active-active deployment is different for each product. OAM has had a multi-data center solution since 11g Release 2 (11.1.2.2.0), but it has been a standalone solution. Although it can integrate with OIG, the integration between the products has to be handled carefully to maximize the benefits of the two different solutions.

- About Multi-Data Center Deployment Approach in OAM and OIG

- About the Multi-Data Center Deployment in OAM and OIG


About Multi-Data Center Deployment Approach in OAM and OIG

The two product groups, Oracle Access Management (OAM) and Oracle Identity Governance (OIG), need to be treated differently:

- OAM uses two independent databases and proprietary OAM replication technologies to keep those databases in sync.

- OIG has no dedicated multi-data center (MDC) solution and therefore must use an approach similar to a traditional disaster recovery solution. That is, it uses a single database, which is replicated to the disaster recovery site. All writes to the database go to whichever site is active.

When a failure occurs in the OIG database tier, multi-data center active-active and multi-data center active-passive deployments present a similar Recovery Time Objective (RTO) and Recovery Point Objective (RPO), because the database drives the recovery. In both cases, the database is active in only one site and passive in the other.

In this case, the only advantage of a multi-data center active-active deployment is that an appropriate data source configuration can automate the failover of database connections from the middle tiers. This reduces the RTO, because the middle tiers do not need to be restarted.

When a failure occurs in the OAM database tier, there may be no discernible impact on the system, because the surviving database continues to process requests. When configuring an OAM multi-data center deployment, you specify how failover should be handled. The options are:

- Continue as if nothing has happened; Site–2 accepts authentications from Site–1.

- Force sessions to be re-authenticated on Site–2.
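The choice between these two options amounts to a simple decision made when a session created in one site arrives at the other. The following Python sketch only models that decision; `FailoverMode` and `handle_session` are hypothetical names and are not part of any OAM API, and real session validation is performed by OAM itself.

```python
from enum import Enum


class FailoverMode(Enum):
    """Hypothetical names for the two OAM MDC failover options."""
    ACCEPT_REMOTE_SESSION = "accept"   # Site-2 honours an authentication made at Site-1
    FORCE_REAUTHENTICATION = "reauth"  # Site-2 makes the user log in again


def handle_session(session_origin: str, serving_site: str, mode: FailoverMode) -> str:
    """Return what the serving site does with an existing session (illustrative only)."""
    if session_origin == serving_site:
        return "continue"  # Normal case: the session was created on this site.
    if mode is FailoverMode.ACCEPT_REMOTE_SESSION:
        return "continue"  # Carry on as if nothing had happened.
    return "reauthenticate"  # Ignore the remote session and prompt for credentials.


print(handle_session("Site-1", "Site-2", FailoverMode.ACCEPT_REMOTE_SESSION))   # continue
print(handle_session("Site-1", "Site-2", FailoverMode.FORCE_REAUTHENTICATION))  # reauthenticate
```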

When the OAM solution is active-active, the OIG solution is usually active-passive.

Although OAM and OIG can be treated differently (OAM using the OAM MDC solution and OIG using the traditional stretched-cluster active-passive solution), they can also be treated the same way: that is, using a single active database replicated to a second site with Active Data Guard, and a stretched WebLogic cluster spanning both sites. A single solution is easier to maintain and manage; however, if active-active is required, the two sites must be close together. Using the OAM MDC approach combined with OIG running active-passive allows the two sites to be much farther apart.

Note that even in a distributed OAM active-active solution, OAM policy changes can be made on only one instance, which is designated the master instance.

If you are using a stretched cluster deployment for OIG or OAM, the speed of the network determines whether you can use the two sites simultaneously or only in an active-passive manner. The higher the network latency between the two sites, the more performance degrades. In a stretched cluster deployment, a network latency greater than 5 ms is normally considered unacceptable. This figure is only a guide; many factors, including transaction volumes, affect the acceptable limit. If you are using a stretched cluster in an active-active deployment, conduct suitable load testing to ensure that performance is acceptable for your topology.
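As a rough illustration of how the 5 ms guideline might be applied to measured values, the following Python sketch suggests a starting mode from a set of latency samples. It assumes the samples are already collected in milliseconds by whatever tool you normally use; the function name and the use of the median are choices made for this sketch, and the result is only a starting point for the load testing described above.

```python
import statistics
from typing import Sequence

# Guideline value from the text; a starting point, not a hard limit.
STRETCHED_CLUSTER_MAX_LATENCY_MS = 5.0


def suggest_mode(latency_samples_ms: Sequence[float],
                 threshold_ms: float = STRETCHED_CLUSTER_MAX_LATENCY_MS) -> str:
    """Suggest a starting mode for a stretched cluster from measured inter-site latency.

    The median is used so that a single outlier sample does not decide the result.
    """
    if not latency_samples_ms:
        raise ValueError("at least one latency sample is required")
    median_ms = statistics.median(latency_samples_ms)
    if median_ms > threshold_ms:
        return "active-passive"
    return "active-active candidate; confirm with load testing"


print(suggest_mode([1.8, 2.1, 2.0]))  # active-active candidate; confirm with load testing
print(suggest_mode([7.5, 8.0, 6.9]))  # active-passive
```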

About the Multi-Data Center Deployment in OAM and OIG

Besides the common performance paradigms that apply to single-datacenter designs, Oracle Identity and Access Management multi-data center active-active systems need to minimize the traffic across sites to reduce the effect of latency on the system’s throughput. In a typical Oracle Identity and Access Management system, besides database access (for dehydration, metadata access, and other database read/write operations that custom services that participate in the system may perform), communication between the different tiers can occur mainly over the following protocols:

- Incoming HTTP invocations from load balancers (LBR) or Oracle Web Servers (Oracle HTTP Server or Oracle Traffic Director) and HTTP callbacks

- Incoming HTTP invocations between OAM and OIM

- Java Naming and Directory Interface (JNDI)/Remote Method Invocation (RMI) and Java Message Service (JMS) invocations between Oracle WebLogic Servers

- Oracle Access Protocol (OAP) requests between Web Servers and OAM

For improved performance, all of the above protocols should be restricted, as much as possible, to a single site. That is, servers in SiteN should ideally receive invocations only from Oracle Web Servers in SiteN. These servers should make JMS, RMI, and JNDI invocations only to servers in SiteN and should receive callbacks only from servers in SiteN. Additionally, servers should use storage devices that are local to their site to eliminate contention (latency for NFS writes across sites may cause severe performance degradation). A request should be sent to an alternate site only if the required component is not available in SiteN.
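The locality rule can be expressed as a small selection function: prefer healthy endpoints in the caller's own site and fall back to another site only when none is available locally. This is a hedged Python sketch; `Endpoint`, `choose_endpoints`, and the host names are hypothetical, and in a real deployment the rule is enforced through web tier and load balancer configuration rather than application code.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Endpoint:
    host: str      # hypothetical managed server host
    site: str      # site the server runs in, for example "Site-1"
    healthy: bool  # outcome of whatever health check the caller already uses


def choose_endpoints(local_site: str, endpoints: Sequence[Endpoint]) -> List[Endpoint]:
    """Return the endpoints that a caller in local_site should use.

    Healthy endpoints in the caller's own site are always preferred; healthy
    endpoints in other sites are returned only when no local one is available.
    """
    healthy = [e for e in endpoints if e.healthy]
    local = [e for e in healthy if e.site == local_site]
    if local:
        return local
    return [e for e in healthy if e.site != local_site]


pool = [
    Endpoint("oimhost1", "Site-1", True),
    Endpoint("oimhost2", "Site-1", False),
    Endpoint("oimhost3", "Site-2", True),
]
print([e.host for e in choose_endpoints("Site-1", pool)])  # ['oimhost1']
print([e.host for e in choose_endpoints("Site-2", pool)])  # ['oimhost3']
```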

There are additional types of invocations that may take place between the different Oracle Identity and Access Management servers that participate in the topology. These invocations are:

- Oracle Coherence notifications: Oracle Coherence notifications need to reach all servers in the system to provide a consistent composite and metadata image to all SOA requests, whether served by one site or the other.

- HTTP session replications: Some Oracle Identity and Access Management components use stateful web applications that may rely on session replication to enable transparent failover of sessions across servers. Depending on the usage patterns and number of users, a considerable amount of replication data may be generated. Replication and failover requirements have to be analyzed for each business case, but ideally, session replication traffic across sites should be reduced as much as possible.

- LDAP or policy or identity store access: Access to policy and identity stores is performed by Oracle WebLogic Server infrastructure and Oracle Identity and Access Management components, for authorization and authentication purposes. To give users seamless access from either site, a common policy or identity store view needs to be used. Ideally, each site should have an independent identity and policy store that is synchronized regularly to minimize invocations from one site to the other.

Administering Oracle Identity and Access Management Multi-Data Center Deployment

A key aspect of the design and deployment of an Oracle Identity and Access Management multi-data center deployment is the administration overhead introduced by the solution.

To respond consistently to requests, the sites involved should use a configuration in which the functional behavior of the system is the same irrespective of which site processes the requests. Oracle Identity and Access Management keeps its configuration and metadata in the Oracle database. Hence, multi-data center active-active deployments with a single active database guarantee consistent behavior at the composite and metadata level (there is a single source of truth for the involved artifacts).

The Oracle WebLogic Server configuration, however, is kept synchronized across multiple nodes in the same domain by the Oracle WebLogic Server Infrastructure. Most of this configuration usually resides under the Administration Server’s domain directory. This configuration is propagated automatically to the other nodes in the same domain that contain Oracle WebLogic Servers. Based on this, the administration overhead of a multi-data center active-active deployment system is very small as compared to any active-passive approach, where constant replication of configuration changes is required.

Oracle Fusion Middleware binaries must be the same across all sites, must be installed in the same location, and must have the same patches applied. This can be achieved by independent installation or by disk mirroring. If you are using disk mirroring, ensure that at least two separate copies of the binaries are available, so that a corrupt patch impacts only half of the deployment in a site, and the corruption is not replicated to every host in the disaster recovery site. For example:

- FMW binary set 1 – SiteAHost1, SiteAHost3, SiteAHost5

- FMW binary set 2 – SiteAHost2, SiteAHost4, SiteAHost6

FMW binary sets 1 and 2 are replicated to Site–2 and mounted as follows:

- FMW binary set 1 (copy) – SiteBHost1, SiteBHost3, SiteBHost5

- FMW binary set 2 (copy) – SiteBHost2, SiteBHost4, SiteBHost6
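The example layout follows a simple pattern: odd-numbered hosts mount binary set 1 and even-numbered hosts mount binary set 2, in both sites. The following Python sketch derives that mapping from the host names. It assumes host names end in a number, as in the example, and the function names are hypothetical. One plausible reason for alternating the sets is that hosts paired for high availability (Host1 and Host2, for example) then never share a binary set.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List


def binary_set_for(host: str) -> int:
    """Return 1 for odd-numbered hosts and 2 for even-numbered hosts.

    Assumes host names end in a number, as in SiteAHost3 or SiteBHost4.
    """
    match = re.search(r"(\d+)$", host)
    if match is None:
        raise ValueError(f"host name has no trailing number: {host!r}")
    return 1 if int(match.group(1)) % 2 == 1 else 2


def group_by_binary_set(hosts: Iterable[str]) -> Dict[int, List[str]]:
    """Group host names by the binary set they should mount."""
    groups: Dict[int, List[str]] = defaultdict(list)
    for host in hosts:
        groups[binary_set_for(host)].append(host)
    return dict(groups)


hosts = [f"Site{site}Host{n}" for site in "AB" for n in range(1, 7)]
print(group_by_binary_set(hosts))
```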


About the Requirements for Multi-Data Center Deployment

The requirements to set up an Oracle Identity and Access Management multi-data center deployment are:

- Topology

- Entry points

- Database

- Directory Tier

These requirements are explained in the following sections.

- About the Multi-Data Center Deployment Topology

- About the Entry Points in Multi-Data Center Deployment

- About the Databases in Multi-Data Center Deployment

- About the Directory Tier in Multi-Data Center Deployment

- About the Load Balancers in Multi-Data Center Deployment

- Shared Storage Versus Database for Transaction Logs and Persistent stores


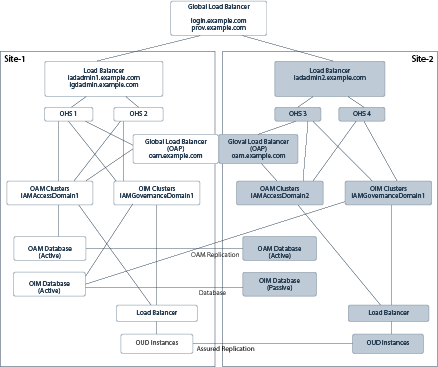

About the Multi-Data Center Deployment Topology

Review the topology model for an Oracle Identity and Access Management active-active multi-data center deployment.

The analysis and recommendations included here are based on the topology described in this section. Each site locally uses a slightly modified version of the Oracle Identity and Access Management enterprise deployment topology. For more information, refer to the following sections.

In the Oracle Identity and Access Management active-active multi-data center deployment topology:

- There are two separate sites (referred to as Site–1 and Site–2 in this document) that are accessed through a single access point, which consists of:

  - A global load balancer, which directs traffic to either site (each vendor provides different routing algorithms).

  - A local load balancer, the local access point of each site, which distributes requests to multiple Oracle HTTP Server (OHS) or Oracle Traffic Director (OTD) instances that, in turn, allocate requests to specific Oracle WebLogic Servers hosting Identity and Access Management components.

- Each Oracle Access Management (OAM) implementation accesses a local read/write database; the databases are kept in sync using OAM replication.

- The Oracle Identity Governance (OIG) implementation shares a single database that is accessed concurrently by servers in both sites (if both sites are active).

- Each site has multiple Lightweight Directory Access Protocol (LDAP) servers, which are kept in sync using Oracle Unified Directory (OUD) replication.

- Site–1 has an Administration Server for the entire OIG deployment, which spans both sites.

- Site–1 and Site–2 have independent Administration Servers for the local OAM deployment.


About the Entry Points in Multi-Data Center Deployment

Access and Identity have different entry points, as described in Preparing the Load Balancer and Firewalls for an Enterprise Deployment. Having two different entry points allows each entry point to be configured independently. For example:

- login.example.com is configured to distribute requests geographically among all active Oracle Access Management sites.

- prov.example.com is configured to send traffic only to the active site in an active-passive deployment.


About the Databases in Multi-Data Center Deployment

Two different databases are required to support the Oracle Identity and Access Management suite, because changes are propagated between the sites in different ways. The exception is when you use the stretched cluster design for both Oracle Access Management (OAM) and Oracle Identity Governance (OIG).

The synchronization of OAM data is performed using proprietary OAM technology. This technology effectively unloads data from the primary site, transfers it to the secondary site, and applies it to the database on that site, using SQL commands. The databases on the primary and secondary sites are independent and are both open to read and write.

The synchronicity requirements and data types used by the different OIG components limit the possible approaches for the OIG database in a multi-data center deployment. This document addresses only a solution where the Oracle Fusion Middleware database used for OIG uses Data Guard to synchronize an active database in Site–1 with a passive database in Site–2. Although other approaches may work, they have not been tested and certified by Oracle and are out of the scope of this document.

In this configuration, both sites where OIG is deployed are assumed to access the same database (and the same schemas within that database), and the database is set up in a Data Guard configuration. Data Guard provides a comprehensive data protection solution for the database. It consists of a standby database at a location geographically separate from the production site. The standby database is normally in passive mode; it is activated when the production site (called production from the database activity point of view) is not available.

The Oracle databases configured in each site are in an Oracle Real Application Clusters (RAC) configuration. Oracle RAC enables an Oracle database to run across a cluster of servers in the same data center, providing fault tolerance, performance, and scalability with no application changes necessary.

To facilitate the smooth transition of database transactions from one site to the other, a role-based database service is created on both the primary and standby database sites. A role-based service is available only when the database is running in the primary role, that is, when the database is open read/write. When a standby database becomes a primary database, the service is automatically enabled on that site.

If the WebLogic data sources are configured to use this role-based service and are aware of both sites, no WebLogic reconfiguration is required when the primary database moves between sites.
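The reason no reconfiguration is needed can be shown with a simplified model: the data source tries the addresses of both sites, and only the site whose database is in the primary role offers the service. The following Python sketch is not Oracle Net or WebLogic code; `DatabaseSite`, `connect`, and the role strings are hypothetical, and in practice the database enforces the service role while the address list is part of the data source's connection string.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class DatabaseSite:
    name: str
    role: str  # "PRIMARY" or "STANDBY" (hypothetical labels)

    def offers_service(self) -> bool:
        # A role-based service runs only while the database is in the primary role.
        return self.role == "PRIMARY"


def connect(sites: Sequence[DatabaseSite]) -> Optional[str]:
    """Try every site in the data source's address list; return the one that accepts.

    Because only the primary offers the service, the same list keeps working
    after a switchover, so the data source itself does not change.
    """
    for site in sites:
        if site.offers_service():
            return site.name
    return None  # No primary available: the connection attempt fails.


sites = [DatabaseSite("Site-1", "PRIMARY"), DatabaseSite("Site-2", "STANDBY")]
print(connect(sites))  # Site-1
# Switchover: the roles swap, but the data source's site list is unchanged.
sites[0].role, sites[1].role = "STANDBY", "PRIMARY"
print(connect(sites))  # Site-2
```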


About the Directory Tier in Multi-Data Center Deployment

The multi-data center deployment for Oracle Identity Governance (OIG) has been tested using Oracle Unified Directory (OUD).

OUD is a loosely coupled deployment. Data is replicated between the OUD instances using OUD replication. The second site is just an extension of that principle with the remote OUD instances becoming part of the OUD replication configuration.


About the Load Balancers in Multi-Data Center Deployment

The global load balancer (GLBR) is a load balancer configured to be accessible as an address by users of all the sites and external locations. The device provides a virtual server that is mapped to a DNS name accessible to any client, regardless of the site it connects to. The GLBR directs traffic to either site based on configured criteria and rules; for example, the criteria can be based on the client’s IP address. These criteria and rules should be used to create a persistence profile, which allows the GLBR to map users to the same site on initial and subsequent requests. The GLBR maintains a pool that consists of the addresses of all the local load balancers. If one of the sites fails, users are automatically redirected to the surviving active site.

At each site, the local load balancer (LBR) receives requests from the GLBR and directs them to the appropriate HTTP server. The LBR is configured with a persistence method, such as Active Insert of a cookie, to maintain affinity and ensure that clients are directed appropriately. To eliminate undesired routing and costly rehydration, the GLBR is also configured with specific rules that route callbacks only to the LBR that is local to the servers that generated them. This is also useful for internal consumers of Oracle Identity and Access Management services.

The GLBR rules can be summarized as follows:

- If requests come from Site–1 (callbacks from the Oracle Identity and Access Management servers in Site–1 or endpoint invocations from consumers in Site–1), the GLBR routes them to the local load balancer (LBR) in Site–1.

- If requests come from Site–2 (callbacks from the Oracle Identity and Access Management servers in Site–2 or endpoint invocations from consumers in Site–2), the GLBR routes them to the LBR in Site–2.

- If requests come from any other address (client invocations), the GLBR load balances the connections across both LBRs.

- Client requests may use the GLBR to direct requests to the geographically nearest site.

- Additional routing rules may be defined in the GLBR to route specific clients to specific sites (for example, the two sites may provide different response times based on the hardware resources in each).
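The first three GLBR rules, together with the persistence profile and site failover, can be modeled as a single routing decision. This Python sketch is not vendor configuration (an F5 deployment, for example, expresses such rules in its own rule language); `SITE_NETWORKS`, its address ranges, and `route` are hypothetical, the hash of the client address stands in for the vendor's persistence profile, and the geographic and per-client rules are omitted.

```python
import hashlib
import ipaddress
from typing import Dict, Sequence

# Hypothetical address ranges used by servers and internal consumers in each site.
SITE_NETWORKS: Dict[str, ipaddress.IPv4Network] = {
    "Site-1": ipaddress.ip_network("10.1.0.0/16"),
    "Site-2": ipaddress.ip_network("10.2.0.0/16"),
}


def route(client_ip: str, healthy_sites: Sequence[str]) -> str:
    """Return the site whose local load balancer (LBR) should receive the request."""
    if not healthy_sites:
        raise RuntimeError("no site is available")
    addr = ipaddress.ip_address(client_ip)
    # Rules 1 and 2: callbacks and consumers inside a site stay in that site.
    for site, network in SITE_NETWORKS.items():
        if addr in network and site in healthy_sites:
            return site
    # Rule 3: everyone else is spread across the healthy sites. Hashing the
    # client address acts as a simple persistence profile, so a client keeps
    # reaching the same site while the set of healthy sites does not change.
    ordered = sorted(healthy_sites)
    digest = hashlib.sha256(client_ip.encode("ascii")).digest()
    return ordered[digest[0] % len(ordered)]


print(route("10.1.4.20", ["Site-1", "Site-2"]))  # Site-1
print(route("10.1.4.20", ["Site-2"]))            # Site-2 (Site-1 has failed)
```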

Load balancers from any vendor are supported as long as the load balancer meets the requirements listed in Characteristics of the Oracle Access Management Design. The global load balancer should allow rules based on the originating server’s IP addresses (an example is provided for F5 Networks).


Shared Storage Versus Database for Transaction Logs and Persistent stores

The topology illustrated in About the Multi-Data Center Deployment Topology was tested using database-based persistent stores for Oracle WebLogic Server transaction logs and Oracle WebLogic Server JMS persistent stores.

Storing transaction logs and persistent stores in the database provides the replication and high availability benefits inherent in the underlying database system. With JMS, TLOG, and OIM/SOA data in a Data Guard database, cross-site synchronization is simplified, and the middle tier's need for a shared storage subsystem such as a NAS or a SAN is reduced (shared storage still applies to Administration Server failover). However, storing TLOGs and JMS messages in the database does carry a performance penalty.

As of Oracle Fusion Middleware 11g, the retry logic in JMS JDBC persistent stores that handles database failures is limited to a single retry. If the second attempt also fails, an exception is propagated up the call stack, and a manual restart of the server is required to recover the messages associated with the failed transaction (the server goes into the FAILED state because of the persistent store failure). To mitigate this, it is recommended that you enable Test Connections on Reserve for the relevant data sources and also configure in-place restart for the relevant JMS servers and persistent stores. Refer to Appendix B for details on configuring in-place restart.
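Test Connections on Reserve is a WebLogic data source setting; the idea behind it is to validate a pooled connection before handing it to the caller and to discard it if the test fails, so that a caller such as a JDBC persistent store is less likely to receive a connection that a database failover has already broken. The following Python sketch models only that idea; `PooledConnection` and `ValidatingPool` are hypothetical stand-ins and are not WebLogic APIs.

```python
from typing import Callable, List


class PooledConnection:
    """Stand-in for a database connection; 'alive' models whether it still works."""

    def __init__(self, conn_id: int, alive: bool = True) -> None:
        self.conn_id = conn_id
        self.alive = alive

    def ping(self) -> bool:
        # A real pool would run a lightweight test query here.
        return self.alive


class ValidatingPool:
    """Pool that tests each connection at reserve time (illustrative only)."""

    def __init__(self, factory: Callable[[], PooledConnection], size: int) -> None:
        self._factory = factory
        self._idle: List[PooledConnection] = [factory() for _ in range(size)]

    def idle_connections(self) -> List[PooledConnection]:
        return list(self._idle)

    def reserve(self) -> PooledConnection:
        """Hand out a connection only after it passes a test; drop stale ones."""
        while self._idle:
            conn = self._idle.pop()
            if conn.ping():
                return conn
            # Stale connection (for example, after a database failover): discard it.
        return self._factory()  # No good idle connection left: open a new one.

    def release(self, conn: PooledConnection) -> None:
        self._idle.append(conn)


ids = iter(range(100))
pool = ValidatingPool(lambda: PooledConnection(next(ids)), size=2)
# Simulate a database failover that silently breaks every idle connection.
for idle in pool.idle_connections():
    idle.alive = False
conn = pool.reserve()
print(conn.conn_id, conn.ping())  # 2 True
```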


About the Characteristics of a Multi-Data Center Deployment

It is important to understand the characteristics of a multi-data center deployment and how to treat the multi-site implementations of Identity and Access differently, by separating the domains.

- Oracle Access Management (OAM) can use the proprietary multi-site technologies built into the product.

- Oracle Identity Manager (OIM) can use the active-passive approach where network latency is high or, where network latency is low, can also run active-active. If Governance is running active-passive, the OIM components in Site–2 are shut down until required.

- The Global Load Balancer (GLBR) is configured to send traffic only to the active site.

- Each OAM domain has a distinct entry point for administrative functions.

- Failover of the OIM Administration Server can be accomplished using disk-based replication of the IGD_ASERVER_HOME directory and a virtual IP address (VIP), which can be moved between sites.

- Failure of the OAM Administration Server is handled within the site using standard EDG techniques.

Characteristics of a Multi-Data Center Deployment

Availability (Web Tier):

The Oracle HTTP Server (OHS) configuration is based on a fixed list of servers in each site (instead of the dynamic list provided by the OHS plug-in and used in typical single-location deployments). This is done to eliminate undesired routing from one site to another; however, it has the disadvantage of slower reaction times to failures in the Oracle WebLogic Servers.

If you are using Oracle Exalogic, web functionality is provided by Oracle Traffic Director, which is configured in the same way as OHS, using a fixed list of servers. If you are deploying on Exalogic, all components in the topology need to be configured to use the EoIB network, because components in Site–1 communicate directly with components in Site–2. This would not be feasible if the IPoIB network were used.

Note:

Oracle Traffic Director (OTD) can be used instead of Oracle HTTP Server (OHS) for non-Exalogic deployments, if required. It is possible to have Site–1 using OTD on Exalogic and Site–2 using OHS on commodity hardware.

The following sections describe the characteristics of the OAM design and the OIG design:

- Characteristics of the Oracle Access Management Design

  Review the characteristics of an Oracle Access Management (OAM) design.

- Characteristics of the Oracle Identity Governance Design

  Review the characteristics of an Oracle Identity Governance (OIG) design.


Characteristics of the Oracle Access Management Design

Review the characteristics of an Oracle Access Management (OAM) design.

- Availability

In an OAM multi-data center deployment, there is little impact on operations if a site fails, because each site is independent. One site is nominated as the master; if that site fails, the master role has to be passed to Site–2. This affects only the creation of policies; runtime is not affected. However, runtime behavior still needs to be considered in the design.

If a user is authenticated at Site–1 and Site–1 becomes unavailable, you can choose either to force the user to re-authenticate at Site–2 or to have Site–2 accept the authentication that has already occurred.

- Administration

In a multi-data center active-active deployment, each site is independent of the other. The OAM replication mechanism takes care of replicating OAM data, but the WebLogic and application configuration needs to be performed at each site independently. OAM does not use runtime artifacts (with the exception of the authentication cookie), which simplifies the process. However, having multiple independent configurations increases the administration overhead.

- Performance

If the appropriate load balancing and traffic restrictions are configured as described in the Load Balancing section, the performance of OAM across sites should be similar to that of a cluster with the same number of servers residing in one single site.

- Load Balancing

In an OAM deployment, the load balancer virtual hosts are as described in the guide, with the following differences:

  - login.example.com: This virtual server is configured both locally and at the global load balancer level with location affinity.

  - iadadmin.example.com: This virtual server is unique to each site; that is, there is iadadminsite1.example.com and iadadminsite2.example.com.

  - oam.example.com: This virtual server is an additional load balancer entry point, which is resolvable in each site and routes requests to the OAM Proxy port on the OAM Managed Servers (for example, 5575). This entry point is also configured at the Global Load Balancer (GLBR) level and distributes requests to each of the OAM Managed Servers in both multi-data center domains with location affinity. The advantage of configuring it at the GLBR level is that if the Managed Servers in Site–1 become unavailable but the web tier is available, authentications can still happen. The downside to this approach is that it generates a lot of cross-site traffic. Because each data center is highly available (HA) in its own right, it is unlikely that just the two hosts are affected by an outage; however, if both Managed Servers are down, all traffic is redirected to the second site. To redirect the traffic, you need to configure the login.example.com monitoring service to monitor not just the web servers but also the availability of the OAM service.

    Note:

    As the Oracle Access Protocol (OAP) requests are being handled by the load balancer, a delay can result while the load balancer detects that an OAM server is not available.
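A monitor for login.example.com that checks both tiers, as recommended above, treats a site as available only when at least one web server and at least one OAM server respond. The following Python sketch illustrates that logic with plain TCP connection checks. The function names are hypothetical, and a TCP connect only proves that a port is listening; a production load balancer monitor would usually send an application-level request instead.

```python
import socket
from typing import Iterable, Tuple


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # Covers refused connections, timeouts, and DNS failures.
        return False


def site_available(web_endpoints: Iterable[Tuple[str, int]],
                   oam_endpoints: Iterable[Tuple[str, int]]) -> bool:
    """A site is healthy only if at least one web server AND one OAM server respond."""
    web_up = any(tcp_port_open(h, p) for h, p in web_endpoints)
    oam_up = any(tcp_port_open(h, p) for h, p in oam_endpoints)
    return web_up and oam_up
```

For example, the monitor for a site could pass that site's web server listeners as `web_endpoints` and the OAM proxy endpoints (port 5575 in the example above) as `oam_endpoints`; the host names used would be specific to your deployment.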

Characteristics of the Oracle Identity Governance Design

Review the characteristics of an Oracle Identity Governance (OIG) design.

- Availability

The database connection failover behavior and the JMS and RMI failover behaviors are similar to those that take place in a standard enterprise deployment topology. At all times, there is just one CLUSTER_MASTER server, among all the available servers in the multi-data center active-active deployment, that is able to perform automatic recovery. Instances can be recovered equally from Site–1 and Site–2 if a failure occurs on the partner site, as follows:

  - From Site–1, when Site–2 is up, if the CLUSTER_MASTER resides in Site–1.

  - From Site–2, when Site–1 is up, if the CLUSTER_MASTER resides in Site–2.

  - From Site–1, when Site–2 is down.

  - From Site–2, when Site–1 is down.

If a failure occurs in Site–1 that affects all of the middle tiers, the Administration Server must be recovered to restore access to Oracle Enterprise Manager Fusion Middleware Control and the Oracle WebLogic Server Administration Console.

Servers that are remote to the Administration Server take longer to restart than in a regular enterprise deployment topology, because all communication with the Administration Server (for retrieving the domain configuration at startup), initial connection pool creation, and database access are affected by the latency across sites.

From the perspective of the Recovery Point Objective (RPO), transactions that are halted by a site failure can be resumed in the available site by manually starting the failed servers in that site. Automated server migration across sites is not recommended unless a database is used for JMS and TLOG persistence; otherwise, a constant replica of the appropriate persistent stores needs to be set up between the sites. It is also unlikely (depending on the customer’s infrastructure) that the virtual IPs used in one site are valid for migration to the other, because this usually requires additional intervention to enable a listen address initially available in Site–1 in Site–2, and vice versa. This intervention can be automated in pre-migration scripts, but in general, the Recovery Time Objective (RTO) increases compared with a standard automated server migration (taking place within a single data center).

- Performance

If the appropriate load balancing and traffic restrictions are configured as described in the Load Balancing section, the performance of a stretched cluster with low latency across sites should be similar to that of a cluster with the same number of servers residing in a single site. The configuration steps provided in the Load Balancing section are intended to confine traffic to each site for the most common and normal operations. This isolation, however, is not deterministic (for example, in some failover scenarios a JMS invocation could take place across the two sites). In practice, most of the traffic takes place between the OIG servers and the database, which makes database access the key to the performance of a multi-data center deployment running active-active.

If the sites are separated by a high latency network, then OIG should be run active-passive to avoid significant performance degradation.

- Administration

In a multi-data center active-active deployment, the Oracle WebLogic Server infrastructure is responsible for copying configuration changes to all the different domain directories used in the domain. The configured Coherence cluster is responsible for updating all the servers in the cluster when composites or metadata are updated. Except for the replication requirement for runtime artifacts across file systems, a multi-data center active-active deployment is administered like a standard cluster, which keeps its administration overhead very low.
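The four recovery cases listed under Availability for the OIG design reduce to a short rule: while the partner site is up, only the site that hosts the CLUSTER_MASTER server recovers instances; once the partner site is down, the surviving site recovers them. The following Python sketch encodes that rule for illustration only; `can_recover_from` and its parameters are hypothetical names, and actual recovery is coordinated by the servers themselves.

```python
def can_recover_from(site: str, other_site_up: bool, cluster_master_site: str) -> bool:
    """Return True if automatic recovery can run from `site` (illustrative model)."""
    if not other_site_up:
        return True  # The surviving site recovers the failed site's instances.
    return site == cluster_master_site  # Both sites up: only the CLUSTER_MASTER site.


print(can_recover_from("Site-1", other_site_up=True, cluster_master_site="Site-1"))   # True
print(can_recover_from("Site-2", other_site_up=True, cluster_master_site="Site-1"))   # False
print(can_recover_from("Site-2", other_site_up=False, cluster_master_site="Site-1"))  # True
```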