2 About the Kubernetes Deployment

Containers offer an excellent mechanism to bundle and run applications. In a production environment, you have to manage the containers that run the applications and ensure there is no downtime. For example, if a container goes down, another container has to start immediately. Kubernetes simplifies container management.

This chapter includes the following topics:

- What is Kubernetes?

  Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation.

- Requirements for Kubernetes

  To deploy Oracle Identity and Access Management on Kubernetes, your environment should meet certain criteria.

- About the Kubernetes Architecture

  A Kubernetes cluster consists of a control plane and worker nodes.

- Sizing the Kubernetes Cluster

  A Kubernetes cluster must have a minimum of two worker nodes and a highly available control plane to ensure that you maintain a high availability solution.

- Key Components Used by an Oracle Enterprise Deployment

  An Oracle enterprise deployment uses the Kubernetes components such as pods, Kubernetes services, and DNS.

What is Kubernetes?

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation.

Kubernetes sits on top of a container platform such as CRI-O or Docker. Kubernetes provides a mechanism that enables container images to be deployed to a cluster of hosts. When you deploy a container through Kubernetes, Kubernetes deploys that container on one of its worker nodes. The placement mechanism is transparent to the user.

Kubernetes provides:

- Service Discovery and Load Balancing: Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes balances the load and distributes the network traffic so that the deployment remains stable.

- Storage Orchestration: Kubernetes enables you to automatically mount a storage system of your choice, such as local storage, NAS storage, public cloud storage, and more.

- Automated Rollouts and Rollbacks: You can describe the desired state for your deployed containers using Kubernetes, and it can change the actual state to the desired state at a controlled rate. For example, you can automate Kubernetes to create new containers for your deployment, remove existing containers, and adopt all their resources to the new container.

- Automatic Bin Packing: If you provide Kubernetes with a cluster of nodes that it can use to run containerized tasks, and indicate the CPU and memory (RAM) each container needs, Kubernetes can fit containers onto the nodes to make the best use of the available resources.

- Self-healing: Kubernetes restarts containers that fail, replaces containers, kills containers that do not respond to your user-defined health check, and does not advertise them to clients until they are ready to serve.

- Secret and Configuration Management: Kubernetes lets you store and manage sensitive information such as passwords, OAuth tokens, and SSH keys. You can deploy and update secrets and application configuration without rebuilding your container images, and without exposing secrets in your stack configuration.
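
As a rough illustration of the automatic bin packing idea in the list above, the following Python sketch places pods onto nodes using a simple first-fit-decreasing heuristic. It is not the algorithm used by the Kubernetes scheduler, which weighs many more factors. The function name, node names, pod names, and resource figures are all hypothetical.

```python
def place_pods(pod_requests, node_capacity):
    """Assign each pod to the first node that still has room for it.

    pod_requests:  {pod_name: (cpus, memory_gb)} requested by each pod
    node_capacity: {node_name: (cpus, memory_gb)} available on each node
    Returns {pod_name: node_name}.
    """
    # Track the capacity that is still free on every node.
    free = {node: list(capacity) for node, capacity in node_capacity.items()}
    placement = {}
    # Consider the largest requests first so that big pods are not squeezed out.
    ordered = sorted(pod_requests.items(), key=lambda item: item[1], reverse=True)
    for pod, (cpus, memory_gb) in ordered:
        for node, remaining in free.items():
            if remaining[0] >= cpus and remaining[1] >= memory_gb:
                remaining[0] -= cpus
                remaining[1] -= memory_gb
                placement[pod] = node
                break
        else:
            raise RuntimeError(f"no node has enough free capacity for {pod}")
    return placement


# Hypothetical example: two workers with 4 CPUs and 16 GB each, and three pods.
nodes = {"worker1": (4, 16), "worker2": (4, 16)}
pods = {"oam-1": (2, 8), "oam-2": (2, 8), "oud-1": (3, 12)}
print(place_pods(pods, nodes))
```

The point of the sketch is only that declaring the CPU and memory each container needs is what makes this kind of placement decision possible.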

The strength of Kubernetes lies in providing a scalable platform for your mid tiers. Consider Kubernetes as a wrapper for your application tier. That is to say, when designing the architecture, you should apply the same network security considerations as in a traditional deployment. You still keep the web tier in a separate DMZ outside of the network hosting the Kubernetes cluster, controlling traffic using firewalls or security lists. The same applies to the database. The database exists in a separate network outside of the cluster, with firewalls or security lists controlling access from the Kubernetes layer.

When deploying Kubernetes, Oracle highly recommends that you follow the traditional practice of keeping different workloads in separate Kubernetes clusters. For example, it is not a good practice to mix development and production workloads in the same Kubernetes cluster.

Requirements for Kubernetes

To deploy Oracle Identity and Access Management on Kubernetes, your environment should meet certain criteria.

- Kubernetes Cluster 1.19.7 or above: The cluster can be either a standalone Kubernetes cluster similar to the one provided by Oracle Cloud Native Environment or a Cloud Managed Kubernetes Environment such as Oracle Container Engine for Kubernetes (OKE). There should be enough Kubernetes worker nodes with sufficient capacity for the deployment. You must have multiple worker nodes to ensure no single point of failure. If you are using a standalone Kubernetes environment, the Kubernetes control plane must be configured for high availability.

- An administrative host from which to deploy the products: This host could be a Kubernetes Control host, a Kubernetes Worker host, or an independent host. This host must have kubectl deployed using the same version as your cluster, and helm.

- Oracle Containers for Cloud (OKE) 1.28.2

- Oracle Cloud Native Environment 1.8

- Helm 3.13.1

For information about further deployment considerations, see Technical Brief - Deployment and DevOps Considerations of IAM Containerized Services on Cloud Native Infrastructure.

About the Kubernetes Architecture

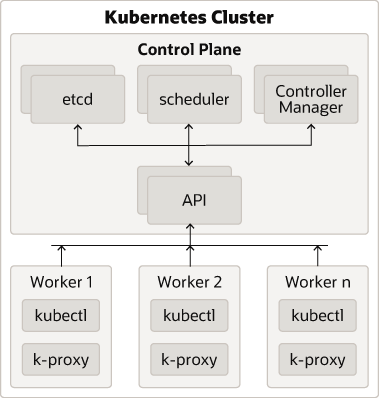

A Kubernetes cluster consists of a control plane and worker nodes.

Control Plane: A control plane is responsible for managing the Kubernetes components and deploying applications. In an enterprise deployment, you need to ensure that the Kubernetes control plane is highly available so that the failure of a control plane host does not bring down the Kubernetes cluster.

Worker Nodes: Worker nodes are where the containers are deployed.

Note:

An individual host can be both a control plane host and a worker host.

Figure 2-1 An Illustration of the Kubernetes Cluster

Description of Components:

- Control Plane: The control plane comprises the following:

  - kube-apiserver: The API server is a component of the control plane that exposes the Kubernetes APIs.

  - etcd: A key-value store that serves as the Kubernetes backing store for all the cluster data.

  - Scheduler: The scheduler is responsible for the placement of containers on the worker nodes. It takes into account resource requirements, hardware and software policy constraints, affinity specifications, and data locality.

  - Controller Manager: It is responsible for running the controller processes. Controller processes include:

    - Node Controller

    - Route Controller

    - Service Controller

  The control plane consists of three nodes where the Kubernetes API server is deployed, front ended by a load balancer (LBR).

- Worker Node Components: The worker nodes include the following components:

  - Kubelet: An agent that runs on each worker node in the cluster. It ensures that the containers are running in a pod.

  - Kube Proxy: Kube proxy is a network proxy that runs on each node of the cluster. It maintains network rules, which enable inter-pod communications as well as communications outside of the cluster.

  - Add-ons: Add-ons extend the cluster further, providing such services as:

    - DNS

    - Web UI Dashboard

    - Container Resource Monitoring

    - Logging

- A load balancer is often placed in front of the worker nodes to make it easier to direct traffic to any worker node. A load balancer also simplifies scaling up and scaling out, thereby reducing the need for application configuration changes. The configuration changes can be more difficult if you are using individual NodePort Services because you may have to create a pathway for each NodePort Service.

Sizing the Kubernetes Cluster

A Kubernetes cluster must have a minimum of two worker nodes and a highly available control plane to ensure that you maintain a high availability solution.

You can choose to have a large number of small capacity worker nodes or a small number of high capacity worker nodes. If you work with two worker nodes, and one becomes unavailable, you lose 50% of your system capacity. This setup also introduces a single point of failure (the surviving worker node) until the failed node is replaced. Having a higher number of worker nodes means that even if one worker node fails, more than one remains, thus removing that single point of failure. However, the remaining worker nodes should have enough capacity to run the pods that initially ran on the failed worker node.

In sizing the cluster, you need to work out the resource requirements of every pod that will run on the cluster, and then add a 20% overhead for the Kubernetes services. Adding worker nodes as your capacity needs expand is a relatively simple process. If your cluster runs several applications, then the capacity must be such that it can run all the pods associated with all those applications in a highly available manner. For example, if your total application requirement is 20 CPUs and 100 GB of memory, then you would need at least two worker nodes, each with 10 CPUs and 50 GB of memory plus 20%, so 12 CPUs and 60 GB of memory for each worker node.
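
The sizing arithmetic above can be sketched in a few lines of Python. This is only a worked example of the rule described in the text (split the total requirement across the worker nodes, then add the Kubernetes overhead). The function and class names are hypothetical, and the even split across nodes is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSize:
    cpus: float
    memory_gb: float


def size_worker_nodes(total_cpus, total_memory_gb, node_count=2, overhead_pct=20):
    """Spread the application requirement evenly over the worker nodes and
    add the Kubernetes overhead (a percentage) to each node."""
    if node_count < 2:
        raise ValueError("high availability needs at least two worker nodes")
    return NodeSize(
        cpus=total_cpus * (100 + overhead_pct) / (100 * node_count),
        memory_gb=total_memory_gb * (100 + overhead_pct) / (100 * node_count),
    )


def capacity_lost_pct(node_count):
    """Percentage of cluster capacity lost when one equally sized node fails."""
    return 100 / node_count


# The example from the text: 20 CPUs and 100 GB across two worker nodes.
print(size_worker_nodes(20, 100))   # NodeSize(cpus=12.0, memory_gb=60.0)
print(capacity_lost_pct(2))         # 50.0
```

Calling `capacity_lost_pct` with a larger node count shows why more, smaller worker nodes reduce the impact of a single node failure.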

Storage requirements depend on the type of storage you are planning to use. Each pod will require a small measure of block storage to host the image and any local data. In addition, data stored in persistent volumes will require some shared storage, such as NFS.

The following tables provide a reference for the sizing of small, medium, and large systems. The requirements are for each product and do not take into account the Kubernetes overheads:

Table 2-1 Sizing for Oracle Unified Directory

| System Size | Number of Users | CPUs | Memory |
|---|---|---|---|
| Development | - | 0.5 | 2GB |
| Small | 5000 | 4 | 16GB |
| Medium | 50000 | 8 | 32GB |
| Large | 2 Million | 16 | 64GB+ |

You require a minimum of two pods to achieve high availability. See Deep Dive into Oracle Unified Directory 12.2.1.4.0 Performance.

Table 2-2 Sizing for Oracle Access Manager

| System Size | Number of Users | CPUs | Memory |
|---|---|---|---|
| Development | - | 1 | 2GB |
| Small | 16000 | 4 | 60GB |
| Medium | 24000 | 8 | 60GB |
| Large | 32000+ | 16 | 120GB |

You require a minimum of two pods to achieve high availability. See Deep Dive into Oracle Access Management 12.2.1.4.0 Performance on Oracle Container Engine for Kubernetes.

Table 2-3 Sizing for Oracle Identity Governance

| System Size | Number of Users | CPUs | Memory |
|---|---|---|---|
| Development | - | 2 | 4GB |
| Small | 50000 | 4 | 5GB |
| Medium | 150000 | 8 | 8GB |
| Large | 5000 | 20 | 12GB |

You require a minimum of two pods for high availability. Large systems should have a minimum of five pods. See Oracle Identity Governance Sizing Guide.

Table 2-4 Sizing for Oracle Advanced Authentication

| System Size | Number of Users | CPUs | Memory |
|---|---|---|---|
| Development | - | 1 | 2GB |
| Small | 16000 | 4 | 60GB |
| Medium | 24000 | 8 | 60GB |
| Large | 32000+ | 16 | 120GB |

You require a minimum of two pods to achieve high availability.

Table 2-5 Sizing for Oracle Identity Role Intelligence

| Parameter | Description | Small Scale | Medium Scale | Large Scale |
|---|---|---|---|---|
| executorInstances | Specify the number of executor pods. | 3 | 5 | 7 |
| driverRequestCores | Specify the CPU request for the driver pod. | 2 | 3 | 4 |
| driverLimitCores | Specify the hard CPU limit of the driver pod. | 2 | 3 | 4 |
| executorRequestCore | Specify the CPU request for each executor pod. | 2 | 3 | 4 |
| executorLimitCore | Specify the hard CPU limit of each executor pod. | 2 | 3 | 4 |
| driverMemory | Specify the hard memory limit of the driver pod. | 2GB | 3GB | 4GB |
| executorMemory | Specify the hard memory limit of each executor pod. | 2GB | 3GB | 4GB |

You require a minimum of two pods to achieve high availability. See Tuning Performance in Administering Oracle Identity Role Intelligence.

Key Components Used by an Oracle Enterprise Deployment

An Oracle enterprise deployment uses the Kubernetes components such as pods, Kubernetes services, and DNS.

This section describes each of these Kubernetes components used by an Oracle enterprise deployment.

- Container Image

- Pods

- Pod Scheduling

- Persistent Volumes

- Kubernetes Services

- Ingress Controller

- Domain Name System

- Namespaces

- Other Products

Container Image

A container image is an unchangeable, static file that includes executable code. When deployed into Kubernetes, it is the container image that is used to create a pod. The image contains the system libraries, system tools, and Oracle binaries required to run in Kubernetes. The image shares the OS kernel of its host machine.

A container image is compiled from file system layers built onto a parent or base image. These layers encourage the reuse of various components. So, there is no need to create everything from scratch for every project.

A pod is based on a container image. This container image is read-only. Each pod has its own instance of a container image.

A container image contains all the software and libraries required to run the product. It does not require the entire operating system. Many container images do not include standard operating system utilities such as the vi editor or ping.

When you upgrade a pod, you are actually instructing the pod to use a different container image. For example, if the container image for Oracle Unified Directory is based on the July 23 patch, then to upgrade the pod to use the October 23 bundle patch, you have to tell the pod to use the October image and restart the pod.

Oracle containers are built using a specific user and group ID. Oracle supplies all its container images using the user ID 1000 and group ID 1000. To enable writing to file systems or persistent volumes, you should grant write access to this user ID.

If your organization already uses this user or group ID, you should reconfigure the image to use different IDs. This reconfiguration is outside the scope of this document.

Pods

A pod is a group of one or more containers, with shared storage/network resources, and a specification for how to run the containers. A pod's contents are always co-located and co-scheduled, and run in a shared context. A pod models an application-specific logical host that contains one or more application containers which are relatively tightly coupled.

In an Oracle enterprise deployment, each WebLogic Server runs in a different pod. Each pod is able to communicate with other pods inside the Kubernetes cluster.

If a node becomes unavailable, Kubernetes (versions 1.5 and later) does not delete the pods automatically. Pods that run on an unreachable node attain the 'Terminating' or 'Unknown' state after a timeout. Pods may also attain these states when a user attempts to delete a pod on an unreachable node gracefully. You can remove a pod in such a state from the apiserver in one of the following ways:

- You or the Node Controller deletes the node object.

- The kubelet on the unresponsive node starts responding, terminates the pod, and removes the entry from the apiserver.

- You force delete the pod.

Oracle recommends the best practice of using the first or the second approach. If a node is confirmed to be dead (for example: permanently disconnected from the network, powered down, and so on), delete the node object. If the node suffers from a network partition, try to resolve the issue or wait for the partition to heal. When the partition heals, the kubelet completes the deletion of the pod and frees up its name in the apiserver.

Typically, the system completes the deletion if the pod is no longer running on a node or an administrator has deleted it. You may override this by force deleting the pod.

Pod Scheduling

By default, Kubernetes will schedule a pod to run on any worker node that has sufficient capacity to run that pod. In some situations, it is desirable that scheduling occurs on a subset of the worker nodes available. This type of scheduling can be achieved by using Kubernetes labels.
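
In Kubernetes, this is typically expressed by labeling worker nodes and adding a matching nodeSelector to the pod specification. The Python sketch below only models the matching rule (a node is eligible when it carries every label the pod asks for). The node names and the label keys are hypothetical.

```python
def eligible_nodes(node_labels, node_selector):
    """Return the nodes whose labels include every key/value pair
    in the pod's node selector.

    node_labels:   {node_name: {label_key: label_value}}
    node_selector: {label_key: label_value} required by the pod
    """
    return [
        node
        for node, labels in node_labels.items()
        if all(labels.get(key) == value for key, value in node_selector.items())
    ]


# Hypothetical cluster: only worker1 and worker2 are labeled for OUD pods.
cluster = {
    "worker1": {"oudserver": "true"},
    "worker2": {"oudserver": "true", "oamserver": "true"},
    "worker3": {},
}
print(eligible_nodes(cluster, {"oudserver": "true"}))
print(eligible_nodes(cluster, {}))  # no selector: every node is a candidate
```

An empty selector matches every node, which corresponds to the default behavior of scheduling on any worker node with sufficient capacity.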

Persistent Volumes

When a pod is created, it is based on a container image. A container image is supplied by Oracle for the products you are deploying. When a pod gets created, a runtime environment is created based upon that image. That environment is refreshed with the container image every time the pod is restarted. This means that any changes you make inside a runtime environment are lost whenever the container gets restarted.

A persistent volume (PV) is an area of disk, usually provided by NFS, that is available to the pod but not part of the image itself. This means that the data you want to keep, for example the WebLogic domain configuration, is still available after you restart a pod. In other words, the data is persistent.

You can make a PV available to a pod in one of two ways:

- Mount the PV to the pod directly, so that wherever the pod starts in the cluster the PV is available to it. The upside to this approach is that a pod can be started anywhere without extra configuration. The downside to this approach is that there is one NFS volume which is mounted to the pod. If the NFS volume becomes corrupted, you will have to either revert to a backup or fail over to a disaster recovery site.

- Mount the PV to the worker node and have the pod interact with it as if it was a local file system. The advantage of this approach is that you can have different NFS volumes mounted to different worker nodes, providing built-in redundancy. The disadvantages of this approach are:

  - Increased management overhead.

  - Pods have to be restricted to nodes that use a specific version of the file system. For example, all odd numbered pods use odd numbered worker nodes mounted to file system 1, and all even numbered pods use even numbered worker nodes mounted to file system 2.

  - File systems have to be mounted to every worker node on which a pod may be started. This requirement is not an issue in a small cluster, unlike in a large cluster.

  - Worker nodes become linked to the application. When a worker node undergoes maintenance, you need to ensure that file systems and appropriate labels are restored.

  You will need to set up a process to ensure that the contents of the NFS volumes are kept in sync by using something such as an rsync cron job.

  If maximum redundancy and availability is your goal, then you should adopt this solution.

Kubernetes Services

Kubernetes services expose the processes running in the pods regardless of the number of pods that are running. For example, a cluster of WebLogic Managed Servers, each running in a different pod, will have a service associated with it. This service will redirect your requests to the individual pods in the cluster. If you can interact with a pod in different ways, then you would require multiple services.

For example, if you have a cluster of OAM servers where you can interact with them using either HTTP or OAP, then you would create two services: one for HTTP and another for OAP.

Kubernetes services can be internal or external to the cluster. Internal services are of the type ClusterIP and external services are of the type NodePort.

Some deployments use a proxy in front of the service. This proxy is typically provided by an 'Ingress' load balancer such as NGINX. Ingress allows a level of abstraction to the underlying Kubernetes services.

Using NodePort Services achieves a result similar to using Ingress. In the NodePort mode, Ingress allows for consolidated management of these services.

This guide describes how to use Ingress or the native Kubernetes NodePort Services. Oracle recommends that you select one method instead of opting for a mix and match. For demonstration purposes, this guide explains the usage of the NGINX Ingress Controller.

The Kubernetes services use a small port range. Therefore, when a Kubernetes service is created, there will be a port mapping. For instance, if a pod is using port 7001, then a Kubernetes/Ingress service may use 30701 as its port, mapping port 30701 to 7001 internally. It is worth noting that if you are using individual NodePort Services, then the corresponding Kubernetes service port will be reserved on every worker node in the cluster.

Kubernetes/Ingress services are known to each worker node, regardless of the worker node on which the containers are running. Therefore, a load balancer is often placed in front of the worker node to simplify routing and worker node scalability.

To interact with a service, you have to refer to it using the format: worker_node_hostname:Service port. This format is applicable whether you are using individual NodePort Services or a consolidated Ingress node port service.

- Load balancer

- Direct proxy calls such as using WebLogicCluster directives for OHS

- DNS CNames

Oracle enterprise deployments use direct proxy calls unless otherwise stated.
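
The port mapping and the worker_node_hostname:Service port format described in this section can be sketched as follows. The range used is the default Kubernetes NodePort range (30000 to 32767), which a cluster administrator can change. The host names and function names are hypothetical, and the 30701 to 7001 mapping is the example from the text.

```python
# Default Kubernetes NodePort range; a cluster may be configured differently.
NODE_PORT_RANGE = range(30000, 32768)

# Service port exposed on the worker nodes -> port used by the pod.
port_mapping = {30701: 7001}


def service_address(worker_node_hostname, node_port):
    """Build the address used to reach a NodePort service on one worker node."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(f"{node_port} is outside the NodePort range")
    return f"{worker_node_hostname}:{node_port}"


def service_addresses(worker_nodes, node_port):
    """The service port is reserved on every worker node, so each node
    provides a valid entry point for the same service."""
    return [service_address(node, node_port) for node in worker_nodes]


# Hypothetical worker node host names.
workers = ["k8worker1.example.com", "k8worker2.example.com"]
print(service_addresses(workers, 30701))
print(port_mapping[30701])  # the pod port behind service port 30701
```

Because every worker node answers on the same service port, any of the returned addresses can be placed behind a load balancer or listed in a proxy configuration.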

Ingress Controller

There are two ways of interacting with your Kubernetes services. You can create an externally facing service for each Kubernetes object you want to access. This type of service is known as the Kubernetes NodePort Service. Alternatively, you can use an ingress service inside the Kubernetes cluster to redirect requests internally.

About Ingress

Ingress is a proxy server which sits inside the Kubernetes cluster, unlike the NodePort Services which reserve a port per service on every worker node in the cluster. With an ingress service, you can reserve a single port for all HTTP / HTTPS traffic.

An Ingress service works in the same way as the Oracle HTTP Server. It has the concept of virtual hosts and can terminate SSL, if required. There are various implementations of Ingress. However, this guide describes the installation and configuration of NGINX. The installation will be similar for other Ingress services but the command syntax may be different. Therefore, when you use a different Ingress, see the appropriate vendor documentation for the equivalent commands.

Ingress can proxy HTTP, HTTPS, LDAP, and LDAPS protocols.

Ingress is not mandatory. You can interact with the Kubernetes services through an Oracle HTTP Server using individual NodePort Services. However, if you are using only the Oracle Identity and Access Management microservices such as Oracle Identity Role Intelligence (OIRI) and Oracle Advanced Authentication (OAA), you may want to use an Ingress service rather than an Oracle HTTP Server.

Deployment Options

- Load Balancer: A load balancer provides an external IP address to which you can connect to interact with the Kubernetes services. This option is undesirable in an enterprise deployment because, to maintain maximum security, you do not want to expose the Kubernetes services directly to the internet. The most secure mechanism is to route requests to a load balancer, which in turn forwards the requests to an Oracle HTTP Server that resides in a separate demilitarized zone (DMZ). The HTTP Server then passes on the requests to the application tier.

- NodePort: In this mode, Ingress acts as a simple load balancer between the Kubernetes services.

The difference between using an Ingress NodePort Service as opposed to individual node port services is that the Ingress controller reserves one port for each service type it offers. For example, one for all HTTP communications, another for all LDAP communications, and so on. Individual node port services reserve one port for each service and type used in an application.

For example, an application such as OAM has four services (two for the Administration Server, one for the OAM Managed Servers, and another for the policy Managed Servers).

- Ingress reserves one NodePort Service regardless of the number of application services.

- Using individual NodePort Services, OAM reserves four ports.

This value will be multiplied by the number of applications deployed in the Kubernetes cluster. However, Ingress will continue to use one port.
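
The difference in port usage can be illustrated with a small Python sketch. It follows the counting rule described above: individual NodePort Services reserve one port per application service, while Ingress reserves one port per service type it offers. The function name and the second application are hypothetical; only the OAM figure of four services comes from the text.

```python
def node_ports_reserved(services_per_app, ingress_service_types=None):
    """Count the node ports reserved on each worker node.

    services_per_app:      {application: number of services it exposes}
    ingress_service_types: service types offered by Ingress (for example
                           ["http"]), or None for individual NodePort Services
    """
    if ingress_service_types is None:
        # Individual NodePort Services: one port for every application service.
        return sum(services_per_app.values())
    # Ingress: one port per service type, however many applications there are.
    return len(set(ingress_service_types))


# OAM has four services; "second-app" is a hypothetical additional application.
apps = {"oam": 4, "second-app": 4}
print(node_ports_reserved(apps))                                   # 8
print(node_ports_reserved(apps, ingress_service_types=["http"]))   # 1
```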

Secure Socket Layer

You can configure Ingress to handle only HTTP requests. However, this guide explains how to set up an Ingress controller that handles both HTTP and HTTPS requests. If you are using a traditional deployment and Oracle HTTP Server, it is unlikely that you will require HTTPS connections. However, if you are using microservices directly, then you will most likely use HTTPS connections.

Scope of Ingress

There is usually one Ingress controller per Kubernetes cluster. This controller manages all namespaces within the cluster. It is possible to use multiple Ingresses in the same cluster with different controllers managing different sets of namespaces. Configuring multiple controllers is outside the scope of this document. However, if you want to use multiple Ingresses, see Running Multiple Ingress Controllers.

Domain Name System

Every service defined in the cluster (including the DNS server itself) is assigned a DNS name. By default, a client pod's DNS search list includes the pod's own namespace and the cluster's default domain.

The following types of DNS records are created for a Kubernetes cluster:

- Services

  Record Type: A or AAAA record

  Name format: my-svc.namespace.svc.cluster-example.com

- Pods

  Record Type: A or AAAA record

  Name format: podname.namespace.pod.cluster-example.com
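
As a small illustration of the name formats listed above, the following Python sketch assembles the DNS names from their parts. It simply mirrors the formats shown in the list. The function names and the oamns namespace are hypothetical, and cluster-example.com is the example cluster domain used above.

```python
def service_dns_name(service, namespace, cluster_domain="cluster-example.com"):
    """Name format for a service: service.namespace.svc.cluster-domain"""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def pod_dns_name(pod, namespace, cluster_domain="cluster-example.com"):
    """Name format for a pod: podname.namespace.pod.cluster-domain"""
    return f"{pod}.{namespace}.pod.{cluster_domain}"


# Hypothetical namespace "oamns".
print(service_dns_name("my-svc", "oamns"))
print(pod_dns_name("podname", "oamns"))
```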

Kubernetes uses a built-in DNS server called 'CoreDNS' for internal name resolution.

Resolution of external host names (for example, login.example.com) may not be possible inside the Kubernetes cluster. If you encounter this issue, you can use one of the following options:

- Option 1 - Add a secondary DNS server to CoreDNS for the company domain.

- Option 2 - Add individual host entries to CoreDNS for the external hosts. For example: login.example.com.

Namespaces

Namespaces enable you to organize clusters into virtual sub-clusters which are helpful when different teams or projects share a Kubernetes cluster. You can add any number of namespaces within a cluster, each logically separated from others but with the ability to communicate with each other.

The enterprise deployment uses different namespaces for each product to keep all the deployed artifacts together. For example, all the Oracle Access Manager components are deployed into a namespace for Oracle Access Manager.

The deployment procedure explained in this guide uses the following namespaces:

Table 2-6 Namespaces Used in this Guide

| Name of the Namespace | Description |
|---|---|
| INGRESSNS | Namespace for the Ingress Controller. |
| ELKNS | Namespace for Elasticsearch and Kibana. |
| MONITORING | Namespace for Prometheus and Grafana. |
| OUDNS | Namespace for Oracle Unified Directory (OUD). |
| OAMNS | Namespace for Oracle Access Manager (OAM). |
| OIGNS | Namespace for Oracle Identity Governance (OIG). |
| OIRINS | Namespace for Oracle Identity Role Intelligence (OIRI). |
| DINGNS | Namespace for Oracle Identity Role Intelligence Data Ingester. |
| OPNS | Namespace for Oracle WebLogic Operator. |
| OAANS | Namespace for Oracle Advanced Authentication (OAA). |

Other Products

In addition to Kubernetes, you can use other products to enhance the Kubernetes experience. These products include:

- Elasticsearch: Elasticsearch is a distributed, free, and open search and analytics engine for all types of data. Elasticsearch is the central component of the Elastic Stack, a set of free and open tools for data ingestion, enrichment, storage, analysis, and visualization. The Elastic Stack is commonly referred to as the ELK Stack (after Elasticsearch, Logstash, and Kibana).

  Elasticsearch is used for:

  - Logging and log analytics

  - Infrastructure metrics and container monitoring

  - Application performance monitoring

- Kibana: Kibana is a data visualization and management tool for Elasticsearch. Kibana provides real-time histograms, line graphs, pie charts, and maps. Kibana also includes advanced applications such as Canvas, which allows you to create custom dynamic infographics based on your data, and Elastic Maps for visualizing geospatial data.

- Logstash: Logstash is used in conjunction with Filebeat to scrape log files and to place them into a format that Elasticsearch understands, before transmitting them to Elasticsearch.

- Grafana: Grafana is a complete observability stack that allows you to monitor and analyze metrics, logs, and traces. It allows you to query, visualize, alert on, and understand your data no matter where it is stored. Grafana is often used in conjunction with Prometheus.

- Prometheus: Prometheus is an open-source systems monitoring and alerting toolkit. Prometheus collects and stores its metrics as time series data, that is, metrics information is stored with the timestamp at which it was recorded, alongside optional key-value pairs called labels.
