11 Introduction to Coherence Caches

This chapter includes the following sections:

- Understanding Distributed Caches

  A distributed, or partitioned, cache is a clustered, fault-tolerant cache that has linear scalability.

- Understanding Replicated Caches

  A replicated cache is a clustered, fault-tolerant cache where data is fully replicated to every member in the cluster.

- Understanding Optimistic Caches

  An optimistic cache is a clustered cache implementation similar to the replicated cache implementation but without any concurrency control.

- Understanding Near Caches

  A near cache is a hybrid cache; it typically fronts a distributed cache or a remote cache with a local cache.

- Understanding View Caches

  A view cache is a clustered, fault-tolerant cache that provides a local in-memory materialized view of data stored in a distributed and partitioned cache.

- Understanding Local Caches

  While it is not a clustered service, the local cache implementation is often used in combination with various clustered cache services as part of a near cache.

- Understanding Remote Caches

  A remote cache describes any out-of-process cache accessed by a Coherence*Extend client.

- Summary of Cache Types

  Compare the features of each cache type to select the right cache for your solution.


Understanding Distributed Caches

Coherence defines a distributed cache as a collection of data that is distributed across any number of cluster nodes such that exactly one node in the cluster is responsible for each piece of data in the cache, and the responsibility is distributed (or, load-balanced) among the cluster nodes.

There are several key points to consider about a distributed cache:

- Partitioned: The data in a distributed cache is spread out over all the servers in such a way that no two servers are responsible for the same piece of cached data. The size of the cache and the processing power associated with the management of the cache can grow linearly with the size of the cluster. It also means that read operations against data in the cache can be accomplished with a "single hop," in other words, involving at most one other server. Write operations are "single hop" if no backups are configured.

- Load-Balanced: Since the data is spread out evenly over the servers, the responsibility for managing the data is automatically load-balanced across the cluster.

- Location Transparency: Although the data is spread out across cluster nodes, the exact same API is used to access the data, and the same behavior is provided by each of the API methods. This is called location transparency, which means that the developer does not have to code based on the topology of the cache, since the API and its behavior are the same with a local JCache, a replicated cache, or a distributed cache.

- Failover: All Coherence services provide failover and failback without any data loss, and that includes the distributed cache service. The distributed cache service allows the number of backups to be configured; if the number of backups is one or higher, any cluster node can fail without the loss of data.

Access to the distributed cache often must go over the network to another cluster node. All other things being equal, if there are n cluster nodes, (n - 1) / n of the operations go over the network.

Figure 11-1 provides a conceptual view of a distributed cache during get operations.

Figure 11-1 Get Operations in a Distributed Cache


Since each piece of data is managed by only one cluster node, read operations over the network are only "single hop" operations. This type of access is extremely scalable, since it can use point-to-point communication and thus take optimal advantage of a switched network.
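The (n - 1) / n estimate can be checked with a small, self-contained sketch. The class name and the hash-modulo ownership rule below are illustrative assumptions, not Coherence's actual partition assignment; the only property borrowed from the text is that exactly one node owns each key.

```java
final class RemoteAccessEstimate {

    /** Expected fraction of operations that leave the local node: (n - 1) / n. */
    static double remoteFraction(int nodes) {
        if (nodes < 1) {
            throw new IllegalArgumentException("nodes must be at least 1");
        }
        return (nodes - 1) / (double) nodes;
    }

    /** Toy ownership rule (an assumption): the key's hash selects exactly one owner. */
    static int ownerOf(Object key, int nodes) {
        return Math.floorMod(key.hashCode(), nodes);
    }

    /** Fraction of the keys 0..keyCount-1 that are owned by a node other than localNode. */
    static double measuredRemoteFraction(int nodes, int localNode, int keyCount) {
        int remote = 0;
        for (int k = 0; k < keyCount; k++) {
            if (ownerOf(k, nodes) != localNode) {
                remote++;
            }
        }
        return remote / (double) keyCount;
    }
}
```

With four nodes, `remoteFraction(4)` is 0.75, and counting owners for 1000 evenly spread integer keys from the point of view of node 0 gives the same value.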

Figure 11-2 provides a conceptual view of a distributed cache during put operations.

Figure 11-2 Put Operations in a Distributed Cache Environment


In the figure above, the data is being sent to a primary cluster node and a backup cluster node. This is for failover purposes, and corresponds to a backup count of one. (The default backup count setting is one.) If the cache data were not critical, which is to say that it could be re-loaded from disk, the backup count could be set to zero, which would allow some portion of the distributed cache data to be lost if a cluster node fails. If the cache were extremely critical, a higher backup count, such as two, could be used. The backup count only affects the performance of cache modifications, such as those made by adding, changing or removing cache entries.

Modifications to the cache are not considered complete until all backups have acknowledged receipt of the modification. There is a slight performance penalty for cache modifications when using distributed cache backups; however, backups guarantee that data consistency is maintained and no data is lost if a cluster node fails unexpectedly.
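The relationship between the backup count and the work done per modification can be sketched with plain Java maps standing in for cluster nodes. This is a toy model under stated assumptions: the class `BackupWriteSketch`, the hash-based choice of primary, and the "next node holds the backup" rule are all hypothetical and are not how Coherence assigns partitions.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class BackupWriteSketch {
    private final List<Map<String, String>> nodes = new ArrayList<>();
    private final int backupCount;

    BackupWriteSketch(int nodeCount, int backupCount) {
        if (backupCount < 0 || backupCount >= nodeCount) {
            throw new IllegalArgumentException("need 0 <= backupCount < nodeCount");
        }
        for (int i = 0; i < nodeCount; i++) {
            nodes.add(new HashMap<>());
        }
        this.backupCount = backupCount;
    }

    /**
     * Writes to the primary and to every backup before returning, mirroring
     * "not complete until all backups have acknowledged". Returns the number
     * of copies written (1 + backupCount).
     */
    int put(String key, String value) {
        int primary = Math.floorMod(key.hashCode(), nodes.size());
        int acknowledged = 0;
        for (int i = 0; i <= backupCount; i++) {
            nodes.get((primary + i) % nodes.size()).put(key, value);
            acknowledged++;
        }
        return acknowledged;
    }

    /** Counts how many simulated nodes currently hold a copy of the key. */
    int copiesOf(String key) {
        int copies = 0;
        for (Map<String, String> node : nodes) {
            if (node.containsKey(key)) {
                copies++;
            }
        }
        return copies;
    }
}
```

With a backup count of one, each put touches two nodes; with a backup count of zero it touches only the primary, which is the "single hop" write case.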

Failover of a distributed cache involves promoting backup data to be primary storage. When a cluster node fails, all remaining cluster nodes determine what data each holds in backup that the failed cluster node had primary responsibility for when it died. That data becomes the responsibility of whatever cluster node was the backup for the data.

Figure 11-3 provides a conceptual view of a distributed cache during failover.

Figure 11-3 Failover in a Distributed Cache


If there are multiple levels of backup, the first backup becomes responsible for the data; the second backup becomes the new first backup, and so on. Just as with the replicated cache service, lock information is also retained when a server fails; the sole exception is that the locks held by the failed cluster node are automatically released.
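The promotion order described above can be pictured as an ordered ownership chain for a piece of data. The following is a minimal sketch, assuming a hypothetical representation in which index 0 of the list is the primary owner and the remaining entries are the backups in order; it does not model how Coherence later recreates the missing backup.

```java
import java.util.ArrayList;
import java.util.List;

final class FailoverSketch {

    /**
     * Returns the ownership chain after failedNode leaves the cluster.
     * Every surviving owner moves up one position past the gap, so the
     * first backup becomes primary and the second backup becomes first.
     */
    static List<String> afterFailure(List<String> owners, String failedNode) {
        List<String> remaining = new ArrayList<>(owners);
        remaining.remove(failedNode);
        return remaining;
    }
}
```

For a chain of nodes A (primary), B, and C, the failure of A leaves B as primary and C as the first backup; the failure of B leaves A as primary and promotes C to first backup.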

The distributed cache service also allows certain cluster nodes to be configured to store data, and others to be configured to not store data. The name of this setting is local storage enabled. Cluster nodes that are configured with the local storage enabled option provide the cache storage and the backup storage for the distributed cache. Regardless of this setting, all cluster nodes have the same exact view of the data, due to location transparency.

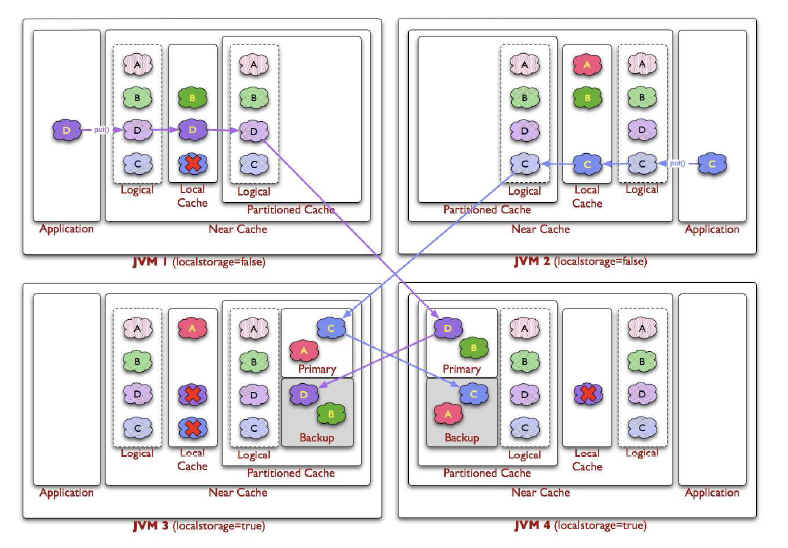

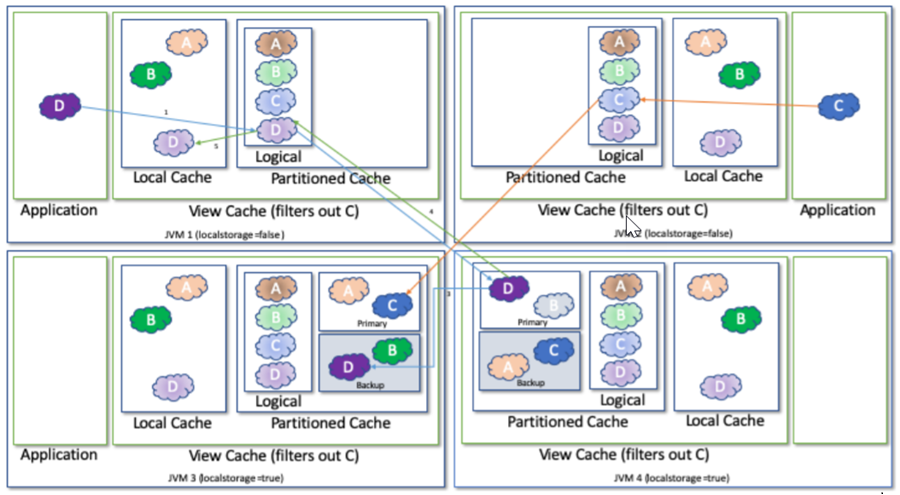

Figure 11-4 provides a conceptual view of local storage in a distributed cache during get and put operations.

Figure 11-4 Local Storage in a Distributed Cache


There are several benefits to the local storage enabled option:

- The Java heap size of the cluster nodes that have turned off local storage enabled is not affected at all by the amount of data in the cache, because that data is cached on other cluster nodes. This is particularly useful for application server processes running on older JVM versions with large Java heaps, because those processes often suffer from garbage collection pauses that grow exponentially with the size of the heap.

- Coherence allows each cluster node to run any supported version of the JVM. That means that cluster nodes with local storage enabled turned on could be running a newer JVM version that supports larger heap sizes, or Coherence's off-heap storage using elastic data. Different JVM versions are fine between storage enabled and disabled nodes, but all storage enabled nodes should use the same JVM version.

- The local storage enabled option allows some cluster nodes to be used just for storing the cache data; such cluster nodes are called Coherence cache servers. Cache servers are commonly used to scale up Coherence's distributed query functionality.
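One common way to choose a node's storage role is a JVM system property set before Coherence starts. The sketch below assumes the `coherence.distributed.localstorage` property name and a default of `true`, as used with the default cache configuration; the helper class `StorageRole` is hypothetical, and the property has no effect if a custom configuration does not reference it. Verify the name against the documentation for your release.

```java
final class StorageRole {

    /** Assumed property name; check it against your Coherence release. */
    static final String LOCAL_STORAGE_PROPERTY = "coherence.distributed.localstorage";

    /** Must be called before any Coherence service is started in this JVM. */
    static void configure(boolean storageEnabled) {
        System.setProperty(LOCAL_STORAGE_PROPERTY, Boolean.toString(storageEnabled));
    }

    /** Reads the setting back, treating an unset property as enabled (assumed default). */
    static boolean isStorageEnabled() {
        return Boolean.parseBoolean(System.getProperty(LOCAL_STORAGE_PROPERTY, "true"));
    }
}
```

An application server process would call `StorageRole.configure(false)` early in startup (or pass the equivalent `-D` option on the command line), while dedicated cache servers leave the setting enabled.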


Understanding Replicated Caches

Note:

Replicated caches are deprecated in Coherence 12.2.1.4.0 and will be removed in a subsequent release.

A replicated cache offers the fastest read performance, with linear performance scalability for reads but poor scalability for writes (because writes must be processed by every member in the cluster). Adding servers does not increase aggregate cache capacity because data is replicated to all servers. Replicated caches are typically used for small data sets that are read-only.

Replicated caches have very high access speeds since the data is replicated to each cluster node (JVM), which accesses the data from its own memory. This type of access is often referred to as zero latency access and is perfect for situations in which an application requires the highest possible speed in its data access. However, it is important to remember that zero latency access only occurs after the data has been accessed for the first time. That is, replicated caches store data in serialized form until the data is accessed, at which time the data must be deserialized. Each cluster node where the data is accessed must perform the initial deserialization step as well (even on the node where the data was originally created). The deserialization step adds a performance cost that must be considered when using replicated caches.

Note:

Unlike distributed caches, replicated caches do not differentiate between storage-enabled and storage-disabled cluster members.

There are several challenges to building a reliable replicated cache. The first is how to get it to scale and perform well. Updates to the cache have to be sent to all cluster nodes, and all cluster nodes have to end up with the same data, even if multiple updates to the same piece of data occur at the same time. Also, if a cluster node requests a lock, it should not have to get all cluster nodes to agree on the lock, otherwise it scales extremely poorly; yet with cluster node failure, all of the data and lock information must be kept safely. Coherence handles all of these scenarios transparently and provides a scalable and highly available replicated cache implementation.

Figure 11-5 provides a conceptual view of replicated caches during a get operation.

Figure 11-5 Get Operation in a Replicated Cache


For put operations, updating a replicated cache requires pushing the new version of the data to all other cluster nodes. Figure 11-6 provides a conceptual view of replicated caches during a put operation.

Figure 11-6 Put Operation in a Replicated Cache


Coherence implements its replicated cache service in such a way that all read-only operations occur locally, all concurrency control operations involve at most one other cluster node, and only update operations require communicating with all other cluster nodes. The result is excellent scalable performance, and as with all of the Coherence services, the replicated cache service provides transparent and complete failover and failback.
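The asymmetry between local reads and cluster-wide updates can be illustrated with in-process maps standing in for cluster members. This is a toy model only: the class `ReplicatedSketch` is hypothetical, and it ignores serialization, concurrency control, and networking.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class ReplicatedSketch {
    private final List<Map<String, String>> members = new ArrayList<>();

    ReplicatedSketch(int memberCount) {
        for (int i = 0; i < memberCount; i++) {
            members.add(new HashMap<>());
        }
    }

    /** An update is pushed to every member; returns how many members were touched. */
    int put(String key, String value) {
        for (Map<String, String> member : members) {
            member.put(key, value);
        }
        return members.size();
    }

    /** A read is served from the reading member's own copy. */
    String get(int member, String key) {
        return members.get(member).get(key);
    }
}
```

The cost of `put` grows with the number of members while `get` stays constant, which is why update-heavy replicated caches show diminishing returns as the cluster grows.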

The limitations of the replicated cache service should also be carefully considered:

- Data is managed by the replicated cache service on every cluster node that has joined the service. Therefore, memory utilization (the Java heap size) is increased for each cluster node and can impact performance.

- Replicated caches that have a high incidence of updates do not scale linearly as the cluster grows. The cluster suffers diminishing returns as cluster nodes are added.

- Replicated caches do not support per-entry expiration. Expiry needs to be set at the cache level. Expiry and eviction are enforced locally by the backing map, so data may expire independently on each node. Entries may expire at slightly different times and may be available slightly longer on different nodes. When using expiry and eviction, an application must be designed to accommodate a partial data set. If a system of record (such as a database) is used, then it should be irrelevant to an application whether an item has been expired or evicted.


Understanding Optimistic Caches

An optimistic cache is a clustered cache implementation similar to the replicated cache implementation but without any concurrency control.

Understanding Near Caches

A near cache invalidates front cache entries, using a configured invalidation strategy, and provides excellent performance and synchronization. A near cache backed by a partitioned cache offers zero-millisecond local access for repeat data access, while enabling concurrency and ensuring coherency and failover, effectively combining the best attributes of replicated and partitioned caches.

The objective of a near cache is to provide the best of both worlds between the extreme performance of replicated caches and the extreme scalability of the distributed caches by providing fast read access to Most Recently Used (MRU) and Most Frequently Used (MFU) data. Therefore, the near cache is an implementation that wraps two caches: a front cache and a back cache that automatically and transparently communicate with each other by using a read-through/write-through approach.

The front cache provides local cache access. It is assumed to be inexpensive, in that it is fast, and is limited in terms of size. The back cache can be a centralized or multitiered cache that can load-on-demand in case of local cache misses. The back cache is assumed to be complete and correct in that it has much higher capacity, but more expensive in terms of access speed.

This design allows near caches to configure cache coherency, from the most basic expiry-based caches and invalidation-based caches, up to advanced caches that version data and provide guaranteed coherency. The result is a tunable balance between the preservation of local memory resources and the performance benefits of truly local caches.

The typical deployment uses a local cache for the front cache. A local cache is a reasonable choice because it is thread safe, highly concurrent, size-limited, auto-expiring, and stores the data in object form. For the back cache, a partitioned cache is used.

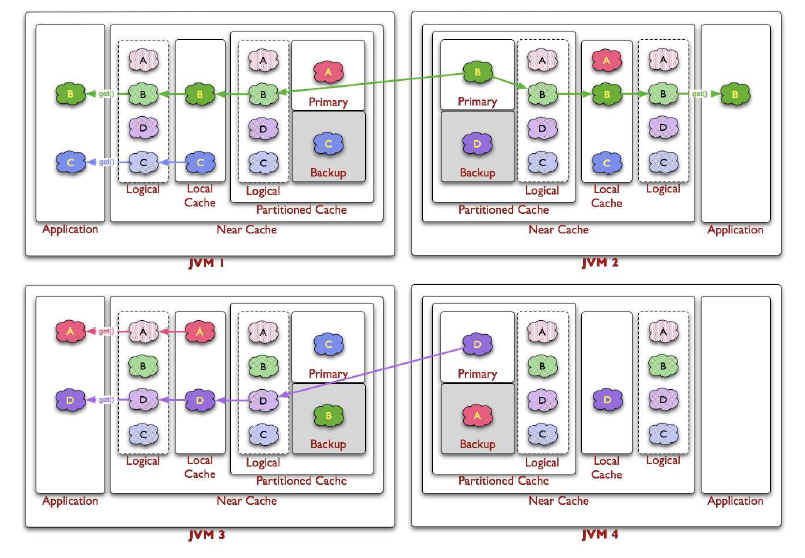

The following figure illustrates the data flow in a near cache. If the client writes an object D into the grid, the object is placed in the local cache inside the local JVM and in the partitioned cache which is backing it (including a backup copy). If the client requests the object, it can be obtained from the local, or front cache, in object form with no latency.

Note:

Because entries are stored in object form in the front of the near cache, the application must take care of synchronizing access by multiple threads in the same JVM. For example, if a thread mutates an entry that has been retrieved from the front of a near cache, the change is immediately visible to other threads in the same JVM.

Figure 11-7 provides a conceptual view of a near cache during put operations.

Figure 11-7 Put Operations in a Near Cache


If the client requests an object that has been expired or invalidated from the front cache, then Coherence automatically retrieves the object from the partitioned cache. The front cache stores the object before the object is delivered to the client.

Figure 11-8 provides a conceptual view of a near cache during get operations.

Figure 11-8 Get Operations in a Near Cache
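The put and get flows described in this section can be sketched as a wrapper around two maps. This is a minimal, single-threaded illustration: the class `NearCacheSketch` is hypothetical, a `LinkedHashMap` in access order stands in for the size-limited local front cache, an ordinary map stands in for the partitioned back cache, and `invalidate` represents whatever the configured invalidation strategy would trigger.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class NearCacheSketch<K, V> {
    private final Map<K, V> front;
    private final Map<K, V> back;
    private int backReads;

    NearCacheSketch(Map<K, V> back, final int frontLimit) {
        this.back = back;
        // Access-ordered map that drops its eldest entry once the limit is exceeded.
        this.front = new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > frontLimit;
            }
        };
    }

    /** Serves from the front if possible; otherwise reads the back and stores the result in the front. */
    V get(K key) {
        V value = front.get(key);
        if (value == null) {
            backReads++;
            value = back.get(key);
            if (value != null) {
                front.put(key, value);
            }
        }
        return value;
    }

    /** Writes go to the back cache and are kept in the front for later local reads. */
    void put(K key, V value) {
        back.put(key, value);
        front.put(key, value);
    }

    /** Drops a front entry, as an invalidation or expiry would. */
    void invalidate(K key) {
        front.remove(key);
    }

    /** Number of reads that had to go to the back cache. */
    int backReads() {
        return backReads;
    }
}
```

Repeated reads of the same key reach the back cache only once; after `invalidate`, the next read goes to the back cache again and repopulates the front.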



Understanding View Caches

The view cache provides read performance comparable to replicated caches while offering the consistent writes of distributed (partitioned) caches. Switching from a replicated cache to a view cache provides a number of ancillary benefits, including support for features provided by partitioned caches such as partition safety/strength, persistence, and so on.

View caches are built on ContinuousQueryCaches, which have an internal map to store the contents of the view and a back NamedCache (optionally) to both gather the data from and listen to for further updates. View caches define ContinuousQueryCache instances in the cache configuration. Two notable features are added to view caches:

- Caches are populated as a part of activating the ConfigurableCacheFactory (typically via DefaultCacheServer.startServerDaemon or ConfigurableCacheFactory.activate).

- Data is held serialized until accessed.

View caches have very high access speeds since the data is local to the view on each cluster node (JVM), which accesses the data from its own memory. This type of access is often referred to as zero latency access and is perfect for situations in which an application requires the highest possible speed in its data access.

See Understanding Distributed Caches and Using Continuous Query Caching for more information on the components that make up a view.

See Table 11-1 for more specifications of the view cache components.

Figure 11-9 provides a conceptual view of a view cache during put operations.

Figure 11-9 Put Operations in a View Cache
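The idea of a local materialized view that follows updates to a back cache can be sketched without any Coherence classes. The class `ViewSketch` and its `Predicate` filter are hypothetical stand-ins; a real view cache receives changes through listeners on the back NamedCache rather than through a direct method call as shown here.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Predicate;

final class ViewSketch<K, V> {
    private final Map<K, V> back = new HashMap<>();
    private final Map<K, V> view = new HashMap<>();
    private final Predicate<V> filter;

    ViewSketch(Predicate<V> filter) {
        this.filter = filter;
    }

    /** Writes go to the back cache; the view is then updated as a listener would update it. */
    void put(K key, V value) {
        back.put(key, value);
        if (filter.test(value)) {
            view.put(key, value);
        } else {
            view.remove(key); // the entry no longer matches the filter
        }
    }

    /** Reads are served from the local view only. */
    V getFromView(K key) {
        return view.get(key);
    }

    int viewSize() {
        return view.size();
    }

    int backSize() {
        return back.size();
    }
}
```

A view whose filter accepts everything holds a full local copy of the back cache, which matches the default behavior noted in the summary table; a narrower filter keeps the local footprint smaller.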



Understanding Local Caches

Note:

Applications that use a local cache as part of a near cache must make sure that keys are immutable. Keys that are mutable can cause threads to hang and deadlocks to occur.

In particular, the near cache implementation uses key objects to synchronize the local cache (front map), and the key may also be cached in an internal map. Therefore, a key object passed to the get() method is used as a lock. If the key object is modified while a thread holds the lock, then the thread may fail to unlock the key. In addition, if there are other threads waiting for the locked key object to be freed, or if there are threads that attempt to acquire a lock on the modified key, then threads may hang and deadlocks can occur. For details on the use of keys, see the java.util.Map API documentation.
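The underlying problem with mutable keys can be reproduced with a plain java.util.HashMap. The sketch below does not reproduce the near cache locking behavior itself; it only shows, with a hypothetical class `MutableKeyHazard`, that an entry becomes unreachable through its own key object once that key's hashCode changes.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class MutableKeyHazard {

    /** Returns true if the entry can still be found through the same key object after mutation. */
    static boolean reachableAfterMutation() {
        Map<List<String>, String> cache = new HashMap<>();
        List<String> key = new ArrayList<>();
        key.add("a");
        cache.put(key, "value");

        // Mutating the key changes its hashCode() and equals() result,
        // but the map still files the entry under the old hash.
        key.add("b");

        return cache.containsKey(key);
    }
}
```

The method returns false even though the map still contains one entry. Using immutable key types such as String, Long, or a class with only final fields avoids the problem.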

A local cache is completely contained within a particular cluster node. There are several attributes of the local cache that are worth noting:

- The local cache implements the same standard collections interface that the clustered caches implement, meaning that there is no programming difference between using a local or a clustered cache. Just like the clustered caches, the local cache tracks the JCache API, which itself is based on the same standard collections API that the local cache is based on.

- The local cache can be size-limited. The local cache can restrict the number of entries that it caches, and automatically evict entries when the cache becomes full. Furthermore, both the sizing of entries and the eviction policies can be customized. For example, the cache can be size-limited based on the memory used by the cached entries. The default eviction policy uses a combination of Most Frequently Used (MFU) and Most Recently Used (MRU) information, scaled on a logarithmic curve, to determine what cache items to evict. This algorithm is the best general-purpose eviction algorithm because it works well for short duration and long duration caches, and it balances frequency versus recentness to avoid cache thrashing. The pure LRU and pure LFU algorithms are also supported, as is the ability to plug in custom eviction policies.

- The local cache supports automatic expiration of cached entries, meaning that each cache entry can be assigned a time to live in the cache. See In-memory Cache with Expiring Entries and Controlling the Growth of a Local Cache.

- The local cache is thread safe and highly concurrent, allowing many threads to simultaneously access and update entries in the local cache.

- The local cache supports cache notifications. These notifications are provided for additions (entries that are put by the client, or automatically loaded into the cache), modifications (entries that are put by the client, or automatically reloaded), and deletions (entries that are removed by the client, or automatically expired, flushed, or evicted). These are the same cache events supported by the clustered caches.

- The local cache maintains hit and miss statistics. These run-time statistics can be used to accurately project the effectiveness of the cache and to adjust its size-limiting and auto-expiring settings accordingly while the cache is running.
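Two of the attributes above, automatic expiration and hit/miss statistics, can be illustrated together in a small sketch. The class `ExpiringCacheSketch` is hypothetical and single-threaded, uses one cache-wide time to live, and takes its clock as a parameter so that expiry can be exercised deterministically; it is not the Coherence local cache implementation.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

final class ExpiringCacheSketch<K, V> {

    private static final class Entry<V> {
        final V value;
        final long expiresAt;

        Entry(V value, long expiresAt) {
            this.value = value;
            this.expiresAt = expiresAt;
        }
    }

    private final Map<K, Entry<V>> entries = new HashMap<>();
    private final long ttlMillis;
    private final LongSupplier clock;
    private long hits;
    private long misses;

    ExpiringCacheSketch(long ttlMillis, LongSupplier clock) {
        this.ttlMillis = ttlMillis;
        this.clock = clock;
    }

    void put(K key, V value) {
        entries.put(key, new Entry<>(value, clock.getAsLong() + ttlMillis));
    }

    /** Returns the value, or null if the entry is absent or has expired; updates the statistics. */
    V get(K key) {
        Entry<V> entry = entries.get(key);
        if (entry != null && clock.getAsLong() >= entry.expiresAt) {
            entries.remove(key); // lazily expire on access
            entry = null;
        }
        if (entry == null) {
            misses++;
            return null;
        }
        hits++;
        return entry.value;
    }

    /** Hits divided by total lookups, or 0.0 before the first lookup. */
    double hitRate() {
        long total = hits + misses;
        return total == 0 ? 0.0 : hits / (double) total;
    }
}
```

In production code the clock would typically be `System::currentTimeMillis`. A persistently low hit rate suggests that the time to live or the size limit is too small for the access pattern.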

The local cache is important to the clustered cache services for several reasons, including as part of Coherence's near cache technology, and with the modular backing map architecture.


Understanding Remote Caches

A remote cache describes any out-of-process cache accessed by a Coherence*Extend client.

Summary of Cache Types

Numerical Terms:

- JVMs = number of JVMs

- DataSize = total size of cached data (measured without redundancy)

- Redundancy = number of copies of data maintained

- LocalCache = size of local cache (for near caches)

Table 11-1 Summary of Cache Types and Characteristics

| Characteristics | Replicated Cache | Optimistic Cache | Partitioned Cache | Near Cache (backed by partitioned cache) | Local Cache (not clustered) | View Cache |
|---|---|---|---|---|---|---|
| Topology | Replicated | Replicated | Partitioned Cache | Local Caches + Partitioned Cache | Local Cache | Local View Cache + Partitioned Cache |
| Read Performance | Instant (note 5) | Instant (note 5) | Locally cached: instant (note 5); Remote: network speed (note 1) | Locally cached: instant (note 5); Remote: network speed (note 1) | Instant (note 5) | Instant (note 5) |
| Fault Tolerance | Extremely High | Extremely High | Configurable (note 4): Zero to Extremely High | Configurable (note 4): Zero to Extremely High | Zero | Configurable: Zero to Extremely High |
| Write Performance | Fast (note 2) | Fast (note 2) | Extremely fast (note 3) | Extremely fast (note 3) | Instant (note 5) | Extremely fast (note 3) |
| Memory Usage (Per JVM) | DataSize | DataSize | DataSize/JVMs x Redundancy | LocalCache + [DataSize / JVMs] | DataSize | View Cache + [DataSize / JVMs x Redundancy] |
| Coherency | fully coherent | fully coherent | fully coherent | fully coherent (note 6) | not applicable (n/a) | fully coherent |
| Memory Usage (Total) | JVMs x DataSize | JVMs x DataSize | Redundancy x DataSize | [Redundancy x DataSize] + [JVMs x LocalCache] | n/a | [Redundancy x DataSize] + [JVMs x View Cache] (note 7) |
| Locking | fully transactional | none | fully transactional | fully transactional | fully transactional | fully transactional |
| Typical Uses | Metadata | n/a (see Near Cache) | Read-write caches | Read-heavy caches w/ access affinity | Local data | Metadata |

Notes:

1. As a rough estimate, with 100 Mb Ethernet, network reads typically require ~20ms for a 100KB object. With gigabit Ethernet, network reads for 1KB objects are typically sub-millisecond.

2. Requires UDP multicast or a few UDP unicast operations, depending on JVM count.

3. Requires a few UDP unicast operations, depending on level of redundancy.

4. Partitioned caches can be configured with as many levels of backup as desired, or zero if desired. Most installations use one backup copy (two copies total).

5. Limited by local CPU/memory performance, with negligible processing required (typically sub-millisecond performance).

6. Listener-based near caches are coherent; expiry-based near caches are partially coherent for non-transactional reads and coherent for transactional access.

7. Size of view is dependent on the applied Filter. By default, a view does not filter entries.
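The memory rows of Table 11-1 can be turned into a quick sizing aid. The sketch below simply evaluates the table's formulas for the replicated, partitioned, and near cache columns; the class and method names are hypothetical, and all sizes are in whatever unit the caller chooses (for example, gigabytes).

```java
final class CacheMemoryEstimate {

    /** Replicated cache, total: JVMs x DataSize. */
    static double replicatedTotal(int jvms, double dataSize) {
        return jvms * dataSize;
    }

    /** Partitioned cache, per JVM: DataSize / JVMs x Redundancy. */
    static double partitionedPerJvm(double dataSize, int jvms, int redundancy) {
        return dataSize / jvms * redundancy;
    }

    /** Partitioned cache, total: Redundancy x DataSize. */
    static double partitionedTotal(double dataSize, int redundancy) {
        return redundancy * dataSize;
    }

    /** Near cache, total: [Redundancy x DataSize] + [JVMs x LocalCache]. */
    static double nearTotal(double dataSize, int redundancy, int jvms, double localCache) {
        return redundancy * dataSize + jvms * localCache;
    }
}
```

For example, 10 GB of data on 4 JVMs with one backup (Redundancy = 2) needs 5 GB per JVM and 20 GB in total as a partitioned cache, compared with 40 GB in total as a replicated cache; adding a 0.5 GB front cache on each JVM brings the near cache total to 22 GB.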
