9 Working with Complex Datatypes and HDFS File Formats

This chapter provides an overview of extended data format support and complex type support.

This chapter includes the following sections:

HDFS File Formats

Supported Formats

ODI can read and write HDFS file data in a variety of formats. The HDFS file formats supported are Json, Avro, Delimited, and Parquet. The format is specified on the Storage Tab of the HDFS data store. When you reverse-engineer Avro, JSON, or Parquet files, you are required to supply a Schema in the Storage Tab. The reverse-engineer process will only use the Schema, and not access the HDFS files themselves. Delimited HDFS files cannot be reverse-engineered, the Attributes (in the Attributes tab of the HDFS data store) will have to be added manually and the parameters, such as field separator should be defined on the Storage Tab.

If you are loading Avro files into Hive, then you will need to copy the Avro Schema file (.avsc) into the same HDFS location as the Avro HDFS files (using the same file name that you specified for the Schema in the Storage Panel).

Complex Types

JSON, Avro, and Parquet formats can contain complex data types, such as array or Object. During the Reverse-Engineering phase, the Datatype field for these Attributes is set to "Complex" and the definition of the complex type is stored in the Data Format field for the Attribute. The Syntax of this definition is the same as Avro uses for its Schema definitions. This information is used by ODI in the Mapping Editor when the flatten component is added to the Mapping.

Table 9-1 HDFS File Formats

| File Format | Reverse-Engineer | Complex Type Support | Load into Hive | Load into Spark | Write from Spark |

|---|---|---|---|---|---|

|

Avro |

Yes (Schema required) |

Yes |

Yes (Schema required) |

Yes (Batch mode only) |

Yes |

|

Delimited |

No |

No |

Yes |

Yes |

Yes |

|

JSON |

Yes (Schema required) |

Yes |

Yes |

Yes |

Yes |

|

Parquet |

Yes (Schema required) |

Yes |

Yes |

Yes (Batch mode only) |

Yes (Batch and Streaming) |

Table 9-2 Complex Types

| Avro | Json | Hive | Parquet |

|---|---|---|---|

|

Record |

Object |

Struct |

Record |

|

enum |

NA |

NA |

enum |

|

array |

array |

array |

array |

|

map |

NA |

map |

map |

|

union |

NA |

union |

union |

|

fixed |

NA |

NA |

fixed |

Working with Complex Datatypes in Mappings

Provides information on working with complex, nested, and user defined metadata that drives the Flatten component.

Oracle Data Integrator provides a Flatten component that can process input data with a Complex structure and produce a flattened representation of the same data using standard data types. The input data may be in various formats, such as a Hive table or a JSON HDFS file.

When you add a Flatten component into a Mapping, you choose the attribute to Flatten from the component upstream.

The Flatten components for Spark and Hive have some advanced usability features that do not exist in the other implementations. Namely, once you've chosen the attribute to flatten from the upstream node, the flattened attributes will be created automatically. The reason this is possible is that the Reverse-Engineering process for Hive and HDFS capture the Complex Type definition in the Attribute's "Data Format" property. You can view this property in the Attribute tab of the Hive or HDFS Data Store. The Flatten component's Collection and Structure properties are also set automatically based on the Attribute definition. That leaves just the "Include Nulls" property to be set manually, based on whether null complex data should be processed. Some technologies, particularly Spark, can drop records containing null complex attributes.

Table 9-3 Properties for Flatten Component

| Flatten Property | Description | Automatically detected for Hive and HDFS (if reverse-engineering was used) |

|---|---|---|

|

Include Nulls |

Indicates whether null complex data should be processed. |

No |

|

Collection |

Indicates whether the Complex Type attribute is a collection such as an array. |

Yes |

|

Structure |

Indicates whether the Complex Type is an object, record, or structure, and not just a collection of scalar types. |

Yes |

Each Flatten component can flatten only one Complex Type attribute. You can chain Flatten components together to flatten more than one attribute, or where nested datatypes are concerned, to access the next level of nesting.

For more information using the Flatten component and the KMs associated with it, see the following sections:

-

Creating Flatten Components in Developing Integration Projects with Oracle Data Integrator.

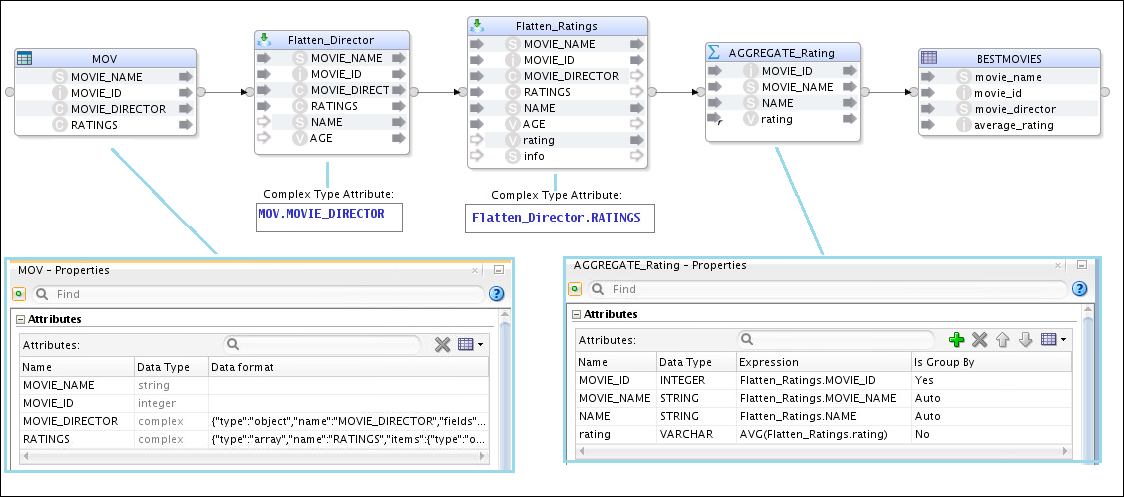

The example in Using Flatten for Complex Types in Hive Mappings shows an example of chaining Flatten components. The Flatten_Director Complex Type Attribute is set to the upstream MOVIE_DIRECTOR attribute from the MOV node. At that point, NAME and AGE are created in Flatten_Director automatically. Flatten_Ratings follows Flatten_Director and uses Flatten_Director.RATINGS as the Complex Type Attribute, after which rating and info attributes are automatically added.

Hive Complex Datatypes

Hive has the following complex data types:

-

Arrays

-

Maps

-

Structs

-

Union

Using Flatten for Complex Types in Hive Mappings

The Flatten component is used to handle Complex Types in Hive mappings.

Consider the JSON snippet below which is a source with two Complex Types:

-

A MOVIE_DIRECTOR field which is a structure consisting of Name and Age.

-

A RATINGS field which is an array of ratings, with each rating comprising a rating and an info field.

{"MOVIE_ID":11,"MOVIE_NAME":"The Lobster","MOVIE_DIRECTOR":{"NAME":"Yorgos Lanthimos","AGE":43},"RATINGS":[{"rating":7,"info":"x"},{"rating":5,"info":"x"}]}

{"MOVIE_ID":12,"MOVIE_NAME":"Green Room","MOVIE_DIRECTOR":{"NAME":"Jeremy Saulnier","AGE":40},"RATINGS":[{"rating":4,"info":"x"},{"rating":3,"info":"x"}]}

{"MOVIE_ID":13,"MOVIE_NAME":"Louder Than Bombs","MOVIE_DIRECTOR":{"NAME":"Joachin Trier","AGE":42},"RATINGS":[{"rating":1,"info":"x"},{"rating":2,"info":"x"}]}

...The Hive table that is to be populated requires the NAME to be extracted from the MOVIE_DIRECTOR complex structure along with the average of the rating values from the RATINGS array.

To accomplish this, a mapping is required which flattens the

-

MOVIE_DIRECTOR field so that the NAME can be extracted.

-

RATINGS array so that the average of the individual ratings for each row can be calculated.

The mapping is as shown in the figure below.

Figure 9-1 Mapping to flatten Complex Types

Description of "Figure 9-1 Mapping to flatten Complex Types"

The populated Hive table appears as shown in the figure below.

Cassandra Complex Datatypes

Cassandra has the following complex data types:

-

Map

-

Set

-

List

-

Tuple

-

User-Defined Type

Map

A map is a set of key-value pairs, where the keys are unique. The map is sorted by its keys.

Set

A set is a collection of unique values. The set is sorted based on the values.

List

A list is a collection of non-unique values which are ordered by their position in the list.

Tuple

A Tuple comprises fixed-length sets of typed positional fields. It can accommodate 32768 fields, and can be used as an alternative to a User-Defined Type.

User-Defined Type

User-Defined Type (UDT) is a complex data type that can be created, updated, and deleted.

Cassandra can be used with LKM Spark to Cassandra and generic SQL KMs.

The Apache Cassandra DataDirect JDBC Driver handles Complex Types differently compared to the Hive JDBC Driver. Due to this, mappings that use Cassandra Complex Types are written slightly differently compared to Hive mappings. To demonstrate this, consider a table defined in Cassandra with the use of UDTs and Lists.

-

You can access UDTs through the Apache Cassandra DataDirect JDBC Driver because the JDBC Driver flattens the UDTs and projects the scalar types as regular columns of the table. This negates the need to use the Flatten Component on the mapping. You will see that the extra columns have been flattened automatically in the Cassandra Data Stores, and can be used directly in mappings.

-

You can access collections of values (Lists in Cassandra; Arrays in Hive) through the Apache Cassandra DataDirect JDBC Driver. The Apache Cassandra DataDirect JDBC Driver normalizes the structure and projects the collection type through a child table. When you reverse-engineer the original table, additional data stores will be created for the collections. The mapping then needs to join these two tables.

-

You cannot access Nested Types in ODI.

How ODI deals with Cassandra Lists and User Defined Types

This is an example that shows how ODI deals with Cassandra Lists and User Defined Types.

Consider a schema, movieRating2 containing a UDT and a List:

-

A

movie_directorattribute which is a UDT consisting of Name and Age. -

A

ratingsattribute which is a list of integers.

create type director_object (name text, age int);

create table movieRating2 (movie_name text, movie_id int PRIMARY KEY, movie_director frozen<director_object>, ratings list<int);

INSERT INTO movierating2 (movie_id, movie_name, movie_director, ratings)

VALUES (1,'Lord of the Rings',('Peter Jackson',32),[1,2]);

INSERT INTO movierating2 (movie_id, movie_name, movie_director, ratings)

VALUES (2,'King Kong',('Peter Jackson',32),[1,2]);

INSERT INTO movierating2 (movie_id, movie_name, movie_director, ratings)

VALUES (3,'District 9',('Peter Jackson',32),[1,3]);

INSERT INTO movierating2 (movie_id, movie_name, movie_director, ratings)

VALUES (4,'The Birds',('Alfred Hitchcock',140),[1,4]);

INSERT INTO movierating2 (movie_id, movie_name, movie_director, ratings)

VALUES (5,'Psycho',('Alfred Hitchcock',140),[1,2,8]);

INSERT INTO movierating2 (movie_id, movie_name, movie_director, ratings)

VALUES (6,'Local Hero',('Bill Forsyth',56),[1,9]);

INSERT INTO movierating2 (movie_id, movie_name, movie_director, ratings)

VALUES (7,'Restless Natives',('Michael Hoffman',45),[1]);

INSERT INTO movierating2 (movie_id, movie_name, movie_director, ratings)

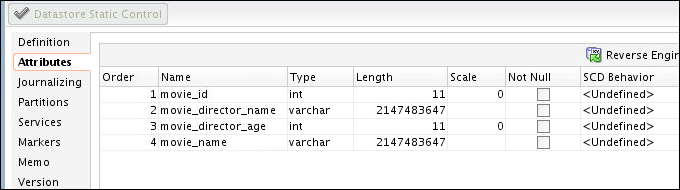

VALUES (8,'Trainspotting',('Danny Boyle',12),[1,4]);On reverse-engineering the movierating2 table in ODI, it appears as shown in the figure below.

Figure 9-3 Reverse-engineered movierating2 table

Description of "Figure 9-3 Reverse-engineered movierating2 table"

This table does not contain the ratings attribute. However, the JDBC driver exposes a virtual table called movierating2_ratings.

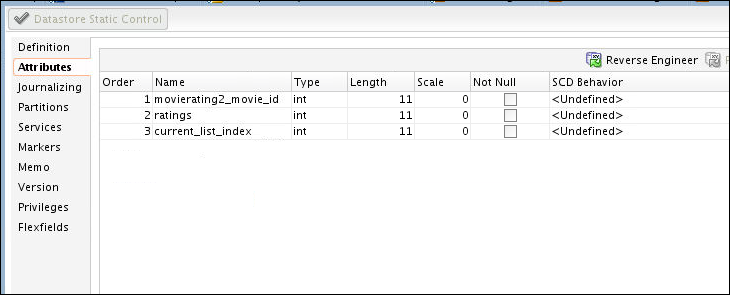

On reverse-engineering this virtual table in ODI, it appears as shown in the figure below.

Figure 9-4 Reverse-engineered movierating2_ratings table

Description of "Figure 9-4 Reverse-engineered movierating2_ratings table"

In this example, the target HDFS file requires that the name is extracted from the movie_director UDT along with the average of the values from the ratings list.

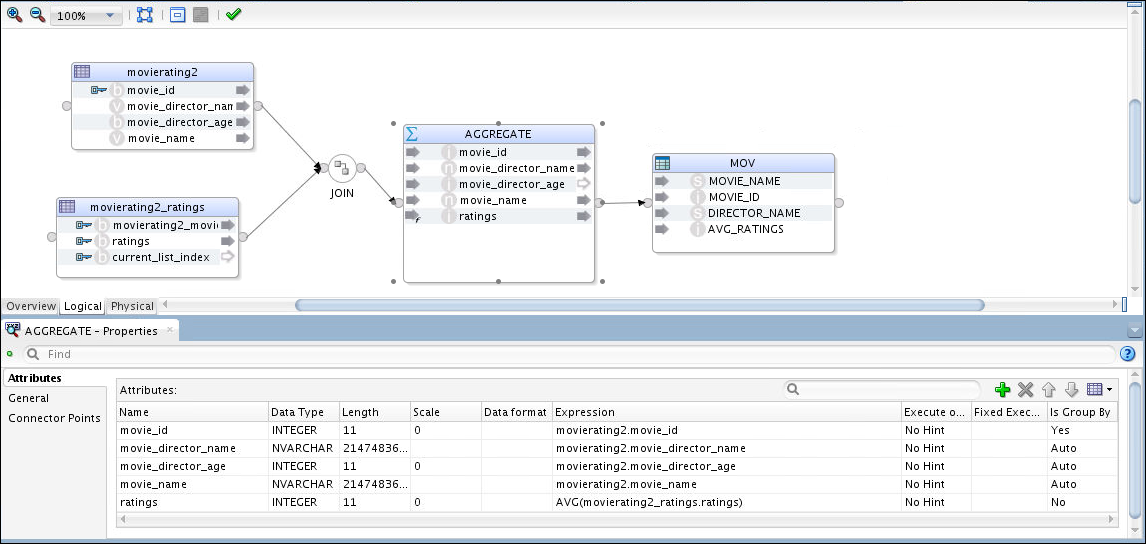

To accomplish this, a mapping is required which joins the movierating2 and movierating2_ratings tables, and averages the ratings for each movie.

The mapping is as shown in the figure below:

Figure 9-5 Mapping to join movierating2 and movierating2_ratings tables

Description of "Figure 9-5 Mapping to join movierating2 and movierating2_ratings tables"

The key point here is that for Cassandra Complex Types, the Flatten component is not required to access the complex fields in the mapping. You'll notice that the similar Hive mapping in Using Flatten for Complex Types in Hive Mappings is designed differently.

After running the mapping, the target HDFS file looks like this:

hdfs dfs -cat AvgRating.json/part-r-00000-4984bb6c-dacb-4ce1-a474-dc5641385e9f

{"MOVIE_NAME":"District 9","MOVIE_ID":3,"DIRECTOR_NAME":"Peter Jackson","AVG_RATINGS":2}

{"MOVIE_NAME":"Lord of the Rings","MOVIE_ID":1,"DIRECTOR_NAME":"Peter Jackson","AVG_RATINGS":1}

{"MOVIE_NAME":"The Birds","MOVIE_ID":4,"DIRECTOR_NAME":"Alfred Hitchcock","AVG_RATINGS":2}

{"MOVIE_NAME":"Restless Natives","MOVIE_ID":7,"DIRECTOR_NAME":"Michael Hoffman","AVG_RATINGS":1}

hdfs dfs -cat AvgRating.json/part-r-00001-4984bb6c-dacb-4ce1-a474-dc5641385e9f

{"MOVIE_NAME":"Psycho","MOVIE_ID":5,"DIRECTOR_NAME":"Alfred Hitchcock","AVG_RATINGS":3}

{"MOVIE_NAME":"Trainspotting","MOVIE_ID":8,"DIRECTOR_NAME":"Danny Boyle","AVG_RATINGS":2}

{"MOVIE_NAME":"King Kong","MOVIE_ID":2,"DIRECTOR_NAME":"Peter Jackson","AVG_RATINGS":1}

{"MOVIE_NAME":"Local Hero","MOVIE_ID":6,"DIRECTOR_NAME":"Bill Forsyth","AVG_RATINGS":5}