2 Hadoop Data Integration Concepts

Hadoop Data Integration with Oracle Data Integrator

When you implement a big data processing scenario, the first step is to load the data into Hadoop. The source data typically resides in files or SQL databases.

When the data has been aggregated, condensed, or processed into a smaller data set, you can load it into an Oracle database, another relational database, HDFS, HBase, or Hive for further processing and analysis. Oracle Loader for Hadoop is recommended for optimal loading into an Oracle database.

After the data is loaded, you can validate and transform it by using Hive, Pig, or Spark, much as you would use SQL. You can perform data validation (such as checking for NULL values and primary keys) and transformations (such as filtering, aggregations, set operations, and derived tables). You can also include customized procedural snippets (scripts) for processing the data.
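The kinds of validation and transformation named above can be illustrated with plain SQL. The following is a minimal sketch that uses Python's built-in `sqlite3` module purely as a stand-in for Hive; the `orders` table, its columns, and the sample rows are hypothetical, and this is not the code that ODI generates.

```python
import sqlite3

# Hypothetical sample data; table and column names are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "acme", 120.0), (2, "acme", 80.0), (2, "globex", 50.0), (3, None, 10.0)],
)

# Validation: rows with a NULL in a mandatory column.
null_rows = conn.execute(
    "SELECT order_id FROM orders WHERE customer IS NULL"
).fetchall()

# Validation: key values that occur more than once (a primary key violation).
duplicate_keys = conn.execute(
    "SELECT order_id FROM orders GROUP BY order_id HAVING COUNT(*) > 1"
).fetchall()

# Transformation: filter out rows without a customer, then aggregate per customer.
totals = conn.execute(
    """
    SELECT customer, SUM(amount) AS total
    FROM orders
    WHERE customer IS NOT NULL
    GROUP BY customer
    ORDER BY customer
    """
).fetchall()
```

With the sample rows above, `null_rows` flags order 3 and `duplicate_keys` flags order 2, while `totals` holds one summed amount per customer.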

A Kafka cluster consists of one or more Kafka brokers that handle and store messages. Messages are organized into topics and physically broken down into topic partitions. Kafka producers connect to a cluster and feed messages into a topic. Kafka consumers connect to a cluster and receive messages from a topic. Although messages on a specific topic need not all have the same message format, it is good practice to use only a single message format per topic. Kafka is integrated into ODI as a new technology.
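To make the relationship between topics, partitions, producers, and consumers concrete, here is a small in-memory model. It is a toy sketch only: `ToyBroker` and its methods are hypothetical names, not the Kafka client API, and the CRC32-based partition choice is an assumption made for illustration rather than Kafka's actual partitioner.

```python
import zlib
from collections import defaultdict


class ToyBroker:
    """In-memory stand-in for a Kafka cluster; not the Kafka client API."""

    def __init__(self, partitions_per_topic=3):
        self.partitions_per_topic = partitions_per_topic
        # topic name -> list of partitions; each partition is an append-only list
        self.topics = defaultdict(
            lambda: [[] for _ in range(self.partitions_per_topic)]
        )

    def produce(self, topic, key, value):
        """Append a message to the partition chosen from the key (assumed CRC32 hash)."""
        partition = zlib.crc32(key.encode("utf-8")) % self.partitions_per_topic
        self.topics[topic][partition].append(value)
        return partition

    def consume(self, topic, partition, offset=0):
        """Return the messages of one partition, starting at the given offset."""
        return self.topics[topic][partition][offset:]


broker = ToyBroker()
# Both messages use the same key, so they land in the same partition, in order.
p = broker.produce("clicks", "user-1", '{"page": "/home"}')
broker.produce("clicks", "user-1", '{"page": "/cart"}')
messages = broker.consume("clicks", p)
```

Both messages on the `clicks` topic use the same JSON shape, in line with the single-format-per-topic practice.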

For more information, see Integrating Hadoop Data.

Generate Code in Different Languages with Oracle Data Integrator

Oracle Data Integrator can generate code for multiple languages. For Big Data, this includes HiveQL, Pig Latin, Spark RDD, and Spark DataFrames.

The style of code is primarily determined by the choice of the data server used for the staging location of the mapping.

It is recommended to run Spark applications on YARN. Following this recommendation, ODI supports only yarn-client and yarn-cluster mode execution and has introduced a run-time check.
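A run-time check of this kind might look like the sketch below. This is a hypothetical illustration, not ODI's implementation: the function name and the dictionary of data server properties are invented here, and it is an assumption that setting `odi.spark.enableUnsupportedSparkModes` to `true` bypasses the check.

```python
SUPPORTED_MODES = {"yarn-client", "yarn-cluster"}
OVERRIDE_PROPERTY = "odi.spark.enableUnsupportedSparkModes"


def check_spark_mode(mode, dataserver_properties):
    """Raise ValueError if `mode` is unsupported and the override is not set.

    Hypothetical helper; the override semantics are an assumption.
    """
    if mode in SUPPORTED_MODES:
        return
    override = str(dataserver_properties.get(OVERRIDE_PROPERTY, "false"))
    if override.strip().lower() == "true":
        return
    raise ValueError(
        f"Spark mode {mode!r} is not supported; "
        f"expected one of {sorted(SUPPORTED_MODES)}"
    )


# Supported mode: passes without any extra property.
check_spark_mode("yarn-client", {})

# Unsupported mode: passes only when the override property is set.
check_spark_mode("local", {OVERRIDE_PROPERTY: "true"})
```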

odi.spark.enableUnsupportedSparkModes = true

For more information about generating code in different languages and the Pig and Spark component KMs, see the following:

Leveraging Apache Oozie to execute Oracle Data Integrator Projects

Apache Oozie is a workflow scheduler system that helps you orchestrate actions in Hadoop. It is a server-based Workflow Engine specialized in running workflow jobs with actions that run Hadoop MapReduce jobs.

Implementing and running Oozie workflow requires in-depth knowledge of Oozie.

However, Oracle Data Integrator does not require you to be an Oozie expert. With Oracle Data Integrator you can easily define and execute Oozie workflows.

Oracle Data Integrator automatically generates an Oozie workflow definition when you execute an integration project (package, procedure, mapping, or scenario) on an Oozie engine. The generated Oozie workflow definition is deployed to and executed on an Oozie workflow system. You can also choose to only deploy the Oozie workflow, either to validate its content or to execute it at a later time.

Information from the Oozie logs is captured and stored in the ODI repository along with links to the Oozie UIs. This information is available for viewing within ODI Operator and Console.

For more information, see Executing Oozie Workflows.

Oozie Workflow Execution Modes

You can execute Oozie workflows in either Task mode or Session mode. Task mode is the default mode for Oozie workflow execution.

The two modes work as follows:

- TASK

  Task mode generates an Oozie action for every ODI task. This is the default mode.

  The task mode cannot handle the following:

  - KMs with scripting code that spans across multiple tasks.
  - KMs with transactions.
  - KMs with file system access that cannot span file access across tasks.
  - ODI packages with looping constructs.

- SESSION

  Session mode generates an Oozie action for the entire session.

  ODI automatically uses this mode if any of the following conditions is true:

  - Any task opens a transactional connection.
  - Any task has scripting.
  - A package contains loops.

    Loops in a package are not supported by Oozie engines and may not function properly in terms of execution and/or session log content retrieval, even when running in SESSION mode.

  Note: This mode is recommended for most of the use cases.

By default, the Oozie Runtime Engines use the Task mode, that is, the default value of the OOZIE_WF_GEN_MAX_DETAIL property for the Oozie Runtime Engines is TASK.

You can configure an Oozie Runtime Engine to use Session mode, irrespective of whether the conditions mentioned above are satisfied or not. To force an Oozie Runtime Engine to generate session level Oozie workflows, set the OOZIE_WF_GEN_MAX_DETAIL property for the Oozie Runtime Engine to SESSION.
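The mode selection rules described in this section can be summarized in a few lines of code. The sketch below is illustrative only: `SessionTraits`, `effective_mode`, and `planned_actions` are hypothetical names rather than ODI APIs, and the action names are placeholders.

```python
from dataclasses import dataclass


@dataclass
class SessionTraits:
    """Hypothetical summary of what an ODI session contains; not an ODI API."""
    has_transactional_connection: bool = False
    has_scripting: bool = False
    has_loops: bool = False


def effective_mode(traits, oozie_wf_gen_max_detail="TASK"):
    """Return the mode used, given the engine property and the session contents."""
    # Setting the property to SESSION forces session-level workflows.
    if oozie_wf_gen_max_detail == "SESSION":
        return "SESSION"
    # Otherwise, Session mode is used automatically if any condition holds.
    if (
        traits.has_transactional_connection
        or traits.has_scripting
        or traits.has_loops
    ):
        return "SESSION"
    return "TASK"


def planned_actions(task_names, mode):
    """TASK: one Oozie action per ODI task. SESSION: one action for the session."""
    if mode == "TASK":
        return list(task_names)
    return ["session"]


mode = effective_mode(SessionTraits(has_scripting=True))
actions = planned_actions(["load", "transform", "cleanup"], mode)
```

Here a single scripted task is enough to switch the whole session to one Oozie action, even though the engine property is left at its default.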

For more information, see Oozie Runtime Engine Properties.

Lambda Architecture

Lambda architecture is a data-processing architecture designed to handle massive quantities of data by taking advantage of both batch and stream processing methods.

Lambda architecture has the following layers:

- Batch Layer: In this layer, fresh data is loaded into the system at regular intervals, with perfect accuracy and complete detail. New data is processed together with all available data when generating views.
- Speed Layer: In this layer, real-time data is streamed into the system for immediate availability at the expense of total completeness (which is resolved in the next Batch run).
- Serving Layer: In this layer, views are built to join the data from the Batch and Speed layers.
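The interplay of the three layers can be sketched with a simple page-count example. This is a minimal illustration under assumed data shapes (events as dictionaries with a `page` key); the function names are hypothetical and do not correspond to any ODI component.

```python
from collections import Counter


def batch_view(all_events):
    """Batch layer: recompute the view from all data available at batch time."""
    return Counter(event["page"] for event in all_events)


def speed_view(recent_events):
    """Speed layer: count only events that arrived since the last batch run."""
    return Counter(event["page"] for event in recent_events)


def serving_view(batch, speed):
    """Serving layer: combine the batch and speed views into one result."""
    return batch + speed


# Events already covered by the last batch run.
history = [{"page": "/home"}, {"page": "/home"}, {"page": "/cart"}]
# Events streamed in afterwards, not yet part of any batch view.
recent = [{"page": "/home"}]

view = serving_view(batch_view(history), speed_view(recent))
```

After the next batch run, the recent events become part of the batch view and the speed view starts again from empty.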

With ODI Mappings, you can create a single Logical Mapping for which you can provide multiple Physical Mappings. This is ideal for implementing Lambda Architecture with ODI.

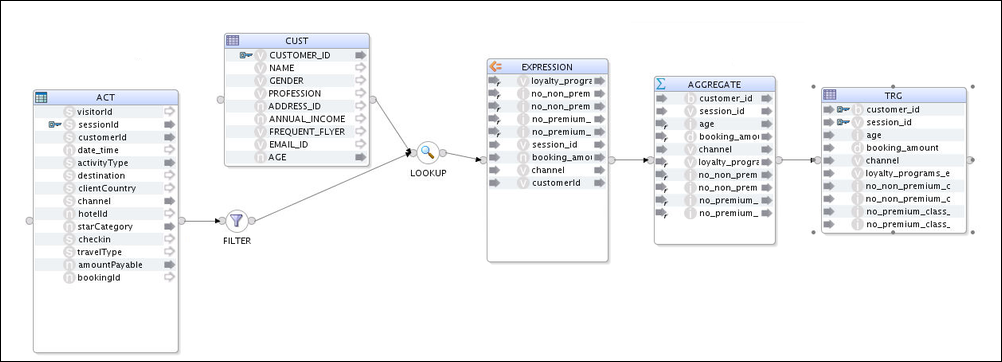

Logical Mapping

In the following figure, the ACT datastore is defined as a Generic data store. The same applies to the TRG node. These can be created by copying and pasting from a reverse-engineered data store with the required attributes.

Figure 2-1 Logical Mapping in Lambda Architecture

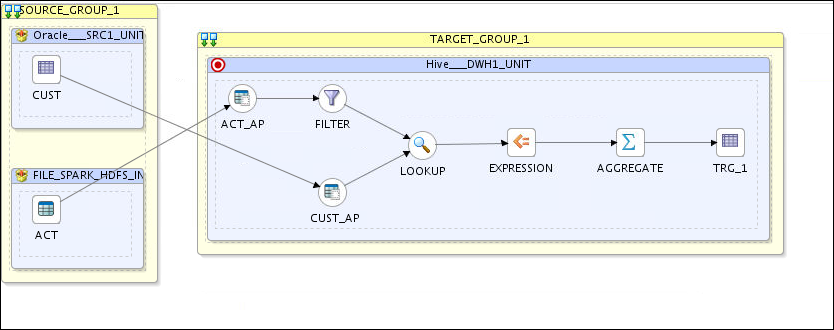

Batch Physical Mapping

As seen in the figure below, for Batch Physical Mapping, ACT has a File Data Store and TRG_1 has a Hive Data Store.

Figure 2-2 Batch Physical Mapping in Lambda Architecture

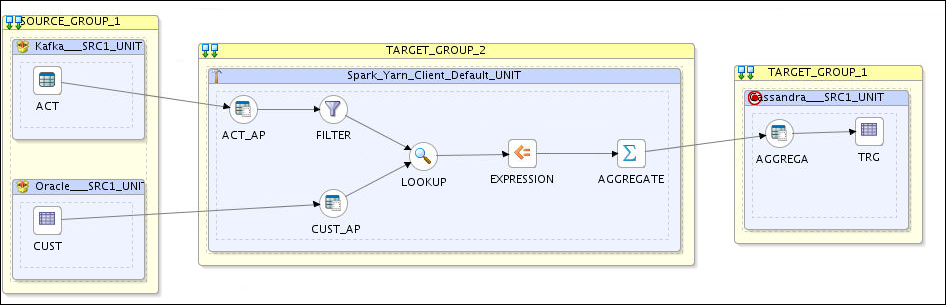

Streaming Physical Mapping

As seen in the figure below, Streaming Physical Mapping has a Kafka Data Store for ACT and a Cassandra Data Store for TRG.

Figure 2-3 Streaming Physical Mapping in Lambda Architecture

Any changes made to the logical mapping will be synced to each physical mapping, thus reducing the complexity of Lambda architecture implementations.
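The idea of one logical mapping shared by several physical mappings can be modeled as follows. This is a conceptual sketch, not the ODI SDK: the class names and the attribute names `id`, `amount`, and `ts` are hypothetical, and the target node is called TRG in both physical mappings for simplicity.

```python
from dataclasses import dataclass, field


@dataclass
class LogicalMapping:
    """Technology-neutral definition: which node feeds which, with which attributes."""
    source: str
    target: str
    attributes: list = field(default_factory=list)


@dataclass
class PhysicalMapping:
    """Binds the nodes of a shared logical mapping to concrete technologies."""
    name: str
    logical: LogicalMapping  # shared reference, not a copy
    technologies: dict       # node name -> technology

    def describe(self):
        src = self.technologies[self.logical.source]
        tgt = self.technologies[self.logical.target]
        cols = ", ".join(self.logical.attributes)
        return f"{self.name}: {src} -> {tgt} [{cols}]"


logical = LogicalMapping("ACT", "TRG", ["id", "amount"])
batch = PhysicalMapping("Batch", logical, {"ACT": "File", "TRG": "Hive"})
streaming = PhysicalMapping("Streaming", logical, {"ACT": "Kafka", "TRG": "Cassandra"})

# A change to the logical mapping is visible in every physical mapping.
logical.attributes.append("ts")
batch_description = batch.describe()
streaming_description = streaming.describe()
```

Because both physical mappings hold a reference to the same logical definition, the added attribute appears in the batch and streaming descriptions alike.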