1.3.9.12 Tokenize

Tokenize is a sub-processor of Parse. The Tokenize sub-processor performs the first step of parsing. It analyzes the characters, and sequences of characters, in the data, and uses them to break the data up syntactically into an initial set of Base Tokens.

Note that in the context of parsing, a Token is any 'unit' of data, as understood by the Parse processor. The Tokenize step forms the first set of Tokens, termed Base Tokens. Base Tokens are typically sequences of the same type of character (such as letters or numbers) separated by other types of character (such as punctuation or whitespace).

For example, the Tokenize step breaks the following sample input data into base tokens, as shown in the table, using the processor's default rules:

Address1

10 Harwood Road

3Lewis Drive

| Address1 | Base Tokens | Pattern of Base Tokens |
|---|---|---|
| 10 Harwood Road | "10", tagged 'N' to indicate a number; " ", tagged '_' to indicate whitespace; "Harwood", tagged 'A' to indicate a word; " ", tagged '_' to indicate whitespace; "Road", tagged 'A' to indicate a word | N_A_A |
| 3Lewis Drive | "3", tagged 'N' to indicate a number; "Lewis", tagged 'A' to indicate a word; " ", tagged '_' to indicate whitespace; "Drive", tagged 'A' to indicate a word | NA_A |
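The grouping shown in the table can be sketched in a few lines of Python. This is a simplified illustration, not EDQ's implementation: it assumes that digits are tagged 'N', letters 'A' and whitespace '_', that any other character is tagged as itself, and it ignores the case-transition options described under Configuration. The names `char_type`, `base_tokens` and `pattern` are hypothetical.

```python
def char_type(ch: str) -> str:
    """Coarse character type used by this sketch (an assumption, not the real map)."""
    if ch.isdigit():
        return "NUMERIC"
    if ch.isalpha():
        return "ALPHA"
    if ch.isspace():
        return "WHITESPACE"
    return "OTHER"

# Assumed base token tags per coarse type; other characters are tagged as themselves.
TAGS = {"NUMERIC": "N", "ALPHA": "A", "WHITESPACE": "_"}

def base_tokens(text: str) -> list[tuple[str, str]]:
    """Split text wherever the character type changes; return (token, tag) pairs."""
    tokens: list[tuple[str, str]] = []
    current, current_type = "", None
    for ch in text:
        t = char_type(ch)
        if current and t == current_type and t != "OTHER":
            current += ch  # same type: extend the current base token
        else:
            if current:
                tokens.append((current, TAGS.get(current_type, current)))
            current, current_type = ch, t  # type change: start a new base token
    if current:
        tokens.append((current, TAGS.get(current_type, current)))
    return tokens

def pattern(text: str) -> str:
    """Concatenate the tags of the base tokens, e.g. 'N_A_A'."""
    return "".join(tag for _, tag in base_tokens(text))

for address in ["10 Harwood Road", "3Lewis Drive"]:
    print(address, "->", base_tokens(address), pattern(address))
```

Running it prints the patterns N_A_A and NA_A for the two sample addresses, matching the table.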

However, you may want to ignore certain Base Tokens in further analysis of the data. For example, you may not want to classify the whitespace characters above, and may want to ignore them when matching resolution rules. You can do this by assigning the characters you want to ignore a character type of WHITESPACE or DELIMITER in the Base Tokenization Reference Data. See Configuration below.

Use Tokenize to gain an initial understanding of the contents of the data attributes that you want to Parse, and to control how the data is understood. The default tokenization rules are usually sufficient to gain this understanding and often need no changes. Refine them only if needed, for example when a specific character has a particular meaning in your data and you want to tag it differently from other characters.

Configuration

The tokenization rules consist of the following Options:

| Option | Type | Purpose | Default Value |
|---|---|---|---|
| Character Map | Character Token Map | Maps characters (by Unicode reference) to a character tag, a grouped character tag, and a character type. See Note below. | *Base Tokenization Map |
| Split lower case to upper case | Yes/No | Splits sequences of letters where there is a change from lower case to upper case into separate tokens (for example, to split "HarwoodRoad" into two base tokens, "Harwood" and "Road"). | Yes |
| Split upper case to lower case | Yes/No | Splits sequences of letters where there is a change from upper case to lower case into separate tokens (for example, to split "SMITHjohn" into two base tokens, "SMITH" and "john"). | No |
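The two Yes/No options act on runs of letters. The following Python sketch shows their effect on the examples in the table. `split_alpha` and its keyword arguments are hypothetical names whose defaults mirror the documented defaults (Yes for lower to upper, No for upper to lower); it illustrates the rule rather than reproducing the product's code.

```python
def split_alpha(word, lower_to_upper=True, upper_to_lower=False):
    """Split a run of letters on case transitions.

    lower_to_upper mirrors 'Split lower case to upper case' (default Yes);
    upper_to_lower mirrors 'Split upper case to lower case' (default No).
    """
    if not word:
        return []
    parts = [word[0]]
    for prev, ch in zip(word, word[1:]):
        lower_upper = prev.islower() and ch.isupper()
        upper_lower = prev.isupper() and ch.islower()
        if (lower_to_upper and lower_upper) or (upper_to_lower and upper_lower):
            parts.append(ch)  # a transition the enabled option splits on: new token
        else:
            parts[-1] += ch   # otherwise keep extending the current token

    return parts

print(split_alpha("HarwoodRoad"))                     # ['Harwood', 'Road']
print(split_alpha("SMITHjohn"))                       # ['SMITHjohn']
print(split_alpha("SMITHjohn", upper_to_lower=True))  # ['SMITH', 'john']
```

Turning on the upper-to-lower split also breaks proper-case words: `split_alpha("Michael", upper_to_lower=True)` returns `['M', 'ichael']`, which is why that option defaults to No.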

Note on the Character Map Reference Data

The Reference Data used to tokenize data is of a specific format, and is important to the way Tokenize works.

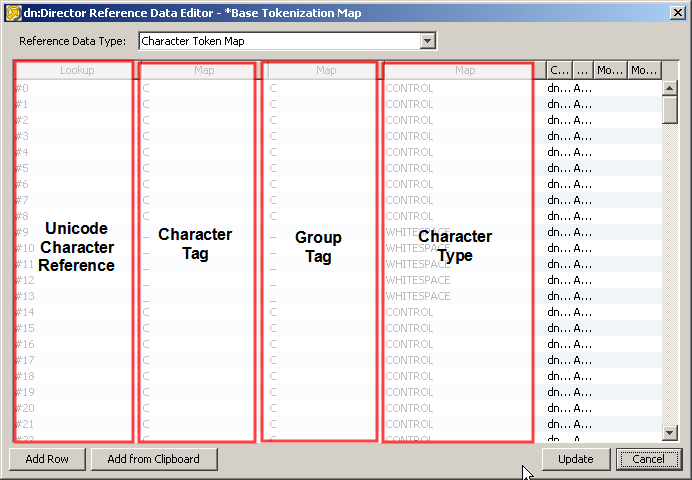

The following screenshot of the default Reference Data explains the purpose of each column. The columns are described below.

Unicode character reference: Tokenize uses the Unicode character reference to identify the character that is mapped to a given character tag in the first step of tokenization. For example, the character reference #32, representing the Space character, is mapped to a character tag of '_' by default.

Note:

The default *Base Tokenization map is designed for use with Latin-1 encoded data, as are the alternative *Unicode Base Tokenization and *Unicode Character Pattern maps. If these maps do not suit the character encoding of the data, you can create and use a new map that takes account of, for example, multi-byte Unicode (hexadecimal) character references.
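Before building a new map, it can help to list the characters in your data that a Latin-1 oriented map would not cover. The Python sketch below assumes, for illustration only, that the map contains entries for code points 0 to 255; the actual coverage of a map should be checked in the Reference Data itself. The function name `unmapped_references` and the sample strings are hypothetical.

```python
def unmapped_references(values, mapped_refs):
    """Return {code point: character} for every character not covered by the map."""
    missing = {}
    for value in values:
        for ch in value:
            ref = ord(ch)  # Unicode character reference, e.g. ord(" ") == 32
            if ref not in mapped_refs:
                missing[ref] = ch
    return missing

# Assumption: a Latin-1 oriented map has entries for code points 0-255 only.
LATIN1_REFS = set(range(256))

found = unmapped_references(["Zoë Street", "Łódź"], LATIN1_REFS)
for ref, ch in sorted(found.items()):
    print(f"#{ref} (U+{ref:04X}) {ch!r}")
```

Here 'ë' and 'ó' fall inside the Latin-1 range, while 'Ł' (#321) and 'ź' (#378) do not, so a custom map would need rows for them.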

Character tag: The Character tag is used in the first step of tokenization to assign each character in the data (identified by Unicode character reference) a given tag - for example all lower case letters might be assigned a character tag of 'a'.

Group tag: The Group tag is used in the second step of tokenization to group sequences of character tags. Sequences of character tags with the same group tag, and the same character type, are grouped to form a single token. For example, the first step of tokenization might tag the data "103" as "NNN" using character tags, but because these three characters in sequence share the same group tag ('N') and the same character type (NUMERIC), they are grouped to form a single Base Token ("103") with a Base Token Tag of 'N'.

Note that the behavior for ALPHA characters is slightly different, because the user can choose whether or not to split tokens where lower case and upper case letters meet. By default, tokens are split on a transition from lower case to upper case, but not on a transition from upper case to lower case. For example, the data "Michael" has the sequence of character tags 'Aaaaaaa', with a transition in character type from ALPHA_UPPERCASE to ALPHA_LOWERCASE after the first letter. If the user leaves the Split upper case to lower case option at its default (No), the data is grouped to form a single Base Token ("Michael") with a Base Token tag of 'A', because the character tags 'A' and 'a' share the same group tag and the data is not split on that change of character type.
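The two steps described in the Character tag and Group tag entries, including the default ALPHA exception, can be sketched in Python as follows. The map excerpt covers only ASCII digits, letters and the space character, and its values are assumptions modelled on the examples in this section rather than a copy of *Base Tokenization. `character_tags`, `joins` and `base_tokens` are hypothetical names.

```python
import string

# Illustrative map excerpt keyed by Unicode character reference:
# code point -> (character tag, group tag, character type). Values are assumptions.
CHAR_MAP = {}
for ch in string.digits:
    CHAR_MAP[ord(ch)] = ("N", "N", "NUMERIC")
for ch in string.ascii_uppercase:
    CHAR_MAP[ord(ch)] = ("A", "A", "ALPHA_UPPERCASE")
for ch in string.ascii_lowercase:
    CHAR_MAP[ord(ch)] = ("a", "A", "ALPHA_LOWERCASE")
CHAR_MAP[32] = ("_", "_", "WHITESPACE")  # #32 is the Space character

def character_tags(text):
    """Step 1: replace each character with its character tag."""
    return "".join(CHAR_MAP[ord(ch)][0] for ch in text)

def joins(prev_type, ctype, split_upper_to_lower):
    """Whether a character of type ctype may extend a token ending in prev_type."""
    if prev_type == ctype:
        return True
    # Default exception: ALPHA_UPPERCASE followed by ALPHA_LOWERCASE does not split.
    # Lower-to-upper transitions always split here, matching that option's default.
    return (not split_upper_to_lower
            and prev_type == "ALPHA_UPPERCASE"
            and ctype == "ALPHA_LOWERCASE")

def base_tokens(text, split_upper_to_lower=False):
    """Step 2: group runs sharing a group tag and a character type into base tokens."""
    tokens = []  # entries: [token text, group tag, type of its last character]
    for ch in text:
        _tag, group, ctype = CHAR_MAP[ord(ch)]
        if tokens and tokens[-1][1] == group and joins(tokens[-1][2], ctype, split_upper_to_lower):
            tokens[-1][0] += ch
            tokens[-1][2] = ctype  # remember the latest type so later transitions are seen
        else:
            tokens.append([ch, group, ctype])
    return [(token, group) for token, group, _ctype in tokens]

print(character_tags("103"), base_tokens("103"))          # NNN [('103', 'N')]
print(character_tags("Michael"), base_tokens("Michael"))  # Aaaaaaa [('Michael', 'A')]
```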

Character type: The Character type is used to split up data. In general, a change of character type causes a split into separate base tokens. For example, the string 'deluxe25ml' will be split into three base tokens - 'deluxe', '25' and 'ml'. These three base tokens will then be tagged according to their character and group tags. The exception to this rule is that, by default, a change of character type from ALPHA_UPPERCASE to ALPHA_LOWERCASE does not cause a split in tokens. This is in order to preserve tokens that are in proper case - for example, not to split 'Michael' into two tokens ('M' and 'ichael').

The user can change this behavior by setting the Split upper case to lower case option to Yes.

The user can also keep all strings of alpha characters together by setting the Split lower case to upper case option to No. With the Split upper case to lower case option left at its default, this keeps 'DelUXE' as one token.
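The character type rule and the two case options can be combined in one small Python function that reproduces the examples in this entry. The `char_type` classification is a simplified assumption (it uses Python's `str.isdigit`, `str.isupper` and `str.islower` rather than the Reference Data), and `split_on_type` is a hypothetical name.

```python
def char_type(ch):
    """Simplified character type modelled on the documented type names."""
    if ch.isdigit():
        return "NUMERIC"
    if ch.isupper():
        return "ALPHA_UPPERCASE"
    if ch.islower():
        return "ALPHA_LOWERCASE"
    if ch.isspace():
        return "WHITESPACE"
    return "PUNCTUATION"

def split_on_type(text, split_lower_to_upper=True, split_upper_to_lower=False):
    """Start a new base token on every change of character type, except for
    case transitions that the corresponding option leaves unsplit."""
    tokens = []
    prev = None
    for ch in text:
        t = char_type(ch)
        if not tokens or (t != prev and not (
                (prev == "ALPHA_UPPERCASE" and t == "ALPHA_LOWERCASE" and not split_upper_to_lower)
                or (prev == "ALPHA_LOWERCASE" and t == "ALPHA_UPPERCASE" and not split_lower_to_upper))):
            tokens.append(ch)  # first character, or a type change that splits
        else:
            tokens[-1] += ch   # same type, or an unsplit case transition
        prev = t
    return tokens

print(split_on_type("deluxe25ml"))                         # ['deluxe', '25', 'ml']
print(split_on_type("Michael"))                            # ['Michael']
print(split_on_type("DelUXE"))                             # ['Del', 'UXE']
print(split_on_type("DelUXE", split_lower_to_upper=False)) # ['DelUXE']
```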

Also, certain characters may be assigned a character type of either WHITESPACE or DELIMITER. These characters can be ignored in later rules that match sequences of tokens. For example, in Reclassify or Resolve, if you want to match a pattern of `<Token A>` followed by `<Token B>`, you may not care whether there are whitespace or delimiter characters between them. Besides WHITESPACE and DELIMITER, the possible character types are NUMERIC, CONTROL, PUNCTUATION, SYMBOL, ALPHA_UPPERCASE, ALPHA_LOWERCASE and UNDEFINED.
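To illustrate why the WHITESPACE and DELIMITER types are useful, the following Python sketch matches a sequence of base token tags while skipping ignorable tokens. It is not how Reclassify or Resolve are implemented; the token triples, the use of "ALPHA" as a stand-in for the two ALPHA types, the treatment of '-' as a DELIMITER, and the function names are all assumptions for illustration.

```python
# Character types treated as ignorable when matching token sequences.
IGNORABLE_TYPES = {"WHITESPACE", "DELIMITER"}

def significant_tags(tokens):
    """Tags of the tokens whose character type is not WHITESPACE or DELIMITER."""
    return [tag for _text, tag, ctype in tokens if ctype not in IGNORABLE_TYPES]

def matches_sequence(tokens, wanted):
    """True if the wanted tags occur consecutively once ignorable tokens are skipped."""
    tags = significant_tags(tokens)
    n = len(wanted)
    return any(tags[i:i + n] == list(wanted) for i in range(len(tags) - n + 1))

# (token text, base token tag, character type); "ALPHA" is a simplification.
spaced = [("Harwood", "A", "ALPHA"), (" ", "_", "WHITESPACE"), ("Road", "A", "ALPHA")]
hyphenated = [("Harwood", "A", "ALPHA"), ("-", "-", "DELIMITER"), ("Road", "A", "ALPHA")]
print(matches_sequence(spaced, ["A", "A"]))      # True
print(matches_sequence(hyphenated, ["A", "A"]))  # True
print(matches_sequence(spaced, ["A", "N"]))      # False
```

Without the filtering step, the tags for "Harwood Road" would be A, _, A, and the two-token pattern A followed by A would not match.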

Note also that the Comment column in the Reference Data identifies the actual character that each Unicode character reference refers to; for example, it tells you that #32 is the Space character.

Using different rules for different input attributes

By default, the same tokenization rules are applied to all the attributes input to the Parse processor, and attribute-specific tokenization rules are not normally required. However, you can override the defaults for an attribute by selecting it on the left-hand side of the pane and selecting the option to Enable attribute-specific settings. This may be needed when you are analyzing many attributes that use different characters as significant separators.

When specifying attribute-specific rules, it is possible to copy the settings for one attribute to another, or to reapply the default 'Global' settings using the Copy From option.
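The relationship between Global settings, attribute-specific settings and the Copy From option can be modelled as a simple lookup with fallback. This Python sketch is purely conceptual: the dictionary keys and the functions `settings_for` and `copy_from` are hypothetical and do not correspond to any EDQ file format or API.

```python
from copy import deepcopy

# Hypothetical settings structure; key names are illustrative only.
GLOBAL_SETTINGS = {
    "character_map": "*Base Tokenization Map",
    "split_lower_to_upper": True,   # documented default: Yes
    "split_upper_to_lower": False,  # documented default: No
}

def settings_for(attribute, attribute_settings):
    """Use attribute-specific settings when they are enabled, else the Global settings."""
    return attribute_settings.get(attribute, GLOBAL_SETTINGS)

def copy_from(source, target, attribute_settings):
    """Give target its own copy of the settings of source ('Global' means the defaults)."""
    if source == "Global":
        chosen = GLOBAL_SETTINGS
    else:
        chosen = settings_for(source, attribute_settings)
    attribute_settings[target] = deepcopy(chosen)

attribute_settings = {}
# Enable attribute-specific settings for POSTCODE only, and change one option.
attribute_settings["POSTCODE"] = dict(GLOBAL_SETTINGS, split_lower_to_upper=False)
copy_from("POSTCODE", "ADDRESS3", attribute_settings)
print(settings_for("ADDRESS1", attribute_settings)["split_lower_to_upper"])  # True
print(settings_for("ADDRESS3", attribute_settings)["split_lower_to_upper"])  # False
```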

Example

In this example, the default rules are used to tokenize some address data, with the following results:

(Note that in this case leading and trailing whitespace was trimmed from each attribute using the Trim Whitespace processor before parsing.)

The following table shows a summary of each distinct pattern of Base Tokens across all input attributes.

| ADDRESS1.trimmed | ADDRESS2.trimmed | ADDRESS3.trimmed | POSTCODE.trimmed | Count | % |
|---|---|---|---|---|---|
| `<A>_<A><,>` | `<A>` | [Null] | `<A><N>_<N><A>` | 119 | 5.9 |
| `<A>_<A><,>_<A>_<A>` | `<A>` | [Null] | `<A><N>_<N><A>` | 95 | 4.7 |
| `<A>_<A><,>` | `<A>` | `<A>` | `<A><N>_<N><A>` | 73 | 3.6 |
| `<A>_<A><,>_<A>_<A>` | `<A>` | `<A>` | `<A><N>_<N><A>` | 58 | 2.9 |
| `<N>_<A>_<A><,>` | `<A>` | [Null] | `<A><N>_<N><A>` | 55 | 2.7 |
| `<A>_<A><,>_<A>` | `<A>` | [Null] | `<A><N>_<N><A>` | 49 | 2.4 |
| `<A>_<A><,>` | `<A>` | `<A><N>_<N><A>` | `<A><N>_<N><A>` | 40 | 2.0 |
| `<N>_<A>_<A><,>` | `<A>` | `<A>` | `<A><N>_<N><A>` | 35 | 1.7 |

The following table shows a drilldown on the top Base Token pattern:

| ADDRESS1.trimmed | ADDRESS2.trimmed | ADDRESS3.trimmed | POSTCODE.trimmed |
|---|---|---|---|
| Tempsford Hall, | Sandy | [Null] | SG19 2DB |
| West Thurrock, | Purfleet | [Null] | RM19 1PA |
| Hayes Lane, | Stourbridge | [Null] | DY9 8PA |
| Middleton Road, | Oswestry | [Null] | SY11 2RB |
| Freshwater Road, | Dagenham | [Null] | RM8 1RU |
| College Road, | Birmingham | [Null] | B8 3TE |
| Ranelagh Gdns, | London | [Null] | SW6 3PR |
| Trumpington Road, | Cambridge, | [Null] | CB2 2AG |
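The summary table counts each distinct combination of per-attribute Base Token patterns. The following Python sketch outlines that computation under simplified, assumed tagging rules (letters `<A>`, digits `<N>`, whitespace runs `_`, any other character tagged as itself), using three records from the drilldown with `None` standing for [Null]. The function names are hypothetical, and the percentages in the real summary are relative to all input records, which are not reproduced here.

```python
from collections import Counter

def base_pattern(value):
    """Angle-bracket pattern of a value's base tokens, e.g. 'DY9 8PA' -> '<A><N>_<N><A>'."""
    if value is None:
        return "[Null]"
    parts, prev = [], None
    for ch in value:
        # Assumed rules: letters -> A, digits -> N, whitespace -> _, others tagged as themselves.
        kind = "A" if ch.isalpha() else "N" if ch.isdigit() else "_" if ch.isspace() else ch
        if kind == prev and kind in ("A", "N", "_"):
            continue  # same type as the previous character: still the same base token
        parts.append("_" if kind == "_" else f"<{kind}>")
        prev = kind
    return "".join(parts)

def summarize(rows):
    """Count each distinct combination of per-attribute patterns, with its percentage."""
    counts = Counter(tuple(base_pattern(v) for v in row) for row in rows)
    total = sum(counts.values())
    return [(combo, n, round(100 * n / total, 1)) for combo, n in counts.most_common()]

rows = [
    ("Hayes Lane,", "Stourbridge", None, "DY9 8PA"),
    ("College Road,", "Birmingham", None, "B8 3TE"),
    ("West Thurrock,", "Purfleet", None, "RM19 1PA"),
]
for combo, n, pct in summarize(rows):
    print(combo, n, pct)
```

All three records produce the top pattern from the summary table, so the sketch reports a single combination with a count of 3.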

The next step in configuring a Parse processor is to Classify data.