3 Launching and Terminating Oracle Stream Analytics

Once you have completed the post-installation tasks, you are ready to launch Oracle Stream Analytics and start using it. Launching and terminating Oracle Stream Analytics each require only a simple command.

Launching Oracle Stream Analytics

After you have installed Oracle Stream Analytics, the next step is to start the Oracle Stream Analytics server, which launches the application.

To launch Oracle Stream Analytics and start the server:

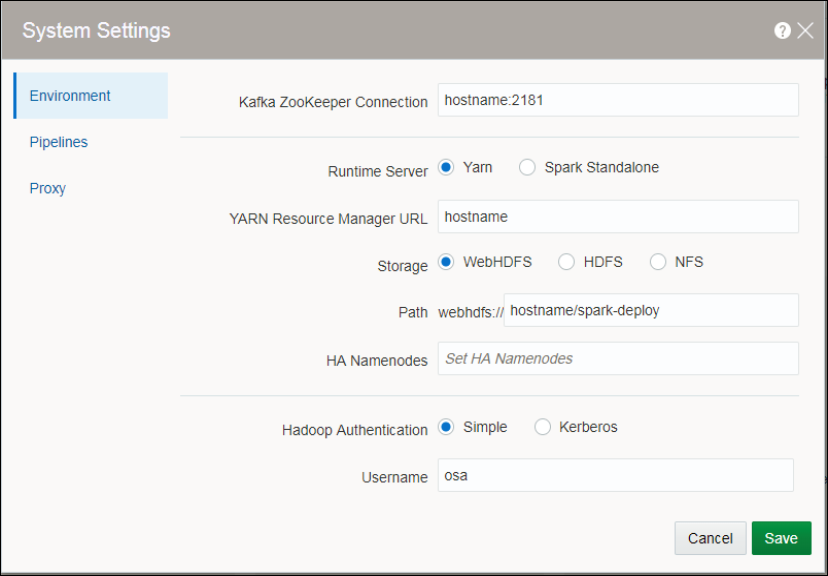

Setting up Runtime for Oracle Stream Analytics Server

Before you start using Oracle Stream Analytics, you need to specify the runtime server, environment, and node details. You must complete this procedure immediately after you launch Oracle Stream Analytics for the first time.

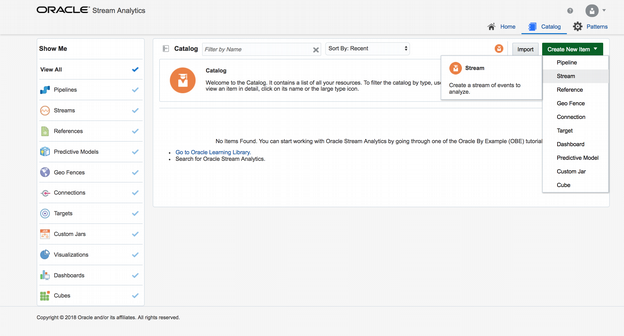

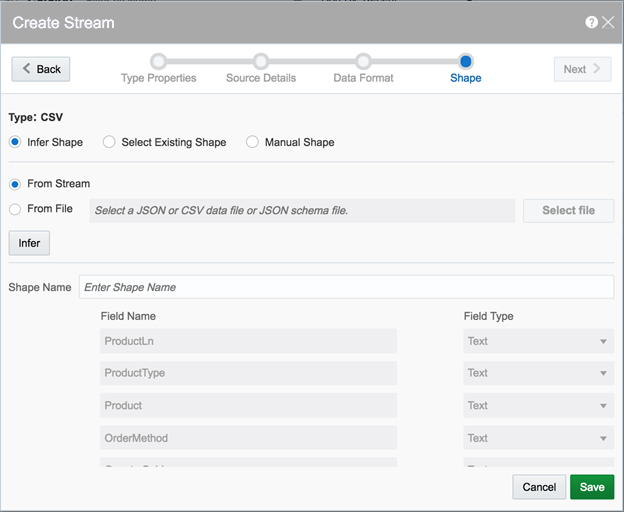

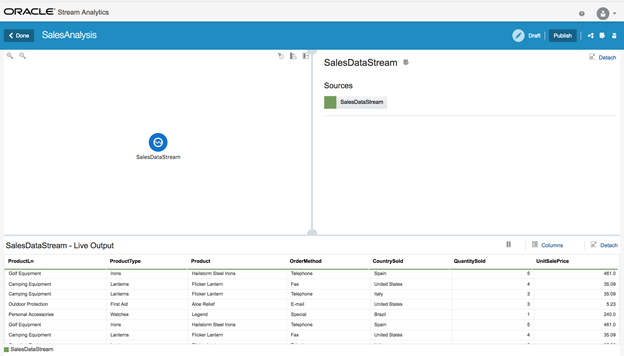

Validating Data Flow to Oracle Stream Analytics

After you have configured Oracle Stream Analytics with the runtime details, you need to verify that Oracle Stream Analytics detects and correctly reads the sample data.