2 Launching and Terminating GoldenGate Stream Analytics

Once you have completed the post-installation tasks, you are ready to launch GoldenGate Stream Analytics (GGSA) and start using it. You launch and terminate GGSA by running simple shell scripts.

2.1 Retaining https and Disabling http

- By default, the GGSA web application is available on both http (port 9080) and https (port 9443). Follow the procedure below if you intend to disable http.

- Edit the file osa-base/start.d/http.ini.

- Comment out the http module line as follows:

##--module=http

- Start the GGSA web server by running osa-base/bin/start-osa.sh.
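If you prefer to script the edit, the following is a minimal sketch, assuming a Bash shell and that http.ini contains the active module line exactly as --module=http. The function name disable_http is hypothetical, not part of GGSA. It keeps a .bak copy and prefixes only the matching line with ##, leaving every other line unchanged.

```shell
#!/usr/bin/env bash
# Minimal sketch: disable the http connector by commenting out the module line.
# Assumes the active line in http.ini reads exactly "--module=http".
set -euo pipefail

# disable_http is a hypothetical helper name, not part of GGSA.
disable_http() {
  if [ "$#" -ne 1 ]; then
    echo "usage: disable_http path/to/http.ini" >&2
    return 2
  fi
  local ini="$1"
  # Keep a backup so the change is easy to revert.
  cp "$ini" "$ini.bak"
  # Rewrite http.ini from the backup, prefixing only the module line with "##".
  sed 's/^--module=http[[:space:]]*$/##--module=http/' "$ini.bak" > "$ini"
}
```

For example, run disable_http osa-base/start.d/http.ini from the installation directory, then start GGSA with osa-base/bin/start-osa.sh. To revert, restore http.ini from http.ini.bak.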

2.2 Setting up Runtime for GoldenGate Stream Analytics Server

Before you start using GoldenGate Stream Analytics, you need to specify the runtime server, environment, and node details. Perform this procedure immediately after you launch Oracle Stream Analytics for the first time.

- Change directory to OSA-19.1.0.0.6/osa-base/bin and run ./start-osa.sh. You should see the following message on the console:

Supported OSA schema versions are: [18.4.3, 18.1.0.1.0, 18.1.0.1.1, 19.1.0.0.0, 19.1.0.0.1, 19.1.0.0.2, 19.1.0.0.3, 19.1.0.0.5, 19.1.0.0.6]
The schema is preconfigured and current. No changes or updates are required.

If you do not see the above message, check the log file in the OSA-19.1.0.0.6/osa-base/logs folder.

- In the Chrome browser, enter localhost:9080/osa to access the Oracle Stream Analytics login page, and log in using your credentials.

Note:

The password is a plain-text password.

- Click the user name at the top right corner of the screen.

- Click System Settings.

- Click Environment.

- Select the Runtime Server. See the sections below for Yarn and Spark Standalone runtime configuration details.
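The start-up check in the first step can be automated. This is a rough sketch, assuming you capture the console output of ./start-osa.sh to a file whose name you choose. The hypothetical function schema_is_current only searches that file for the schema message shown above; it does not inspect the files in the osa-base/logs folder.

```shell
#!/usr/bin/env bash
# Sketch: confirm that captured start-up console output contains the schema message.
# The file passed in is whatever you saved the ./start-osa.sh output to.
set -euo pipefail

# schema_is_current is a hypothetical helper name, not part of GGSA.
schema_is_current() {
  local console_log="${1:-}"
  if [ ! -r "$console_log" ]; then
    echo "cannot read console output file: $console_log" >&2
    return 2
  fi
  # -F: match the message as a fixed string, not as a regular expression.
  grep -qF 'The schema is preconfigured and current.' "$console_log"
}
```

For example, run ./start-osa.sh 2>&1 | tee start-osa.out and then schema_is_current start-osa.out || echo "check osa-base/logs". If start-osa.sh keeps running in the foreground on your system, run the check from a second terminal.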

Yarn Configuration

- YARN Resource Manager URL: Enter the URL where the YARN Resource Manager is configured.

- Storage: Select the storage type for pipelines. To submit a GGSA pipeline to Spark, the pipeline has to be copied to a storage location that is accessible by all Spark nodes.

- If the storage type is WebHDFS:

- Path: Enter the WebHDFS directory (hostname:port/path), where the generated Spark pipeline will be copied to and then submitted from. This location must be accessible by all Spark nodes. The user specified in the authentication section below must have read-write access to this directory.

- HA Namenodes: Set the HA namenodes. If the hostname in the above URL refers to a logical HA cluster, specify the actual namenodes here, in the format: Hostname1:Port, Hostname2:Port.

- If the storage type is HDFS:

- Path: The path could be <HostOrIPOfNameNode><HDFS Path>. For example, xxx.xxx.xxx.xxx/user/oracle/ggsapipelines. The Hadoop user must have Write permissions. The folder is created automatically if it does not exist.

- HA Namenodes: If the hostname in the above URL refers to a logical HA cluster, specify the actual namenodes here, in the format: Hostname1:Port, Hostname2:Port.

- If the storage type is NFS:

- Path: The path could be /oracle/spark-deploy.

Note:

/oracle must exist; spark-deploy is created automatically if it does not exist. You need Write permissions on the /oracle directory.


- Hadoop Authentication:

- Simple authentication credentials:

- Protection Policy: Select a protection policy from the drop-down list. This value should match the value on the cluster.

- Username: Enter the user account to use for submitting Spark pipelines. This user must have read-write access to the Path specified above.

- Kerberos authentication credentials:

- Protection Policy: Select a protection policy from the drop-down list. This value should match the value on the cluster.

- Kerberos Realm: Enter the domain in which Kerberos authenticates a user, host, or service. This value is in the krb5.conf file.

- Kerberos KDC: Enter the server on which the Key Distribution Center is running. This value is in the krb5.conf file.

- Principal: Enter the GGSA service principal that is used to authenticate the GGSA web application against the Hadoop cluster for application deployment. This user should be the owner of the folder used to deploy the GGSA application in HDFS. You must also create this user on the YARN node manager.

- Keytab: Enter the keytab for the GGSA service principal.

- Yarn Resource Manager Principal: Enter the YARN principal. When a Hadoop cluster is configured with Kerberos, principals for Hadoop services such as hdfs, https, and yarn are also created.


- Yarn master console port: Enter the port on which the Yarn master console runs. The default port is 8088.

- Click Save.
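The Kerberos Realm and Kerberos KDC values both come from krb5.conf. The following sketch, assuming the common MIT Kerberos layout (default_realm under [libdefaults], kdc entries inside a realm block) and the default location /etc/krb5.conf, prints those values so you can copy them into the form. The function names krb5_realm and krb5_kdcs are hypothetical. krb5_kdcs prints every kdc entry in the file, across all realms, and keeps any :port suffix; whether the GGSA field expects the port is not stated here.

```shell
#!/usr/bin/env bash
# Sketch: print the values the Kerberos Realm and Kerberos KDC fields ask for.
# Assumes the usual MIT krb5.conf layout; /etc/krb5.conf is used if no path is given.
set -euo pipefail

# Print the default_realm value from [libdefaults] (first match only).
krb5_realm() {
  awk -F'=' '/^[ \t]*default_realm[ \t]*=/ { gsub(/[ \t\r]/, "", $2); print $2; exit }' \
    "${1:-/etc/krb5.conf}"
}

# Print every "kdc = host[:port]" entry, one per line, in file order.
krb5_kdcs() {
  awk -F'=' '/^[ \t]*kdc[ \t]*=/ { gsub(/[ \t\r]/, "", $2); print $2 }' \
    "${1:-/etc/krb5.conf}"
}
```

For example, krb5_realm prints the realm from /etc/krb5.conf, and krb5_kdcs /path/to/krb5.conf lists the KDC entries of a copy taken from the cluster.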

Spark Standalone

- Select Spark Standalone as the Runtime Server, and enter the following details:

- Spark REST URL: Enter the Spark standalone REST URL. If Spark standalone is HA enabled, you can enter a comma-separated list of the active and standby nodes.

- Storage: Select the storage type for pipelines. To submit a GGSA pipeline to Spark, the pipeline has to be copied to a storage location that is accessible by all Spark nodes.

- If the storage type is WebHDFS:

- Path: Enter the WebHDFS directory (hostname:port/path), where the generated Spark pipeline will be copied to and then submitted from. This location must be accessible by all Spark nodes. The user specified in the authentication section below must have read-write access to this directory.

- HA Namenodes: If the hostname in the above URL refers to a logical HA cluster, specify the actual namenodes here, in the format: Hostname1:Port, Hostname2:Port.

- If the storage type is HDFS:

- Path: The path could be <HostOrIPOfNameNode><HDFS Path>. For example, xxx.xxx.xxx.xxx/user/oracle/ggsapipelines. The Hadoop user must have Write permissions. The folder is created automatically if it does not exist.

- HA Namenodes: If the hostname in the above URL refers to a logical HA cluster, specify the actual namenodes here, in the format: Hostname1:Port, Hostname2:Port. This field is applicable only when the storage type is HDFS.

- Hadoop Authentication for WebHDFS and HDFS Storage Types:

- Simple authentication credentials:

- Protection Policy: Select a protection policy from the drop-down list.

- Username: Enter the user account to use for submitting Spark pipelines. This user must have read-write access to the Path specified above.

- Kerberos authentication credentials:

- Protection Policy: Select a protection policy from the drop-down list.

- Kerberos Realm: Enter the domain in which Kerberos authenticates a user, host, or service. This value is in the krb5.conf file.

- Kerberos KDC: Enter the server on which the Key Distribution Center is running. This value is in the krb5.conf file.

- Principal: Enter the GGSA service principal that is used to authenticate the GGSA web application against the Hadoop cluster for application deployment. This user should be the owner of the folder used to deploy the GGSA application in HDFS. You must also create this user on the YARN node manager.

- Keytab: Enter the keytab for the GGSA service principal.

- Yarn Resource Manager Principal: Enter the YARN principal. When a Hadoop cluster is configured with Kerberos, principals for Hadoop services such as hdfs, https, and yarn are also created.

- If the storage type is NFS:

- Path: The path could be /oracle/spark-deploy.

Note:

/oracle must exist; spark-deploy is created automatically if it does not exist. You need Write permissions on the /oracle directory.

- Spark standalone master console port: Enter the port on which the Spark standalone console runs. The default port is 8080.

Note:

The order of the comma-separated ports should match the order of the comma-separated Spark REST URLs entered in the Spark REST URL field.

- Spark master username: Enter your Spark standalone server username.

- Spark master password: Click Change Password to change your Spark standalone server password.

Note:

You can change your Spark standalone server username and password on this screen. The username and password fields are blank by default.

- Click Save.
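The note about matching the order of console ports and Spark REST URLs can be checked before you click Save. This is a minimal Bash sketch; the function name pair_spark_endpoints and the example host names are hypothetical. It splits both comma-separated lists, fails if their lengths differ, and otherwise prints each REST URL next to the console port at the same position.

```shell
#!/usr/bin/env bash
# Sketch: check that the comma-separated Spark REST URLs and console ports line up.
set -euo pipefail

# pair_spark_endpoints is a hypothetical helper; arguments are the two field values.
pair_spark_endpoints() {
  local urls="${1:-}" ports="${2:-}"
  local -a url_list=() port_list=()
  # Split on commas only, within this function.
  local IFS=','
  read -r -a url_list <<< "$urls"
  read -r -a port_list <<< "$ports"
  if [ "${#url_list[@]}" -ne "${#port_list[@]}" ]; then
    echo "found ${#url_list[@]} REST URL(s) but ${#port_list[@]} port(s)" >&2
    return 1
  fi
  local i
  for i in "${!url_list[@]}"; do
    # Strip spaces from each item, then print the pair at position i.
    printf '%s -> console port %s\n' "${url_list[$i]// /}" "${port_list[$i]// /}"
  done
}
```

For example, pair_spark_endpoints 'spark1.example.com:6066,spark2.example.com:6066' '8080,8081' prints one line per node, so you can confirm each port sits next to the intended node.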

2.3 Validating Data Flow to GoldenGate Stream Analytics

After you have configured GoldenGate Stream Analytics (GGSA) with the runtime details, you need to ensure that sample data is being detected and correctly read by GGSA.

2.4 Terminating GoldenGate Stream Analytics

You can terminate GoldenGate Stream Analytics by running a single command from the OSA-19.1.0.0.6/osa-base/bin folder:

./stop-osa.sh

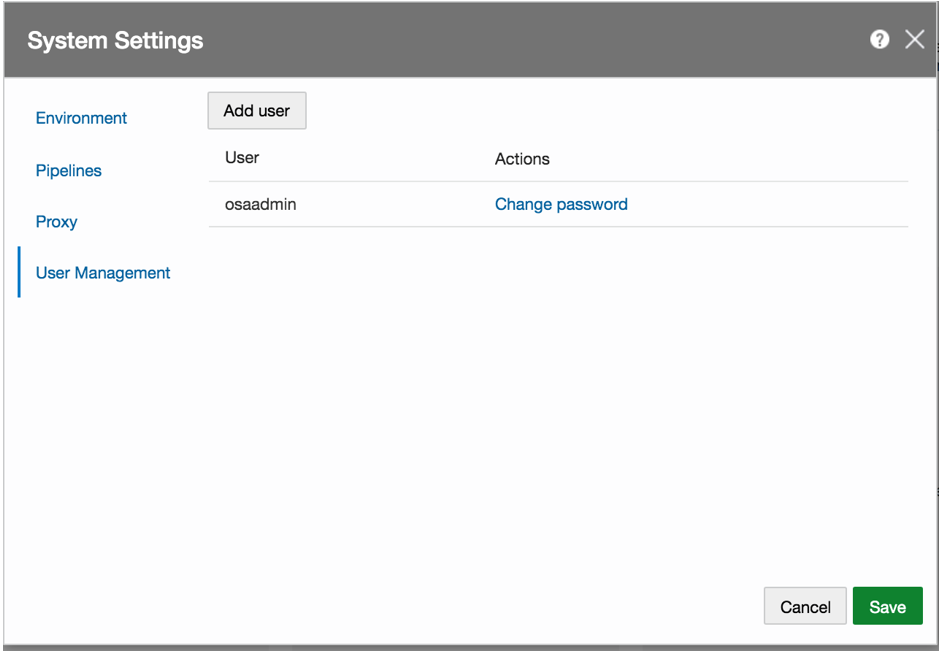
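If you start and stop GGSA often, a small wrapper saves changing directories each time. This is a sketch, assuming Bash and a hypothetical OSA_HOME variable that you set to your installation folder (for example, OSA-19.1.0.0.6); the function name osa_ctl is also hypothetical. It runs start-osa.sh or stop-osa.sh from osa-base/bin in a subshell, so your current directory is unchanged.

```shell
#!/usr/bin/env bash
# Sketch: run start-osa.sh or stop-osa.sh from osa-base/bin.
# OSA_HOME is a hypothetical variable pointing at the installation folder.
set -euo pipefail

# osa_ctl is a hypothetical helper name, not part of GGSA.
osa_ctl() {
  local action="${1:-}"
  if [ -z "${OSA_HOME:-}" ]; then
    echo "OSA_HOME is not set" >&2
    return 1
  fi
  case "$action" in
    start|stop)
      # Run in a subshell so the caller's working directory is unchanged.
      (cd "$OSA_HOME/osa-base/bin" && "./${action}-osa.sh")
      ;;
    *)
      echo "usage: osa_ctl start|stop" >&2
      return 2
      ;;
  esac
}
```

For example, export OSA_HOME=/path/to/OSA-19.1.0.0.6 and then run osa_ctl stop. If start-osa.sh stays in the foreground on your system, osa_ctl start will too.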

2.5 Changing the Admin Password and Creating New Users

You can change the Admin password and add new users from the System Settings dialog box.

- Click the user profile at the top right corner, and then click System Settings.

- On the System Settings dialog box, click User Management.

- Add new users or change password as required.