3.1.3 Utilizing the Local Spark Cluster

You can use the local Spark cluster to run your own Spark jobs in addition to the GGSA pipelines. Submit Spark jobs to the REST endpoint on localhost, port 6066. Spark is installed in /u01/app/spark, and you can change its configuration by referring to the Spark documentation.
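As a rough illustration of submitting a job to that endpoint, the following Python sketch builds a request for the Spark standalone REST submission service and posts it to localhost:6066. Only the host, port, and install location come from this guide. The `/v1/submissions/create` path, the plain `http` scheme, the JAR path, the main class, and the Spark version string are assumptions or hypothetical placeholders that you should check against your instance.

```python
import json
import urllib.request

# Host and port are taken from this guide. The path and the http scheme are
# assumptions based on the Spark standalone REST submission service.
REST_URL = "http://localhost:6066/v1/submissions/create"


def build_submission(app_jar, main_class, app_args, spark_version,
                     master="spark://localhost:6066"):
    """Build the JSON body for a cluster-mode submission request."""
    return {
        "action": "CreateSubmissionRequest",
        "appResource": app_jar,
        "mainClass": main_class,
        "appArgs": list(app_args),
        # Should match the Spark version installed on the instance.
        "clientSparkVersion": spark_version,
        "environmentVariables": {"SPARK_ENV_LOADED": "1"},
        "sparkProperties": {
            # Use the simple class name as the application name.
            "spark.app.name": main_class.rsplit(".", 1)[-1],
            "spark.master": master,
            "spark.submit.deployMode": "cluster",
            "spark.jars": app_jar,
        },
    }


def submit(payload, url=REST_URL):
    """POST the submission and return the parsed JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json;charset=UTF-8"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


# Hypothetical usage; the JAR path, class name, and version are placeholders:
#   payload = build_submission("file:/path/to/my-spark-job.jar",
#                              "com.example.MyJob", ["arg1"], "3.0.0")
#   print(submit(payload))
```

Building the payload separately from sending it lets you inspect or log the request before it reaches the cluster. If the REST service on your instance expects different fields, adjust `build_submission` accordingly.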

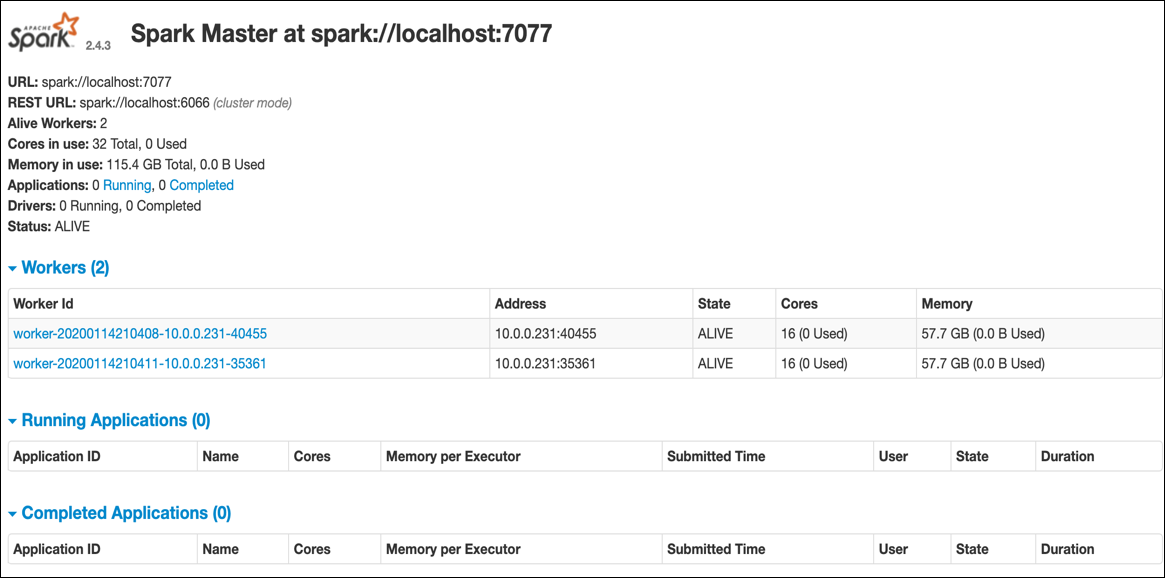

You can access the Spark console by entering https://<IP_of_Instance>/spark in a browser and providing the username and password. By default, the Spark cluster is configured with two workers, each with 16 virtual cores.