1 Overview of Oracle Stream Analytics

Oracle Stream Analytics lets you create custom operational dashboards that provide real-time monitoring and analysis of event streams in an Apache Spark-based system. It enables customers to identify events of interest in that system, execute queries against those event streams in real time, and drive operational dashboards or raise alerts based on that analysis. Oracle Stream Analytics runs as a set of native Spark pipelines.

1.1 About Oracle Stream Analytics

Stream Analytics is an in-memory technology for real-time analytic computations on streaming data. The streaming data can originate from IoT sensors, web pipelines, log files, point-of-sale devices, ATMs, social media, or any other data source.

Oracle Stream Analytics is used to identify business threats and opportunities by filtering, aggregating, correlating, and analyzing high volumes of data in real time.

More precisely, Oracle Stream Analytics can be used in the following scenarios:

- Build complex event processing pipelines by blending and transforming data from disparate transactional and non-transactional sources.
- Perform temporal analytics based on time and event windows.
- Perform location-based analytics using built-in spatial patterns.
- Detect patterns in time-series data and execute real-time actions.
- Build operational dashboards by visualizing processed data streams.
- Use machine learning to score the current event and predict the next event.
- Run ad-hoc queries on the results of processed data streams.
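To make the distinction between time windows and event windows concrete, here is a minimal Python sketch. It is not Oracle Stream Analytics code and does not reflect the pipelines the product generates; the `(timestamp, value)` event shape and the function names `time_window_avg` and `event_window_avg` are assumptions chosen for illustration. A time window keeps every event from the last N seconds, while an event window keeps the last N events regardless of when they arrived.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple

# Hypothetical event shape: (timestamp in seconds, numeric value).
Event = Tuple[float, float]


def time_window_avg(events: Iterable[Event], span: float) -> Iterator[Tuple[float, float]]:
    """Yield (timestamp, average of the values seen in the last `span` seconds)."""
    window: Deque[Event] = deque()
    total = 0.0
    for ts, value in events:
        window.append((ts, value))
        total += value
        # Evict events that fell outside the time range (ts - span, ts].
        while window[0][0] <= ts - span:
            _, old = window.popleft()
            total -= old
        yield ts, total / len(window)


def event_window_avg(events: Iterable[Event], count: int) -> Iterator[Tuple[float, float]]:
    """Yield (timestamp, average of the last `count` values)."""
    window: Deque[float] = deque(maxlen=count)  # oldest value drops out automatically
    for ts, value in events:
        window.append(value)
        yield ts, sum(window) / len(window)


readings = [(0.0, 10.0), (1.0, 20.0), (5.0, 30.0), (6.0, 50.0)]
time_result = list(time_window_avg(readings, span=2.0))
event_result = list(event_window_avg(readings, count=3))
```

With these sample readings, the gap between the events at 1 s and 5 s empties the two-second time window, whereas the three-event window still averages over older readings. That difference is why the choice of window type matters for bursty streams.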

Some industry-specific examples include:

- Detecting fraud in real time based on incoming transaction data.
- Tracking transaction losses and margins in real time to renegotiate with vendors and suppliers.
- Improving asset maintenance by tracking healthy operating parameters and proactively scheduling maintenance.
- Improving margins by continuously tracking demand and optimizing markdowns instead of randomly lowering prices.
- Readjusting prices by continuously tracking demand, inventory levels, and product sentiment on social media.
- Marketing and making real-time offers based on customer location and loyalty.
- Instantly identifying shopping cart defections and improving conversion rates.
- Upselling products and services by instantly identifying a customer's presence on the company website.
- Improving asset utilization by tracking the average time it takes to load and unload merchandise.
- Improving turnaround time by preparing dock and staff based on the estimated arrival time of the fleet.
- Revising schedule estimates based on the actual time to enter and exit loading zones.

1.2 Why Oracle Stream Analytics?

Oracle Stream Analytics offers several advantages over similar products available in the industry.

Simplicity

Author powerful data processing pipelines using the self-service, web-based tool in Oracle Stream Analytics. The tool automatically generates a Spark pipeline and provides instant visual validation of the pipeline logic.

Built on Apache Spark

Oracle Stream Analytics can attach to any version-compliant YARN cluster running Spark and is the first in the industry to bring event-by-event processing to Spark Streaming.

Enterprise Grade

Oracle Stream Analytics is built on Apache Spark to provide full horizontal scale-out and 24x7 availability for mission-critical workloads. Automated checkpointing ensures exactly-once processing and zero data loss. Built-in governance provides full accountability of who did what to the system, and when. As part of management and monitoring, Oracle Stream Analytics provides a visual representation of pipeline topology and relationships, along with dataflow metrics that indicate the number of events ingested, the number of events dropped, and the throughput of each pipeline.

1.3 How Does Oracle Stream Analytics Work?

Stream Analytics starts by ingesting data from Kafka, with first-class support for Oracle GoldenGate change data capture. You examine and analyze the stream by creating data pipelines.

A data pipeline can query data using time windows, look for patterns, and apply conditional logic while the data is still in motion. The query language used in Stream Analytics is called Continuous Query Language (CQL). It is similar to SQL but includes additional constructs for pattern matching and recognition. CQL is declarative, and you do not need to write any code in Stream Analytics: the web-based tool automatically generates the queries and the Spark Streaming pipeline. Once the data is analyzed and a situation is detected, the pipeline can terminate by triggering BPM workflows in Oracle Integration or by saving results into a Data Lake for deeper insights and intelligence using Oracle Analytics Cloud.
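As a rough illustration of what "querying data in motion" means, the following Python sketch combines the three ideas above: a per-key time window, a simple pattern (several qualifying events within that window), and conditional logic (a minimum amount). It is not CQL and not what Stream Analytics generates; the transaction tuple, the function name `detect_bursts`, and the thresholds are all hypothetical.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Iterator, Tuple

# Hypothetical event shape: (timestamp in seconds, card id, amount).
Txn = Tuple[float, str, float]


def detect_bursts(
    txns: Iterable[Txn],
    span: float = 60.0,        # time window length in seconds (assumed value)
    threshold: int = 3,        # qualifying events needed to raise an alert
    min_amount: float = 100.0, # conditional filter on each event
) -> Iterator[Tuple[float, str]]:
    """Yield (timestamp, card) whenever a card reaches `threshold`
    large transactions within the last `span` seconds."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    for ts, card, amount in txns:
        if amount < min_amount:
            continue  # conditional logic: small transactions are ignored
        times = recent[card]
        times.append(ts)
        # Drop timestamps that fell outside the window (ts - span, ts].
        while times[0] <= ts - span:
            times.popleft()
        if len(times) >= threshold:
            yield ts, card  # pattern matched; evaluated per event, as it arrives


stream = [
    (0.0, "A", 250.0),
    (10.0, "B", 120.0),
    (20.0, "A", 300.0),
    (25.0, "A", 5.0),     # below min_amount, ignored
    (40.0, "A", 410.0),   # third large transaction for A within 60 s
    (130.0, "A", 500.0),  # earlier transactions have expired by now
]
alerts = list(detect_bursts(stream))
```

Because the function is a generator, each alert is produced as soon as the triggering event is consumed rather than after the whole input is read, which loosely mirrors evaluating a continuous query one event at a time.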

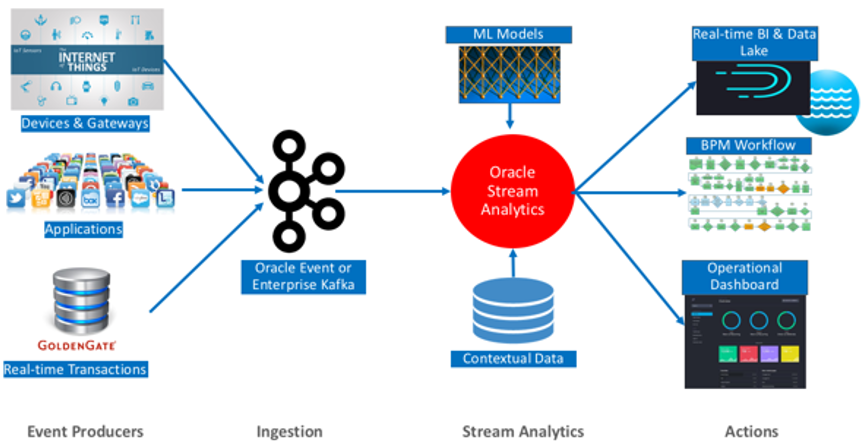

The following diagram illustrates the architecture of Stream Analytics:

Description of the illustration osacs_architecture.png

The analyzed data is used to build operational dashboards and trigger workflows, and it is saved to Data Lakes for business intelligence and ad-hoc queries.