4 Configuring Oracle Traffic Director for High Availability

High availability is the ability of a system or device to be available when it is needed. A high availability architecture ensures that users can access a system without loss of service. Deploying a high availability system minimizes the time when the system is down, or unavailable, and maximizes the time when it is running, or available.

This chapter describes how to configure Oracle Traffic Director for failover across Oracle Traffic Director instances.

Overview

The high availability solution for Oracle Traffic Director lets you create multiple instances and then configure IP failover for a given virtual IP address (VIP) between those instances.

The solution creates redundancy for the VIP by configuring IP failover between two or more instances. IP failover is configured as a failover group, which consists of a VIP, an instance designated as the primary, and one or more instances designated as backups. The failover is transparent both to the client that sends the traffic and to the Oracle Traffic Director instance that receives it.
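To make the structure of a failover group concrete, the following Python sketch models one as a VIP plus an ordered set of instances. It is purely illustrative: the class name `FailoverGroup`, the `owner()` helper, and the instance names are hypothetical, and picking the first healthy instance in list order is a simplification of the priority-based election that VRRP actually performs.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class FailoverGroup:
    """Hypothetical model: one VIP, one primary instance, one or more backups."""

    vip: str
    primary: str
    backups: tuple[str, ...]

    def __post_init__(self) -> None:
        # ipaddress.ip_address raises ValueError for a malformed address.
        ipaddress.ip_address(self.vip)
        if not self.backups:
            raise ValueError("a failover group needs at least one backup instance")
        if self.primary in self.backups:
            raise ValueError("the primary instance cannot also be a backup")

    def owner(self, healthy: set[str]) -> str | None:
        """Return the instance that should hold the VIP (simplified: list order, not VRRP priority)."""
        for instance in (self.primary, *self.backups):
            if instance in healthy:
                return instance
        return None


group = FailoverGroup(vip="192.0.2.10", primary="otd-1", backups=("otd-2",))
print(group.owner({"otd-1", "otd-2"}))  # the primary holds the VIP while it is healthy
print(group.owner({"otd-2"}))           # after the primary fails, the backup takes the VIP
```

Because clients only ever address the VIP, moving it from `otd-1` to `otd-2` is what keeps the failover transparent to them.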

Failover configuration modes

You can configure the Oracle Traffic Director instances in a failover group to work in the following modes:

-

Active-passive: A single VIP address is used. One instance in the failover group is designated as the primary node. If the primary node fails, the requests are routed through the same VIP to the other instance.

-

Active-active: A single VIP address is used. One of the nodes is the master node, and the other nodes are backup nodes. Incoming requests to the VIP are distributed among the Oracle Traffic Director instances. If the master node fails, the backup node with the highest priority is chosen as the next master node.

Failover in Active-Passive Mode

In the active-passive setup described here, one node in the failover group is redundant at any point in time.

Oracle Traffic Director supports failover between the instances in a failover group by using an implementation of the Virtual Router Redundancy Protocol (VRRP): Keepalived on Linux and the native vrrpd on Solaris. This mode of failover is supported only on the Solaris and Linux platforms.

Keepalived provides other features, such as load balancing and health checking of origin servers, but Oracle Traffic Director uses only its VRRP subsystem. For more information about Keepalived, go to http://www.keepalived.org.

VRRP specifies how routers can fail over a VIP address from one node to another if the first node becomes unavailable for any reason. The IP failover is implemented by a router process running on each node. In a two-node failover group, the router process on the node to which the VIP is currently assigned is called the master. The master continuously advertises its presence to the router process on the second node.

Note:

On a Linux host that has an Oracle Traffic Director instance configured as a member of a failover group, Oracle Traffic Director should be the only consumer of Keepalived. Otherwise, when Oracle Traffic Director starts and stops the keepalived daemon for effecting failovers during instance downtime, other services using keepalived on the same host can be disrupted.

If the node on which the master router process is running fails, the router process on the second node waits for about three seconds before deciding that the master is down, and then assumes the role of the master by assigning the VIP to its node. When the first node is online again, the router process on that node takes over the master role. For more information about VRRP, see RFC 5798 at http://datatracker.ietf.org/doc/rfc5798.

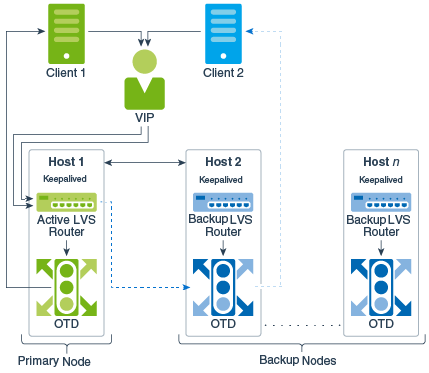
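The "about three seconds" wait comes from the VRRP Master_Down_Interval timer. RFC 5798 (section 6.1) defines Skew_Time = ((256 - Priority) * Master_Adver_Interval) / 256 and Master_Down_Interval = (3 * Master_Adver_Interval) + Skew_Time, with intervals expressed in centiseconds. The Python sketch below only evaluates those two formulas; the 1-second advertisement interval and the priority values are illustrative assumptions, not Oracle Traffic Director or Keepalived settings.

```python
# Evaluates the RFC 5798 (section 6.1) timer formulas; intervals are in centiseconds.


def skew_time_cs(priority: int, master_adver_interval_cs: int) -> float:
    """Skew_Time = ((256 - Priority) * Master_Adver_Interval) / 256."""
    if not 1 <= priority <= 254:
        raise ValueError("a backup router's priority must be between 1 and 254")
    return ((256 - priority) * master_adver_interval_cs) / 256


def master_down_interval_cs(priority: int, master_adver_interval_cs: int = 100) -> float:
    """Master_Down_Interval = (3 * Master_Adver_Interval) + Skew_Time."""
    return 3 * master_adver_interval_cs + skew_time_cs(priority, master_adver_interval_cs)


# Assumed example: the master advertises every 100 cs (1 second).
for priority in (100, 200):
    seconds = master_down_interval_cs(priority) / 100
    print(f"backup priority {priority}: master declared down after {seconds:.2f} s")
```

With 1-second advertisements, a backup at priority 100 declares the master down after about 3.61 seconds and one at priority 200 after about 3.22 seconds, so a higher-priority backup normally detects the failure and takes over first.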

Failover in Active-Active Mode

Oracle Traffic Director supports failover between instances that are deployed on nodes in the same subnet. One of the nodes is chosen as the active router node, and the remaining nodes are backup router nodes. Traffic is distributed among all the Oracle Traffic Director instances.

This mode of failover is supported only on the Linux platform. The solution uses Keepalived 1.2.13 and Linux Virtual Server (LVS) to perform load balancing and failover tasks. In addition, the following packages are required:

-

ipvsadm (1.26 or later)

-

iptables (1.4.7 or later)
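Before enabling active-active mode, it can help to verify the installed package versions. The Python sketch below compares version strings against the minimums listed above. The function names are hypothetical, the sketch assumes purely numeric dotted versions (a suffix such as `-rc1` would raise `ValueError`), and it does not query the system itself. Keepalived is left out because the text names a specific release rather than a minimum.

```python
from __future__ import annotations

# Minimum versions from the list above ("or later" packages only).
REQUIRED_MINIMUMS = {
    "ipvsadm": "1.26",
    "iptables": "1.4.7",
}


def parse_version(text: str) -> tuple[int, ...]:
    """Convert a purely numeric dotted version such as 'v1.4.21' to (1, 4, 21)."""
    return tuple(int(part) for part in text.strip().lstrip("v").split("."))


def missing_requirements(installed: dict[str, str]) -> list[str]:
    """Describe each required package that is absent or older than its minimum."""
    problems = []
    for package, minimum in REQUIRED_MINIMUMS.items():
        found = installed.get(package)
        if found is None:
            problems.append(f"{package}: not installed (need {minimum} or later)")
        elif parse_version(found) < parse_version(minimum):
            problems.append(f"{package}: {found} is older than {minimum}")
    return problems


# Versions would normally be collected from the package manager; these are made up.
print(missing_requirements({"ipvsadm": "1.26", "iptables": "1.4.21"}))
```

Comparing integer tuples rather than raw strings avoids the classic pitfall where "1.4.21" would sort before "1.4.7" as text.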

Initially, all nodes are configured as backup nodes, and each node is assigned a different priority. The node with the highest priority is chosen as the master, and the other nodes remain backups. If the master node fails, the backup node with the highest priority is chosen as the next master node. The Keepalived master node is also the master node for LVS.
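The election rule described above reduces to "the highest-priority node that is still alive". The following Python sketch expresses only that rule. The node names and priority values are hypothetical, and real Keepalived learns which nodes are alive from VRRP advertisements rather than from a set passed in by the caller.

```python
from __future__ import annotations


def elect_master(priorities: dict[str, int], alive: set[str]) -> str | None:
    """Return the live node with the highest priority, or None if no node is alive."""
    if len(set(priorities.values())) != len(priorities):
        # The text assigns each node a different priority, so ties are rejected here.
        raise ValueError("node priorities must be distinct")
    candidates = [node for node in priorities if node in alive]
    if not candidates:
        return None
    # The elected node would also act as the LVS master.
    return max(candidates, key=priorities.__getitem__)


priorities = {"node-a": 150, "node-b": 100, "node-c": 50}
print(elect_master(priorities, {"node-a", "node-b", "node-c"}))  # all nodes up
print(elect_master(priorities, {"node-b", "node-c"}))            # node-a has failed
```

Requiring distinct priorities mirrors the setup in the text and keeps the outcome deterministic: there is never a tie to break.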

Keepalived does the following:

-

Plumbs the virtual IP on the master

-

Sends out gratuitous ARP messages for the VIP

-

Configures LVS (ipvsadm)

-

Monitors the health of Keepalived on the other nodes

LVS does the following:

-

Balances the load across the Oracle Traffic Director instances

-

Shares existing connection information with the backup nodes through multicast

-

Checks the integrity of the services on each Oracle Traffic Director instance. If an Oracle Traffic Director instance fails, that instance is removed from the LVS configuration, and when it comes back online, it is added again.
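LVS supports several scheduling algorithms, and this section does not say which one Oracle Traffic Director configures. Assuming plain round-robin for illustration, the following toy Python class shows how removing a failed instance from the rotation, and adding it back once it recovers, changes which instances receive requests. The class and method names are hypothetical; this is not the LVS or ipvsadm interface.

```python
from __future__ import annotations


class RoundRobinPool:
    """Toy round-robin pool of OTD instances; unhealthy instances are skipped until they recover."""

    def __init__(self, instances: list[str]) -> None:
        self._instances = list(instances)
        self._healthy = set(instances)
        self._next = 0  # index of the next instance to try

    def report_health(self, instance: str, healthy: bool) -> None:
        """Remove a failed instance from rotation, or add it back once it is online again."""
        if instance not in self._instances:
            raise KeyError(instance)
        if healthy:
            self._healthy.add(instance)
        else:
            self._healthy.discard(instance)

    def pick(self) -> str:
        """Return the next healthy instance in round-robin order."""
        for _ in range(len(self._instances)):
            instance = self._instances[self._next]
            self._next = (self._next + 1) % len(self._instances)
            if instance in self._healthy:
                return instance
        raise RuntimeError("no healthy Oracle Traffic Director instance is available")


pool = RoundRobinPool(["otd-1", "otd-2", "otd-3"])
pool.report_health("otd-2", False)        # health check fails: otd-2 leaves the rotation
print([pool.pick() for _ in range(4)])    # only otd-1 and otd-3 receive requests
pool.report_health("otd-2", True)         # otd-2 is back online and rejoins
```

Keeping the full instance list and tracking health separately means a recovered instance rejoins at its original position in the rotation instead of being appended at the end.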

Preparing your System for High Availability

The typical deployment topology for Oracle Traffic Director is a three-node installation in which all nodes are part of a single domain:

-

A WebLogic Server Administration machine, on which Oracle Traffic Director is collocated with a WebLogic Server and JRF installation, hosts the WebLogic Server Administration Server.

-

Two other machines have standalone Oracle Traffic Director installations, which contain only a subset of the WebLogic Server binaries and host managed Oracle Traffic Director domains.

-

The two Oracle Traffic Director instances typically provide high availability for a VIP (virtual IP) by forming a failover group.

Prerequisites

| Operating System | High Availability Mode | System Requirements |
|---|---|---|
| Linux | Active-Active | |
| Linux | Active-Passive | |
| Solaris | Active-Active | Not Supported |
| Solaris | Active-Passive | |
| Windows | Active-Active | Not Supported |
| Windows | Active-Passive | Not Supported |
| AIX | Active-Active | Not Supported |
| AIX | Active-Passive | Not Supported |
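For scripted pre-installation checks, the support matrix above can be encoded as a lookup table. The Python sketch below is hypothetical (the `is_supported()` name and the lowercase keys are assumptions). It records only which operating system and mode combinations the table marks as supported and says nothing about the system requirements themselves.

```python
# Supported failover modes per operating system, transcribed from the table above.
SUPPORTED_MODES = {
    "linux": {"active-active", "active-passive"},
    "solaris": {"active-passive"},
    "windows": set(),
    "aix": set(),
}


def is_supported(os_name: str, mode: str) -> bool:
    """True if the table lists the mode as supported on the OS; unknown OSes count as unsupported."""
    return mode.lower() in SUPPORTED_MODES.get(os_name.lower(), set())


print(is_supported("Solaris", "Active-Passive"))
print(is_supported("Solaris", "Active-Active"))
```

Treating an unknown operating system as unsupported is a deliberately conservative default for a pre-check.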

Notations

The following tokens are used consistently throughout this chapter to represent the values described below.

| Token | Description |
|---|---|
| OTD_HOST_1 | Host name of the machine on which the primary instance of the failover group is running. |
| OTD_HOST_2 | Host name of the machine on which the backup instance of the failover group is running. |
| | Name specified in the WebLogic Server Administration Console when creating the machine corresponding to OTD_HOST_1. |
| | Name specified in the WebLogic Server Administration Console when creating the machine corresponding to OTD_HOST_2. |
| | The virtual IP address (on the same subnet as OTD_HOST_1 and OTD_HOST_2) used to create a failover group. |
| | Host name of the server on which the WebLogic Server Administration Server runs. |