7 Whole Server Migration

The following sections focus on whole server-level migration, where a migratable server instance and all of its services are migrated to a different physical machine upon failure. WebLogic Server also supports service-level migration, as well as replication and failover at the application level. For more information, see Service Migration and Failover and Replication in a Cluster.

This chapter includes the following sections:

- Understanding Server and Service Migration

In a WebLogic Server cluster, most services are deployed homogeneously on all server instances in the cluster, enabling transparent failover from one server instance to another. In contrast, pinned services such as JMS and the JTA transaction recovery system are targeted at individual server instances within a cluster. For these services, WebLogic Server supports failure recovery with migration, as opposed to failover.

- Migration Terminology

Learn server and service migration terms.

- Leasing

Leasing is the process WebLogic Server uses to manage services that are required to run on only one member of a cluster at a time.

- Automatic Whole Server Migration

Learn the procedures for configuring automatic whole server migration and how whole server migration functions within a WebLogic Server environment.

- Whole Server Migration with Dynamic and Mixed Clusters

WebLogic Server supports whole server migration with dynamic and mixed clusters.

Understanding Server and Service Migration

In a WebLogic Server cluster, most services are deployed homogeneously on all server instances in the cluster, enabling transparent failover from one server instance to another. In contrast, pinned services such as JMS and the JTA transaction recovery system are targeted at individual server instances within a cluster. For these services, WebLogic Server supports failure recovery with migration, as opposed to failover.

Migration in WebLogic Server is the process of moving a clustered WebLogic Server instance or a component running on a clustered server instance elsewhere in the event of failure. In the case of whole server migration, the server instance is migrated to a different physical machine upon failure. In the case of service-level migration, the services are moved to a different server instance within the cluster. For more information, see Service Migration.

To make JMS and the JTA transaction system highly available, WebLogic Server provides migratable servers. Migratable servers provide automatic and manual migration at the server-level rather than the service-level.

When a migratable server becomes unavailable for any reason (for example, if it hangs, loses network connectivity, or its host machine fails), migration is automatic. Upon failure, a migratable server is automatically restarted on the same machine, if possible. If the migratable server cannot be restarted on the machine where it failed, it is migrated to another machine. In addition, an administrator can manually initiate migration of a server instance.


Migration Terminology

Learn server and service migration terms.

-

Migratable server: A clustered server instance that migrates in its entirety, along with all the services it hosts. Migratable servers are intended to host pinned services, such as JMS servers and JTA transaction recovery servers, but migratable servers can also host clusterable services. All services that run on a migratable server are highly available.

-

Whole server migration: The migration of a WebLogic Server instance to a different physical machine upon failure, either manually or automatically.

-

Service migration:

-

Manual Service Migration: The manual migration of pinned JTA and JMS-related services (for example, JMS server, SAF agent, path service, and custom store) after the host server instance fails. See Service Migration.

-

Automatic Service Migration: JMS-related services, singleton services, and the JTA Transaction Recovery Service can be configured to automatically migrate to another member server instance when a member server instance fails or is restarted. See Service Migration.

-

Cluster leader: One server instance in a cluster, elected by a majority of the server instances, that is responsible for maintaining the leasing information. See Non-database Consensus Leasing.

-

Cluster master: One server instance in a cluster that contains migratable servers acts as the cluster master and orchestrates the process of automatic server migration in the event of failure. Any Managed Server in a cluster can serve as the cluster master, whether it hosts pinned services or not. See Cluster Master Role in Whole Server Migration.

-

Singleton master: A lightweight singleton service that monitors other services that can be migrated automatically. The server instance that currently hosts the singleton master is responsible for starting and stopping the migration tasks associated with each migratable service. See Singleton Master.

-

Candidate machines: A user-defined list of machines within a cluster that can be a potential target for migration.

-

Target machines: A set of machines that are designated as allowable or preferred hosts for migratable servers.

-

Node Manager: A WebLogic Server utility used by the Administration Server or a standalone Node Manager client, to start and stop migratable servers. Node Manager is invoked by the cluster master to shut down and restart migratable servers, as necessary. For background information about Node Manager and how it fits into a WebLogic Server environment, see Node Manager Overview in Administering Node Manager for Oracle WebLogic Server.

-

Lease table: A database table in which migratable servers persist their state, and which the cluster master monitors to verify the health and liveness of migratable servers. See Leasing.

-

Administration Server: Used to configure migratable servers and target machines, to obtain the run-time state of migratable servers, and to orchestrate the manual migration process.

-

Floating IP address: An IP address that follows a server instance from one physical machine to another after migration.


Leasing

Leasing is the process WebLogic Server uses to manage services that are required to run on only one member of a cluster at a time.

Leasing ensures exclusive ownership of a cluster-wide entity. Within a cluster, there is a single owner of a lease. Additionally, leases can failover in case of server or cluster failure. This helps to avoid having a single point of failure.
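The exclusive-ownership idea can be illustrated with a minimal in-memory sketch. This is not the WebLogic Server implementation: the `LeaseTable` class, its method names, and the use of plain numeric timestamps are all assumptions made for illustration only.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Lease:
    owner: str         # server instance that currently holds the lease
    expires_at: float  # time (in seconds) at which the lease becomes stale


class LeaseTable:
    """Hypothetical in-memory lease table (illustration only)."""

    def __init__(self, duration: float) -> None:
        self.duration = duration
        self._leases: Dict[str, Lease] = {}

    def acquire(self, name: str, server: str, now: float) -> bool:
        """Grant the lease if it is free, expired, or already held by server."""
        lease = self._leases.get(name)
        if lease is not None and lease.owner != server and lease.expires_at > now:
            return False  # another live owner holds the lease
        self._leases[name] = Lease(server, now + self.duration)
        return True

    def renew(self, name: str, server: str, now: float) -> bool:
        """Extend the lease only if server still owns an unexpired lease."""
        lease = self._leases.get(name)
        if lease is None or lease.owner != server or lease.expires_at <= now:
            return False
        lease.expires_at = now + self.duration
        return True

    def owner(self, name: str, now: float) -> Optional[str]:
        """Return the current owner, or None if the lease is free or expired."""
        lease = self._leases.get(name)
        if lease is None or lease.expires_at <= now:
            return None
        return lease.owner
```

In this sketch, a second server can take over a lease only after the first owner stops renewing it, which is how a lease can fail over without ever having two owners at once.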

- Features That Use Leasing

- Types of Leasing

- Determining Which Type of Leasing To Use

- High Availability Database Leasing

- Non-Database Consensus Leasing


Features That Use Leasing

The following WebLogic Server features use leasing:

-

Automatic Whole Server Migration: It uses leasing to elect a cluster master. The cluster master is responsible for monitoring other cluster members and for restarting failed members hosted on other physical machines.

Leasing ensures that the cluster master is always running, but is only running on one server instance at a time within a cluster. See Cluster Master Role in Whole Server Migration.

-

Automatic Service Migration: JMS-related services, singleton services, and the JTA Transaction Recovery Service can be configured to automatically migrate from an unhealthy hosting server instance to a healthy active server instance with the help of the health monitoring subsystem. When the migratable target is migrated, the pinned service hosted by that target is also migrated. Migratable targets use leasing to accomplish automatic service migration. See Service Migration.

-

Singleton Services: A singleton service is a service running within a cluster that is available on only one member of the cluster at a time. Singleton services use leasing to accomplish this. See Singleton Master.

-

Job Scheduler: The Job Scheduler is a persistent timer that is used within a cluster. The Job Scheduler uses the timer master to load balance the timer across a cluster.

This feature requires an external database to maintain failover and replication information. However, you can use the non-database version, consensus leasing, with the Job Scheduler. For more information, see Non-Database Consensus Leasing.

Note:

Beyond basic configuration, most leasing functionality is handled internally by WebLogic Server.


Types of Leasing

WebLogic Server provides two types of leasing functionality, depending on your requirements and environment.

-

High-availability database leasing: This version of leasing requires a high-availability database to store leasing information. For information on general requirements and configuration, see High-availability Database Leasing.

-

Non-database consensus leasing: This version of leasing stores the leasing information in-memory within a cluster member. This version of leasing requires that all server instances in the cluster are started by Node Manager. See Non-database Consensus Leasing.

Within a WebLogic Server installation, you can use only one type of leasing. Although it is possible to implement multiple features that use leasing within your environment, each must use the same kind of leasing.

When switching from one leasing type to another, you must restart the entire cluster, not just the Administration Server. Changing the leasing type cannot be done dynamically.


Determining Which Type of Leasing To Use

The following considerations will help you determine which type of leasing is appropriate for your WebLogic Server environment:

-

High availability database leasing

The database leasing basis is useful in environments that are already invested in a high availability database, like Oracle Real Application Clusters (RAC), for features like JMS store recovery. The high availability database instance can also be configured to support leasing with minimal additional configuration. This is particularly useful if Node Manager is not running in the system.

-

Non-database consensus leasing

This type of leasing provides a leasing basis option (consensus) that does not require the use of a high availability database. This has a direct benefit in automatic whole server migration. Without the high availability database requirement, consensus leasing requires less configuration to enable automatic server migration.

Consensus leasing requires Node Manager to be configured and running. Automatic whole server migration also requires Node Manager for IP migration and server restart on another machine. Hence, consensus leasing works well since it does not impose additional requirements, but instead takes away an expensive one.

Note:

As a best practice, Oracle recommends configuring database leasing instead of consensus leasing.

High Availability Database Leasing

In this version of leasing, lease information is maintained within a table in a high availability database. A high availability database is required to ensure that the leasing information is always available to the server instances. Each member of the cluster must be able to connect to the database to access leasing information and to update and renew its leases. Server instances will fail if the database becomes unavailable and they are not able to renew their leases.

This method of leasing is useful for customers who already have a high availability database within their clustered environment. This method allows you to use leasing functionality without requiring Node Manager to manage server instances within your environment.
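As a rough illustration of table-based leasing, the following sketch uses an in-memory SQLite database in place of a high availability database. The table name, column names, and function names are hypothetical and do not reflect the schema that WebLogic Server uses; the point is only that acquiring and renewing a lease can be expressed as conditional updates against a shared table.

```python
import sqlite3


def create_lease_table(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: one row per leased entity.
    conn.execute(
        "CREATE TABLE lease ("
        "name TEXT PRIMARY KEY, owner TEXT NOT NULL, expires_at REAL NOT NULL)"
    )


def try_acquire(conn, name, owner, now, duration):
    """Claim the lease if it is missing, expired, or already held by owner."""
    with conn:  # commit on success, roll back on error
        cur = conn.execute(
            "UPDATE lease SET owner = ?, expires_at = ? "
            "WHERE name = ? AND (owner = ? OR expires_at <= ?)",
            (owner, now + duration, name, owner, now),
        )
        if cur.rowcount == 1:
            return True
        # No row was updated: either no row exists yet, or a live owner holds it.
        cur = conn.execute(
            "INSERT OR IGNORE INTO lease (name, owner, expires_at) VALUES (?, ?, ?)",
            (name, owner, now + duration),
        )
        return cur.rowcount == 1


def renew(conn, name, owner, now, duration):
    """Extend the lease only if owner still holds an unexpired lease."""
    with conn:
        cur = conn.execute(
            "UPDATE lease SET expires_at = ? "
            "WHERE name = ? AND owner = ? AND expires_at > ?",
            (now + duration, name, owner, now),
        )
    return cur.rowcount == 1
```

A server that cannot reach the database cannot run either statement, which corresponds to the case described above where a server instance fails because it is unable to renew its lease.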

The following procedures outline the steps required to configure your database for leasing.

Server Migration with Database Leasing on RAC Clusters

When using server migration with database leasing on RAC Clusters, Oracle recommends synchronizing all RAC nodes in the environment. If the nodes are not synchronized, it is possible that a Managed Server that is renewing a lease will evaluate that the value of the clock on the RAC node is greater than the timeout value of the leasing table. If the difference is more than 30 seconds, the server instance will fail and restart with the following log message:

<Mar 29, 2013 2:39:09 PM EDT> <Error> <Cluster> <BEA-000150> <Server failed to get a connection to the database in the past 60 seconds for lease renewal. Server will shut itself down.>

For more information, see Configuring Time Synchronization for the Cluster in the Installation and Upgrade Guide for Microsoft Windows.
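When scanning logs for this condition, a small parser can pick out the BEA-000150 message shown above. The sketch below assumes only the five angle-bracketed fields that appear in that sample line; actual server log files may contain additional fields, and the function and class names here are hypothetical.

```python
import re
from typing import NamedTuple, Optional

# Matches the five-field layout of the sample message only (an assumption).
LOG_PATTERN = re.compile(
    r"^<(?P<timestamp>[^>]*)> <(?P<severity>[^>]*)> <(?P<subsystem>[^>]*)> "
    r"<(?P<message_id>[^>]*)> <(?P<text>.*)>$"
)


class LogRecord(NamedTuple):
    timestamp: str
    severity: str
    subsystem: str
    message_id: str
    text: str


def parse_log_line(line: str) -> Optional[LogRecord]:
    """Split a log line into its fields, or return None if it does not match."""
    match = LOG_PATTERN.match(line.strip())
    if match is None:
        return None
    return LogRecord(**match.groupdict())


def is_lease_renewal_failure(line: str) -> bool:
    """True if the line carries the BEA-000150 lease renewal failure ID."""
    record = parse_log_line(line)
    return record is not None and record.message_id == "BEA-000150"
```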


Non-Database Consensus Leasing

Note:

Consensus leasing requires you to use Node Manager to control server instances within the cluster. Node Manager must be running on every machine hosting Managed Servers within the cluster, including any candidate machines for failed migratable servers. For more information, see Using Node Manager to Control Servers in Administering Node Manager for Oracle WebLogic Server.

In consensus leasing, there is no highly available database required. The cluster leader maintains the leases in-memory. All of the server instances renew their leases by contacting the cluster leader; however, the leasing table is replicated to other nodes of the cluster to provide failover.

The cluster leader is elected by all of the running server instances in the cluster. A server instance becomes a cluster leader only when it has received acceptance from the majority of the server instances. If Node Manager reports a server instance as shut down, the cluster leader assumes that server instance has accepted it as leader when counting the majority number of server instances.
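The vote count described above can be sketched as follows. The function name and parameters are hypothetical, and treating "majority" as strictly more than half of the cluster is an assumption of this sketch.

```python
from typing import Set


def has_leader_majority(
    cluster_size: int, accepted: Set[str], reported_shut_down: Set[str]
) -> bool:
    """Return True if a candidate has acceptance from a majority of the cluster.

    Servers that Node Manager reports as shut down are counted as having
    accepted the candidate, as described in the text above.
    """
    votes = len(accepted | reported_shut_down)
    return votes * 2 > cluster_size  # strict majority (assumption)
```

For example, in a five-server cluster, two explicit acceptances plus one server reported as shut down give three votes, which is enough; two acceptances alone are not.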

Consensus leasing requires a majority of server instances to continue functioning. During network partition, the server instances in the majority partition will continue to run while those in the minority partition will voluntarily shut down, as they cannot contact the cluster leader or elect a new cluster leader since they will not have the majority of server instances. If the partition results in an equal division of server instances, then the partition that contains the cluster leader will survive while the other one will fail. Consensus leasing depends on the ability to contact Node Manager to receive the status of the server instances it is managing to count them as part of the majority of reachable server instances. If Node Manager cannot be contacted, due to loss of network connectivity or a hardware failure, the server instances it manages are not counted as part of the majority, even if they are running.
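The partition rule above can be expressed as a short sketch. The function name is hypothetical, and each set is assumed to contain only the server instances that are counted (that is, whose Node Manager can be contacted). The case of an even split in which neither side contains the cluster leader is not covered by the text above, so this sketch returns None for it.

```python
from typing import Optional, Set


def surviving_partition(
    partition_a: Set[str], partition_b: Set[str], leader: Optional[str]
) -> Optional[Set[str]]:
    """Return the partition that keeps running after a two-way network split."""
    if len(partition_a) != len(partition_b):
        # The larger partition holds the majority and continues to run.
        return max(partition_a, partition_b, key=len)
    # Equal division: the partition that contains the cluster leader survives.
    if leader in partition_a:
        return partition_a
    if leader in partition_b:
        return partition_b
    return None  # not defined by the rule above
```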

Note:

If your cluster only contains two server instances, the cluster leader will be the majority partition if a network partition occurs. If the cluster leader fails, the surviving server instance will attempt to verify its status through Node Manager. If the surviving server instance is able to determine the status of the failed cluster leader, it assumes the role of cluster leader. If the surviving server instance cannot check the status of the cluster leader, due to machine failure or a network partition, it will voluntarily shut down as it cannot reliably determine if it is in the majority.

To avoid this scenario, Oracle recommends using a minimum of three server instances running on different machines.

If automatic server migration is enabled, server instances are required to contact the cluster leader and renew their leases periodically. Server instances will shut themselves down if they are unable to renew their leases. The failed server instances will automatically migrate to the machines in the majority partition.


Automatic Whole Server Migration

Learn the procedures for configuring automatic whole server migration and how whole server migration functions within a WebLogic Server environment.

- Preparing for Automatic Whole Server Migration

- Configuring Automatic Whole Server Migration

- Using High Availability Storage for State Data

- Server Migration Processes and Communications


Preparing for Automatic Whole Server Migration

Before configuring automatic whole server migration, be aware of the following requirements:

-

To use whole server migration on Oracle Solaris Zones, you must use Exclusive-IP zones as described in Whole Server Migration with Solaris 10 Zones Configuration Guide v1.0 available in My Oracle Support.

-

Each Managed Server uses the same subnet mask. Unicast and multicast communication among server instances requires each server instance to use the same subnet. Server migration will not work without configuring multicast or unicast communication.

See Using IP Multicast and One-to-Many Communication Using Unicast.

-

All server instances hosting migratable servers are time-synchronized. Although migration works when server instances are not time-synchronized, time-synchronized server instances are recommended in a clustered environment.

-

If you are using different operating system versions among migratable servers, ensure that all versions support identical functionality for ifconfig.

-

Automatic whole server migration requires Node Manager to be configured and running for IP migration and server restart on another machine.

-

The primary interface names used by migratable servers are the same. If your environment requires different interface names, then configure a local version of wlscontrol.sh for each migratable server.

For more information about wlscontrol.sh, see Using Node Manager to Control Servers in Administering Node Manager for Oracle WebLogic Server.

-

For a list of supported databases, see Databases Supporting WebLogic Server Features in Oracle Fusion Middleware Supported System Configurations.

-

There is no built-in mechanism for transferring files that a server instance depends on between machines. Using a disk that is accessible from all machines is the preferred way to ensure file availability. If you cannot share disks between server instances, you must ensure that the contents of domain_dir/bin are copied to each machine.

-

Ensure that the Node Manager security files are copied to each machine using the WLST command nmEnroll(). See Using Node Manager to Control Servers in Administering Node Manager for Oracle WebLogic Server.

-

Use high availability storage for state data. For highest reliability, use a shared storage solution that is itself highly available, for example, a storage area network (SAN). See Using High Availability Storage for State Data.

-

Use a central shared directory for persistent file store data. See Additional Requirement for High Availability File Stores in Administering the WebLogic Persistent Store.

-

For capacity planning in a production environment, remember that server startup during migration taxes CPU utilization. You cannot assume that because a machine can handle a certain number of server instances running concurrently that it also can handle that same number of server instances starting up on the same machine at the same time.


Configuring Automatic Whole Server Migration

Before configuring server migration, ensure that your environment meets the requirements outlined in Preparing for Automatic Whole Server Migration.

To configure server migration for a Managed Server within a cluster, perform the following tasks:


Using High Availability Storage for State Data

The server migration process migrates services, but not the state information associated with work in process at the time of failure.

To ensure high availability, it is critical that such state information remains available to the server instance and the services it hosts after migration. Otherwise, data about the work in process at the time of failure may be lost. State information maintained by a migratable server, such as the data contained in transaction logs, should be stored in a shared storage system that is accessible to any potential machine to which a failed migratable server might be migrated. For highest reliability, use a shared storage solution that is itself highly available (for example, a SAN). For more information, see Additional Requirement for High Availability File Stores in Administering the WebLogic Persistent Store.

In addition, if you are using a database to store leasing information, the lease table, described in the following sections, should also be stored in a high availability database. The lease table tracks the health and liveness of migratable servers. See Leasing.


Server Migration Processes and Communications

The following sections describe key processes in a cluster that contains migratable servers:

- Startup Process in a Cluster with Migratable Servers

- Automatic Whole Server Migration Process

- Manual Whole Server Migration Process

- Administration Server Role in Whole Server Migration

- Migratable Server Behavior in a Cluster

- Node Manager Role in Whole Server Migration

- Cluster Master Role in Whole Server Migration


Startup Process in a Cluster with Migratable Servers

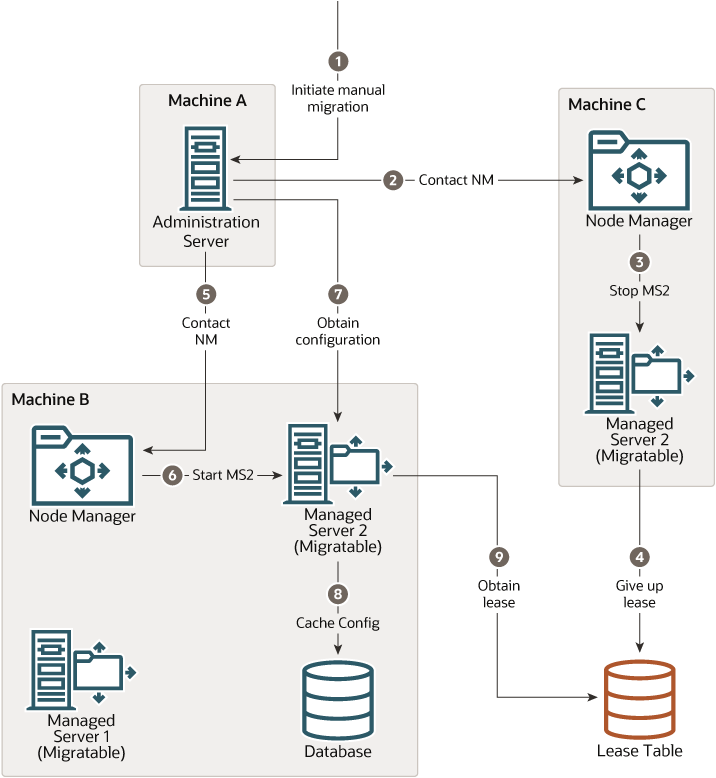

Figure 7-1 illustrates the process and communication that occurs during startup of a cluster that contains migratable servers.

The example cluster contains two Managed Servers, both of which are migratable. The Administration Server and the two Managed Servers each run on different machines. A fourth machine is available as a backup, if one of the migratable servers fails. Node Manager is running on the backup machine and on each machine with a running migratable server.

Figure 7-1 Startup of Cluster with Migratable Servers


The following key steps occur during startup of the cluster, as illustrated in Figure 7-1:

-

The administrator starts the cluster.

-

The Administration Server invokes Node Manager on Machines B and C to start Managed Servers 1 and 2, respectively. See Administration Server Role in Whole Server Migration.

-

The Node Manager instance on each machine starts the Managed Server that runs on that machine. See Node Manager Role in Whole Server Migration.

-

Managed Servers 1 and 2 contact the Administration Server for their configuration. See Migratable Server Behavior in a Cluster.

-

Managed Servers 1 and 2 cache the configuration with which they started.

-

Managed Servers 1 and 2 each obtain a migratable server lease in the lease table. Because Managed Server 1 starts first, it also obtains a cluster master lease. See Cluster Master Role in Whole Server Migration.

-

Managed Servers 1 and 2 periodically renew their leases in the lease table, proving their health and liveness.


Automatic Whole Server Migration Process

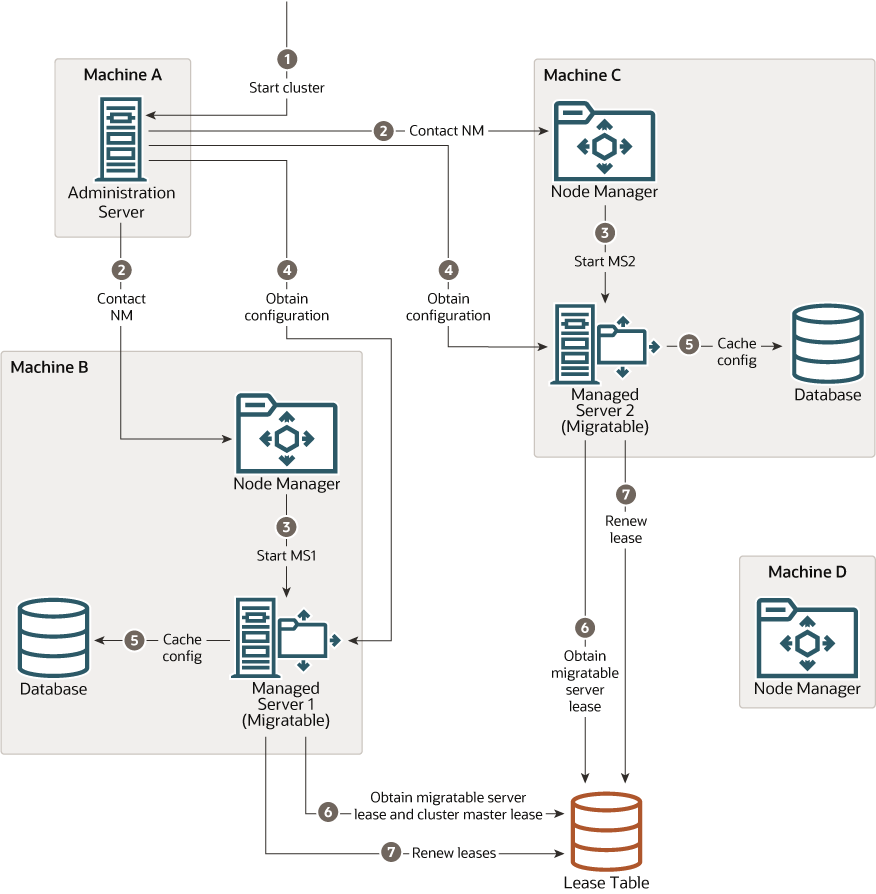

Figure 7-2 illustrates the automatic migration process after the failure of the machine hosting Managed Server 2.

Figure 7-2 Automatic Migration of a Failed Server


-

Machine C, which hosts Managed Server 2, fails.

-

Upon its next periodic review of the lease table, the cluster master detects that Managed Server 2's lease has expired. See Cluster Master Role in Whole Server Migration.

-

The cluster master tries to contact Node Manager on Machine C to restart Managed Server 2, but it fails as Machine C is unreachable.

Note:

If the Managed Server 2 lease had expired because it was hung, and Machine C was reachable, the cluster master would use Node Manager to restart Managed Server 2 on Machine C.

-

The cluster master contacts Node Manager on Machine D, which is configured as an available host for migratable servers in the cluster.

-

Node Manager on Machine D starts Managed Server 2. See Node Manager Role in Whole Server Migration.

-

Managed Server 2 starts and contacts the Administration Server to obtain its configuration.

-

Managed Server 2 caches the configuration with which it started.

-

Managed Server 2 obtains a migratable server lease.
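The decision the cluster master makes in the steps above (restart in place if possible, otherwise start the server on another available machine) can be sketched as follows. The `try_start` callback is a hypothetical stand-in for the call to Node Manager on a given machine, and the candidate machine list is assumed to be already ordered.

```python
from typing import Callable, Optional, Sequence


def recover_server(
    server: str,
    current_machine: str,
    candidate_machines: Sequence[str],
    try_start: Callable[[str, str], bool],
) -> Optional[str]:
    """Return the machine on which the server was started, or None on failure.

    try_start(machine, server) is a hypothetical callback that asks the
    Node Manager on that machine to start the server and reports success.
    """
    # First attempt: restart on the machine where the server was running.
    if try_start(current_machine, server):
        return current_machine
    # Otherwise migrate: try each remaining candidate machine in order.
    for machine in candidate_machines:
        if machine == current_machine:
            continue
        if try_start(machine, server):
            return machine
    return None
```

With Machine C unreachable and Machine D available, this sketch returns Machine D, matching the scenario in Figure 7-2. If Machine C were reachable (for example, because the server was only hung), it would return Machine C.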

During migration, the clients of the Managed Server that is migrating may experience a brief interruption in service; it may be necessary to reconnect. On Solaris and Linux operating systems, this can be done using the ifconfig command. The clients of a migrated server do not need to know the particular machine to which it has migrated.

When a machine that previously hosted a migrated server instance becomes available again, the migration can be reversed by moving the server instance back to its original host machine. This reversal is known as failback. WebLogic Server does not automate the failback process. An administrator can accomplish failback by manually restoring the server instance to its original host.

The general procedures for restoring a server instance to its original host are as follows:

-

Gracefully shut down the new instance of the server.

-

After you have restarted the failed machine, restart Node Manager and the Managed Server.

The procedures you follow will depend on your server instance and network environment.

Parent topic: Server Migration Processes and Communications

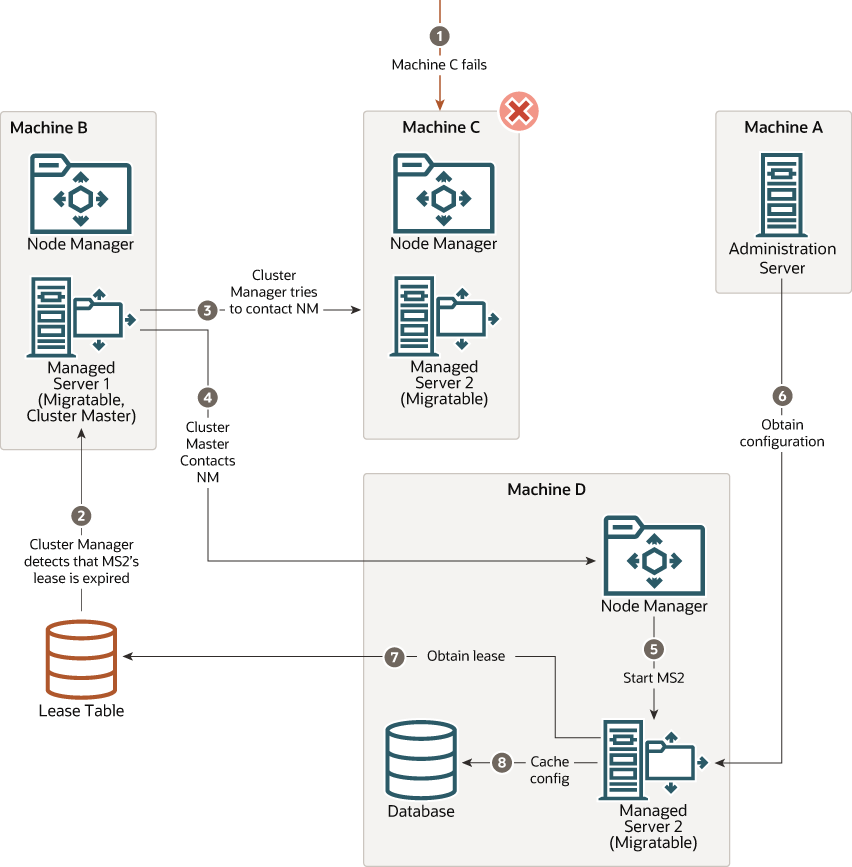

Manual Whole Server Migration Process

Figure 7-3 illustrates what happens when an administrator manually migrates a migratable server.

-

An administrator uses the WebLogic Server Administration Console to initiate the migration of Managed Server 2 from Machine C to Machine B.

-

The Administration Server contacts Node Manager on Machine C. See Administration Server Role in Whole Server Migration.

-

Node Manager on Machine C stops Managed Server 2.

-

Managed Server 2 removes its row from the lease table.

-

The Administration Server invokes Node Manager on Machine B.

-

Node Manager on Machine B starts Managed Server 2.

-

Managed Server 2 obtains its configuration from the Administration Server.

-

Managed Server 2 caches the configuration with which it started.

-

Managed Server 2 adds a row to the lease table.


Administration Server Role in Whole Server Migration

In a cluster that contains migratable servers, the Administration Server does the following:

-

Invokes Node Manager on each machine that hosts cluster members to start the migratable servers. This is a prerequisite for server migratability: if a server instance was not initially started by Node Manager, it cannot be migrated.

-

Invokes Node Manager on each machine involved in a manual migration process to stop and start the migratable server.

-

Invokes Node Manager on each machine that hosts cluster members to stop server instances during a normal shutdown. This is a prerequisite for server migratability: if a server instance is shut down directly, without using Node Manager, the cluster master detects that the server instance is not running and calls Node Manager to restart it.

In addition, the Administration Server provides its regular domain management functionality, persisting configuration updates issued by an administrator, and providing a run-time view of the domain, including the migratable servers it contains.


Migratable Server Behavior in a Cluster

A migratable server is a clustered Managed Server that has been configured as migratable. A migratable server has the following key behaviors:

-

If you are using a database to manage leasing information, during startup and restart by Node Manager, a migratable server adds a row to the lease table. The row for a migratable server contains a timestamp and the machine where it is running. See Leasing.

-

When using a database to manage leasing information, a migratable server adds a row to the database as a result of startup. It tries to take on the role of cluster master and succeeds, if it is the first server instance to join the cluster.

-

Periodically, the server renews its lease by updating the timestamp in the lease table.

By default, a migratable server renews its lease every 30,000 milliseconds, the product of two configurable ServerMBean properties:

-

HealthCheckIntervalMillis, which by default is 10,000.

-

HealthCheckPeriodsUntilFencing, which by default is 3.

-

If a migratable server fails to reach the lease table and renew its lease before the lease expires, it terminates as quickly as possible using a Java System.exit. In this case, the lease table still contains a row for that server instance. For information about how this relates to automatic migration, see Cluster Master Role in Whole Server Migration.

-

During operation, a migratable server listens for heartbeats from the cluster master. When it detects that the cluster master is not sending heartbeats, it attempts to take over the role of cluster master and succeeds if no other server instance has claimed that role.
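The default renewal interval stated above is simple arithmetic on the two properties. The sketch below reproduces it and adds a helper for the termination rule; the function names are hypothetical, and the lease expiry time is passed in explicitly because the text above does not state how it is derived.

```python
def renewal_interval_millis(
    health_check_interval_millis: int = 10_000,
    health_check_periods_until_fencing: int = 3,
) -> int:
    """Lease renewal interval: the product of the two configurable properties."""
    return health_check_interval_millis * health_check_periods_until_fencing


def should_terminate(renewed: bool, now_millis: int, lease_expiry_millis: int) -> bool:
    """A server that has not renewed its lease by the expiry time terminates.

    lease_expiry_millis is supplied by the caller (an assumption of this sketch).
    """
    return not renewed and now_millis >= lease_expiry_millis
```

With the defaults, `renewal_interval_millis()` evaluates to 10,000 × 3 = 30,000 milliseconds. Halving HealthCheckIntervalMillis to 5,000 would give 15,000 milliseconds.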

Note:

During server migration, remember that server startup taxes CPU utilization. You cannot assume that because a machine can support a certain number of server instances running concurrently, it can also support that same number of server instances starting up at the same time.


Node Manager Role in Whole Server Migration

The use of Node Manager is required for server migration. It must run on each machine that hosts, or is intended to host, migratable servers.

Node Manager supports server migration in the following ways:

-

Node Manager must be used for initial startup of migratable servers.

When you initiate the startup of a Managed Server from the WebLogic Server Administration Console, the Administration Server uses Node Manager to start the server instance. You can also invoke Node Manager to start the server instance using the standalone Node Manager client; however, the Administration Server must be available so that the Managed Server can obtain its configuration.

Note:

Migration of a server instance that is not initially started with Node Manager will fail.

-

Node Manager must be used to suspend, shut down, or force shut down migratable servers.

-

Node Manager tries to restart a migratable server whose lease has expired on the machine where it was running at the time of failure.

Node Manager performs the steps in the server migration process by running customizable shell scripts that are provided with WebLogic Server. These scripts can start, restart and stop server instances, migrate IP addresses, and mount and unmount disks. The scripts are available for Solaris and Linux.

-

In an automatic migration, the cluster master invokes Node Manager to perform the migration.

-

In a manual migration, the Administration Server invokes Node Manager to perform the migration.


Cluster Master Role in Whole Server Migration

In a cluster that contains migratable servers, one server instance acts as the cluster master. Its role is to orchestrate the server migration process. Any server instance in the cluster can serve as the cluster master. When you start a cluster that contains migratable servers, the first server instance to join the cluster becomes the cluster master and starts the cluster manager service. If a cluster does not include at least one migratable server, it does not require a cluster master, and the cluster manager service does not start. In the absence of a cluster master, migratable servers can continue to operate, but server migration is not possible. The cluster master serves the following key functions:

- Issues periodic heartbeats to the other server instances in the cluster.

- Periodically reads the lease table to verify that each migratable server has a current lease. An expired lease indicates to the cluster master that the migratable server should be restarted.

- Upon determining that a migratable server's lease is expired, the cluster master waits for a period specified by the FencingGracePeriodMillis on the ClusterMBean and then tries to invoke the Node Manager process on the machine that hosts the migratable server whose lease is expired, in order to restart the migratable server.

- If unable to restart a migratable server whose lease has expired on its current machine, the cluster master selects a target machine in the following fashion:

  - If you have configured a list of preferred destination machines for the migratable server, the cluster master chooses a machine on that list, in the order the machines are listed.

  - Otherwise, the cluster master chooses a machine on the list of those configured as available for hosting migratable servers in the cluster.

  A list of machines that can host migratable servers can be configured at two levels: for the cluster as a whole and for an individual migratable server. You can define a machine list at both levels, but it is necessary to define a machine list on at least one level.

- To accomplish the migration of a server instance to a new machine, the cluster master invokes the Node Manager process on the target machine to create a process for the server instance.

  The time required to perform the migration depends on the server configuration and startup time.

  - The maximum time taken for the cluster master to restart the migratable server is (HealthCheckPeriodsUntilFencing * HealthCheckIntervalMillis) + FencingGracePeriodMillis.

  - The total time before the server instance becomes available for client requests depends on the server startup time and the application deployment time.
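As a worked illustration of the restart-time bound given in the list above, the following sketch evaluates the formula for one set of values. The function name is hypothetical, and the numbers are assumptions chosen for the example; check the actual ClusterMBean settings in your own domain before relying on any figure.

```python
def max_restart_delay_millis(health_check_periods_until_fencing,
                             health_check_interval_millis,
                             fencing_grace_period_millis):
    """Evaluate (HealthCheckPeriodsUntilFencing * HealthCheckIntervalMillis)
    + FencingGracePeriodMillis, returning milliseconds."""
    return (health_check_periods_until_fencing * health_check_interval_millis
            + fencing_grace_period_millis)


# Illustrative values only (assumptions for this example):
# 6 health check periods of 10,000 ms each, plus a 30,000 ms grace period.
delay = max_restart_delay_millis(6, 10000, 30000)
print(delay)  # 90000 ms, that is, 90 seconds
```

This bound covers only the time until the cluster master attempts the restart. Server startup and application deployment time come on top of it.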


Whole Server Migration with Dynamic and Mixed Clusters

WebLogic Server supports whole server migration with dynamic and mixed clusters.

When a dynamic server in a dynamic cluster fails, the server instance is migrated to a different physical machine in the same way as a configured server in a configured or mixed cluster. While configuration differs depending on the cluster type, whole server migration behavior is the same for all clusters. See Dynamic Clusters.

Automatic whole server migration uses leasing to elect a cluster master, which is responsible for monitoring other cluster members and for restarting failed members hosted on other physical machines. You configure leasing in the cluster configuration. See Leasing.

- Configuring Whole Server Migration with Dynamic Clusters

- Configuring Whole Server Migration with Mixed Clusters


Configuring Whole Server Migration with Dynamic Clusters

When configuring automatic whole server migration for configured clusters, select the individual server instances you want to migrate. You can also choose a subset of available machines to which you want to migrate server instances upon failure.

For a dynamic cluster, you can enable or disable automatic whole server migration in the server template. A dynamic cluster uses a single server template to define its configuration, and all dynamic server instances within the dynamic cluster inherit the template configuration. If you enable automatic whole server migration in the server template for a dynamic cluster, all dynamic server instances based on that server template are then enabled for automatic whole server migration. You cannot select individual dynamic server instances to migrate.

Also, you cannot choose the machines to which you want to migrate. After enabling automatic whole server migration in the server template for a dynamic cluster, all machines that are available to use for migration are automatically selected.

You cannot limit the list of candidate machines for migration that the dynamic cluster specifies, as the server template does not list candidate machines. The list of candidate machines for each dynamic server is calculated as follows:

ClusterMBean.CandidateMachinesForMigratableServers = { M1, M2, M3 }

dyn-server-1.CandidateMachines = { M1, M2, M3 }

dyn-server-2.CandidateMachines = { M2, M3, M1 }

dyn-server-3.CandidateMachines = { M3, M1, M2 }

dyn-server-4.CandidateMachines = { M1, M2, M3 }
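The listing above is consistent with a simple rotation of the cluster-level machine list by the index of each dynamic server. The sketch below reproduces that pattern. It is inferred from the example only; the function name is hypothetical, and this is not presented as the actual WebLogic Server implementation.

```python
def candidate_machines(cluster_machines, server_index):
    """Rotate the cluster-level machine list left by (server_index - 1),
    wrapping around when the index exceeds the number of machines."""
    offset = (server_index - 1) % len(cluster_machines)
    return cluster_machines[offset:] + cluster_machines[:offset]


machines = ["M1", "M2", "M3"]
for index in range(1, 5):
    # Prints one line per dynamic server, dyn-server-1 through dyn-server-4.
    print("dyn-server-%d" % index, candidate_machines(machines, index))
```

Under this assumption, each dynamic server starts from a different first-choice machine, which spreads migrated servers across the available machines rather than sending them all to the same one.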

To enable automatic whole server migration for a dynamic cluster using the WebLogic Server Administration Console:

- In the left pane of the WebLogic Server Administration Console, select Environment > Clusters > Server Templates.

- In the Server Templates table, select the server template for your dynamic cluster.

- Select Configuration > Migration.

- Select the Automatic Server Migration Enabled attribute.
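The same setting can probably be scripted with WLST instead of the console. The following is a minimal sketch, assuming that the Automatic Server Migration Enabled checkbox corresponds to the AutoMigrationEnabled attribute on the server template MBean. The function name, template name, URL, and credentials are placeholders. The script runs only inside the WLST shell, where connect(), edit(), startEdit(), cd(), cmo, save(), activate(), and disconnect() are built in.

```python
# WLST (Jython) sketch. Run it inside the WLST shell, not a plain Python
# interpreter: the calls below are WLST built-ins, not importable modules.
def enable_auto_migration(template_name, admin_url, username, password):
    connect(username, password, admin_url)   # connect to the Administration Server
    edit()                                   # switch to the edit MBean tree
    startEdit()                              # open an edit session
    cd('/ServerTemplates/' + template_name)  # navigate to the server template
    # Assumption: this attribute backs the console checkbox named above.
    cmo.setAutoMigrationEnabled(True)
    save()
    activate()                               # activate the pending change
    disconnect()
```

For example, enable_auto_migration('my-dynamic-template', 't3://adminhost:7001', 'weblogic', '<password>') would enable the flag on a template named my-dynamic-template (all values hypothetical). The sketch sets only this one flag; leasing and Node Manager must still be configured as described elsewhere in this chapter.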


Configuring Whole Server Migration with Mixed Clusters

A mixed cluster contains both dynamic and configured servers. To enable automatic whole server migration for a mixed cluster:
