8.15 Realtime Message Ingestion to Apache Kafka with Oracle GoldenGate for Distributed Applications and Analytics

Overview

This Quickstart covers a step-by-step process showing how to ingest messages to Apache Kafka in real-time with Oracle GoldenGate for Distributed Applications and Analytics (GG for DAA).

Apache Kafka is an open-source platform designed for handling real-time data streams. It allows applications to publish and subscribe to continuous flows of data, making it ideal for building high-performance data pipelines and streaming applications.

GG for DAA connects to Apache Kafka through the Kafka Handler and the Kafka Connect Handler. It reads the source operations from the trail file, formats them, maps them to Kafka topics, and delivers them.

- Prerequisites

- Install Dependency Files

- Create Kafka Producer Properties File

- Create a Replicat in Oracle GoldenGate for Distributed Applications and Analytics


8.15.1 Prerequisites

To successfully complete this Quickstart, you must have the following:

- An Apache Kafka node up and running.

This Quickstart uses a sample trail file (named tr) that is shipped with GG for DAA. If you want to continue with the sample trail file, it is located at GG_HOME/opt/AdapterExamples/trail/ in your GG for DAA instance.

8.15.2 Install Dependency Files

GG for DAA uses the Java client libraries provided by Apache Kafka. You can download them using the Dependency Downloader utility shipped with GG for DAA. Dependency Downloader is a set of shell scripts that download dependency jar files from Maven and other repositories.

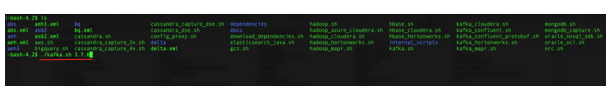

- In your GG for DAA VM, go to the Dependency Downloader utility. It is located at GG_HOME/opt/DependencyDownloader/. Locate kafka.sh.

- Run kafka.sh with the required version. You can check the version and reported vulnerabilities in Maven Central. This document uses 3.7.0, which was the latest version when this Quickstart was published.

Figure 8-107 Run kafka.sh with the required version.

- A new directory is created in GG_HOME/opt/DependencyDownloader/dependencies, named as <kafka_version>. For example: /u01/app/ogg/opt/DependencyDownloader/dependencies/kafka_3.7.0.
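The directory created in this step is the one that gg.classpath must later point to. As a minimal sketch, assuming the kafka_<version> naming shown in the example path above, the following builds the wildcard classpath entry for a given installation. The helper name kafka_classpath is hypothetical and not part of GG for DAA.

```python
import posixpath


def kafka_classpath(gg_home: str, kafka_version: str) -> str:
    """Build the wildcard classpath entry for the downloaded Kafka client jars.

    Assumes the Dependency Downloader names the directory kafka_<version>,
    as in the example path in this Quickstart.
    """
    dep_dir = posixpath.join(
        gg_home,
        "opt",
        "DependencyDownloader",
        "dependencies",
        f"kafka_{kafka_version}",
    )
    # The trailing /* tells the JVM to load every jar in the directory.
    return posixpath.join(dep_dir, "*")


print(kafka_classpath("/u01/app/ogg", "3.7.0"))
# prints /u01/app/ogg/opt/DependencyDownloader/dependencies/kafka_3.7.0/*
```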

8.15.3 Create Kafka Producer Properties File

In the GG for DAA instance, create a producer.properties file and configure it as follows:

    bootstrap.servers=localhost:9092
    acks = 1
    compression.type = gzip
    reconnect.backoff.ms = 1000
    value.serializer = org.apache.kafka.common.serialization.ByteArraySerializer
    key.serializer = org.apache.kafka.common.serialization.ByteArraySerializer
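Before the Replicat is created, it can help to sanity-check the producer properties. The sketch below is an illustration only: it parses key=value lines in a simplified way (it does not implement the full Java properties syntax) and reports which of three keys are missing. Treating bootstrap.servers, key.serializer, and value.serializer as required is an assumption based on the sample file above, and the function names are hypothetical.

```python
# Keys treated as required in this sketch (an assumption, see the lead-in).
REQUIRED_KEYS = ("bootstrap.servers", "key.serializer", "value.serializer")


def load_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blanks and comment lines."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key=value line; ignored in this simplified parser
        props[key.strip()] = value.strip()
    return props


def missing_keys(props: dict) -> list:
    """Return the required keys that are absent from the parsed properties."""
    return [key for key in REQUIRED_KEYS if key not in props]


SAMPLE = """\
bootstrap.servers=localhost:9092
acks = 1
compression.type = gzip
reconnect.backoff.ms = 1000
value.serializer = org.apache.kafka.common.serialization.ByteArraySerializer
key.serializer = org.apache.kafka.common.serialization.ByteArraySerializer
"""

props = load_properties(SAMPLE)
print("missing:", missing_keys(props))
```

To check a real file, read its contents and pass the text to load_properties instead of SAMPLE.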

8.15.4 Create a Replicat in Oracle GoldenGate for Distributed Applications and Analytics

To create a replicat in Oracle GoldenGate for Distributed Applications and Analytics (GG for DAA):

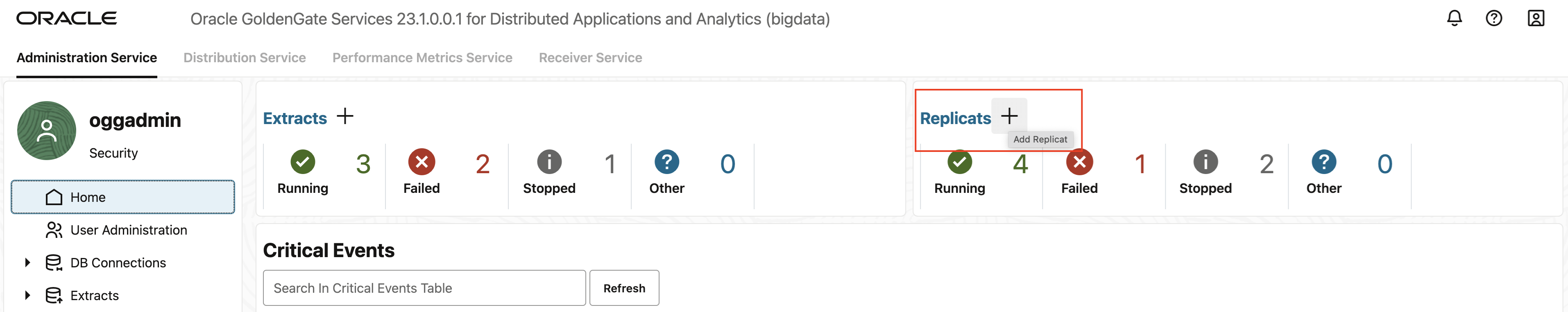

- In the GG for DAA UI, in the Administration Service tab, click the + sign to add a replicat.

Figure 8-108 Click + in the Administration Service tab.

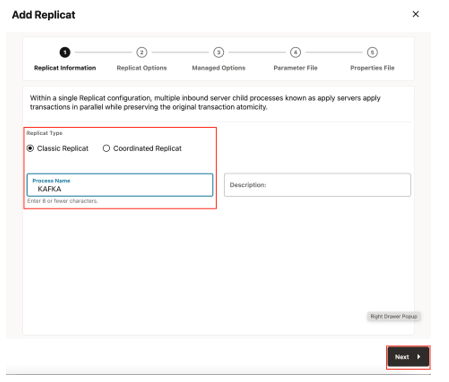

- Select Classic Replicat as the Replicat Type and click Next. There are two different Replicat types available: Classic and Coordinated. Classic Replicat is a single-threaded process, whereas Coordinated Replicat is a multithreaded one that applies transactions in parallel.

Figure 8-109 Add Replicat

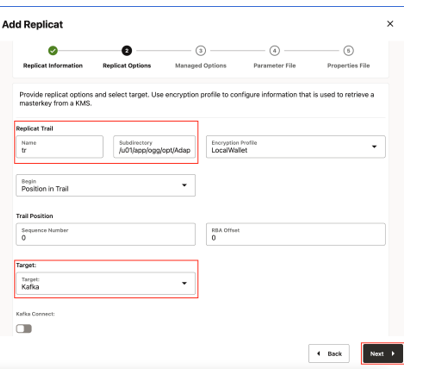

- Enter the basic information, and click Next:

- Target: Kafka

Figure 8-110 Replicat Options

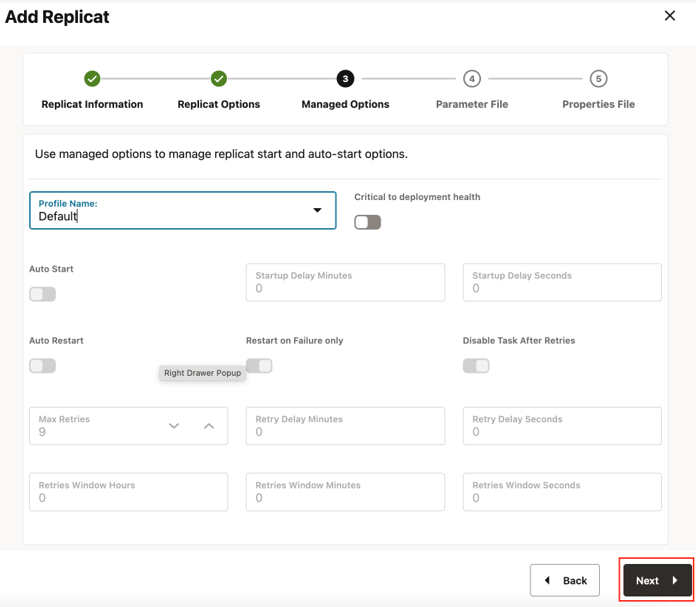

- Leave Managed Options as is and click Next.

Figure 8-111 Managed Options

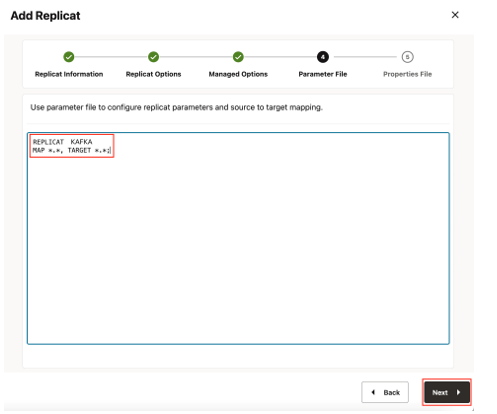

- Enter Parameter File details and click Next. In the Parameter File, you can specify source to target mapping or leave it as-is with a wildcard selection.

Figure 8-112 Parameter File

- In the Properties file, update the properties marked as TODO and click Create and Run:

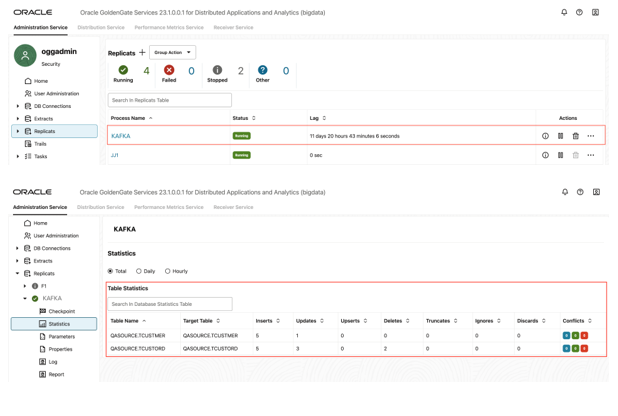

    # Properties file for Replicat KFK
    #Kafka Handler Template
    gg.handlerlist=kafkahandler
    gg.handler.kafkahandler.type=kafka
    #TODO: Set the name of the Kafka producer properties file.
    gg.handler.kafkahandler.kafkaProducerConfigFile=/path_to/producer.properties
    #TODO: Set the template for resolving the topic name.
    gg.handler.kafkahandler.topicMappingTemplate=<target_topic_name>
    gg.handler.kafkahandler.keyMappingTemplate=${primaryKeys}
    gg.handler.kafkahandler.mode=op
    gg.handler.kafkahandler.format=json
    gg.handler.kafkahandler.format.metaColumnsTemplate=${objectname[table]},${optype[op_type]},${timestamp[op_ts]},${currenttimestamp[current_ts]},${position[pos]}
    #TODO: Set the location of the Kafka client libraries.
    gg.classpath=path_to/dependencies/kafka_3.7.0/*
    jvm.bootoptions=-Xmx512m -Xms32m

GG for DAA supports dynamic topic mapping by template keywords. For example, if you assign topicMappingTemplate as ${tablename}, then GG for DAA creates one topic per source table, named after the table, and maps the events to these topics. Oracle recommends using keyMappingTemplate=${primaryKeys}, so that GG for DAA sends the source operations with the same primary key to the same partition. This guarantees that the order of the source operations is maintained while delivering to Apache Kafka.

- If the replicat starts successfully, it will be in the running state. You can go to action/details/statistics to see the replication statistics.

Figure 8-113 Replicat Statistics

- You can go to your Kafka topic and check the messages. For more information, see Apache Kafka.
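The ordering guarantee behind the keyMappingTemplate=${primaryKeys} recommendation follows from how keyed messages are placed: the partition is derived from a hash of the message key, so equal keys always land in the same partition. The sketch below illustrates that idea only. It uses zlib.crc32 as a stand-in hash (Kafka's default partitioner uses a murmur2 hash, so the actual partition numbers differ), and the primary key values and operation names are made up for the example.

```python
import zlib


def pick_partition(key: bytes, num_partitions: int) -> int:
    """Map a message key to a partition by hashing it.

    crc32 is a stand-in for illustration; it is not Kafka's hash function.
    """
    return zlib.crc32(key) % num_partitions


# Hypothetical stream of source operations: (primary key, operation type).
operations = [
    ("1001", "INSERT"),
    ("1002", "INSERT"),
    ("1001", "UPDATE"),
    ("1001", "DELETE"),
]

# Append each operation to the log of the partition its key hashes to.
partitions = {}
for pk, op in operations:
    partition = pick_partition(pk.encode("utf-8"), 3)
    partitions.setdefault(partition, []).append((pk, op))

for partition, log in sorted(partitions.items()):
    print(partition, log)
```

Because every operation for key 1001 goes to one partition, a consumer of that partition sees INSERT, UPDATE, and DELETE in their original order. Operations for different keys may land in different partitions and have no ordering relative to each other.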

Note:

- If the target Kafka topic does not exist, it is created automatically, provided that automatic topic creation (the auto.create.topics.enable broker setting) is enabled in the Kafka cluster. You can use the template keywords to dynamically assign topic names.

- You can refer to this blog for improving the performance of the Apache Kafka replication.
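To make the template keywords mentioned in the notes more concrete, here is a minimal sketch of how a topic mapping template resolves to a topic name for each source table. It uses Python's string.Template, which happens to share the ${keyword} syntax. It is not how GG for DAA implements template resolution, it only handles the ${tablename} keyword, and the table names are hypothetical.

```python
from string import Template


def resolve_topic(template: str, table_name: str) -> str:
    """Substitute the ${tablename} keyword in a topic mapping template."""
    return Template(template).substitute(tablename=table_name)


# Hypothetical source tables.
for table in ("CUSTOMERS", "ORDERS"):
    # Dynamic template: one topic per source table.
    print(table, "->", resolve_topic("${tablename}", table))
    # Static template: every table maps to the same topic.
    print(table, "->", resolve_topic("all_changes", table))
```

With the dynamic template each table resolves to its own topic, while a static name sends the operations from all tables to a single topic.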