3 Configuring Oracle GoldenGate in a Multitenant Container Database

Topics:

Requirements for Configuring Container Databases for Oracle GoldenGate

Here are configuration requirements to enable replication to and from multitenant container databases:

-

The different pluggable databases in the multitenant container database can have different character sets. Oracle GoldenGate captures data from any multitenant database with different character sets into one trail file and replicates the data without corruption due to using different character sets.

-

Oracle GoldenGate on-premise 21c and higher with Oracle Database 21c support the per-PDB-Extract feature. You can create a per-PDB Extract connecting to the local

ggadminuser in a specific pluggable database to create and register the Extract. You do not need the container clause or theSOURCECATALOGto set up the per-PDB Extract. Setting up the Extract as a per-PDB-Extract matches exactly the same procedure as a Non-CDB.If required, you can also set up the root-level Extract connecting to the common

c##ggadminuser in the root container to create and register the Extract for specific pluggable databases using the container. See Granting User Privileges for Oracle Database 21c and Lower depending on the Oracle database installation that you need to configure.See Establishing Oracle GoldenGate Credentials for how to create a user for the Oracle GoldenGate processes and grant the correct privileges.

-

To support source CDB 12.2, Extract must specify the trail format as release 12.3. Due to changes in the redo logs, to capture from a multitenant database that is Oracle 12.2 or higher, the trail format release must be 12.3 or higher.

-

The

dbms_goldengate_auth.grant_admin_privilegepackage grants the appropriate privileges for capture and apply within a multitenant container database. This includes thecontainerparameter, which must be set toALL, as shown in the following example:EXCE DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE ('c##ggadmin',container=>'all') -

DDL replication works as a normal replication for multitenant databases. However, DDL on the root container should not be replicated because Replicats must not connect to the root container, only to PDBs.

You can also see Quickstart Your Data Replication with Oracle GoldenGate Microservices Architecture for a list of required privileges and how to configure the container and pluggable databases for Oracle GoldenGate.

Flush Sequence for Multitenant Container Database

FLUSH SEQUENCE immediately after you start Extract for

the first time during an initial synchronization or a re-synchronization. This command

updates an Oracle sequence, so that initial redo records are available at the time that

Extract starts to capture transaction data. Normally, redo is not generated until the

current cache is exhausted. The flush gives Replicat an initial start point with which

to synchronize to the correct sequence value on the target system. From then on, Extract

can use the redo that is associated with the usual cache reservation of sequence

values.

-

The following Oracle procedures are used by

FLUSH SEQUENCE:Database Procedure User and Privileges Source

updateSequenceGrants

EXECUTEto the owner of the Oracle GoldenGate DDL objects, or other selected user if not using DDL support.Target

replicateSequenceGrants

EXECUTEto the Oracle GoldenGate Replicat user.The

sequence.sqlscript installs these procedures. Normally, this script is run as part of the Oracle GoldenGate installation process, but make certain that was done before usingFLUSH SEQUENCE. Ifsequence.sqlwas not run, the flush fails and an error message similar to the following is generated:Cannot flush sequence {0}. Refer to the Oracle GoldenGate for Oracle documentation for instructions on how to set up and run the sequence.sql script. Error {1}. -

The

GLOBALSfile must contain aGGSCHEMAparameter that specifies the schema in which the procedures are installed. This user must haveCONNECT,RESOURCE, andDBAprivileges. -

Before using

FLUSH SEQUENCE, issue theDBLOGINcommand as the database user that hasEXECUTEprivilege on theupdateSequenceprocedure. If logging into a multitenant container database, log into the pluggable database that contains the sequence that is to be flushed.

FLUSH SEQUENCE must be issued at the PDB level, to create

an Oracle GoldenGate user in each PDB for which the sequence replication is required.

Use DBLOGIN to log into that PDB, and run the FLUSH

SEQUENCE command.

It is recommended that you use the same schema in each PDB, so that it works

with the GGSCHEMA GLOBALS parameter file. Here is an example:

Environment Information OGG 21.3 Oracle 21c to Oracle 21c Replication, Integrated Extract, Parallel Replicat

Source: CDB NORTH, PDB DBEAST

Target: CDB SOUTH, PDB DBWEST

Source OGG Configuration

Container User: C##GGADMIN

PDB User for Sequences: GGATE

sqlplus / as sysdbao

ALTER SESSION SESSION SET CONTAINER=CERTMISSN;

CREATE USER ggadmin CREATE USER ggate IDENTIFIED BY password DEFAULT TABLESPACE USERS TEMPORARY TABLESPACE TEMP QUOTA UNLIMITED ON USERS CONTAINER=CURRENT;Run @sequence

sqlplus / as sysdba

SQL> ALTER SESSION SET CONTAINER=PDBEAST;

SQL> @sequence

GGADMIN GLOBALS

GGSCHEMA GGADMIN

FLUSH

SEQUENCE:DBLOGIN USERIDALIAS ggeast DOMAIN OracleGoldenGate

FLUSH SEQUENCE PDBEAST.HR.*

PDB User: ggadmin

Run @sequence

sqlplus / as sysdba

SQL> ALTER SESSION SET CONTAINER =PDBWEST;

SQL> @sequenceWhen prompted enter ggadmin.

This also applies to the @sequence.sql script, which must

also be run on each PDB from where you are going to capture.

Using the Root Container Extract from PDB

Oracle GoldenGate 21c and higher releases allows capturing from a PDB in

a multitenant container Oracle database. To capture from a multitenant database, you

must use an Extract that is configured at the root level using a

c## account. To apply data into a multitenant database, a

separate Replicat is needed for each PDB, because a Replicat connects at the PDB

level and doesn't have access to objects outside of that PDB

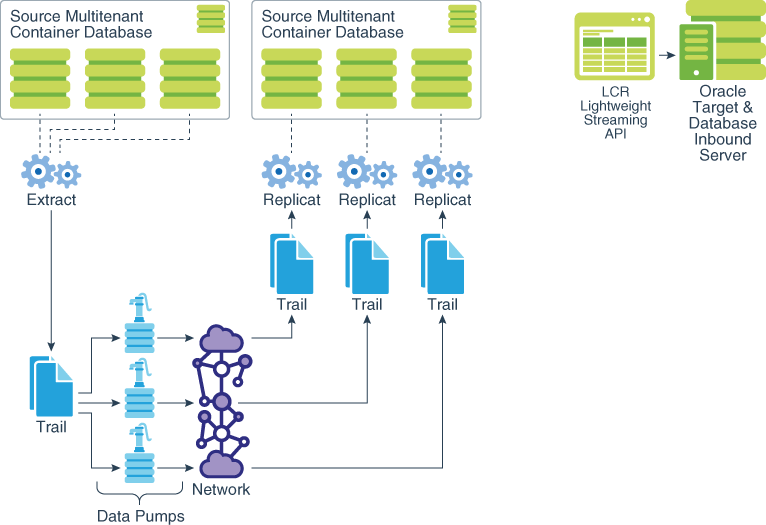

One Extract group can capture from multiple pluggable databases to a single trail. In the parameter file, source objects must be specified in TABLE and SEQUENCE statements with their fully qualified three-part names in the format of container.schema.object.

As an alternative to specifying three-part names, you can specify a default pluggable database with the SOURCECATALOG parameter, and then specify only the schema.object in subsequent TABLE or SEQUENCE parameters. You can use multiple instances of this configuration to handle multiple source pluggable databases. For example:

SOURCECATALOG DBEAST

TABLE hr.employees;

SEQUENCE hr.seq;

SOURCECATALOG DBWEST

TABLE hr.employees;

SEQUENCE hr.seq;You can also refer to the Quickstart Bidirectional Replication for steps to perform in a bidirectional set up for Oracle GoldenGate on Oracle multitenant database.

Topics:

- About Extract

The Oracle GoldenGate Extract process interacts directly with a database logmining server to receive data changes in the form of logical change records (LCRs). - Configuring Primary Extract

The mining database from which the primary Extract captures log change records from the logmining server, can be either local or downstream from the source database. - Setting up the Auto-Capture Mode

- Mining Mode Toggling

About Extract

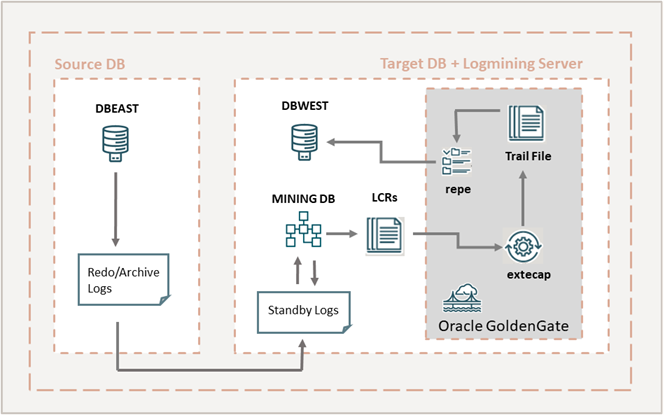

The Oracle GoldenGate Extract process interacts directly with a database logmining server to receive data changes in the form of logical change records (LCRs).

The following diagram illustrates the configuration of Extract.

Description of the illustration downstream_ext.png

Some of the additional features of Oracle GoldenGate Extract are:

-

Extract is fully integrated with the database, allowing seamless interoperability between features such as Oracle RAC, ASM, and TDE.

-

Extract uses the database logmining server to access the Oracle redo stream, with the benefit of being able to automatically switch between different copies of archive logs or different mirrored versions of the online logs. Thus, capture can transparently handle the absence of a log file caused by disk corruption, hardware failure, or operator error, assuming that additional copies of the archived and online logs are available

-

Extract enables faster filtering of tables.

-

Extract handles point-in-time recovery and RAC integration more efficiently.

-

Extract features integrated log management. The Oracle Recovery Manager (RMAN) automatically retains the archive logs that are needed by Extract.

-

Extract supports capture from a multitenant container database and from per-PDB capture mode.

-

Extract and Replicat (integrated) are both database objects, so the naming of the objects follow the same rules as other Oracle database objects. See Specifying Object Names in Oracle GoldenGate Input in Oracle GoldenGate Microservices Documentation.

-

When Extract is running from a remote system, Oracle GoldenGate automatically enables cross endian interoperability. This implies that if the endian value where Extract is running is different from the endian value where the Oracle database is running, then the cross endian support is automatically enabled. For cross endian Extract to work, the compatibility parameter of the source database must be 11.2.0.4 or higher.

Extract Deployment Options

The deployment options for Extract are described in this section and depend on where the mining database is deployed. The mining database is the one where the logmining server is deployed.

-

Local deployment: For a local deployment, the source database and the mining database are the same. The source database is the database for which you want to mine the redo stream to capture changes, and also where you deploy the logmining server. Because integrated capture is fully integrated with the database, this mode does not require any special database setup.

-

Downstream deployment: In a downstream deployment, the source and mining databases are different databases. You create the logmining server at the downstream database. You configure redo transport at the source database to ship the redo logs to the downstream mining database for capture at that location. Using a downstream mining server for capture may be desirable to offload the capture overhead and any other overhead from transformation or other processing from the production server, but requires log shipping and other configuration.

When using a downstream mining configuration, the source database and mining database must be of the same platform. For example, if the source database is running on Windows 64-bit, the downstream database must also be on a Windows 64-bit platform. See Configuring a Downstream Mining Database to configure a downstream mining database.

-

Downstream sourceless Extract deployment: In the Extract parameter file, replace the

USERIDparameter withNOUSERID. You must useTRANLOGOPTIONS MININGUSER. Extract obtains all required information from the downstream mining database. Extract is not dependent on any connection to the source database. The source database can be shutdown and restarted without affecting Extract.Extract will abend if it encounters redo changes that require data to be fetched from the source database.

To capture any tables that are listed as

ID KEYin thedba_goldengate_support_modeview, you need to have aFETCHUSERIDorFETCHUSERIDALIASconnection to support the tables. Tables that are listed asFULLdo not require this. We also need to state that if a customer wants to performSQLEXECoperations that perform a query or execute a stored procedure they cannot use this method as it is incompatible withNOUSERIDbecauseSQLEXECworks withUSERIDorUSERIDALIAS.

Parent topic: About Extract

Configuring Primary Extract

The mining database from which the primary Extract captures log change records from the logmining server, can be either local or downstream from the source database.

These steps configure the primary Extract to capture transaction data from either location. See Configuring a Downstream Mining Database and see the following examples:

-

Example 1: Capturing from One Source Database in Real-time Mode

-

Example 2: Capturing from Multiple Sources in Archive-log-only Mode

-

Example 3: Capturing from Multiple Sources with Mixed Real-time and Archive-log-only Mode

Note:

One Extract group is generally sufficient to capture from a single database or multiple pluggable databases within a multitenant container database. See Configuring Oracle GoldenGate in a Multitenant Container Database. You can also choose per-PDB capture mode when working in an Oracle Autonomous Database or cloud environment. See Configuring Extract to Capture from an Autonomous Database.

- Add the Local Trail

These steps add the local trail to which the primary Extract writes captured data. - Add the Remote Trail

Although it is read by Replicat, this trail must be associated with the Extract, so it must be added on the source system, not the target.

Parent topic: Using the Root Container Extract from PDB

Add the Local Trail

These steps add the local trail to which the primary Extract writes captured data.

On the source system, issue the ADD EXTTRAIL command on the command

line:

ADD EXTTRAIL pathname, EXTRACT group nameWhere:

-

EXTTRAILspecifies that the trail is to be created on the local system. -

pathnameis the relative or fully qualified name of the trail, including the two-character name. -

EXTRACTgroup nameis the name of the primary Extract group.

Note:

Oracle GoldenGate creates this trail automatically during processing.

Example 3-1

ADD EXTTRAIL /north/ea, EXTRACT exteParent topic: Configuring Primary Extract

Add the Remote Trail

Although it is read by Replicat, this trail must be associated with the Extract, so it must be added on the source system, not the target.

These steps add the remote trail:

On the source system, issue the following command:

ADD RMTTRAIL pathname, EXTRACT group nameWhere:

-

RMTTRAILspecifies that the trail is to be created on the target system. -

pathname is the relative or fully qualified name of the trail, including the two-character name.

-

EXTRACTgroup nameis the name of the data-pump Extract group.

Note:

Oracle GoldenGate creates this trail automatically during processing.

Example 3-2

ADD RMTTRAIL /south/re, EXTRACT extsParent topic: Configuring Primary Extract

Setting up the Auto-Capture Mode

The automatic Extract mode captures changes for all the tables that are enabled for logical replication.

-

It has sufficient ID or scheduling-key supplemental log data at table or schema level.

-

It has primary key (PK), unique identifier (UI), foreign key (FK) supplemental log data, and ALLKEYS supplemental log data. ALLKEYS is required in addition to PK, UI and FK because it logs all unique keys at the schema-wide supplemental logging level in the absence of a primary key.

Benefits of Using the Auto Extract Mode

-

Easy to configure captured table set

-

When captured table set changes, you don't need to update the

TABLE/TABLEEXCLUDEparameter, or stop and restart Extract.

Enabling Auto Capture

See TRANLOGOPTIONS for syntax and usage.

Use the following DDLs to enable auto capture at the table level:

CREATE/ALTER TABLE

table_name ENABLE LOGICAL REPLICATION ALLKEYS

or

CREATE/ALTER TABLE table_name ENABLE LOGICAL REPLICATION

ALLOWNONVALIDATEDKEYS

Parent topic: Using the Root Container Extract from PDB

Mining Mode Toggling

Mining mode toggling is not supported. This implies that after you create mining in

ROOT, then the session will stay mining in ROOT

and if the you choose to create mining in PDB, then the session will stay mining in that

particular PDB.

CDB$ROOT to mining in specific PDB, then

there is a migration process that you can follow:

-

Register a new per-PDB Extract. For example, the SCN returned is

X. -

Let the old

ROOTExtract mine the pastX(RECOVERYSCN X). -

Stop the old Extract

-

Alter the new Extract so that its current SCN is set to be

Y. -

Start the new Extract.

This new Extract picks up from where the old Extract left off.

Note:

There may be some duplicate transactions at SCNY, if there were

multiple txs committing at the same SCN Y.

However, Replicat can handle duplicate txs by default.

Parent topic: Using the Root Container Extract from PDB

Applying to Pluggable Databases

Replicat can only connect and apply to one pluggable database. To specify the

correct one, use a SQL*Net connect string for the database user that you specify

with the USERID or USERIDALIAS parameter. For

example: GGADMIN@DBEAST.

In the parameter file, specify only the

schema.object in the TARGET portion

of the MAP statements. In the MAP portion,

identify source objects captured from more than one pluggable database with their

three-part names or use the SOURCECATALOG parameter with two-part

names. The following is an example of this configuration.

SOURCECATALOG pdbeast

MAP hr.employees, TARGET hr.employees;

MAP hr.seq, TARGET hr.employees;

SOURCECATALOG pdbwest

MAP hr.*, TARGET hr.*;

MAP hr.seq, hr.*;The following is an example without the use of SOURCECATALOG to identify the source pluggable database. In this case, the source objects are specified with their three-part names.

MAP pdbeast.hr.employees, TARGET hr.*;

MAP pdbeast.hr.seq, TARGET hr.*;To configure replication from multiple source pluggable databases to multiple target pluggable databases, you can configure parallel Extract and Replicat streams, each handling data for one pluggable database. Alternatively, you can configure one Extract capturing from multiple source pluggable databases, which writes to one trail that is read by multiple Replicat groups, each applying to a different target pluggable database.

Yet another alternative is to use one Extract writing to multiple trails, each trail read by a Replicat assigned to a specific target pluggable database :

See Configuring Replicat for steps for Replicat configuration at the PDB level and adding Replicat groups.

- Add the Replicat Group

These steps add the Replicat group that reads the remote trail and applies the data changes to the target Oracle Database.

Add the Replicat Group

These steps add the Replicat group that reads the remote trail and applies the data changes to the target Oracle Database.

Example 3-3 Adds a Nonintegrated Replicat

ADD REPLICAT repe, EXTTRAIL east/eaExample 3-4 Adds an Integrated Replicat

ADD REPLICAT repn, INTEGRATED, EXTTRAIL north/emParent topic: Applying to Pluggable Databases