Cluster File Systems

-

File access locations are transparent. A process can open a file that is located anywhere in the system. Processes on all cluster nodes can use the same path name to locate a file.

Note - When the cluster file system reads files, it does not update the access time on those files. -

Coherency protocols are used to preserve the UNIX file access semantics even if the file is accessed concurrently from multiple nodes.

-

Extensive caching is used along with zero-copy bulk I/O movement to move file data efficiently.

-

The cluster file system provides highly available, advisory file-locking functionality by using the fcntl command interfaces. Applications that run on multiple cluster nodes can synchronize access to data by using advisory file locking on a cluster file system. File locks are recovered immediately from nodes that leave the cluster, and from applications that fail while holding locks.

-

Continuous access to data is ensured, even when failures occur. Applications are not affected by failures if a path to disks is still operational. This guarantee is maintained for raw disk access and all file system operations.

-

Cluster file systems are independent from the underlying file system and volume management software.

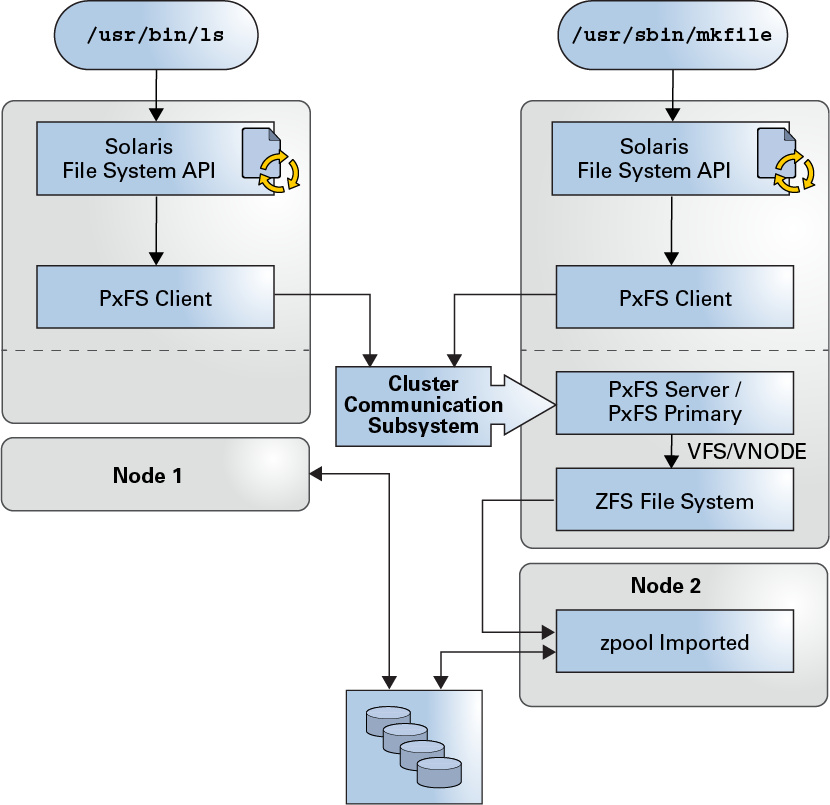

Oracle Solaris Cluster software provides a cluster file system based on the Oracle Solaris Cluster Proxy File System (PxFS). The cluster file system has the following features:

Programs can access a file in a cluster file system from any node in the cluster through the same file name (for example, /global/foo).

A cluster file system is mounted on all cluster members. You cannot mount a cluster file system on a subset of cluster members.

A cluster file system is not a distinct file system type. Clients see the underlying file system type (for example, ZFS).
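The cluster file system exposes its advisory locking through the fcntl interfaces. Because of that, an application can coordinate writers with ordinary POSIX record locks. The following Python sketch shows the pattern on a hypothetical shared log file. It takes an exclusive lock with fcntl.lockf(), which is Python's wrapper around the fcntl() locking calls, then appends a record. The function name and file paths are illustrative assumptions, not part of Oracle Solaris Cluster. The locks are advisory, so they coordinate only processes that also request the lock. A process that writes without locking is not blocked.

```python
import fcntl
import os
import tempfile


def append_record(path: str, record: str) -> None:
    """Append one line to a shared file while holding an exclusive advisory lock.

    Hypothetical helper. fcntl.lockf() without LOCK_NB blocks until the
    lock is granted; the default start/len values lock the whole file.
    """
    fd = os.open(path, os.O_WRONLY | os.O_APPEND | os.O_CREAT, 0o644)
    try:
        fcntl.lockf(fd, fcntl.LOCK_EX)  # wait for an exclusive (write) lock
        os.write(fd, (record + "\n").encode())
    finally:
        fcntl.lockf(fd, fcntl.LOCK_UN)  # release explicitly; close() would also drop it
        os.close(fd)


if __name__ == "__main__":
    # Demo on a local temporary directory. On a cluster, the path would be a
    # file under a cluster file system mount point (assumption).
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "shared.log")
        append_record(path, "node-a: start")
        append_record(path, "node-b: start")
        with open(path) as f:
            print(f.read(), end="")
```

On a cluster file system, the same calls issued from processes on different nodes serialize against one another. That is the cross-node synchronization the feature list describes.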

Using Cluster File Systems

In the Oracle Solaris Cluster software, all multihost disks are placed into device groups, which can be ZFS storage pools, Solaris Volume Manager disk sets, raw-disk groups, or individual disks that are not under the control of a software-based volume manager.

For a cluster file system to be highly available, the underlying disk storage must be connected to more than one cluster node. Therefore, a local file system (a file system that is stored on a node's local disk) that is made into a cluster file system is not highly available.
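The reasoning in the previous paragraph can be modeled in a few lines. Storage survives the failure of any single node only if at least one other node also has a physical path to it. The following Python sketch is a deliberately simplified model that ignores device-group ownership and switchover mechanics. The node names are hypothetical.

```python
from typing import FrozenSet, Iterable


def reachable_after_failures(attached: FrozenSet[str], failed: Iterable[str]) -> bool:
    """True if at least one surviving node still has a physical path to the storage."""
    return bool(attached - set(failed))


def tolerates_any_single_failure(attached: FrozenSet[str], cluster: FrozenSet[str]) -> bool:
    """True if the storage stays reachable no matter which single node fails."""
    return all(reachable_after_failures(attached, {node}) for node in cluster)


if __name__ == "__main__":
    cluster = frozenset({"phys-1", "phys-2"})         # hypothetical node names
    local_disk = frozenset({"phys-1"})                # disk connected to one node only
    multihost_disk = frozenset({"phys-1", "phys-2"})  # disk connected to both nodes
    print(tolerates_any_single_failure(local_disk, cluster))      # False
    print(tolerates_any_single_failure(multihost_disk, cluster))  # True
```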

You can mount cluster file systems as follows:

- Manually (UFS) - Use the mount command with the -g or -o global mount option to mount the cluster file system from the command line, for example:

# mount -g /dev/global/dsk/d0s0 /global/oracle/data

- Automatically (UFS) - To mount the cluster file system at boot, create an entry with the global mount option in the /etc/vfstab file, and then create the mount point on all nodes. Placing mount points under the /global directory is recommended but not required. Here is a sample /etc/vfstab line for a cluster file system:

/dev/md/oracle/dsk/d1 /dev/md/oracle/rdsk/d1 /global/oracle/data ufs 2 yes global,logging

- ZFS - All datasets of a pool are mounted when the pool is imported. Individual datasets of an imported pool can be unmounted or mounted with the zfs unmount or zfs mount commands. If a ZFS pool is managed by an Oracle Solaris Cluster device group or by an HAStoragePlus resource, the pool is imported and its datasets are mounted when the corresponding device group or resource is brought online.

  To import a ZFS pool automatically at boot, set the import-at-boot property to true for the ZFS device group. For example:

# cldevicegroup set -p import-at-boot=true device-group

Note - Although Oracle Solaris Cluster software does not impose a naming policy for cluster file systems, you can ease administration by creating the mount points for all cluster file systems under the same directory, such as /global/disk-group. See Administering an Oracle Solaris Cluster 4.4 Configuration for more information.
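The following Python sketch shows how the UFS /etc/vfstab sample line above breaks down into its seven whitespace-separated fields. The fields are: device to mount, device to fsck, mount point, file system type, fsck pass, mount at boot, and mount options. The sketch also checks for the global option that makes the mount a cluster file system. The helper names are hypothetical, and the parser handles only the simple form of the format: no quoting, and "-" meaning no options.

```python
from typing import List, NamedTuple, Optional


class VfstabEntry(NamedTuple):
    device: str         # block device to mount
    fsck_device: str    # raw device passed to fsck
    mount_point: str
    fs_type: str
    fsck_pass: str
    mount_at_boot: bool
    options: List[str]


def parse_vfstab_line(line: str) -> Optional[VfstabEntry]:
    """Parse one /etc/vfstab line; return None for blank or comment lines."""
    text = line.strip()
    if not text or text.startswith("#"):
        return None
    fields = text.split()
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}: {line!r}")
    device, fsck_device, mount_point, fs_type, fsck_pass, at_boot, opts = fields
    return VfstabEntry(
        device, fsck_device, mount_point, fs_type, fsck_pass,
        at_boot == "yes",
        [] if opts == "-" else opts.split(","),
    )


def is_cluster_file_system(entry: VfstabEntry) -> bool:
    """A UFS entry is mounted globally when 'global' is among its mount options."""
    return entry.fs_type == "ufs" and "global" in entry.options


if __name__ == "__main__":
    sample = ("/dev/md/oracle/dsk/d1 /dev/md/oracle/rdsk/d1 "
              "/global/oracle/data ufs 2 yes global,logging")
    entry = parse_vfstab_line(sample)
    print(entry.mount_point, entry.options, is_cluster_file_system(entry))
```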

HAStoragePlus Resource Type

The HAStoragePlus resource type is designed to make local and global file system configurations highly available. You can use the HAStoragePlus resource type to integrate your shared local or global file system into the Oracle Solaris Cluster environment and make the file system highly available.

Oracle Solaris Cluster systems support the following cluster file system types:

- UNIX File System (UFS)
- Oracle Solaris ZFS

Oracle Solaris Cluster software supports the following as highly available failover local file systems:

- UFS
- Oracle Solaris ZFS (default file system)
- StorageTek Shared file system
- Oracle Automatic Storage Management Cluster File System (Oracle ACFS) - Integrates with Oracle ASM and Oracle Clusterware (also referred to collectively as Oracle Grid Infrastructure)

The HAStoragePlus resource type provides additional file system capabilities such as checks, mounts, and forced unmounts. These capabilities enable Oracle Solaris Cluster to fail over local file systems. To fail over, a local file system must reside on global disk groups with affinity switchovers enabled.

See Enabling Highly Available Local File Systems in Planning and Administering Data Services for Oracle Solaris Cluster 4.4 for information about how to use the HAStoragePlus resource type.

You can also use the HAStoragePlus resource type to synchronize the startup of resources and device groups on which the resources depend. For more information, see Resources, Resource Groups, and Resource Types.

syncdir Mount Option (UFS Only)

You can use the syncdir mount option for cluster file systems that use UFS as the underlying file system. If you specify syncdir, writes are guaranteed to be POSIX compliant. However, performance improves significantly if you do not specify syncdir. Without syncdir, the behavior is the same as for NFS file systems. For example, you might not discover an out-of-space condition until you close a file. With syncdir (and POSIX behavior), the out-of-space condition is discovered during the write operation. Problems caused by not specifying syncdir are rare.
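Without syncdir, an out-of-space error might be reported only when the file is closed. An application that must not lose data should therefore check for errors at every step, including close(). The following Python sketch shows this general POSIX pattern; it is not an Oracle Solaris Cluster API. Calling fsync() before close() is an added assumption about how an application might force deferred write errors to surface. This section does not state how PxFS reports ENOSPC from fsync(). The helper names are hypothetical.

```python
import errno
import os
import tempfile


def write_checked(path: str, data: bytes) -> None:
    """Write data to path, letting errors surface from write(), fsync(), or close()."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        view = memoryview(data)
        while view:                      # os.write() may write fewer bytes than requested
            written = os.write(fd, view)
            view = view[written:]
        os.fsync(fd)                     # ask for the data to reach stable storage now
    finally:
        os.close(fd)                     # an error from close() propagates to the caller


def save(path: str, data: bytes) -> bool:
    """Return False if the file system reported that it is out of space."""
    try:
        write_checked(path, data)
    except OSError as exc:
        if exc.errno == errno.ENOSPC:
            return False
        raise
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(save(os.path.join(tmp, "data.bin"), b"payload"))
```

The design choice here is to treat close() as a fallible operation rather than cleanup. Without syncdir, close() is where a deferred out-of-space condition may first appear.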