2 Setting Up Load Balancing by Using HAProxy

This chapter describes how to configure load balancing by using HAProxy. The chapter also includes configuration scenarios and examples.

Installing and Configuring HAProxy

Before you can set up load balancing by using HAProxy, you must first install and configure it.

- Install the haproxy package on each front-end server:

  sudo dnf install haproxy

- Edit the /etc/haproxy/haproxy.cfg file to configure HAProxy on each server.

- Enable access to the services or ports that you want HAProxy to handle.

  To accept incoming TCP requests on port 80, use the following commands, where zone is the firewall zone to update:

  sudo firewall-cmd --zone=zone --add-port=80/tcp
  sudo firewall-cmd --permanent --zone=zone --add-port=80/tcp

  The first command changes the runtime configuration, and the second makes the change persist across reboots.

- Enable and start the haproxy service on each server:

  sudo systemctl enable --now haproxy

If you change the HAProxy configuration, reload the haproxy service:

sudo systemctl reload haproxy

HAProxy Configuration Directives

The /etc/haproxy/haproxy.cfg configuration file is divided into the following sections:

- global: Defines global settings, such as the syslog facility and level to use for logging, the maximum number of concurrent connections that are allowed, and how many processes to start in daemon mode.

- defaults: Defines the default settings for the other sections.

- listen: Defines a complete proxy, which implicitly includes the frontend and backend components.

- frontend: Defines the addresses and ports on which the proxy accepts client connections.

- backend: Defines the servers to which the proxy forwards client connections.
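To show how these sections fit together, the following is a minimal sketch of a haproxy.cfg file that uses separate frontend and backend sections instead of a single listen section. The proxy names web-in and web-servers, the server names app1 and app2, and the 192.0.2.x addresses are hypothetical placeholders, not values that HAProxy requires; adapt them to your environment.

```
# Minimal sketch of the haproxy.cfg section layout.
# All proxy names, server names, and 192.0.2.x addresses are hypothetical placeholders.
global
    # Process-wide settings, such as where to send log messages
    log 127.0.0.1 local0

defaults
    # Settings inherited by the frontend, backend, and listen sections that follow
    mode http
    timeout connect 5s
    timeout client 25s
    timeout server 25s

frontend web-in
    # Address and port on which HAProxy accepts client connections
    bind 192.0.2.10:80
    # Backend that handles the connections accepted by this frontend
    default_backend web-servers

backend web-servers
    # Servers to which HAProxy forwards client connections
    balance roundrobin
    server app1 192.0.2.21:80 check
    server app2 192.0.2.22:80 check
```

A listen section combines the bind line from a frontend with the balance and server lines from a backend, so a single listen section can replace a frontend and backend pair like this one.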

Configuring Round Robin Load Balancing by Using HAProxy

The following example uses HAProxy to implement a front-end server that balances incoming requests between two backend web servers, and which also handles service outages on the backend servers.

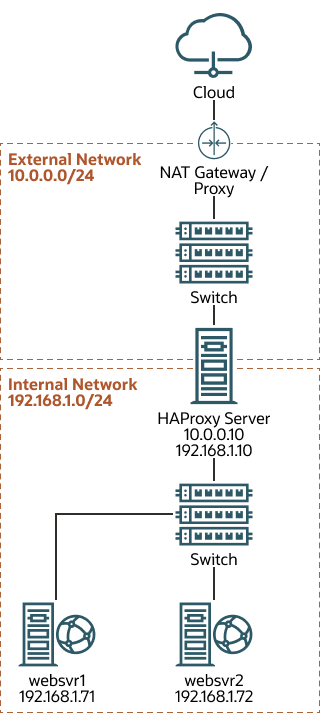

Figure 2-1 shows an HAProxy server (10.0.0.10) that is connected to an externally facing network (10.0.0.0/24) and to an internal network (192.168.1.0/24). Two web servers, websrv1 (192.168.1.71) and websrv2 (192.168.1.72), are accessible on the internal network. Because 10.0.0.10 is a private address, it can't be routed on the Internet. An upstream Network Address Translation (NAT) gateway or a proxy server provides access to and from the Internet.

Figure 2-1 Example HAProxy Configuration for Load Balancing

The following is an example configuration in /etc/haproxy/haproxy.cfg on the server:

global
    daemon
    log 127.0.0.1 local0 debug
    maxconn 50000
    nbproc 1

defaults
    mode http
    timeout connect 5s
    timeout client 25s
    timeout server 25s
    timeout queue 10s

# Handle Incoming HTTP Connection Requests
listen http-incoming
    mode http
    bind 10.0.0.10:80
    # Use each server in turn, according to its weight value
    balance roundrobin
    # Verify that service is available
    option httpchk HEAD / HTTP/1.1\r\nHost:\ www
    # Insert X-Forwarded-For header
    option forwardfor
    # Define the back-end servers, which can handle up to 512 concurrent connections each
    server websrv1 192.168.1.71:80 maxconn 512 check
    server websrv2 192.168.1.72:80 maxconn 512 check

This configuration balances HTTP traffic between the two backend web servers websrv1 and websrv2, whose firewalls are configured to accept incoming TCP requests on port 80. The traffic is distributed equally between the servers, and each server can handle a maximum of 512 concurrent connections. A health check is also configured: HAProxy periodically sends an HTTP HEAD request for the web root to each backend server, so that only the response headers are returned.

After creating basic /var/www/html/index.html files on the web servers and using curl to test connectivity, the following output shows how HAProxy balances the traffic between the servers and how it handles the httpd service stopping on websrv1:

while true; do curl http://10.0.0.10; sleep 1; done

This is HTTP server websrv1 (192.168.1.71).
This is HTTP server websrv2 (192.168.1.72).
This is HTTP server websrv1 (192.168.1.71).
This is HTTP server websrv2 (192.168.1.72).
...
This is HTTP server websrv2 (192.168.1.72).
<html><body><h1>503 Service Unavailable</h1>
No server is available to handle this request.
</body></html>
This is HTTP server websrv2 (192.168.1.72).
This is HTTP server websrv2 (192.168.1.72).
This is HTTP server websrv2 (192.168.1.72).
...
This is HTTP server websrv2 (192.168.1.72).
This is HTTP server websrv2 (192.168.1.72).
This is HTTP server websrv2 (192.168.1.72).
This is HTTP server websrv1 (192.168.1.71).
This is HTTP server websrv2 (192.168.1.72).
This is HTTP server websrv1 (192.168.1.71).
...

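In this example, the 503 Service Unavailable response was returned for a request that HAProxy sent to websrv1 after its httpd service stopped but before the health check marked websrv1 as down. HAProxy then sent all requests to websrv2. When the httpd service restarted on websrv1, HAProxy detected that the service was available again and resumed using that server in addition to websrv2.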
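How quickly HAProxy stops and resumes using a server depends on the health-check timing on each server line. The following sketch writes out the inter, fall, and rise server parameters explicitly for the example servers. The values shown match HAProxy's documented defaults, so you would change them only if you need faster or slower detection. With these values, a failed server is marked down after about inter × fall, or roughly 6 seconds.

```
    # Sketch: explicit health-check timing for the example's server lines.
    # inter 2s: check each server every 2 seconds
    # fall 3:   mark a server down after 3 consecutive failed checks
    # rise 2:   mark a server up again after 2 consecutive successful checks
    server websrv1 192.168.1.71:80 maxconn 512 check inter 2s fall 3 rise 2
    server websrv2 192.168.1.72:80 maxconn 512 check inter 2s fall 3 rise 2
```

These lines would replace the two server lines in the listen http-incoming section. Lowering inter or fall detects outages sooner, at the cost of more check traffic to the backend servers.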

By combining the load balancing capability of HAProxy with the high-availability capability of Keepalived, you can configure a backup load balancer that ensures continuity of service if the primary load balancer fails. See Enhancing Load Balancing by Using Keepalived With HAProxy for more information on how this configuration can be extended.

Using Weighted Round Robin Load Balancing with HAProxy

HAProxy can also be configured to use the weighted round-robin algorithm to distribute traffic. This algorithm selects servers in turn, according to their weights, and distributes the load without taking other factors, such as server response time, into account. With weighted round-robin, you can balance traffic between servers in proportion to the processing power and resources that are available to each server.

To implement weighted round-robin, add a weight value to each server line in the configuration. A server without a weight value has the default weight of 1.

For example, to send websrv1 twice as much traffic as websrv2, assign the servers different weights, as follows:

    server websrv1 192.168.1.71:80 weight 2 maxconn 512 check
    server websrv2 192.168.1.72:80 weight 1 maxconn 512 check

With these weights, websrv1 receives approximately two out of every three requests.

Adding Session Persistence for HAProxy

HAProxy provides several load balancing algorithms, some of which automatically ensure that web sessions have persistent connections to the same backend server. You can configure a balance algorithm such as hdr, rdp-cookie, source, uri, or url_param to ensure that related requests are always routed to the same web server during the session. For example, the source algorithm creates a hash of the source IP address and maps it to a particular backend server. If you use the rdp-cookie or url_param algorithm, you might need to configure the backend web servers or the web applications for these mechanisms to work efficiently.
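As a sketch of the source algorithm, the following variant of the example listen section replaces balance roundrobin with balance source, so that requests from the same client IP address go to the same backend server. The rest of the section is assumed to be unchanged from the earlier example.

```
listen http-incoming
    mode http
    bind 10.0.0.10:80
    # Hash the client's source IP address to choose a server, so that
    # each client address keeps mapping to the same backend server
    balance source
    option httpchk HEAD / HTTP/1.1\r\nHost:\ www
    option forwardfor
    server websrv1 192.168.1.71:80 maxconn 512 check
    server websrv2 192.168.1.72:80 maxconn 512 check
```

Because the hash uses only the source address, all clients behind the same NAT gateway or proxy share one address and therefore reach the same backend server. When a server goes down or comes back, clients can be remapped to a different server; the hash-type consistent directive reduces how many clients move, although it can make the distribution less even.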

If the implementation requires the use of the leastconn, roundrobin, or static-rr algorithm, you can achieve session persistence by using server-dependent cookies.

To enable session persistence for all pages on a web server, use the cookie directive to define the name of the cookie to be inserted, and add the cookie option and a value that identifies the server to each server line, for example:

    cookie WEBSVR insert
    server websrv1 192.168.1.71:80 weight 1 maxconn 512 cookie 1 check
    server websrv2 192.168.1.72:80 weight 1 maxconn 512 cookie 2 check

In its response to the client, HAProxy includes a Set-Cookie: header that identifies the web server, for example: Set-Cookie: WEBSVR=N; path=page_path. If a client specifies the WEBSVR cookie in a request, HAProxy forwards the request to the web server whose server cookie value matches the value of WEBSVR.
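The following sketch shows where the cookie directive and the server cookie values might sit in the example listen section. It also adds the indirect and nocache options to the cookie directive; these options go beyond the configuration shown above, so omit them if you want exactly that behavior.

```
listen http-incoming
    mode http
    bind 10.0.0.10:80
    balance roundrobin
    # Insert a WEBSVR cookie that records which server handled the client.
    # indirect: don't reissue the cookie to a client that already has a valid one,
    #           and remove the cookie from requests forwarded to the servers
    # nocache:  mark a response as non-cacheable when HAProxy inserts the cookie,
    #           so a shared cache does not hand one client's cookie to others
    cookie WEBSVR insert indirect nocache
    option httpchk HEAD / HTTP/1.1\r\nHost:\ www
    option forwardfor
    # The cookie value on each server line is the value that HAProxy stores in WEBSVR
    server websrv1 192.168.1.71:80 weight 1 maxconn 512 cookie 1 check
    server websrv2 192.168.1.72:80 weight 1 maxconn 512 cookie 2 check
```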

To enable persistence selectively on a web server, use the cookie directive to configure HAProxy to expect the specified cookie, typically a session ID cookie or other existing cookie, to be prefixed with the server cookie value and a ~ delimiter, for example:

    cookie SESSIONID prefix
    server websrv1 192.168.1.71:80 weight 1 maxconn 512 cookie 1 check
    server websrv2 192.168.1.72:80 weight 1 maxconn 512 cookie 2 check

If the value of the SESSIONID cookie in a request is prefixed with a server cookie value, for example SESSIONID=N~Session_ID, HAProxy strips the prefix and delimiter from the SESSIONID cookie before forwarding the request to the web server whose server cookie value matches the prefix.

The following example shows how to test session persistence by sending a prefixed cookie:

while true; do curl --cookie "SESSIONID=1~1234;" http://10.0.0.10; sleep 1; done

This is HTTP server websrv1 (192.168.1.71).
This is HTTP server websrv1 (192.168.1.71).
This is HTTP server websrv1 (192.168.1.71).
...

A real web application would typically set the session ID on the server side, in which case the first HAProxy response would include the prefixed cookie in the Set-Cookie: header.