Store And Analyze Your On-Premises Logs In Oracle Cloud Infrastructure

Oracle Cloud Infrastructure Data Flow is a fully managed Apache Spark™ service that is ideal for processing large volumes of log files.

Log files are generated all the time. Hardware, operating systems, network devices, web services, and applications all produce logs continuously. Analyzing this log data helps with troubleshooting and diagnostics, predictive repairs, intrusion detection, understanding web access patterns, and much more.

Data Flow works with log data stored centrally in Oracle Cloud Infrastructure Object Storage. You create an Apache Spark application once and then run it on new log files as they arrive in Object Storage. The output of this analysis can then be loaded into Autonomous Data Warehouse for querying and reporting. All of this happens without overhead such as provisioning clusters or installing software.
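The "write once, run on each new batch" pattern described above can be sketched in Python. This is a minimal illustration, not the application from the reference architecture: it assumes the logs follow the common web server access-log layout, and the function names, the regular expression, and the input and output URIs are all hypothetical. The parsing logic is plain Python so it can be checked locally. `run_spark_job` applies the same function on Spark and needs `pyspark`, which the Spark runtime provides.

```python
import re
from collections import Counter

# Assumption: lines look like the common web server access-log format, e.g.
# 127.0.0.1 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.0" 200 2326
LOG_PATTERN = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\S+)$'
)


def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line.strip())
    if match is None:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])
    return record


def status_counts(lines):
    """Count requests per HTTP status code, skipping unparseable lines."""
    parsed = (parse_line(line) for line in lines)
    return Counter(rec["status"] for rec in parsed if rec is not None)


def run_spark_job(input_uri, output_uri):
    """Run the same aggregation on Spark; both URIs are placeholders."""
    from pyspark.sql import Row, SparkSession  # imported lazily on purpose

    spark = SparkSession.builder.appName("log-status-counts").getOrCreate()
    rows = (
        spark.sparkContext.textFile(input_uri)
        .map(parse_line)
        .filter(lambda rec: rec is not None)
        .map(lambda rec: Row(**rec))
    )
    df = spark.createDataFrame(rows)
    # Write the per-status counts as Parquet; loading them into a data
    # warehouse is a separate step that is not shown here.
    df.groupBy("status").count().write.mode("overwrite").parquet(output_uri)
    spark.stop()
```

Because the input location is a parameter, the same application can be pointed at each new batch of log files without code changes.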

Architecture

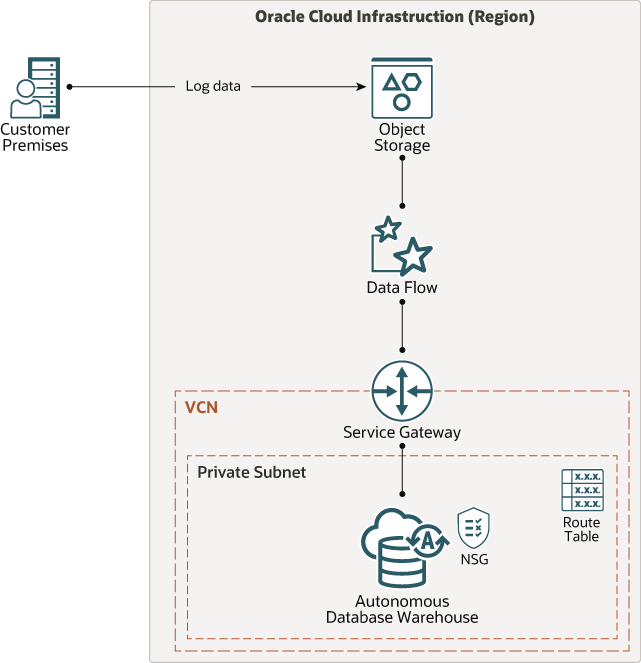

This architecture shows Data Flow connecting to Object Storage, analyzing the log files, and saving the results in Autonomous Data Warehouse for reporting.

The following diagram illustrates this reference architecture.

Description of the illustration architecture-analyze-logs.png

The architecture has the following components:

- Region

An Oracle Cloud Infrastructure region is a localized geographic area that contains one or more data centers, called availability domains. Regions are independent of other regions, and vast distances can separate them (across countries or even continents).

- Availability domains

Availability domains are standalone, independent data centers within a region. The physical resources in each availability domain are isolated from the resources in the other availability domains, which provides fault tolerance. Availability domains don’t share infrastructure such as power or cooling, or the internal availability domain network. So, a failure at one availability domain is unlikely to affect the other availability domains in the region.

- Fault domains

A fault domain is a grouping of hardware and infrastructure within an availability domain. Each availability domain has three fault domains with independent power and hardware. When you distribute resources across multiple fault domains, your applications can tolerate physical server failure, system maintenance, and power failures inside a fault domain.

- Virtual cloud network (VCN) and subnets

A VCN is a customizable, software-defined network that you set up in an Oracle Cloud Infrastructure region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

- Object storage

Object storage provides quick access to large amounts of structured and unstructured data of any content type, including database backups, analytic data, and rich content such as images and videos. Use standard storage for "hot" data that you need to access quickly and frequently. Use archive storage for "cold" data that you retain for long periods and rarely access.

- Autonomous Data Warehouse

Oracle Autonomous Data Warehouse is a self-driving, self-securing, self-repairing database service that is optimized for data warehousing workloads. You do not need to configure or manage any hardware, or install any software. Oracle Cloud Infrastructure handles creating the database, as well as backing up, patching, upgrading, and tuning the database.

- Data Flow

Oracle Cloud Infrastructure Data Flow is a fully managed service for running Apache Spark™ applications. It lets developers focus on their applications and provides a straightforward runtime environment for executing them. It has a simple user interface and API support for integration with applications and workflows. You don't need to spend any time on the underlying infrastructure, cluster provisioning, or software installation.

Recommendations

Your requirements might differ from the architecture described here. Use the following recommendations as a starting point.

- VCN

When you create a VCN, determine the number of CIDR blocks required and the size of each block based on the number of resources that you plan to attach to subnets in the VCN. Use CIDR blocks that are within the standard private IP address space.

Select CIDR blocks that don't overlap with any other network (in Oracle Cloud Infrastructure, your on-premises data center, or another cloud provider) to which you intend to set up private connections.

After you create a VCN, you can change, add, and remove its CIDR blocks.

When you design the subnets, consider your traffic flow and security requirements. Attach all the resources within a specific tier or role to the same subnet, which can serve as a security boundary.
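The CIDR guidance above can be checked before any network is created. The following sketch uses Python's standard `ipaddress` module to flag overlapping blocks and to confirm that each block sits inside the RFC 1918 private ranges. The network names and address plan are made up for illustration, and the check handles IPv4 only.

```python
import ipaddress

# RFC 1918 private IPv4 ranges.
RFC1918 = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def is_rfc1918(cidr):
    """True if the IPv4 block lies entirely inside one RFC 1918 range."""
    net = ipaddress.ip_network(cidr)
    return any(net.subnet_of(private) for private in RFC1918)


def find_overlaps(named_cidrs):
    """Return pairs of network names whose CIDR blocks overlap."""
    nets = [(name, ipaddress.ip_network(cidr)) for name, cidr in named_cidrs.items()]
    overlaps = []
    for i, (name_a, net_a) in enumerate(nets):
        for name_b, net_b in nets[i + 1:]:
            if net_a.overlaps(net_b):
                overlaps.append((name_a, name_b))
    return overlaps


# Hypothetical address plan: the on-premises block collides with the VCN.
plan = {
    "oci-vcn": "10.0.0.0/16",
    "on-premises": "10.0.128.0/20",
    "other-cloud": "172.16.0.0/16",
}

print(find_overlaps(plan))
print(all(is_rfc1918(cidr) for cidr in plan.values()))
```

In this made-up plan the on-premises block falls inside the VCN block, so one of the two would need to change before a private connection is set up.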

- Object Storage

Ingest all the log files into Oracle Cloud Infrastructure Object Storage. Determine the appropriate batch size based on business requirements and run the Data Flow applications to process the files.

- Data Flow

No special configuration is required. However, consider larger VM shapes for the Spark driver and executors as the amount of log data processed in each run of the application grows.

- Oracle Autonomous Data Warehouse

Store the wallet required to access the data warehouse in a secure place where only authorized users can access it. Consider deploying Autonomous Data Warehouse with a private endpoint in a VCN.

- Security

Use Oracle Cloud Infrastructure Identity and Access Management (IAM) to define policies that grant access to users, groups, and resources. The Spark application needs access to the buckets to read the log files. Use the Vault service to store the password required for accessing Autonomous Data Warehouse.
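Keeping the database password in a vault means the application reads it at run time instead of carrying it in code or configuration. The sketch below shows one way this might look with the OCI Python SDK, whose secrets client returns the secret content base64-encoded. It assumes the SDK is installed and that a local configuration file is used for authentication, as on a workstation. Authentication inside a Data Flow run works differently and is not shown. The function names and the secret OCID argument are hypothetical.

```python
import base64


def decode_secret_content(b64_content):
    """Decode the base64 text returned for a secret bundle into a string."""
    return base64.b64decode(b64_content).decode("utf-8")


def fetch_adw_password(secret_ocid, config_profile="DEFAULT"):
    """Read a secret from the vault; requires the `oci` package.

    Assumption: authentication uses a local OCI config file profile.
    """
    import oci  # imported lazily so the decoder can be used without the SDK

    config = oci.config.from_file(profile_name=config_profile)
    client = oci.secrets.SecretsClient(config)
    bundle = client.get_secret_bundle(secret_id=secret_ocid).data
    return decode_secret_content(bundle.secret_bundle_content.content)
```

The returned password should stay in memory only and never be written to logs or job output.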

Considerations

- Frequency

How often you run your application depends on the volume and frequency of incoming log files. Write the Spark application so that its logic can handle each incoming batch of data. As a general guideline, the time taken to process each batch should align with the refresh frequency that downstream services consuming the processed output require of Autonomous Data Warehouse.
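The frequency guideline above reduces to simple arithmetic: a batch should finish processing before the next refresh is due. The following sketch makes that check explicit. The arrival rate, processing throughput, and startup time are invented inputs that you would replace with measurements from your own runs.

```python
def batch_fits(interval_minutes, arrival_gb_per_hour, throughput_gb_per_hour,
               startup_minutes=0.0):
    """Estimate run time for one batch and whether it fits the interval.

    All inputs are assumptions to be replaced with measured values.
    Returns (estimated_run_minutes, fits_within_interval).
    """
    batch_gb = arrival_gb_per_hour * interval_minutes / 60
    run_minutes = startup_minutes + batch_gb / throughput_gb_per_hour * 60
    return run_minutes, run_minutes <= interval_minutes


# Hypothetical numbers: hourly refresh, 30 GB of logs per hour,
# 120 GB/hour processing throughput, 5 minutes of startup time.
minutes, fits = batch_fits(60, 30, 120, startup_minutes=5)
print(minutes, fits)
```

If the estimate does not fit, the options are a longer refresh interval, more executor resources, or smaller batches run more often.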

- Performance

Several factors affect performance, but the most important is the data distribution and partitioning of incoming log files. The Spark application can be run against each partition in parallel with OCPU and memory resources as required. Oracle Cloud Infrastructure Data Flow gives full elasticity to manage resources required for each execution of the application.
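One way to make incoming logs easy to partition is to encode the source and date in each object name, so that every prefix forms an independent unit of work. The sketch below groups object names by such a prefix. The `logs/<source>/<YYYY>/<MM>/<DD>/<file>` layout is a hypothetical naming convention, not one prescribed by Object Storage or Data Flow.

```python
from collections import defaultdict


def partition_key(object_name):
    """Return '<source>/<YYYY>/<MM>/<DD>' for names in the assumed layout.

    Names that do not follow 'logs/<source>/<YYYY>/<MM>/<DD>/<file>'
    return None so the caller can skip them.
    """
    parts = object_name.split("/")
    if len(parts) < 6 or parts[0] != "logs":
        return None
    return "/".join(parts[1:5])


def group_by_partition(object_names):
    """Group object names by partition key, ignoring non-matching names."""
    groups = defaultdict(list)
    for name in object_names:
        key = partition_key(name)
        if key is not None:
            groups[key].append(name)
    return dict(groups)


# Hypothetical object names.
names = [
    "logs/web/2024/05/01/a.log",
    "logs/web/2024/05/01/b.log",
    "logs/db/2024/05/01/a.log",
    "readme.txt",
]
print(group_by_partition(names))
```

Each resulting group can be passed to a separate run, or read as one input path, so that partitions are processed in parallel.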

- Security

Use policies to restrict who can access Oracle Cloud Infrastructure resources and to what degree. Use Oracle Cloud Infrastructure Identity and Access Management (IAM) to assign privileges to specific users and user groups for both Data Flow and run management within Data Flow.

Encryption is enabled for Oracle Cloud Infrastructure Object Storage by default and can’t be turned off.

- Cost

Oracle Cloud Infrastructure Data Flow is pay per use, so you pay only when you run the Data Flow application, not when you create it. Store the logs across the available storage tiers: standard Object Storage for "hot" data and Archive Storage for "cold" data. The processed data can then be stored in Autonomous Data Warehouse.

Deploy

The Terraform code for this reference architecture is available on GitHub.

- Go to GitHub.

- Clone or download the repository to your local computer.

- Follow the instructions in the README document.