Recommended Patterns for Cloud-Based Data Lakes

Depending on your use case, you can build a data lake on Object Storage or on Hadoop. Both options scale and integrate with existing enterprise data and tools. Choose the Greenfield pattern if you plan a completely new implementation, or the Migration pattern if you want to migrate your existing big data solution to Oracle Cloud.

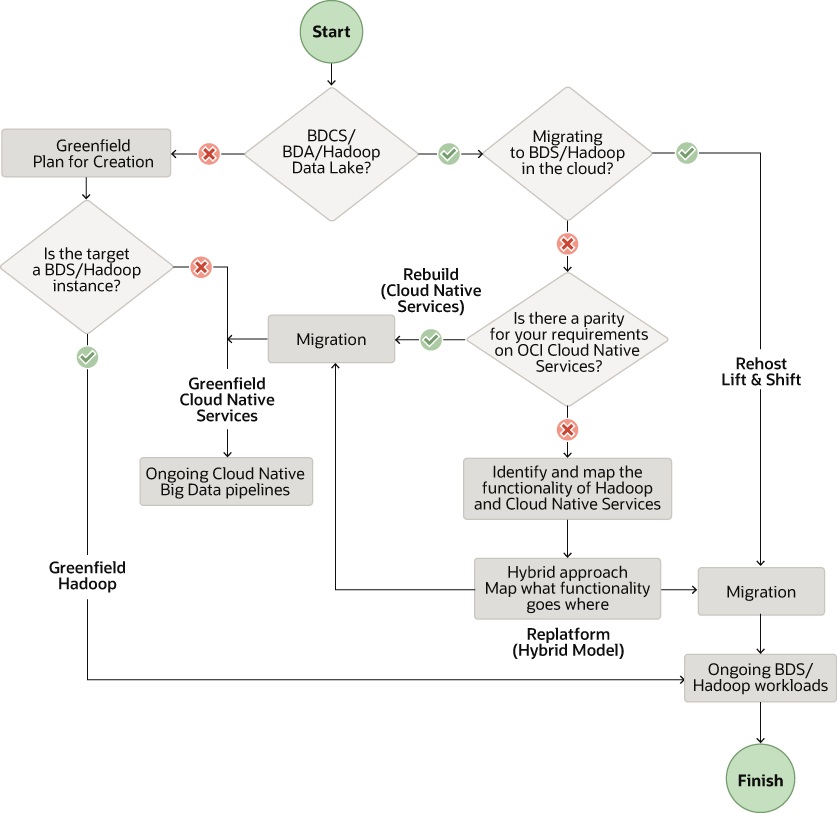

The following workflow shows you the recommended patterns based on your requirements.

Description of the illustration data-lake-solution-pattern.png

Note:

In this document, we focus on the migration of Big Data Appliance (BDA) and Big Data Cloud Services (BDCS) clusters to OCI based on Cloudera Distribution of Hadoop (CDH). However, the recommendations here are applicable to other on-premises and cloud Hadoop distributions.

Build New Data Platform on Oracle Cloud (Greenfield)

You have two options to build data lakes in Oracle Cloud for Greenfield projects. Use Big Data Service (BDS) for HDFS-based data lakes. Use OCI cloud native data services for Object Storage-based data lakes that don't use HDFS.

Cloud Native Data Services

Build a data lake in OCI Object Storage and use OCI cloud native data and AI services, such as Data Flow, Data Integration, Autonomous Data Warehouse, Data Catalog, and Data Science.

Oracle recommends these services to build a new data lake:

- Object Storage as the data lake store for all kinds of raw data

- Data Flow service for Spark batch processes and for ephemeral Spark clusters

- Data Integration service for ingesting data and for ETL jobs

- Autonomous Data Warehouse (ADW) for serving and presentation layer data

- Data Catalog for data discovery and governance

Oracle recommends these additional services to build a new data lake:

- Streaming service for managed ingestion of real-time data

- Data Transfer Appliance (DTA) service for one-time bulk transfer of data

- GoldenGate service for Change Data Capture (CDC) data and for streaming analytics

- Data Science service for machine learning requirements

- Oracle Analytics Cloud (OAC) service for BI, analytics, and reporting requirements
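The two service lists above can be read as a simple rule: every new Object Storage-based data lake starts from the first set of services, and each optional requirement adds one more service. The following Python sketch encodes that rule. The `Requirements` flags, the `cloud_native_stack` function, and the service-name strings are hypothetical illustrations, not part of any OCI SDK or API.

```python
from dataclasses import dataclass

# Services recommended for every new Object Storage-based data lake.
CORE_SERVICES = [
    "Object Storage",
    "Data Flow",
    "Data Integration",
    "Autonomous Data Warehouse",
    "Data Catalog",
]


@dataclass
class Requirements:
    """Hypothetical requirement flags used only for this illustration."""
    real_time_ingestion: bool = False
    bulk_transfer: bool = False
    change_data_capture: bool = False
    machine_learning: bool = False
    bi_reporting: bool = False


# Each additional service, keyed by the requirement flag that calls for it.
ADDITIONAL_SERVICES = {
    "real_time_ingestion": "Streaming",
    "bulk_transfer": "Data Transfer Appliance",
    "change_data_capture": "GoldenGate",
    "machine_learning": "Data Science",
    "bi_reporting": "Oracle Analytics Cloud",
}


def cloud_native_stack(req: Requirements) -> list[str]:
    """Return the core services plus any additional services the requirements need."""
    stack = list(CORE_SERVICES)  # copy so the shared core list is never mutated
    for flag, service in ADDITIONAL_SERVICES.items():
        if getattr(req, flag):
            stack.append(service)
    return stack
```

For example, `cloud_native_stack(Requirements(real_time_ingestion=True, bi_reporting=True))` returns the five core services followed by Streaming and Oracle Analytics Cloud.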

Big Data Service

Build your data lake in HDFS using Oracle Big Data Service (BDS). BDS provides the most commonly used Hadoop components, including HDFS, Hive, HBase, Spark, and Oozie.

Oracle recommends these services to build a new data lake using Hadoop clusters:

- Data Integration service for ingesting data and for ETL jobs

- Data Transfer Appliance (DTA) service for one-time bulk transfer of data

- GoldenGate service for CDC data and for streaming analytics

- Data Catalog service for data discovery and governance

- Data Science service for machine learning requirements

- OAC service for BI, analytics, and reporting requirements

- BDS for HDFS and other Hadoop components

Greenfield Pattern Workflow

When you build a new data lake, follow this workflow from requirements through testing and validation:

- Requirements: List the requirements for new environments in OCI

- Assessment: Assess the required OCI services and tools

- Design: Design your solution architecture and sizing for OCI

- Plan: Create a detailed plan mapping your time and resources

- Provision: Provision and configure the required resources in OCI

- Implement: Implement your data and application workloads

- Automate Pipeline: Orchestrate and schedule workflow pipelines for automation

- Test and Validate: Perform validation, functional, and performance testing for the end-to-end solution
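Because each phase builds on the output of the one before it, the Greenfield workflow can be treated as an ordered checklist. This minimal Python sketch, assuming you track progress in your own project tooling, refuses to mark a phase complete out of order. `Phase` and `GreenfieldProject` are hypothetical names used only for illustration.

```python
from enum import IntEnum
from typing import Optional


class Phase(IntEnum):
    """Greenfield workflow phases, numbered in the order they run."""
    REQUIREMENTS = 1
    ASSESSMENT = 2
    DESIGN = 3
    PLAN = 4
    PROVISION = 5
    IMPLEMENT = 6
    AUTOMATE_PIPELINE = 7
    TEST_AND_VALIDATE = 8


class GreenfieldProject:
    """Hypothetical tracker that enforces the workflow order."""

    def __init__(self) -> None:
        self.completed: list[Phase] = []

    def next_phase(self) -> Optional[Phase]:
        """Return the next phase to work on, or None when the workflow is finished."""
        done = len(self.completed)
        return Phase(done + 1) if done < len(Phase) else None

    def complete(self, phase: Phase) -> None:
        """Mark a phase complete, but only if it is the next one in order."""
        expected = self.next_phase()
        if phase != expected:
            name = expected.name if expected is not None else "none (workflow finished)"
            raise ValueError(f"cannot complete {phase.name}; next phase is {name}")
        self.completed.append(phase)
```

With this design, trying to start `Phase.DESIGN` before `Phase.ASSESSMENT` is complete raises a `ValueError` instead of silently skipping a step.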

Migrate Existing Data Platform to Oracle Cloud

Rebuild Pattern

Use the Rebuild pattern if you don't want to use Hadoop clusters and want to migrate to cloud native services in Oracle Cloud Infrastructure (OCI). Start with a clean slate: architect and implement your solution from scratch in OCI. Use managed, cloud native services for all major components in your stack. For example, build a stack using Data Flow, Data Catalog, Data Integration, Streaming, Data Science, ADW, and OAC.

Oracle recommends these services to migrate to a cloud-based data lake without Hadoop clusters:

- Object Storage service as the data lake store for all kinds of raw data

Note:

You can use Object Storage with the HDFS connector for Object Storage as the storage layer in place of HDFS within a Hadoop or Spark cluster.

- Data Integration service for ingesting data and for ETL jobs

- Streaming service for managed ingestion of real-time data, which can replace your self-managed Kafka or Flume services

- Data Transfer Appliance for one-time bulk transfer of data

- GoldenGate for CDC data and for streaming analytics

- Data Flow service for Spark batch processes and for ephemeral Spark clusters

- ADW for serving and presentation layer data

- Data Catalog service for data discovery and governance

- Data Science service for machine learning requirements

- OAC service for BI, analytics, and reporting requirements
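When Object Storage replaces HDFS through the HDFS connector, job paths that pointed at `hdfs://` locations must point at Object Storage instead. The following Python sketch rewrites an HDFS URI into the `oci://<bucket>@<namespace>/<path>` form, assuming that is the URI scheme your version of the HDFS connector expects (confirm this in the connector documentation). The helper name and the bucket and namespace values are hypothetical, and the sketch keeps the original directory layout as the object name prefix.

```python
from urllib.parse import urlparse


def hdfs_to_oci_uri(hdfs_uri: str, bucket: str, namespace: str) -> str:
    """Hypothetical helper: rewrite hdfs://host:port/path as oci://bucket@namespace/path.

    Assumes the HDFS connector for Object Storage addresses objects with the
    oci://<bucket>@<namespace>/<path> scheme; verify against your connector version.
    """
    parsed = urlparse(hdfs_uri)
    if parsed.scheme != "hdfs":
        raise ValueError(f"expected an hdfs:// URI, got {hdfs_uri!r}")
    # Drop the NameNode host and port; keep the path as the object name prefix.
    path = parsed.path.lstrip("/")
    return f"oci://{bucket}@{namespace}/{path}"
```

For example, `hdfs_to_oci_uri("hdfs://namenode:8020/data/raw/sales", "datalake-raw", "mynamespace")` returns `oci://datalake-raw@mynamespace/data/raw/sales`.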

Replatform Pattern

Use the Replatform pattern if you want to run Hadoop clusters in the cloud and replace some of the components with cloud native services. Use Big Data Service for HDFS and other Hadoop components, and redesign part of your stack using additional managed cloud native services.

To use the Replatform pattern, you may need to redesign your stack:

- Include serverless cloud native services along with BDS in OCI

- Leverage managed cloud native services where possible

Oracle recommends these services for the Replatform pattern. Choose which of your existing components to replace based on your needs.

- BDS for HDFS and other Hadoop components such as Hive, HBase, Kafka, and Oozie

- Data Integration service for ingesting data and for ETL jobs

- Data Transfer Appliance service for one-time bulk transfer of data

- GoldenGate service for CDC data and for streaming analytics

- Data Catalog service for data discovery and governance

- Data Science service for machine learning requirements

- OAC service for BI, analytics, and reporting requirements
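In the Replatform pattern, each existing component either stays on BDS or moves to a managed cloud native service. A hypothetical planning helper like the following Python sketch makes that split explicit. The example replacements (Flume to Streaming, Spark batch jobs to Data Flow) follow the service descriptions elsewhere in this document; which components you actually replace depends on your needs.

```python
def plan_replatform(
    current_components: list[str], replacements: dict[str, str]
) -> tuple[list[str], dict[str, str]]:
    """Hypothetical helper: split components into those kept on BDS and those replaced.

    `replacements` maps an existing component to the cloud native service you
    chose to replace it with; anything not in the map stays on BDS.
    """
    stays_on_bds: list[str] = []
    moves: dict[str, str] = {}
    for component in current_components:
        if component in replacements:
            moves[component] = replacements[component]
        else:
            stays_on_bds.append(component)
    return stays_on_bds, moves


# Example: keep core Hadoop components on BDS, move ingestion and Spark batch jobs.
stays, moves = plan_replatform(
    ["HDFS", "Hive", "HBase", "Spark batch jobs", "Flume", "Oozie"],
    {"Flume": "Streaming", "Spark batch jobs": "Data Flow"},
)
print(stays)  # components that remain on BDS
print(moves)  # components mapped to their replacement services
```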

Rehost Pattern

Migrate your BDA, BDCS, and other Hadoop clusters to Big Data Service (BDS) and build your data lake in HDFS. The Rehost pattern lets you use a lift-and-shift approach: all the commonly used Hadoop components, including HDFS, Hive, HBase, Spark, and Oozie, are available in the managed Hadoop clusters that BDS provides.
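The three migration patterns differ mainly in two choices: whether you keep Hadoop clusters, and whether you replace some components with cloud native services. The following Python sketch captures that decision. The function and parameter names are hypothetical, and the logic is a simplification of the guidance above, not an Oracle tool.

```python
from enum import Enum


class MigrationPattern(Enum):
    REBUILD = "Rebuild"        # no Hadoop clusters; cloud native services only
    REPLATFORM = "Replatform"  # Hadoop on BDS plus some cloud native services
    REHOST = "Rehost"          # lift and shift Hadoop clusters to BDS


def choose_migration_pattern(
    keep_hadoop_clusters: bool, adopt_cloud_native_services: bool
) -> MigrationPattern:
    """Hypothetical decision helper mirroring the pattern descriptions above."""
    if not keep_hadoop_clusters:
        return MigrationPattern.REBUILD
    if adopt_cloud_native_services:
        return MigrationPattern.REPLATFORM
    return MigrationPattern.REHOST
```

For example, `choose_migration_pattern(keep_hadoop_clusters=True, adopt_cloud_native_services=False)` returns `MigrationPattern.REHOST`.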

Migration Pattern Workflow

When you migrate your data lake to Oracle Cloud, follow this workflow from requirements through cutover to the new environment:

- Discovery and requirements: Discover and catalog the current system to list the requirements for the new OCI environment

- Assessment: Assess the required OCI services and tools

- Design: Design your solution architecture and sizing for OCI

- Plan: Create a detailed plan mapping your time and resources

- Provision: Provision and configure the required resources in OCI

- Migrate Data: Transfer the data and metadata into the data stores of the OCI services you selected

- Migrate workload: Migrate your workloads and applications to OCI services using the migration pattern you selected

- Automate Pipeline: Orchestrate and schedule workflow pipelines for automation

- Test and Validate: Plan functional and performance testing and validation for the final OCI environment

- Cut over: Turn off the source environment and switch to using only the new OCI-based environment
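The last two phases depend on each other: you turn off the source environment only after testing and validation succeed in OCI. This minimal Python sketch, assuming you record completed phases as a list of the phase names above, checks that phases ran in workflow order and that validation passed before allowing cutover. `MIGRATION_PHASES`, `next_phase`, and `can_cut_over` are hypothetical names.

```python
from typing import Optional

# Migration workflow phases, in the order listed above.
MIGRATION_PHASES = [
    "Discovery and requirements",
    "Assessment",
    "Design",
    "Plan",
    "Provision",
    "Migrate Data",
    "Migrate workload",
    "Automate Pipeline",
    "Test and Validate",
    "Cut over",
]


def next_phase(completed: list[str]) -> Optional[str]:
    """Return the next phase, or None when all phases are done.

    Raises ValueError if the completed phases are not a prefix of the workflow.
    """
    if completed != MIGRATION_PHASES[: len(completed)]:
        raise ValueError("phases must be completed in workflow order")
    if len(completed) < len(MIGRATION_PHASES):
        return MIGRATION_PHASES[len(completed)]
    return None


def can_cut_over(completed: list[str], validation_passed: bool) -> bool:
    """Allow cutover only when every earlier phase is done and validation passed."""
    return next_phase(completed) == "Cut over" and validation_passed
```

Keeping the source environment running until `can_cut_over` returns `True` gives you a fallback if testing in OCI uncovers problems.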