Modern App Development - Big Data and Analytics

Design Principles

When implementing a big data and analytics pattern, use the following design principles for Modern App Development.

- Use fully managed services to eliminate complexity across application development, runtimes, and data management

Your data is only as valuable as your ability to use it. Big data tools are popular in the open source community, and most of their capabilities were first adopted on-premises through open source projects like Hadoop, Spark, and Hive.

Use the Oracle Big Data Service, which offers all the popular open source Hadoop components as a managed service in Oracle Cloud. For Spark applications, use Oracle Cloud Infrastructure Data Flow, which offers a fully managed, serverless, cloud native Spark platform. Using these services ensures that you can take advantage of the latest innovations in the open source community and your team's existing skills without any concern about vendor lock-in. Continue to use the speed and value of open source with Oracle's native premium capabilities such as Oracle Autonomous Data Warehouse external tables and Oracle Cloud SQL.

Deploying and operating big data services, especially open source components, can have an exponential impact on operating expenses (OpEx). Start with our managed Hadoop offerings, or PaaS services like Data Flow, before taking a do-it-yourself (DIY) approach. Often, managed open source services are much less expensive over time when factoring in OpEx.

- Automate build, test, and deployment

DataOps is important for ensuring that you can derive maximum benefits from your big data pipelines. Use the Oracle Cloud Infrastructure Data Integration service to ingest data, implement ETL processing and ELT pushdown, and create pipelines for connecting tasks in a sequence or in parallel to facilitate a process. Pipelines can include various popular data sources within and outside of Oracle Cloud. Use Data Integration scheduling capabilities to define when and how often to run each task. For Hadoop Distributed File System (HDFS)-based data lakes in the Big Data Service, use tools like Oozie and Airflow to orchestrate your end-to-end data pipelines. Use Oracle Database Cloud Service Management to define database jobs that run against a set of databases on a schedule.
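As a rough illustration of connecting tasks "in a sequence or in parallel", the sketch below groups the tasks of a pipeline into stages using only the Python standard library. The task names and the `plan_stages` helper are hypothetical, and this is not the Data Integration, Oozie, or Airflow API; it only shows the dependency ordering that such orchestrators work out for you.

```python
from graphlib import TopologicalSorter


def plan_stages(dependencies):
    """Group tasks into stages.

    Tasks inside one stage have no dependencies on each other, so they
    can run in parallel. Stages themselves run in sequence.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for a stable order
        stages.append(ready)
        sorter.done(*ready)
    return stages


# Hypothetical pipeline: each key depends on the tasks in its set.
PIPELINE = {
    "ingest_orders": set(),
    "ingest_clicks": set(),
    "transform": {"ingest_orders", "ingest_clicks"},
    "load_warehouse": {"transform"},
}

for number, tasks in enumerate(plan_stages(PIPELINE), start=1):
    print(f"stage {number}: {', '.join(tasks)}")
```

Here the two ingest tasks share a stage because neither depends on the other, while the transform and load tasks each wait for the stage before them.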

- Use converged databases with full featured support across all data

Use the tools that best simplify, automate, and accelerate the consolidation of data for maximum business value. When building data lakes for Oracle Cloud Infrastructure Data Science with unstructured, semi-structured, and structured data, use the Object Storage service for your data lake. To leverage HDFS and open source Hadoop tools, use the Big Data Service to build your data lake. For data warehouses, departmental data marts, and serving and presentation layers with structured data, use Autonomous Data Warehouse, which is optimized for these scenarios. Autonomous Data Warehouse also provides connectivity to analytics, business intelligence, and reporting tools like Oracle Analytics Cloud.

- Instrument end-to-end monitoring and tracing

Big data apps typically comprise multiple services owned by different application and business teams. Observability tools are important for gaining visibility into the behavior of these inherently distributed systems.

Monitor the operational health of end-to-end data pipelines by having all your workloads emit health metrics to Oracle Cloud Infrastructure Monitoring. Define custom metric thresholds for alarms, and get notified or take action whenever a given threshold is reached. Use OCI Logging for all the OCI service logs in your tenancy and custom logs that you submit from your data applications. To troubleshoot issues and optimize performance, use OCI Database Management for Autonomous Data Warehouse to see database status, average active sessions, alarms, CPU usage, storage usage, fleet diagnostics, and tuning.
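The idea of a custom metric threshold can be sketched in a few lines of plain Python. This is a simplified model and not the OCI Monitoring API: the `Alarm` class, the metric name, and the averaging window are all assumptions made for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Alarm:
    metric_name: str
    threshold: float
    window: int  # number of most recent datapoints to average

    def is_firing(self, datapoints):
        """Return True when the mean of the last `window` values exceeds the threshold."""
        recent = datapoints[-self.window:]
        return bool(recent) and mean(recent) > self.threshold


# Hypothetical metric: rows rejected per pipeline run.
alarm = Alarm(metric_name="rejected_rows", threshold=100.0, window=3)
print(alarm.is_firing([10, 20, 30, 40]))     # averages 20, 30, 40
print(alarm.is_firing([10, 200, 150, 120]))  # averages 200, 150, 120
```

Averaging over a short window rather than reacting to a single datapoint is one common way to avoid notifications for brief spikes; the right window depends on how often your workloads emit metrics.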

- Implement a defense-in-depth approach to secure the app lifecycle

Plan to keep your data secure. Track all jobs that bring data in and take data out of your data lake, keep data lineage metadata, and ensure that access control policies are updated. Use Data Catalog to help with governance.

Follow the principle of least privilege, and ensure that users and service accounts have only the minimal privilege necessary to perform their tasks. Control who has access to the data platform components by using Oracle Cloud Infrastructure Identity and Access Management. Use multi-factor authentication in Oracle Cloud Infrastructure Identity and Access Management to enforce strong authentication for administrators. Store sensitive information such as passwords and authentication tokens in the Oracle Cloud Infrastructure Vault service.
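To make least privilege concrete, the sketch below builds IAM policy statements and picks the weakest verb that still covers what a group needs. It assumes the general statement form `Allow group <group> to <verb> <resource-type> in compartment <compartment>` and the cumulative verbs `inspect`, `read`, `use`, and `manage`. The group and compartment names are hypothetical, and the helper functions are not part of any Oracle SDK.

```python
# Verbs ordered from least to most access; each one includes the ones before it.
VERBS = ("inspect", "read", "use", "manage")


def least_privilege(required_verbs):
    """Pick the weakest verb that still covers every required verb."""
    return VERBS[max(VERBS.index(verb) for verb in required_verbs)]


def policy_statement(group, verb, resource_type, compartment):
    """Format one policy statement as plain text."""
    if verb not in VERBS:
        raise ValueError(f"unknown verb: {verb}")
    return f"Allow group {group} to {verb} {resource_type} in compartment {compartment}"


# Hypothetical group that only lists and downloads data lake objects.
verb = least_privilege(["inspect", "read"])
print(policy_statement("DataAnalysts", verb, "objects", "analytics"))
```

Granting `read` here, rather than `manage`, means the group can view and download objects but cannot delete or overwrite them.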

For the Big Data Service, configure only the necessary security rules to control the network, and use Apache Ranger to manage data security across your Hadoop cluster. Use Oracle Data Safe to safeguard your data in Autonomous Data Warehouse. Use strong passwords for your databases. Create database resources in private subnets and use virtual cloud network (VCN) security groups or security lists to enforce network access control to database instances. Give database delete permissions to a minimum possible number of Oracle Cloud Infrastructure Identity and Access Management users and groups.

To protect your data sources from security vulnerabilities, give the Data Catalog and Data Integration services credentials for read-only accounts only.

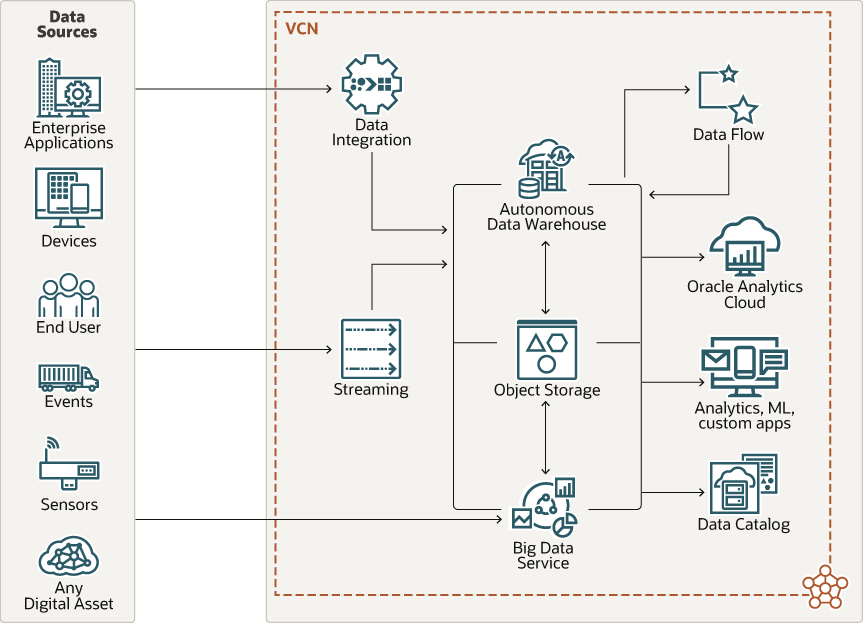

Architecture

Description of the illustration big-data-and-analytics.png

This architecture uses the following data sources:

- Enterprise applications

- Devices

- End user

- Events

- Sensors

- Any digital asset

This architecture has the following components within the VCN:

- Virtual cloud network (VCN)

A VCN is a customizable, software-defined network that you set up in an Oracle Cloud Infrastructure region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.
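The non-overlap rules for CIDR blocks and subnets can be checked before you create anything. The following sketch uses Python's standard `ipaddress` module; the `validate_subnets` helper and the address ranges are illustrative assumptions, not an OCI API.

```python
import ipaddress


def validate_subnets(vcn_cidrs, subnet_cidrs):
    """Return a list of problems found in a planned VCN layout."""
    vcn_blocks = [ipaddress.ip_network(cidr) for cidr in vcn_cidrs]
    subnets = [ipaddress.ip_network(cidr) for cidr in subnet_cidrs]
    problems = []

    # The CIDR blocks of the VCN must not overlap each other.
    for i, first in enumerate(vcn_blocks):
        for second in vcn_blocks[i + 1:]:
            if first.overlaps(second):
                problems.append(f"{first} overlaps {second}")

    # Every subnet must sit inside one of the VCN blocks.
    for subnet in subnets:
        if not any(subnet.subnet_of(block) for block in vcn_blocks):
            problems.append(f"{subnet} is outside the VCN")

    # Subnets must not overlap each other.
    for i, first in enumerate(subnets):
        for second in subnets[i + 1:]:
            if first.overlaps(second):
                problems.append(f"{first} overlaps {second}")

    return problems


print(validate_subnets(["10.0.0.0/16"], ["10.0.1.0/24", "10.0.2.0/24"]))
print(validate_subnets(["10.0.0.0/16"], ["10.0.1.0/24", "10.0.1.128/25"]))
```

An empty list means the planned layout is consistent; the second call reports the overlapping pair.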

- Data Integration

Oracle Cloud Infrastructure Data Integration is a fully managed, serverless cloud service that ingests and transforms data for data science and analytics. It helps simplify complex ETL and ELT into data lakes and warehouses with Oracle’s modern, no-code data flow designer. You can use one of the ready-to-use operators (such as a join, aggregate, or expression) to shape your data.

- Streaming

Oracle Cloud Infrastructure Streaming service provides a fully managed, scalable, and durable solution for ingesting and consuming high-volume data streams in real time. Use Streaming for any use case in which data is produced and processed continually and sequentially in a publish-subscribe messaging model. Examples include messaging, metric and log ingestion, web or mobile activity data ingestion, and infrastructure and apps event processing.
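The publish-subscribe model described here can be pictured as an append-only log that each consumer group reads in order at its own pace. The in-memory `Stream` class below is a teaching sketch under that assumption; it is not the Streaming service API and it leaves out partitions, retention, and durability.

```python
from collections import defaultdict


class Stream:
    """A toy append-only stream with per-group read positions."""

    def __init__(self):
        self._log = []                    # messages in arrival order
        self._offsets = defaultdict(int)  # consumer group -> next offset to read

    def publish(self, message):
        """Append a message and return its offset in the log."""
        self._log.append(message)
        return len(self._log) - 1

    def consume(self, group, limit=10):
        """Return up to `limit` unread messages for a group, in order."""
        start = self._offsets[group]
        batch = self._log[start:start + limit]
        self._offsets[group] = start + len(batch)
        return batch


stream = Stream()
for event in ("click", "view", "purchase"):
    stream.publish(event)

print(stream.consume("metrics", limit=2))  # first two events
print(stream.consume("metrics"))           # the remaining event
print(stream.consume("audit"))             # a second group sees all events
```

Because each group keeps its own offset, adding a new consumer does not affect what existing consumers receive.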

- Oracle Big Data Service

Oracle Big Data Service is a fully managed, automated cloud service that provides clusters with a Hadoop environment. Big Data Service makes it easy for customers to deploy Hadoop clusters of all sizes and simplifies the process of making Hadoop clusters both highly available and secure.

- Oracle Autonomous Data Warehouse

Oracle Autonomous Data Warehouse is a self-driving, self-securing, self-repairing database service that is optimized for data warehousing workloads. You do not need to configure or manage any hardware, or install any software. Oracle Cloud Infrastructure handles creating the database, as well as backing up, patching, upgrading, and tuning the database.

- Object Storage

Object storage provides quick access to large amounts of structured and unstructured data of any content type, including database backups, analytic data, and rich content such as images and videos. You can safely and securely store and then retrieve data directly from the internet or from within the cloud platform. You can seamlessly scale storage without experiencing any degradation in performance or service reliability. Use standard storage for "hot" storage that you need to access quickly, immediately, and frequently. Use archive storage for "cold" storage that you retain for long periods of time and seldom or rarely access.

- Data Flow

Oracle Cloud Infrastructure Data Flow is a fully managed, PaaS-level Spark analytics platform that enables you to create, edit, and run Spark jobs at any scale without the need for clusters, an operations team, or highly specialized Spark knowledge. Because it’s serverless, there’s no infrastructure for you to deploy or manage. It’s entirely driven by REST APIs, giving you easy integration with apps or workflows.

- Oracle Analytics Cloud

This best-in-class platform for modern analytics in the cloud empowers business analysts and consumers. Oracle Analytics Cloud offers modern AI-powered self-service analytics capabilities for data preparation, discovery, and visualization; intelligent enterprise and on demand reporting together with augmented analysis; and natural language processing and generation. Whether you’re a business analyst, data engineer, citizen data scientist, departmental manager, domain expert, or executive, Oracle Analytics Cloud can help you turn data into insights.

- Analytics, ML, and custom apps

Analytics services, Oracle Machine Learning, and custom applications that will catalog, prepare, process, and analyze big data.

- Data Catalog

Oracle Cloud Infrastructure Data Catalog is a fully managed, self-service data discovery and governance solution for your enterprise data. It provides data engineers, data scientists, data stewards, and chief data officers a single collaborative environment to manage the organization's technical, business, and operational metadata.

With this architecture pattern, you can manage all types of structured, semi-structured, and unstructured data with a modern data lake house pattern. Ingest all types of data into an object storage-based data lake by using the Data Integration and Streaming services. Use Oracle Cloud Infrastructure Data Flow and Oracle Big Data Service for processing, use Oracle Cloud Infrastructure Data Catalog for cataloging, use Oracle Autonomous Data Warehouse as the serving store, and use Oracle Analytics Cloud for analytics and business intelligence.

The following process describes the flow shown in the diagram:

- Oracle Cloud Infrastructure Data Integration and Oracle Cloud Infrastructure Streaming ingest data from different types of sources. The service that’s used depends on whether the data is batch, streaming, or synchronized database records, and whether the data is on-premises or in the cloud.

- Data can be delivered to Object Storage for shared access by cloud services and for processing before it’s stored in Oracle Autonomous Data Warehouse or Big Data Service.

- Data can also be delivered directly to Oracle Autonomous Data Warehouse and then transformed by using ELT capabilities, or records from other databases can be directly ingested. Data can also be delivered directly as-is to Big Data Service.

- Oracle Autonomous Data Warehouse can query data from Object Storage or ingest data from Object Storage through an API or with the help of Data Integration. Big Data Service can ingest data from or query data in Object Storage.

- Oracle Analytics Cloud can access data in Oracle Autonomous Data Warehouse for any of the visualization and business analytics capabilities that the service provides.

- Oracle Cloud Infrastructure Data Catalog harvests metadata from Oracle Autonomous Data Warehouse, Object Storage, and Big Data Service Hive data sources. You interact with Data Catalog to harvest, find, and manage the data.

- You can implement any custom apps for analytics and machine learning workloads by using data from Oracle Autonomous Data Warehouse, Big Data Service, and Object Storage.

- Business analysts can use Oracle Analytics Cloud to consume data from both Oracle Autonomous Data Warehouse and Big Data Service.

- Data scientists can use Oracle Machine Learning Notebooks in Oracle Autonomous Data Warehouse and Oracle Machine Learning for Spark in Oracle Big Data Service to train machine learning models and to work with spatial and graph data.

Alternative Architectures

Consider the alternatives to the architecture described in this pattern.

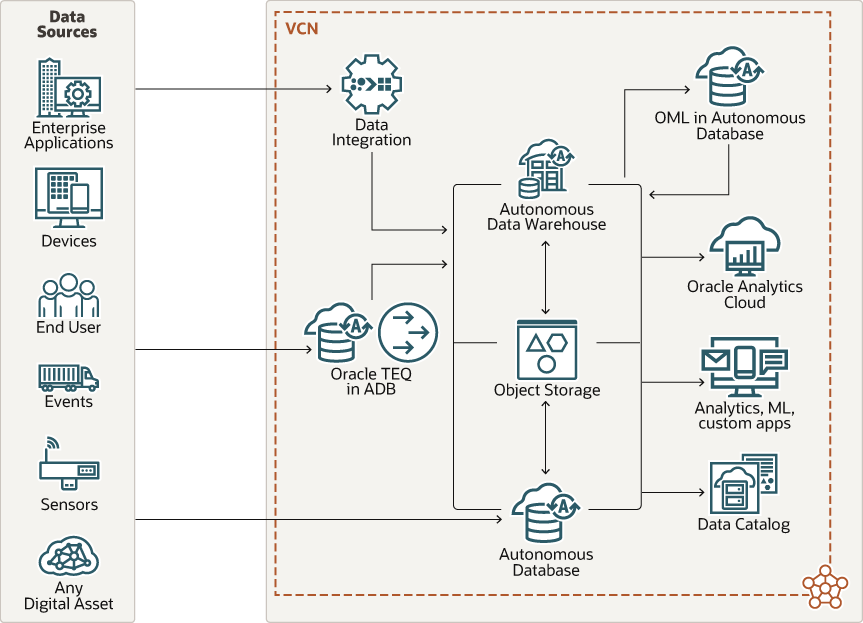

Use a single database or data warehouse to store and analyze all types of data. In this alternative architecture, various data sources (end users, devices, events, sensors, and applications) feed data to the database through data integration (Oracle GoldenGate) and Oracle Transactional Event Queues for streaming data. The data is stored in Oracle Autonomous Database (Oracle Autonomous Transaction Processing and Oracle Autonomous Data Warehouse) along with object store support for big data using Cloud SQL. Use Oracle Machine Learning for model building and deployment, and use Oracle Analytics Cloud and Oracle Data Cloud for insights into the data.

The following diagram illustrates this alternative architecture.

Description of the illustration alt-architecture-big-data.png

This architecture uses the following data sources:

- Enterprise applications

- Devices

- End user

- Events

- Sensors

- Any digital asset

This architecture has the following components within the VCN:

- Virtual cloud network (VCN)

A VCN is a customizable, software-defined network that you set up in an Oracle Cloud Infrastructure region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

- Data Integration

Oracle Cloud Infrastructure Data Integration is a fully managed, serverless cloud service that ingests and transforms data for data science and analytics. It helps simplify complex ETL and ELT into data lakes and warehouses with Oracle’s modern, no-code data flow designer. You can use one of the ready-to-use operators (such as a join, aggregate, or expression) to shape your data.

- Oracle Cloud Infrastructure Transactional Event Queues (TEQ) in ADB

Oracle Transactional Event Queues in an autonomous database provide database-integrated message queuing functionality. This highly optimized, partitioned implementation leverages the functions of Oracle Database so that producers and consumers can exchange messages with high throughput, store messages persistently, and propagate messages between queues on different databases. Each queue supports multiple event streams.

- Oracle Autonomous Data Warehouse

Oracle Autonomous Data Warehouse is a self-driving, self-securing, self-repairing database service that is optimized for data warehousing workloads. You do not need to configure or manage any hardware, or install any software. Oracle Cloud Infrastructure handles creating the database, as well as backing up, patching, upgrading, and tuning the database.

This cloud data warehouse service eliminates all the complexities of operating a data warehouse, securing data, and developing data-driven applications. It automates provisioning, configuring, securing, tuning, scaling, and backing up the data warehouse. It includes tools for self-service data loading, data transformations, business models, automatic insights, and built-in converged database capabilities that enable simpler queries across multiple data types and machine learning analysis.

- Object Storage

Object storage provides quick access to large amounts of structured and unstructured data of any content type, including database backups, analytic data, and rich content such as images and videos. You can safely and securely store and then retrieve data directly from the internet or from within the cloud platform. You can seamlessly scale storage without experiencing any degradation in performance or service reliability. Use standard storage for "hot" storage that you need to access quickly, immediately, and frequently. Use archive storage for "cold" storage that you retain for long periods of time and seldom or rarely access.

This internet-scale, high-performance storage platform offers reliable and cost-efficient data durability. The Object Storage service can store an unlimited amount of unstructured data of any content type, including analytic data and rich content, like images and videos.

- Autonomous database

Oracle Cloud Infrastructure autonomous databases are fully managed, preconfigured database environments that you can use for transaction processing and data warehousing workloads. You do not need to configure or manage any hardware, or install any software. Oracle Cloud Infrastructure handles creating the database, as well as backing up, patching, upgrading, and tuning the database.

- Oracle Machine Learning in an autonomous database

Oracle Machine Learning runs in Oracle Autonomous Database (Autonomous Transaction Processing and Autonomous Data Warehouse) and is used for model building and deployment.

- Oracle Analytics Cloud

This best-in-class platform for modern analytics in the cloud empowers business analysts and consumers. Oracle Analytics Cloud offers modern AI-powered self-service analytics capabilities for data preparation, discovery, and visualization; intelligent enterprise and on demand reporting together with augmented analysis; and natural language processing and generation. Whether you’re a business analyst, data engineer, citizen data scientist, departmental manager, domain expert, or executive, Oracle Analytics Cloud can help you turn data into insights.

- Analytics, ML, and custom apps

Analytics services, Oracle Machine Learning, and custom applications that will catalog, prepare, process, and analyze big data.

- Data Catalog

Oracle Cloud Infrastructure Data Catalog is a fully managed, self-service data discovery and governance solution for your enterprise data. It provides data engineers, data scientists, data stewards, and chief data officers a single collaborative environment to manage the organization's technical, business, and operational metadata.

Oracle Cloud Infrastructure Data Catalog is a metadata management service that helps data professionals discover data and support data governance.

- Oracle GoldenGate

This fully managed service offers a real-time, log-based change data capture (CDC) and replication software platform to meet the needs of today’s transaction-driven applications. The software provides capture, routing, transformation, and delivery of transactional data across heterogeneous environments in real time.

An alternative is to build and run your own open source platforms on Oracle Cloud Infrastructure Compute. However, this option can result in high OpEx.

Considerations and Antipatterns

Consider the following for big data and analytics.

- Reduce data copies and movement

Data movement is costly, consumes resources and time, and can reduce data fidelity. Choose the right service to store and process your data, depending on data types, data quality, and required transformations. Use Object Storage for your data lake storage for all types of raw data. Use Oracle Big Data Service to leverage HDFS and Hadoop ecosystem tools. Use Oracle Autonomous Data Warehouse to store transformed data for presentation. Using the right store helps you avoid copying and moving data and reduces duplicate copies of data, which can be hard to maintain and keep synchronized.

- Provide your users the data interface they need

Enterprise data and analytics platforms have many types of users: data engineers, data analysts, application developers, big data engineers, database admins, business analysts, data scientists, data stewards, and other consumers. All of them have different needs and preferences for consuming data. Understanding all your use cases and data consumer requirements is important. For Hadoop ecosystem tools, use Oracle Big Data Service. For SQL queries and interfacing with business intelligence tools, use Autonomous Data Warehouse. For Spark applications, use the Oracle Cloud Infrastructure Data Flow service.

- Catalog your data assets and establish a common vocabulary

Data in enterprises is typically a shared asset across multiple teams. Use Data Catalog to harvest metadata from data sources across OCI and on-premises to create an inventory of data assets. Doing so helps data consumers easily find the data that they need for analytics. Use Data Catalog to also create and manage enterprise glossaries with categories, subcategories, and business terms to build a taxonomy of business concepts with user-added tags to make search more productive.

- Be cost and performance conscious

Costs for data and analytics platforms can quickly rise unless the platforms are designed and operated properly. All data has certain performance requirements related to latency and throughput. Right-size your environments by using the smallest compute shape and least amount of storage in the service that still meets your performance requirements. Terminate any unused resources. Use Data Flow for Spark apps because you can choose the number of cores to use for your job, which gives you the performance you need while minimizing costs. For Autonomous Data Warehouse, scale the number of CPU cores or the storage capacity of the database based on your needs. Also use its autoscaling feature, which lets your database automatically use up to three times the current base number of CPU cores at any time and automatically decreases the number of cores when not required.
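The autoscaling limit is simple arithmetic: with a base of 4 CPU cores, the database can use at most 3 × 4 = 12 cores. The sketch below models only that bound. The function names are hypothetical, and the service itself decides when to scale; this is not how Autonomous Data Warehouse is configured.

```python
AUTOSCALE_FACTOR = 3  # "up to three times the current base number of CPU cores"


def core_range(base_cores, autoscaling=True):
    """Return the (minimum, maximum) cores available to the database."""
    upper = base_cores * AUTOSCALE_FACTOR if autoscaling else base_cores
    return base_cores, upper


def cores_in_use(base_cores, demand, autoscaling=True):
    """Clamp the demanded cores to what the configuration allows."""
    low, high = core_range(base_cores, autoscaling)
    return max(low, min(demand, high))


print(core_range(4))                           # base and autoscaled ceiling
print(cores_in_use(4, demand=20))              # capped at the ceiling
print(cores_in_use(4, demand=20, autoscaling=False))  # capped at the base
```

When sizing the base, this bound is worth keeping in mind: a workload whose peaks exceed three times the base still needs a larger base.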

Antipatterns

When designing an implementation, consider the following:

- Lack of data cataloging and governance can convert data lakes into data swamps.

- Storing data lake data in block volumes instead of object storage leads to a higher-cost solution.

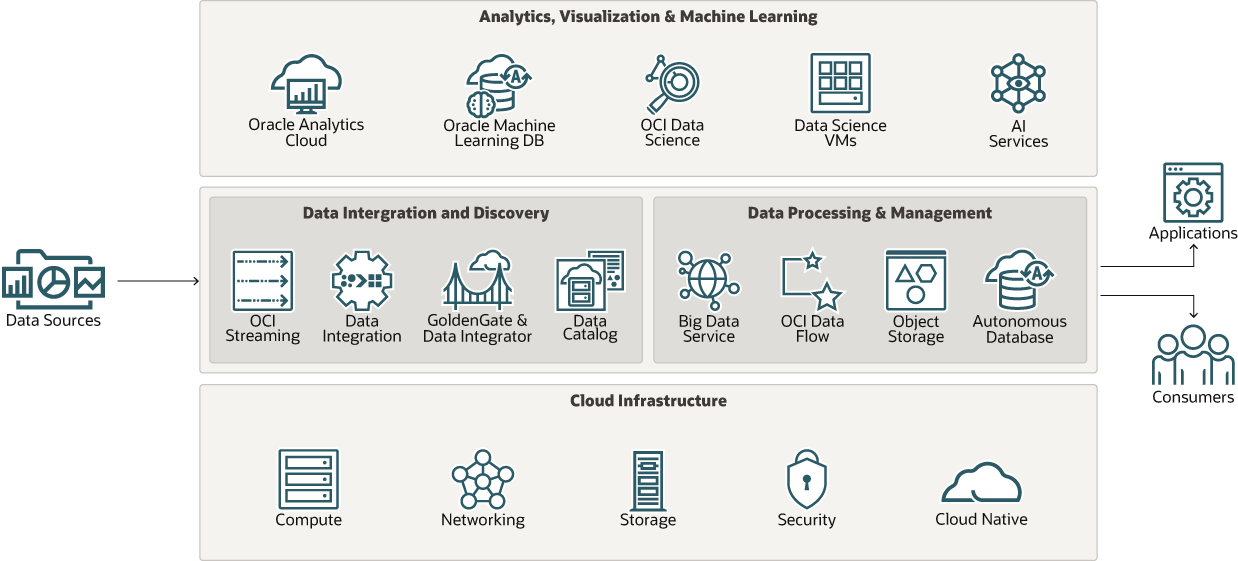

Big Data and Analytics Pattern

This architecture pattern provides guidance on how to use Oracle Cloud Infrastructure (OCI) data and analytics services to ingest, store, catalog, prepare, process, and analyze big data to implement several use cases.

These use cases include data warehousing; analytics, business intelligence, and reporting; extract, transform, and load (ETL) and extract, load, and transform (ELT) patterns; data lake and lake house patterns; and training machine learning models.

The following diagram shows Oracle services related to data and analytics.

Description of the illustration big-data-and-analytics-pattern.png

- Use Oracle Autonomous Data Warehouse to write SQL queries for structured data as well as over external tables of unstructured and semi-structured data.

- Use Oracle Big Data Service to use Apache Hadoop ecosystem tools such as Hive, Spark, Kafka, and HBase to ingest, store, and process all kinds of unstructured and semi-structured data.

- Use Oracle Cloud Infrastructure Object Storage to store big data and build data lakes for all types of data.

- Use Oracle Cloud Infrastructure Data Flow for Apache Spark native jobs.

- Use Oracle Cloud Infrastructure Data Integration to ingest data from various data sources along with simplifying ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processing.

- Use Oracle Cloud Infrastructure Data Catalog to harvest metadata from various data sources to build an inventory of assets, a business glossary, and a common metastore for data lakes.

- Use Oracle Cloud Infrastructure Streaming to ingest real-time data streams with Kafka-compatible APIs.

Example Use Cases

The following are example implementations that use Oracle Cloud Infrastructure (OCI) data and analytics services to ingest, store, catalog, prepare, process, and analyze big data.

- Data warehousing and business analytics

Use Oracle Autonomous Data Warehouse as a data warehouse or data mart with Oracle Analytics Cloud.

- Data Integration ingests data from intended sources. The type of data integration used depends on whether the data is batch, streaming, or synchronized database records, and whether the data is on-premises or in the cloud.

- Data can be delivered to Object Storage for shared access by cloud services and for processing before it’s stored in Autonomous Data Warehouse or Big Data. Data can also be delivered directly to Autonomous Data Warehouse and then transformed using ELT capabilities, or records from other databases can be directly ingested.

- Oracle Analytics Cloud provides visualization of data in the database, including machine learning results. Oracle Analytics Cloud pushes down as much processing as possible to Autonomous Data Warehouse for data flow processing.

- Object Storage is optional for active archive or data sharing. In an active archive, less frequently used data is moved from Autonomous Data Warehouse to a lower-cost storage tier (Object Storage). The data can still be queried from Object Storage, but performance is slower. Object Storage can also be used to store data that is shared between cloud services.

- Oracle Cloud Infrastructure Data Catalog harvests metadata from Autonomous Data Warehouse and Object Storage data sources. You interact with Data Catalog to use and manage the catalog.

- Manage all types of data with a data lake and data warehouse for a lake house pattern

Manage data in both Autonomous Data Warehouse and Big Data, and use Oracle Analytics Cloud for visualization of the data.

- Data Integration ingests data from intended sources. The type of data integration used depends on whether the data is batch, streaming, or synchronized database records, and whether the data is on-premises or in the cloud.

- Data can be delivered to Object Storage for shared access by cloud services and for processing before it’s stored in Autonomous Data Warehouse or Oracle Big Data Service. Data can also be directly delivered to Autonomous Data Warehouse and then transformed using ELT capabilities, or records from other databases can be directly ingested. Data can also be delivered directly as-is to Big Data.

- Autonomous Data Warehouse can query data from Object Storage or ingest data from Object Storage through an API or with the help of Oracle Cloud Infrastructure Data Integration. Big Data can ingest data from or query data in Object Storage.

- Data can be transferred from Big Data to Autonomous Data Warehouse by using the Big Data connectors.

- Oracle Analytics Cloud can access data from multiple sources, including Autonomous Data Warehouse and Big Data, to deliver augmented analytics, data visualizations, and self-service business analytics capabilities.

- Business analysts can use Oracle Analytics Cloud to consume data from both Autonomous Data Warehouse and Big Data.

- Data Catalog harvests metadata from Autonomous Data Warehouse, Object Storage, and Big Data Hive data sources. You interact with Data Catalog to harvest, find, and manage the data.

- Build a data lake with OCI cloud-native services

Build a data lake in Object Storage and use cloud native data and AI services to modernize and leverage the latest technical innovations.

- Use Data Flow for Spark batch processes and for ephemeral Spark clusters.

- Use Object Storage with the Hadoop Distributed File System (HDFS) connector as the HDFS store, in place of HDFS within the Apache Hadoop or Spark cluster.

- Use Oracle Cloud Infrastructure Data Integration to ingest data and for ETL jobs.

- Use Oracle Cloud Infrastructure Data Catalog for data discovery and governance.

- Use Oracle Cloud Infrastructure Data Science for machine learning requirements.

- Use Oracle Cloud Infrastructure Streaming for managed ingestion of streams, and use Data Integration for a managed integration service. These services might replace self-managed Kafka or Flume.

- For the rest of the components in the stack, for which it’s not easy to use a managed OCI native service, use the Oracle Cloud Infrastructure Compute and storage services.

- Build an HDFS-based data lake using Oracle Big Data Service

Use Oracle Big Data Service to build your data lake in HDFS. All the Apache Hadoop components, including Hive, HBase, Spark, and Oozie, are made available by the managed Hadoop clusters provided by Oracle Big Data Service, and you can use them based on your requirements. Use managed cloud native services where possible.

- Use Big Data for HDFS and other Hadoop components, including Hive, HBase, and Oozie.

- Use Data Flow for Spark batch processes and for ephemeral Spark clusters to reduce the Big Data Service cluster size where possible.

- Use Data Catalog for data discovery and governance.

- Use Data Science for machine learning requirements.

- Data lab with Oracle Big Data Service

Explore and experiment with data. Oracle Big Data Service provides the core data management and data science tools in this use case.

- Oracle Analytics Cloud provides additional capabilities to visualize data that is useful in understanding both source data and machine learning results.

- Object Storage provides additional low-cost storage for sharing data with other cloud services and persisting data in Oracle Big Data when the data lab is suspended.

- Data Integration can be added to ingest data into Object Storage if needed.

- Data Catalog harvests metadata from Object Storage and Big Data Hive. You interact with Data Catalog to use and manage the catalog.

- Data scientists use Oracle Machine Learning for Spark in Oracle Big Data to build machine learning models.

- Self-service data discovery and governance with Oracle Cloud Infrastructure Data Catalog

Data Catalog harvests metadata from different types of data sources to create a catalog of data entities and their attributes. Business analysts, data scientists, data engineers, and data stewards can search the catalog and build a business glossary for attributes.

- Spark processing with Oracle Cloud Infrastructure Data Flow

Spark jobs are submitted to Data Flow. When the job runs, data is read from Object Storage and processed according to the job code, and the result is written back to Object Storage. Other services can retrieve the results from Object Storage as needed.
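A job of this kind typically receives its input and output locations as Object Storage URIs. The sketch below assumes the `oci://<bucket>@<namespace>/<path>` form used by the HDFS connector for Object Storage; the bucket, namespace, and object names are hypothetical, and the helper functions are illustrative rather than part of any Oracle library.

```python
from urllib.parse import urlparse


def oci_uri(bucket, namespace, object_path):
    """Build an Object Storage URI in the assumed oci://bucket@namespace/path form."""
    return f"oci://{bucket}@{namespace}/{object_path.lstrip('/')}"


def parse_oci_uri(uri):
    """Split an oci:// URI into (bucket, namespace, object_path)."""
    parsed = urlparse(uri)
    if parsed.scheme != "oci" or "@" not in parsed.netloc:
        raise ValueError(f"not an oci:// URI: {uri}")
    bucket, namespace = parsed.netloc.split("@", 1)
    return bucket, namespace, parsed.path.lstrip("/")


# Hypothetical locations for one job run: read raw data, write results.
source = oci_uri("raw-data", "mytenancy", "events/2024/part-0.parquet")
target = oci_uri("curated-data", "mytenancy", "events_daily/")
print(source)
print(target)
print(parse_oci_uri(source))
```

Keeping input and output in separate buckets, as in this example, is one way to let other services read results without also granting them access to the raw data.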

- Training machine learning models directly in Oracle Autonomous Data Warehouse and Oracle Big Data Service

See the Data Science-based machine learning model for details about training machine learning models using Oracle Cloud Infrastructure Data Science. The objective of this use case is to manage data in both Oracle Autonomous Data Warehouse and Oracle Big Data Service. Oracle Analytics Cloud provides visualization of data, including machine learning results. Functionality is limited to the capabilities of Oracle Machine Learning.

- Oracle Cloud Infrastructure Data Integration ingests data from intended sources. The type of data integration used depends on whether the data is batch, streaming, or synchronized database records, and whether the data is on-premises or in the cloud.

- Data can be delivered to Object Storage for shared access by cloud services and for processing before it’s stored in Oracle Autonomous Data Warehouse or Oracle Big Data Service. Data can be directly delivered to Oracle Autonomous Data Warehouse and then transformed using ELT capabilities, or records from other databases can be directly ingested. Data can also be delivered directly as-is to Oracle Big Data Service.

- Oracle Autonomous Data Warehouse can query data from Object Storage or ingest data from Object Storage through an API or with the help of Data Integration. Oracle Big Data Service can ingest data from or query data in Object Storage.

- Data can be transferred from Oracle Big Data Service to Oracle Autonomous Data Warehouse by using the Big Data connectors.

- Oracle Analytics Cloud can access data from multiple sources, including Oracle Autonomous Data Warehouse and Oracle Big Data Service, to deliver augmented analytics, data visualizations, and self-service business analytics capabilities.

- Business analysts and data scientists can use Oracle Analytics Cloud to consume data from both Oracle Autonomous Data Warehouse and Oracle Big Data Service.

- Data scientists can use Oracle Machine Learning Notebooks in Oracle Autonomous Data Warehouse to create machine learning models and to work with spatial data. They can also use Oracle Machine Learning for Spark in Big Data to create machine learning models and to work with spatial and graph data.

- Oracle Cloud Infrastructure Data Catalog harvests metadata from Oracle Autonomous Data Warehouse, Big Data Hive, and Object Storage data sources. You interact with Data Catalog to use and manage the catalog.
