Deploy the BeeGFS parallel file system

BeeGFS is a parallel cluster file system, developed with a strong focus on input-output performance and designed for easy installation and management. Using BeeGFS, you can build a high-performance computing (HPC) file server on Oracle Cloud Infrastructure.

BeeGFS transparently spreads user data across multiple servers. By increasing the number of servers and disks in the system, you can scale the performance and capacity of the file system from small clusters up to enterprise-class systems with thousands of nodes.

Architecture

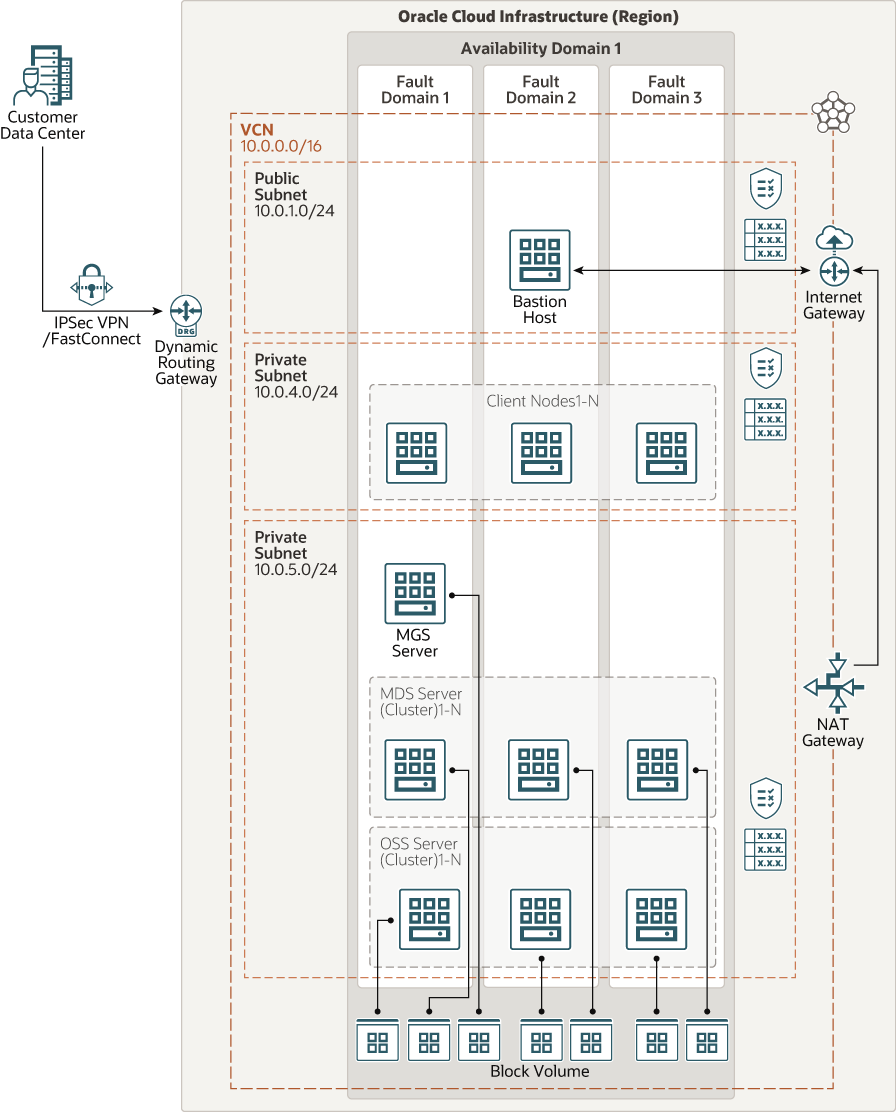

This reference architecture uses a region with a single availability domain and regional subnets. You can use the same reference architecture in a region with multiple availability domains. We recommend that you use regional subnets for your deployment, regardless of the number of availability domains.

The following diagram illustrates this reference architecture.

Description of the illustration architecture-deploy-beegfs.png

The architecture has the following components:

- Region

A region is a localized geographic area composed of one or more availability domains. Regions are independent of other regions, and vast distances can separate them (across countries or continents).

- Availability domains

Availability domains are standalone, independent data centers within a region. The physical resources in each availability domain are isolated from the resources in the other availability domains, which provides fault tolerance. Availability domains don’t share infrastructure such as power or cooling, or the internal availability domain network. So, a failure at one availability domain is unlikely to affect the other availability domains in the region.

- Fault domains

A fault domain is a grouping of hardware and infrastructure within an availability domain. Each availability domain has three fault domains with independent power and hardware. When you place Compute instances across multiple fault domains, applications can tolerate physical server failure, system maintenance, and many common networking and power failures inside the availability domain.

- Virtual cloud network (VCN) and subnets

A VCN is a software-defined network that you set up in an Oracle Cloud Infrastructure region. VCNs can be segmented into subnets, which can be specific to a region or to an availability domain. Both region-specific and availability domain-specific subnets can coexist in the same VCN. A subnet can be public or private.

- Security lists

For each subnet, you can create security rules that specify the source, destination, and type of traffic that must be allowed in and out of the subnet.

- Route tables

Virtual route tables contain rules to route traffic from subnets to destinations outside the VCN, typically through gateways.

- Internet gateway

The internet gateway allows traffic between the VCN and the public internet.

- Client nodes

Clients are Compute instances that access the BeeGFS file system.

- Management server

The management server (MGS) is a meeting point for the BeeGFS metadata, storage, and client services. An MGS stores configuration information for one or more file systems and provides this information to other hosts. This global resource can support multiple file systems.

- Metadata service

The metadata service (MDS) stores information about the data, such as directory information, file and directory ownership, and the location of user file contents on storage targets. The metadata service is a scale-out service, which means that you can use one or many metadata services in a BeeGFS file system.

The metadata content is stored on volumes called metadata targets (MDTs).

- Object Storage service

The Object Storage service (OSS) is the main service for storing user file contents, or data chunk files. Object Storage servers are also referred to as storage servers.

Similar to the metadata service, the Object Storage service is based on a scale-out design. An OSS instance has one or more object storage targets.

Each storage server provides access to a set of storage volumes, called Object Storage targets (OSTs). Each OST contains several binary objects that represent the data for files.

Recommendations

Your requirements might differ from the architecture described here. Use the following recommendations as a starting point.

- VCN

When you create the VCN, determine how many IP addresses your cloud resources in each subnet require. Using the Classless Inter-Domain Routing (CIDR) notation, specify a subnet mask and a network address range that's large enough for the required IP addresses. Use an address range that's within the standard private IP address space.

Select an address range that doesn’t overlap with your on-premises network, so that you can set up a connection between the VCN and your on-premises network, if necessary.

After you create a VCN, you can't change its address range.

When you design the subnets, consider your traffic flow and security requirements. Attach all the compute instances within the same tier or role to the same subnet, which can serve as a security boundary.
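The VCN sizing checks described above can be sketched with Python's standard `ipaddress` module. This is a minimal illustration, not part of the reference architecture: the CIDR blocks, subnet names, and host counts are hypothetical placeholders, and the number of addresses reserved in each subnet is an assumption that you should confirm against the Oracle Cloud Infrastructure networking documentation.

```python
import ipaddress

# Assumption: addresses in each subnet that are not available to instances.
RESERVED_PER_SUBNET = 3


def check_plan(vcn_cidr, on_prem_cidr, subnets, reserved=RESERVED_PER_SUBNET):
    """Return a list of problems found in a planned VCN layout.

    subnets maps a subnet name to a (cidr, hosts_needed) pair.
    """
    problems = []
    vcn = ipaddress.ip_network(vcn_cidr)
    on_prem = ipaddress.ip_network(on_prem_cidr)

    # The VCN range should sit within the private IP address space.
    if not vcn.is_private:
        problems.append(f"{vcn} is outside the private IP address space")

    # The VCN range must not overlap the on-premises network.
    if vcn.overlaps(on_prem):
        problems.append(f"{vcn} overlaps the on-premises range {on_prem}")

    for name, (cidr, hosts_needed) in subnets.items():
        subnet = ipaddress.ip_network(cidr)
        # Each subnet must fall inside the VCN range.
        if not subnet.subnet_of(vcn):
            problems.append(f"subnet {name} ({subnet}) is not inside {vcn}")
        # Each subnet must hold the required number of IP addresses.
        usable = subnet.num_addresses - reserved
        if usable < hosts_needed:
            problems.append(
                f"subnet {name} has {usable} usable addresses, "
                f"needs {hosts_needed}"
            )
    return problems


# Hypothetical plan: a public subnet for the bastion host and a
# private subnet for the file system and client nodes.
plan = {
    "public": ("10.0.0.0/24", 1),
    "private": ("10.0.1.0/24", 20),
}
issues = check_plan("10.0.0.0/16", "192.168.0.0/16", plan)
print(issues or "No problems found")
```

Running a check like this before you create the VCN catches an overlapping or undersized address range while it is still easy to change.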

- Security lists

Use security lists to define ingress and egress rules that apply to the entire subnet.

- Bastion Host

A bastion host is used to access any nodes in the private subnet. Use the VM.Standard.E2.1 shape.

- Management Server (MGS)

Because the MGS is not resource-intensive, you can deploy it on the same node as the MDS. If you deploy it separately, the VM.Standard2.2 shape is sufficient.

Use a 50-GB block volume with the balanced performance tier. You can resize the block volume if you need more space.

- Metadata Service (MDS) Server

Use a VM.Standard2.8 or higher shape. The right shape depends on how metadata-intensive your workload is (small-file workloads are typically metadata-intensive), how many metadata instances run on each node, and similar factors.

For highest performance, a bare metal shape such as BM.Standard2.52 is recommended because it has two physical NICs, each with a 25-Gbps network speed. Use one NIC for all traffic to block storage, and use the other NIC for incoming data to the MDS nodes from client nodes.

Use block volume storage. The size and number of block volumes depend on your deployment requirements. If you need more space, you can resize the block volumes.

- Object Storage Service (OSS) Server

Use a VM.Standard2.8 or higher shape. The right shape depends on the aggregate IO throughput (in GBps) that you require from the file system.

For highest performance, a bare metal shape such as BM.Standard2.52 is recommended because it has two physical NICs, each with 25-Gbps network speed. Use one NIC for all traffic to block storage, and use the other NIC for incoming data to the OSS nodes from client nodes.

- Client Nodes

Choose a VM shape based on your deployment plans. The shape determines the network bandwidth that's available for the instance to read from and write to the file system. For example, a VM.Standard2.16 shape has a maximum network bandwidth of 16.4 Gbps, which means that the maximum IO throughput is 2.05 GBps.

Both Intel and AMD VM and bare metal Compute shapes can be used for clients.
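The bandwidth arithmetic in the OSS and client recommendations above can be sketched as follows. The conversion divides gigabits per second by 8 to get gigabytes per second, which reproduces the 2.05 GBps figure for a 16.4-Gbps shape. The server-count estimate is only an upper-bound sketch: the 10 GBps target and the `efficiency` factor are hypothetical, and real throughput also depends on block volume performance and workload.

```python
import math


def gbps_to_gbytes_per_s(gbps):
    """Convert network bandwidth in gigabits/s to gigabytes/s."""
    return gbps / 8


def servers_needed(target_gbytes_per_s, nic_gbps, efficiency=1.0):
    """Estimate how many storage servers a target throughput requires.

    nic_gbps is the bandwidth of the client-facing NIC on each server.
    efficiency is a hypothetical derating factor (1.0 = line rate).
    """
    per_server = gbps_to_gbytes_per_s(nic_gbps) * efficiency
    return math.ceil(target_gbytes_per_s / per_server)


# Client example from the text: a VM.Standard2.16 shape at 16.4 Gbps.
print(gbps_to_gbytes_per_s(16.4))  # 2.05

# Hypothetical target of 10 GBps, served by bare metal nodes that
# dedicate one 25-Gbps NIC to client traffic.
print(servers_needed(10, 25))  # 4
```

Because one NIC on each bare metal server carries block storage traffic, only the other NIC's 25 Gbps counts toward client-facing throughput in this estimate.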

Considerations

- Performance

To get the best performance, choose a Compute shape whose network bandwidth matches the throughput that your workload requires.

- Availability

Consider using a high-availability option based on your deployment requirement.

- Cost

Bare metal shapes provide higher network bandwidth, but at a higher cost. Evaluate your requirements to choose the appropriate Compute shape.

- Monitoring and Alerts

Set up monitoring and alerts on CPU and memory usage for your MGS, MDS, and OSS nodes so that you can scale the VM shape up or down as needed.

Deploy

The Terraform code for this reference architecture is available on GitHub.

You can deploy either by using the Terraform script directly or through the Oracle Cloud Infrastructure Resource Manager service.

- Go to GitHub.

- Clone or download the repository to your local computer.

- To use the Terraform script, follow the instructions in the README document.

- To use Oracle Cloud Infrastructure Resource Manager, follow the instructions in the README inside the orm directory of the repository.