Deploy Elasticsearch and Kibana

Elasticsearch is an open source, enterprise-grade search engine. Accessible through an extensive API, Elasticsearch can power quick searches that support your data discovery applications. It can scale to thousands of servers and accommodate petabytes of data. Its large capacity results directly from its elaborate, distributed architecture.

The most common use cases for Elasticsearch are analytics, which rely on fast data extraction from structured and unstructured data sources, and full-text search, which is powered by an extensive query language and autocompletion.
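As a minimal sketch of the full-text search use case, the following Python snippet builds a `match` query in the Elasticsearch query language. The field name `title` and the search text are hypothetical; the resulting JSON body would be sent to the `_search` endpoint of an index in your own cluster.

```python
import json


def build_match_query(field: str, text: str, size: int = 10) -> dict:
    """Return a request body for a full-text `match` query.

    `field` and `text` are illustrative placeholders, not names
    taken from this reference architecture.
    """
    return {
        "size": size,  # maximum number of hits to return
        "query": {"match": {field: {"query": text}}},
    }


body = build_match_query("title", "reference architecture")
# Print the JSON that would be posted to <index>/_search.
print(json.dumps(body, indent=2))
```

The snippet only constructs the request body, so it runs without a cluster. Sending it requires an HTTP client and the address of your deployment.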

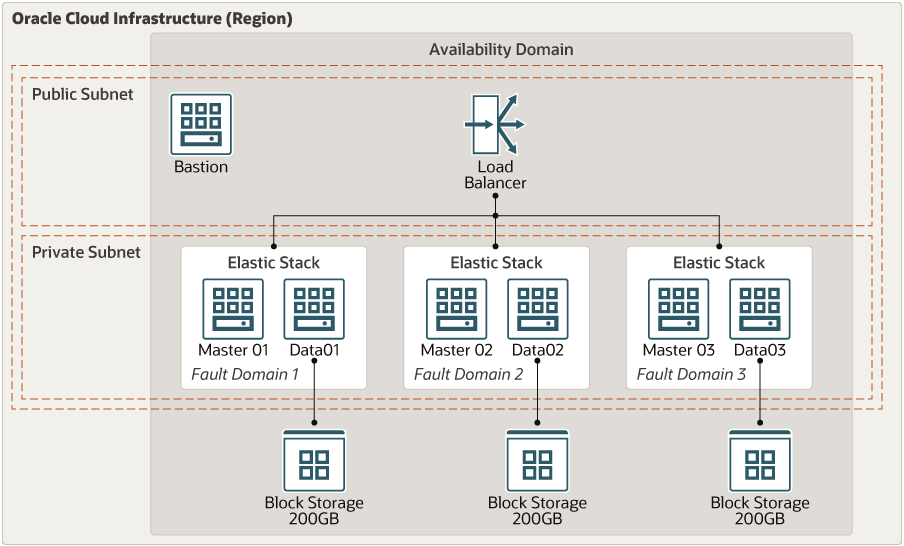

Architecture

This reference architecture shows a clustered deployment of Elasticsearch and Kibana.

This architecture has the following components:

- Availability domains

Availability domains are standalone, independent data centers within a region. The physical resources in each availability domain are isolated from the resources in the other availability domains, which provides fault tolerance. Availability domains don’t share infrastructure such as power or cooling, or the internal availability domain network. So, a failure at one availability domain is unlikely to affect the other availability domains in the region.

- Fault domains

A fault domain is a grouping of hardware and infrastructure within an availability domain. Each availability domain has three fault domains with independent power and hardware. When you distribute resources across multiple fault domains, your applications can tolerate physical server failure, system maintenance, and power failures inside a fault domain.

- Bastion host

The bastion host is a compute instance that serves as a secure, controlled entry point to the topology from outside the cloud. The bastion host is typically provisioned in a demilitarized zone (DMZ). It enables you to protect sensitive resources by placing them in private networks that can't be accessed directly from outside the cloud. The topology has a single, known entry point that you can monitor and audit regularly. So, you can avoid exposing the more sensitive components of the topology without compromising access to them.

- Load balancer

The load balancer distributes index operations across the data nodes and Kibana traffic across the master nodes. It uses two listeners: one for Kibana, backed by the master nodes, and one for index data access, backed by the data nodes. The load balancer is in a public subnet with a public IP address.

- Master nodes

Master nodes perform cluster management tasks, such as creating indexes and rebalancing shards. They don’t store data. Three master nodes (recommended for bigger clusters) are deployed across three fault domains to ensure high availability.

- Data nodes

Three data nodes are deployed across three fault domains for high availability. We recommend memory-optimized Compute instances because Elasticsearch depends on the amount of memory available. Each data node is configured with a 200 GiB block volume. In addition to VMs, Oracle Cloud Infrastructure offers powerful bare metal instances that are connected in clusters to a no-oversubscription 25 Gb network infrastructure. This configuration provides the low latency and high throughput that high-performance distributed streaming workloads need.

- Kibana

Like the master nodes, Kibana has relatively light resource requirements. Most computations are pushed to Elasticsearch. In this deployment, Kibana runs on the master nodes.
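The placement described above, with three nodes of each role spread across three fault domains, can be sketched as follows. This is an illustration only, not the logic used by the deployment code: the node names are hypothetical, and the quorum calculation assumes the general Elasticsearch rule that a majority of master-eligible nodes must be available to elect a master.

```python
# Fault domain names as exposed by Oracle Cloud Infrastructure.
FAULT_DOMAINS = ["FAULT-DOMAIN-1", "FAULT-DOMAIN-2", "FAULT-DOMAIN-3"]


def place_nodes(node_names):
    """Assign nodes to fault domains in round-robin order."""
    return {
        name: FAULT_DOMAINS[i % len(FAULT_DOMAINS)]
        for i, name in enumerate(node_names)
    }


def tolerated_master_failures(master_count):
    """Return how many master nodes can fail while a majority remains."""
    quorum = master_count // 2 + 1
    return master_count - quorum


# Hypothetical node names; each role is placed independently so that
# both masters and data nodes cover all three fault domains.
masters = place_nodes(["master-1", "master-2", "master-3"])
data = place_nodes(["data-1", "data-2", "data-3"])

for name, fd in {**masters, **data}.items():
    print(f"{name}: {fd}")
print("master failures tolerated:", tolerated_master_failures(len(masters)))
```

With three master nodes, the loss of one fault domain still leaves two masters, which is a majority.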

Recommendations

Your requirements might differ from the architecture described here. Use the following recommendations as a starting point.

- VCN

When you create a VCN, determine the number of CIDR blocks required and the size of each block based on the number of resources that you plan to attach to subnets in the VCN. Use CIDR blocks that are within the standard private IP address space.

After you create a VCN, you can change, add, and remove its CIDR blocks.

When you design the subnets, consider your traffic flow and security requirements. Attach all the resources within a specific tier or role to the same subnet, which can serve as a security boundary.

Use regional subnets.

- Network security groups (NSGs)

You can use NSGs to define a set of ingress and egress rules that apply to specific VNICs. We recommend using NSGs rather than security lists, because NSGs enable you to separate the VCN's subnet architecture from the security requirements of your application. In the reference architecture, all the network communication is controlled through NSGs.

- Block storage

This architecture uses 200 GB of block storage for each data node. We recommend that you configure a logical volume manager (LVM) to allow the volume to grow if you need more space. Each block volume is configured to use balanced performance and provides 35K IOPS and 480 MBps of throughput.

- Compute shapes

This architecture uses a virtual machine shape (VM.Standard2.24) for all the data nodes. These compute instances can push traffic at 25 Gbps and provide 320 GB RAM.
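To illustrate the VCN sizing recommendation above, the following sketch uses Python's standard `ipaddress` module to carve a private VCN CIDR block into one subnet per tier. The CIDR block `10.0.0.0/16`, the `/24` subnet size, and the tier names are assumptions chosen for the example. The sketch also assumes that three addresses in each subnet are reserved by the platform (the first two and the last); verify this against the current Oracle Cloud Infrastructure networking documentation.

```python
import ipaddress

# Assumption: the first two addresses and the last address of each
# subnet are reserved and can't be assigned to resources.
RESERVED_PER_SUBNET = 3


def plan_subnets(vcn_cidr, new_prefix, tier_names):
    """Split a VCN CIDR block into one subnet per tier.

    Returns {tier: (subnet_cidr, usable_address_count)}.
    """
    vcn = ipaddress.ip_network(vcn_cidr)
    if not vcn.is_private:
        raise ValueError(f"{vcn_cidr} is not in the private IP address space")

    plan = {}
    for tier, subnet in zip(tier_names, vcn.subnets(new_prefix=new_prefix)):
        plan[tier] = (str(subnet), subnet.num_addresses - RESERVED_PER_SUBNET)

    if len(plan) < len(tier_names):
        raise ValueError("VCN CIDR block is too small for the requested tiers")
    return plan


# Hypothetical tiers that mirror the components in this architecture.
plan = plan_subnets("10.0.0.0/16", 24, ["bastion", "load-balancer", "master", "data"])
for tier, (cidr, usable) in plan.items():
    print(f"{tier}: {cidr} ({usable} usable addresses)")
```

Keeping one subnet per tier matches the advice to attach all the resources in a tier to the same subnet so that it can serve as a security boundary.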

Considerations

- Performance

Elasticsearch depends on the amount of memory available. To get the best performance, use Compute shapes that provide a large amount of memory. Bare metal shapes provide up to 768 GB of RAM.

- Availability

Fault domains provide the best resilience within an availability domain. If you need higher availability, consider using multiple availability domains or multiple regions for your deployment.

- Scalability

Although you can scale master and data nodes in and out manually, we recommend using autoscaling to minimize the chance that services become starved for resources under high loads. An autoscaling strategy must consider both master and data nodes.

- Cost

The cost of your Elasticsearch deployment depends on your requirements for memory and availability. The Compute shapes that you choose have a major impact on the costs associated with this architecture.
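When you compare shapes for performance and cost, it can help to estimate how much of a node's memory the Elasticsearch JVM heap would use. The following sketch is not part of this reference architecture. It assumes the commonly cited Elasticsearch guidance that the heap should be no more than half of the node's RAM and should stay below the compressed object pointer threshold. The 26 GB ceiling used here is an assumed conservative value; check the Elasticsearch documentation for your version, because recent versions can size the heap automatically.

```python
# Assumption: a conservative ceiling that keeps the JVM below the
# compressed object pointer threshold on most systems.
HEAP_CEILING_GB = 26


def suggested_heap_gb(ram_gb):
    """Return a heap size of at most half the RAM, capped at the ceiling."""
    return min(ram_gb // 2, HEAP_CEILING_GB)


# 320 GB matches the VM shape described in this architecture;
# the smaller values are hypothetical comparison points.
for ram in (32, 64, 320):
    heap = suggested_heap_gb(ram)
    print(f"{ram} GB RAM: heap {heap} GB, {ram - heap} GB left for the OS file cache")
```

Memory that isn't assigned to the heap isn't wasted, because the operating system uses it to cache index files.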

Deploy

The code required to deploy this reference architecture is available on GitHub. You can pull the code into Oracle Cloud Infrastructure Resource Manager with a single click, create the stack, and deploy it. Alternatively, download the code from GitHub to your computer, customize the code, and deploy the architecture by using the Terraform CLI.

- Deploy by using Oracle Cloud Infrastructure Resource Manager:

- Click

If you aren't already signed in, enter the tenancy and user credentials.

- Review and accept the terms and conditions.

- Select the region where you want to deploy the stack.

- Follow the on-screen prompts and instructions to create the stack.

- After creating the stack, click Terraform Actions, and select Plan.

- Wait for the job to be completed, and review the plan.

To make any changes, return to the Stack Details page, click Edit Stack, and make the required changes. Then, run the Plan action again.

- If no further changes are necessary, return to the Stack Details page, click Terraform Actions, and select Apply.

- Deploy by using the Terraform CLI:

- Go to GitHub.

- Download or clone the code to your local computer.

- Follow the instructions in cluster/single-ad/README.md.
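The README remains the authoritative guide for the Terraform CLI path. As a rough sketch only, a typical Terraform workflow (initialize, plan, apply) could be scripted as shown below. The sketch assumes that the Terraform configuration is in the `cluster/single-ad` directory of the cloned repository and that any required variables are already set as the README describes. The plan file name `tfplan` is a hypothetical choice.

```python
import subprocess


def terraform_commands(plan_file="tfplan"):
    """Return the standard init, plan, and apply commands in order."""
    return [
        ["terraform", "init"],
        ["terraform", "plan", f"-out={plan_file}"],
        ["terraform", "apply", plan_file],
    ]


def deploy(workdir, dry_run=True):
    """Run the Terraform workflow in `workdir`, or print it if dry_run."""
    commands = terraform_commands()
    for cmd in commands:
        if dry_run:
            print(f"would run in {workdir}: {' '.join(cmd)}")
        else:
            # check=True stops the workflow at the first failing command.
            subprocess.run(cmd, cwd=workdir, check=True)
    return commands


# Dry run by default, so nothing is provisioned until you opt in.
deploy("cluster/single-ad")
```

Saving the plan to a file and applying that file means the apply step runs exactly what you reviewed. Call `deploy("cluster/single-ad", dry_run=False)` only after you've reviewed the plan output.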