Deploy a Scalable, Distributed File System Using GlusterFS

A scalable, distributed network file system is suitable for data-intensive tasks such as image processing and media streaming. When used in high-performance computing (HPC) environments, GlusterFS delivers high-performance access to large data sets, especially immutable files.

Architecture

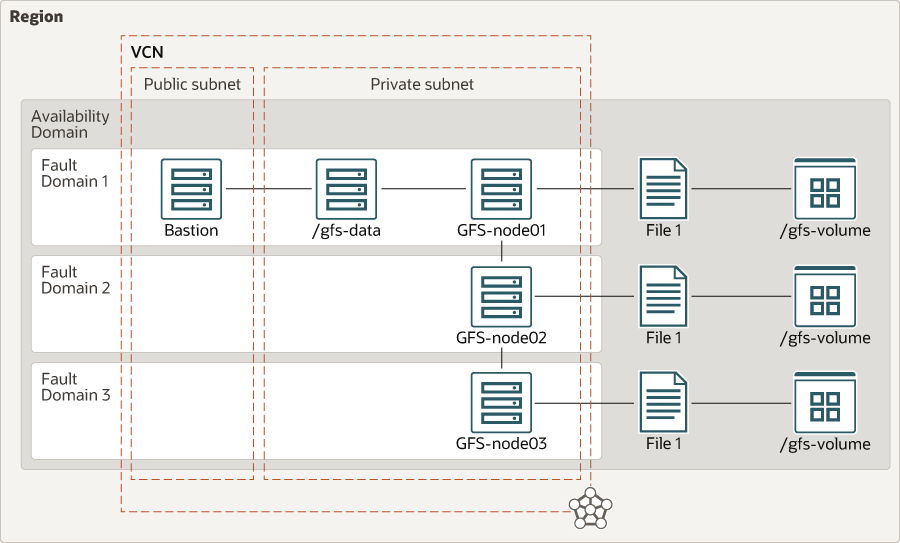

This reference architecture contains the infrastructure components required for a distributed network file system. It contains three bare metal instances, which is the minimum required to set up high availability for GlusterFS.

In a three-server configuration, at least two servers must be online to allow write operations to the cluster. Data is replicated across all the nodes, as shown in the diagram.
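The write rule above can be illustrated with a small sketch. It assumes the rule is a strict majority of servers, which matches the three-server case described here (two of three); the function name `has_write_quorum` is hypothetical and is not part of GlusterFS.

```python
def has_write_quorum(online: int, total: int = 3) -> bool:
    """Return True when a strict majority of the servers is online.

    Assumption: the "at least two of three" rule generalizes to a
    strict majority. The article states only the three-server case.
    """
    if not 0 <= online <= total:
        raise ValueError("online must be between 0 and total")
    return online > total // 2


if __name__ == "__main__":
    # Show which server counts would still allow writes in a 3-node cluster.
    for online in range(4):
        print(f"{online} of 3 online -> writes allowed: {has_write_quorum(online)}")
```

With three servers, losing one node leaves the cluster writable, while losing two does not.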


- Region

An Oracle Cloud Infrastructure region is a localized geographic area that contains one or more data centers, called availability domains. Regions are independent of other regions, and vast distances can separate them (across countries or even continents).

- Availability domain

Availability domains are standalone, independent data centers within a region. The physical resources in each availability domain are isolated from the resources in the other availability domains, which provides fault tolerance. Availability domains don’t share infrastructure such as power or cooling, or the internal availability domain network. So, a failure at one availability domain is unlikely to affect the other availability domains in the region.

- Fault domain

A fault domain is a grouping of hardware and infrastructure within an availability domain. Each availability domain has three fault domains with independent power and hardware. When you distribute resources across multiple fault domains, your applications can tolerate physical server failure, system maintenance, and power failures inside a fault domain.

- Virtual cloud network and subnets

A VCN is a customizable, software-defined network that you set up in an Oracle Cloud Infrastructure region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

This architecture uses two subnets: a public subnet to create the DMZ and host the bastion server, and a private subnet to host the GlusterFS nodes.

- Network security group (NSG)

NSGs act as virtual firewalls for your cloud resources. With the zero-trust security model of Oracle Cloud Infrastructure, all traffic is denied by default, and you explicitly allow the network traffic that is permitted inside a VCN. An NSG consists of a set of ingress and egress security rules that apply to only a specified set of VNICs in a single VCN.

- Security list

For each subnet, you can create security rules that specify the source, destination, and type of traffic that must be allowed in and out of the subnet.

- GFS-nodes

These are the GlusterFS headends, with 1 TB of block storage attached to each instance.

- /gfs-data

In the reference architecture, the client mounts the GlusterFS volume at the mount point /gfs-data, through which your application accesses the file system. Multiple servers can access the headend nodes in parallel.

- Bastion host

The bastion host is a compute instance that serves as a secure, controlled entry point to the topology from outside the cloud. The bastion host is typically provisioned in a demilitarized zone (DMZ). It enables you to protect sensitive resources by placing them in private networks that can't be accessed directly from outside the cloud. The topology has a single, known entry point that you can monitor and audit regularly. So, you can avoid exposing the more sensitive components of the topology without compromising access to them.
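As a rough illustration of the /gfs-data mount point described in the component list, the following sketch builds the mount command that a client with the GlusterFS native client installed would typically run. The host name `gfs-node-1` and the volume name `gfs-volume` are hypothetical placeholders; the article does not name them. The sketch only prints the command and does not execute it.

```python
import shlex


def glusterfs_mount_command(server, volume, mount_point="/gfs-data"):
    """Build the argument list for mounting a GlusterFS volume.

    The source of a GlusterFS mount has the form <server>:/<volume>.
    """
    source = f"{server}:/{volume}"
    return ["mount", "-t", "glusterfs", source, mount_point]


if __name__ == "__main__":
    # Hypothetical node and volume names, for illustration only.
    cmd = glusterfs_mount_command("gfs-node-1", "gfs-volume")
    print(shlex.join(cmd))
```

Returning an argument list rather than a single string keeps the command safe to pass to `subprocess.run` without shell quoting issues.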

Recommendations

Your requirements might differ from the architecture described here. Use the following recommendations as a starting point.

- GlusterFS architecture

This architecture uses replicated GlusterFS volumes; the data is replicated across all the nodes. This configuration provides the highest availability of data but also uses the largest amount of space. As shown in the architecture diagram, when File1 is created, it is replicated across the nodes.

GlusterFS supports the following architectures. Select an architecture that suits your requirements:

- Distributed volumes

This architecture is the default GlusterFS configuration and is used to obtain maximum volume size and scalability. There is no data redundancy. Therefore, if a brick in the volume fails, it will lead to complete loss of data.

- Replicated volumes

This architecture is most commonly used where high availability is critical. Data loss problems arising from brick failures are avoided by replicating data across two or more bricks. This reference architecture uses the replicated volumes configuration.

- Distributed replicated volumes

This architecture is a combination of distributed and replicated volumes and is used for obtaining larger volume sizes than a replicated volume and higher availability than a distributed volume. In this configuration, data is replicated onto a subset of the total number of bricks. The number of bricks must be a multiple of the replica count. For example, four bricks of 1 TB each will give you a distributed space of 2 TB with a two-fold replication.

- Striped volumes

This architecture is used for large files, which are divided into smaller chunks, with each chunk stored in a different brick. The load is distributed across the bricks and a file can be fetched faster, but no data redundancy is available.

- Distributed striped volumes

This architecture is used for large files that are distributed across a larger number of bricks. The trade-off with this configuration is that if you want to increase the volume size, then you must add bricks in multiples of the stripe count.
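The capacity arithmetic for distributed, replicated, and distributed replicated volumes can be sketched as follows. This is a simplified model that ignores file system overhead and assumes all bricks are the same size; the function name `usable_capacity_tb` is hypothetical.

```python
def usable_capacity_tb(brick_count, brick_size_tb, replica_count=1):
    """Estimate the usable capacity of a volume built from equal-sized bricks.

    replica_count=1 models a purely distributed volume (no redundancy).
    Assumption: overhead from the underlying file system is ignored.
    """
    if brick_count < 1 or replica_count < 1:
        raise ValueError("brick_count and replica_count must be at least 1")
    if brick_count % replica_count != 0:
        raise ValueError("brick_count must be a multiple of replica_count")
    # Each group of replica_count bricks stores one copy set of the data.
    return (brick_count // replica_count) * brick_size_tb


if __name__ == "__main__":
    print(usable_capacity_tb(4, 1, replica_count=2))  # distributed replicated example from the text
    print(usable_capacity_tb(3, 1, replica_count=3))  # three 1 TB bricks, fully replicated
    print(usable_capacity_tb(3, 1))                   # three 1 TB bricks, distributed only
```

Under these assumptions, the fully replicated three-node layout in this architecture yields the capacity of a single brick, which is the space cost of the highest availability option.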


- Compute shapes

This architecture uses a bare metal shape (BM.Standard2.52) for all the GlusterFS nodes. These bare metal compute instances have two physical NICs that can push traffic at 25 Gbps each. The second physical NIC is dedicated to GlusterFS traffic.

- Block storage

This architecture uses 1 TB of block storage. We recommend that you configure a logical volume manager (LVM) to allow the volume to grow if you need more space. Each block volume is configured to use balanced performance and provides 35K IOPS and 480 MB/s of throughput.

- Virtual cloud network (VCN)

When you create a VCN, determine the number of CIDR blocks required and the size of each block based on the number of resources that you plan to attach to subnets in the VCN. Use CIDR blocks that are within the standard private IP address space.

Select CIDR blocks that don't overlap with any other network (in Oracle Cloud Infrastructure, your on-premises data center, or another cloud provider) to which you intend to set up private connections.

After you create a VCN, you can change, add, and remove its CIDR blocks.

When you design the subnets, consider your traffic flow and security requirements. Attach all the resources within a specific tier or role to the same subnet, which can serve as a security boundary.
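The CIDR guidance above can be checked before you create the VCN. The following sketch uses Python's standard `ipaddress` module to verify that candidate blocks fall within private address space and don't overlap with other networks. It interprets "standard private IP address space" as the RFC 1918 ranges, which is an assumption, and all CIDR values shown are hypothetical examples.

```python
import ipaddress
from itertools import combinations

# Assumption: "standard private IP address space" means the RFC 1918 ranges.
RFC1918_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(cidr):
    """Return True if the CIDR block lies entirely inside an RFC 1918 range."""
    network = ipaddress.ip_network(cidr)
    return any(network.subnet_of(private) for private in RFC1918_RANGES)


def find_overlaps(cidrs):
    """Return every pair of CIDR blocks that overlap."""
    networks = [ipaddress.ip_network(c) for c in cidrs]
    return [
        (str(a), str(b))
        for a, b in combinations(networks, 2)
        if a.overlaps(b)
    ]


if __name__ == "__main__":
    # Hypothetical blocks: a planned VCN, an on-premises network, another cloud network.
    planned = ["10.0.0.0/16", "172.16.0.0/16", "10.0.128.0/17"]
    print("All private:", all(is_rfc1918(c) for c in planned))
    print("Overlapping pairs:", find_overlaps(planned))
```

Any pair reported by `find_overlaps` would need to be resolved before setting up private connections between those networks.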

- Network security groups (NSGs)

You can use NSGs to define a set of ingress and egress rules that apply to specific VNICs. We recommend using NSGs rather than security lists, because NSGs enable you to separate the VCN's subnet architecture from the security requirements of your application. In the reference architecture, all the network communication is controlled through NSGs.

- Security lists

Use security lists to define ingress and egress rules that apply to the entire subnet.

Considerations

- Performance

To get the best performance, use dedicated NICs for communication from the application to your users and to the GlusterFS headends. Use the primary NIC for communication between your application and the users. Use the secondary NIC for communication with the GlusterFS headends. You can also change the volume performance for block storage to increase or decrease the IOPS and throughput of your disk.

- Availability

Fault domains provide the best resiliency within an availability domain. If you need higher availability, consider using multiple availability domains or multiple regions. For mission-critical workloads, consider using distributed striped GlusterFS volumes.

- Cost

The cost of your GlusterFS deployment depends on your requirements for disk performance and availability:

- You can choose from the following performance options: high performance, balanced performance, and low cost.

- For higher availability, you need a larger number of GlusterFS nodes and volumes.

Deploy

The code required to deploy this reference architecture is available in GitHub. You can pull the code into Oracle Cloud Infrastructure Resource Manager with a single click, create the stack, and deploy it. Alternatively, download the code from GitHub to your computer, customize the code, and deploy the architecture by using the Terraform CLI.

- Deploy by using Oracle Cloud Infrastructure Resource Manager:

- Click

If you aren't already signed in, enter the tenancy and user credentials.

- Review and accept the terms and conditions.

- Select the region where you want to deploy the stack.

- Follow the on-screen prompts and instructions to create the stack.

- After creating the stack, click Terraform Actions, and select Plan.

- Wait for the job to be completed, and review the plan.

To make any changes, return to the Stack Details page, click Edit Stack, and make the required changes. Then, run the Plan action again.

- If no further changes are necessary, return to the Stack Details page, click Terraform Actions, and select Apply.


- Deploy by using the Terraform CLI:

- Go to GitHub.

- Clone or download the repository to your local computer.

- Follow the instructions in the README document.

Change Log

This log lists significant changes:

| November 5, 2021 | |
| June 17, 2021 | Updated the Deploy section to include automatic deployment. |