About Scaling OCI Queue Consumers in Kubernetes

This playbook shows how to use the features of Oracle Cloud Infrastructure (OCI) Queue and provides a recipe suited to most use cases that need to scale dynamically with client demand, without incurring back pressure or performance bottlenecks.

You can deploy a microservice that processes messages from an OCI Queue to Oracle Kubernetes Engine (OKE), and then use the queue depth to horizontally scale the microservice instances running on an OKE cluster. The example includes a message generator that you can run from anywhere; for example, from your local machine or as part of another container or VM.

This playbook also provides code and detailed setup instructions in the Oracle-DevRel GitHub repository oci-arch-queue-oke-demo, which you can access from the Explore More section of this playbook. The repository contains all the Java code, scripts, and configuration files, along with detailed steps for building, deploying, and running the building blocks. This document provides an architectural view and examines key elements of the implementation, including the parts of the code you'll need to modify to adapt the solution to your own needs.

Learn About OCI Queue APIs

While Queue can cushion applications from demand fluctuations, when users are involved you don’t want much time to pass between message creation and message processing. Therefore, the back end needs to scale dynamically based on demand. That demand is determined by the number of messages waiting in the queue: more messages means more demand and therefore more computing effort. Conversely, an empty queue represents no demand and requires minimal back-end resources. Dynamic scaling addresses these constant variations.
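To make the link between queue depth and processing delay concrete, the following Java sketch estimates how long a backlog takes to drain and how many consumer instances would clear it within a target time. It assumes every instance processes messages at the same steady rate and ignores start-up time; the class and method names are hypothetical and are not taken from the oci-arch-queue-oke-demo code.

```java
/**
 * Hypothetical back-of-the-envelope model relating queue depth to processing delay.
 * Assumes every consumer instance processes messages at the same constant rate.
 */
final class DrainTimeModel {

    private DrainTimeModel() {}

    /** Seconds needed for the given number of instances to clear the current backlog. */
    static double secondsToDrain(long queueDepth, int instances, double messagesPerSecondPerInstance) {
        if (queueDepth < 0 || messagesPerSecondPerInstance <= 0) {
            throw new IllegalArgumentException("depth must be >= 0 and rate must be > 0");
        }
        if (queueDepth == 0) {
            return 0.0; // an empty queue means no waiting work
        }
        if (instances <= 0) {
            return Double.POSITIVE_INFINITY; // nothing is consuming, so the backlog never drains
        }
        return queueDepth / (instances * messagesPerSecondPerInstance);
    }

    /** Smallest number of instances that clears the backlog within targetSeconds (0 for an empty queue). */
    static int instancesForTarget(long queueDepth, double messagesPerSecondPerInstance, double targetSeconds) {
        if (queueDepth < 0 || messagesPerSecondPerInstance <= 0 || targetSeconds <= 0) {
            throw new IllegalArgumentException("depth must be >= 0; rate and target must be > 0");
        }
        double capacityPerInstance = messagesPerSecondPerInstance * targetSeconds;
        return (int) Math.ceil(queueDepth / capacityPerInstance);
    }
}
```

For example, if each consumer handles 5 messages per second, a backlog of 600 messages takes 120 seconds to clear with one instance but 30 seconds with four, which is why more messages in the queue translates into more instances.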

Architecture

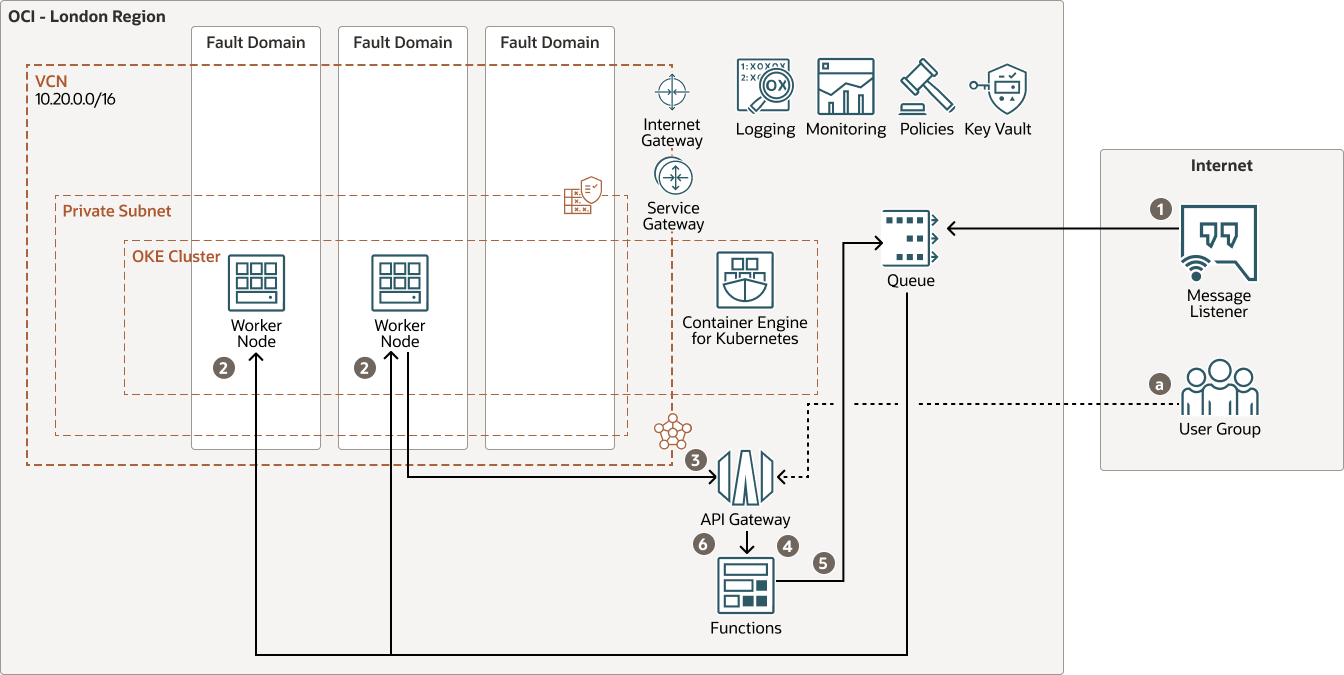

This architecture requires a queue to hold the messages. Because OCI Queue is a fully managed service, it resides outside the fault domains visible to us.

Within OCI, you can run Kubernetes with client-managed nodes or with Oracle-managed nodes. This scenario uses the older approach of client-managed nodes, so you need Compute nodes attached to the cluster. These nodes are best spread across the fault domains within an availability domain.

Note:

In this playbook, demand generation comes from your local machine and, therefore, from outside the OCI environment.

The last two key elements control scaling. First, an OCI Function is invoked periodically to retrieve the queue depth. Second, an API Gateway sits in front of the function; it abstracts how the queue depth is obtained and makes the function easy to call.
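The following Java sketch illustrates the kind of contract the function and API Gateway could expose: a single number in a small JSON body. It is an illustration under stated assumptions, not the repository's function. The class name, the `handleRequest` method, the `queueDepth` field, and the `LongSupplier` seam are all hypothetical; in a real deployment the supplier would wrap an OCI SDK call that reads the queue's statistics (for example, its count of visible messages).

```java
import java.util.function.LongSupplier;

/**
 * Hypothetical shape of the queue-depth function behind the API Gateway.
 * The LongSupplier stands in for whatever call reads the queue statistics,
 * so callers only ever see a number, never how it was obtained.
 */
final class QueueDepthFunction {

    private final LongSupplier visibleMessageCount;

    QueueDepthFunction(LongSupplier visibleMessageCount) {
        this.visibleMessageCount = visibleMessageCount;
    }

    /** Returns a small JSON body such as {"queueDepth":42}; the field name is an assumption. */
    String handleRequest() {
        long depth = visibleMessageCount.getAsLong();
        if (depth < 0) {
            throw new IllegalStateException("queue depth cannot be negative: " + depth);
        }
        return "{\"queueDepth\":" + depth + "}";
    }
}
```

Because the caller depends only on this JSON contract, you could change how the depth is measured without touching the Kubernetes side, which is the abstraction the API Gateway is there to provide.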

Finally, we need ancillary services, such as Vault to store the credentials for accessing the queue and Monitoring to observe general health.


- The locally hosted producer puts messages into the OCI Queue.

- The OCI consumer instances retrieve messages from the queue. The code deliberately limits the consumption rate with a delay, which ensures that the producer generates messages faster than a single consumer can remove them from the queue. As a result, the scaling mechanisms are triggered.

- Periodically, a Kubernetes scheduled job, working with KEDA, invokes the published API to get the number of messages in the queue.

- The API Gateway routes the request to an instance of the OCI Function.

- The OCI Function interrogates the OCI Queue.

- The response is returned, and KEDA uses it to increase or decrease the number of microservice instances.
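As a rough illustration of the throttling described in the consumer step, the following Java sketch caps one consumer's throughput with a fixed pause after each message. It uses a local `java.util.Queue` as a stand-in for OCI Queue, and the class, method names, and delay values are hypothetical rather than taken from the demo code.

```java
import java.util.Queue;
import java.util.function.Consumer;

/**
 * Hypothetical sketch of a consumer whose throughput is capped by a fixed pause
 * after each message, mirroring the deliberate delay in the demo consumer.
 */
final class ThrottledConsumer {

    private ThrottledConsumer() {}

    /** Upper bound on messages per second for one consumer, ignoring processing time itself. */
    static double maxRatePerSecond(long delayMillis) {
        if (delayMillis <= 0) {
            throw new IllegalArgumentException("delayMillis must be positive");
        }
        return 1000.0 / delayMillis;
    }

    /**
     * Takes up to maxMessages from the queue, hands each to the handler, and then
     * sleeps for delayMillis. Returns the number of messages processed.
     */
    static int drain(Queue<String> queue, int maxMessages, long delayMillis, Consumer<String> handler) {
        int processed = 0;
        while (processed < maxMessages) {
            String message = queue.poll(); // returns null when the queue is empty
            if (message == null) {
                break;
            }
            handler.accept(message);
            processed++;
            if (delayMillis > 0) {
                try {
                    Thread.sleep(delayMillis); // the artificial throttle
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // preserve the interrupt and stop early
                    break;
                }
            }
        }
        return processed;
    }
}
```

With a 200 ms pause, one consumer handles at most 5 messages per second. If the producer sends 10 messages per second, the backlog grows by roughly 5 messages every second until more consumer instances start.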

- Region

An OCI region is a localized geographic area that contains one or more data centers, called availability domains. Regions are independent of other regions, and vast distances can separate them (across countries or even continents).

- Availability domains

Availability domains are standalone, independent data centers within a region. The physical resources in each availability domain are isolated from the resources in the other availability domains, which provides fault tolerance. Availability domains don’t share infrastructure services such as power, cooling, or the internal availability domain network. So, a failure at one availability domain is unlikely to affect the other availability domains in the region.

- Fault domains

A fault domain is a grouping of hardware and infrastructure within an availability domain. Each availability domain has three fault domains with independent power and hardware. When you distribute resources across multiple fault domains, your applications can tolerate physical server failure, system maintenance, and power failures inside a fault domain.

- Compartment

Compartments are cross-region logical partitions within an OCI tenancy. Use compartments to organize your resources in Oracle Cloud, control access to the resources, and set usage quotas. To control access to the resources in each compartment, you define policies that specify who can access the resources and what actions they can perform.

- Virtual cloud network (VCN) and subnets

A VCN is a customizable, software-defined network that you set up in an OCI region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

- Compute instances

OCI Compute lets you provision and manage compute hosts. You can launch compute instances with shapes that meet your resource requirements (CPU, memory, network bandwidth, and storage). After creating a compute instance, you can access it securely, restart it, attach and detach volumes, and terminate it when you don't need it.

- Functions

Oracle Functions is a fully managed, multitenant, highly scalable, on-demand, Functions-as-a-Service (FaaS) platform. It is powered by the Fn Project open-source engine. Functions enable you to deploy your code, and either call it directly or trigger it in response to events. Oracle Functions uses Docker containers hosted in OCI Registry.

- Container Registry

OCI Registry is an Oracle-managed registry that enables you to simplify your development-to-production workflow. Registry makes it easy for you to store, share, and manage development artifacts, like Docker images. The highly available and scalable architecture of OCI ensures that you can deploy and manage your applications reliably.

- Container Engine for Kubernetes

OCI Container Engine for Kubernetes is a fully managed, scalable, and highly available service that you can use to deploy your containerized applications to the cloud. You specify the compute resources that your applications require, and Container Engine for Kubernetes provisions them on OCI in an existing tenancy. Container Engine for Kubernetes uses Kubernetes to automate the deployment, scaling, and management of containerized applications across clusters of hosts.

- API Gateway

Oracle API Gateway enables you to publish APIs with endpoints that manage the visibility of functionality such as Functions, microservices, and other application interfaces. The endpoints provide fine-grained security controls for back-end solutions and abstract how an API may be implemented.

- Queue

Queue is a serverless, highly scalable, asynchronous communication mechanism. It is ideal for decoupling services and delivering messages between them.

Considerations

When deploying a microservice into Kubernetes to process messages from OCI Queue, consider the following:

- Considerations for Policies for Queues

Policies for controlling and configuring OCI Queues are separate from policies for creating and consuming messages. This gives you fine-grained control over the operations available through the APIs, so you need to consider your application’s requirements and security needs. For production, tight controls are recommended; this implementation uses relaxed configuration settings, which minimise the chance of a configuration error preventing the demo from working.

- Considerations for Kubernetes scaling limits

You need to consider the rate and limits of back-end scaling. You need to address factors such as how deep the queue can get before additional instances of the microservice are started, and the maximum number of additional microservice instances that should run. While it would be tempting to leave this unbounded, in real-world, practical terms, if someone (deliberately or accidentally) starts flooding your queue with erroneous messages, you don’t want to absorb the cost of runaway compute. You also need to consider how quickly you spin down the additional instances of your microservice. Spin down too quickly and you’ll find your environment constantly starting and stopping services. However efficient the microservice is, starting and stopping service instances always carries an overhead, and that overhead ultimately costs money.

- Considerations for scaling control

The last aspect of controlling scaling is whether you want to apply scaling and how to control it. This scenario uses a CNCF project called KEDA (Kubernetes Event Driven Autoscaler). In addition to how the back-end scaling is executed, you need to consider how the API is invoked. In this case, you can configure a Kubernetes scheduled job to invoke the API (because the job runs on a worker node, the diagram shows this flow).
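The considerations above (a ceiling on instances, a threshold per instance, and a slower spin-down) can be sketched as a small policy object. This Java code is a simplified, hypothetical model for reasoning about those trade-offs: it scales up immediately, never exceeds `maxReplicas`, and scales down only after the lower target has persisted for a cooldown. In KEDA these concerns are configured declaratively (for example, through the ScaledObject fields minReplicaCount, maxReplicaCount, pollingInterval, and cooldownPeriod), and the Kubernetes HPA applies its own stabilization rules, so this sketch does not reproduce their exact behavior.

```java
import java.time.Duration;
import java.time.Instant;

/**
 * Hypothetical, simplified scaling policy: scale up immediately, never exceed
 * maxReplicas, and only scale down once the lower target has persisted for the cooldown.
 */
final class BoundedScalingPolicy {

    private final int minReplicas;
    private final int maxReplicas;
    private final long messagesPerReplica;
    private final Duration scaleDownCooldown;
    private int currentReplicas;
    private Instant lowerTargetSince; // null unless a scale-down is pending

    BoundedScalingPolicy(int minReplicas, int maxReplicas, long messagesPerReplica, Duration scaleDownCooldown) {
        if (minReplicas < 0 || maxReplicas < minReplicas || messagesPerReplica <= 0) {
            throw new IllegalArgumentException("invalid scaling bounds");
        }
        this.minReplicas = minReplicas;
        this.maxReplicas = maxReplicas;
        this.messagesPerReplica = messagesPerReplica;
        this.scaleDownCooldown = scaleDownCooldown;
        this.currentReplicas = minReplicas;
    }

    /** Called on each poll of the queue depth; returns the replica count to run now. */
    int evaluate(long queueDepth, Instant now) {
        // Ceiling division: one replica per messagesPerReplica waiting messages.
        long raw = (Math.max(0, queueDepth) + messagesPerReplica - 1) / messagesPerReplica;
        int target = (int) Math.max(minReplicas, Math.min(maxReplicas, raw));

        if (target >= currentReplicas) {
            currentReplicas = target; // scale up (or hold) without delay
            lowerTargetSince = null;
        } else {
            if (lowerTargetSince == null) {
                lowerTargetSince = now; // start the cooldown clock
            }
            if (Duration.between(lowerTargetSince, now).compareTo(scaleDownCooldown) >= 0) {
                currentReplicas = target; // demand stayed low for the whole cooldown
                lowerTargetSince = null;
            }
        }
        return currentReplicas;
    }
}
```

For example, with `new BoundedScalingPolicy(1, 5, 10, Duration.ofMinutes(5))`, a depth of 1,000 messages is capped at 5 replicas, and an empty queue only brings the count back to 1 after it has stayed empty for five minutes, which avoids constantly starting and stopping instances.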

Prerequisites

Before you begin, you need to prepare your environment by addressing the prerequisites described here.

- For the message generation, you need Java 8 or later, along with Apache Maven.

- Interaction with OCI Queue requires users and associated credentials to allow access. Set these policies:

- Set this policy to identify the compartment you will use (compartment-name):

allow any-user to manage queues in compartment compartment-name

- To limit users to just reading from or writing to the OCI Queue, create OCI groups called MessageConsumers and MessageProducers and allocate the appropriate users to these groups. Use the following policies:

allow group MessageConsumers to use queues in compartment compartment-name
allow group MessageProducers to use queues in compartment compartment-name

- Provide the producer client with the standard OCI configuration attributes so that it can authenticate with OCI and use the API.

- You need to set policies to support the dynamic behaviour, because new resources will come and go and will need access to Queue. Set these policies by creating a dynamic group containing the resources you'll need to control elastically. Because this control happens within OCI, use an instance principal. Call this group queue_dg, and use the following matching rule and policy statement:

ALL {instance.compartment.id='instance Compartment id (OCID)'}

allow dynamic-group queue_dg to use queues in compartment queue_parent_compartment

Where instance Compartment id (OCID) is the OCID of the compartment containing the worker nodes.

- Set this policy to identify the compartment you will use

(