Enable Financial Services Industry Analysis with DataSynapse GridServer on Oracle Cloud Infrastructure

Banks and financial services organizations are quickly turning to the cloud to deploy their mission-critical financial analyses, often requiring high-performance computing (HPC) resources not internally available. Increasing competition and regulations are prompting more companies to look to the cloud for their growing workloads of financial analyses.

Oracle Cloud Infrastructure (OCI) provides a comprehensive second-generation cloud infrastructure that enables banks and financial services organizations to quickly deploy financial analysis workloads in the cloud. Oracle has deep and extensive experience managing and analyzing customer data, which allows it to provide such resources within its cloud infrastructure.

DataSynapse GridServer, a TIBCO software product, is a highly scalable software infrastructure that allows application services to operate in a virtualized fashion, without tying them to any specific hardware resource. GridServer dynamically assigns service requests to available hardware resources and can readily process multiple requests at the same time.

Architecture

GridServer was tested on a variety of HPC clusters on OCI, composed of various compute instance shapes including bare metal (BM) and virtual machines (VM). These clusters were instantiated using an HPC stack in OCI Resource Manager, which uses a Terraform template containing the parts that turn a collection of instances into a functional HPC cluster. The stack can add Network File System (NFS) volumes, extra block volumes, or another file system (such as the OCI File Storage service). After the test HPC clusters were built, GridServer was installed by following the software installation instructions.

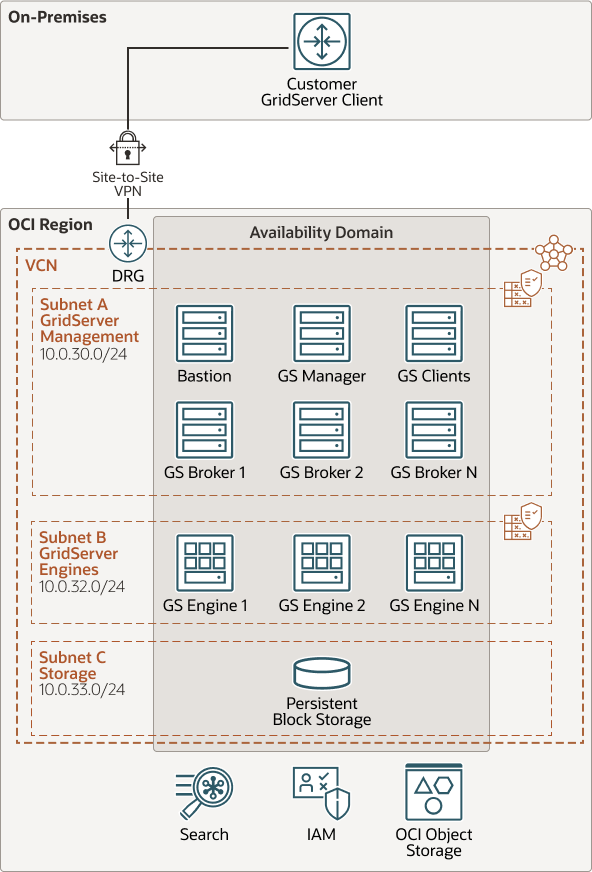

The clusters tested are based on the following architecture.


The architecture has the following components:

- Region

An Oracle Cloud Infrastructure region is a localized geographic area that contains one or more data centers, called availability domains. Regions are independent of other regions, and vast distances can separate them (across countries or even continents).

- Availability domains

Availability domains are standalone, independent data centers within a region. The physical resources in each availability domain are isolated from the resources in the other availability domains, which provides fault tolerance. Availability domains don’t share infrastructure such as power or cooling, or the internal availability domain network. So, a failure at one availability domain is unlikely to affect the other availability domains in the region.

- Virtual cloud network (VCN) and subnets

A VCN is a customizable, software-defined network that you set up in an Oracle Cloud Infrastructure region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

- Dynamic routing gateway (DRG)

The DRG is a virtual router that provides a path for private network traffic between VCNs in the same region, and between a VCN and a network outside the region, such as a VCN in another Oracle Cloud Infrastructure region, an on-premises network, or a network in another cloud provider.

- Site-to-Site VPN

Site-to-Site VPN provides IPSec VPN connectivity between your on-premises network and VCNs in Oracle Cloud Infrastructure. The IPSec protocol suite encrypts IP traffic before the packets are transferred from the source to the destination and decrypts the traffic when it arrives.

- On-premises network

This network is the local network used by your organization. It is one of the spokes of the topology.

- Bastion host

The bastion host is a compute instance that serves as a secure, controlled entry point to the topology from outside the cloud. The bastion host is typically provisioned in a demilitarized zone (DMZ). It enables you to protect sensitive resources by placing them in private networks that can't be accessed directly from outside the cloud. The topology has a single, known entry point that you can monitor and audit regularly, so you can avoid exposing the more sensitive components of the topology without compromising access to them.

- Route table

Virtual route tables contain rules to route traffic from subnets to destinations outside a VCN, typically through gateways.

- Security list

For each subnet, you can create security rules that specify the source, destination, and type of traffic that must be allowed in and out of the subnet.

- Block volume

With block storage volumes, you can create, attach, connect, and move storage volumes, and change volume performance to meet your storage, performance, and application requirements. After you attach and connect a volume to an instance, you can use the volume like a regular hard drive. You can also disconnect a volume and attach it to another instance without losing data.

- Identity and Access Management (IAM)

Oracle Cloud Infrastructure Identity and Access Management (IAM) is the access control plane for Oracle Cloud Infrastructure (OCI) and Oracle Cloud Applications. The IAM API and the user interface enable you to manage identity domains and the resources within the identity domain. Each OCI IAM identity domain represents a standalone identity and access management solution or a different user population.

- Object storage

Object storage provides quick access to large amounts of structured and unstructured data of any content type, including database backups, analytic data, and rich content such as images and videos. You can safely and securely store and then retrieve data directly from the internet or from within the cloud platform. You can seamlessly scale storage without experiencing any degradation in performance or service reliability. Use standard storage for "hot" storage that you need to access quickly, immediately, and frequently. Use archive storage for "cold" storage that you retain for long periods of time and seldom or rarely access.
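The VCN component above requires that subnets stay inside the VCN address range and do not overlap each other. A minimal sketch of checking a planned layout before applying it, using Python's standard `ipaddress` module, follows. The CIDR blocks and the subnet names (`public-bastion`, `private-engines`) are illustrative assumptions, not values from the tested clusters.

```python
import ipaddress
from itertools import combinations


def validate_subnets(vcn_cidr, subnet_cidrs):
    """Return a list of problems; an empty list means the plan is consistent."""
    vcn = ipaddress.ip_network(vcn_cidr)
    subnets = {name: ipaddress.ip_network(cidr) for name, cidr in subnet_cidrs.items()}
    problems = []

    # Every subnet must fall inside the VCN CIDR block.
    for name, net in subnets.items():
        if not net.subnet_of(vcn):
            problems.append(f"{name} ({net}) is outside the VCN range {vcn}")

    # No two subnets may share addresses.
    for (name_a, net_a), (name_b, net_b) in combinations(subnets.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{name_a} ({net_a}) overlaps {name_b} ({net_b})")

    return problems


# Hypothetical plan: one public subnet for the bastion and
# Director/Broker/Client instance, one private subnet for engine nodes.
plan = {
    "public-bastion": "10.0.0.0/24",
    "private-engines": "10.0.1.0/24",
}
print(validate_subnets("10.0.0.0/16", plan))
```

A check like this only validates the address plan. Route tables and security lists still determine which traffic actually flows between the subnets.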

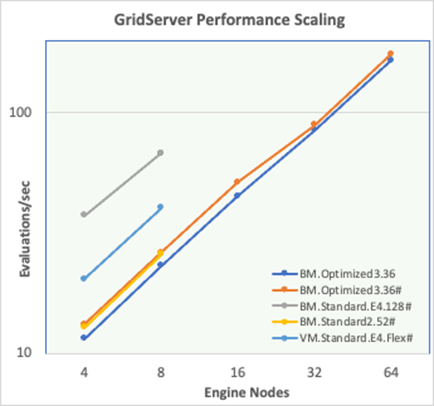

After the GridServer clusters were created, benchmarks were carried out using a case from the Strata-Examples of the OpenGamma library. Initial testing indicated that the best combination of performance and price performance was obtained by placing the GridServer Director, Broker(s), and Client(s) on a single public-facing instance, with the engines on separate compute instances. Both BM and VM shapes were tested for the combined GridServer Director, Broker, and Client instance, with no observed difference in performance. The table below describes the clusters tested.

| Engine Shape | Engines Per Node | Engine Nodes | Total Engines |
|---|---|---|---|
| BM.Optimized3.36 | 36,72* | 4-64 | 4608 |
| BM.Standard.E4.128 | 256* | 4-8 | 2048 |
| BM.Standard2.52 | 52* | 4-8 | 416 |
| VM.Standard.E4.Flex | 128* | 4-8 | 1024 |

Hyperthreading was enabled or disabled on some of the systems during the tests. Results obtained with Hyperthreading enabled are denoted with an asterisk (*). In OCI, a physical core is denoted as an OCPU. By default, GridServer configures a single engine per core. When Hyperthreading is enabled, GridServer assigns an engine to each of the two core threads, doubling the number of available engines per node. The VM shape, VM.Standard.E4.Flex, is configurable with a variable number of OCPUs. For our tests, we configured each of the shapes with 64 OCPUs (128 total engines with Hyperthreading enabled).
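The engine counts described above follow from simple arithmetic: one engine per OCPU, doubled when Hyperthreading is enabled, multiplied by the number of engine nodes. The sketch below reproduces three of the table's maximum totals. The function names are illustrative and not part of GridServer, and the OCPU counts for the bare metal shapes are taken from the shape names.

```python
def engines_per_node(ocpus, hyperthreading):
    """One engine per OCPU, or one per hardware thread with Hyperthreading."""
    return ocpus * 2 if hyperthreading else ocpus


def total_engines(ocpus, hyperthreading, nodes):
    """Engines available across all engine nodes in the cluster."""
    return engines_per_node(ocpus, hyperthreading) * nodes


# (shape, OCPUs per node, Hyperthreading enabled, maximum engine nodes)
clusters = [
    ("BM.Optimized3.36", 36, True, 64),
    ("BM.Standard.E4.128", 128, True, 8),
    ("VM.Standard.E4.Flex", 64, True, 8),  # flexible shape configured with 64 OCPUs
]

for shape, ocpus, ht, nodes in clusters:
    print(shape, engines_per_node(ocpus, ht), total_engines(ocpus, ht, nodes))
```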

Our tests used GridServer to carry out 25,000 unique OpenGamma analyses, simulating a typical Monte Carlo benchmark test. We obtained the elapsed time for each test from the job summary in the GridServer console. On each cluster, we started with four engines for the simulation, then doubled the number of engines per test until all engine nodes in the cluster were in use. The plot below shows our test results on clusters based on the various instance shapes.

Here the results are displayed in evaluations per second, the rate at which the cluster performs the Monte Carlo simulation evaluations. For each benchmark, this value is the total number of simulations (25,000) divided by the simulation elapsed time in seconds. Overall, for each of the clusters tested, performance scaled nearly linearly with the number of engines used. In terms of relative performance across the instance shapes, there was a strong correlation between node performance and the number of cores per shape, indicating that per-core engine performance was largely independent of the shape. The cluster with BM.Optimized3.36 shapes was tested with Hyperthreading both enabled and disabled. Tests with the other shapes showed the same effect, with Hyperthreading enabled being preferred, so that data was left off the chart for clarity.

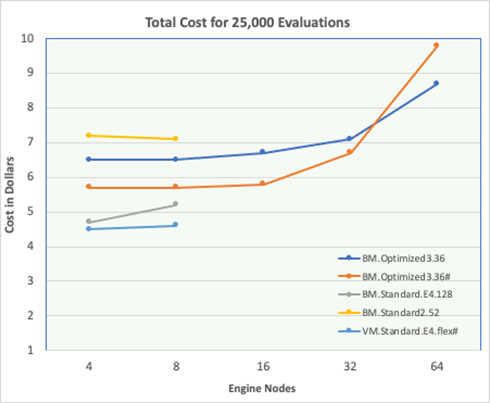
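The throughput metric used in the chart, and a simple measure of how close scaling comes to linear, can be sketched as follows. The elapsed times in `runs` are hypothetical placeholders chosen only to exercise the arithmetic; they are not results from these tests.

```python
TOTAL_EVALUATIONS = 25_000  # analyses per benchmark run, as stated in the text


def evaluations_per_second(elapsed_seconds, total=TOTAL_EVALUATIONS):
    """Throughput: total evaluations divided by elapsed wall-clock time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return total / elapsed_seconds


def parallel_efficiency(base_engines, base_rate, engines, rate):
    """Measured speedup divided by ideal speedup; 1.0 means linear scaling."""
    return (rate / base_rate) / (engines / base_engines)


# Hypothetical (engine count, elapsed seconds) pairs, doubling from four engines.
runs = [(4, 1000.0), (8, 510.0), (16, 265.0)]

base_engines, base_elapsed = runs[0]
base_rate = evaluations_per_second(base_elapsed)
for engines, elapsed in runs:
    rate = evaluations_per_second(elapsed)
    eff = parallel_efficiency(base_engines, base_rate, engines, rate)
    print(f"{engines:>4} engines: {rate:8.2f} eval/s, efficiency {eff:.3f}")
```

An efficiency that stays close to 1.0 as the engine count doubles corresponds to the near-linear scaling described above.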

While performance is a critical factor in large-scale Monte Carlo analyses, cost is also a factor. We examined this for the above tests by plotting the total OCI cost on the chart below (using prices published on the Oracle website).

Ideally, the total simulation cost would not vary with cluster size, but parallel inefficiencies tend to grow with the size of the cluster, which increases the simulation cost. As is generally the case, higher performance comes at a higher price, and these tests were no exception. Our OpenGamma tests showed that the shapes based on AMD EPYC processors (BM.Standard.E4.128, VM.Standard.E4.Flex) offered both the best node performance and the best price performance. We encourage customers to test their financial models on OCI with a 30-day free trial, as different models may be better suited to other HPC shapes.
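The relationship between cluster size and simulation cost can be illustrated with a short sketch. It assumes cost is the number of engine nodes multiplied by an hourly price per node and the elapsed time, billed pro rata. The price and elapsed times below are hypothetical placeholders, not Oracle list prices or measured results.

```python
def simulation_cost(nodes, price_per_node_hour, elapsed_seconds):
    """Total cost of one run, assuming pro-rated hourly billing per node."""
    return nodes * price_per_node_hour * elapsed_seconds / 3600.0


PRICE_PER_NODE_HOUR = 10.0  # hypothetical placeholder price

# Hypothetical (nodes, elapsed seconds) pairs. With perfect scaling, doubling
# the nodes would halve the elapsed time and leave the cost unchanged.
runs = [(4, 900.0), (8, 470.0)]

for nodes, elapsed in runs:
    cost = simulation_cost(nodes, PRICE_PER_NODE_HOUR, elapsed)
    print(f"{nodes} nodes, {elapsed:.0f} s: cost {cost:.2f}")
```

In this placeholder data the eight-node run finishes in slightly more than half the time of the four-node run, so its total cost is slightly higher, which is the effect of parallel inefficiency described above.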