High Performance Computing: LS-DYNA on Oracle Cloud Infrastructure

LS-DYNA is an advanced finite element program used for simulating real-world engineering problems.

It models the response of materials to short periods of severe loading, and is often used for computational fluid dynamics (CFD) simulations.

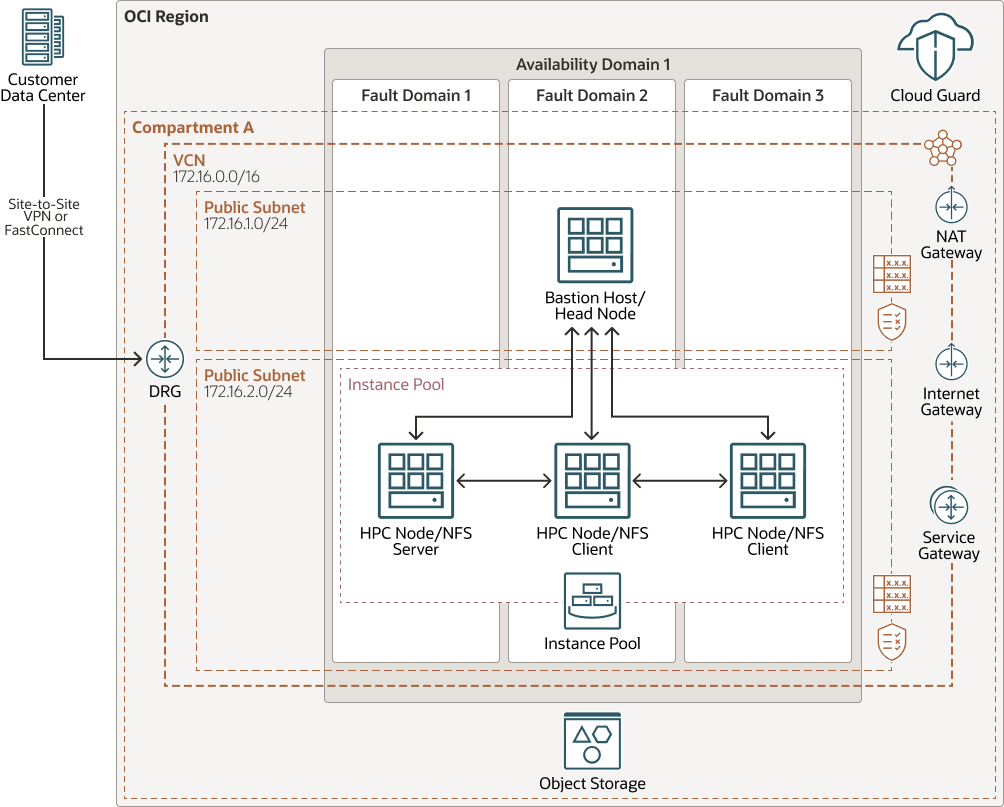

Architecture

The architecture uses one bastion/head node to connect to the HPC cluster.

The head node contains the LS-DYNA installation and the model. It has the message passing interface (MPI), and orchestrates and runs the job. The job results are saved on the head node.

The following diagram illustrates this reference architecture.

Description of the illustration architecture-hpc.png

The architecture has the following components:

- Region

An Oracle Cloud Infrastructure region is a localized geographic area that contains one or more data centers, called availability domains. Regions are independent of other regions, and vast distances can separate them (across countries or even continents).

- Availability domains

Availability domains are standalone, independent data centers within a region. The physical resources in each availability domain are isolated from the resources in the other availability domains, which provides fault tolerance. Availability domains don’t share infrastructure such as power or cooling, or the internal availability domain network. So, a failure at one availability domain is unlikely to affect the other availability domains in the region.

- Fault domains

A fault domain is a grouping of hardware and infrastructure within an availability domain. Each availability domain has three fault domains with independent power and hardware. When you distribute resources across multiple fault domains, your applications can tolerate physical server failure, system maintenance, and power failures inside a fault domain.

- Virtual cloud network (VCN) and subnets

A VCN is a customizable, software-defined network that you set up in an Oracle Cloud Infrastructure region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

- Security List

For each subnet, you can create security rules that specify the source, destination, and type of traffic that must be allowed in and out of the subnet.

- Instance Pool

Instance pools let you create and manage multiple Compute instances within the same region as a group. They also enable integration with other services, such as the Load Balancing service and IAM service.

- Bastion Node/Head Node

Use a web-based portal to connect to the head node and schedule HPC jobs. The job request comes through FastConnect or IPSec VPN to the head node. The head node also sends the customer data set to file storage and can do some preprocessing on the data.

The head node provisions HPC node clusters and deletes HPC clusters on job completion.

- HPC Cluster Node

The head node provisions and terminates these compute nodes, which form an RDMA-enabled cluster. The nodes process the data stored in file storage and return the results to file storage.

- Cloud Guard

You can use Oracle Cloud Guard to monitor and maintain the security of your resources in the cloud. Cloud Guard examines your resources for security weakness related to configuration, and monitors operators and users for risky activities. When any security issue or risk is identified, Cloud Guard recommends corrective actions and assists you in those actions, based on security recipes that you can define.

- NFS Server

One of the HPC nodes is promoted to act as the NFS server.
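As an illustration of the VCN and subnet component described above, the following minimal sketch uses Python's standard `ipaddress` module to carve a VCN CIDR block into two subnets and confirm that they sit inside the VCN and don't overlap. The `10.0.0.0/16` block, the `/24` subnet size, and the assignment of the head node to the first subnet are assumptions for illustration only; they aren't taken from the reference architecture.

```python
import ipaddress

# Hypothetical VCN CIDR block from the private 10.0.0.0/8 range.
vcn = ipaddress.ip_network("10.0.0.0/16")

# Split the VCN into /24 blocks and take the first two as subnets.
blocks = vcn.subnets(new_prefix=24)
public_subnet = next(blocks)   # assumed home of the bastion/head node
private_subnet = next(blocks)  # assumed home of the HPC cluster nodes


def validate_subnets(vcn, subnets):
    """Check that every subnet lies inside the VCN and that none overlap."""
    for i, subnet in enumerate(subnets):
        if not subnet.subnet_of(vcn):
            raise ValueError(f"{subnet} is outside {vcn}")
        for other in subnets[i + 1:]:
            if subnet.overlaps(other):
                raise ValueError(f"{subnet} overlaps {other}")
    return True


validate_subnets(vcn, [public_subnet, private_subnet])
print(public_subnet, private_subnet)  # 10.0.0.0/24 10.0.1.0/24
```

The same check can be run against any planned layout before you create the network.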

Recommendations

Your requirements might differ from the architecture described here. Use the following recommendations as a starting point.

- VCN

When you create a VCN, determine the number of CIDR blocks required and the size of each block based on the number of resources that you plan to attach to subnets in the VCN. Use CIDR blocks that are within the standard private IP address space.

Select CIDR blocks that don't overlap with any other network (in Oracle Cloud Infrastructure, your on-premises data center, or another cloud provider) to which you intend to set up private connections.

After you create a VCN, you can change, add, and remove its CIDR blocks.

When you design the subnets, consider your traffic flow and security requirements. Attach all the resources within a specific tier or role to the same subnet, which can serve as a security boundary.

- Security

Use Oracle Cloud Guard to monitor and maintain the security of your resources in OCI proactively. Cloud Guard uses detector recipes that you can define to examine your resources for security weaknesses and to monitor operators and users for risky activities. When any misconfiguration or insecure activity is detected, Cloud Guard recommends corrective actions and assists with those actions, based on responder recipes that you can define.

For resources that require maximum security, Oracle recommends that you use security zones. A security zone is a compartment associated with an Oracle-defined recipe of security policies that are based on best practices. For example, the resources in a security zone must not be accessible from the public internet and they must be encrypted using customer-managed keys. When you create and update resources in a security zone, Oracle Cloud Infrastructure validates the operations against the policies in the security-zone recipe, and denies operations that violate any of the policies.

- HPC Nodes

There are two scenarios:

- Deploy on VM shapes using Instance Pool, as shown in the architecture diagram. This scenario offers lower cost but also lower performance.

Use VM.Standard.E3.Flex or VM.Standard.E4.Flex shapes with the File Storage service.

- Deploy on HPC bare metal shapes to get full performance.

Use BM.HPC2.36 shapes with 6.4-TB local NVMe SSD storage, 36 cores, and 384-GB memory per node.
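To make the bare metal option concrete, here is a rough sizing sketch based on the BM.HPC2.36 figures quoted above (36 cores and 384 GB of memory per node). It assumes one MPI rank per core and that the model's memory is spread evenly across nodes; both are simplifying assumptions, and the job size in the example is hypothetical.

```python
import math

# Per-node figures for BM.HPC2.36 as stated in the recommendation above.
CORES_PER_NODE = 36
MEMORY_GB_PER_NODE = 384


def nodes_required(target_cores, model_memory_gb):
    """Return the smallest node count that covers both core and memory needs."""
    if target_cores <= 0 or model_memory_gb <= 0:
        raise ValueError("target_cores and model_memory_gb must be positive")
    by_cores = math.ceil(target_cores / CORES_PER_NODE)
    by_memory = math.ceil(model_memory_gb / MEMORY_GB_PER_NODE)
    return max(by_cores, by_memory)


# Hypothetical job: 256 MPI ranks and 1,000 GB of aggregate memory.
print(nodes_required(256, 1000))  # 8
```

In this example the core count drives the result: 256 ranks need 8 nodes, while the memory requirement alone would need only 3.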

Considerations

Consider the following points when deploying this reference architecture.

- Performance

Depending on the size of the workload, determine how many cores you want LS-DYNA to run on. The core count largely determines whether the simulation completes in a timely manner.

To get the best performance, choose the correct Compute shape with appropriate bandwidth.

- Availability

Consider using a high-availability option, based on your deployment requirements and region. Options include using multiple availability domains in a region and fault domains.

- Cost

A bare metal HPC instance provides the necessary CPU power at a higher cost. Evaluate your requirements to choose the appropriate Compute shape.

You can delete the cluster when there are no jobs running.

- Monitoring and Alerts

Set up monitoring and alerts on CPU and memory usage for your nodes, so that you can scale the shape up or down as needed.

- Storage

On top of the NVMe SSD storage that comes with the HPC shape, you can also attach block volumes at 32k IOPS per volume, backed by Oracle’s highest performance SLA. If you're using our solutions to launch the infrastructure, an nfs-share is installed by default on the NVMe SSD storage in /mnt. You can also install your own parallel file system on top of either the NVMe SSD storage or block storage, depending on your performance requirements.

- Visualizer Node

You can create a visualizer node, such as a GPU virtual machine (VM) or bare metal node, depending on your requirements. This visualizer node can be your bastion host or separate. Depending on the security requirements for the workload, the visualizer node can be placed in the private or public subnet.
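The monitoring consideration above suggests scaling the shape up or down based on CPU and memory usage. The sketch below shows one way such a rule might be expressed. The 85% and 30% thresholds and the function name `scaling_advice` are hypothetical choices for illustration; the sample values are invented, and in practice the metrics would come from your monitoring service.

```python
from statistics import mean

# Hypothetical thresholds (percent utilization); tune them for your workload.
SCALE_UP_PCT = 85.0
SCALE_DOWN_PCT = 30.0


def scaling_advice(cpu_samples, memory_samples):
    """Suggest 'scale up', 'scale down', or 'keep' from utilization percentages."""
    if not cpu_samples or not memory_samples:
        raise ValueError("need at least one sample of each metric")
    cpu = mean(cpu_samples)
    mem = mean(memory_samples)
    # Either resource running hot is enough to recommend a larger shape.
    if cpu > SCALE_UP_PCT or mem > SCALE_UP_PCT:
        return "scale up"
    # Recommend a smaller shape only when both resources are mostly idle.
    if cpu < SCALE_DOWN_PCT and mem < SCALE_DOWN_PCT:
        return "scale down"
    return "keep"


print(scaling_advice([92.0, 88.5, 95.0], [60.0, 62.0, 58.0]))  # scale up
```

Scaling down requires both metrics to be low, so a memory-bound job with idle CPUs isn't moved to a shape that is too small.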

Deploy

The code required to deploy this reference architecture is available in GitHub. You can pull the code into Oracle Cloud Infrastructure Resource Manager with a single click, create the stack, and deploy it. Alternatively, download the code from GitHub to your computer, customize the code, and deploy the architecture by using the Terraform CLI.

- Deploy by using Oracle Cloud Infrastructure Resource Manager:

- Click

If you aren't already signed in, enter the tenancy and user credentials.

- Review and accept the terms and conditions.

- Select the region where you want to deploy the stack.

- Follow the on-screen prompts and instructions to create the stack.

- After creating the stack, click Terraform Actions, and select Plan.

- Wait for the job to be completed, and review the plan.

To make any changes, return to the Stack Details page, click Edit Stack, and make the required changes. Then, run the Plan action again.

- If no further changes are necessary, return to the Stack Details page, click Terraform Actions, and select Apply.

- Deploy using the Terraform code in GitHub:

- Go to GitHub.

- Clone or download the repository to your local computer.

- Follow the instructions in the README document.
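For the Terraform CLI path, a typical sequence is `terraform init`, `terraform plan`, and `terraform apply`, mirroring the Plan and Apply actions in Resource Manager. The following sketch wraps that sequence in Python and defaults to a dry run that only prints the commands. The `./ls-dyna-stack` directory is a hypothetical placeholder for wherever you cloned the repository, and the repository's README remains the authority on required variables and credentials.

```python
import subprocess


def terraform_commands(auto_approve=False):
    """Return the Terraform CLI invocations in the order they are run."""
    apply = ["terraform", "apply"]
    if auto_approve:
        # Skips the interactive confirmation prompt; use with care.
        apply.append("-auto-approve")
    return [["terraform", "init"], ["terraform", "plan"], apply]


def deploy(workdir, dry_run=True, auto_approve=False):
    """Print each command, and run it in workdir unless dry_run is set."""
    commands = terraform_commands(auto_approve)
    for command in commands:
        print(" ".join(command))
        if not dry_run:
            # check=True stops the sequence if any step fails.
            subprocess.run(command, cwd=workdir, check=True)
    return commands


# Dry run against a hypothetical clone location; nothing is executed.
deploy("./ls-dyna-stack")
```

Call `deploy(workdir, dry_run=False)` only after you have reviewed the plan output, just as you would review the plan in Resource Manager before selecting Apply.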