Learn About AI Voice Generation Inference with TorchServe on NVIDIA GPUs

You can design a Text-to-Speech service to run on Oracle Cloud Infrastructure Kubernetes Engine using TorchServe on NVIDIA GPUs. This technique can also be applied to other inference workloads such as image classification, object detection, natural language processing, and recommendation systems.

An inference server is a specialized system that hosts trained machine learning models and serves their predictions (inferences) through APIs. It handles the production challenges of scaling, batching, and monitoring, turning a static model into a reliable, high-performance web service.

TorchServe is the official model-serving framework for PyTorch.

Its job is to take a trained model packaged as a .mar file (a model archive) and make it available through REST or gRPC endpoints. Instead of writing custom web servers and serving logic, you package your model and hand it to TorchServe. It provides you with:

- Scalability: Automatically manages workers to handle high-volume traffic.

- Low-Latency Performance: Uses features such as dynamic batching to group requests for efficient processing.

- Model Management: Allows you to register, version, and roll back models without downtime.

- Built-in Monitoring: Tracks metrics like inference latency and requests per second out of the box.

In short, TorchServe bridges the gap between PyTorch experimentation and production deployment by turning a trained model into a prediction service without custom serving code.
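As a rough illustration of the REST endpoints described above, the following sketch builds TorchServe URLs and posts text to a model. It assumes TorchServe's default ports (8080 for inference, 8081 for management). The host `localhost`, the model name `tts_model`, and the helper function names are hypothetical, and the format of the response depends on the handler packaged with the model.

```python
import urllib.parse
import urllib.request

INFERENCE_PORT = 8080   # TorchServe default inference port
MANAGEMENT_PORT = 8081  # TorchServe default management port


def prediction_url(host: str, model_name: str, port: int = INFERENCE_PORT) -> str:
    """Return the inference endpoint URL for one registered model."""
    return f"http://{host}:{port}/predictions/{urllib.parse.quote(model_name)}"


def scale_workers_url(host: str, model_name: str, min_worker: int,
                      port: int = MANAGEMENT_PORT) -> str:
    """Return the management URL that, sent with PUT, scales a model's workers."""
    query = urllib.parse.urlencode({"min_worker": min_worker})
    return f"http://{host}:{port}/models/{urllib.parse.quote(model_name)}?{query}"


def synthesize(text: str, host: str = "localhost", model_name: str = "tts_model",
               timeout: float = 30.0) -> bytes:
    """POST text to the model and return the raw response body.

    What the bytes contain (for example, WAV audio) depends on the handler
    inside the .mar file; that is an assumption of this sketch.
    """
    request = urllib.request.Request(
        prediction_url(host, model_name),
        data=text.encode("utf-8"),
        headers={"Content-Type": "text/plain; charset=utf-8"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()
```

Calling `synthesize("Hello, world.")` requires a running TorchServe instance with a model registered under the assumed name; the two URL helpers can be used without one.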

Note:

TorchServe is currently in limited maintenance mode. While it remains free to use and is still employed by some existing customers for specific use cases or testing convenience, we recommend evaluating its long-term suitability for production deployments. Please review the official PyTorch TorchServe documentation at https://docs.pytorch.org/serve/ for current status and considerations.

These are some of the inference servers in the market:

| Server | Developer | Key Features | Best For | Framework Support |
|---|---|---|---|---|
| NVIDIA Triton | NVIDIA | | High-performance, multi-model deployments | PyTorch, TF, ONNX, TensorRT |
| TorchServe | PyTorch (previously Meta) | | PyTorch-focused deployments | PyTorch only |
| TensorFlow Serving | | | TensorFlow ecosystems | TensorFlow |

Architecture

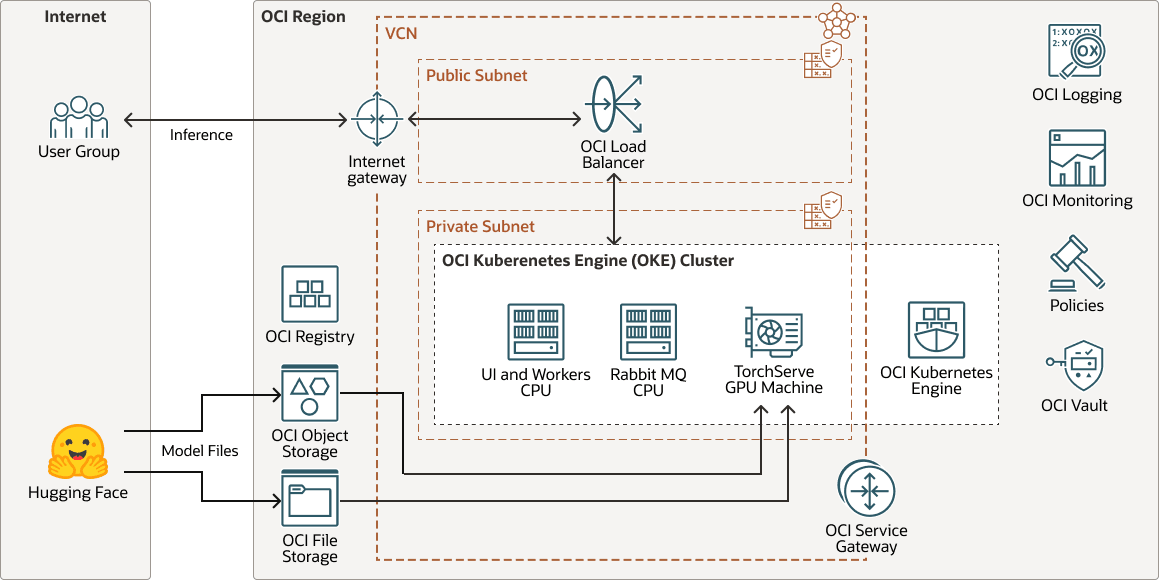

This architecture shows an example of an AI voice generation inference deployment.

A trained and customized voice model, fine-tuned from a base Hugging Face model, is deployed on TorchServe for inference. TorchServe acts as the serving layer, hosting the model and managing incoming user requests efficiently.

When a user sends a text input, TorchServe processes the request, invokes the model, and renders the output as high-quality synthetic speech.

For text-to-speech processing, the input is organized hierarchically: each document can contain multiple blocks, and each block is made up of segments. For example:

Document (chapter)
└── Block (paragraph)
    └── Segment (sentence)

This architecture supports the following components:

- OCI region

An OCI region is a localized geographic area that contains one or more data centers, hosting availability domains. Regions are independent of other regions, and vast distances can separate them (across countries or even continents).

- OCI virtual cloud network and subnet

A virtual cloud network (VCN) is a customizable, software-defined network that you set up in an OCI region. Like traditional data center networks, VCNs give you control over your network environment. A VCN can have multiple non-overlapping classless inter-domain routing (CIDR) blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

- OCI Registry

Oracle Cloud Infrastructure Registry is an Oracle-managed service that enables you to simplify your development-to-production workflow. Registry makes it easy for you to store, share, and manage development artifacts, like Docker images.

- OCI Object Storage

OCI Object Storage provides access to large amounts of structured and unstructured data of any content type, including database backups, analytic data, and rich content such as images and videos. You can safely and securely store data directly from applications or from within the cloud platform. You can scale storage without experiencing any degradation in performance or service reliability.

Use standard storage for "hot" data that you need to access quickly and frequently. Use archive storage for "cold" data that you retain for long periods of time and rarely access.

- OCI File Storage

Oracle Cloud Infrastructure File Storage provides a durable, scalable, secure, enterprise-grade network file system. You can connect to OCI File Storage from any bare metal, virtual machine, or container instance in a VCN. You can also access OCI File Storage from outside the VCN by using Oracle Cloud Infrastructure FastConnect and IPSec VPN.

- OCI Block Volumes

With Oracle Cloud Infrastructure Block Volumes, you can create, attach, connect, and move storage volumes, and change volume performance to meet your storage, performance, and application requirements. After you attach and connect a volume to an instance, you can use the volume like a regular hard drive. You can also disconnect a volume and attach it to another instance without losing data.

- Load balancer

Oracle Cloud Infrastructure Load Balancing provides automated traffic distribution from a single entry point to multiple servers.

- OCI Kubernetes Engine

Oracle Cloud Infrastructure Kubernetes Engine (OCI Kubernetes Engine or OKE) is a fully-managed, scalable, and highly available service that you can use to deploy your containerized applications to the cloud. You specify the compute resources that your applications require, and OKE provisions them on OCI in an existing tenancy. OKE uses Kubernetes to automate the deployment, scaling, and management of containerized applications across clusters of hosts.

- Service gateway

A service gateway provides access from a VCN to other services, such as Oracle Cloud Infrastructure Object Storage. The traffic from the VCN to the Oracle service travels over the Oracle network fabric and does not traverse the internet.

- Internet gateway

An internet gateway allows traffic between the public subnets in a VCN and the public internet.

- OCI Logging

Oracle Cloud Infrastructure Logging is a highly-scalable and fully-managed service that provides access to the following types of logs from your resources in the cloud:

  - Audit logs: Logs related to events produced by OCI Audit.

  - Service logs: Logs published by individual services such as OCI API Gateway, OCI Events, OCI Functions, OCI Load Balancing, OCI Object Storage, and VCN flow logs.

  - Custom logs: Logs that contain diagnostic information from custom applications, other cloud providers, or an on-premises environment.

- OCI Monitoring

Oracle Cloud Infrastructure Monitoring actively and passively monitors your cloud resources, and uses alarms to notify you when metrics meet specified triggers.

- Policy

An Oracle Cloud Infrastructure Identity and Access Management policy specifies who can access which resources, and how. Access is granted at the group and compartment level, which means you can write a policy that gives a group a specific type of access within a specific compartment or to the tenancy.

- OCI Vault

Oracle Cloud Infrastructure Vault enables you to create and centrally manage the encryption keys that protect your data and the secret credentials that you use to secure access to your resources in the cloud. The default key management is Oracle-managed keys. You can also use customer-managed keys which use OCI Vault. OCI Vault offers a rich set of REST APIs to manage vaults and keys.

- Hugging Face

Hugging Face is a collaborative platform and hub for machine learning that provides pre-trained AI models, development tools, and hosting infrastructure for AI applications, making advanced machine learning accessible to developers worldwide.
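The virtual cloud network component above requires that subnets sit inside the VCN's CIDR blocks and do not overlap one another. The following minimal sketch checks such a plan with Python's standard `ipaddress` module. The CIDR ranges and subnet names are hypothetical values chosen for illustration and do not come from this architecture.

```python
import ipaddress

# Hypothetical VCN CIDR block and subnet plan, for illustration only.
VCN = ipaddress.ip_network("10.0.0.0/16")
SUBNETS = {
    "public-lb": ipaddress.ip_network("10.0.0.0/24"),
    "private-workers": ipaddress.ip_network("10.0.1.0/24"),
    "private-gpu": ipaddress.ip_network("10.0.2.0/23"),
}


def validate_subnets(vcn, subnets):
    """Return a list of problems: subnets outside the VCN or overlapping pairs."""
    problems = []
    names = list(subnets)
    for name in names:
        if not subnets[name].subnet_of(vcn):
            problems.append(f"{name} is not inside {vcn}")
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if subnets[first].overlaps(subnets[second]):
                problems.append(f"{first} overlaps {second}")
    return problems


if __name__ == "__main__":
    # An empty list means the plan is consistent.
    print(validate_subnets(VCN, SUBNETS))
```

A check like this can run before any network resources are created, which is cheaper than discovering an overlap during provisioning.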

About the Solution Design Workflow

This voice generation solution implements the following design workflow.

- The user initiates a project conversion.

- The app adds a message to the RabbitMQ queue for each conversion task within the project.

- Each worker retrieves a message from the queue.

- The worker processes the message and sends a request to TorchServe.

- TorchServe performs inference and returns the results to the worker.

- The worker processes the results and places a message back onto the queue.

- The app retrieves the results message from the queue and stores it in the database.

- The user is notified of the results in the UI.

Note:

For lightweight models, the worker sends the inference request to CPU nodes rather than GPU nodes.
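The workflow above can be sketched in a few lines. This is a simplified, single-process illustration, not the solution's actual code: two in-memory `queue.Queue` objects stand in for RabbitMQ, a list stands in for the database, and `fake_torchserve` stands in for the real call to TorchServe. The `Task` and `Result` types, the `lightweight_model` flag, and the `cpu` and `gpu` pool names are all hypothetical.

```python
import queue
from dataclasses import dataclass


@dataclass
class Task:
    project_id: str
    text: str
    lightweight_model: bool = False  # hypothetical flag; the source does not say how this is decided


@dataclass
class Result:
    project_id: str
    text: str
    audio: bytes
    node_pool: str


def fake_torchserve(text: str, node_pool: str) -> bytes:
    """Stand-in for the request to TorchServe; returns placeholder bytes."""
    return f"audio({node_pool}):{text}".encode("utf-8")


def run_worker(tasks: queue.Queue, results: queue.Queue, infer=fake_torchserve) -> None:
    """Drain the task queue: pick a node pool, run inference, publish the result."""
    while True:
        try:
            task = tasks.get_nowait()
        except queue.Empty:
            return
        # Lightweight models go to CPU nodes; everything else goes to GPU nodes.
        node_pool = "cpu" if task.lightweight_model else "gpu"
        audio = infer(task.text, node_pool)
        results.put(Result(task.project_id, task.text, audio, node_pool))


def convert_project(project_tasks: list[Task]) -> list[Result]:
    """Enqueue one message per task, run a worker, and collect stored results."""
    tasks: queue.Queue = queue.Queue()
    results: queue.Queue = queue.Queue()
    for task in project_tasks:       # the app enqueues one message per conversion task
        tasks.put(task)
    run_worker(tasks, results)       # a worker consumes messages and calls inference
    database: list[Result] = []      # stand-in for the application database
    while not results.empty():       # the app reads result messages and stores them
        database.append(results.get())
    return database
```

In a real deployment the workers would run as separate processes or pods consuming from the message broker, so the queue decouples the rate at which users submit projects from the rate at which the inference nodes can process them.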