Performance and Shape Considerations

There are significant price and performance considerations for running Hadoop on Oracle Cloud Infrastructure. You should also consider how your requirements affect deployment shapes.

Comparative Analysis

Oracle Cloud Infrastructure offers both performance and cost advantages for enterprises interested in running Hadoop clusters on Oracle Cloud.

TeraSort is a common benchmark for Hadoop because it leverages all elements of the cluster (compute, memory, storage, network) to generate, map/reduce, and validate a randomized dataset.

One comparative analysis normalized Oracle Cloud Infrastructure clusters at 300 OCPU, and used block volumes for HDFS storage. That particular analysis found that Oracle Cloud Infrastructure ran three times faster and cost over 80% less than a comparable deployment on a competitor's cloud.

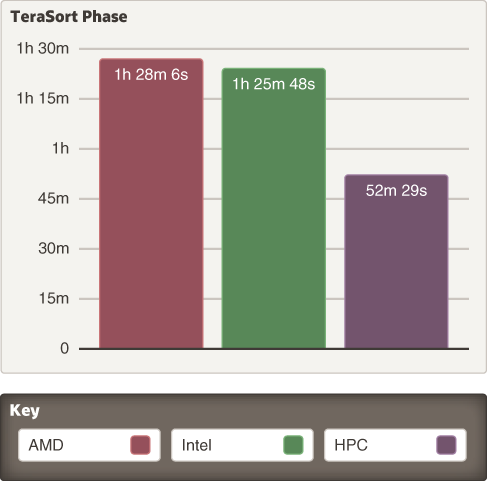

The following chart demonstrates the overall performance of various Oracle Cloud Infrastructure shapes when running this 10TB TeraSort at the normalized cluster size:

Description of the illustration comparison-terasort-phase-cpu.png

Oracle Cloud Infrastructure provides standard bare metal compute instances with Intel and AMD CPUs, as well as a specialized HPC option. As the chart shows, the specialized HPC clusters ran faster than the Intel and AMD counterparts, even though these instances have lower core counts. This result occurred primarily because this cluster uses more nodes to achieve the same normalized OCPU count, which directly affects the overall HDFS aggregate throughput, increasing performance.

The HPC shapes also benefit from a 100-Gbps network capability, which allows for faster intracluster data transfer.

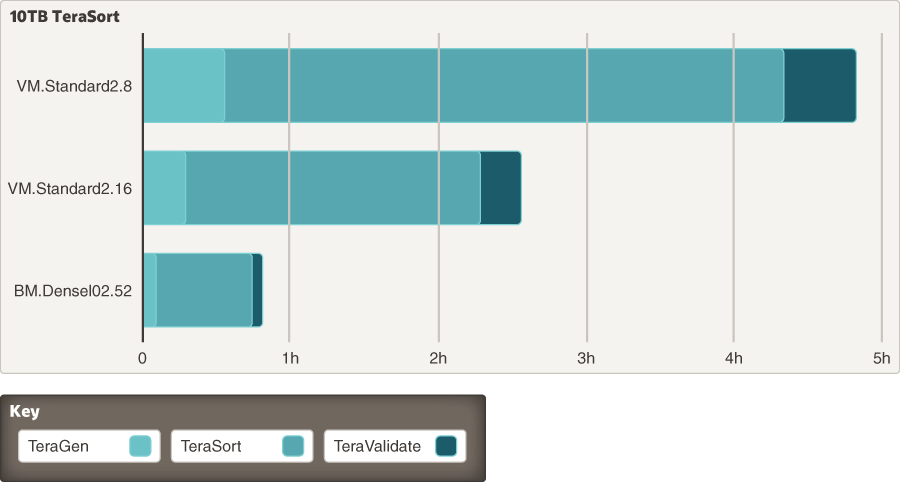

The following figure compares the performance of VM-based workers with block volumes, and bare metal NVMe, running Cloudera and normalized to 10 worker nodes in the cluster:

Description of the illustration comparison-terasort-vm-bm-performance.png

Doubling the worker VM size from 8 to 16 cores roughly doubles performance, which makes sense because VM network throughput is a fractional share of the underlying physical NIC. The advantages of bare metal with local NVMe are also apparent. The cluster takes advantage of the high speed of local NVMe storage combined with the 25-Gbps network interface.

The criteria for choosing compute shapes in a Hadoop cluster are consistent across Hadoop ISVs.

Compute Instance Selection

This section provides best practices when selecting a shape for each node role.

Master Nodes

Master nodes are applicable only to Cloudera, Hortonworks, and Apache Hadoop distributions. By default, MapR doesn’t segregate cluster services from worker nodes. In practice, Oracle recommends using shapes with good memory density on master nodes to support the overhead of cluster and service management. Master nodes run ZooKeeper, NameNode, JournalNode, ResourceManager, HBase, Spark, and cluster management (Cloudera Manager and Ambari) services.

- Minimum shape: VM.Standard2.8

- Recommended shape: VM.Standard2.24

Worker Nodes

Worker nodes are consistent between all Hadoop ISVs as well as Apache. Scale the shape for the worker node as appropriate to meet workload requirements. This is applicable for both compute and memory requirements because many customers are looking for aggregate memory to use with Spark-based workloads. Traditional map/reduce workloads also benefit from an increased memory footprint on worker nodes.

- Minimum shape: VM.Standard2.8

- Recommended shape: BM.DenseIO2.52

Supporting Nodes

Supporting infrastructure includes edge nodes or other nodes that might be running ancillary cluster services or custom application code. The requirements for these nodes vary depending on scale and use case. For edge nodes, we recommend a minimum size of VM.Standard2.2. Scale this up depending on the number of users per edge node. We recommend multiple edge nodes for HA between fault domains and for creating multiple pathways for users to interact with the cluster.

Network Considerations

Hadoop heavily depends on the network for intracluster traffic. As such, the shapes that you choose to deploy for each role in the cluster topology have a direct impact on intracluster connectivity.

When using bare metal shapes, compute hosts can use full 25-Gbps virtual network interface cards (VNICs). The network bandwidth of VM shapes scales relative to their compute size.

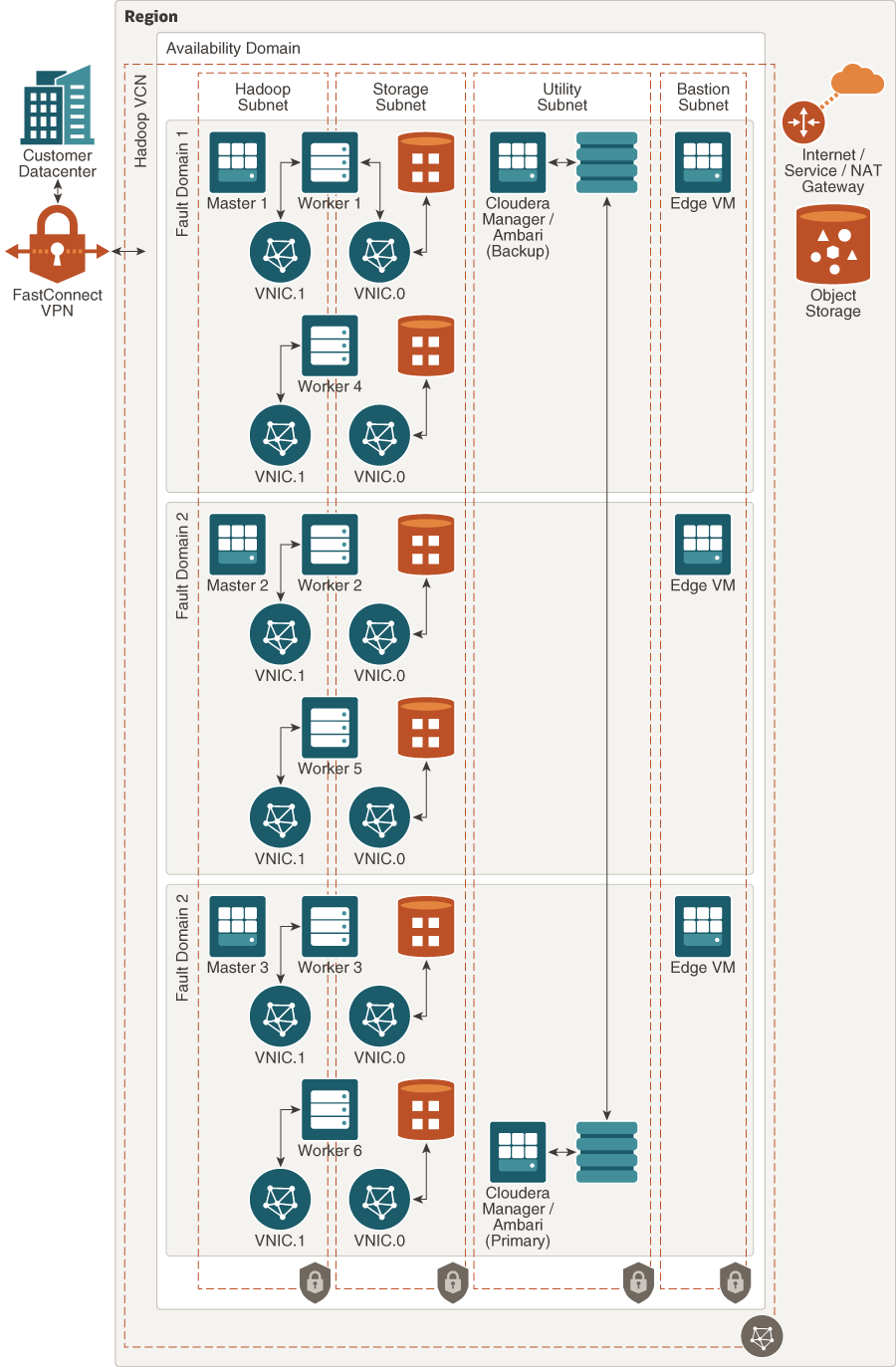

When you use block volumes for HDFS capacity, remember that the I/O traffic for a block volume shares the same VNIC as application traffic (by default). One way to optimize this when using bare metal shapes is to create a secondary VNIC to use for cluster connectivity, on the second physical interface. This reserves the primary VNIC for only block volume traffic, thus optimizing network utilization.

The following diagram demonstrates this concept:

Description of the illustration architecture-vnic.png

When using block volumes, also consider that aggregate HDFS I/O directly relates to the quantity and size of the block volumes associated with each worker node. To achieve the needed HDFS capacity, Oracle recommends using a larger number of smaller volumes per worker rather than a small number of larger volumes.

Storage Considerations

For Hadoop deployments, two types of storage can be used for HDFS capacity: DenseIO shapes that have local NVMe, and block volumes. For MapR deployments, a single storage type must be chosen for worker nodes (homogeneous) unless you have licensing for Data Tiering. Other Hadoop ISVs support heterogeneous storage (mixing DenseIO NVMe and block volumes) without additional licensing costs.

Vendor Supported Storage Configurations

Cloudera and Hortonworks support all forms of storage on Oracle Cloud Infrastructure for use with Hadoop:

- Local NVMe on DenseIO shapes

- Block volumes

- Object Storage (Using S3 compatibility)

Cloudera supports data tiering (heterogeneous storage) for deployments consisting of local NVMe and block volumes for HDFS.

MapR deployments can leverage either local NVMe on DenseIO shapes or block volumes for deployment.

- Object Storage: Refer to MapR XD Object Tiering.

- MapR doesn’t support heterogeneous storage without additional licensing.

DenseIO NVMe

DenseIO NVMe is the most performant storage option for Hadoop on Oracle Cloud Infrastructure. High-speed local NVMe drives for use with HDFS are available in both bare metal and virtual machine shapes.

When using local NVMe, Oracle recommends setting DFS replication to three replicas to ensure data redundancy.
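As a minimal sketch of what that setting looks like, the following Python snippet generates an `hdfs-site.xml` fragment that sets the standard Hadoop property `dfs.replication` to 3. The helper name `hdfs_site_with_replication` is hypothetical, and on managed clusters this value is normally set through Cloudera Manager or Ambari rather than by editing the file directly.

```python
import xml.etree.ElementTree as ET


def hdfs_site_with_replication(replicas: int = 3) -> str:
    """Return an hdfs-site.xml fragment that sets dfs.replication.

    Hypothetical helper for illustration only; it builds the XML in memory
    and does not touch any cluster configuration.
    """
    configuration = ET.Element("configuration")
    prop = ET.SubElement(configuration, "property")
    ET.SubElement(prop, "name").text = "dfs.replication"
    ET.SubElement(prop, "value").text = str(replicas)
    return ET.tostring(configuration, encoding="unicode")


# Three replicas, as recommended above for local NVMe.
print(hdfs_site_with_replication(3))
```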

Alternatively, for Cloudera clusters, consider using erasure coding to increase storage efficiency for specific types of data.

Block Volumes

Block volumes on Oracle Cloud Infrastructure are a great performance option and provide flexible configuration for HDFS capacity. Block volumes are network-attached storage, and as such they use VNIC bandwidth for I/O. Block volumes also scale in IOPS and MB/s based on their configured size (per GB). Individual block volume throughput maxes out at 320 MB/s for volumes of 700GB or larger.

The following table shows throughput scaling for a single worker node using 700GB volumes:

| Block Volumes | Aggregate throughput (GB/s) |
|---|---|
| 3 | 0.94 |
| 4 | 1.25 |
| 5 | 1.56 |
| 6 | 1.88 |
| 7 | 2.19 |
| 8 | 2.50 |
| 9 | 2.81 |
| 10 | 3.13 |
| 11 | 3.44 |

Oracle has found that beyond 11 block volumes, adding more block volumes results in diminishing throughput gains.
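The figures in the table follow from simple arithmetic: the number of volumes multiplied by the 320 MB/s per-volume ceiling. The sketch below reproduces that calculation. It assumes a conversion of 1024 MB per GB, which is inferred from the table values rather than stated in the text, and the function name is illustrative.

```python
# Per-volume throughput ceiling stated above for volumes of 700GB or larger.
MAX_VOLUME_THROUGHPUT_MB_S = 320

# Assumption: the table's GB/s values are consistent with 1024 MB per GB.
MB_PER_GB = 1024


def aggregate_throughput_gb_s(volume_count: int) -> float:
    """Theoretical aggregate throughput for one worker, in GB/s.

    This is a linear model; it does not capture the diminishing
    returns reported beyond 11 volumes.
    """
    return volume_count * MAX_VOLUME_THROUGHPUT_MB_S / MB_PER_GB


for count in range(3, 12):
    print(f"{count:>2} volumes: {aggregate_throughput_gb_s(count):.3f} GB/s")
```

The printed values agree with the table to within rounding of the last digit.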

When you use VMs as workers, remember that block volume traffic shares the same VNIC as Hadoop (application) traffic. The following table shows the recommended maximum block volumes and measured VNIC bandwidth for a selection of Oracle Cloud Infrastructure shapes, permitting instances enough additional bandwidth to support application traffic on top of disk I/O:

| Oracle Cloud Infrastructure Shape | VNIC Bandwidth | Suggested Max Block Volumes for HDFS |
|---|---|---|
| BM.DenseIO2.52 | 25Gbps | 11 |
| BM.Standard2.52 | 25Gbps | 11 |
| VM.Standard2.24 | 24.6Gbps | 6 |
| VM.Standard2.16 | 16.4Gbps | 4 |
| VM.Standard2.8 | 8.2Gbps | 3 |
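The VM rows in this table illustrate how VM network bandwidth scales with compute size. As a rough check, the sketch below divides each measured bandwidth by the shape's OCPU count, taking the OCPU count from the trailing number in the shape name. It uses only the three VM rows shown here, so treat the resulting ratio as an observation about these shapes, not a general rule.

```python
# (OCPU count, measured VNIC bandwidth in Gbps), copied from the VM rows above.
# Assumption: the trailing number in each shape name is its OCPU count.
VM_SHAPES = {
    "VM.Standard2.8": (8, 8.2),
    "VM.Standard2.16": (16, 16.4),
    "VM.Standard2.24": (24, 24.6),
}


def gbps_per_ocpu(shape: str) -> float:
    """Return the VNIC bandwidth per OCPU for a shape listed in VM_SHAPES."""
    ocpus, bandwidth_gbps = VM_SHAPES[shape]
    return bandwidth_gbps / ocpus


for shape in VM_SHAPES:
    print(f"{shape}: {gbps_per_ocpu(shape):.3f} Gbps per OCPU")
```

For these three shapes the ratio is the same, roughly 1 Gbps per OCPU, which is why a smaller VM worker can support fewer block volumes before disk I/O crowds out application traffic.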

When you use block volumes for HDFS capacity, we recommend using a larger number of small block volumes rather than a smaller number of large block volumes per worker to achieve HDFS target capacity.

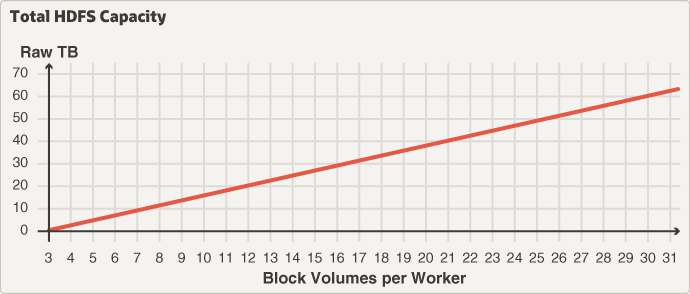

Using the minimum of three worker nodes as an example, with 700GB block volume size for HDFS:

Description of the illustration block-volume-hdfs-capacity-chart.png

Notice that three block volumes per worker is the minimum requirement. Oracle recommends scaling block volume quantity and size to optimize for required HDFS capacity, knowing that more block volumes per worker increase the overall aggregate HDFS bandwidth available. This is especially important for large, high-throughput workloads, which require more aggregate I/O in the cluster.

It's also important for persistent clusters to leave additional overhead for growth or refactoring.
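The capacity trade-off described above can be estimated with a short calculation. The sketch below is illustrative only: the function name is hypothetical, the replication factor of 3 is an assumption for block volume deployments, and the estimate ignores space reserved for non-DFS use, so it may not match the chart exactly.

```python
def hdfs_capacity_gb(workers: int, volumes_per_worker: int,
                     volume_size_gb: int, replication: int = 3):
    """Return (raw_gb, usable_gb) for block-volume-backed HDFS.

    Assumptions: every volume is dedicated to HDFS, and usable capacity
    is raw capacity divided by the replication factor.
    """
    raw_gb = workers * volumes_per_worker * volume_size_gb
    usable_gb = raw_gb / replication
    return raw_gb, usable_gb


# Minimum footprint from the text: 3 workers, 3 volumes each, 700GB per volume.
raw, usable = hdfs_capacity_gb(workers=3, volumes_per_worker=3, volume_size_gb=700)
print(f"raw: {raw} GB, usable at 3x replication: {usable:.0f} GB")
```

Under these assumptions, reaching the same capacity with more, smaller volumes per worker keeps `raw_gb` constant while raising the aggregate throughput available to HDFS.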

Logging and Application Data

When you use block volumes for HDFS, you need to reserve some block volumes for use with Hadoop logs and application data (Cloudera Parcels, NameNode, and Journal metadata). Although the OS volume can be expanded to accommodate this data, it's better to use dedicated block volumes for this purpose. Block volumes give you more portability if you want to change the instance type for your master nodes, and more flexibility if you need more I/O performance for a particular data location. Adding a few more block volumes to the instance, creating a RAID array, and migrating the existing data is easier when you have spare volume attachments to use.

The same is true for HDFS. Having overhead to add more block volumes can be easier than decommissioning workers, rebuilding them with larger disks, adding them back to the cluster, and rebalancing HDFS. Both approaches are valid; it's just a matter of whether your cluster workload requires a full complement of block volumes to support data throughput. If that's the case, consider switching workers to bare metal DenseIO shapes with the faster NVMe storage, and using a heterogeneous storage model with data tiering.

Object Storage

Object Storage integration is possible with all Hadoop ISVs, including Apache Hadoop. Oracle Cloud Infrastructure has a native HDFS Connector that is compatible with Apache Hadoop. Hadoop ISVs (Cloudera, Hortonworks, and MapR) list allowed external endpoints for Object Storage integration, and require the use of S3 Compatibility for Object Storage integration to work correctly.

One caveat is that the S3 Compatibility works only with the tenancy home region. This means that for any integration done with Object Storage, Hadoop clusters should also be deployed in the same (home) region.

Another caveat is that when you use Object Storage to push or pull data into or out of the cluster, HDFS is not used as a caching layer. In fact, the OS file system is where data is cached as it transits between Object Storage and HDFS. If a large volume of data is moving back and forth between cluster HDFS and Object Storage, we recommend creating a block volume caching layer on the OS to support this data movement.

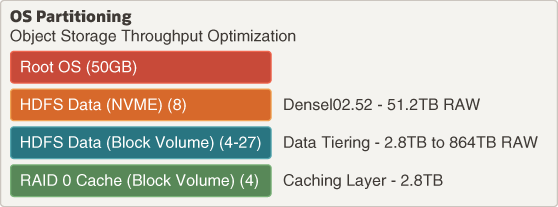

The following figure shows a typical partition diagram to support S3 data movement, along with data tiering for worker nodes.

Description of the illustration object-storage-partitioning-throughput.png

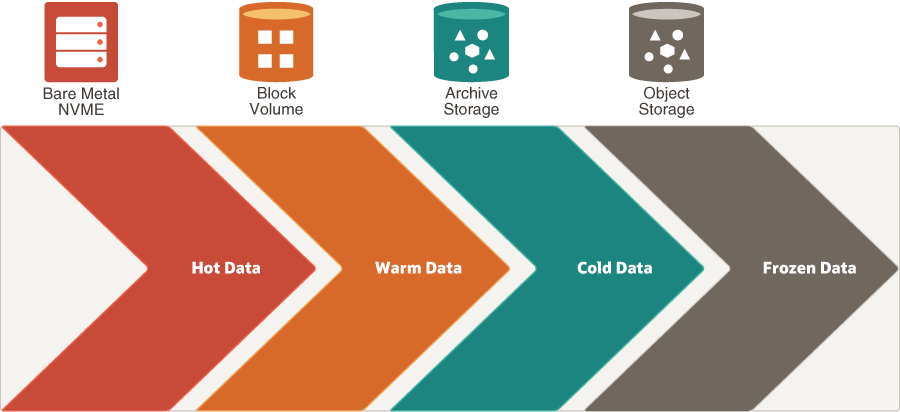

Data Tiering

You can combine all of the preceding storage options when you use Apache Hadoop, Cloudera, or Hortonworks to create a robust data tiering (heterogeneous) storage model. You can also use data lifecycle management to push data from one storage tier to another as data ages, to optimize HDFS storage costs.

Description of the illustration data-lifecycle-tiering.png

Erasure Coding

As of Hadoop 3.0, erasure coding (EC) is supported on HDFS deployments. EC reduces the storage requirements for local HDFS data by storing data with a single copy augmented by parity cells. HDFS topology can be tagged as EC, which enables this functionality for any data stored in that tagged location. This effectively reduces the raw storage requirement for EC-tagged HDFS data, allowing for increased storage efficiency.

There are some caveats regarding EC:

- Minimum worker node counts are required to enable EC.

- Data locality is lost for EC-tagged data.

- EC is appropriate for medium-sized files that are accessed infrequently. It is inefficient for small files, and for files accessed frequently.

- EC increases the number of block objects present in NameNode metadata as compared to similar data with traditional (3x) replication.

- Recovery of EC data requires compute from the cluster (parity rebuild). This recovery takes longer than for data with traditional (3x) replication.
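To make the storage-efficiency claim concrete, the sketch below compares the raw storage needed for 3x replication with that needed for a Reed-Solomon layout of 6 data cells and 3 parity cells. The RS(6,3) layout is chosen here as an example (it corresponds to Hadoop's built-in RS-6-3-1024k policy); the source does not state which policy a given deployment uses. The function names are illustrative.

```python
def raw_storage_replicated(logical_tb: float, replicas: int = 3) -> float:
    """Raw storage consumed when every block is stored `replicas` times."""
    return logical_tb * replicas


def raw_storage_erasure_coded(logical_tb: float, data_cells: int = 6,
                              parity_cells: int = 3) -> float:
    """Raw storage consumed with one data copy plus parity cells."""
    return logical_tb * (data_cells + parity_cells) / data_cells


def min_datanodes(data_cells: int = 6, parity_cells: int = 3) -> int:
    """Nodes needed so each cell of a stripe can sit on a different DataNode."""
    return data_cells + parity_cells


logical_tb = 100  # example dataset size, not taken from the source
print("3x replication:", raw_storage_replicated(logical_tb), "TB raw")
print("RS(6,3) EC:    ", raw_storage_erasure_coded(logical_tb), "TB raw")
print("Minimum DataNodes for RS(6,3):", min_datanodes())
```

With these example parameters, erasure coding needs half the raw storage of 3x replication, at the cost of the minimum node count and the other caveats listed above.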

Monitoring Performance

The Oracle Cloud Infrastructure Monitoring service lets you actively and passively monitor your cloud resources by using the Metrics and Alarms features. Setting alarms to notify you when you hit performance or capacity thresholds is a great way to leverage Oracle Cloud Infrastructure native tools to manage your infrastructure.

Reference Architectures

This topic provides reference material for each Hadoop ISV. The following reference architectures and bills of materials reflect a minimum required footprint for vendor-supported deployment of Hadoop on Oracle Cloud Infrastructure.

As an open-source project, the Apache Hadoop deployment does not have a vendor-supplied minimum required footprint. Oracle recommends using the Hortonworks deployment shown here as a reasonable guide for a minimum Apache deployment.

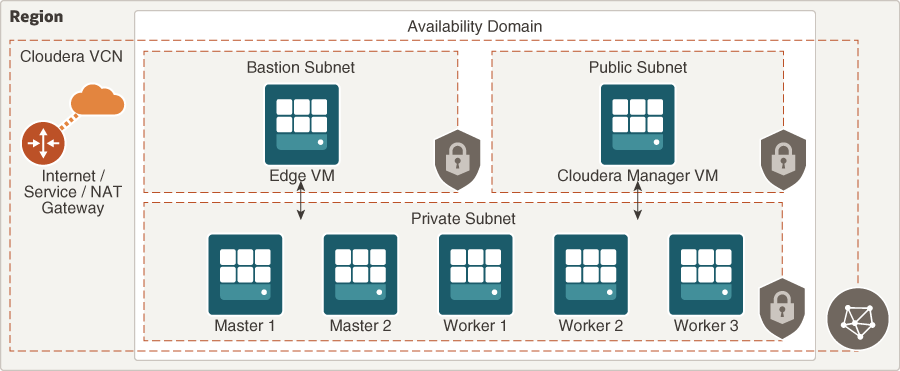

Cloudera

Description of the illustration architecture-cloudera.png

The minimum requirements for deployment of Hadoop using Cloudera are:

| Role | Quantity | Recommended Compute Shape |
|---|---|---|
| Edge | 1 | VM.Standard2.4 |
| Cloudera Manager | 1 | VM.Standard2.16 |
| Master Node | 2 | VM.Standard2.16 |
| Worker Node | 3 | BM.DenseIO2.52 |

In this minimal deployment, the Cloudera Manager node also runs master services.

You must bring your own license to deploy Cloudera on Oracle Cloud Infrastructure; all software support is through Cloudera.

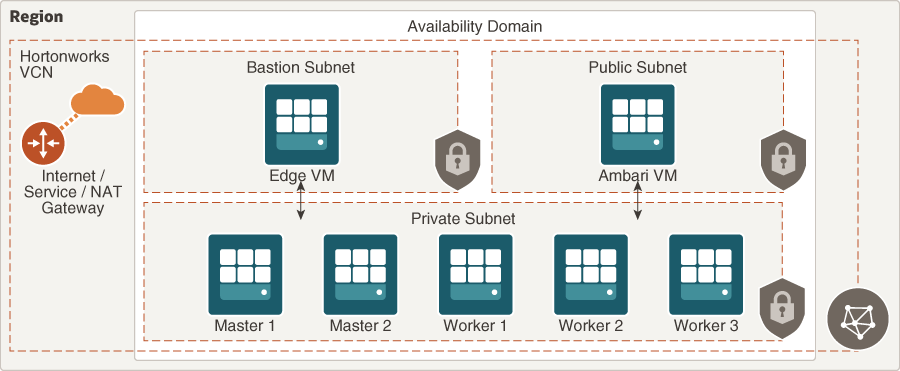

Hortonworks

Description of the illustration architecture-hortonworks.png

The minimum requirements for deployment of Hadoop using Hortonworks are:

| Role | Quantity | Recommended Compute Shape |
|---|---|---|
| Edge | 1 | VM.Standard2.4 |
| Ambari | 1 | VM.Standard2.16 |
| Master Node | 2 | VM.Standard2.16 |
| Worker Node | 3 | BM.DenseIO2.52 |

In this minimal deployment, the Ambari node also runs master services.

You must bring your own license to deploy Hortonworks on Oracle Cloud Infrastructure; all software support is through Hortonworks.

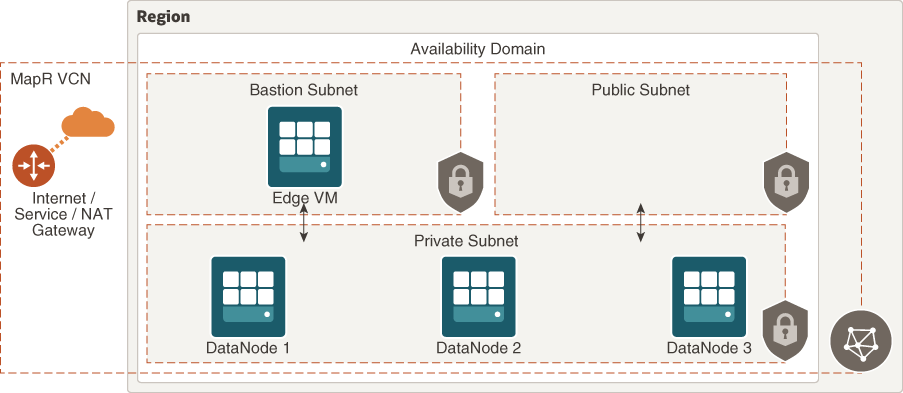

MapR

Description of the illustration architecture-mapr.png

The minimum requirements for deployment of Hadoop using MapR are:

| Role | Quantity | Recommended Compute Shape |
|---|---|---|
| Edge | 1 | VM.Standard2.4 |
| Data Node | 3 | BM.DenseIO2.52 |

You must bring your own license to deploy MapR on Oracle Cloud Infrastructure; all software support is through MapR.

Terraform Deployments

You can find Terraform templates for each Hadoop ISV in the Download Code section of this solution.

Cloudera and Hortonworks repositories have Oracle Resource Manager-compliant templates. Note that these are specific to Resource Manager. The base (master) branch contains a standalone Terraform template. Resource Manager templates for Cloudera and Hortonworks also contain a YAML schema that easily populates the Terraform variables for each template. This provides drop-down UI selection for variables, which you can customize for your use cases, essentially populating each template with permissible shape options and security/configuration settings.